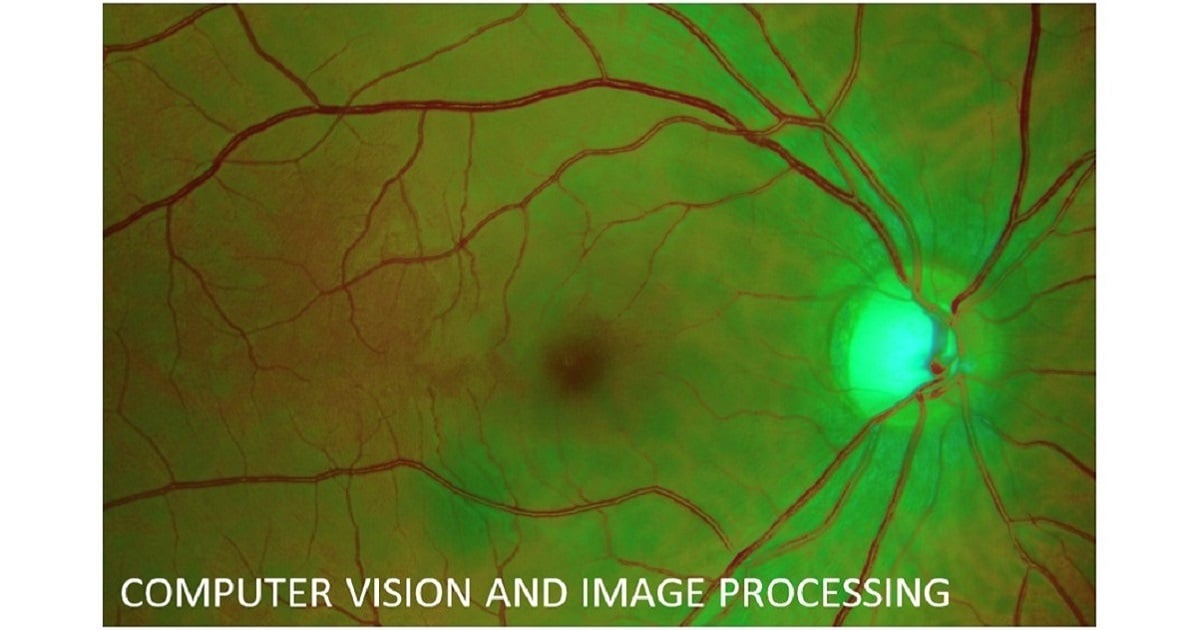

Computer Vision and Image Processing

Topic Information

Dear Colleagues,

Computer vision is a scientific discipline that aims to develop models for understanding our 3D environment using cameras. Image processing, in turn, can be understood as the body of techniques that extract useful information directly from images or process them for optimal subsequent analysis. The two fields are closely related and together form a working area that underpins almost any research in which cameras or other image sensors are used to acquire information from scenes or working environments. The main aim of this Topic is therefore to cover some of the relevant areas where computer vision and image processing are applied, including but not limited to:

- Three-dimensional image acquisition, processing, and visualization

- Scene understanding

- Greyscale, color, and multispectral image processing

- Multimodal sensor fusion

- Industrial inspection

- Robotics

- Surveillance

- Airborne and satellite on-board image acquisition platforms

- Computational models of vision

- Imaging psychophysics

Prof. Dr. Silvia Liberata Ullo

Topic Editor

Keywords

- 3D acquisition, processing, and visualization

- scene understanding

- multimodal sensor processing and fusion

- multispectral, color, and greyscale image processing

- industrial quality inspection

- computer vision for robotics

- computer vision for surveillance

- airborne and satellite on-board image acquisition platforms

- computational models of vision

- imaging psychophysics