Abstract

In recent years, large convolutional neural networks have been widely used as tools for image deblurring, because of their ability in restoring images very precisely. It is well known that image deblurring is mathematically modeled as an ill-posed inverse problem and its solution is difficult to approximate when noise affects the data. Really, one limitation of neural networks for deblurring is their sensitivity to noise and other perturbations, which can lead to instability and produce poor reconstructions. In addition, networks do not necessarily take into account the numerical formulation of the underlying imaging problem when trained end-to-end. In this paper, we propose some strategies to improve stability without losing too much accuracy to deblur images with deep-learning-based methods. First, we suggest a very small neural architecture, which reduces the execution time for training, satisfying a green AI need, and does not extremely amplify noise in the computed image. Second, we introduce a unified framework where a pre-processing step balances the lack of stability of the following neural-network-based step. Two different pre-processors are presented. The former implements a strong parameter-free denoiser, and the latter is a variational-model-based regularized formulation of the latent imaging problem. This framework is also formally characterized by mathematical analysis. Numerical experiments are performed to verify the accuracy and stability of the proposed approaches for image deblurring when unknown or not-quantified noise is present; the results confirm that they improve the network stability with respect to noise. In particular, the model-based framework represents the most reliable trade-off between visual precision and robustness.

1. Introduction

Image restoration is a discipline within the field of image processing focusing on the removal or reduction in distortions and artifacts from images. This topic is of interest in a wide range of applications, including medical imaging, satellite and aerial imaging, and digital photography. In this last case, blurring on images is quite frequent and several factors can cause it. To set some examples, Gaussian blur is caused by the diffraction of light passing through a lens and it is more prevalent in images captured with low-aperture lenses or in situations where the depth of field is shallow, whereas motion blur is due to handheld camera movements or low lighting conditions and slow shutter speeds [1,2,3]. Furthermore, noise seriously affects images; it is usually introduced by the acquisition systems.

Researchers have developed a number of algorithms for reducing blur and noise and image restoration is a very active field of research where new methods are continuously being proposed and developed. Such methodologies can be classified into two main categories: model-based and learning-based. The model-based techniques assume that the degradation process is known and it is mathematically described as an inverse problem [4]. The learning-based methods learn a map between the degraded and clean images during the training phase and use it to deblur new corrupted images [5].

1.1. Model-Based Mathematical Formulation

In model-based approaches, denoting by the compact and locally connected subset of of the ground truth sharp images, the relation between and its blurred and noisy observation is formulated as:

where K is the known blurring operator and represents the noise on the image. We can say that, with very high probability, . In this setting, the goal of model-based image deblurring methods is to compute a sharp and unobstructed image given and K, by solving the linear inverse problem. When noise is present, the problem (P) is typically reformulated into an optimization problem, where a data fit measure, namely, , is minimized. Since the blurring operator K is known to be severely ill-conditioned, a regularization term is added to the data-fidelity term to avoid noise propagation. The resulting optimization problem is formulated as:

where is the regularization parameter. This optimization problem can be solved using different iterative methods depending on the specific choices for and [1,6,7]. We remark that is set as the least-squares function in the case of Gaussian noise, whereas the regularization function can be tuned by the users according to the imaging properties they desire to enforce. Recently, plug-and-play techniques that plug a denoiser, usually a neural network, into an iterative procedure to solve the minimization problem have been used [8,9,10]. The value of can also be selected by automatic routines, image by image [11,12]. These features make model-based approaches mathematically explainable, flexible, and robust. However, a disadvantage is that the final result strongly depends on a set of parameters that are difficult to set up properly.

1.2. Deep-Learning-Based Formulation

In the last decade, deep learning algorithms have been emerging as good alternatives to model-based approaches. Disregarding any mathematical blurring operator, convolutional neural networks (NNs) can be trained to identify patterns characterizing blur on images, thus they can learn several kinds of blur and adapt to each specific imaging task. Large and complex convolutional neural networks, called UNet, have been proposed to achieve high levels of accuracy by automatically tuning and defining their inner filters and proper transformations for blur reduction, without needing any parameter setting [13,14,15,16]. Indeed, the possibility to process large amounts of data in parallel makes networks highly efficient for image processing tasks and prone to play a key role in the development of new and more advanced techniques in the future.

However, challenges and limitations in using neural networks are known from the literature. Firstly, it is difficult to understand and precisely interpret how they are making decisions and predictions, as they act as unexplainable black boxes mapping the input image towards directly. Secondly, neural networks are prone to overfitting, which occurs when they become too specialized for the training samples and perform poorly on new, unseen images. Lastly, the high performance of neural networks is typically evaluated only in the so-called in-domain case, i.e., the test procedure is performed on images sharing exactly the same corruption with the training samples, hence the impact of unquantified perturbations (as noise can be) has been not widely studied yet. In other words, the robustness of NN-based image deblurring with respect to unknown noise is not guaranteed [17,18,19,20].

1.3. Contributions of the Article

Motivated by the poor stability but high accuracy of NN-based approaches in solving inverse imaging problems such as deblurring, this paper proposes strategies to improve stability, maintaining good accuracy, acting similarly to regularization functions in the model-based approach. Based on seeking a result showing a trade-off between stability and accuracy, we propose to use a very small neural network in place of the UNet, which is less accurate but is much more stable than larger networks. Since it has only a few parameters to identify, it consumes relatively little time and energy, thus meeting the green AI principles.

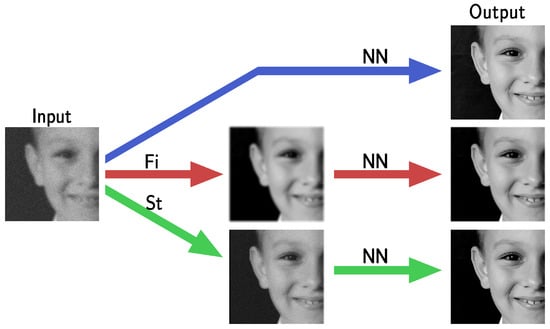

Moreover, we propose two new NN-based schemes, embedding a pre-processing step to face the network instability when solving deblurring problems, as in (P). The first scheme, denoted as FiNN, applies a model-free low-pass filter to the datum, before passing it as input to the NN. This is a good approach to be applied whenever an unknown noise is present because it does not need any model information or parameter tuning. The second scheme, called stabilized neural network (StNN), exploits an estimation of the noise statistics and the mathematical modeling of both noise and the image corruption process. Figure 1 shows a draft of the proposed frameworks, whose robustness is evaluated from a theoretical perspective and tested on an image data set.

Figure 1.

A graphical draft highlighting the introduction of pre-processing steps Fi and St defining the proposed frameworks FiNN and StNN, respectively.

1.4. Structure of the Article

The work is organized as follows. In Section 2, we formulate the NN-based action as an image reconstructor for the problem (P). In Section 3 we show our experimental setup and motivate our work on some experiments, thus, we state our proposals and derive their main properties in Section 4. Finally, in Section 5 we will report the results of some experiments to test the methods and empirically validate the theoretical analysis, before concluding with final remarks in Section 6.

2. Solving Imaging Inverse Problems with Deep-Learning-Based Operators

As stated in (P), image restoration is mathematically modeled as an inverse problem which derives from the discretization of Fredholm integral equations; these are ill-posed and the noise on the data is amplified in the numerically computed solution of . A rigorous theoretical analysis of the solution of such problems with variational techniques, which can be formulated as in Equation (1), has been performed, both in the continuous and discrete settings, and regularization techniques have been proposed to limit the noise spread in the solution [1,6].

To our best knowledge, a similar analysis for deep-learning-based algorithms is not present in the literature and it is quite mysterious how these algorithms behave in the presence of noise on the data. In this paper, we use some of the mathematical tools defined and proved in [20] and we propose here some techniques to limit noise spread. More details about the proposed mathematical framework in a more general setting can be found in [20].

In the following, if not differently stated, as a vector norm we consider the Euclidean norm. We first formalize the concept of the reconstructor associated with (P) with the following definition.

Definition 1.

Denoting by the range of K, we call the set of corrupted images according to . Any continuous function , mapping (where with ) to an , is called a reconstructor.

The associated reconstructing error is

Definition 2.

We quantify the accuracy of the reconstructor ψ, by defining the measure as:

We say that ψ is -accurate [6].

We now consider a neural network as a particular reconstructor.

Definition 3.

Given a neural network architecture , where , , is the width of each layer and is the set of matrices representing the skip connections, we define the parametric family of neural network reconstructors with architecture , parameterized by , as:

where is given by:

and is the weight matrix, and is the bias vector.

We now analyze the performance of NN-based reconstructors when noise is added to their input.

Definition 4.

Given , the δ-stability constant of an -accurate reconstructor is defined as:

Since from Definition 4 we interestingly observe that the stability constant amplifies the noise in the data:

with the noiseless datum, we can give the following definition:

Definition 5.

Given , a neural network reconstructor is said to be δ-stable if .

The next theorem states an important relation between the stability constant and the accuracy of a neural network as a solver of an inverse problem.

Theorem 1.

Let be an -accurate reconstructor. Then, for any and for any , with such that

where is the Moore–Penrose pseudo-inverse of K.

For the proof, see [20].

We emphasize that, even if neural networks used as reconstructors do not use any information on the operator K, the stability of is related to the pseudo-inverse of that operator.

3. Experimental Setting

Here, we describe our particular setting using neural networks as reconstructors for a deblurring application.

3.1. Network Architectures

We have considered three different neural network architectures for deblurring: the widely used UNet [21], the recently proposed NAFNet [22], and a green-AI-inspired 3L-SSNet [23].

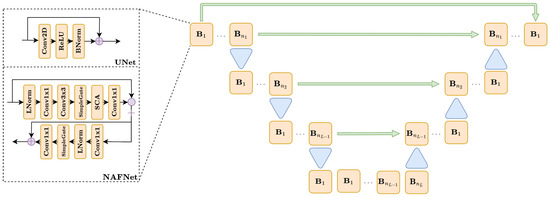

The UNet and NAFNet architectures are complex, multi-scale networks, with similar overall structure but very different behavior. As shown in Figure 2, both UNet and NAFNet are multi-resolution networks, where the input is sequentially processed by a sequence of blocks , and downsampled after that. After downsampling, the image is then sequentially upsampled again to the original shape through a sequence of blocks, symmetrically to what happened in the downsampling phase. At each resolution level , the corresponding image in the downsampling phase is concatenated to the first block in the upsampling phase, to keep the information through the network. Moreover, a skip connection has also been added between the input and the output layer of the model to simplify the training, as described in [23]. The left-hand side of Figure 2 shows that the difference between UNet and NAFNet is in the structure of each block. In particular, the blocks in UNet are simple residual convolutional layers, defined as a concatenation of convolutions, ReLU, BatchNormalizations, and a skip connection. On the other side, each block in NAFNet is far more complex, containing a long sequence of gates, and convolutional and normalization layers. The key property of NAFNet, as described in [22], is that no activation function is used in the blocks, since they have been substituted by non-linear gates, thus obtaining improved expressivity and more training efficiency.

Figure 2.

A diagram representing the UNet and NAFNet architectures.

The 3-layer single-scale network (3L-SSNet) is a very simple model defined, as suggested by its name, by just three convolutional layers, each of them composed by a linear filter, followed by a ReLU activation function and a BatchNormalization layer. Since by construction the network works on single-scale images (the input is never downsampled to low-resolution level, as is common in image processing), the kernel size is crucial to increase the receptive field of the model. For this reason, we considered a 3L-SSNet with width and kernel size .

3.2. Data Set

As a data set for our experiments we choose the widely used GoPro [24], which is composed of a large number of photographic images acquired from a GoPro camera. All the images have been cropped into patches (with no overlapping), converted into grayscale, and normalized into [0,1]. We synthesize the blurring of each image according to (P) by considering a Gaussian corrupting effect, implemented with the Gaussian kernel defined as

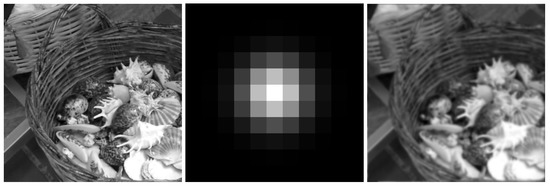

with variance . The kernel is visualized in Figure 3, together with one of the GoPro images and its blurred counterpart.

Figure 3.

From left to right: ground truth clean image, blurring kernel, blurred corrupted image.

3.3. Neural Networks Training and Testing

To train the neural network for deblurring, the set of available images is split into train and test subsets, with and images, respectively. Then, we consider a set for a given . Since we set a mean squared error (MSE) loss function, an NN-based reconstructor is uniquely defined as the solution of:

Each network has been trained by performing 50 epochs of the Adam optimizer with , , and a learning rate of . We focus on the next two experiments.

Experiment A. In this experiment, we train the neural networks on images only corrupted by blur (). With the aim of checking the networks’ accuracy, defined as in Section 2, we test on images without noise (in-domain tests). Then, to verify Theorem 1, we consider test images with added Gaussian noise, with (out-of-domain tests).

Experiment B. A common practice for enforcing network stability is noise injection [25], consisting of training a network by adding noise components to the input. In particular, we have added a vector noise , with . To test the stability of the proposed frameworks with respect to noise, we test with higher noise with respect to the training.

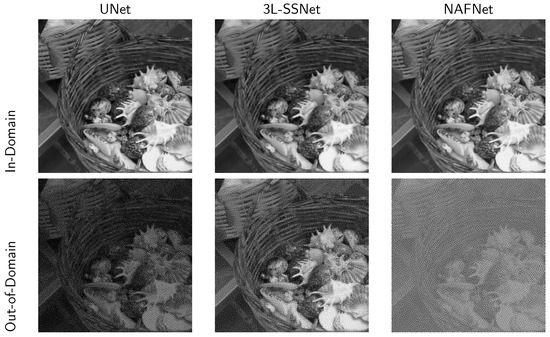

3.4. Robustness of the End-to-End NN Approach

Preliminary results obtained from experiment A are shown in Figure 4. The first row displays the reconstructions obtained from the in-domain tests, where we can appreciate the accuracy of all the three considered architectures. In the second row, we can see the results obtained from the out-of-domain tests, where the noise on the input data strongly corrupts the solution of the ill-posed inverse problem computed by UNet and NAFNet. Confirming what was stated by Theorem 1, the best result is obtained with the very light 3L-SSNET, which is the only NN able to handle the noise.

Figure 4.

Results from experiment A with the three considered neural networks. Upper row: reconstruction from non-noisy data. Lower row: reconstruction from noisy data ().

4. Improving Noise-Robustness in Deep-Learning-Based Reconstructors

As observed in Section 3, merely using a neural network to solve an inverse problem is an unstable routine. To enforce the robustness of reconstructors, we propose modifying the deep-learning-based approach by introducing a suitable operator, defined in the following as a stabilizer, into the reconstruction process.

Definition 6.

A continuous functions is called a δ-stabilizer for a neural network reconstructor if with , and with such that:

In this case, the reconstructor is said to be δ-stabilized. The smallest constant for which the definition holds is the stability constant of ϕ.

Intuitively, applying a pre-processing with reduces the perturbation of the input data by converting a noise of amplitude bounded by to a corruption with norm bounded by . This intuition has been mathematically explained in [20]; Proposition 4.2, where a relationship between the stability constant of the stabilized reconstructor and the stability constant of has been proved. In particular, if is a -stabilized reconstructor, , are the local Lipschitz constants of and , respectively, then:

As a consequence, if , then the stability constant of is smaller than the Lipschitz constant of , which implies that is more stable to input perturbations.

We underline that the -stabilizers are effective if they preserve the characteristics and the details of the input image . In this paper, we focus on the two following proposals of -stabilizers .

4.1. Stabilized Neural Network (StNN) Based on the Imaging Model

If the blurring operator K is known, it can be exploited to derive a -stabilizer function . We argue that information on K will contribute to improving the reconstruction accuracy. Specifically, we consider an iterative algorithm, converging to the solution of (1), represented by the scheme:

where is the action of the k-th iteration of the algorithm. Given a positive integer and a fixed starting iterate , let us define the -stabilizer:

By definition, maps a corrupted image to the solution computed by the iterative solver in M iterations.

In the following, we set as the objective function in (1) the Tikhonov-regularized least-squared function that has been analyzed in our previous work [20]:

In the paper, we showed that the Tikhonov-regularized formulation makes it possible to choose M such that . We are aware that we could also explore different Tikhonov-like functions, such as (with D difference operator), but this is beyond the scope of this paper. Hence, given and , it is always possible to use as a pre-processing step, stabilizing . We refer to as the stabilized neural network (StNN). In the numerical experiments, we use as the iterative method for the solution of (15) the conjugate gradient least squares (CGLS) iterative method [11]. Concerning the choice of the regularization parameter , we have chosen, for each experiment, the value minimizing the average relative error over the training set.

4.2. Filtered Neural Network (FiNN)

The intuition that a pre-processing step should reduce the noise present in the input data naturally leads to our second proposal, implemented by a Gaussian denoising filter. The Gaussian filter is a low-pass filter that reduces the impact of noise on the high frequencies [26]. Thus, the resulting pre-processed image is a low-frequency version of and the neural network has to recover the high frequencies corresponding to the image details. Let represent the operator that applies the Gaussian filter to the input. We will refer to the reconstructor as filtered neural network (FiNN).

Note that even if FiNN is employed to reduce the impact of the noise and consequently to stabilize the network solution, its constant is not smaller than one. In fact, for any with , it holds:

as a consequence of the linearity of .

5. Results

In this section we present the results obtained in our deblurring experiments described in Section 3. To evaluate and compare the deblurred images, we use visual inspection on a selected test image and exploit the structural similarity index (SSIM) [27] on the test set. Details on the implementation can be found in the GitHub repository at https://github.com/devangelista2/Ambiguity-in-solving-Inverse-Problems, accessed on 31 May 2023.

5.1. Results of Experiment A

We show and comment on the results obtained in experiment A, described in Section 3.3. We remark that the aim of these tests is to measure the accuracy of the three considered neural reconstructors and of the stabilizers proposed in Section 4 and verify their sensitivity to noise in the input data. In a word, how these reconstructors handle the ill-posedness of the imaging inverse problem.

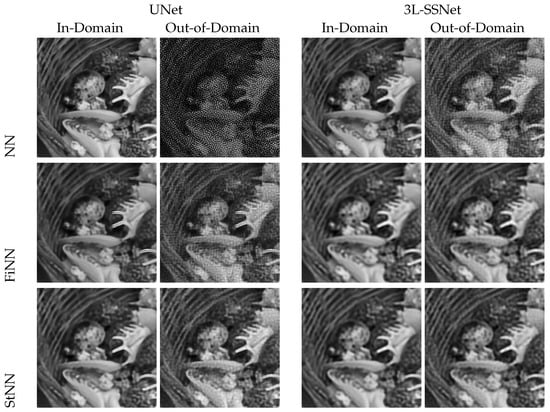

To this purpose, we visually compare the reconstructions of a single test image by the UNet and 3L-SSNet in Figure 5. The first row (which replicates some of the images of Figure 4) shows the results of the deep-learning-based reconstructors, where the out-of-domain images are clearly damaged by the noise. The FiNN and, particularly, the StNN stabilizer drastically reduce the noise, producing accurate results even for out-of-domain tests.

Figure 5.

Results from experiment A with UNet and 3L-SSNet.

In order to analyze the accuracy and stability of our proposals, we compute the empirical accuracy and the empirical stability constant , respectively defined as:

and

where is the test set and is a noise realization from , with (different for any datum ).

The computed values are reported in Table 1. Focusing on the estimated accuracies, the results confirm that NN is the most accurate method, followed by NAFNet and 3L-SSNet, as expected. As a consequence of Theorem 1, the values of the stability constant are in reverse order: the most accurate is the least stable (note the very high value of for NN!). By applying the stabilizers, the accuracy is slightly lower but the stability is highly improved (in most of the cases the constant is less than one), confirming the efficacy of the proposed solutions to handle noise and, at the same time, maintain good image quality. In particular, StNN is a stable reconstructor independent of the architecture.

Table 1.

Estimated accuracy and stability constants for experiment A on out-of-domain test (input images corrupted by noise with ).

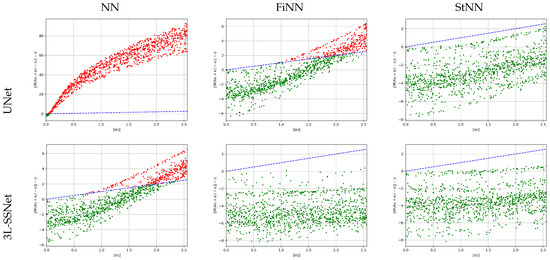

To analyze the stability of the test set with respect to noise, we have plotted in Figure 6, for each test image, vs. , where the reconstruction error is defined in (2). With green and red dots we have plotted the experiments with stability constants less and greater than one, respectively, and with the blue dashed line the bisect. We note that the values reported in Table 1 for the empirical stability constant computed as supremum (see Equation (18)) are not outliers but they are representative of the results of the whole test set.

Figure 6.

Results from experiment A. Plot of vs. for all the test images. The blue dashed line represents the bisect.

5.2. Results of Experiment B

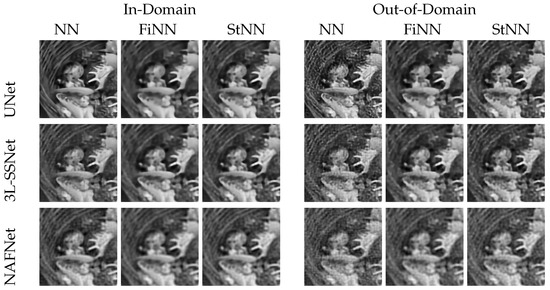

In this experiment, we used noise injection in the neural networks’ training, as described in Section 3.3. This quite common strategy reduces the networks’ accuracy but improves their stability with respect to noise. However, we show that the reconstructions are not totally satisfactory when we test on out-of-domain images, i.e., when the input images are affected by noise of different intensities with respect to the training.

Figure 7 displays the reconstructions obtained by testing with both in-domain (on the left) and out-of-domain (on the right) images. Even if the NN reconstructions (column 4) are not so affected by noise as in experiment A (see Figure 4), however, noise artifacts are clearly visible, especially in UNet and NAFNet. Both the stabilizers proposed act efficiently and remove most of the noise. We observe that the restorations obtained with FiNN are smoother but also more blurred with respect to the ones computed by StNN.

Figure 7.

Results from experiment B. On the left, tests on images with the same noise as in the training (). On the right, tests on images with higher noise than in the training ().

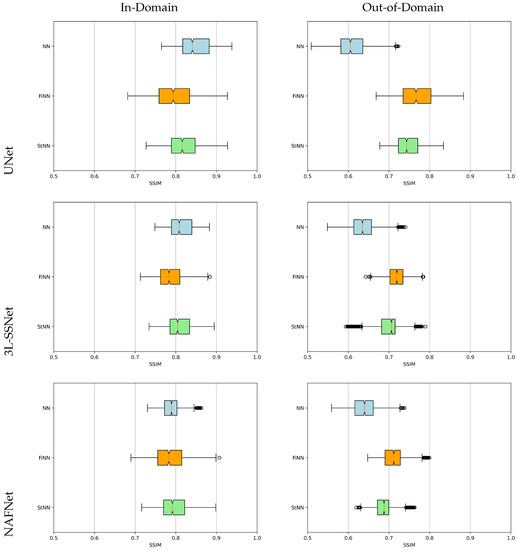

An overview of the tests is displayed by the boxplots of the SSIM values sketched in Figure 8. The light blue, orange, and green boxes represent the results obtained with the NN, FiNN, and StNN methods, respectively. They confirm that the neural networks’ performance worsens with noisy data (see the different positions of light blue boxes from the left to the right column), whereas the proposed frameworks including FiNN and StNN are far more stable.

Figure 8.

Boxplots for the SSIM values in experiment B. The light blue, orange, and green boxplots represent the results computed by NN, FiNN, and StNN, respectively.

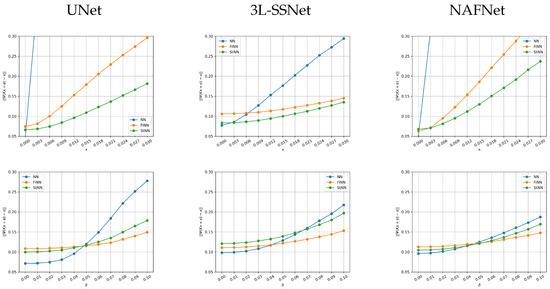

5.3. Analysis with Noise Varying on the Test Set

Finally, we have analyzed the performance of the methods when the input image is corrupted by noise from , with varying.

In Figure 9, we plot, for one image in the test set, the absolute error between the reconstruction and the true image vs. the noise standard deviation . In the upper row, the results from experiment A (we remark that in this experiment we trained the networks on non-noisy data) are shown. The NN error (blue line) is out of range for very small values of for both UNet and NAFNet, whereas the 3L-SSNet is far more stable. In all the cases, the orange and green lines shows that FiNN and StNN improve the reconstruction error. In particular, StNN performs best in all these tests.

Figure 9.

Plots of the absolute error vs. the variance σ of the noise for one image in the test set. Upper row: experiment A. Lower row: experiment B.

Concerning experiment B (in the lower row of the figure), it is very interesting to note that when the noise is smaller than the training one (corresponding to ), the NN methods are the best performing for all the considered architectures. When the behavior changes and the stabilized methods are more accurate.

6. Conclusions

Starting from the consideration that the most popular neural networks used for image deblurring, such as the family of convolutional UNets, are very accurate but unstable with respect to noise in the test images, we have proposed two different approaches to obtain stability without losing too much accuracy. The first method is a very light neural architecture, called 3L-SSNET, and the second one is to stabilize the deep learning framework by introducing a pre-processing step. Numerical results on the GoPro dataset have demonstrated the efficiency and robustness of the proposed approaches under several settings, encompassing in-domain and out-of-domain testing scenarios. The 3L-SSNet overcomes UNet and NAFNet in every test where the noise on the test images exceeds the noise on the training set, combining the desired characteristics of execution speed (from a green AI perspective) and high stability. The FiNN proposal increases the stability of the NN-based restoration (the values of its SSIM do not change remarkably in all the experiments), but the restored images appear too smooth and a few small details are lost. The StNN proposal, exploiting a model-based formulation of the underlying imaging process, achieves the highest SSIM values in the most challenging out-of-domain cases, confirming its great theory-grounded potential. It represents, indeed, a good compromise between stability and accuracy. We finally remark that the proposed approach can be simply extended to other imaging applications modeled as an inverse problem, such as super-resolution, denoising, or tomography, where the neural networks learning the map from the input to the ground truth image cannot efficiently handle noise in the input data. It can be also applied to color images, by simply using a kernel K which defines the blur on the three RGB channels and by adapting the neural network to handle color images.

This work represents one step further in shedding light on the black-box essence of NN-based image processing.

Author Contributions

Conceptualization, E.M.; methodology, D.E.; software, D.E.; validation, J.N.; formal analysis, E.L.P.; writing—original draft preparation, E.M. and E.L.P.; writing—review and editing, D.E., E.M., E.L.P. and J.N.; project administration, J.N. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the US National Science Foundation, under grants DMS 2038118 and DMS 2208294.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hansen, P.C.; Nagy, J.G.; O’leary, D.P. Deblurring Images: Matrices, Spectra, and Filtering; SIAM: Philadelphia, PA, USA, 2006. [Google Scholar]

- Whyte, O.; Sivic, J.; Zisserman, A.; Ponce, J. Non-uniform deblurring for shaken images. Int. J. Comput. Vis. 2012, 98, 168–186. [Google Scholar] [CrossRef]

- Raskar, R.; Agrawal, A.; Tumblin, J. Coded exposure photography: Motion deblurring using fluttered shutter. In ACM Siggraph 2006 Papers; ACM: New York, NY, USA, 2006; pp. 795–804. [Google Scholar]

- Bertero, M.; Boccacci, P.; De Mol, C. Introduction to Inverse Problems in Imaging; CRC Press: Boca Raton, FL, USA, 2021. [Google Scholar]

- Zhang, K.; Ren, W.; Luo, W.; Lai, W.S.; Stenger, B.; Yang, M.H.; Li, H. Deep image deblurring: A survey. Int. J. Comput. Vis. 2022, 130, 2103–2130. [Google Scholar] [CrossRef]

- Engl, H.W.; Hanke, M.; Neubauer, A. Regularization of Inverse Problems; Springer Science & Business Media: New York, NY, USA, 1996. [Google Scholar]

- Scherzer, O.; Grasmair, M.; Grossauer, H.; Haltmeier, M.; Lenzen, F. Variational Methods in Imaging; Springer: Berlin/Heidelberg, Germany, 2009. [Google Scholar]

- Venkatakrishnan, S.V.; Bouman, C.A.; Wohlberg, B. Plug-and-play priors for model based reconstruction. In Proceedings of the 2013 IEEE Global Conference on Signal and Information Processing, Austin, TX, USA, 3–5 December 2013; pp. 945–948. [Google Scholar]

- Kamilov, U.S.; Mansour, H.; Wohlberg, B. A plug-and-play priors approach for solving nonlinear imaging inverse problems. IEEE Signal Process. Lett. 2017, 24, 1872–1876. [Google Scholar] [CrossRef]

- Cascarano, P.; Piccolomini, E.L.; Morotti, E.; Sebastiani, A. Plug-and-Play gradient-based denoisers applied to CT image enhancement. Appl. Math. Comput. 2022, 422, 126967. [Google Scholar] [CrossRef]

- Hansen, P.C. Discrete Inverse Problems: Insight and Algorithms; SIAM: Philadelphia, PA, USA, 2010. [Google Scholar]

- Lazzaro, D.; Piccolomini, E.L.; Zama, F. A nonconvex penalization algorithm with automatic choice of the regularization parameter in sparse imaging. Inverse Probl. 2019, 35, 084002. [Google Scholar] [CrossRef]

- Hradiš, M.; Kotera, J.; Zemcık, P.; Šroubek, F. Convolutional neural networks for direct text deblurring. In Proceedings of the BMVC, Swansea, UK, 7–10 September 2015; Volume 10. [Google Scholar]

- Koh, J.; Lee, J.; Yoon, S. Single-image deblurring with neural networks: A comparative survey. Comput. Vis. Image Underst. 2021, 203, 103134. [Google Scholar] [CrossRef]

- Sun, J.; Cao, W.; Xu, Z.; Ponce, J. Learning a convolutional neural network for non-uniform motion blur removal. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 769–777. [Google Scholar]

- Zhang, K.; Luo, W.; Zhong, Y.; Ma, L.; Stenger, B.; Liu, W.; Li, H. Deblurring by realistic blurring. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2737–2746. [Google Scholar]

- Gottschling, N.M.; Antun, V.; Hansen, A.C.; Adcock, B. The Troublesome Kernel—On Hallucinations, No Free Lunches and the Accuracy-Stability Trade-Off in Inverse Problems. arXiv 2020, arXiv:2001.01258. [Google Scholar]

- Amjad, J.; Sokolić, J.; Rodrigues, M.R. On deep learning for inverse problems. In Proceedings of the 2018 26th European Signal Processing Conference (EUSIPCO), Roma, Italy, 3–7 September 2018; pp. 1895–1899. [Google Scholar]

- Bastounis, A.; Hansen, A.C.; Vlačić, V. The mathematics of adversarial attacks in AI–Why deep learning is unstable despite the existence of stable neural networks. arXiv 2021, arXiv:2109.06098. [Google Scholar]

- Evangelista, D.; Nagy, J.; Morotti, E.; Piccolomini, E.L. To be or not to be stable, that is the question: Understanding neural networks for inverse problems. arXiv 2022, arXiv:2211.13692. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241. [Google Scholar]

- Chen, L.; Chu, X.; Zhang, X.; Sun, J. Simple baselines for image restoration. In Proceedings of the Computer Vision—ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Proceedings, Part VII. Springer: Berlin/Heidelberg, Germany, 2022; pp. 17–33. [Google Scholar]

- Morotti, E.; Evangelista, D.; Loli Piccolomini, E. A green prospective for learned post-processing in sparse-view tomographic reconstruction. J. Imaging 2021, 7, 139. [Google Scholar] [CrossRef] [PubMed]

- Nah, S.; Kim, T.H.; Lee, K.M. Deep Multi-Scale Convolutional Neural Network for Dynamic Scene Deblurring. In Proceedings of the CVPR, Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Bishop, C.M. Training with noise is equivalent to Tikhonov regularization. Neural Comput. 1995, 7, 108–116. [Google Scholar] [CrossRef]

- Gonzalez, R.C. Digital Image Processing; Pearson Education India: Delhi, India, 2009. [Google Scholar]

- Wang, Z.; Simoncelli, E.P.; Bovik, A.C. Multiscale structural similarity for image quality assessment. In Proceedings of the Thrity-Seventh Asilomar Conference on Signals, Systems & Computers, Pacific Grove, CA, USA, 29 October–1 November 2003; Volume 2, pp. 1398–1402. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).