Abstract

Imaging sonar systems play an important role in underwater target detection and localization. Because imaging sonar is strongly affected by reverberation noise, segmenting targets in sonar images is a challenging problem. To segment different types of targets in sonar images accurately, we propose the gated fusion-pyramid segmentation attention (GF-PSA) module. Specifically, inspired by gated full fusion, we improve the pyramid segmentation attention (PSA) module by using gated fusion to reduce noise interference during feature fusion and improve segmentation accuracy. We then improve the SOLOv2 (Segmenting Objects by Locations v2) algorithm with the proposed GF-PSA and name the improved algorithm Attentive SOLO. In addition, we construct a sonar target segmentation dataset, named STSD, which contains 4000 real sonar images covering eight object categories with a total of 7077 target annotations. Experimental results show that Attentive SOLO achieves an mAP0.5 of 74.1% on STSD, 3.7 percentage points higher than that of SOLOv2.

1. Introduction

As countries pay more attention to the ocean environment, marine exploration plays an important role in marine research. The growing demand for marine surveys has also greatly promoted the development of imaging sonar systems, which can be mounted on survey ships, unmanned surface vehicles (USVs) and unmanned underwater vehicles (UUVs) to locate, identify and track targets.

A sonar system forms images by transmitting sound waves and receiving their echoes: a transmitted wave is reflected when it encounters a target object and is then received. The received echo therefore carries the distinctive acoustic absorption characteristics of different objects. However, the performance of a sonar system is constrained by natural, unstructured terrain. Owing to the complexity of the underwater acoustic channel and the variability of sound-wave scattering, the received echo is also mixed with interference, including environmental noise, reverberation and sonar self-noise, which poses significant challenges to accurate target segmentation in sonar images.

Over the past few decades, researchers have proposed various traditional sonar image segmentation methods based on geometric features, probability models, level sets and Markov random field (MRF) theory. Chen et al. [1] established a new energy function combining a unified MRF (UMRF) with level sets (LS). The UMRF integrates pixel-level and region-level information to analyze inter-pixel and inter-region neighborhood relationships, and the LS then evolves according to the UMRF result, so that the model can segment sonar images accurately. Ye et al. [2] proposed two new level set models for sonar image segmentation. First, local texture features are extracted with a Gauss–Markov random field model and integrated into the level set energy function to dynamically select the region of interest. Then, the proposed two-phase and multi-phase level set models are obtained by optimizing the energy function, and the segmentation results are derived from these two level set models. Song et al. [3] employed simple linear iterative clustering (SLIC) to divide sonar images into homogeneous superpixels and then used a uniformity facet and an adaptive intensity constraint strategy to optimize the MRF segmentation in each iteration. Abu et al. [4] proposed a novel segmentation method called EnFK, in which local spatial and statistical information are treated as fuzzy terms. First, the sonar images are denoised, and both the denoised and the original images are fed into the segmentation process to improve convergence. Then, the two novel fuzzy terms are used to obtain the final segmentation results. Wang et al. [5] proposed a region-growing-based segmentation method using likelihood ratio testing (RGLT), which focuses on the target and shadow regions. Specifically, it obtains seed points for the highlight and shadow regions through a likelihood ratio test based on statistical probability distributions and then grows them according to a similarity criterion. Consequently, the method avoids processing the seabed reverberation area and considerably reduces the segmentation time. Although these methods perform well in some sonar image segmentation tasks, their performance degrades considerably on sonar images with severe noise and uneven intensity.

Since deep learning methods have proven highly effective in RGB image segmentation, detection and tracking, a growing number of researchers have applied them to imaging sonar systems. Liu et al. [6] proposed a CNN with multi-scale inputs (MSCNN), trained with data augmentation and ensemble learning, and then combined the MSCNN with an MRF to obtain the final segmentation map. Zhao et al. [7] proposed an encoder–decoder network called Dilated Convolutional Network (DcNet), which uses dilated convolution and depthwise separable convolution between the encoder and decoder to capture more context information and improve segmentation accuracy. Wu et al. [8] proposed an encoder–decoder network named ECNet, which uses a fully convolutional network (FCN) and a deeply supervised network for end-to-end prediction. ECNet uses an encoder to extract context information from sonar images and a decoder to recover high-resolution feature maps from the low-resolution context feature map, and finally obtains a pixel-wise segmentation map from the decoder output. Wang et al. [9] used a depthwise separable residual module for multi-scale feature extraction of target regions to suppress noise interference. A multi-channel feature fusion method was used to enhance the feature information of the convolution layers, and an adaptive supervision function was used to classify pixels and objects of different categories. Jiao et al. [10] proposed a relative loss function that addresses small-target segmentation in sonar images by simultaneously considering the probability of pixels in target and non-target regions. In this method, the loss function used in the back-propagation of the FCN [11] is improved to speed up convergence and improve segmentation accuracy.

Existing deep-learning-based segmentation methods achieve good performance in most scenes, but most of the above methods perform semantic segmentation, which labels pixels but cannot distinguish individual target instances. In this paper, we employ instance segmentation for the sonar image segmentation task. Attention modules are a common means of improving the accuracy of segmentation algorithms. However, sonar images differ from RGB images: owing to the imaging principle of sonar systems, they suffer from heavy noise interference and weak boundary information, and target features are difficult to extract. We therefore propose the GF-PSA module, which analyzes sonar feature maps at multiple scales, extracts boundary information at each scale and fuses this information to improve segmentation accuracy. We embed the GF-PSA module into SOLOv2 [12] and name the new network Attentive SOLO. Because SOLOv2 segments objects by location, it ignores channel-wise information across multi-scale feature maps, so we employ channel attention in GF-PSA to extract more useful information from these maps. For feature fusion, we use the gated full fusion (GFF) [13] mechanism in place of the original fusion method of pyramid segmentation attention (PSA) [14] to reduce noise interference. We evaluated the algorithm on a real sonar dataset named STSD, and the results show that Attentive SOLO outperforms the other segmentation algorithms we compared on this dataset.

The main contributions of this work are summarized as follows:

- (1) A new model named Attentive SOLO is designed for sonar image segmentation. It uses the proposed gated fusion-pyramid segmentation attention module to extract the boundary information of targets in sonar images, improving the accuracy of the segmentation results.

- (2) A GF-PSA module is designed. GFF is used to improve the fusion method of PSA, reducing noise in the PSA module during feature fusion and improving segmentation accuracy.

- (3) A sonar image dataset named STSD is constructed for sonar target segmentation. The data were collected by Pengcheng Laboratory (Shenzhen, Guangdong Province, China) in the sea area near Zhanjiang City, Guangdong Province. We annotated the dataset, which contains 4000 real sonar images, eight object categories and 7077 instance annotations.

This paper is organized as follows. Section 2 reviews related work, including instance segmentation algorithms, attention mechanisms, multi-scale feature fusion methods and sonar datasets. Section 3 describes Attentive SOLO in detail. The experiments and results are reported in Section 4, and Section 5 concludes the paper.

2. Related Works

2.1. Instance Segmentation Algorithm

In recent years, instance segmentation algorithms have improved considerably. Instance segmentation combines two tasks: semantic segmentation and object detection. Based on these two tasks, current instance segmentation methods are classified into two types: top-down and bottom-up methods. Top-down methods first use an object detector to obtain bounding boxes and then perform semantic segmentation within each box; representative algorithms include Mask R-CNN [15] and PANet [16]. Bottom-up methods first perform semantic segmentation and then distinguish different instances using clustering and metric learning; representative algorithms include SGN [17] and SSAP [18]. All the above methods have two stages. Recently, borrowing from one-stage object detection research, several one-stage instance segmentation methods have been proposed, which can also be divided into two classes. One class borrows the idea of YOLO [19], with representative algorithms including YOLACT [20] and SOLO [21]. The other is inspired by FCOS [22], with representative algorithms including PolarMask [23] and AdaptIS [24]. Compared with other instance segmentation algorithms, SOLO adopts a fully convolutional, box-free and grouping-free approach that directly outputs instance masks and their classes, achieving a better balance between speed and accuracy.

Instance segmentation is widely used in many tasks, such as target tracking, human representation learning and underwater object detection. Zhou et al. [25] applied instance segmentation to unsupervised video multi-object segmentation, addressing the verification of unseen targets. Specifically, they proposed a network that combines foreground region estimation and instance grouping to characterize unseen targets, and employed an appearance model to capture more fine-grained information. Zhou et al. [26] proposed a bottom-up network that uses sparse keypoints to simplify human representation. During training, projected gradient descent and Dykstra’s cyclic projection algorithm are used to supervise the learning process. Xu et al. [27] proposed an active Mask-Box Scoring R-CNN method, which balances the box IoU and the NMS score. In addition, a triplet-measure-based active learning (TBAL) method and a balanced-sampling method are used to improve the performance of the network.

2.2. Attention Mechanism

Attention mechanisms have been extensively exploited in deep learning tasks such as image processing, speech recognition and natural language processing. With an attention mechanism, a CNN can focus on useful detailed information and suppress useless information, improving network robustness.

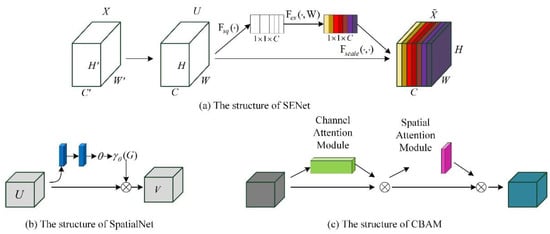

Many effective attention modules have been proposed. SENet [28], proposed by Hu et al., consists of two parts: the squeeze operation aggregates global spatial information, and the excitation operation models the dependencies between channels to recalibrate the features. The structure of SENet is shown in Figure 1a, where H′, W′ and C′ represent the height, width and number of channels of the input X, respectively, and H, W and C represent those of the feature map U and the output X̃. Fsq(·) shrinks the spatial size of U to 1 × 1, Fex(·,W) captures the dependencies between channels, and Fscale(·,·) denotes channel-wise multiplication. Jaderberg et al. [29] proposed Spatial Transformer Networks (STN), which learn a spatial (e.g., affine) transformation of the input and use differentiable interpolation to sample the transformed output. As shown in Figure 1b, the input feature map U is passed to a localization network, which regresses the transformation parameters θ. The regular spatial grid G is transformed into the sampling grid T_θ(G), which is applied to U to produce the output feature map V. Woo et al. [30] proposed a generic module called the Convolutional Block Attention Module (CBAM), which can be integrated into existing networks with little computational overhead. CBAM applies channel attention and spatial attention sequentially (Figure 1c). First, a channel attention module similar to SENet analyzes the relationships between channels, except that CBAM adds a max-pooling branch in parallel with average pooling. Then, the spatial attention module applies max-pooling and average-pooling along the channel axis to obtain two feature maps, which are concatenated and passed through a convolution layer, and a sigmoid function generates the final output. Fu et al. [31] proposed DANet, which contains two attention modules: a position attention module and a channel attention module. DANet extracts global context information from local features generated by a dilated residual network and obtains better feature representations for pixel-level prediction.

Figure 1.

The structure of several typical attention networks: (a) SENet, which mainly focuses on the channel relationships between feature maps; (b) STN, which mainly focuses on spatial relationships within feature maps; and (c) CBAM, which combines channel and spatial attention.
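To make the squeeze, excitation and scale steps of SENet (Figure 1a) concrete, here is a minimal pure-Python sketch of an SE block acting on a C × H × W feature map stored as nested lists. The reduction ratio r and the weight matrices w1 and w2 are illustrative assumptions, and biases are omitted to match the form s = σ(W2 δ(W1 z)) used in the SENet paper. A real network would implement this with a deep learning framework rather than lists.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # C x H x W, stored as nested lists
Matrix = List[List[float]]            # rows x columns


def squeeze(u: FeatureMap) -> List[float]:
    """F_sq: global average pooling, shrinking each H x W channel to a single value."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in u]


def matvec(w: Matrix, v: List[float]) -> List[float]:
    """Plain matrix-vector product, used here as a bias-free fully connected layer."""
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) for row in w]


def excitation(z: List[float], w1: Matrix, w2: Matrix) -> List[float]:
    """F_ex: FC (C -> C/r), ReLU, FC (C/r -> C), sigmoid -> one weight per channel."""
    hidden = [max(0.0, h) for h in matvec(w1, z)]
    return [1.0 / (1.0 + math.exp(-a)) for a in matvec(w2, hidden)]


def scale(u: FeatureMap, s: List[float]) -> FeatureMap:
    """F_scale: channel-wise multiplication of U by the weights s."""
    return [[[s_c * x for x in row] for row in ch] for ch, s_c in zip(u, s)]


def se_block(u: FeatureMap, w1: Matrix, w2: Matrix) -> FeatureMap:
    return scale(u, excitation(squeeze(u), w1, w2))


# Usage: C = 2 channels, reduction ratio r = 2, so w1 is 1 x 2 and w2 is 2 x 1.
u = [[[2.0, 2.0], [2.0, 2.0]], [[4.0, 4.0], [4.0, 4.0]]]
print(excitation(squeeze(u), [[1.0, 0.0]], [[1.0], [-1.0]]))  # approx [0.88, 0.12]
```

With these weights, channel 0 is emphasized and channel 1 is suppressed; the output map keeps the shape of U and only the per-channel scaling changes.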

2.3. Multi-Scale Feature Fusion

Fusing feature maps of different scales in convolutional neural networks is an important means of improving segmentation performance. Low-level feature maps contain more detail but less semantic information because they have passed through fewer convolution layers. High-level feature maps contain richer semantic information and have larger receptive fields, but they perform poorly on small targets. To address this, FCN uses mid-level features for prediction to refine the detail of the segmentation, whereas Hariharan et al. [32] directly combined multi-scale feature maps for prediction. The U-Net [33] model proposed by Ronneberger et al. adds skip connections between the encoder and decoder to reuse low-level features. Zhang et al. [34] improved U-Net by fusing high-level features into low-level features. In the feature pyramid network (FPN) proposed by Lin et al. [35], adjacent feature maps are fused pairwise into a new feature map, and this fusion is repeated level by level down the pyramid to obtain the final feature maps.
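The top-down fusion of FPN [35] can be sketched in a few lines of Python. This is a simplified illustration rather than FPN's full design: it assumes single-channel maps whose lateral 1 × 1 convolutions have already been applied, merges levels with nearest-neighbour upsampling and element-wise addition, and omits the 3 × 3 convolutions that FPN applies to each merged map. The function names are illustrative.

```python
from typing import List

Grid = List[List[float]]  # one single-channel H x W feature map


def upsample2x(x: Grid) -> Grid:
    """Nearest-neighbour upsampling by a factor of 2 in both directions."""
    out: Grid = []
    for row in x:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))  # duplicate the row (copy, not an alias)
    return out


def add(a: Grid, b: Grid) -> Grid:
    """Element-wise addition of two maps of the same size."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def top_down_merge(laterals: List[Grid]) -> List[Grid]:
    """laterals[0] is the coarsest map and each following map is twice as large.

    Each output level is its lateral map plus the upsampled output of the level
    above, so semantic information flows from coarse to fine levels.
    """
    outputs = [laterals[0]]
    for lateral in laterals[1:]:
        outputs.append(add(lateral, upsample2x(outputs[-1])))
    return outputs


levels = top_down_merge([[[1.0]], [[1.0, 2.0], [3.0, 4.0]]])
print(levels[1])  # [[2.0, 3.0], [4.0, 5.0]]
```

Because every merged level is reused when building the next finer one, the finest output accumulates information from all coarser levels, which is the "continuous" fusion described above.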

These feature fusion methods have achieved remarkable results, but they do not distinguish the useful information in each feature map during fusion, so the fused result is affected by the semantic differences between feature maps.

2.4. Sonar Datasets

Although sonar image analysis has attracted considerable research attention, publicly available sonar datasets remain limited. They can be divided into two types:

The first type is generated from simulated data, such as the Multi-target Noise interference Sonar Dataset (MNSD) and the Single-target Reverberation interference Sonar Dataset (SRSD) [36]: MNSD is generated by a three-dimensional imaging sonar simulation experiment, and SRSD is a single-class bionic dataset with seabed reverberation interference. Liu et al. [37] used an acoustic image simulator to generate a forward-looking sonar dataset and used CycleGAN to enhance it.

The second type is collected in real environments. For example, the dataset used in [7] was collected by an autonomous underwater vehicle (AUV) equipped with a dual-frequency side-scan sonar, but mainly near a seabed reef and a sand wave, so it contains no common underwater targets. The dataset used in [9] consists of pseudo-color-processed sonar images of three types of underwater targets, but each image contains only one instance. Singh et al. [38] collected a forward-looking sonar dataset using an ARIS Explorer 3000 sensor; it consists of 1868 forward-looking sonar images in 11 categories.

At present, sonar datasets are still relatively scarce, and how to construct a dataset containing more targets and more scenes is worthy of in-depth study. We therefore built a new sonar dataset. Table 1 compares several existing sonar datasets; because some datasets are unnamed, we identify them by their references in the table. Although STSD does not have the largest number of images among the datasets collected in real environments, it contains eight instance categories. Compared with the dataset in [38], STSD has more images, which helps reduce overfitting.

Table 1.

Comparison of several sonar datasets.

3. Methods

3.1. Attentive SOLO

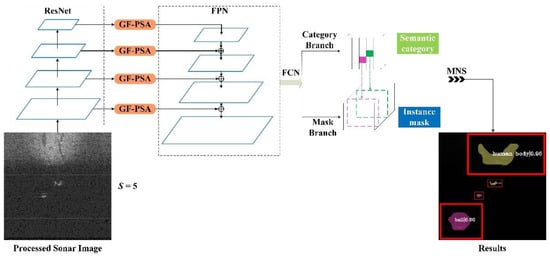

As illustrated in Figure 2, Attentive SOLO uses a ResNet backbone, followed by an FPN to handle targets at multiple scales. The proposed GF-PSA module is embedded between ResNet and FPN to improve feature extraction. Like SOLOv2, whose core idea is to segment instances according to the location and size of objects, Attentive SOLO first divides the input image into an S × S grid. If the center of a target falls into a grid cell, that cell is responsible for two tasks, corresponding to the two branches of SOLOv2: the category branch predicts the semantic category, and the mask branch predicts the instance mask. The predictions of the two branches are associated through their reference grid cell, which directly yields the instance segmentation result of each cell, as given by the following equation,

where . Finally, the instance segmentation results of each grid are collected and processed by matrix non-maximum suppression to form the final results.
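To illustrate the location-based assignment, the following sketch maps an object centre to its grid cell (i, j) and to the index k = i × S + j used for the mask loss in Section 4.2. The grid size S = 40 is an illustrative assumption (SOLO uses a different grid size for each FPN level), and SOLO itself treats a small central region of each object as positive rather than a single centre point, so this is a simplified sketch with a hypothetical function name.

```python
from typing import Tuple


def grid_cell(cx: float, cy: float, img_w: int, img_h: int, s: int) -> Tuple[int, int, int]:
    """Return (i, j, k) for the S x S grid cell containing the object centre (cx, cy).

    i is the row index, j the column index and k = i * S + j is the index of the
    mask channel paired with that cell.
    """
    i = min(int(cy / img_h * s), s - 1)  # clamp centres lying on the bottom edge
    j = min(int(cx / img_w * s), s - 1)  # clamp centres lying on the right edge
    return i, j, i * s + j


# A 1024 x 1024 sonar image with an assumed grid size S = 40.
print(grid_cell(cx=300.0, cy=520.0, img_w=1024, img_h=1024, s=40))  # (20, 11, 811)
```

The category prediction at cell (i, j) and the mask in channel k therefore describe the same object, which is how the two branches are paired without bounding boxes.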

Figure 2.

The structure of the proposed Attentive SOLO. The sonar image shown has been log-transformed so that the location and category of the target can be identified visually. The GF-PSA module is embedded between ResNet and FPN; in Section 4.4, we also embed GF-PSA between the FPN and FCN.
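The matrix non-maximum suppression used to form the final results comes from SOLOv2. Instead of discarding overlapping masks one by one, it decays each mask's score by a factor computed from its IoU with higher-scored masks of the same class, with all decays computed in parallel. Below is a minimal pure-Python sketch of the Gaussian variant under stated assumptions: masks are flattened binary lists already sorted by descending score, σ = 2.0 is an assumed value, and the score threshold applied afterwards is left out.

```python
import math
from typing import List


def mask_iou(a: List[int], b: List[int]) -> float:
    """IoU of two flattened binary (0/1) masks."""
    inter = sum(x & y for x, y in zip(a, b))
    union = sum(a) + sum(b) - inter
    return inter / union if union else 0.0


def matrix_nms(masks: List[List[int]], labels: List[int], scores: List[float],
               sigma: float = 2.0) -> List[float]:
    """Gaussian Matrix NMS; masks must be sorted by descending score.

    Returns the decayed scores in the same order; thresholding is left to the caller.
    """
    n = len(masks)
    # iou[i][j] (i < j): IoU of higher-scored mask i with mask j, same class only.
    iou = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if labels[i] == labels[j]:
                iou[i][j] = mask_iou(masks[i], masks[j])
    # compensate[i]: how strongly mask i is itself suppressed (max IoU with a higher mask).
    compensate = [max([iou[k][i] for k in range(i)], default=0.0) for i in range(n)]
    decayed = []
    for j in range(n):
        decay = 1.0
        for i in range(j):
            f_ij = math.exp(-sigma * iou[i][j] ** 2)
            f_i = math.exp(-sigma * compensate[i] ** 2)
            decay = min(decay, f_ij / f_i)
        decayed.append(scores[j] * decay)
    return decayed


masks = [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 1, 1]]  # mask 1 duplicates mask 0
print(matrix_nms(masks, labels=[0, 0, 0], scores=[0.9, 0.8, 0.7]))  # approx [0.9, 0.108, 0.7]
```

The duplicate mask keeps only about 14% of its score, while the disjoint mask is untouched; dividing by the compensation term prevents a mask that is itself suppressed from strongly suppressing others.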

3.2. Gated Fusion Module

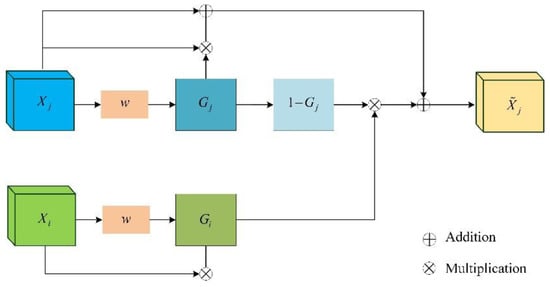

In CNNs, fusing feature maps of different scales is a common operation whose purpose is to combine the advantages of low-level and high-level feature maps and improve the prediction results. Concatenation is a simple operation that aggregates all the information in multiple feature maps by stacking them along the channel dimension. Addition is another straightforward method that combines the information of different feature maps by adding the features at each location. Both methods superimpose all the information in every feature map, so useless information is fused as well.

We therefore use a gated fusion module to suppress redundant, useless information. Built on addition-based fusion, it controls the flow of information with gate maps. Specifically, each input feature map is associated with a gate map, and during fusion, the feature vector of feature map i at position (x, y) is fused into feature map j (i ≠ j) mainly when the gate value of feature map i at (x, y) is large and that of feature map j is small. The gate map is determined by the following equation,

where refers to a convolutional layer and represents the number of channels of feature map i. The specific operation of gated fusion is shown in Figure 3. With these gate maps, the addition-based fusion is defined as:

where denotes element-wise multiplication broadcasting in the channel dimension.

Figure 3.

The structure of the gated fusion module.
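The gate-map and fusion equations of this subsection are not reproduced in this text. The sketch below follows the formulation published in the GFF paper [13], G_i = σ(w_i * X_i) and X̃_l = (1 + G_l)·X_l + (1 − G_l)·Σ_{i≠l} G_i·X_i, which we assume is the form used here. It is a pure-Python illustration with hypothetical names: each gate is a 1 × 1 convolution from C channels to one channel followed by a sigmoid, and all inputs are assumed to share the same channel count and spatial size.

```python
import math
from typing import List

Feature = List[List[float]]  # C channels, each a flattened list of H*W values


def sigmoid(a: float) -> float:
    return 1.0 / (1.0 + math.exp(-a))


def gate_map(x: Feature, w: List[float], b: float = 0.0) -> List[float]:
    """G = sigmoid(w * X): a 1x1 convolution from C channels to 1, giving one gate per position."""
    n_pos = len(x[0])
    return [sigmoid(sum(w[c] * x[c][p] for c in range(len(x))) + b) for p in range(n_pos)]


def gated_fusion(xs: List[Feature], ws: List[List[float]]) -> List[Feature]:
    """out_l = (1 + G_l) * X_l + (1 - G_l) * sum_{i != l} G_i * X_i, for every level l.

    Each gate value is broadcast over the channel dimension.
    """
    gates = [gate_map(x, w) for x, w in zip(xs, ws)]
    fused = []
    for l, x_l in enumerate(xs):
        out = []
        for c in range(len(x_l)):
            row = []
            for p in range(len(x_l[c])):
                others = sum(gates[i][p] * xs[i][c][p] for i in range(len(xs)) if i != l)
                row.append((1 + gates[l][p]) * x_l[c][p] + (1 - gates[l][p]) * others)
            out.append(row)
        fused.append(out)
    return fused


# Two single-channel, single-pixel maps. A gate near 1 on map 0 and near 0 on
# map 1 lets map 1 receive map 0's feature, while map 0 keeps its own.
print(gated_fusion([[[2.0]], [[4.0]]], ws=[[10.0], [-10.0]]))  # approx [[[4.0]], [[6.0]]]
```

Compared with plain addition, a position whose own gate is high is protected from the other maps, and a map only contributes where its gate is high, which is how unreliable (noisy) features are kept out of the fused result.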

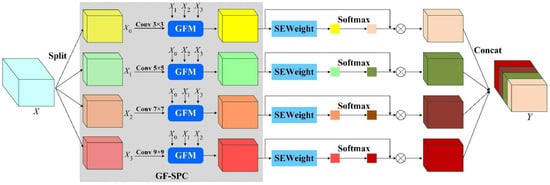

3.3. Gated Fusion-Pyramid Split Attention Block

Sonar images are not only subject to severe noise interference but also affected by ocean currents, which can shape sand and gravel into forms whose features resemble those of the targets. To separate targets from such interference accurately, we propose the GF-PSA module, whose structure is shown in Figure 4.

Figure 4.

The structure of GF-PSA (n = 4).

First, multi-scale feature maps are extracted by the proposed GF-SPC module, which divides the feature map X into n parts along the channel dimension, represented as . The size of each part is and the number of channels is . The convolution kernel size directly determines the size of the receptive field and thus affects segmentation accuracy. To better segment objects of different scales, we use convolution kernels of size . However, increasing the kernel size significantly increases the number of parameters. To process the input tensor with different kernel sizes without significantly increasing the computational cost, we use group convolution. The relationship between group size and kernel size can be expressed as:

Table 2 records the kernel size and group size used in the experiment when n = 4.

Table 2.

The kernel size and group size when n = 4.
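The group-size equation and the values of Table 2 are not reproduced in this text. As a sketch, the following assumes the rules given in the EPSANet paper that introduced PSA [14], K_i = 2(i + 1) + 1 and G = 2^((K − 1)/2); the values actually used in this work are those in Table 2 and may differ. The channel count C = 256 is also an assumption. The point is to show why grouping keeps the parameter count of large kernels in check.

```python
from typing import List, Tuple


def branch_configs(n: int) -> List[Tuple[int, int]]:
    """(kernel size K_i, group size G_i) for each of the n branches, assuming the
    EPSANet rules K_i = 2(i + 1) + 1 and G_i = 2 ** ((K_i - 1) / 2)."""
    configs = []
    for i in range(n):
        k = 2 * (i + 1) + 1
        configs.append((k, 2 ** ((k - 1) // 2)))
    return configs


def conv_params(c_in: int, c_out: int, k: int, groups: int) -> int:
    """Number of weights in a k x k convolution split into `groups` groups (no bias)."""
    if c_in % groups or c_out % groups:
        raise ValueError("groups must divide both channel counts")
    return (c_in // groups) * c_out * k * k


# Assumed example: C = 256 input channels split into n = 4 parts of C/n = 64 channels.
c_part = 256 // 4
for k, g in branch_configs(4):
    print(f"K={k} G={g}: grouped={conv_params(c_part, c_part, k, g)}, "
          f"ungrouped={conv_params(c_part, c_part, k, 1)}")
```

Under these assumptions, the grouped branches need between 18,432 and 25,600 weights each, whereas an ungrouped 9 × 9 branch would need 331,776.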

After obtaining the multi-scale feature maps, we use gated fusion to aggregate the useful information from the feature map at each scale: as described in Section 3.2, the feature maps are combined by addition-based fusion controlled by the gate maps.

To fuse context information at different scales, the SEWeight module is used to obtain channel-wise attention vectors from the feature maps at different scales, so that the high-level feature maps can incorporate more detailed information. The channel-wise attention vectors corresponding to feature maps and all channel-wise attention vectors are obtained as follows,

where represents the concat operation. To account for spatial attention while obtaining channel attention, we use cross-channel soft attention to adaptively obtain spatial attention weights and recalibrate the obtained . The soft attention weights are computed as follows,

where the Softmax operation is used to obtain the recalibrated attention weight , which includes all the position information in space and the attention weights in the channels. In this way, global spatial and channel information is combined with local information. We multiply the multi-scale attention vector with the corresponding feature map to obtain a new feature map with multi-scale attention weights. Finally, we concatenate these reweighted feature maps to obtain a new feature map with the same number of channels as the original feature map.
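The attention-vector and soft-attention equations are not reproduced in this text. The sketch below illustrates the recalibration and concatenation steps as described here and in EPSANet [14], under stated assumptions: the SEWeight outputs Z_i of the n branches are taken as given (for instance from an SE block), and Softmax is applied across the n branches separately for each channel. The function names are hypothetical.

```python
import math
from typing import List

Feature = List[List[float]]  # C' channels, each a flattened list of H*W values


def softmax_across_branches(z: List[List[float]]) -> List[List[float]]:
    """z[i][c] is the SEWeight output of branch i for channel c; softmax runs over i."""
    n, n_ch = len(z), len(z[0])
    att = [[0.0] * n_ch for _ in range(n)]
    for c in range(n_ch):
        m = max(z[i][c] for i in range(n))  # subtract the max for numerical stability
        exps = [math.exp(z[i][c] - m) for i in range(n)]
        total = sum(exps)
        for i in range(n):
            att[i][c] = exps[i] / total
    return att


def reweight_and_concat(feats: List[Feature], z: List[List[float]]) -> Feature:
    """Scale each branch by its recalibrated channel weights, then concatenate the
    branches along the channel dimension (n * C' channels in total)."""
    att = softmax_across_branches(z)
    out: Feature = []
    for f, a in zip(feats, att):
        out.extend([[a[c] * v for v in f[c]] for c in range(len(f))])
    return out


# Two branches with one channel and two pixels each; equal scores give 0.5 each.
print(reweight_and_concat([[[2.0, 4.0]], [[6.0, 8.0]]], z=[[0.3], [0.3]]))
# [[1.0, 2.0], [3.0, 4.0]]
```

Because the Softmax is taken across branches, the scales compete for each channel: raising the weight of one kernel size lowers the others, and the concatenated output has n × C' = C channels, matching the input.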

In summary, GF-PSA fuses useful information from multi-scale feature maps and considers both channel attention and spatial attention, enabling effective interaction between local and global attention.

4. Experiments and Results

4.1. Dataset

The dataset used in this paper, STSD, is a forward-looking sonar image dataset collected by Pengcheng Laboratory (Shenzhen, Guangdong Province, China) in the sea off Zhanjiang City, Guangdong Province. It was collected with a Tritech Gemini 1200i (Aberdeenshire, UK) forward-looking sonar and consists of the sonar's raw echo intensity data, which take the form of two-dimensional matrices; for convenience of processing, the data are stored as BMP images. The dataset contains eight categories: human body, tire, round cage, square cage, metal bucket, cube, sphere and cylinder. Its 4000 sonar images are divided into training and test sets at a ratio of 3:1 (3000 and 1000 images, respectively), and it contains 7077 instance annotations. Table 3 lists the number and ratio of each target category in STSD.

Table 3.

The number and ratio of each category of STSD.

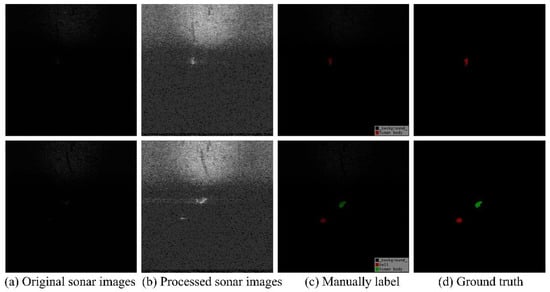

During training, the error between the predictions and the ground truth is used to iteratively optimize the model parameters. The ground truth is the reference segmentation obtained by manual annotation and is key to establishing a benchmark for the segmentation results. We used the LabelMe software developed by MIT (Cambridge, MA, USA) to annotate the sonar images. However, the original sonar images contain various types of noise and appear nearly black, which makes annotation extremely difficult. We therefore applied a logarithmic transformation to the images so that annotators could visually determine the classes of the targets they contain. Figure 5 shows the original sonar images, the processed images and the ground truth we annotated.

Figure 5.

Several images in STSD: (a) original sonar images, which appear nearly black; (b) log-transformed sonar images; (c) manual annotations created with LabelMe; and (d) ground truth.
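The paper does not state the exact form of the logarithmic transformation. A common choice, assumed in this sketch, is s = c·log(1 + r), with c chosen so that the strongest echo r_max maps to 255 in an 8-bit display image. The function name and the 8-bit output range are assumptions.

```python
import math
from typing import List


def log_transform(values: List[float], out_max: float = 255.0) -> List[int]:
    """s = c * log(1 + r), with c = out_max / log(1 + r_max) so the strongest echo maps to out_max."""
    r_max = max(values)
    if r_max <= 0:
        return [0] * len(values)  # an all-dark input stays dark
    c = out_max / math.log1p(r_max)
    return [int(round(c * math.log1p(max(r, 0.0)))) for r in values]


# Weak echoes are stretched much more than strong ones.
print(log_transform([0, 10, 40, 255]))  # [0, 110, 171, 255]
```

The curve expands the dark, low-intensity range where most of a raw sonar frame lies, which is why the log-transformed images in Figure 5b are readable while the originals appear nearly black.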

We conducted experiments on the original sonar images and on the processed images separately and obtained similar results, indicating that this preprocessing has little effect on the accuracy of the algorithm. We therefore use the original sonar images in all experiments; the images shown in this paper are log-transformed only so that readers can identify the location and category of the targets.

4.2. Implementation Detail

Training and testing were performed on an Intel Core i7-11800H @ 2.30 GHz CPU and an NVIDIA GeForce RTX 3060 Laptop GPU; all experiments were conducted on this same hardware. The software environment is Ubuntu 20.04, Python 3.7 and PyTorch 1.8.0. Because SOLOv2 requires the input image size to be a multiple of 32, we set the sonar image size to 1024 × 1024. The loss function used to train the model is as follows:

where denotes the conventional focal loss [39] for semantic category classification, and is the mask prediction loss, calculated as follows:

where k, i and j are related by k = i × S + j. represents the number of positive samples, and and represent the category and mask targets, respectively. represents the indicator function, which is 1 when and 0 otherwise. For , we use the Dice loss, as in SOLOv2, expressed as follows:

where D is the Dice function with the following expression:

where and refer to pixel values located at in the prediction mask and the ground truth mask .
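The Dice expression is not reproduced in this text. SOLO defines the Dice coefficient as D(p, q) = 2 Σ p_{x,y} q_{x,y} / (Σ p²_{x,y} + Σ q²_{x,y}) and uses 1 − D as the mask loss, averaged over the positive grid cells; the sketch below assumes that form. Masks are flattened lists of soft predictions in [0, 1], and the small eps guarding against empty masks is an assumption added for numerical safety.

```python
from typing import List


def dice_coefficient(p: List[float], q: List[float], eps: float = 1e-6) -> float:
    """D(p, q) = 2 * sum(p * q) / (sum(p^2) + sum(q^2)), summed over all pixels (x, y)."""
    inter = sum(a * b for a, b in zip(p, q))
    denom = sum(a * a for a in p) + sum(b * b for b in q)
    return 2.0 * inter / (denom + eps)  # eps avoids division by zero for empty masks


def dice_loss(p: List[float], q: List[float]) -> float:
    return 1.0 - dice_coefficient(p, q)


def mask_loss(pred_masks: List[List[float]], gt_masks: List[List[float]],
              positive: List[bool]) -> float:
    """Mean Dice loss over the positive grid cells k = i * S + j; the indicator
    function drops negative cells, and the sum is divided by the number of positives."""
    n_pos = sum(positive)
    if n_pos == 0:
        return 0.0
    total = sum(dice_loss(m, g) for m, g, pos in zip(pred_masks, gt_masks, positive) if pos)
    return total / n_pos


print(round(dice_loss([1.0, 0.0], [1.0, 1.0]), 4))  # 0.3333
```

Unlike a per-pixel cross-entropy, the Dice loss is normalized by mask size, so small targets, which dominate STSD, are not overwhelmed by the background pixels.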

4.3. Evaluation Index

In object segmentation tasks, IoU (intersection over union) is usually used to determine whether a prediction is a positive sample. IoU is the ratio of the area of intersection between the predicted mask and the ground-truth mask to the area of their union. It can be expressed as follows:

where refers to the overlapping area of the predicted mask and the ground-truth mask, and refers to the area of their union. We set a threshold t; when the IoU is greater than t, the predicted mask is considered a positive sample.
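A minimal sketch of the IoU computation and the positive-sample test, for binary masks stored as flattened 0/1 lists; the function names are illustrative and t = 0.5 is only a default.

```python
from typing import List


def mask_iou(pred: List[int], gt: List[int]) -> float:
    """Intersection area / union area for two binary masks of equal length."""
    inter = sum(1 for a, b in zip(pred, gt) if a and b)
    union = sum(1 for a, b in zip(pred, gt) if a or b)
    return inter / union if union else 0.0


def is_positive(pred: List[int], gt: List[int], t: float = 0.5) -> bool:
    """The prediction counts as a positive sample when its IoU exceeds t."""
    return mask_iou(pred, gt) > t


print(mask_iou([1, 1, 1, 0], [1, 1, 0, 0]))     # 2 / 3
print(is_positive([1, 1, 0, 0], [0, 1, 1, 0]))  # IoU = 1/3, so False
```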

Target segmentation tasks are usually evaluated with two indices: precision and recall. Precision is the ratio of correctly classified positive samples to all predicted positive samples, and recall is the ratio of correctly classified positive samples to all actual positive samples. The two indices are calculated as follows:

where indicates true-positive cases, indicates false-positive cases and indicates false-negative cases. The precision–recall (PR) curve, with precision on the y-axis and recall on the x-axis, is a standard graphical tool for visualizing and evaluating segmentation performance. Average precision (AP) is the area under the PR curve, and the mAP (mean average precision) averages AP over all categories to quantify multi-category segmentation performance. In this paper, we follow the COCO mAP standard and report the mAP averaged over 10 IoU thresholds from 0.5 to 0.95 in increments of 0.05 (mAP0.5:0.95), as well as the mAP at t = 0.5 (mAP0.5) and t = 0.75 (mAP0.75). COCO also defines mAPS, mAPM and mAPL, which are constrained by target scale; however, all targets in STSD are small, so we do not use these indicators. AR (average recall) is the maximum recall given a fixed number of detections per image. As with mAP, COCO also defines scale-constrained variants of AR; we report only the unconstrained AR.
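A simplified sketch of how AP can be computed from a ranked list of detections, in the style of the COCO evaluation (101 recall points with a precision envelope). It assumes that the detections of one category have already been matched to ground-truth masks at a given IoU threshold t (the matching step is omitted) and that n_gt > 0; it is not the pycocotools implementation. mAP is then the mean of AP over categories, and mAP0.5:0.95 additionally averages over the thresholds in IOU_THRESHOLDS.

```python
from typing import List, Tuple


def precision_recall(tp_flags: List[bool], n_gt: int) -> Tuple[List[float], List[float]]:
    """tp_flags lists detections in descending score order: True = TP, False = FP."""
    tp = fp = 0
    precisions, recalls = [], []
    for is_tp in tp_flags:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))  # TP / (TP + FP)
        recalls.append(tp / n_gt)          # TP / (TP + FN), as TP + FN = n_gt
    return precisions, recalls


def average_precision(tp_flags: List[bool], n_gt: int) -> float:
    """COCO-style AP: mean interpolated precision at recall levels 0.00, 0.01, ..., 1.00."""
    precisions, recalls = precision_recall(tp_flags, n_gt)
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    total = 0.0
    for step in range(101):
        r = step / 100
        # Precision at the first point whose recall reaches r (0 if r is never reached).
        total += next((p for p, rec in zip(precisions, recalls) if rec >= r), 0.0)
    return total / 101


# The 10 IoU thresholds averaged in mAP0.5:0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

print(average_precision([True, False], n_gt=2))  # 51 / 101, about 0.505
```

In the usage line, only half of the ground truth is ever recalled, so the recall levels above 0.5 contribute zero precision and AP drops to about 0.5 even though the first detection is correct.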

FPS (frames per second) is typically used to measure the speed of an algorithm; we also use this metric to compare the real-time performance of each algorithm.

4.4. Ablation Experiments

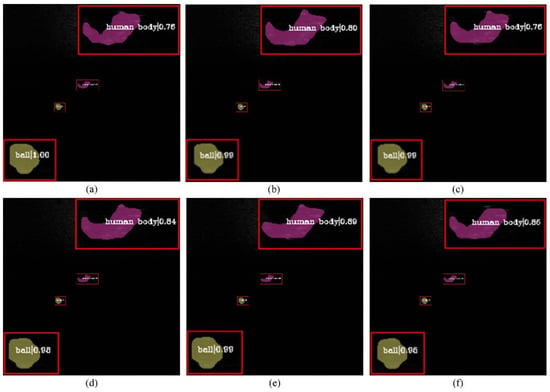

To obtain better results, we tried different values of n and embedded the GF-PSA module at different positions in the network. The GF-SPC module splits feature maps into n parts along the channel dimension, so the number of channels must be divisible by n. To ensure that each part of the feature maps contains enough information, n cannot be too large. We discuss six cases, defined by the value of n and the position of GF-PSA. The visual segmentation results of these cases are shown in Figure 6, where Model e has the highest precision for each target.

Figure 6.

The results of different values of n and the different positions of GF-PSA. (a–c): the GF-PSA is embedded between the ResNet and FPN; (d–f): the GF-PSA is embedded after FPN. (a,d) n = 2; (b,e) n = 4; (c,f) n = 8.

We conducted a quantitative analysis of these models and evaluated their effectiveness using mAP0.5:0.95, mAP0.5, mAP0.75, AR (average recall) and FPS. Table 4 records the experimental results of the different cases. As shown in Table 4, the speeds of the six models are all around 12 FPS, but their accuracy differs considerably. The value of n has a strong influence on the results, although accuracy does not increase monotonically with n: mAP0.5 is 70.6% (Model a) and 70.9% (Model d) when n = 2, reaches its highest values of 74.1% (Model b) and 74.9% (Model e) when n = 4, and drops to 67.5% (Model c) and 67.9% (Model f) when n = 8. The location of the GF-PSA module has little effect on accuracy: for a fixed n, moving GF-PSA changes mAP0.5 by at most 0.8 percentage points.

Table 4.

Segmentation results on the STSD dataset. FPS is evaluated using CUDA 11.0 and cuDNN 8.2 on a GeForce RTX graphics card. Bold numbers indicate the best value of each indicator.

4.5. Comparative Experiment

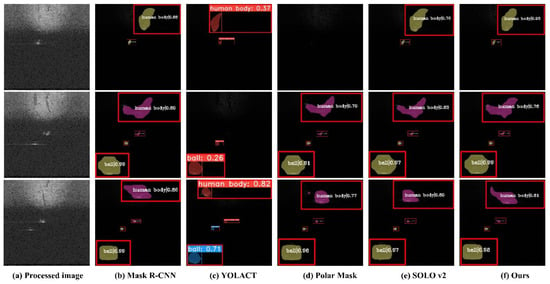

To verify the performance of the proposed Attentive SOLO, we compare it with Mask R-CNN, YOLACT, PolarMask and SOLOv2 on STSD. Some experimental results are shown in Figure 7.

Figure 7a shows the sonar images after logarithmic transformation. Because the targets are too small to discern, the masks and precision scores are enlarged in the corner of each result map. Figure 7f shows the results of Attentive SOLO, which are compared with those of Mask R-CNN (Figure 7b), YOLACT (Figure 7c), PolarMask (Figure 7d) and SOLOv2 (Figure 7e). As Figure 7 shows, in scene (A), PolarMask produces a missed detection, and Attentive SOLO obtains the highest precision (0.93) of all the algorithms. In scene (B), YOLACT produces a missed detection and low precision, while the other algorithms give similar results. In scene (C), all algorithms detect the target accurately. We also performed a quantitative analysis of these algorithms; the results are listed in Table 5. The mAP0.5 of Attentive SOLO is 7.3% higher than that of YOLACT, 6.5% higher than PolarMask, 3.1% higher than Mask R-CNN and 3.7% higher than SOLOv2. Attentive SOLO also achieves the best results on the other evaluation metrics.
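The text does not specify the logarithmic transformation used to produce Figure 7a. A common form is s = c·log(1 + r), with c chosen so that the brightest input maps to 255; the sketch below assumes that form, non-negative raw intensities and an 8-bit output, and the function name `log_transform` is hypothetical.

```python
import numpy as np


def log_transform(intensity: np.ndarray) -> np.ndarray:
    """Map raw sonar intensities to 8-bit display values with s = c * log(1 + r).

    Assumed form: c scales the maximum input to 255; inputs are assumed non-negative.
    """
    r = intensity.astype(np.float64)
    r_max = r.max()
    if r_max <= 0:
        return np.zeros(r.shape, dtype=np.uint8)
    c = 255.0 / np.log1p(r_max)
    s = c * np.log1p(r)
    return np.clip(np.round(s), 0, 255).astype(np.uint8)


# Weak echoes are stretched while strong returns are compressed.
raw = np.array([[0, 1, 10], [100, 1000, 65535]], dtype=np.uint16)
print(log_transform(raw))
```

Compressing the dynamic range in this way makes weak returns visible next to strong reflections, which is the usual reason for applying a log transform before displaying sonar images.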

Figure 7.

Segmentation results of Mask R-CNN (b), YOLACT (c), PolarMask (d), SOLOv2 (e) and Attentive SOLO (f); (a) shows the processed images. For Attentive SOLO, we set n = 4 and embed the GF-PSA module between ResNet and FPN.

Table 5.

Evaluation results of different segmentation models. Bold numbers indicate the best value for each metric.

In addition, we compared the proposed GF-PSA module with the SENet, CBAM, self-attention, DANet and PSA attention modules. All attention mechanisms were inserted between ResNet and FPN, and Table 6 records the results of adding each of them to SOLOv2. The GF-PSA module proposed in this paper performs best on all four metrics. As Table 6 shows, the mAP0.5 of GF-PSA is 9.3% higher than that of CBAM, 5.1% higher than DANet, 4.5% higher than PSA and 3.5% higher than SENet. These results indicate that gated fusion effectively improves the performance of PSA and is more effective than the other attention mechanisms tested.
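The gated fusion idea can be sketched with the update rule of gated fully fusion (GFF): each of n inputs X_i receives a gate G_i = sigmoid(w_i * X_i) and is updated as X̃_i = (1 + G_i) ⊙ X_i + (1 − G_i) ⊙ Σ_{j≠i} G_j ⊙ X_j. How GF-PSA applies this inside PSA may differ; in this NumPy sketch the inputs are assumed to be the n same-shaped channel groups, and each gate comes from a hypothetical per-branch 1×1 projection (a weight vector over channels) producing a single-channel gate map.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def gated_full_fusion(features: list, weights: list) -> list:
    """GFF-style fusion of n same-shaped (C, H, W) feature maps.

    weights[i] is a length-C vector acting as a hypothetical 1x1 conv that
    produces a single-channel gate map G_i for features[i].
    """
    # G_i has shape (1, H, W) so it broadcasts over the channel axis.
    gates = [sigmoid(np.einsum("c,chw->hw", w, x))[None] for w, x in zip(weights, features)]
    gated = [g * x for g, x in zip(gates, features)]  # G_j * X_j for every branch
    total = sum(gated)
    fused = []
    for g, x, gx in zip(gates, features, gated):
        others = total - gx  # sum over j != i of G_j * X_j
        fused.append((1.0 + g) * x + (1.0 - g) * others)
    return fused


rng = np.random.default_rng(0)
n, c, h, w = 4, 16, 8, 8
feats = [rng.standard_normal((c, h, w)) for _ in range(n)]
ws = [rng.standard_normal(c) for _ in range(n)]
out = gated_full_fusion(feats, ws)
print(len(out), out[0].shape)  # 4 (16, 8, 8)
```

A gate near 1 keeps a branch's own feature (scaled up to 2×) and closes it to the other branches, while a gate near 0 lets it take in the gated features of the others; a branch with a low gate also contributes little to the rest. This selective exchange, rather than a uniform sum, is what allows gating to limit the spread of noisy responses during fusion.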

Table 6.

Evaluation results of adding different attention mechanisms. Bold numbers indicate the best value for each metric.

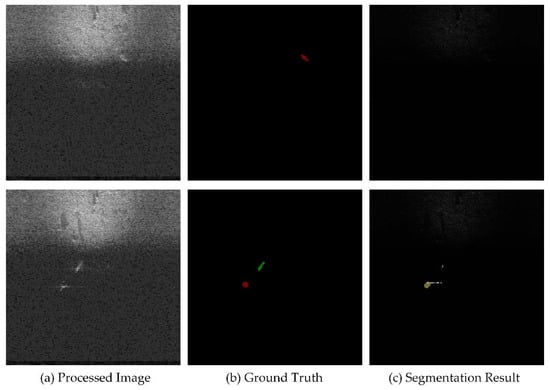

Although Attentive SOLO outperforms the existing methods, it fails to segment some difficult images accurately, as shown in Figure 8. Most of these failure cases involve the “human body” category. A likely reason is that, during data collection, the manikin placed in the sea changed posture greatly with the ocean current, which makes the features of the human body difficult to learn.

Figure 8.

Some failure cases, all involving the “human body” category: (a) the processed image; (b) the ground truth; (c) the failed result.

5. Conclusions

Building on SOLOv2, this paper improves multi-scale prediction using PSA and GFF and proposes Attentive SOLO, a sonar target segmentation algorithm suited to complex environments. Attentive SOLO uses gated fusion to improve the fusion mechanism of PSA and adds the resulting module to SOLOv2, which reduces information redundancy in feature fusion and strengthens context information transmission and network feature extraction. Experiments on real sonar data show that Attentive SOLO achieves higher segmentation accuracy than SOLOv2 and the other instance segmentation algorithms tested. In addition, we constructed and annotated a dataset named STSD for the sonar image segmentation task. Future work will focus on optimizing the algorithm, reducing the number of parameters while maintaining segmentation accuracy, and improving inference speed.

Author Contributions

Conceptualization, H.H. and Z.Z.; methodology, H.H.; software, H.H. and B.S.; validation, H.H., B.S. and J.Z.; formal analysis, H.H. and P.W.; data curation, H.H., J.Z. and P.W.; writing—original draft preparation, H.H.; writing—review and editing, Z.Z., P.W. and B.S.; project administration, Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Hunan Province of China, grant number 2020JJ5672; National Natural Science Foundation of China, grant number 52101377; and Hunan Province Innovation Foundation for Postgraduate, grant number CX20210020.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not currently publicly available owing to limited maintenance capacity.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chen, Z.; Wang, Y.; Tian, W.; Liu, J.; Zhou, Y.; Shen, J. Underwater sonar image segmentation combining pixel-level and region-level information. Comput. Electr. Eng. 2022, 100, 107853.

- Ye, X.; Zhang, Z.; Liu, P.; Guan, H. Sonar image segmentation based on GMRF and level-set models. Ocean Eng. 2010, 37, 891–901.

- Song, Y.; Liu, P. Segmentation of sonar images with intensity inhomogeneity based on improved MRF. Appl. Acoust. 2020, 158, 107051.

- Abu, A.; Diamant, R. Enhanced fuzzy-based local information algorithm for sonar image segmentation. IEEE Trans. Image Processing 2020, 29, 445–460.

- Wang, X.; Wang, L.; Li, G.; Xie, X. A Robust and Fast Method for Sidescan Sonar Image Segmentation Based on Region Growing. Sensors 2021, 21, 6960.

- Liu, P.; Song, Y. Segmentation of sonar imagery using convolutional neural networks and Markov random field. Multidimens. Syst. Signal Processing 2020, 31, 21–47.

- Zhao, X.; Qin, R.; Zhang, Q.; Yu, F.; Wang, Q.; He, B. DcNet: Dilated Convolutional Neural Networks for Side-Scan Sonar Image Semantic Segmentation. J. Ocean Univ. China 2021, 20, 1089–1096.

- Wu, M.; Wang, Q.; Rigall, E.; Li, K.; Zhu, W.; He, B.; Yan, T. ECNet: Efficient Convolutional Networks for Side Scan Sonar Image Segmentation. Sensors 2019, 19, 2009.

- Wang, Z.; Guo, J.; Huang, W.; Zhang, S. Side-Scan Sonar Image Segmentation Based on Multi-Channel Fusion Convolution Neural Networks. IEEE Sens. J. 2022, 22, 5911–5928.

- Jiao, S.; Zhao, C.; Xin, Y. Research on Convolutional Neural Network Model for Sonar Image Segmentation. MATEC Web Conf. 2018, 220, 10004.

- Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

- Wang, X.; Zhang, R.; Kong, T.; Li, L.; Shen, C. SOLOv2: Dynamic and Fast Instance Segmentation. Adv. Neural Inf. Processing Syst. 2020, 33, 17721–17732.

- Li, X.; Zhao, H.; Han, L.; Tong, Y.; Tan, S.; Yang, K. Gated Fully Fusion for Semantic Segmentation. arXiv 2020, arXiv:1904.01803v2.

- Zhang, H.; Zu, K.; Lu, J.; Zou, Y.; Meng, D. EPSANet: An Efficient Pyramid Split Attention Block on Convolutional Neural Network. arXiv 2021, arXiv:2105.14447v1.

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; Volume 42, pp. 386–397.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. arXiv 2018, arXiv:1803.01534v4.

- Liu, S.; Jia, J.; Fidler, S.; Urtasun, R. SGN: Sequential Grouping Networks for Instance Segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

- Gao, N.; Shan, Y.; Wang, Y.; Zhao, X.; Huang, K. SSAP: Single-Shot Instance Segmentation with Affinity Pyramid. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; Volume 31, pp. 661–673.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2016, arXiv:1506.02640v5.

- Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. YOLACT: Real-time Instance Segmentation. arXiv 2019, arXiv:1904.02689v2.

- Wang, X.; Zhang, R.; Shen, C.; Kong, T.; Li, L. SOLO: A Simple Framework for Instance Segmentation. arXiv 2021, arXiv:2106.15947v1.

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. arXiv 2019, arXiv:1904.01355v5.

- Xie, E.; Sun, P.; Song, X.; Wang, W.; Liang, D.; Shen, C.; Luo, P. PolarMask: Single Shot Instance Segmentation with Polar Representation. arXiv 2020, arXiv:1909.13226v4.

- Sofiiuk, K.; Barinova, O.; Konushin, A. AdaptIS: Adaptive Instance Selection Network. arXiv 2019, arXiv:1909.07829v1.

- Zhou, T.; Li, J.; Li, X.; Shao, L. Target-Aware Object Discovery and Association for Unsupervised Video Multi-Object Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021.

- Zhou, T.; Wang, W.; Liu, S.; Yang, Y.; Van Gool, L. Differentiable Multi-Granularity Human Representation Learning for Instance-Aware Human Semantic Parsing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021.

- Xu, F.; Huang, H.; Wu, J.; Jiang, L. Active Mask-Box Scoring R-CNN for Sonar Image Instance Segmentation. Electronics 2022, 11, 2048.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; Volume 42, pp. 2011–2023.

- Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial Transformer Networks. arXiv 2015, arXiv:1506.02025v3.

- Woo, S.; Park, J.; Lee, J.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv 2018, arXiv:1807.06521v2.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual Attention Network for Scene Segmentation. arXiv 2019, arXiv:1809.02983v4.

- Hariharan, B.; Arbelaez, P.; Girshick, R.; Malik, J. Hypercolumns for Object Segmentation and Fine-grained Localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv 2015, arXiv:1505.04597v1.

- Zhang, Z.; Zhang, X.; Peng, C.; Xue, X.; Sun, J. Exfuse: Enhancing feature fusion for semantic segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Lin, D.; Ji, Y.; Lischinski, D.; Cohen-Or, D.; Huang, H. Multi-scale context intertwining for semantic segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Kong, W.; Hong, J.; Jia, M.; Yao, J.; Cong, W.; Hu, H.; Zhang, H. YOLOv3-DPFIN: A Dual-Path Feature Fusion Neural Network for Robust Real-Time Sonar Target Detection. IEEE Sens. J. 2020, 20, 3745–3756.

- Liu, D.; Wang, Y.; Ji, Y.; Tsuchiya, H.; Yamashita, A.; Asama, H. CycleGAN-based realistic image dataset generation for forward-looking sonar. Adv. Robot. 2021, 35, 242–254.

- Singh, D.; Valdenegro-Toro, M. The Marine Debris Dataset for Forward-Looking Sonar Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; Volume 42, pp. 318–327.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).