- Article

Applying Nondestructive Ultrasonic Technique in the Metrological Control of Heat Treatment of AISI 1045 Steels

- Carlos Otávio Damas Martins,

- José Carlos Bizerra Costa Junior and

- Jorge Luís Braz Medeiros

- + 1 author

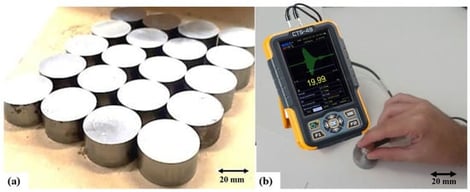

The characterization of mechanical properties in heat-treated carbon steels is crucial for quality control, but it traditionally relies on destructive testing. This study evaluated the reliability of a non-destructive ultrasonic technique as a metrological alternative for AISI 1045 steel. Samples subjected to six heat treatment conditions (spanning annealing, normalizing, quenching, and tempering) were characterized by hardness testing, metallography, and ultrasound. In linear regression analyses, the multiparametric model combining sound velocity, attenuation, and full width at half maximum (FWHM) fit the measured hardness closely, with a coefficient of determination of R² = 96.687%. The metrological robustness of the model was validated by quantifying the expanded uncertainty (U), following the GUM (Guide to the Expression of Uncertainty in Measurement). It is concluded that the multiparametric ultrasonic methodology is an accurate, robust, and non-destructive alternative for the quantitative determination of Vickers hardness in AISI 1045 steels, contributing to the optimization of industrial processes and to metrological rigor.
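To make the regression and uncertainty steps concrete, the following is a minimal sketch and not the authors' implementation. It assumes an ordinary least squares model of the form HV = b0 + b1·Δv + b2·α + b3·FWHM, where Δv is a velocity offset from an arbitrary reference. It also assumes uncorrelated uncertainty contributions with unit sensitivity coefficients and a coverage factor k = 2, which the abstract does not state. All function names are hypothetical and all numbers are synthetic placeholders, not measurements from the study.

```python
import math

def solve_linear_system(a, b):
    """Solve a·x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x

def fit_multilinear(features, targets):
    """Ordinary least squares via the normal equations; returns [b0, b1, ...]."""
    rows = [[1.0] + list(f) for f in features]  # prepend intercept column
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(p)]
    return solve_linear_system(xtx, xty)

def predict(coeffs, feature):
    """Evaluate the fitted linear model for one feature tuple."""
    return coeffs[0] + sum(c * x for c, x in zip(coeffs[1:], feature))

def r_squared(coeffs, features, targets):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(targets) / len(targets)
    ss_res = sum((y - predict(coeffs, f)) ** 2 for f, y in zip(features, targets))
    ss_tot = sum((y - mean_y) ** 2 for y in targets)
    return 1.0 - ss_res / ss_tot

def expanded_uncertainty(standard_uncertainties, k=2.0):
    """Combine uncorrelated standard uncertainties in quadrature, then scale by k."""
    u_c = math.sqrt(sum(u ** 2 for u in standard_uncertainties))
    return k * u_c

# SYNTHETIC placeholder data: (velocity offset in m/s, attenuation, FWHM).
features = [
    (10.0, 0.10, 1.2), (5.0, 0.14, 1.5), (-3.0, 0.20, 1.1),
    (-12.0, 0.26, 1.8), (-20.0, 0.31, 1.4), (-28.0, 0.40, 2.0),
]
# Hardness values generated from an assumed linear law, so the fit is exact.
assumed = [300.0, -4.0, 200.0, 15.0]
hardness = [predict(assumed, f) for f in features]

coeffs = fit_multilinear(features, hardness)
print("coefficients:", [round(c, 4) for c in coeffs])
print("R^2:", round(r_squared(coeffs, features, hardness), 6))
print("U (k=2):", expanded_uncertainty([3.0, 4.0]))
```

Because the synthetic hardness values are generated from the assumed linear law, the fit recovers those coefficients almost exactly. Real measurements would scatter around the fitted plane and give R² below 1. Absolute velocities of several thousand m/s would make the normal equations poorly conditioned, which is why the sketch works with an offset rather than raw velocity.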

24 February 2026