- Article

Visual Evaluation Strategies in Art Image Viewing: An Eye-Tracking Comparison of Art-Educated and Non-Art Participants

- Adem Korkmaz

- Sevinc Gülsecen

- Grigor Mihaylov

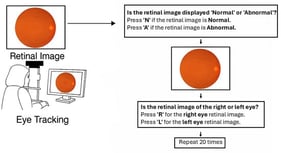

Understanding how tacit knowledge embedded in visual materials is accessed and used during evaluation tasks remains a key challenge in human–computer interaction and visual expertise research. Although eye-tracking studies have identified systematic differences between experts and novices, findings remain inconsistent, particularly in art-related visual evaluation contexts. This study examines whether tacit aspects of visual evaluation can be inferred from gaze behavior by comparing individuals with and without formal art education. Visual evaluation was assessed using a structured, prompt-based task in which participants inspected artistic images and responded to items targeting specific visual elements. Eye movements were recorded using a screen-based eye-tracking system. Areas of Interest (AOIs) corresponding to correct-answer regions were defined a priori based on expert judgment and item prompts. Both AOI-level metrics (e.g., fixation count and the mean and total durations of visits and gaze) and image-level metrics (e.g., fixation count, saccade count, and pupil size) were analyzed using parametric or non-parametric statistical tests, as appropriate. The results showed that participants with an art-education background produced more fixations within AOIs, exhibited longer mean and total AOI visit and gaze durations, and made fewer saccades than participants without art education. These patterns indicate more systematic and goal-directed gaze behavior during visual evaluation, suggesting that formal art education may shape tacit visual evaluation strategies. The findings also highlight the potential of eye tracking as a methodological tool for studying expertise-related differences in visual evaluation processes.
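To make the AOI-level metrics named in the abstract concrete, the following is a minimal sketch of how fixation count, total gaze duration, and mean visit duration might be derived from a sequence of fixations. It is not the authors' pipeline. The rectangular AOI, the `Fixation` fields, and the function names are all assumptions made for illustration, and a visit is approximated here as a run of consecutive fixations inside the AOI, ignoring the saccade time that eye-tracking software may include.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Fixation:
    """One fixation; field names are hypothetical, not from the study."""
    x: float            # horizontal screen position in pixels
    y: float            # vertical screen position in pixels
    duration_ms: float  # fixation duration in milliseconds


# Assumed AOI shape: an axis-aligned rectangle (left, top, right, bottom).
AOI = Tuple[float, float, float, float]


def in_aoi(fix: Fixation, aoi: AOI) -> bool:
    """Return True if the fixation falls inside the rectangular AOI."""
    left, top, right, bottom = aoi
    return left <= fix.x <= right and top <= fix.y <= bottom


def aoi_metrics(fixations: List[Fixation], aoi: AOI) -> Dict[str, float]:
    """Compute simple AOI-level metrics from time-ordered fixations."""
    fixation_count = 0
    total_gaze_ms = 0.0
    visit_durations: List[float] = []
    current_visit = None  # running duration of the visit in progress

    for fix in fixations:
        if in_aoi(fix, aoi):
            fixation_count += 1
            total_gaze_ms += fix.duration_ms
            if current_visit is None:
                current_visit = 0.0  # a new visit starts here
            current_visit += fix.duration_ms
        elif current_visit is not None:
            # Gaze left the AOI, so the visit in progress ends.
            visit_durations.append(current_visit)
            current_visit = None

    if current_visit is not None:
        visit_durations.append(current_visit)

    visit_count = len(visit_durations)
    mean_visit_ms = sum(visit_durations) / visit_count if visit_count else 0.0
    return {
        "fixation_count": fixation_count,
        "total_gaze_ms": total_gaze_ms,
        "visit_count": visit_count,
        "mean_visit_ms": mean_visit_ms,
    }
```

Under these assumptions, a participant who returns to the correct-answer region repeatedly and dwells there would show a higher `fixation_count` and larger `total_gaze_ms` and `mean_visit_ms` for that AOI, which is the direction of the group difference the abstract reports for art-educated participants.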

30 January 2026