Head and Eye Movements During Pedestrian Crossing in Patients with Visual Impairment: A Virtual Reality Eye Tracking Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Subjects

- Central visual impairment: BSE uncorrected VA ≤ 0.6 and ≥0.05 (Snellen decimal); binocular VF radius > 50°.

- Peripheral visual impairment: BSE uncorrected VA ≥ 0.4; binocular VF radius ≤ 50° and ≥5°.

- Combined visual impairment: BSE uncorrected VA < 0.4 and ≥0.05; binocular VF radius ≤ 50° and ≥5°.
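The three inclusion rules above do not overlap. The 50° field radius separates the central group from the other two, and the 0.4 acuity cut separates the peripheral group from the combined group. Below is a minimal Python sketch of that decision logic. It assumes VA is given as a decimal (Snellen decimal) value and VF as a binocular radius in degrees. The function name `impairment_group` is hypothetical and is not taken from the study's own analysis code.

```python
from typing import Optional


def impairment_group(va_decimal: float, vf_radius_deg: float) -> Optional[str]:
    """Assign a study group from BSE uncorrected VA (decimal) and binocular VF radius (deg).

    Hypothetical helper mirroring the listed inclusion rules; returns None when
    the values fall outside all three groups.
    """
    if vf_radius_deg > 50:
        # Field radius above 50 deg: only the central group is possible.
        if 0.05 <= va_decimal <= 0.6:
            return "central"
        return None
    if vf_radius_deg < 5:
        # Field radius below 5 deg is outside every group.
        return None
    # 5 deg <= VF radius <= 50 deg: acuity decides between the two field-loss groups.
    if va_decimal >= 0.4:
        return "peripheral"
    if va_decimal >= 0.05:
        return "combined"
    # Acuity below 0.05 is outside every group.
    return None


print(impairment_group(0.3, 80))   # central
print(impairment_group(0.85, 38))  # peripheral
print(impairment_group(0.25, 10))  # combined
```

The peripheral group has no upper acuity bound. A patient with VA 1.0 and a 38° field radius would therefore be classed as peripheral, whereas VA above 0.6 with a field radius above 50° meets none of the rules.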

2.2. Visual Acuity Testing and Visual Field Testing and Imaging

2.3. Visual Impairment Categories

2.4. Cognitive Impairment Screening

2.5. Traffic Orientation Testing

2.5.1. VR Apparatus (Headset and Eye Tracking)

2.5.2. Tasks and Recordings

2.6. Statistical Analysis

3. Results

3.1. Participant Demographics

3.2. Visual Task Performance

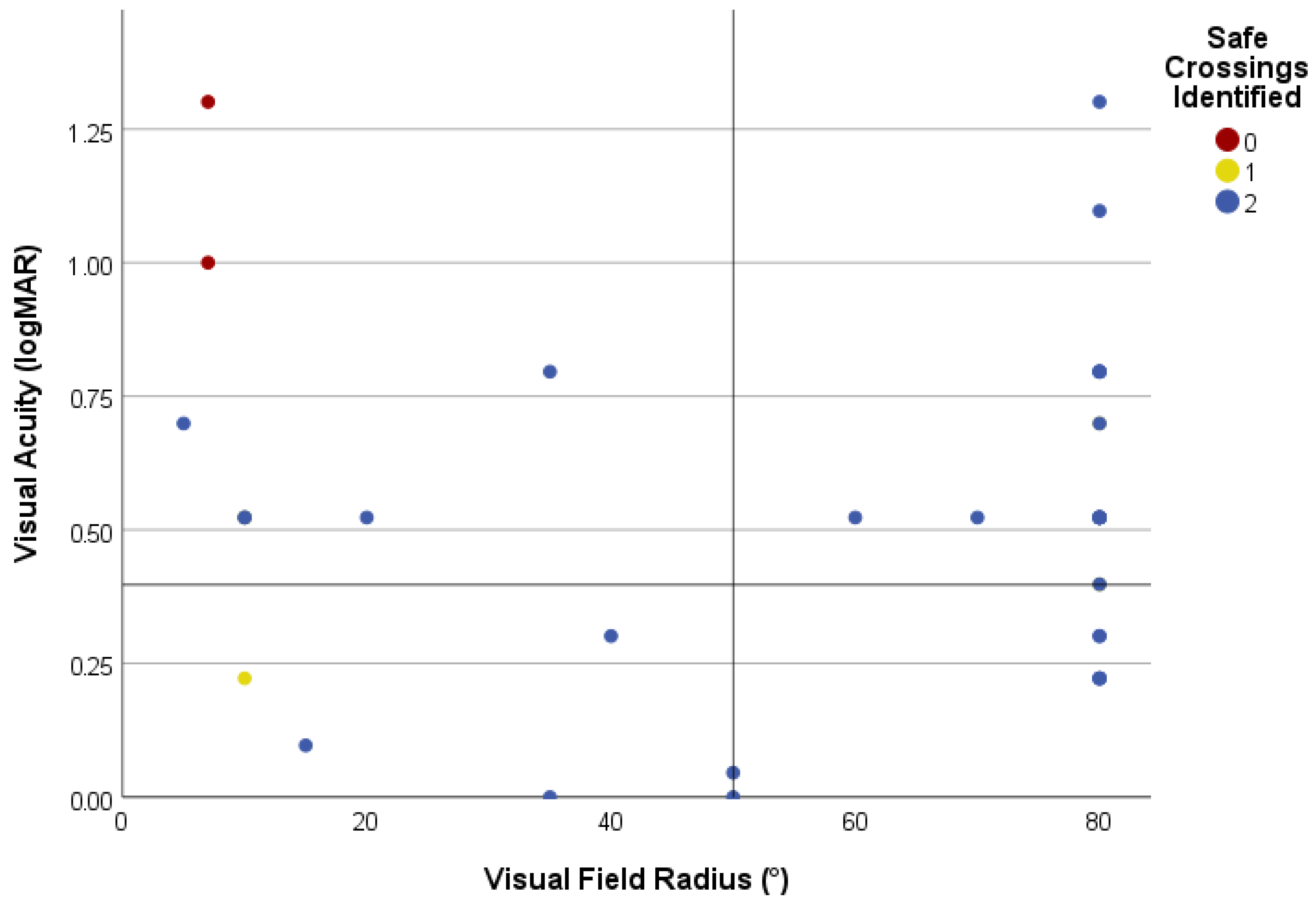

3.2.1. Identification of Safe Road Crossing

3.2.2. Identification of Safe Road Crossing by Type of Visual Impairment

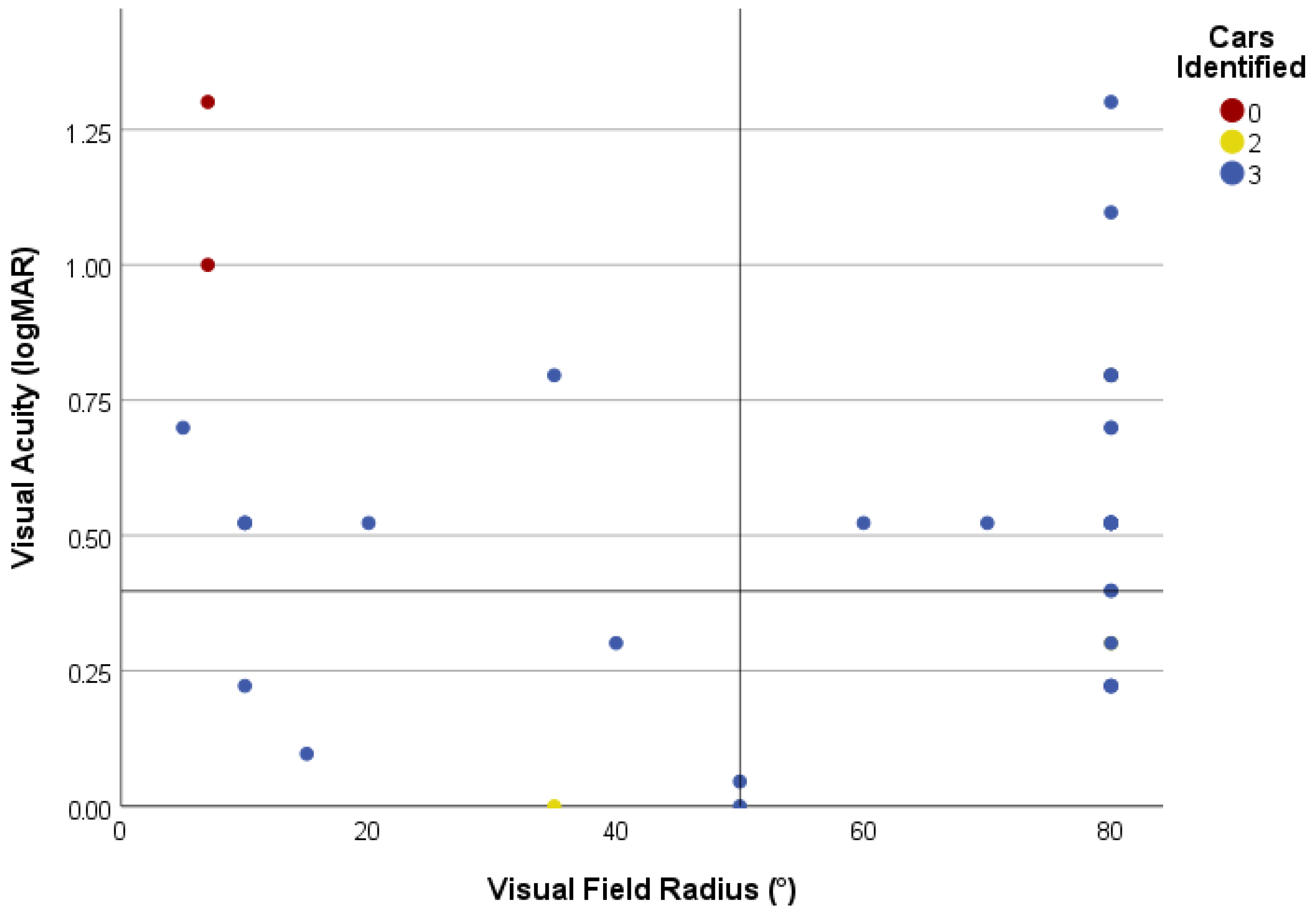

3.2.3. Car Counting

3.3. Eye and Head Movements

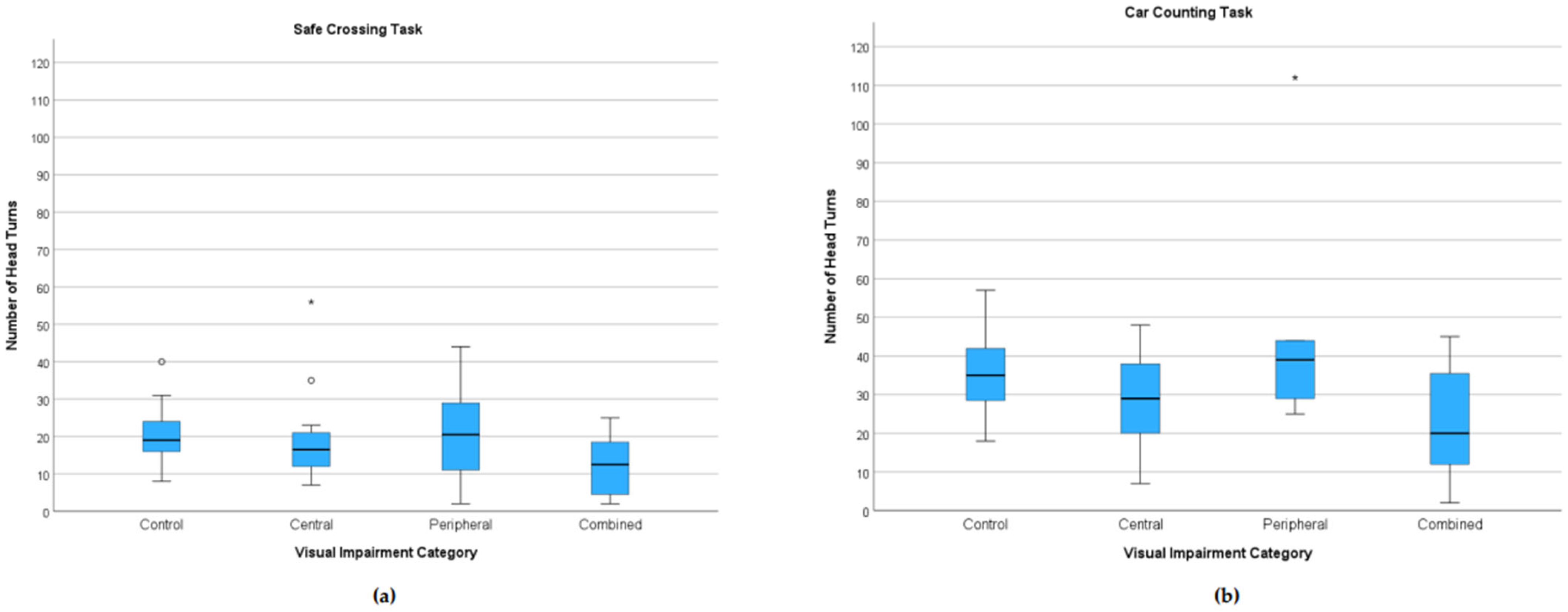

3.3.1. Head Turns

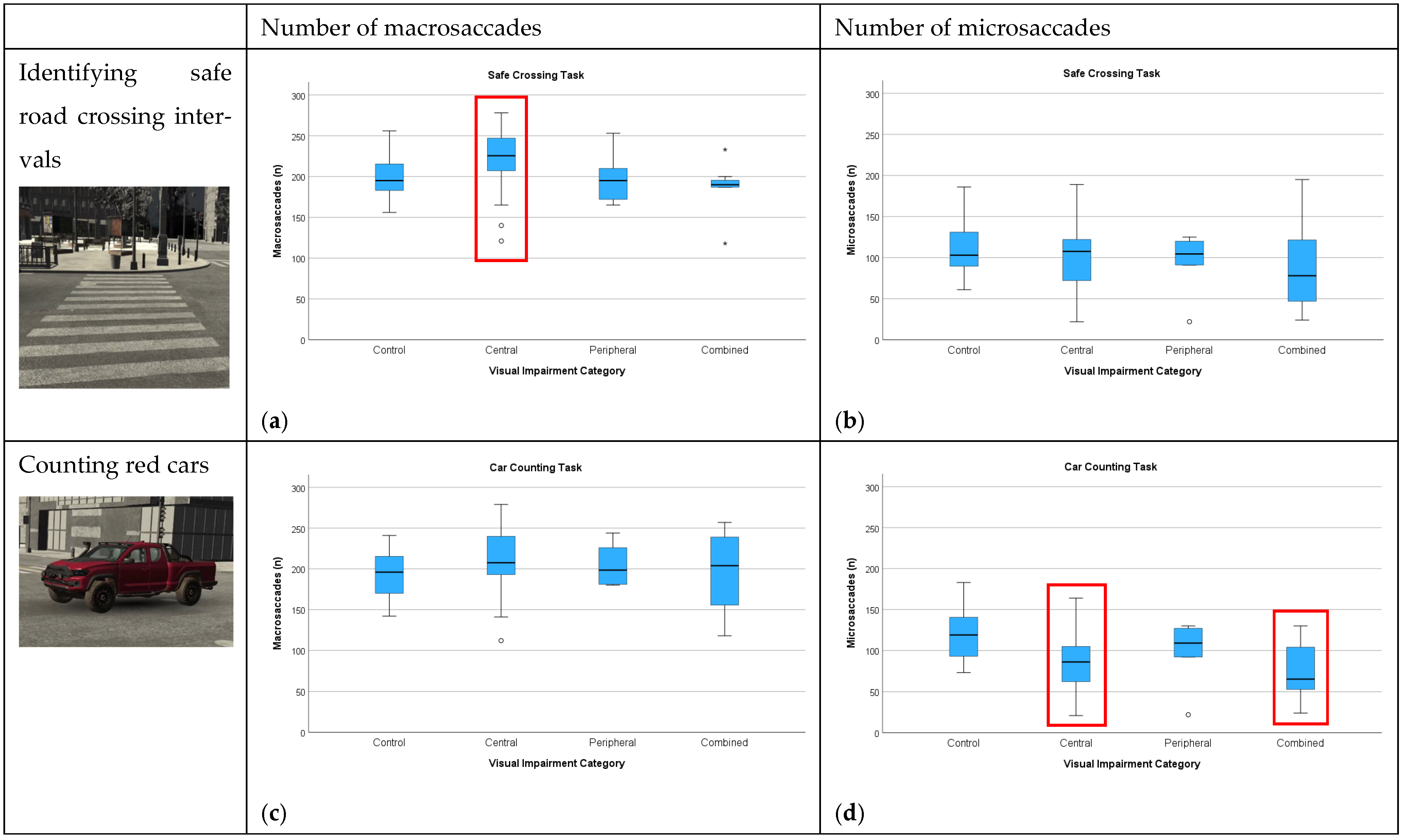

3.3.2. Number of Macro- and Microsaccades

3.3.3. Saccade Kinematics

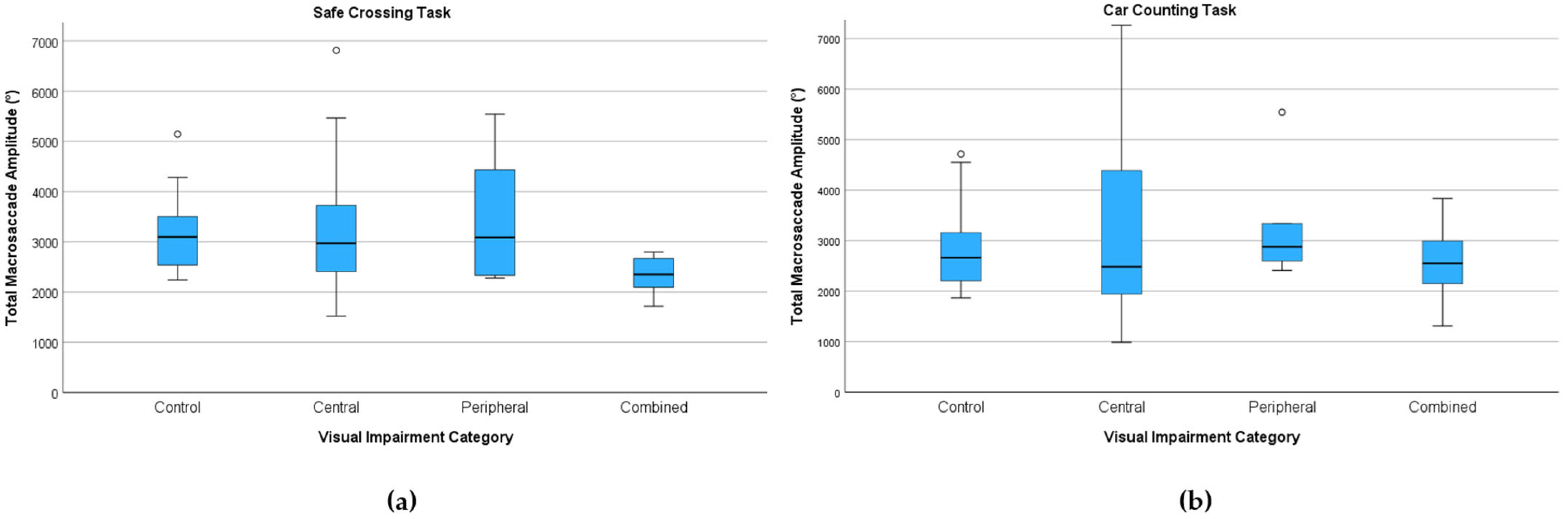

Panels: total macrosaccade amplitude (°); average microsaccade velocity (°/s).

3.3.4. Visual Task Performance by WHO Visual Impairment Category (Exploratory)

4. Discussion

4.1. Visual Task Performance

4.2. Head and Eye Movement Analysis

4.3. Categorization of Visual Impairment

4.4. Implications for Functional Classification and the Potential of VR Assessment

4.5. Strengths and Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Category of Visual Impairment | pVA (decimal) and VF Radius (°) Criteria | Number of Patients |
|---|---|---|
| Below normal but above threshold of WHO low-vision criteria | pVA ≤ 0.6 and ≥ 0.3, or VF < 80 and > 20 | 12 |
| 1 (low vision) | pVA < 0.3 and ≥ 0.1 | 17 |
| 2 (low vision) | pVA < 0.1 and ≥ 0.05, or VF ≤ 20 and > 10 | 3 |
| 3 (blindness) | pVA < 0.05 and ≥ 0.02, or VF ≤ 10 and > 5 | 7 |
| 4 (blindness) | pVA < 0.02 and ≥ light perception, or VF ≤ 5 | 1 |
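Each row of this table combines an acuity criterion and a field criterion with "or". Because the categories are ordinal, one plausible reading is that a patient receives the more severe of the two categories. That rule is an assumption in the minimal Python sketch below, not a statement of the authors' procedure. pVA is taken as a decimal value and VF as a radius in degrees. The function names are hypothetical, and `None` stands for values that meet no row of the table.

```python
from typing import Optional


def acuity_category(pva: float) -> Optional[int]:
    """Category from pVA alone (0 = below normal but above WHO low-vision threshold)."""
    if pva > 0.6:
        return None
    if pva >= 0.3:
        return 0
    if pva >= 0.1:
        return 1
    if pva >= 0.05:
        return 2
    if pva >= 0.02:
        return 3
    return 4  # assumes at least light perception; no-light-perception is not modelled


def field_category(vf_radius: float) -> Optional[int]:
    """Category from VF radius (deg) alone; the table gives no VF criterion for category 1."""
    if vf_radius >= 80:
        return None
    if vf_radius > 20:
        return 0
    if vf_radius > 10:
        return 2
    if vf_radius > 5:
        return 3
    return 4


def who_category(pva: float, vf_radius: float) -> Optional[int]:
    """Assumed rule: take the more severe of the acuity and field categories."""
    cats = [c for c in (acuity_category(pva), field_category(vf_radius)) if c is not None]
    return max(cats) if cats else None


print(who_category(0.25, 10))  # 3
```

Under this rule, a patient with pVA 0.25 and a 10° field radius is placed in category 3 by the field criterion, even though acuity alone would give category 1.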

| | Controls | Patients | Patients with Central Visual Impairment | Patients with Peripheral Visual Impairment | Patients with Combined Visual Impairment |
|---|---|---|---|---|---|
| N | 19 | 40 | 26 | 6 | 8 |
| Age (years), median [min–max] | 50.0 [21.0–86.0] | 71.5 [25.0–90.0] | 78.5 [25.0–90.0] | 55.5 [27.0–71.0] | 56.5 [32.0–76.0] |
| Binocular uncorrected VA, median [min–max] (decimal/logMAR) | >0.8 */<0.10 * | 0.30 [0.05–1.00]/0.52 [0.00–1.30] | 0.30 [0.05–0.60]/0.52 [0.22–1.30] | 0.85 [0.50–1.00]/0.07 [0.00–0.30] | 0.25 [0.05–0.30]/0.61 [0.52–1.30] |
| Visual field radius (°), median [min–max] | >80 * | 80 [5–80] | 80 [60–80] | 38 [10–50] | 10 [5–35] |
| MoCA (0–22), median [min–max] | 22 [20–22] | 20 [15–22] | 20 [15–22] | 21 [20–21] | 20 [17–22] |
| WHO categories of visual impairment | | | | | |
| Not categorized by WHO (n) | N/A | 12 | 8 | 4 | 0 |
| WHO category 1 (n) | N/A | 17 | 16 | 0 | 1 |
| WHO category 2 (n) | N/A | 3 | 1 | 1 | 1 |
| WHO category 3 (n) | N/A | 7 | 1 | 1 | 5 |
| WHO category 4 (n) | N/A | 1 | 0 | 0 | 1 |
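The VA row reports each median twice, as decimal VA and as logMAR, where logMAR = −log10(decimal VA). The logarithm is monotonic, so with an odd group size the median logMAR equals the logMAR of the median decimal VA. With an even group size, the median averages the two middle values, and the two summaries can differ slightly. The sketch below illustrates this with hypothetical acuities, not study data. The result is consistent with the 0.25/0.61 pair in the combined group (n = 8): −log10(0.25) alone would give 0.60. The paper's exact computation is not stated here.

```python
import math
from statistics import median


def decimal_to_logmar(va_decimal: float) -> float:
    """Convert decimal VA to logMAR: logMAR = -log10(decimal VA)."""
    if va_decimal <= 0:
        raise ValueError("decimal VA must be positive")
    return -math.log10(va_decimal)


# Hypothetical acuities for illustration only (not study data); an even count of values.
va = [0.1, 0.2, 0.3, 0.5]

median_decimal = median(va)  # mean of the two middle values, 0.2 and 0.3
logmar_of_median = decimal_to_logmar(median_decimal)
median_of_logmar = median([decimal_to_logmar(v) for v in va])

print(f"{median_decimal:.2f} -> {logmar_of_median:.2f} logMAR")  # 0.25 -> 0.60 logMAR
print(f"median logMAR: {median_of_logmar:.2f}")                  # median logMAR: 0.61
```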

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mervic, M.; Grašič, E.; Jaki Mekjavić, P.; Vidovič Valentinčič, N.; Fakin, A. Head and Eye Movements During Pedestrian Crossing in Patients with Visual Impairment: A Virtual Reality Eye Tracking Study. J. Eye Mov. Res. 2025, 18, 55. https://doi.org/10.3390/jemr18050055
