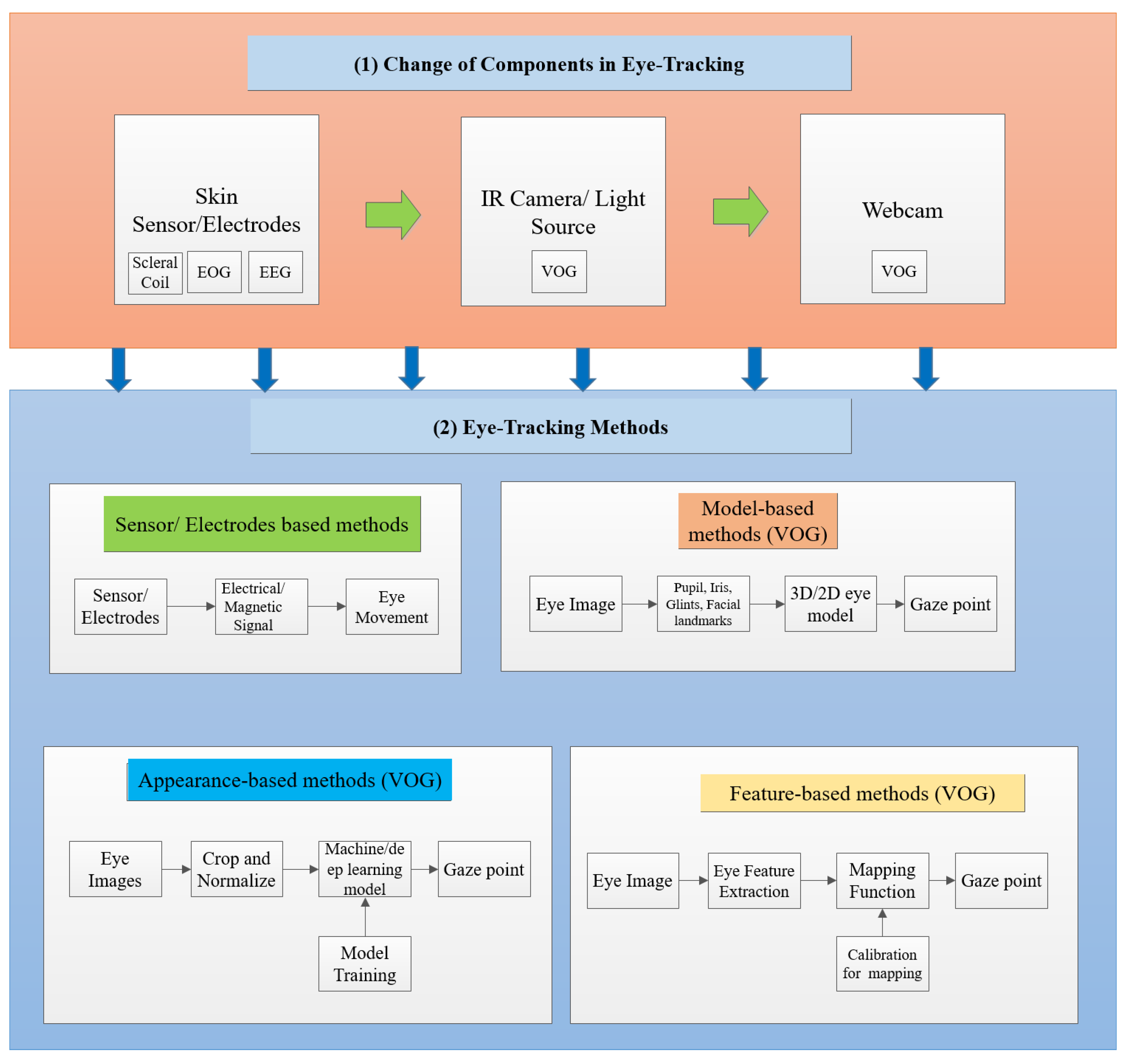

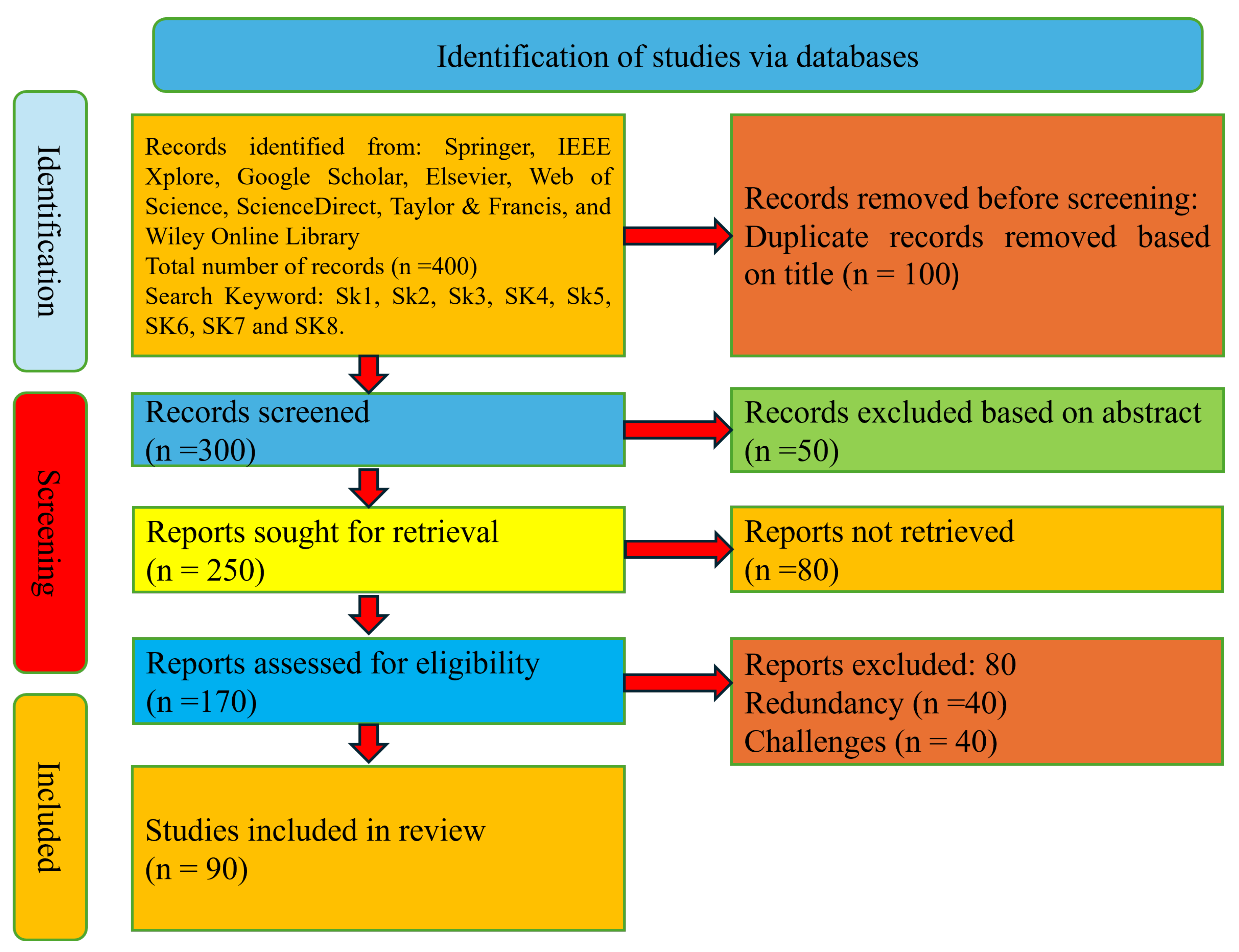

5.4.1. Model-Based Eye-Tracking Methods

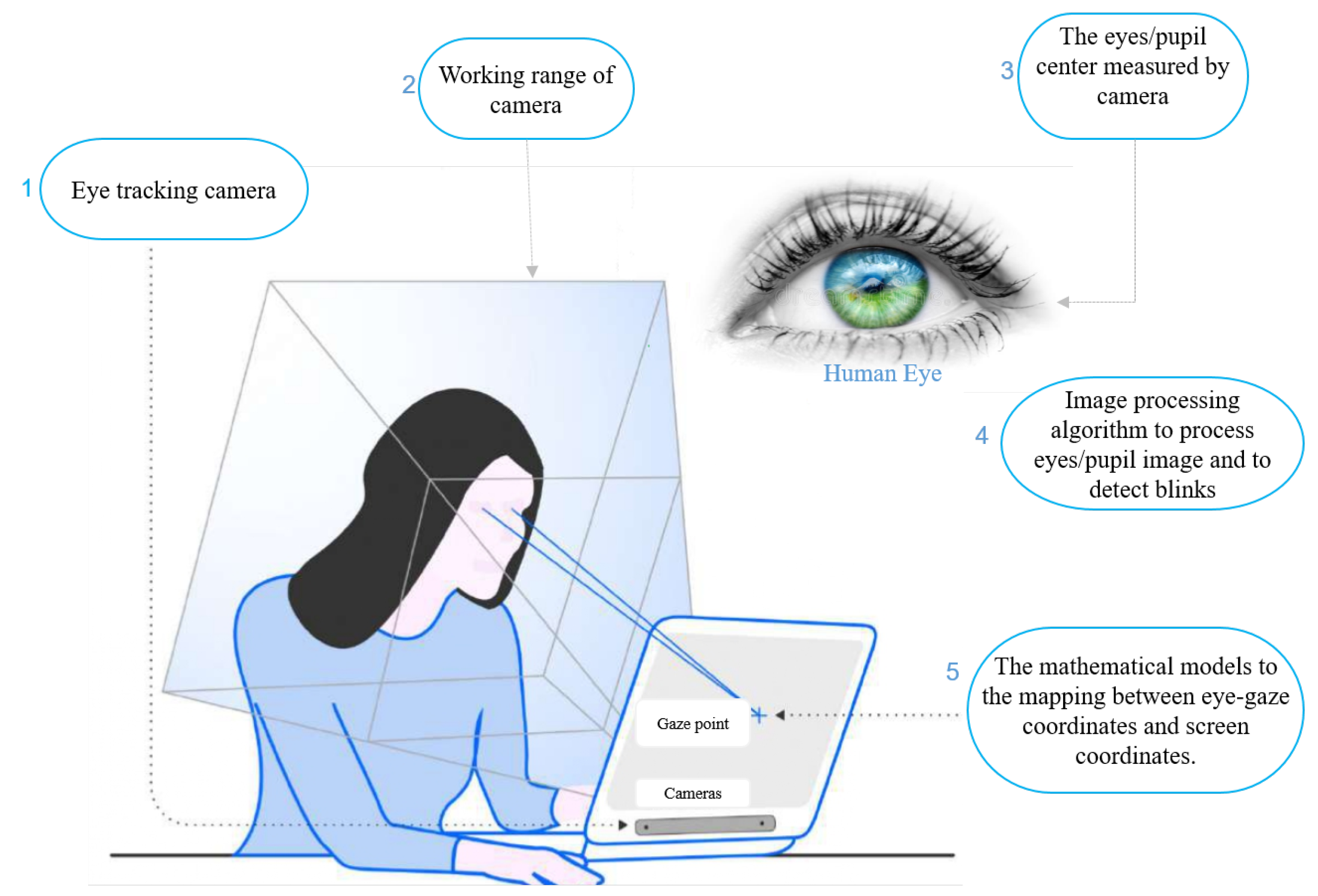

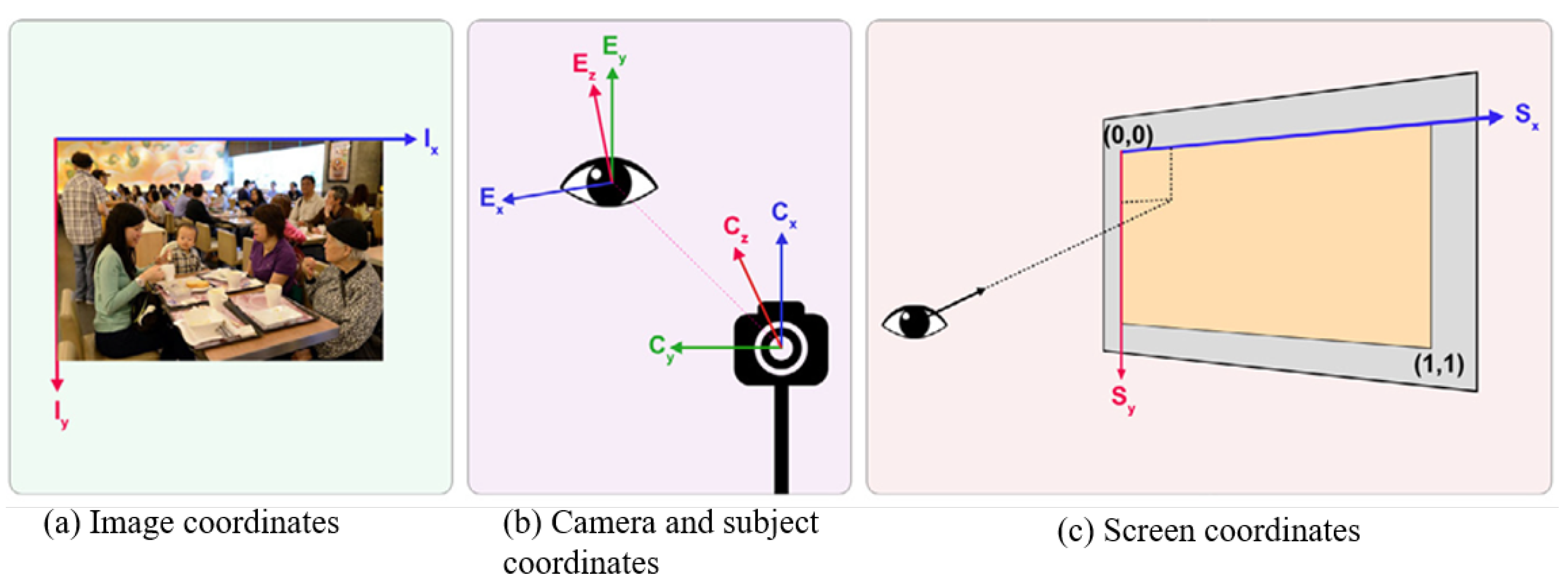

Model-based eye tracking employs mathematical models of the eye to interpret eye data and estimate the point of gaze, using either 2D or 3D methods [49]. In 2D eye tracking, a camera captures eye images or videos, which are then analyzed to extract features such as pupil position and corneal reflection. These features are fed into a mathematical model to estimate gaze in two-dimensional space, commonly represented as coordinates on a screen or image. Two-dimensional eye tracking is simpler and more affordable, and it is suitable for applications such as usability testing and market research. Standard methods for 2D eye tracking include the pupil/iris-corner technique (PCT/ICT), pupil/iris-corneal reflection technique (PCRT/ICRT), cross-ratio (CR), and homography normalization (HN). In contrast, 3D eye tracking extends gaze estimation into three-dimensional space by modeling eye geometry and movements, often using additional sensors or depth-sensing cameras to improve accuracy. Three-dimensional methods, such as corneal reflection and pupil refraction (CRPR), facial features (FF), and depth sensor (DS), provide the detailed spatial information essential for tasks like depth perception and interaction with 3D environments. These advancements offer enhanced accuracy and versatility in gaze estimation across different scenarios [40,66].

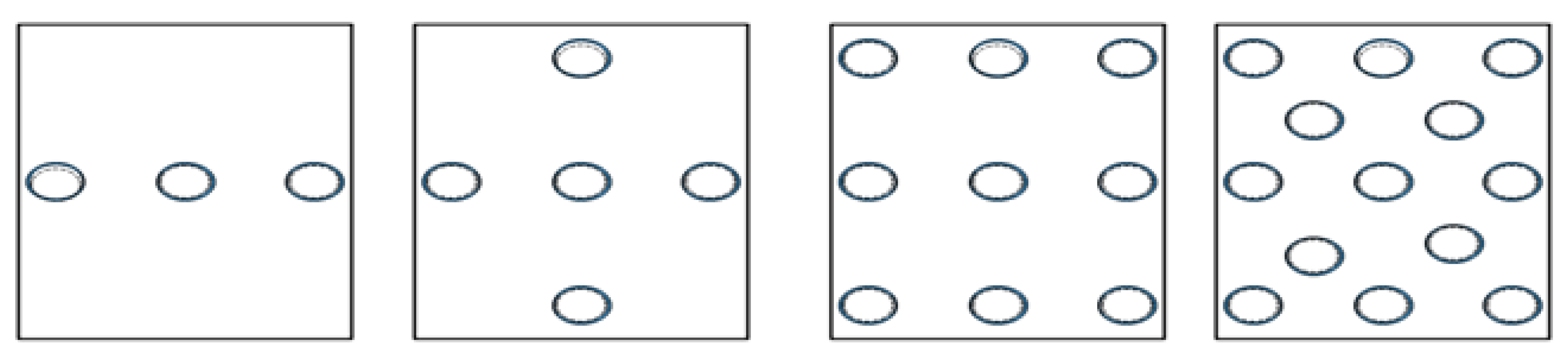

The PCT/ICT-based method relies on the relationship between the vector formed by the pupil/iris center and the eye corner point to determine the Point of Regard (POR). When the user’s head remains stationary, the position of the eye corner also remains fixed, while the pupil/iris feature changes with gaze movement. Consequently, the vector from the pupil/iris center to the eye corner point serves as a 2D variation feature containing gaze information for constructing a mapping function with the POR. During personal calibration, the user sequentially fixates on multiple preset calibration points displayed on the screen. This process generates multiple sets of corresponding vectors and PORs, which are then used to regress the coefficients of the mapping function. In subsequent use of the system, the mapping function enables direct estimation of the POR from the vector extracted from newly captured images, without the need for recalibration. Cheung et al. [128] presented a method for eye gaze tracking using a webcam in a desktop setting, eliminating the need for specialized hardware and complex calibration procedures. The approach involved real-time face tracking to extract eye regions, followed by iris center localization and eye corner detection using intensity and edge features. A sinusoidal head model was employed to estimate head pose and compensate for head movement, improving accuracy. The integration of eye vector and head movement data enabled precise gaze tracking. Key contributions included robust eye region extraction, accurate iris center detection, and a novel weighted adaptive algorithm for pose estimation, improving overall gaze tracking accuracy. Experimental results demonstrated effectiveness under varying light conditions, achieving an average accuracy of 1.28° without head movement and 2.27° with minor head movement. Wibirama et al. [129] introduced a pupil localization method for real-time video-oculography (VOG) systems, particularly addressing extreme eyelid occlusion scenarios. The method improved pupil localization accuracy by enhancing the geometric ellipse fitting technique with cumulative histogram processing for robust binarization and random sample consensus (RANSAC) for outlier removal. Experimental results demonstrated a 51% accuracy improvement over state-of-the-art methods under 90% eyelid occlusion conditions. Moreover, it achieved real-time pupil tracking, with less than 10 ms of computational time per frame, showcasing promising results for accurately measuring horizontal and vertical eye movements. The work in [130] proposed a practical approach to identify the Iris Center (IC) in low-resolution images, dealing with noise, occlusions, and position fluctuations. The system uses the eye’s geometric features and a two-stage algorithm to locate the IC. The initial phase employs a rapid convolution-based method to determine the approximate IC position, while the subsequent phase refines this estimate by boundary tracing and elliptical fitting. The technique was tested on public datasets such as BioID and Gi4E, where it was reported to surpass existing approaches. It has low computational complexity and could be improved further by using geometrical models to boost gaze-tracking accuracy. The work primarily examined in-plane rotations but proposed the potential for creating pose-invariant models through advanced 3D methods.
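The role of RANSAC in rejecting spurious boundary points during pupil fitting can be illustrated with a minimal sketch. It is not the implementation of [129]: for brevity it assumes a circular pupil instead of an ellipse, and the function names, inlier tolerance, and iteration count are hypothetical choices made for illustration.

```python
import math
import random
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Circle = Tuple[float, float, float]  # (center_x, center_y, radius)


def circle_from_three_points(p1: Point, p2: Point, p3: Point) -> Optional[Circle]:
    """Return the circle through three points, or None if they are (nearly) collinear."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    d = 2.0 * (x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2))
    if abs(d) < 1e-9:
        return None
    s1, s2, s3 = x1 * x1 + y1 * y1, x2 * x2 + y2 * y2, x3 * x3 + y3 * y3
    cx = (s1 * (y2 - y3) + s2 * (y3 - y1) + s3 * (y1 - y2)) / d
    cy = (s1 * (x3 - x2) + s2 * (x1 - x3) + s3 * (x2 - x1)) / d
    return cx, cy, math.hypot(x1 - cx, y1 - cy)


def ransac_circle(points: List[Point], tol: float,
                  iterations: int = 200, seed: int = 0) -> Optional[Circle]:
    """Fit a circle with RANSAC: keep the candidate supported by the most inliers.

    A point counts as an inlier when its distance to the candidate circle
    boundary is at most `tol` (in pixels).
    """
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    best: Optional[Circle] = None
    best_inliers = -1
    for _ in range(iterations):
        model = circle_from_three_points(*rng.sample(points, 3))
        if model is None:
            continue  # degenerate (collinear) sample, draw again
        cx, cy, r = model
        inliers = sum(
            1 for (x, y) in points
            if abs(math.hypot(x - cx, y - cy) - r) <= tol
        )
        if inliers > best_inliers:
            best, best_inliers = model, inliers
    return best


# Synthetic example: the visible arc of a pupil boundary (center (50, 40),
# radius 10) plus spurious edge points along an occluding eyelid.
pupil_arc = [
    (50 + 10 * math.cos(math.radians(a)), 40 + 10 * math.sin(math.radians(a)))
    for a in range(20, 161, 5)
]
eyelid_edge = [(float(x), 36.0) for x in range(44, 57, 2)]
print(ransac_circle(pupil_arc + eyelid_edge, tol=0.05))
```

Because every candidate is built from only three points, samples that include eyelid points yield circles with little support and are discarded, which is why the approach tolerates heavy occlusion.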

Several studies have employed the PCRT/ICRT technique, which utilizes the glint formed by the corneal reflection of a light source on the pupil/iris. Similarly to the PCT/ICT-based method, it relies on a mapping function between the glint vector and the Point of Regard (POR), typically represented by a set of polynomials. Moucary et al. [131] introduced a cost-effective and efficient automatic eye-tracking technology to aid patients with movement impairments, such as Amyotrophic Lateral Sclerosis (ALS). The system uses a camera and software to identify and monitor eye movements, providing a different way to interact with digital devices. The main features include letter typing prompts, form drawing capability, and gesture-based device interaction. Hosp et al. [132] presented an inexpensive and computationally efficient system for precise monitoring of rapid eye movements, essential for applications in vision research and other disciplines. It reached operational frequencies above 500 Hz, allowing efficient detection and tracking of glints and pupils, even at high velocities. The system provided an accurate gaze estimate, with an error below 1° and an accuracy of 0.38°, all at a significantly lower cost (less than EUR 600) than commercial equivalents. Furthermore, its low cost enables researchers to customize the system to their needs, independently of eye-tracker suppliers, making it a versatile and economical option for rapid eye tracking. The study in [133] proposed a representation-learning-based, non-intrusive, cost-effective eye-tracking system that functions in real time and needs only a small number of calibration images. A regression model was used to account for individual differences in eye appearance, such as eyelid size and foveal offset. Compensation techniques were incorporated to account for different head positions. The authors validated the efficacy of the framework using point-wise and pursuit-based methods, leading to precise gaze estimations. The system was evaluated against a baseline model, WebGazer, using an open-source benchmark dataset called Eye of the Typer (EOTT). The authors demonstrated the applicability of the framework by analyzing users’ gaze behavior on online sites and on items exhibited on a shelf. Ban et al. [134] suggested a dual-camera eye-tracking system integrated with machine learning and deep learning methods to enhance accurate human–machine interaction. The system utilized a data classification technique to consistently categorize gaze and eye movements, allowing for robotic arm control. The system achieved 99.99% accuracy in detecting eye directions using a deep-learning algorithm and the pupil center-corneal reflection approach. Examples include controlling a robotic arm in real time to perform activities such as playing chess and manipulating dice. It has various application domains, such as controlling surgical robots, warehousing systems, and construction tools, demonstrating adaptability and efficacy in human–computer interaction tasks. The study in [135] introduced a technique based on pupil glint for clinical use. The study utilized a dark-pupil technique incorporating up to 12 corneal reflections, resulting in high-resolution imaging of both the pupil and cornea. A pupil-glint vector normalization factor improved vertical tracking accuracy, suggesting ways to optimize spatial precision without adding light sources.
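The polynomial mapping between the glint vector and the POR, regressed from calibration fixations, can be sketched as follows. This is a minimal illustration under stated assumptions rather than any cited system's implementation: it assumes a second-order polynomial with six terms per screen axis, solves the least-squares problem through the normal equations, and all function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec2 = Tuple[float, float]


def poly_features(v: Vec2) -> List[float]:
    """Second-order polynomial terms of a pupil-glint vector (assumed model)."""
    x, y = v
    return [1.0, x, y, x * y, x * x, y * y]


def solve_linear(a: Sequence[Sequence[float]], b: Sequence[float]) -> List[float]:
    """Solve a square system with Gauss-Jordan elimination and partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_mapping(vectors: Sequence[Vec2], targets: Sequence[float]) -> List[float]:
    """Least-squares coefficients for one screen axis: (A^T A) w = A^T t."""
    rows = [poly_features(v) for v in vectors]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(k)]
    return solve_linear(ata, atb)


def predict(coeffs: Sequence[float], v: Vec2) -> float:
    """Evaluate the fitted mapping for a newly measured vector."""
    return sum(c * f for c, f in zip(coeffs, poly_features(v)))


# Calibration: nine fixations on a 3 x 3 grid. The vectors and screen
# coordinates below are synthetic values chosen only for illustration.
calib_vectors = [(x, y) for x in (-1.0, 0.0, 1.0) for y in (-1.0, 0.0, 1.0)]
screen_x = [960 + 800 * x + 20 * x * y for x, y in calib_vectors]
screen_y = [540 + 450 * y + 10 * y * y for x, y in calib_vectors]

coeffs_x = fit_mapping(calib_vectors, screen_x)
coeffs_y = fit_mapping(calib_vectors, screen_y)
print(predict(coeffs_x, (0.5, -0.5)), predict(coeffs_y, (0.5, -0.5)))
```

With six unknowns per axis, at least six well-spread calibration points are needed; a nine-point grid leaves some redundancy for averaging out measurement noise.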

The cross-ratio-based method capitalizes on the invariance of cross-ratios under projective transformations to determine the screen point corresponding to the pupil center, requiring only knowledge of the light source positions and screen dimensions. Nonetheless, this point represents the intersection of the eyeball’s optical axis (OA) with the screen rather than the true POR. Liu et al. [136] introduced a CR-based method incorporating weighted averaging and polynomial compensation. The process began with estimating the 3D corneal center and the average vector of the virtual pupil plane. Subsequently, reference planes parallel to the virtual pupil plane were established, and screen points at their intersections with lines connecting the camera’s optical center and pupil center were calculated. The resulting POR was refined through a weighted average of these points, followed by polynomial compensation. Experimental findings demonstrated a gaze accuracy of 1.33°. Arar et al. [137] introduced a CR-based automatic gaze estimation system that functions accurately under natural head movements. A subject-specific calibration method, relying on regularized least-squares regression (LSR), significantly enhanced accuracy, especially when fewer calibration points were available. Experimental results underscored the system’s capacity to generalize with minimal calibration effort, while preserving high accuracy levels. Furthermore, an adaptive fusion scheme was implemented to estimate the POR from both eyes, utilizing the visibility of eye features, which reduced the error by approximately 20%. This enhancement also improved the estimation coverage, particularly during natural head movements. Additionally, they presented [138] a comprehensive analysis of regression-based user calibration techniques, introducing a novel weighted least squares regression method alongside a real-time cross-ratio-based gaze estimation framework. This integrated approach minimized user effort, while maximizing estimation accuracy, facilitating the development of user-friendly HCI applications. Some methods use two homography transformations to estimate the POR: one from the image to the cornea, and another from the cornea to the screen. These approaches assume that the pupil center and the corneal reflection plane are coplanar. Morimoto et al. [139] introduced the Screen-Light Decomposition (SLD) framework, which utilizes the homography normalization method to provide a comprehensive process for determining the point-of-gaze (PoG) using a single uncalibrated camera and several light sources. Their system incorporates a unique eye-tracking approach called Single Normalized Space Adaptive Gaze Estimation (SAGE). Their user trials showed that SAGE improved performance relative to previous systems by adapting gracefully when corneal reflections were not detected, even during calibration. Another study [140] introduced a strategy to improve the reliability of gaze tracking in varying lighting situations for HCI applications. The procedure comprises three steps. First, it creates a coded corneal reflection (CR) pattern by employing time-multiplexed infrared (IR) light sources. Second, it identifies CR candidates in eye images and adjusts their locations according to the user’s head and eye motions. Third, it chooses genuine CRs from the motion-compensated CR candidates by employing a new cost function. Empirical findings showed that this strategy greatly enhanced gaze-tracking accuracy in different lighting situations compared to traditional techniques.
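The projective invariance that cross-ratio methods depend on can be checked numerically. The sketch below is only an illustration of the property: the four collinear points and the homography matrix are arbitrary values, and the helper names are hypothetical rather than taken from any cited system.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]
Matrix3 = Sequence[Sequence[float]]


def apply_homography(h: Matrix3, p: Point) -> Point:
    """Map a 2D point through a 3x3 projective transformation."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return (
        (h[0][0] * x + h[0][1] * y + h[0][2]) / w,
        (h[1][0] * x + h[1][1] * y + h[1][2]) / w,
    )


def cross_ratio(a: Point, b: Point, c: Point, d: Point) -> float:
    """Cross-ratio (|AC| * |BD|) / (|BC| * |AD|) of four collinear points.

    Unsigned distances are used, so the points must be given in their
    order along the line.
    """
    return (math.dist(a, c) * math.dist(b, d)) / (math.dist(b, c) * math.dist(a, d))


# Four collinear points and an arbitrary invertible homography that keeps
# them in the same order (all values are illustrative).
points = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (4.0, 0.0)]
H = [
    [1.0, 0.2, 3.0],
    [0.1, 1.2, 1.0],
    [0.001, 0.002, 1.0],
]
mapped = [apply_homography(H, p) for p in points]

before = cross_ratio(*points)
after = cross_ratio(*mapped)
print(before, after)  # the two values agree up to floating-point error
```

Distances and ratios of distances change under the mapping, but the cross-ratio does not, which is what allows a relation measured in the camera image to be transferred to the screen plane.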

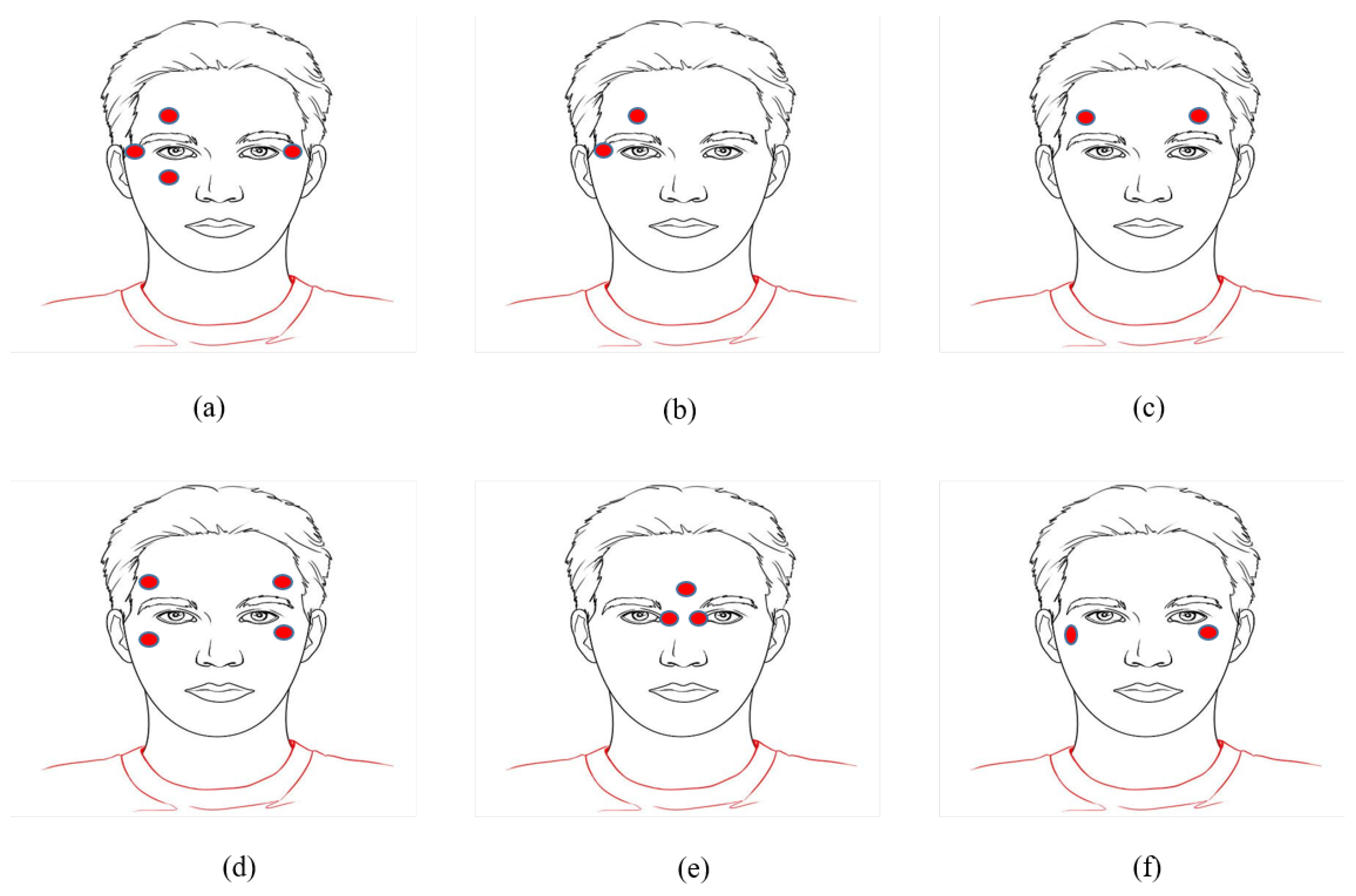

Three-dimensional model-based techniques use invariant characteristics of the eye to calibrate and estimate spatial eye parameters by analyzing the eyeball structure and a spatial geometric imaging model. A typical method involves corneal reflection and pupil refraction (CRPR-based), recognized for its excellent accuracy, due to high-resolution cameras, infrared (IR) light, and exact feature extraction. Raghavendran et al. [141] developed a 9-point calibration-based method using corneal reflections for cognitive evaluation. The authors suggested employing near-infrared rays, active light, and corneal reflections (CR) to mitigate any risks of IR exposure. This provides an efficient and painless way to test cognitive function, while minimizing the risks linked to conventional IR-based methods. The authors of [26] proposed a novel 3D gaze estimation model with few unknown parameters and an implicit calibration method using gaze patterns, addressing the challenges of burdensome personal calibration and complex all-device calibration. Their method leveraged natural and prominent gaze patterns to implicitly calibrate unknown parameters, eliminating the need for explicit calibration. By simultaneously constructing an optical axis projection (OAP) plane and a visual axis projection (VAP) plane, the authors represented the optical axis and the visual axis as 2D points, facilitating a similarity transformation between OAP and gaze patterns. This transformation allowed a 3D gaze estimation model to predict the VAP using the OAP as an eye feature. The unknown parameters were calculated separately by linearly aligning OAP patterns to natural and easily detectable gaze patterns. Chen et al. [142] developed a branch-structured eye detector that uses a coarse-to-fine method to address the difficulties of eye recognition and pupil localization in video-based eye-tracking systems. The detector combines three classifiers: an ATLBP-THACs-based cascade classifier, a branch Convolutional Neural Network (CNN), and a multi-task CNN. Additionally, they developed a method for coarse pupil localization to improve the efficiency and performance of pupil localization methods. This involves utilizing a CNN to estimate the positions of seven landmarks on downscaled eye images, followed by pupil center and radius estimation, pupil enhancement, image binarization, and connected component analysis. Moreover, the authors curated the neepuEYE dataset, containing 5500 NIR eye images from 109 individuals with diverse eye characteristics. Wan et al. [143] developed an estimation technique to overcome the challenges posed by glints and slippage in gaze estimation. Their methodology involves leveraging pupil contours and refracting virtual pupils to accurately determine real pupil axes, converting them into gaze directions. For 2D gaze estimation, they implemented a regression approach utilizing spherical coordinates of the real pupil normal to pinpoint the gaze point. To mitigate the impact of noise and outliers in calibration data, the researchers integrated aggregation filtering and random sample consensus (RANSAC). Additionally, they introduced a 3D gaze estimation method that translates the pupil axis into the gaze direction, employing a two-stage analytical algorithm for iterative calibration of the eye center and rotation matrix. Notably, their approach, encompassing 2D and 3D estimation techniques, yielded results on par with state-of-the-art methods, offering the dual advantages of being glint-free and resilient against slippage. A summary of selected model-based eye-tracking studies is given in Table 7.

5.4.2. Feature-Based Eye Tracking

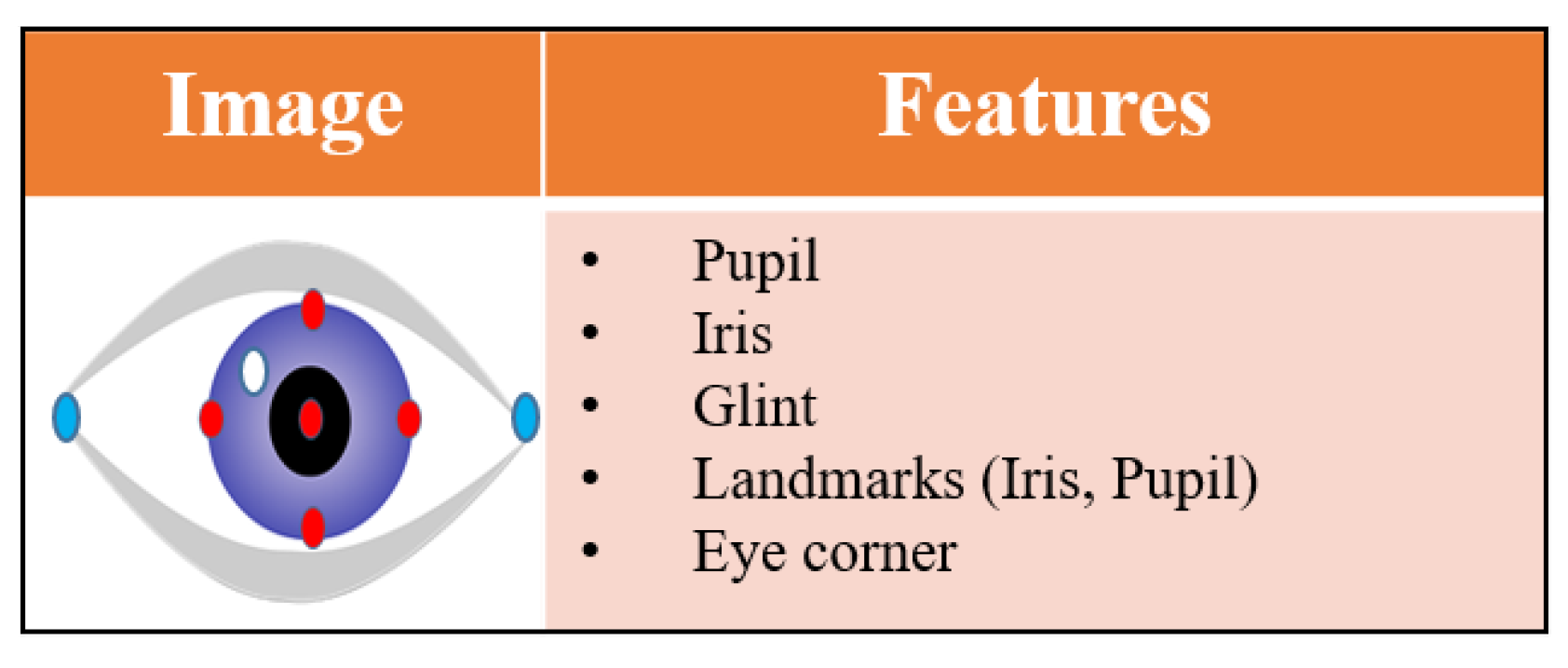

Feature-based eye tracking is a technique that calculates gaze direction by utilizing specific eye characteristics. Rather than analyzing the entire eye image, it selectively extracts key features such as the pupil center, eye corner, and glint reflection. These extracted features serve as crucial inputs for accurately estimating the direction of the eye gaze. Sun et al. [144] devised a binocular eye-tracking system to enhance the precision of estimating 3D gaze location by utilizing extracted features such as pupils, corneal reflections, and eyelids. They highlighted the constraints of existing eye trackers, especially in reliably measuring gaze depth, and the importance of enhanced technology for future uses, such as human–computer interaction with 3D displays. The device incorporates 3D stereo imaging technology to provide users with an immersive visual experience without needing other equipment. It also captures eye movements under 3D stimuli without any disruption. They developed a regression-based 3D eye-tracking model employing eye movement data gathered under stereo stimuli to estimate gaze in both 2D and 3D. Their approach successfully assessed gaze direction and depth in different workspace volumes, as demonstrated by experimental data. The device’s development was impeded by cables and components, leading to poor utilization of equipment space, potentially limiting its utility and practicality. Aunsri et al. [145] introduced novel and efficient features tailored for a gaze estimation system compatible with a basic and budget-friendly eye-tracking setup. These features encompassed various vectors and angles involving the pupil, glint, and inner eye corner, as well as a distance vector and deviation angle. Through their experimentation, the authors demonstrated the superiority of an artificial neural network (ANN) with two hidden layers, which achieved a classification accuracy of 97.71% across 15 regions of interest, surpassing alternative methods. They underscored the affordability and simplicity of their proposed features, highlighting their suitability for real-time applications and their potential to assist individuals with disabilities and special needs in human–computer interaction settings. Nevertheless, the authors acknowledged the potential limitations of their proposed features under extreme face angles, signaling an area for future improvement and refinement. The article in [146] introduced a method for determining gaze direction from noisy eye images by detecting key points within the ocular area using a single-eye image. Employing the HRNet backbone network, the system acquired image representations at varying resolutions, ensuring stability even at lower resolutions. Utilizing numerous recognized landmarks as inputs for a compact neural network enabled accurate prediction of gaze direction. Evaluation on the MPIIGaze dataset under realistic settings showcased cutting-edge performance, yielding a gaze estimation error of 4.32°.
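Geometric features of the kind described above, built from the pupil center, glint, and inner eye corner, are simple to compute once the landmarks are located. The following sketch is a hypothetical illustration: the feature set and the function name `gaze_features` are assumptions and do not reproduce the exact features defined in [145].

```python
import math
from typing import Dict, Tuple, Union

Point = Tuple[float, float]
Feature = Union[float, Tuple[float, float]]


def gaze_features(pupil: Point, glint: Point, corner: Point) -> Dict[str, Feature]:
    """Build a small feature set from three eye landmarks (image coordinates).

    The chosen vectors, distance, and angles are illustrative assumptions.
    """
    pg = (pupil[0] - glint[0], pupil[1] - glint[1])    # pupil-glint vector
    pc = (pupil[0] - corner[0], pupil[1] - corner[1])  # pupil-corner vector
    return {
        "pupil_glint_vector": pg,
        "pupil_corner_vector": pc,
        "pupil_glint_distance": math.hypot(pg[0], pg[1]),
        "pupil_glint_angle_deg": math.degrees(math.atan2(pg[1], pg[0])),
        "pupil_corner_angle_deg": math.degrees(math.atan2(pc[1], pc[0])),
    }


# Landmark positions in pixels (made-up values).
features = gaze_features(pupil=(103.0, 104.0), glint=(100.0, 100.0), corner=(103.0, 94.0))
print(features)
```

A feature dictionary like this would then be flattened into a vector and passed to a classifier or regressor, such as the two-hidden-layer ANN reported in [145].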

The study in [17] introduced a cost-effective feature-based system for human–computer interaction (HCI). Utilizing the Dlib library, the authors extracted eye landmarks and employed a 5-point calibration technique to map these landmarks’ coordinates to screen coordinates. In experiments involving disabled and non-disabled participants, the system achieved an average blinking accuracy of 97.66%; typing speeds of 15 and 20 characters per minute for disabled and non-disabled participants, respectively; and a visual angle accuracy averaging 2.2° for disabled participants and 0.8° for non-disabled participants. Bozomitu et al. [68] developed a real-time computer interface using eye tracking. The authors compared eight pupil-detection methods to find the best option for the interface. They constructed six of these algorithms using state-of-the-art methods, while two were well-known open-source algorithms used for comparison. Using a unique testing technique, 370,182 eye images were analyzed to assess each algorithm in different lighting situations. The circular Hough transform technique had the highest detection rate, 91.39% in a 50-pixel target region, making it well suited for real-time applications on the suggested interface. Cursor controllability, screen stability, running time, and user competency were all assessed. The study emphasized the relevance of algorithm accuracy and other factors in real-time eye-tracking system performance and in selecting a suitable algorithm for the application. The system typed 20.74 characters per minute with mean TER, NCER, and CER rates of 3.55, 0.60, and 2.95. The article in [147] used eye-gaze features to predict students’ learning results during embodied activities. Students’ eye-tracking data, learning characteristics, academic achievements, and task completion time were carefully monitored throughout the trial. The authors used predictive modeling to analyze the data. The findings implied that eye-gaze monitoring, learning traces, and behavioral traits can predict student learning outcomes. The study highlighted the importance of eye-gaze tracking and learning traces in understanding students’ behavior in embodied learning environments. The study in [148] presented an HCI system that enables interaction via eye gaze features. The system first used the Dlib library to capture and monitor eye movements and identify the coordinates of 68 landmark points. A model was then applied to identify four distinct eye gazes. By capturing and tracking eye movements with a standard RGB webcam, the system can perform tasks such as segmenting tools and components, selecting objects, and toggling interfaces based on eye gaze detection. The experimental findings demonstrated that the proposed eye gaze recognition method obtained an accuracy of more than 99% when the user was at the recommended distance from the webcam. The authors acknowledged the potential impact of varying illumination conditions on the robustness of the proposed system.
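Accuracies reported as visual angles, such as the 2.2° and 0.8° figures above, relate an on-screen error to the viewing geometry. The sketch below shows the conversion under simplifying assumptions: the target lies close to the line of sight perpendicular to the screen, and the pixel density and viewing distance used in the example are hypothetical values, not those of the cited studies.

```python
import math


def pixel_error_to_degrees(err_px: float, px_per_mm: float, distance_mm: float) -> float:
    """Convert an on-screen gaze error in pixels to a visual angle in degrees."""
    err_mm = err_px / px_per_mm
    return math.degrees(math.atan2(err_mm, distance_mm))


def degrees_to_pixel_error(angle_deg: float, px_per_mm: float, distance_mm: float) -> float:
    """Inverse conversion: on-screen error in pixels for a given visual angle."""
    return math.tan(math.radians(angle_deg)) * distance_mm * px_per_mm


# Assumed setup: about 3.78 px/mm (roughly 96 dpi) viewed from 600 mm.
for angle in (0.8, 2.2):
    px = degrees_to_pixel_error(angle, px_per_mm=3.78, distance_mm=600.0)
    print(f"{angle} deg corresponds to about {px:.0f} px on this setup")
```

The same angular accuracy therefore translates into very different pixel errors depending on screen resolution and viewing distance, which is why angular units are preferred when comparing systems.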

5.4.3. Appearance-Based Eye-Tracking Methods

With the rapid advancement of machine learning and deep learning techniques, the appearance-based method has garnered increasing attention for gaze tracking [149]. These techniques use the eye’s photometric appearance to estimate gaze direction, utilizing a single camera [66]. By constructing gaze estimation models from eye images, appearance-based methods can implicitly extract image features without relying on hand-engineered features. They rely on machine learning, and the choice of learning approach influences performance. Supervised methods, such as CNN-based models, require large labeled datasets and often deliver high accuracy, but demand extensive computation and user calibration, reducing scalability. In contrast, unsupervised methods work with unlabeled data, making them more adaptable and easier to deploy in low-cost or large-scale scenarios. However, they generally offer lower accuracy, particularly in high-precision applications. While requiring a larger dataset for training than model-based techniques, appearance-based methods offer the advantage of learning invariance to appearance disparities [150]. Moreover, they are known to yield favorable results in real-world scenarios [151]. However, methods that directly predict a screen location are limited to a single device and orientation. Some studies have devised techniques to predict gaze relative to the camera’s coordinate system, offering a more versatile approach [152]. Unlike 2D eye feature regression methods, appearance-based methods do not necessitate specialized devices for detecting geometric features; they utilize image features such as raw pixels or deep features for regression. Various machine learning models, such as neural networks, Gaussian process regression models, adaptive linear regression models, and convolutional neural networks (CNNs), have been used in appearance-based methods. Despite these advancements, appearance-based gaze estimation remains challenging due to the complex and nuanced characteristics of eye appearance. Variability in individual features, subtle nuances in gaze behavior, and environmental factors further complicate the development of accurate and robust gaze estimation models [49].

The appearance-based eye tracking method integrates various elements to accurately estimate gaze direction. It utilizes a camera to capture eye images, often complemented by considerations of head pose. By including head pose information, the system can adjust for variations in head orientation, enhancing the robustness of gaze estimation across different viewing angles. A comprehensive dataset comprising a diverse range of eye images and corresponding gaze directions is essential to develop reliable gaze estimation models. These meticulously curated and annotated datasets provide the training data necessary for machine learning algorithms to learn the intricate mapping between eye appearance and gaze direction. Training involves feeding these datasets into various regression models, such as neural networks, Gaussian process regression models, or convolutional neural networks. Through iterative learning processes, the models refine their ability to extract relevant features from eye images and accurately predict gaze direction.

Convolutional neural networks (CNNs) have found extensive applications in various computer vision tasks, including object recognition and image segmentation. They have also demonstrated remarkable performance in the realm of gaze estimation, employing diverse learning strategies tailored to different tasks, namely supervised, semi-supervised, self-supervised, and unsupervised CNNs. Supervised CNNs are predominantly employed in appearance-based gaze estimation, relying on large-scale labeled datasets for training. Given that gaze estimation involves learning a mapping function from raw images to human gaze, deeper CNN architectures typically yield superior performance, akin to conventional computer vision tasks [49]. Several CNN architectures, such as LeNet [151], AlexNet [153], VGG [154], ResNet18 [60], and ResNet50 [155], originally proposed for standard computer vision tasks, have been successfully adapted for gaze estimation. Conversely, semi-supervised, self-supervised, and unsupervised CNNs leverage unlabeled images to enhance gaze estimation performance, offering a cost-efficient alternative to collecting labeled data. While semi-supervised CNNs utilize both labeled and unlabeled images for optimization, unsupervised CNNs solely rely on unlabeled data. Nonetheless, optimizing CNNs without a ground truth poses a significant challenge in unsupervised settings.

Appearance-based methodologies have been widely implemented across numerous computer vision applications, showcasing exceptional efficacy and performance. The study in [151] presented a Convolutional Neural Network (CNN)-based gaze estimation system for real-world environments. The authors presented the MPIIGaze dataset, which includes 213,659 images collected using laptops from 15 users. The dataset has far more variety in appearance and lighting than other datasets. Using a multimodal CNN, they also created a technique for estimating gaze in natural settings based on appearance. This method outperformed state-of-the-art algorithms, especially in complex cross-dataset assessments and gaze estimation in realistic settings. Furthermore, the work in [151] was extended for the development of the “GazeNet” model [154], featuring an updated network architecture utilizing a 16-layer VGGNet. The authors significantly expanded the annotations of 37,667 images, which cover six facial landmarks (the four eye corners and two mouth corners) as well as pupil centers. Fresh evaluations were incorporated to address critical challenges in domain-independent gaze estimation, particularly emphasizing disparities in gaze range, illumination conditions, and individual appearance variations. Karmi et al. [156] introduced a three-phase CNN-based approach for gaze estimation. Initially, they utilized a CNN for head position estimation and integrated the Viola–Jones algorithm to extract the eye region. Subsequently, various CNN architectures were employed for mapping, including pre-trained models, CNNs trained from scratch, and bilinear convolutional neural networks. The authors conducted model training using the Columbia gaze database. Their proposed model achieved an accuracy of 96.88% in estimating three gaze directions while considering a single head pose.

Several studies have constructed machine learning models utilizing facial landmarks, including the eye, iris, and face, to accurately detect gaze in relation to head pose and eye position. This approach offers a robust and versatile method for gaze estimation, capable of accommodating changes in head pose and eye position to accurately assess where an individual is looking. Modi et al. [157] utilized a CNN to develop a model for real-time monitoring of user behavior on websites via gaze tracking. The authors conducted experiments involving 15 participants to observe their real-time interactions with Pepsi’s Facebook page. Their model demonstrated an accuracy of 84.3%, even in the presence of head movements. Leblond et al. [158] presented an innovative eye-tracking framework that utilizes facial features and is designed to control robots. The framework demonstrated reliable performance in both indoor and outdoor settings. Their CNN model enables a face alignment method with a more straightforward configuration, reducing the cost of gaze tracking, face position estimation, and pose estimation. The authors highlighted the versatility of their technique for mobile devices, demonstrating an average error of around 4.5° on the MPIIGaze dataset [154]. In addition, their system showed remarkable precision on the UTMultiview [159] and GazeCapture [160] datasets, achieving average errors of 3.9° and 3.3°, respectively. Furthermore, it reduced computation time by up to 91%. Additionally, Sun et al. [161] introduced the gaze estimation model “S2LanGaze” by employing semi-supervised learning on the face image captured by an RGB camera. The researchers assessed the performance of this model across three publicly available gaze datasets: MPIIFaceGaze, Columbia Gaze, and ETH-XGaze. The work in [162] developed a gaze estimation technique for low-resolution 3D Time-of-Flight (TOF) cameras. An infrared image and a YOLOv8 neural network model were used to reliably identify eye landmarks and determine a subject’s 3D gaze angle. Experimental validation in real-time automobile driving conditions showed reliable gaze detection across vehicle locations. Because it relies only on infrared images, the approach can overcome illumination issues, especially at night. According to experimental validation, its horizontal and vertical root mean square errors were 6.03° and 4.83°, respectively. The “iTracker” system, developed by Krafka et al. [160], integrates data from left and right eye images, facial images, and facial grid data. The facial grid provides information about the location of the facial region within the captured image.
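The angular errors quoted above are typically computed as the angle between the predicted and ground-truth 3D gaze directions. The sketch below illustrates that metric; the pitch/yaw-to-vector convention it uses is one common choice and is an assumption here, since conventions differ between datasets, and the function names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def pitch_yaw_to_vector(pitch: float, yaw: float) -> Vec3:
    """Convert gaze angles in radians to a unit 3D direction.

    Assumed convention: the camera looks along +z, so a gaze of
    (pitch=0, yaw=0) points back toward the camera along -z.
    """
    return (
        -math.cos(pitch) * math.sin(yaw),
        -math.sin(pitch),
        -math.cos(pitch) * math.cos(yaw),
    )


def angular_error_deg(g_pred: Vec3, g_true: Vec3) -> float:
    """Angle in degrees between two 3D gaze vectors (need not be unit length)."""
    dot = sum(a * b for a, b in zip(g_pred, g_true))
    norm = math.sqrt(sum(a * a for a in g_pred)) * math.sqrt(sum(b * b for b in g_true))
    cosine = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
    return math.degrees(math.acos(cosine))


# Example: prediction and ground truth differ by 4 degrees of yaw and 2 of pitch.
pred = pitch_yaw_to_vector(math.radians(5.0), math.radians(12.0))
true = pitch_yaw_to_vector(math.radians(3.0), math.radians(8.0))
print(angular_error_deg(pred, true))
```

A dataset-level figure such as a mean error is then simply the average of this quantity over all test samples.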

In addition to the features derived from images, temporal data extracted from videos play a significant role in gaze estimation. Typically, CNNs extract features from the facial images in each video frame, which are then fed into the network for analysis. Through this process, the network automatically captures and integrates the temporal information crucial for accurate gaze estimation. Ren et al. [163] proposed a framework called FE-net, which incorporates a temporal network. This system integrates channel attention and self-attention modules, which improve the use of the extracted features and emphasize the areas most critical for gaze estimation. Incorporating an RNN architecture allows the model to capture the time-dependent patterns of eye movements, enhancing the accuracy of the predicted gaze direction. Notably, FE-net offers separate predictions for the gaze directions of the left and right eyes based on monocular and facial features, from which the overall gaze direction is computed. Experimental validation demonstrated that FE-net achieved angular errors of 3.19° and 3.16° on the EVE [164] and MPIIFaceGaze datasets, respectively. In addition, Zhou et al. [165] improved the performance of the “iTracker” [160] system by adding bidirectional Long Short-Term Memory (bi-LSTM) units, which can extract time-related data for gaze estimation from a single image frame. To remove the need for the face grid, the authors modified the network architecture to combine the images of both eye regions. On the MPIIGaze dataset, experimental results revealed that the improved iTracker model outperformed other models by 11.6%, while retaining substantial estimation accuracy across different image resolutions. Furthermore, experimental results on the EyeDiap dataset showed that adding bi-LSTM units to characterize temporal relationships between frames improved gaze estimation in video sequences by 3%. Rustagi et al. [166] proposed a touchless typing technique based on head-movement gestures. Users interacted with a virtual QWERTY keyboard by gesturing towards the desired letters, and these gestures were captured using a face detection deep neural network. The resulting video frames were processed and input into a pre-trained HopeNet model, a CNN-based head pose estimator designed to compute intrinsic Euler angles (yaw, pitch, and roll) from RGB images. The output of the HopeNet model was then used to train an RNN, which predicted the sequence of clusters corresponding to the letters the user had observed. Evaluation of this technique on a dataset comprising 2234 video sequences from 22 subjects demonstrated an accuracy of 91.81%. Alsharif et al. [167] also proposed a gaze-based typing method employing an LSTM network. Trained with the Connectionist Temporal Classification (CTC) loss function, this network can directly output characters, without the need for Hidden Markov Model (HMM) states. Experimental findings indicated a classification accuracy of 92% after the output was restricted to a limited set of words using a Finite State Transducer.
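
As an illustration of how a CTC-trained network can emit characters directly, the sketch below applies greedy CTC decoding to per-frame scores: take the best label in each frame, collapse consecutive repeats, and drop the blank symbol. This is a generic textbook decoder, not the decoding procedure of the cited work. The function name `ctc_greedy_decode`, the toy alphabet, and the score values are all assumptions, and the Finite State Transducer restriction is not modeled.

```python
def ctc_greedy_decode(frame_scores, alphabet, blank=0):
    """Greedy CTC decoding: argmax per frame, collapse repeats, remove blanks."""
    decoded = []
    prev = None
    for scores in frame_scores:
        best = max(range(len(scores)), key=lambda i: scores[i])
        # Emit a character only when the label changes and is not the blank.
        if best != prev and best != blank:
            decoded.append(alphabet[best])
        prev = best
    return "".join(decoded)


# Hypothetical per-frame scores over the labels [blank, "c", "a", "t"].
alphabet = ["<blank>", "c", "a", "t"]
frames = [
    [0.10, 0.80, 0.05, 0.05],  # c
    [0.10, 0.70, 0.10, 0.10],  # c again (repeat, collapsed)
    [0.90, 0.05, 0.03, 0.02],  # blank
    [0.10, 0.10, 0.70, 0.10],  # a
    [0.20, 0.10, 0.60, 0.10],  # a again (repeat, collapsed)
    [0.10, 0.10, 0.10, 0.70],  # t
]
print(ctc_greedy_decode(frames, alphabet))  # cat
```

The blank label is what allows a doubled letter to be produced: two identical labels separated by a blank are kept as two characters, whereas adjacent identical labels are merged into one.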

Some deep learning techniques have aimed to decompose the gaze into various interconnected features and have constructed efficient models to estimate gaze using these features. The study in [168] presented a driver monitoring system that uses a vehicle safety system fitted with a camera to observe the driver’s level of attention and promptly activate alerts when inattentiveness is detected. The researchers devised a CNN architecture called DANet, which combines several components and tasks, such as a Dual-loss Block and head pose prediction, into a unified model. This integrated model generates a wide range of driver face expressions while keeping model complexity low. DANet performed well in terms of both speed and accuracy, running at 15 frames per second on a vehicle computing platform. Furthermore, Lu et al. [169] used the Xception network for gaze estimation to minimize hardware requirements. They applied convolutional neural networks (CNNs) to analyze images captured by cameras, enhancing classification accuracy and stability through attention mechanisms and optimization techniques. The authors employed an automatic network structure search and tested the FGSM algorithm to improve model efficiency and robustness. The enhanced Xception network also facilitated mobile deployment for practical human–machine interaction. Zeng et al. [170] used self-supervised learning to develop an attention detection system based on gaze estimation, enabling educators to assess learning outcomes efficiently in virtual classrooms. The system achieved an average gaze angle error of 6.5° when evaluated on the MPIIFaceGaze dataset. Subsequent cross-dataset testing on the RT-GENE and Columbia datasets resulted in average gaze angle errors of 13.8° and 5.5°, respectively. The work in [171] used semi-supervised learning for gaze estimation, enabling generalized solutions with limited labeled gaze datasets and new face images. Models were evaluated using ETH-XGaze. A two-stage model was proposed. In the first stage, the model maximized vector representation agreement to learn representations from input images. In the second stage, embeddings from the pre-training phase were used to train a prediction model by minimizing a loss function. The model obtained a mean angular error of 2.152° for gaze estimation.
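
The gaze angle errors quoted throughout this section are typically computed as the angle between predicted and ground-truth gaze directions. The following is a minimal sketch of that metric, assuming gaze is given as (pitch, yaw) in radians. The axis convention in `gaze_vector` and both function names are assumptions for illustration; individual datasets and papers may define the angles differently.

```python
import math


def gaze_vector(pitch, yaw):
    """Convert (pitch, yaw) in radians to a unit 3D gaze vector.

    The axis convention here is an assumption; the angular error is
    unaffected as long as both gazes use the same convention.
    """
    return (
        -math.cos(pitch) * math.sin(yaw),
        -math.sin(pitch),
        -math.cos(pitch) * math.cos(yaw),
    )


def angular_error_deg(pred, truth):
    """Angle in degrees between predicted and true (pitch, yaw) gazes."""
    a, b = gaze_vector(*pred), gaze_vector(*truth)
    dot = sum(x * y for x, y in zip(a, b))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(dot))


def mean_angular_error_deg(preds, truths):
    """Average angular error over paired predictions and ground truths."""
    errors = [angular_error_deg(p, t) for p, t in zip(preds, truths)]
    return sum(errors) / len(errors)


# Two hypothetical samples: one off by 10 degrees in yaw, one by 5 in pitch.
preds = [(0.0, math.radians(10.0)), (math.radians(5.0), 0.0)]
truths = [(0.0, 0.0), (0.0, 0.0)]
print(round(mean_angular_error_deg(preds, truths), 2))  # 7.5
```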

Table 8 shows a summary of selected appearance-based studies.

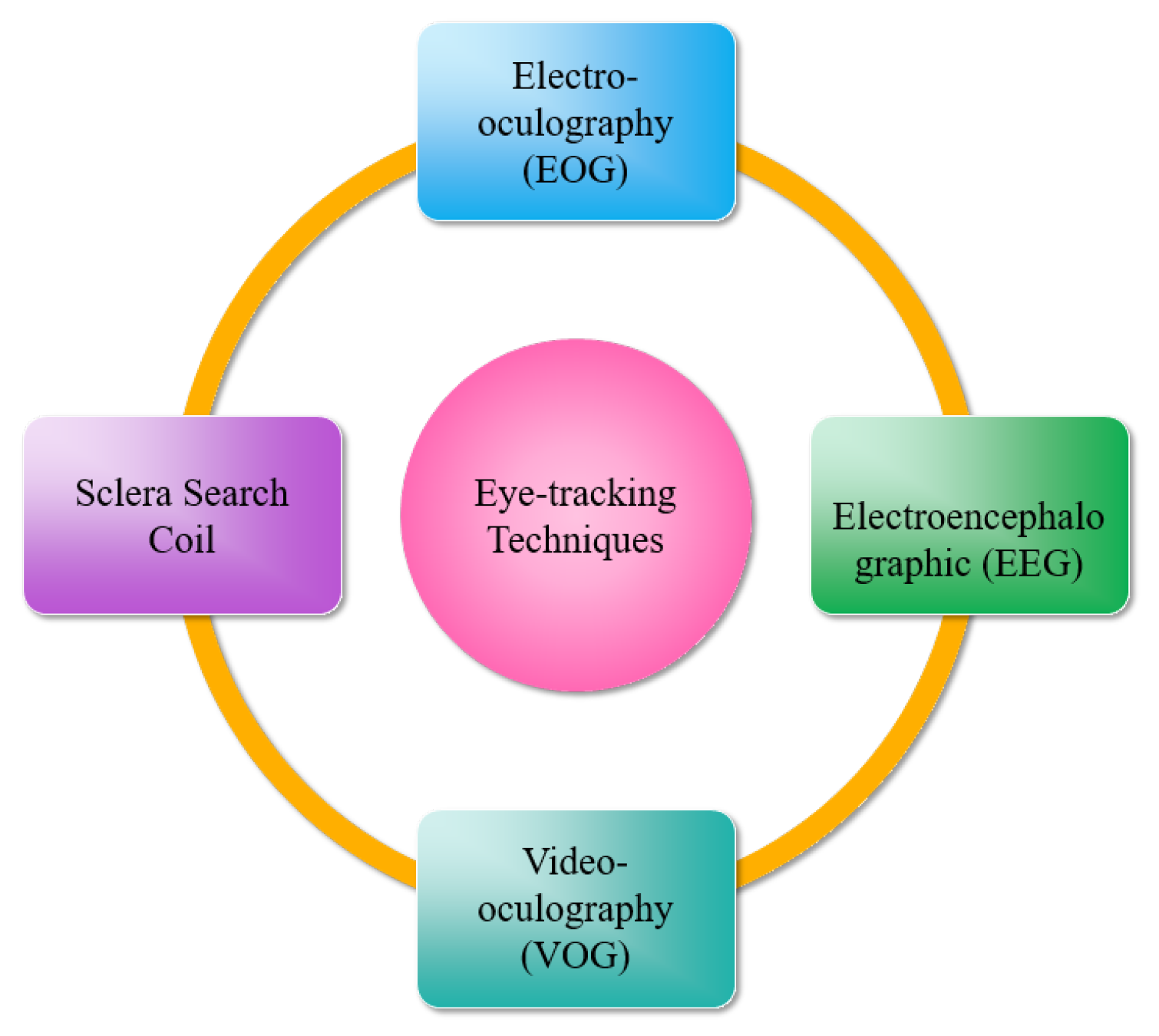

Among the methods reviewed, the scleral coil, EEG, and some EOG-based approaches are primarily experimental and used in controlled research environments. In contrast, video-based techniques are widely adopted in commercial eye-tracking solutions. To provide clarity on technological maturity, Table 9 summarizes the readiness level of each eye-tracking method, distinguishing widely commercialized approaches from those primarily used in research or emerging applications.