- Article

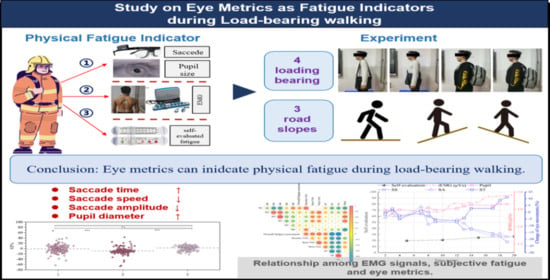

Including Eye Movement in the Assessment of Physical Fatigue Under Different Loading Types and Road Slopes

Yixuan Wei, Xueli Wen, Longzhe Jin, and 3 more authors

Background: Emergency rescuers frequently carry heavy equipment for extended periods, making musculoskeletal disorders a major occupational concern. Loading type and road slope both contribute to physical fatigue, yet the assessment of physical fatigue under these conditions remains limited. Aim: This study investigates physical fatigue under different loading types and road slopes using both electromyography (EMG) and eye movement metrics. In particular, it compares EMG with eye movement metrics, a non-contact data source that remains largely unexplored for physical fatigue assessment. Method: Prolonged load-bearing walking was simulated to replicate the physical demands placed on emergency rescuers. Eighteen male participants completed experimental trials covering four loading types and three road slopes. Results: (1) Loading type and road slope significantly affected EMG activity, eye movement metrics, and perceptual responses. (2) The rates of change of saccade time (ST), saccade speed (SS), and saccade amplitude (SA) differed significantly across three stages defined by perceptual fatigue. ST, SS, and SA correlated strongly with subjective fatigue throughout the entire load-bearing walking process, whereas pupil diameter correlated only moderately with subjective ratings. (3) Eye movement metrics were incorporated into multivariate quadratic regression models to quantify physical fatigue under different loading types and road slopes. Conclusions: These findings improve the understanding of physical fatigue mechanisms by demonstrating the potential of eye movement metrics as non-invasive indicators for multidimensional fatigue monitoring in work environments with varying loading types and road slopes.
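For readers unfamiliar with the model class named in result (3), the following is a minimal sketch of how a multivariate quadratic regression can be fitted by ordinary least squares. It is not the authors' model: the abstract does not give the predictors, the model form, or any coefficients, so the choice of three predictors (standing in for ST, SS, and SA), the inclusion of cross terms, the function names, and the data (purely synthetic) are all assumptions made for illustration.

```python
from itertools import combinations_with_replacement

import numpy as np


def quadratic_design_matrix(X):
    """Return columns [1, x_i, x_i * x_j for i <= j] for each row of X."""
    X = np.asarray(X, dtype=float)
    n_samples, n_features = X.shape
    columns = [np.ones(n_samples)]                       # intercept
    columns.extend(X[:, i] for i in range(n_features))   # linear terms
    columns.extend(                                      # squares and cross terms
        X[:, i] * X[:, j]
        for i, j in combinations_with_replacement(range(n_features), 2)
    )
    return np.column_stack(columns)


def fit_quadratic(X, y):
    """Least-squares coefficients of the full quadratic model."""
    coef, *_ = np.linalg.lstsq(quadratic_design_matrix(X), y, rcond=None)
    return coef


def predict_quadratic(coef, X):
    """Evaluate a fitted quadratic model on new rows of X."""
    return quadratic_design_matrix(X) @ coef


# Synthetic demonstration only: three hypothetical predictors standing in
# for ST, SS, and SA, and a noise-free response built from known
# (made-up) coefficients. None of these values come from the study.
rng = np.random.default_rng(0)
X_demo = rng.uniform(size=(50, 3))
true_coef = np.arange(1, 11) / 10.0   # 1 intercept + 3 linear + 6 quadratic
y_demo = quadratic_design_matrix(X_demo) @ true_coef

coef_demo = fit_quadratic(X_demo, y_demo)
```

With three predictors the design matrix has ten columns (one intercept, three linear terms, six second-order terms), so the fit needs comfortably more than ten observations to be well determined.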

27 January 2026