Article

Uncertainty-Aware Evidential Fusion for Multi-Modal Object Detection in Autonomous Driving

Qihang Yang, Yang Zhao and Hong Cheng

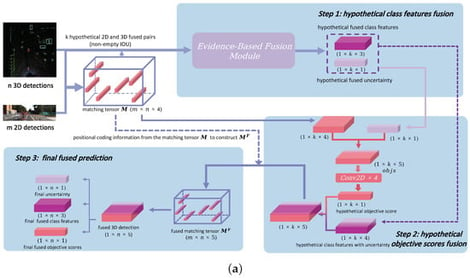

Autonomous driving requires object detection systems that integrate heterogeneous sensor data to overcome the inherent limitations of unimodal approaches. Multi-modal fusion strategies are a promising solution, but they face significant challenges: early fusion must resolve complex data alignment, while deep fusion carries a heavy computational burden and a risk of overfitting. To address these issues, we propose a Multi-modal Multi-class Late Fusion (MMLF) framework that operates at the decision level. Because fusion is applied to detector outputs, this late-fusion strategy leaves the architecture of each individual detector unchanged and allows diverse modalities to be integrated flexibly. A key innovation of our approach is an evidence-theoretic uncertainty quantification mechanism, based on Dempster-Shafer theory, which provides a mathematically grounded confidence measure. Comprehensive offline evaluations on the KITTI benchmark dataset show substantial performance improvements across multiple metrics (including 2D detection, 3D detection, and bird’s-eye view tasks), together with significant reductions in uncertainty estimates of approximately 77% for cars, 76% for pedestrians, and 67% for cyclists. Together, these results improve both the reliability and the interpretability of object detection outcomes. This work provides a versatile and scalable solution for multi-modal object detection in autonomous driving applications.
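To illustrate the kind of decision-level evidence combination that Dempster-Shafer theory supports, the following is a minimal sketch of Dempster's rule of combination for two detectors. It is not the MMLF implementation. The class frame, the mass values, the function name `dempster_combine`, and the choice to read the mass left on the full frame as "uncertainty" are all assumptions made for illustration.

```python
from itertools import product

# Hypothetical frame of discernment: the three object classes named in the abstract.
CAR = frozenset({"car"})
PEDESTRIAN = frozenset({"pedestrian"})
CYCLIST = frozenset({"cyclist"})
THETA = CAR | PEDESTRIAN | CYCLIST  # full frame: "could be any class"


def dempster_combine(m1, m2):
    """Combine two mass functions (dict: frozenset -> mass) with Dempster's rule."""
    combined = {}
    conflict = 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        intersection = a & b
        if intersection:
            # Agreeing evidence: the product mass goes to the intersection.
            combined[intersection] = combined.get(intersection, 0.0) + mass_a * mass_b
        else:
            # Contradictory evidence: accumulate the conflict mass K.
            conflict += mass_a * mass_b
    if conflict >= 1.0:
        raise ValueError("Sources are in total conflict; the rule is undefined.")
    # Renormalise by 1 - K so the combined masses sum to one.
    return {focal: mass / (1.0 - conflict) for focal, mass in combined.items()}


# Illustrative (made-up) masses for one matched detection from two modalities.
camera = {CAR: 0.6, THETA: 0.4}  # 0.4 of the belief is left uncommitted
lidar = {CAR: 0.7, THETA: 0.3}   # 0.3 of the belief is left uncommitted

fused = dempster_combine(camera, lidar)
print(f"fused belief in 'car': {fused[CAR]:.2f}")
print(f"fused uncommitted mass: {fused[THETA]:.2f}")
```

In this toy case the two sources agree, so the mass left on the full frame after fusion (0.12) is lower than that of either input (0.4 and 0.3). This shows qualitatively how fusing consistent evidence can reduce an uncertainty estimate; the percentages reported above come from the KITTI evaluation, not from this sketch.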

13 February 2026