Journal Description

Big Data and Cognitive Computing

Big Data and Cognitive Computing is an international, peer-reviewed, open access journal on big data and cognitive computing published monthly online by MDPI.

- Open Access: free for readers, with article processing charges (APC) paid by authors or their institutions.

- High Visibility: indexed within Scopus, ESCI (Web of Science), dblp, Inspec, Ei Compendex, and other databases.

- Journal Rank: JCR - Q1 (Computer Science, Theory and Methods) / CiteScore - Q1 (Computer Science Applications)

- Rapid Publication: manuscripts are peer-reviewed and a first decision is provided to authors approximately 23.1 days after submission; acceptance to publication is undertaken in 4.6 days (median values for papers published in this journal in the second half of 2025).

- Recognition of Reviewers: reviewers who provide timely, thorough peer-review reports receive vouchers entitling them to a discount on the APC of their next publication in any MDPI journal, in appreciation of the work done.

- Journal Cluster of Artificial Intelligence: AI, AI in Medicine, Algorithms, BDCC, MAKE, MTI, Stats, Virtual Worlds and Computers.

Impact Factor: 4.4 (2024); 5-Year Impact Factor: 4.2 (2024)

Latest Articles

Evaluating Architecture Scalability and Transfer Learning in Urban Scene Segmentation Using Explainable AI

Big Data Cogn. Comput. 2026, 10(3), 75; https://doi.org/10.3390/bdcc10030075 (registering DOI) - 1 Mar 2026

Abstract

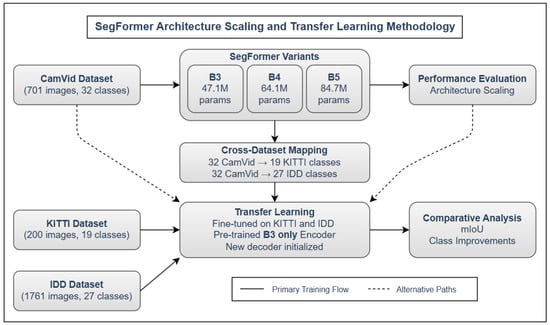

Semantic segmentation plays a pivotal role in autonomous driving, enabling pixel-level understanding of road scenes. Although transformer-based models such as SegFormer have shown exceptional performance on large datasets, their generalization to smaller and geographically diverse datasets remains underexplored. In this work, we analyze the scalability and transferability of SegFormer variants (B3, B4, B5) using CamVid as the base dataset. We perform cross-dataset transfer learning to KITTI and IDD, evaluate class-level performance, and explore explainable AI via confidence heatmaps. Our findings show that SegFormer-B5 achieves the highest accuracy (

Open Access Article

Data-Driven Ergonomic Load Dynamics for Human–Autonomy Teams

by Nikitas Gerolimos, Vasileios Alevizos and Georgios Priniotakis

Big Data Cogn. Comput. 2026, 10(3), 74; https://doi.org/10.3390/bdcc10030074 (registering DOI) - 28 Feb 2026

Abstract

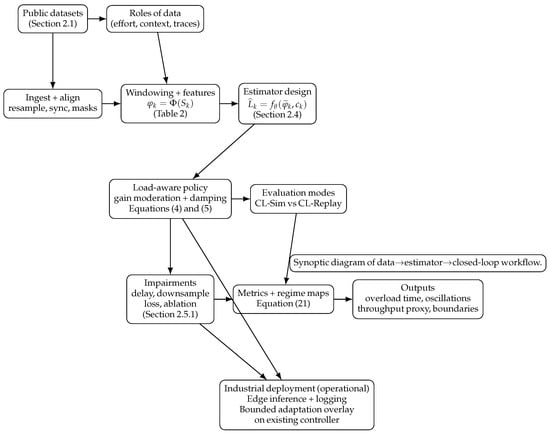

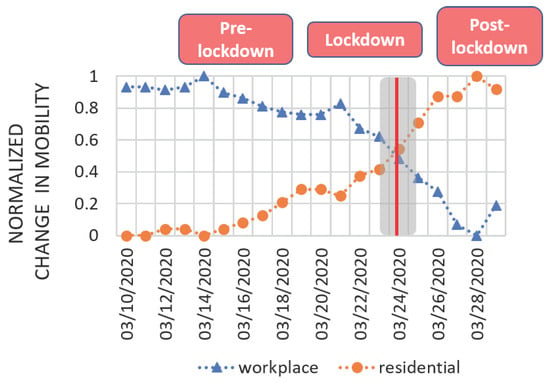

Ergonomic load in human–autonomy teams is commonly treated as a static score or a post-hoc audit, even though modern sensing and communication enable real-time regulation of operator effort. We model ergonomic load as a dissipative dynamical state inferred online from multimodal effort proxies and task context, and couple it to autonomy through load-dependent gain moderation and compliance shaping. The method is evaluated on public human–swarm and human–robot interaction traces together with effort-proximal wearable and myographic datasets using a unified, windowed pipeline and controlled stress tests that emulate latency, downsampling, packet loss, and channel dropouts. On a large human–swarm benchmark, the estimator achieves strong discrimination and calibration for rare high-load events (up to AUROC

Open Access Article

Building Prototype Evolution Pathway for Emotion Recognition in User-Generated Videos

by Yujie Liu, Zhenyang Dong, Yante Li and Guoying Zhao

Big Data Cogn. Comput. 2026, 10(3), 73; https://doi.org/10.3390/bdcc10030073 (registering DOI) - 28 Feb 2026

Abstract

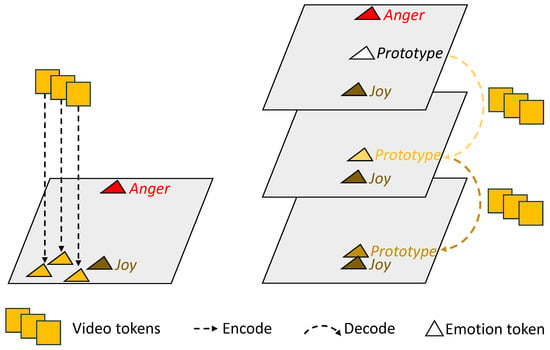

Large-scale pretrained foundation models are increasingly essential for affective analysis in user-generated videos. However, current approaches typically reuse generic multi-modal representations directly with task-specific adapters learned from scratch, and their performance is limited by the large affective domain gap and scarce emotion annotations. To address these issues, we introduce a novel paradigm that leverages auxiliary cross-modal priors to enhance unimodal emotion modeling, effectively exploiting modality-shared semantics and modality-specific inductive biases. Specifically, we propose a progressive prototype evolution framework that gradually transforms a neutral prototype into discriminative emotional representations through fine-grained cross-modal interactions with visual cues. The auxiliary prior serves as a structural constraint, reframing the adaptation challenge from a difficult domain shift problem into a more tractable prototype shift within the affective space. To ensure robust prototype construction and guided evolution, we further design category-aggregated prompting and bidirectional supervision mechanisms. Extensive experiments on VideoEmotion-8, Ekman-6, and MusicVideo-6 validate the superiority of our approach, achieving state-of-the-art results and demonstrating the effectiveness of leveraging auxiliary modality priors for foundation-model-based emotion recognition.

(This article belongs to the Special Issue Sentiment Analysis in the Context of Big Data)

Open Access Article

Automating Data Product Discovery with Large Language Models and Metadata Reasoning

by Michalis Pingos, Artemis Photiou and Andreas S. Andreou

Big Data Cogn. Comput. 2026, 10(3), 72; https://doi.org/10.3390/bdcc10030072 (registering DOI) - 28 Feb 2026

Abstract

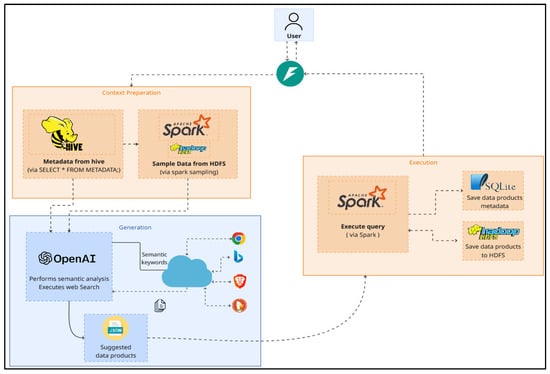

The exponential growth of data over the past decade has created new challenges in transforming raw information into actionable knowledge, particularly through the development of data products. The latter is essentially the result of querying and retrieving specific portions of data from a data storage architecture at various levels of granularity. Traditionally, this transformation depends on domain experts manually analyzing datasets and providing feedback to effectively describe or annotate data that facilitates data retrieval. Nevertheless, this is a very time-consuming process that highlights the need for its potential automation. To address this challenge, the present paper proposes a framework which utilizes Large Language Models to support data product discovery through semantic metadata reasoning and executable query prototyping. The framework is evaluated across two domains and three levels of concept complexity to assess the LLM’s ability to identify relevant datasets and generate executable data product queries under varying analytical demands. The findings indicate that LLMs perform effectively in simpler scenarios, but their performance declines as conceptual complexity and dataset volume increase.

(This article belongs to the Special Issue Large Language Models for Cutting Edge Applications in Science and Humanities)

Open Access Article

A Convergent Method for Energy Optimization in Modern Hopfield Networks

by Yida Bao, Mohammad Arifuzzaman, Tran Duc Le, Tao Jiang, Jing Hou, Yuan Xing and Dongfang Hou

Big Data Cogn. Comput. 2026, 10(3), 71; https://doi.org/10.3390/bdcc10030071 (registering DOI) - 28 Feb 2026

Abstract

Modern Hopfield networks are energy-based associative memory models whose performance critically depends on the structure and optimization of their energy functions. While recent formulations substantially improve storage capacity, the resulting non-convex energy landscapes are often optimized using heuristic update rules that can be sensitive to initialization and may not provide monotonic energy descent or rigorous convergence guarantees. In this work, we propose a new energy formulation for modern Hopfield networks together with a principled iterative optimization scheme. The proposed energy admits a natural decomposition that allows optimization via the concave–convex procedure (CCCP), yielding well-defined network dynamics with guaranteed energy descent beyond classical Hopfield updates. We establish fundamental theoretical properties of the proposed framework, including non-negativity, boundedness, and monotonic decrease in the energy along iterations. In particular, we prove that the induced dynamics converge to a stationary point of the energy function, providing explicit convergence guarantees for the resulting Hopfield-type model. We further evaluate the proposed approach on synthetic classification tasks and compare its optimization behavior with that of the original Hopfield network and several standard machine learning baselines. Experimental results demonstrate improved stability, convergence behavior, and competitive classification performance. We also validate the approach on real-world benchmark datasets to demonstrate utility beyond controlled experiments. Overall, this work provides a theoretically grounded energy-based optimization framework for modern Hopfield networks, clarifying the role of principled optimization in achieving stable and convergent associative memory dynamics.

(This article belongs to the Special Issue Application of Pattern Recognition and Machine Learning)
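For readers unfamiliar with the concave–convex procedure (CCCP) named in the abstract above, the generic textbook form of the iteration and its descent argument can be written as follows. This is a sketch of standard CCCP only; the symbols $E_{\mathrm{vex}}$ and $E_{\mathrm{cave}}$ are assumed names for a convex and a differentiable concave part, and the article's specific energy function and decomposition are not reproduced here.

```latex
\begin{align}
E(x) &= E_{\mathrm{vex}}(x) + E_{\mathrm{cave}}(x), \\
x^{t+1} &\in \arg\min_{x}\; E_{\mathrm{vex}}(x) + \nabla E_{\mathrm{cave}}(x^{t})^{\top} x, \\
E(x^{t+1}) &\le E_{\mathrm{vex}}(x^{t+1}) + E_{\mathrm{cave}}(x^{t})
              + \nabla E_{\mathrm{cave}}(x^{t})^{\top}\,(x^{t+1} - x^{t}) \\
           &\le E_{\mathrm{vex}}(x^{t}) + E_{\mathrm{cave}}(x^{t}) = E(x^{t}).
\end{align}
```

The first inequality uses concavity of $E_{\mathrm{cave}}$ (its tangent plane lies above it), and the second uses the fact that $x^{t+1}$ minimizes the convex surrogate, which is why each step cannot increase the energy.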

Open Access Article

TransGoT: Structured Graph-of-Thoughts Reasoning for Machine Translation with Large Language Models

by Danying Zhang, Yixin Liu, Jie Zhao and Cai Xu

Big Data Cogn. Comput. 2026, 10(3), 70; https://doi.org/10.3390/bdcc10030070 - 27 Feb 2026

Abstract

Machine translation with large language models has recently attracted growing attention due to its flexibility and strong zero-shot and few-shot capabilities. However, most prompt-based LLM translation methods rely on linear generation or shallow self-refinement, implicitly committing to a single reasoning path. Such designs are brittle when translating long and syntactically complex sources, where reliable translation often requires structured planning and hypothesis exploration. In this paper, we propose TransGoT, a novel machine translation framework inspired by the graph-of-thoughts paradigm, which formulates translation as a structured, multi-stage reasoning process over a graph of intermediate thoughts. TransGoT explicitly decomposes translation into constraint identification, draft generation, and culture- and style-aware refinement, enabling systematic exploration and aggregation of alternative translation hypotheses. To better adapt graph-based reasoning to translation, we design two key mechanisms: (1) Uncertainty-driven thought transformation. Unlike general reasoning tasks, translation uncertainty is often localized and unevenly distributed across tokens, making holistic regeneration inefficient. We therefore design uncertainty-driven thought transformation, which leverages model-internal confidence signals to guide targeted token-level revision; (2) Dispersion-adaptive thought scoring. It emphasizes evaluation criteria with stronger inter-candidate variance to enable robust multi-criteria thought selection. We evaluate TransGoT on the WMT22 benchmarks and experimental results demonstrate that TransGoT consistently outperforms strong LLM-based translation baselines, validating the effectiveness of structured graph-based reasoning for machine translation.

(This article belongs to the Special Issue Natural Language Processing Applications in Big Data)

Open Access Review

Generative AI as a General-Purpose Technology: Foundations, Applications, and Labor Market Implications Through 2030

by Maikel Leon

Big Data Cogn. Comput. 2026, 10(3), 69; https://doi.org/10.3390/bdcc10030069 - 27 Feb 2026

Abstract

Generative Artificial Intelligence (AI) has transitioned from a research milestone to a general-purpose technology with wide-ranging implications for organizations, labor markets, and information systems. Thanks to improvements in deep learning, generative adversarial networks (GANs), variational autoencoders (VAEs), diffusion models, transformer-based language models, and reinforcement learning from human feedback (RLHF), generative AI can now create high-quality text, images, audio, code, and other types of content. This review synthesizes the core technical foundations and best practices for training, evaluation, and governance, with an emphasis on scalability and human oversight. The paper examines applications across customer service, marketing, software development, healthcare, finance, law, logistics, and the creative industries, and assesses the labor implications of generative AI using a sociotechnical lens. This study also develops a disruption index that integrates task exposure, adoption rates, time savings, and skill complementarity. The paper concludes with actionable recommendations for policymakers, organizations, and workers, emphasizing the importance of reskilling, algorithmic transparency, and inclusive innovation. Taken together, these contributions situate generative AI within broader debates about automation, augmentation, and the future of work.

(This article belongs to the Section Large Language Models and Embodied Intelligence)

Open Access Article

An Intelligent Simulation Training System for Power Grid Control and Operations

by Sheng Yang, Shengyuan Li, Yuan Fu, Wei Jiang, Wenlong You and Min Chen

Big Data Cogn. Comput. 2026, 10(3), 68; https://doi.org/10.3390/bdcc10030068 - 27 Feb 2026

Abstract

With the increasing complexity of power grid operations, operator training requires timely feedback and objective assessment. Traditional approaches based on lectures and scripted simulations provide limited personalization and weak explainability. This paper presents AI Instructors, an intelligent simulation training system for power-grid control and dispatching. The system is organized into learning, training, assessment, and analysis modules, and is built around two core technical components: (i) parameterized item generation from rule/knowledge bases using a phrase-enhanced transformer (PET), and (ii) solver-grounded, topology-aware grading with hierarchical feedback for both numeric and free-text responses. A voice interaction module is integrated to simulate telephone-based dispatch orders. We validate the system through a pilot deployment with licensed dispatch operators and scenario experiments on benchmark cases. Compared with a conventional scripted DTS workflow, AI Instructors achieves higher stepwise procedure accuracy (68%→90%), a lower topology-violation rate (32%→11%), and shorter response time (120 s→72 s), while increasing the proportion of parameterized questions and accelerating skill acquisition. These results suggest that combining adaptive sequencing with topology-safe, explainable evaluation can improve training effectiveness and operational safety.

Open Access Article

Validating the Effectiveness of Fine-Tuning for Semantic Classification of Japanese Katakana Words: An Analysis of Frequency and Polysemy Effects on Accuracy

by Kazuki Kodaki and Minoru Sasaki

Big Data Cogn. Comput. 2026, 10(3), 67; https://doi.org/10.3390/bdcc10030067 - 26 Feb 2026

Abstract

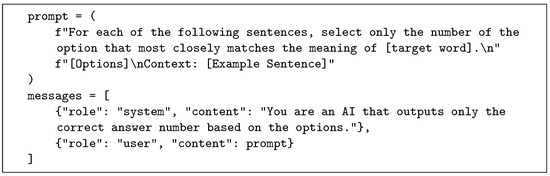

In semantic classification of katakana words using large language models and pre-trained language models, semantic divergences from original English meanings, such as those found in Wasei-Eigo which is Japanese-made English, and the inherent sense ambiguity in katakana words may affect model accuracy. To analyze the impact of these loanword semantic characteristics on classification accuracy, we created a large-scale dataset from the Balanced Corpus of Contemporary Written Japanese. We extracted 403,819 sentences covering 230 katakana words defined in dictionaries and suitable for word sense disambiguation tasks, and used the gpt-4.1-mini model to predict the meaning of the target words based on their context, to create annotation data. We then fine-tuned the pre-trained language model DeBERTa V3 with this data. We compared baseline and fine-tuned model accuracy, dividing data into four quadrants based on frequency and polysemy to conduct statistical analysis and explore strategies for improving accuracy. We also tested the hypothesis that high-frequency, low-polysemy words would achieve the highest accuracy, while low-frequency, high-polysemy words would achieve the lowest. As a result, the fine-tuned model showed an average accuracy improvement of approximately 53% compared to the baseline model. As hypothesized, high-frequency, low-polysemy words achieved the highest accuracy (93.93%), while low-frequency, high-polysemy words achieved the lowest (81.14%). Our analysis quantitatively revealed that both frequency and polysemy contributed to accuracy improvement, but polysemy had a greater impact on accuracy than frequency.

(This article belongs to the Special Issue Artificial Intelligence (AI) and Natural Language Processing (NLP))

Open Access Article

Comparative Read Performance Analysis of PostgreSQL and MongoDB in E-Commerce: An Empirical Study of Filtering and Analytical Queries

by Jovita Urnikienė, Vaida Steponavičienė and Svetoslav Atanasov

Big Data Cogn. Comput. 2026, 10(2), 66; https://doi.org/10.3390/bdcc10020066 - 19 Feb 2026

Abstract

This paper presents a comparative analysis of read performance for PostgreSQL and MongoDB in e-commerce scenarios, using identical datasets in a resource-constrained single-host environment. The results demonstrate that PostgreSQL executes complex analytical queries 1.6–15.1 times faster, depending on the query type and data volume. The study employed synthetic data generation with the Faker library across three stages, processing up to 300,000 products and executing each of 6 query types 15 times. Both filtering and analytical queries were tested on non-indexed data in a controlled localhost environment with PostgreSQL 17.5 and MongoDB 7.0.14, using default configurations. PostgreSQL showed 65–80% shorter execution times for multi-criteria queries, while MongoDB required approximately 33% less disk space. These findings suggest that normalized relational schemas are advantageous for transactional e-commerce systems where analytical queries dominate the workload. The results are directly applicable to small and medium e-commerce developers operating in budget-constrained, single-host deployment environments when choosing between relational and document-oriented databases for structured transactional data with read-heavy analytical workloads. A minimal indexed validation confirms that the baseline trends remain consistent under a simple indexing configuration. Future work will examine broader indexing strategies, write-intensive workloads, and distributed deployment scenarios.

Open Access Perspective

Integration of Lean Analytics and Industry 6.0: A Novel Meta-Theoretical Framework for Antifragile, Generative AI-Orchestrated, Circular–Regenerative, and Hyper-Connected Manufacturing Ecosystems

by Mohammad Shahin, Mazdak Maghanaki and F. Frank Chen

Big Data Cogn. Comput. 2026, 10(2), 65; https://doi.org/10.3390/bdcc10020065 - 17 Feb 2026

Abstract

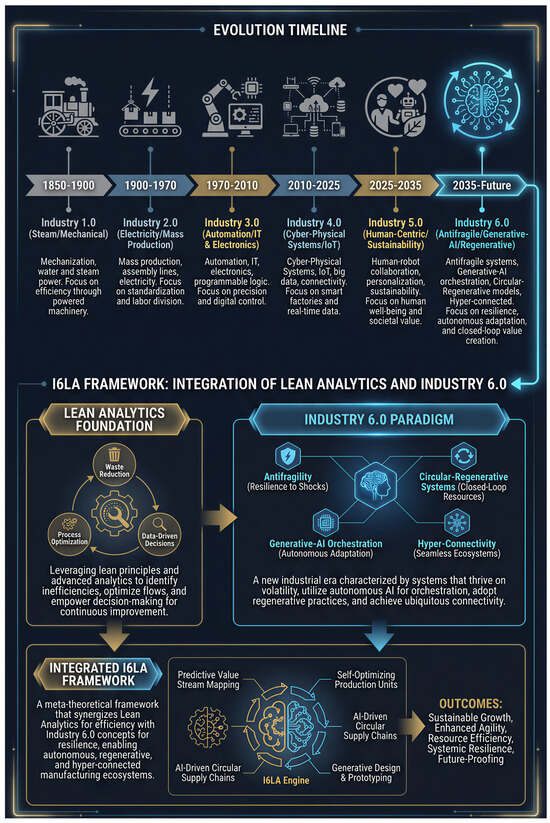

The convergence of Lean manufacturing principles with Industry 4.0 has yielded significant operational improvements, yet the emerging paradigm of Industry 6.0—characterized by antifragile, autonomous, and sustainable systems—demands a fundamental rethinking of existing analytical frameworks. This paper introduces the Industry 6.0 Lean Analytics (I6LA) Framework, a novel meta-theoretical approach that integrates Lean principles with the core concepts of Industry 6.0. By systematically analyzing the limitations of current Lean analytics in the context of Industry 6.0 requirements, we identify critical gaps in areas such as system resilience, AI-driven autonomy, and circular economy integration. The I6LA Framework addresses these gaps through four new theoretical pillars: Antifragile Lean Systems Theory, generative AI-Orchestrated Value Streams, Circular–Regenerative Analytics, and Hyper-Connected Ecosystem Integration. This research provides a new set of mathematical models for measuring antifragility, generative orchestration efficiency, and circularity, offering a comprehensive analytical toolkit for the next generation of manufacturing. The framework’s primary contribution is a paradigm shift from optimizing stable, human-in-the-loop systems to managing dynamic, autonomous ecosystems that thrive on volatility and are regenerative by design. This paper provides both a robust theoretical foundation and practical implementation guidance for organizations navigating the transition to Industry 6.0.

(This article belongs to the Section Cognitive System)

Open Access Article

Efficient Time Series Visual Exploration for Insight Discovery

by Heba Helal and Mohamed A. Sharaf

Big Data Cogn. Comput. 2026, 10(2), 64; https://doi.org/10.3390/bdcc10020064 - 16 Feb 2026

Abstract

Visual exploration of time series data is essential for uncovering meaningful insights in domains such as healthcare monitoring and financial analysis, yet it remains computationally challenging due to the combinatorial explosion of potential subsequence comparisons. For long time series, an exhaustive comparison of all possible subsequence pairs becomes prohibitively expensive, limiting interactive exploration. This paper presents the TiVEx (Time Series Visual Exploration) family of algorithms for efficiently discovering the top-k most dissimilar subsequence pairs in comparative time series analysis. TiVEx achieves scalability through three complementary strategies: TiVEx-sharing exploits computational reuse across overlapping subsequence windows, eliminating redundant distance calculations; TiVEx-pruning employs distance-based upper bounds to eliminate unpromising candidates without exhaustive evaluation; and TiVEx-hybrid integrates both mechanisms to maximize efficiency gains. The key observation is that overlapping subsequences share a substantial computational structure, which can be systematically exploited while maintaining result optimality through provably correct pruning bounds. Extensive experiments on six diverse datasets demonstrate that TiVEx-hybrid achieves up to 84% reduction in distance calculations compared to exhaustive search while producing identical top-k results. Compared to state-of-the-art subsequence comparison methods, TiVEx-hybrid achieves 2.3× improvement in computational efficiency. Our effectiveness analysis confirms that TiVEx achieves result quality within 5% of exhaustive search even when exploring only a subset of candidate positions, enabling scalable visual exploration without compromising insight quality.

(This article belongs to the Special Issue Application of Pattern Recognition and Machine Learning)
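The idea of reusing computation across overlapping windows, mentioned in the abstract above, can be illustrated with a deliberately simplified sketch. It is not the TiVEx algorithm: it assumes two equal-length series compared only at aligned positions, uses squared Euclidean distance, and the function name `top_k_dissimilar_windows` is hypothetical. Each window's distance is derived from the previous one by adding one term and dropping one, instead of being recomputed from scratch.

```python
import heapq
from typing import List, Sequence, Tuple


def top_k_dissimilar_windows(
    a: Sequence[float], b: Sequence[float], w: int, k: int
) -> List[Tuple[int, float]]:
    """Return up to k (start_index, squared_distance) pairs, largest distance first.

    Simplifying assumption (not from the article): only aligned windows
    a[i:i+w] vs b[i:i+w] are compared.
    """
    if len(a) != len(b):
        raise ValueError("series must have equal length")
    if not 1 <= w <= len(a):
        raise ValueError("window length must be between 1 and len(a)")

    # Pointwise squared differences, computed once and shared by all windows.
    sq = [(x - y) ** 2 for x, y in zip(a, b)]

    # Distance of the first window, then slide: add the entering term,
    # subtract the leaving term.
    dist = sum(sq[:w])
    scores = [(dist, 0)]
    for start in range(1, len(a) - w + 1):
        dist += sq[start + w - 1] - sq[start - 1]
        scores.append((dist, start))

    best = heapq.nlargest(k, scores)
    return [(start, d) for d, start in best]


if __name__ == "__main__":
    a = [0, 0, 0, 0, 0, 0]
    b = [0, 0, 5, 5, 0, 0]
    print(top_k_dissimilar_windows(a, b, w=2, k=1))  # [(2, 50)]
```

With floating-point inputs the running sum can accumulate rounding error over very long series, so a production version would likely need periodic recomputation; pruning with upper bounds, as the abstract describes, is not attempted here.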

Open Access Review

Cognitive Assemblages: Living with Algorithms

by Stéphane Grumbach

Big Data Cogn. Comput. 2026, 10(2), 63; https://doi.org/10.3390/bdcc10020063 - 16 Feb 2026

Abstract

The rapid expansion of algorithmic systems has transformed cognition into an increasingly distributed and collective enterprise, giving rise to what can be described as cognitive assemblages, dynamic constellations of humans, institutions, data infrastructures, and artificial agents. This paper traces the historical and conceptual evolution that has led to this shift. First, we show how cognition, once conceived as the property of autonomous individuals, has progressively become embedded in socio-technical networks in which algorithmic processes participate as co-agents. Second, we revisit the progressive awareness of human cognitive limits, from bounded rationality to contemporary theories of extended mind. These frameworks anticipate and help explain today’s hybrid cognitive ecologies. Third, we assess the philosophical implications for Enlightenment ideals of autonomy, rationality, and self-governance, showing how these concepts must be reinterpreted in light of pervasive algorithmic intermediation. Finally, we examine global initiatives that seek to integrate augmented cognitive capacities into large-scale cybernetic forms of societal coordination, ranging from digital platforms and data spaces to AI-driven governance systems. These developments offer new opportunities for steering complex societies under conditions of globalization, environmental disruption, and the rise of autonomous intelligent systems, yet they also raise profound questions regarding control, accountability, and democratic legitimacy. We argue that understanding cognitive assemblages is essential to designing socio-technical systems capable of supporting collective intelligence while preserving human values in an era of accelerating complexity.

Open Access Article

Skill Classification of Youth Table Tennis Players Using Sensor Fusion and the Random Forest Algorithm

by Yung-Hoh Sheu, Cheng-Yu Huang, Li-Wei Tai, Tzu-Hsuan Tai and Sheng K. Wu

Big Data Cogn. Comput. 2026, 10(2), 62; https://doi.org/10.3390/bdcc10020062 - 15 Feb 2026

Abstract

This study addresses the issue of inaccurate results in traditional table tennis player classification, which is often influenced by subjective judgment and environmental factors, by proposing a youth table tennis player classification system based on sensor fusion and the random forest algorithm. The system utilizes an embedded intelligent table tennis racket equipped with an ICM20948 nine-axis sensor and a wireless transmission module to capture real-time acceleration and angular velocity data during players’ strokes while synchronously employing a camera with OpenPose to extract joint angle variations. A total of 40 players’ stroke data were collected. Due to the limited sample size of top-tier players, the Synthetic Minority Over-sampling Technique (SMOTE) was applied, resulting in a final dataset of 360 records. Multiple key motion indicators were then computed and stored in a dedicated database. Experimental results showed that the proposed system, powered by the random forest algorithm, achieved a classification accuracy of 91.3% under conventional cross-validation, while subject-independent LOSO validation yielded a more conservative accuracy of 70.89%, making it a valuable reference for coaches and referees in conducting objective player classification. Future work will focus on expanding the dataset of domestic high-performance athletes and integrating precise sports science resources to further enhance the system’s performance and algorithmic models, thereby promoting the scientific selection of national team players and advancing the intelligent development of table tennis.

(This article belongs to the Section Artificial Intelligence and Multi-Agent Systems)
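The abstract above reports two accuracies, one under conventional cross-validation and one under subject-independent LOSO (leave-one-subject-out) validation. The difference between the two protocols is whether records from the same player can appear on both sides of a split. The following minimal sketch shows how LOSO folds can be built; the function name `loso_splits` and the player labels are hypothetical, and this is not the authors' code.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterator, List, Sequence, Tuple


def loso_splits(
    subject_ids: Sequence[Hashable],
) -> Iterator[Tuple[Hashable, List[int], List[int]]]:
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject.

    Every record of the held-out subject goes to the test set, so no subject
    contributes to both training and testing within a fold.
    """
    by_subject: Dict[Hashable, List[int]] = defaultdict(list)
    for idx, sid in enumerate(subject_ids):
        by_subject[sid].append(idx)

    for held_out, test_idx in by_subject.items():
        train_idx = [
            i
            for sid, idxs in by_subject.items()
            if sid != held_out
            for i in idxs
        ]
        yield held_out, train_idx, test_idx


if __name__ == "__main__":
    # Hypothetical labels: one entry per stroke record, naming the player.
    records = ["p1", "p1", "p2", "p3", "p3"]
    for subject, train, test in loso_splits(records):
        print(subject, train, test)
```

When a dataset is oversampled before splitting, synthetic records would need to inherit the subject label of the records they were generated from for the folds to stay subject-independent; how the article handles this is not stated in the abstract.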

Open Access Article

Underwater Visual-Servo Alignment Control Integrating Geometric Cognition Compensation and Confidence Assessment

by Jinkun Li, Lingyu Sun, Minglu Zhang and Xinbao Li

Big Data Cogn. Comput. 2026, 10(2), 61; https://doi.org/10.3390/bdcc10020061 - 14 Feb 2026

Abstract

To meet the requirements for the automatic alignment, insertion, and inspection of guide-tube opening pins on the upper core plate in a component pool during refueling outages of nuclear power units, this paper proposes a cognition-enhanced visual-servoing framework that integrates geometric cognition-based compensation, observation-confidence modeling, and constraint-aware optimal control. The framework addresses the key challenge posed by the coexistence of long-term geometric drift and underwater observation uncertainty. Specifically, historical closed-loop data are leveraged to learn and compensate for systematic geometric errors online, substantially improving coarse-positioning accuracy. In addition, an explicit confidence model is introduced to quantitatively assess the reliability of visual measurements. Building on these components, a confidence-driven, finite-horizon, constrained model predictive control strategy is designed to achieve safe and efficient finite-step convergence while strictly respecting actuator physical constraints. Ground experiments and deep-water component-pool validations demonstrate that the proposed method reduces coarse-positioning error by approximately 75%, achieves stable sub-millimeter alignment with an ample engineering safety margin, and effectively decreases erroneous insertions and the need for manual intervention. These results confirm the engineering applicability and safety advantages of the proposed cognition-enhanced visual-servoing framework for underwater alignment tasks in nuclear component pools.

(This article belongs to the Special Issue Field Robotics and Artificial Intelligence (AI))

Open Access Article

Reliability of LLM Inference Engines from a Static Perspective: Root Cause Analysis and Repair Suggestion via Natural Language Reports

by

Hongwei Li and Yongjun Wang

Big Data Cogn. Comput. 2026, 10(2), 60; https://doi.org/10.3390/bdcc10020060 - 13 Feb 2026

Abstract

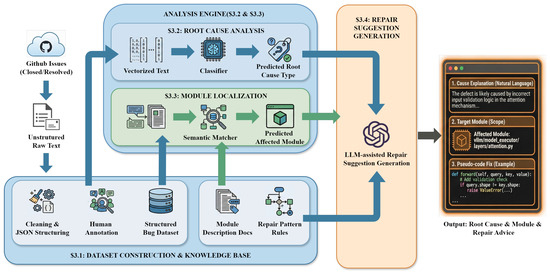

Large Language Model (LLM) inference engines are becoming critical system infrastructure, yet their increasing architectural complexity makes defects difficult to diagnose and repair. Existing reliability studies predominantly focus on model behavior or training frameworks, leaving inference engine bugs underexplored, especially in settings where execution-based debugging is impractical. We present a static, issue-centric approach for automated root cause analysis and repair suggestion generation for LLM inference engines. Based solely on issue reports and developer discussions, we construct a real-world defect dataset and annotate each issue with a semantic root cause category and affected system module. Leveraging text-based representations, our framework performs root cause classification and coarse-grained module localization without requiring code execution or specialized runtime environments. We further integrate structured repair patterns with a large language model to generate interpretable and actionable repair suggestions. Experiments on real-world vLLM issues demonstrate that our approach achieves effective root cause identification and module localization under limited and imbalanced data. A cross-engine evaluation further shows promising generalization to TensorRT-LLM. Human evaluation confirms that the generated repair suggestions are correct, useful, and clearly expressed. Our results indicate that static, issue-level analysis is a viable foundation for scalable debugging assistance in complex LLM inference engines. The dataset and implementation will be publicly released to facilitate future research.

(This article belongs to the Special Issue Advanced Software and Machine Learning Techniques for System Architectures and Big Data)

Open Access Article

PLTA-FinBERT: Pseudo-Label Generation-Based Test-Time Adaptation for Financial Sentiment Analysis

by

Hai Yang, Hainan Chen, Chang Jiang, Juntao He and Pengyang Li

Big Data Cogn. Comput. 2026, 10(2), 59; https://doi.org/10.3390/bdcc10020059 - 11 Feb 2026

Abstract

Financial sentiment analysis leverages natural language processing techniques to quantitatively assess sentiment polarity and emotional tendencies in financial texts. Its practical application in investment decision-making and risk management faces two major challenges: the scarcity of high-quality labeled data due to expert annotation costs, and semantic drift caused by the continuous evolution of market language. To address these issues, this study proposes PLTA-FinBERT, a pseudo-label generation-based test-time adaptation framework that enables dynamic self-learning without requiring additional labeled data. The framework consists of two modules: a multi-perturbation pseudo-label generation mechanism that enhances label reliability through consistency voting and confidence-based filtering, and a test-time dynamic adaptation strategy that iteratively updates model parameters based on high-confidence pseudo-labels, allowing the model to continuously adapt to new linguistic patterns. PLTA-FinBERT achieves 0.8288 accuracy on the sentiment classification dataset of financial sentiment analysis, representing an absolute improvement of 2.37 percentage points over the benchmark. On the FiQA sentiment intensity prediction task, it obtains an

Open Access Article

Bias Correction and Explainability Framework for Large Language Models: A Knowledge-Driven Approach

by

Xianming Yang, Qi Li, Chengdong Qian, Haitao Wang, Yonghui Wu and Wei Wang

Big Data Cogn. Comput. 2026, 10(2), 58; https://doi.org/10.3390/bdcc10020058 - 10 Feb 2026

Abstract

Large Language Models (LLMs) have demonstrated extraordinary capabilities in natural language generation; however, their real-world deployment is frequently hindered by the generation of factually incorrect or biased content, along with an inherent deficiency in transparency. To address these critical limitations and thereby enhance the reliability and explainability of LLM outputs, this study proposes a novel integrated framework, namely the Adaptive Knowledge-Driven Correction Network (AKDC-Net), which incorporates three core algorithmic innovations. Firstly, the Hierarchical Uncertainty-Aware Bias Detector (HUABD) performs multi-level linguistic analysis (lexical, syntactic, semantic, and pragmatic) and, for the first time, decomposes predictive uncertainty into epistemic and aleatoric components. This decomposition enables principled, interpretable bias detection with clear theoretical underpinnings. Secondly, the Neural-Symbolic Knowledge Graph Enhanced Corrector (NSKGEC) integrates a temporal graph neural network with a differentiable symbolic reasoning module, facilitating logically consistent and factually grounded corrections based on dynamically updated knowledge sources. Thirdly, the Contrastive Learning-driven Multimodal Explanation Generator (CLMEG) leverages a cross-modal attention mechanism within a contrastive learning paradigm to generate coherent, high-quality textual and visual explanations that enhance the interpretability of LLM outputs. Extensive evaluations were conducted on a challenging medical domain dataset to validate the effectiveness of the proposed AKDC-Net framework. Experimental results demonstrate significant improvements over state-of-the-art baselines: specifically, a 14.1% increase in the F1-score for bias detection, a 19.4% enhancement in correction quality, and a 31.4% rise in user trust scores. These findings establish a new benchmark for the development of more trustworthy and transparent artificial intelligence (AI) systems, laying a solid foundation for the broader and more reliable application of LLMs in high-stakes domains.

(This article belongs to the Special Issue Enhancement Optimization Techniques on Large Language Model)

Open Access Article

Enhancing the Artificial Rabbit Optimizer Using Fuzzy Rule Interpolation

by

Mohammad Almseidin

Big Data Cogn. Comput. 2026, 10(2), 57; https://doi.org/10.3390/bdcc10020057 - 10 Feb 2026

Abstract

Metaheuristic optimization algorithms have demonstrated their effectiveness in solving complex optimization tasks, such as those related to Intrusion Detection Systems (IDSs). They have been widely used to enhance the detection rate of various types of cyber attacks by reducing the feature space or tuning the model’s hyperparameters. The Artificial Rabbit Optimizer (ARO) mimics rabbits’ intelligent foraging and hiding behavior and has seen widespread adoption in the optimization field owing to its simple design and ease of implementation. However, ARO can get trapped in local optima due to its limited diversity in population dynamics. Although the transition between phases is managed via an energy shrink factor, fine-tuning this balance remains challenging and unexplored. These limitations could reduce the ARO algorithm’s effectiveness in high-dimensional spaces, such as those encountered in IDSs. This paper introduces a novel enhancement of the original ARO that integrates Fuzzy Rule Interpolation (FRI) to compute the energy factor dynamically during the optimization process. The integration aims to improve solution accuracy, maintain population diversity, and accelerate convergence by controlling the exploration-exploitation balance, particularly in high-dimensional and complex problems such as IDS. To validate the proposed hybrid approach, we tested it on a diverse set of eight benchmark intrusion detection datasets. The approach proved effective across various intrusion classification tasks. For binary intrusion classification tasks, it achieved accuracy rates ranging from 96% to 99.9%. For multiclass intrusion classification tasks, accuracy fell between 91.6% and 98.9%. The approach also reduced the number of features, achieving reduction rates from 56% up to 96%. Furthermore, it outperformed other state-of-the-art methods in terms of detection rate.

Open Access Article

ISFJ-RAG: Interventional Suppression of Hallucinations via Counter-Factual Joint Decoding Retrieval-Augment Generation

by

Yuezhao Liu, Wei Li, Yijie Wang, Ningtong Chen and Min Chen

Big Data Cogn. Comput. 2026, 10(2), 56; https://doi.org/10.3390/bdcc10020056 - 9 Feb 2026

Abstract

Although retrieval-augmented generation (RAG) technology mitigates the hallucination issue in large language models (LLMs) by incorporating external knowledge, and combining reasoning models can further enhance RAG system performance, retrieval noise and attention bias still lead to the diffusion of factual errors in problems such as factual queries, multi-hop questions, and unanswerable questions. Existing methods struggle to effectively suppress “high-confidence hallucinations” in long-chain reasoning due to their failure to decouple knowledge bias effects from causal reasoning paths. To address this, this paper proposes the ISFJ-RAG framework, which dynamically intervenes in hallucinations through counterfactual joint decoding. First, a structural causal model (SCM) reveals three root causes of hallucinations in RAG systems: irrelevant knowledge interference, reasoning path bias, and spurious correlations in self-attention mechanisms. A dual-decoder architecture is further designed: the total causal effect decoder models the global relationship between user queries and knowledge, while the knowledge bias effect decoder captures potential biases induced by external knowledge. A dynamic modulation module converts the latter’s output into a proxy measure of hallucination bias. By computing individual treatment effects (ITEs), the bias component is removed from the full generation distribution, achieving simultaneous suppression of knowledge-irrelevant and reasoning-irrelevant hallucinations. Ablation experiments validate the robustness of average token log-probability as a confidence metric. Experiments demonstrate that on the RAGEval benchmark, ISFJ-RAG improves generation completeness to 86.89% (+5.49%) while reducing hallucination rates to 10.39% (−2.5%) and irrelevance rates to 4.44% (−2.99%).
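
The confidence metric named in the abstract, average token log-probability, can be illustrated as follows. This is a minimal sketch under the assumption that per-token probabilities of a generated sequence are already available; the function name `average_token_logprob` and the example probabilities are hypothetical, and the sketch does not reproduce the ISFJ-RAG decoders.

```python
import math


def average_token_logprob(token_probs):
    """Return the mean natural-log probability of the generated tokens."""
    if not token_probs:
        raise ValueError("token_probs must contain at least one probability")
    if any(p <= 0.0 or p > 1.0 for p in token_probs):
        raise ValueError("each probability must lie in (0, 1]")
    return sum(math.log(p) for p in token_probs) / len(token_probs)


# Hypothetical per-token probabilities for two generated answers.
confident = average_token_logprob([0.9, 0.8, 0.95])
uncertain = average_token_logprob([0.5, 0.4, 0.3])
```

The score is at most zero, and values closer to zero indicate that the model assigned higher probability to its own tokens. Averaging over tokens keeps the score comparable across answers of different lengths.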

News

6 November 2025

MDPI Launches the Michele Parrinello Award for Pioneering Contributions in Computational Physical Science

9 October 2025

Meet Us at the 3rd International Conference on AI Sensors and Transducers, 2–7 August 2026, Jeju, South Korea

Topics

Topic in

AI, BDCC, Fire, GeoHazards, Remote Sensing

AI for Natural Disasters Detection, Prediction and Modeling

Topic Editors: Moulay A. Akhloufi, Mozhdeh Shahbazi

Deadline: 31 March 2026

Topic in

Applied Sciences, Electronics, J. Imaging, MAKE, Information, BDCC, Signals

Applications of Image and Video Processing in Medical Imaging

Topic Editors: Jyh-Cheng Chen, Kuangyu Shi

Deadline: 30 April 2026

Topic in

Actuators, Algorithms, BDCC, Future Internet, JMMP, Machines, Robotics, Systems

Smart Product Design and Manufacturing on Industrial Internet

Topic Editors: Pingyu Jiang, Jihong Liu, Ying Liu, Jihong Yan

Deadline: 30 June 2026

Topic in

Sensors, Electronics, Technologies, AI, Entropy, Quantum Reports, BDCC

Responsible Classic/Quantum AI Technologies for Industrial Applications

Topic Editors: Youyang Qu, Khandakar Ahmed, Zhiyi Tian

Deadline: 31 July 2026

Special Issues

Special Issue in

BDCC

Advances in Artificial Intelligence for Computer Vision, Augmented Reality Virtual Reality and Metaverse

Guest Editors: A F M Saifuddin Saif, Antonio Celesti, Yu-Sheng Lin, Edelberto Silva

Deadline: 18 March 2026

Special Issue in

BDCC

Advances in Complex Networks

Guest Editors: Domenico Ursino, Gianluca Bonifazi, Michele Marchetti

Deadline: 28 March 2026

Special Issue in

BDCC

Machine Learning and AI Technology for Sustainable Development

Guest Editors: Wei-Chen Wu, Jason C. Hung, Yuchih Wei, Jui-hung Kao

Deadline: 31 March 2026

Special Issue in

BDCC

Applications of Artificial Intelligence and Data Management in Data Analysis

Guest Editors: Jorge Bernardino, Le Gruenwald, Elio Masciari, Laurent D'Orazio

Deadline: 31 March 2026