1. Introduction

Over the last decade, software architecture has undergone significant migration and evolution. Early software systems primarily adopted a monolithic architecture, which integrates all features into a single, cohesive structure. As the complexity and scale of the features increased, this architecture revealed significant limitations in terms of scalability, adaptability, and maintainability. To address these challenges, researchers have explored other architectures, such as service-oriented architecture (SOA), which separates software into reusable components connected through well-defined interfaces. However, SOA’s services rely on centralized control and the enterprise service bus (ESB) to work together, which may lead to performance challenges, reduced flexibility, and the creation of a single point of failure. These advancements ultimately paved the way for the emergence of microservices architecture (MSA) [1].

MSA is characterized by a collection of services, each acting autonomously to fulfill a distinct business need, which are loosely interconnected and interact through various interaction mechanisms, such as RESTful Application Programming Interfaces (APIs), asynchronous messaging queues (e.g., RabbitMQ or Kafka), or event-driven architectures utilizing event sourcing. Each microservice is independently developed, tested, released, and scaled, enabling faster releases and updates. Moreover, MSA is designed to align service boundaries with business domains. This ensures that each service encapsulates its functionality and can evolve independently without impacting the entire software system [2,3].

Owing to these advantages, MSA has gained substantial traction across the global software industry. Organizations such as Amazon, Uber, and Netflix have adopted microservices to manage their complex, large-scale, and high-traffic systems, recognizing microservices as a mature and widely adopted architectural paradigm [2,4,5].

Although the popularity of MSA continues to rise, the migration process remains a complex and time-intensive task. This journey involves major challenges related to service decomposition, inter-service communication, and database design. Moreover, the absence of a standardized methodology increases the risk of errors, leading to higher system complexity and degraded quality [4,6].

As a result, there has been growing interest in leveraging machine learning (ML) algorithms to support migration toward MSA. However, most existing studies concentrate on a single pattern, such as a decomposition pattern, with the aim of accurately breaking down a monolithic system into microservices. These studies often overlook other essential patterns, such as database patterns and communication patterns [1,7].

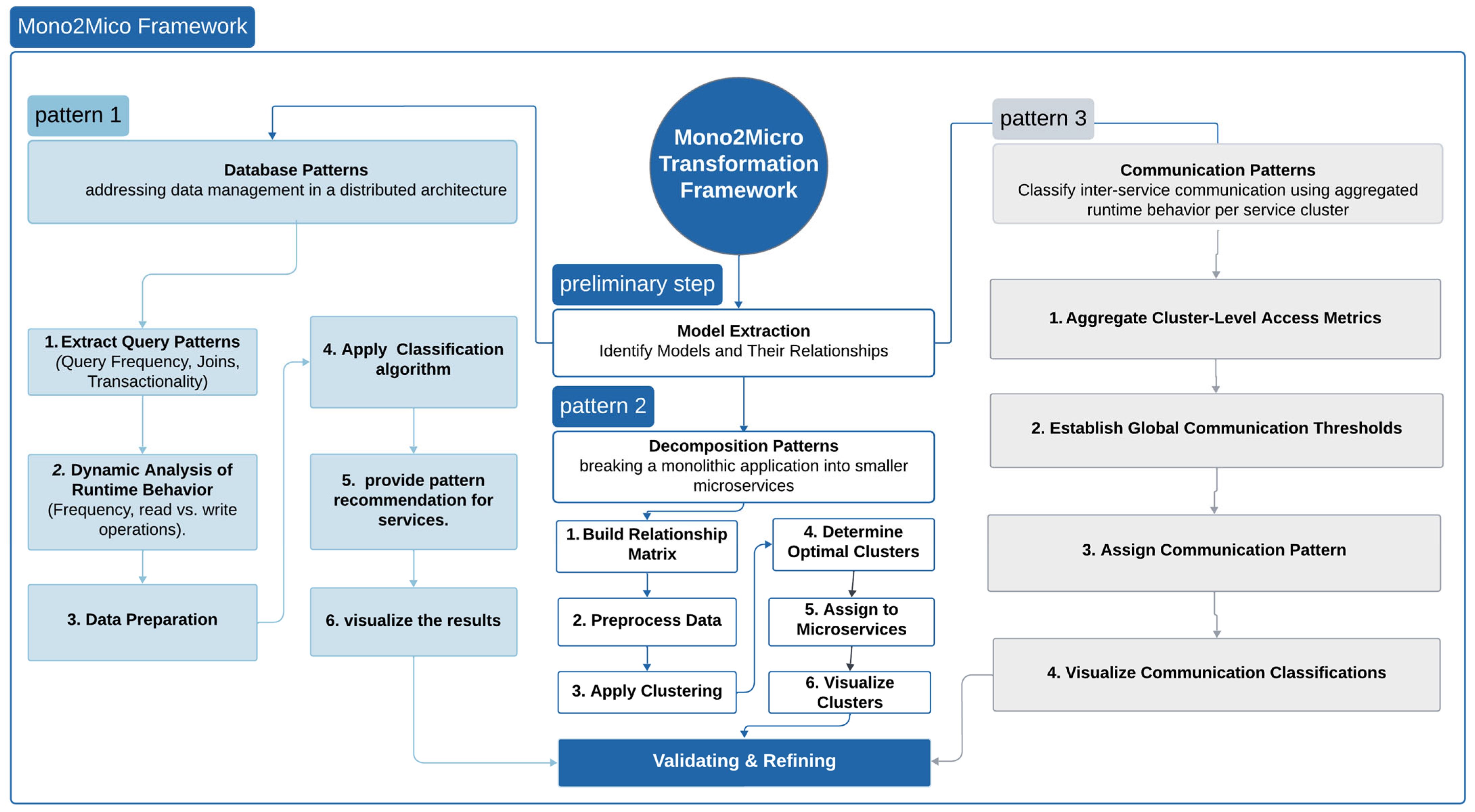

To support the migration from monolithic systems to microservices, this paper proposes the Mono2Micro Transformation Framework, a pattern-driven approach that facilitates systematic decomposition. The framework begins with model extraction and applies three core patterns, specifically database, decomposition, and communication patterns, to address data distribution, service identification, and inter-service communication. Each pattern leverages automated techniques such as clustering, classification, and runtime analysis to provide a comprehensive microservice extraction. The framework was applied to a real-world monolithic Student Information System (SIS) for faculty management, demonstrating its practical applicability and effectiveness.

This study addresses the following four primary research questions:

How can AI technologies be effectively utilized to automate the migration process?

What are the key challenges organizations encounter during the migration process, and how can AI-driven approaches address these challenges?

What criteria should be used to evaluate the effectiveness and success of AI-driven migration strategies?

What best practices and guidelines support the integration of AI technologies into software migration processes?

The remainder of this paper is organized as follows. Section 2 reviews work related to monolithic-to-microservices migration. Section 3 introduces the proposed Mono2Micro Framework architecture in detail. Section 4 presents an evaluation of the framework and demonstrates its application to a real-world SIS to validate its effectiveness. Section 5 discusses the experimental results and addresses the research questions posed in this study. Section 6 outlines the potential threats to validity. Finally, Section 7 concludes the paper and suggests directions for future work.

2. Related Work

This section reviews two main categories of research. The first focuses on approaches for migrating to MSA. The second explores the use of machine learning and other automated techniques to facilitate migration to MSA.

2.1. Systematic Literature Review for MSA

Velepucha et al. [8] conducted a systematic literature review (SLR), identifying challenges and benefits associated with the migration from monolithic to microservice architectures, as summarized in Table 1. Their study emphasized the importance of analyzing architectural trade-offs and advancing automation within the migration process. In a follow-up study, Velepucha et al. [9] focused on microservice decomposition strategies and proposed future directions, including the integration of micro-frontend paradigms, the development of automated decomposition techniques, and extending evaluation beyond object-oriented architectures.

Francisco et al. [10] analyzed 20 studies on MSA migrations and found that most approaches, targeting web-based systems, rely on design artifacts, dependency graphs, and clustering algorithms. However, the study was limited by the use of a single search engine and potential researcher bias. The authors emphasized the need for broader investigations, including deeper analysis of migration approaches and database transition strategies.

Authors in [11,12,13] conducted an SLR revealing that microservice decomposition remains an emerging field, with limited methodologies for effectively integrating static, dynamic, and evolutionary data. They further identified the lack of robust tool support as a significant barrier and emphasized the need for additional research on deployment strategies as well as the establishment of standardized evaluation metrics for microservice migration.

Other studies have highlighted the challenges related to service identification [14,15,16,17]. These works emphasize the need for improved automation techniques and standardized evaluation metrics. While various service identification algorithms have been proposed, many focus on specific aspects of the migration process, often overlooking other critical patterns. Furthermore, comprehensive solutions that support the full migration pipeline remain limited. Some studies also suggest organizing services by category to improve reusability at scale and facilitate real-world applicability.

Recent studies have investigated migration in both directions between monolithic architectures and MSA. Su et al. [18] found that organizations reverted to monolithic systems due to concerns related to cost, complexity, performance, scalability, and organizational difficulties. In contrast, Razzaq et al. [19] highlighted the benefits of MSA adoption, such as independent deployment, scalability, and efficient communication. Both studies underscore the need for further empirical research and practical solutions to facilitate the migration process.

2.2. Tools and Techniques for MSA

Recent studies present a wide range of approaches for automating the identification of microservices during migration from monolithic systems. Many studies propose the use of clustering methods based on business processes, use cases, UML models, and runtime relationships to extract the boundaries of microservices [20,21,22]. While these approaches have demonstrated improved performance in specific scenarios, they share a common limitation: a lack of generalizability and standardized evaluation metrics. Their methodologies are often tailored to specific domains, and they provide limited support for adapting to heterogeneous systems or evolving architectural quality requirements.

In parallel, Desai et al. [23] and Santos et al. [6] focused on metric-based assessments to evaluate the quality and complexity of microservice decompositions. Desai et al. proposed a graph neural network model, the Clustering and Outlier-aware Graph Convolution Network (COGCN), which combines clustering and outlier detection. They evaluated it using structural modularity and interface-based metrics. Santos et al. introduced a complexity metric that integrates access sequences, read/write operations, and relaxed transactional consistency. Although these studies present robust evaluation frameworks, they do not integrate comprehensive migration pipelines and lack generalizability. Additionally, Ma et al. [24] adopted Microservices Identification using Analysis for Database Access (MIADA), which emphasizes “database per service” clustering to facilitate the identification of microservices. While their approach demonstrated promising results in two service-oriented systems, MIADA remains specialized and lacks broader applicability.

On the other hand, numerous studies have explored various strategies to support the migration to MSA, focusing on performance, modularization, and architectural decision-making. Authors in [6,25] demonstrated that modular monolithic architectures can ease migration and improve scalability; however, the reliance on individual case studies limits generalizability. Additionally, authors in [26,27] conducted performance comparisons, showing that while monolithic architectures perform better on single machines and are suitable for small- to medium-scale systems, microservices scale more efficiently in distributed environments. Furthermore, authors in [28,29] introduced tools for code transformation and performance modeling, although both require broader validation and integration with automation tools. Finally, authors in [30,31] addressed modularization and stakeholder challenges but did not sufficiently assess architectural complexity in large-scale systems.

The literature emphasizes a critical need for generalizable, automated, and multi-dimensional frameworks that incorporate architectural quality, runtime behavior, and system context. There is also a notable gap in comprehensive tool support, empirical benchmarking, and real-world validation across diverse platforms.

Table 2 presents the categories and thematic summary of the reviewed studies. To contextualize the contributions of the proposed Mono2Micro framework, Table 3 presents a structured comparison of recent monolith-to-microservices migration approaches. The comparison outlines the degree to which each paper addresses database pattern support, service decomposition, inter-service communication, integration of machine learning techniques, evaluation methodologies, and applicability to real-world systems.

As illustrated in Table 3, only one prior work [6] addresses all three architectural dimensions. However, it lacks the incorporation of AI techniques and does not provide a comprehensive multi-metric evaluation. The majority of reviewed studies focus primarily on service decomposition and omit the other two dimensions. Specifically, only 1 out of 13 studies covers all three dimensions, while nearly half focus on a single architectural pattern. While these works address isolated aspects of the migration process, the proposed Mono2Micro framework is the first to systematically integrate all three core patterns into a unified, AI-driven migration pipeline. The proposed approach delivers end-to-end migration support by combining supervised and unsupervised learning methods, validated on a real-world system, and demonstrates effectiveness through quantitative metrics across all transformation phases, as detailed in Section 4.

3. Research Methodology

This section introduces the Mono2Micro Transformation Framework, a comprehensive pattern-driven methodology designed to facilitate the migration of monolithic systems to MSA. The framework addresses key aspects of the transformation process, including data decomposition, service identification, and inter-service communication, through a sequence of structured steps.

As illustrated in Figure 1, the framework consists of three core architectural patterns: database, decomposition, and communication. These are preceded by a model extraction phase and followed by a validation and refinement stage. The proposed Mono2Micro framework is distinctive in that it simultaneously addresses all three architectural dimensions, rather than focusing on a single aspect, as seen in most existing studies.

3.1. Database Patterns

The Database Patterns component of the Mono2Micro framework addresses one of the most critical challenges in MSA: organizing and managing data in a distributed environment. In monolithic systems, data is typically centralized and accessed directly [32]. However, in MSA, each service is expected to manage its own data independently, a principle commonly referred to as “Database per Service.” Ensuring consistency, performance, and logical separation of data becomes a non-trivial task during migration.

In this approach, both static and dynamic database interactions within the SIS system were analyzed to recommend the most appropriate database architecture pattern for each entity. Additionally, a prediction model was developed using machine learning algorithms to support and validate these recommendations. The process consists of the following steps:

3.1.1. Extract Query Pattern

Following the extraction phase, in which all relevant database entities from the SIS were identified, runtime behavior was monitored to capture query patterns, including read, write, and join operations. These patterns were then used as input for subsequent processing steps.

3.1.2. Dynamic Analysis of Runtime Behavior

Dynamic analysis was performed to monitor the behavior of database entities at runtime and to gain deeper insights into data access patterns within the SIS system. The analysis focused on three primary query types: read, write, and join operations. For each operation type, a robust statistical approach was employed to determine representative thresholds. Specifically, the median was used to mitigate the effect of outliers and capture the central tendency in skewed query distributions [20,21,22,26]. In cases where the median equaled zero, a situation common in sparse operations, the mean was applied as a fallback to avoid degenerate thresholds and ensure consistent classification. This design choice balances robustness and inclusiveness, providing stable thresholds across varying workloads. Since thresholds are computed over normalized ratios rather than absolute counts, the method is inherently scalable and generalizable to systems of different sizes, workloads, and query distributions, while remaining adaptable to domain-specific tuning.

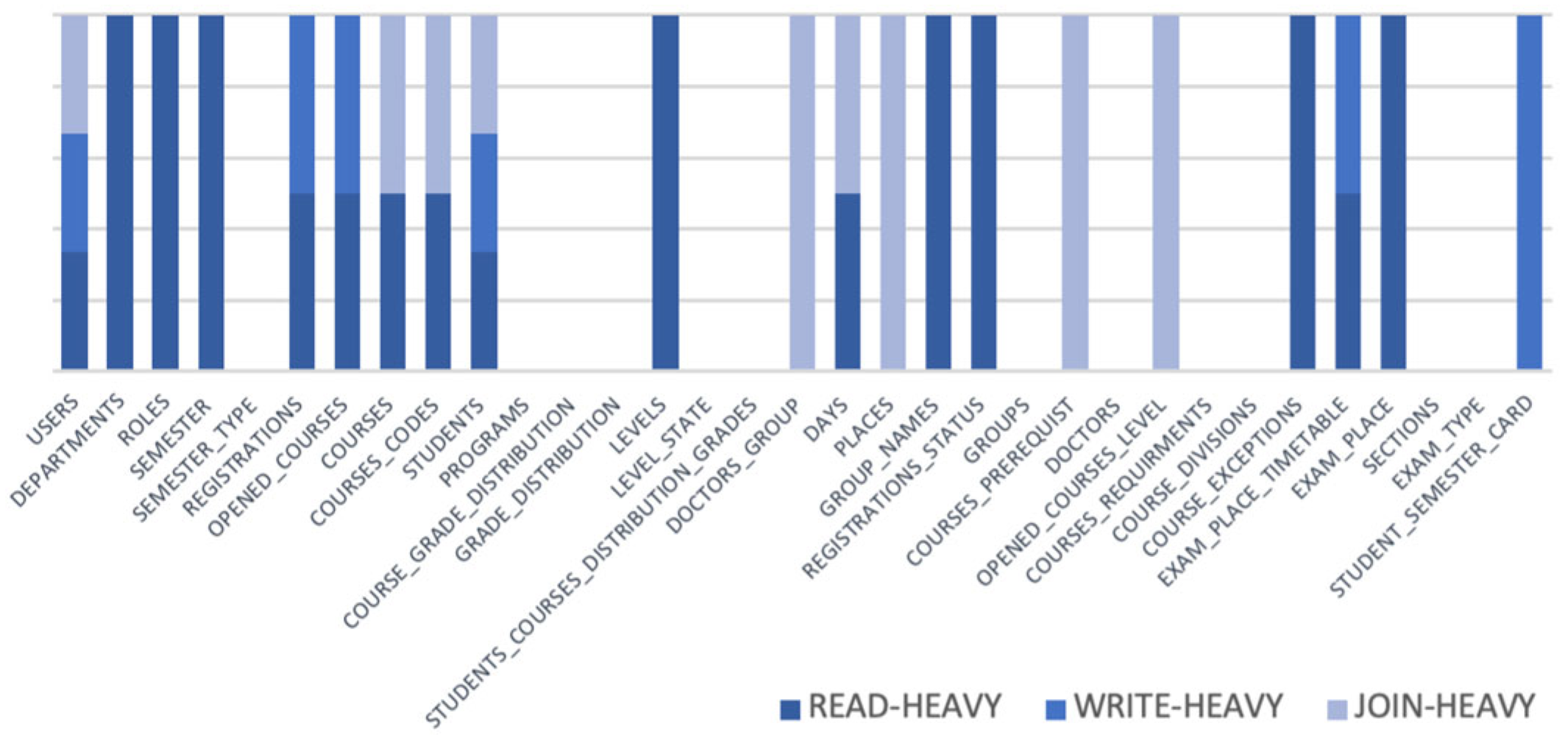

Entities were categorized based on their query frequency relative to the computed thresholds, as shown in Table 4. An entity was labeled as read-heavy, write-heavy, or join-heavy if the count of its corresponding operation exceeded the relevant threshold (the median, or the mean where the median was zero). This classification provided the foundation for subsequent analysis, particularly in recommending appropriate database patterns for each entity.
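The thresholding and labeling rule described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the entity names and query counts are hypothetical, and the function names `robust_threshold` and `label_entities` are introduced here only for the example.

```python
from statistics import mean, median

OPS = ("read", "write", "join")

def robust_threshold(values):
    """Median of the values, falling back to the mean when the median is zero."""
    m = median(values)
    return m if m != 0 else mean(values)

def label_entities(metrics):
    """metrics: {entity: {"read": x, "write": y, "join": z}}.

    Returns the per-operation thresholds and, for each entity, the set of
    behavioral labels whose value strictly exceeds the threshold.
    """
    thresholds = {op: robust_threshold([m[op] for m in metrics.values()]) for op in OPS}
    labels = {
        name: {f"{op}-heavy" for op in OPS if m[op] > thresholds[op]}
        for name, m in metrics.items()
    }
    return thresholds, labels

# Hypothetical query counts, for illustration only.
metrics = {
    "Student":    {"read": 120, "write": 10, "join": 0},
    "Course":     {"read": 80,  "write": 5,  "join": 0},
    "Enrollment": {"read": 40,  "write": 30, "join": 6},
    "AuditLog":   {"read": 2,   "write": 50, "join": 0},
}
thresholds, labels = label_entities(metrics)
```

In this toy data the join counts are sparse, so their median is zero and the mean (1.5) is used instead, which is the fallback case the text describes.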

3.1.3. Data Preparation

In this step, a set of rule-based classification heuristics was defined to map entities to suitable database architectural patterns based on their observed runtime query behavior. The classification logic was constructed around the six most common database architecture patterns, formed by combinations of the three behavioral indicators: read-heavy, write-heavy, and join-heavy.

Command Query Responsibility Segregation (CQRS): separates read and write operations into distinct models, enhancing performance and scalability. It is well-suited for read-heavy and join-heavy workloads.

Event Sourcing: records all changes to application state as a series of immutable events. This pattern is optimal for systems characterized by write-heavy activity and complex data relationships (join-heavy).

Independent Database: this pattern is suitable for scenarios in which data access or modification is infrequent. This pattern allows complete decoupling from other services.

Read Replicas: uses replicated databases to enhance read performance. It is ideal for entities with read-heavy and minimal join operations.

Sharding: distributes data across multiple databases based on a shard key. This pattern is advantageous for write-heavy workloads with low join frequency.

Database per Service: assigns each service its own database. This pattern is suitable for join-heavy interactions within a single bounded context where read and write frequencies are relatively low.
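One way to express these six mappings as a rule-based labeler is sketched below. The pattern names and their associated workload profiles come from the list above; the precedence applied when an entity is both read-heavy and write-heavy is not stated in the text and is an assumption of this sketch, as is the function name `recommend_pattern`.

```python
def recommend_pattern(read_heavy, write_heavy, join_heavy):
    """Map the three behavioral flags to one of the six database patterns.

    Assumption: join-heavy combinations are checked first, and read-heavy
    takes precedence over write-heavy when both flags are set.
    """
    if join_heavy and read_heavy:
        return "CQRS"
    if join_heavy and write_heavy:
        return "Event Sourcing"
    if join_heavy:
        return "Database per Service"
    if read_heavy:
        return "Read Replicas"
    if write_heavy:
        return "Sharding"
    return "Independent Database"

# Example: a read-heavy entity with few joins.
example = recommend_pattern(read_heavy=True, write_heavy=False, join_heavy=False)
```

Because three boolean flags give eight combinations but only six patterns, any such labeler has to resolve the overlapping cases explicitly; the ordering of the `if` statements is where that choice is made.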

The raw query metrics—read, write, and join counts—were normalized using Min-Max Scaling to bring all values into a common range between 0 and 1. This normalization ensured consistent feature weighting and mitigated bias caused by differing value scales.

Following this preprocessing step, the recommended database architectural patterns were initially stored as categorical labels, which are incompatible with most ML algorithms. To address this, the labels were transformed into numerical form using a label encoding technique, where each unique pattern was assigned a distinct integer value. This structured dataset was used to train and evaluate the ML model in the next step.
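The two preprocessing operations can be illustrated with plain Python. This is a sketch under stated assumptions: the input values are hypothetical, and assigning integers in alphabetical order of the pattern names is a choice made here for determinism, not something the text specifies.

```python
def min_max_scale(column):
    """Rescale a list of numbers linearly into [0, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in column]
    return [(v - lo) / (hi - lo) for v in column]

def encode_labels(labels):
    """Assign each distinct label an integer code (alphabetical order, assumed)."""
    classes = sorted(set(labels))
    index = {name: i for i, name in enumerate(classes)}
    return [index[name] for name in labels], classes

# Hypothetical read counts and pattern labels for four entities.
scaled_reads = min_max_scale([2, 40, 80, 120])
codes, classes = encode_labels(["Sharding", "CQRS", "Sharding", "Read Replicas"])
```

Scaling each feature column separately keeps a high-volume operation type (for example, reads) from dominating the others purely because of its magnitude.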

3.1.4. Apply Classification Algorithm

Random Forest (RF), a supervised ML algorithm, was employed to assess how well the prepared dataset could predict suitable database architectural patterns. The model was trained on normalized read, write, and join operation counts to predict architectural pattern labels that were systematically generated through our rule-based labeling strategy (detailed in Sections 3.1.2 and 3.1.3). For example, entities with a read ratio above the global median were labeled as read-heavy, while those dominated by write or join operations were labeled accordingly. The dataset was split into 60% training and 40% testing, and features (read, write, join counts) were normalized using Min-Max scaling. The RF classifier was configured with standard hyperparameters (100 trees, Gini impurity, fixed random seed) to balance performance and reproducibility.

The RF classifier was chosen for its robustness and ability to model non-linear boundaries. After training, it predicted architectural patterns on the test set, which we compared against the ground truth to assess performance.

3.1.5. Provide Pattern Recommendations for Services

After the classification model was trained and evaluated, it was applied to predict architectural patterns for each database entity in the SIS system. Each entity, represented by its normalized read, write, and join metrics, was assigned a recommended architectural pattern based on the model’s output. These predictions were interpreted as pattern recommendations to guide how each entity should be treated during microservice decomposition.

3.1.6. Visualize the Results

This step yields summary distributions of access behavior (read, write, join) and the resulting architectural pattern allocations; the corresponding plots and quantitative analysis are presented in Section 5.2.

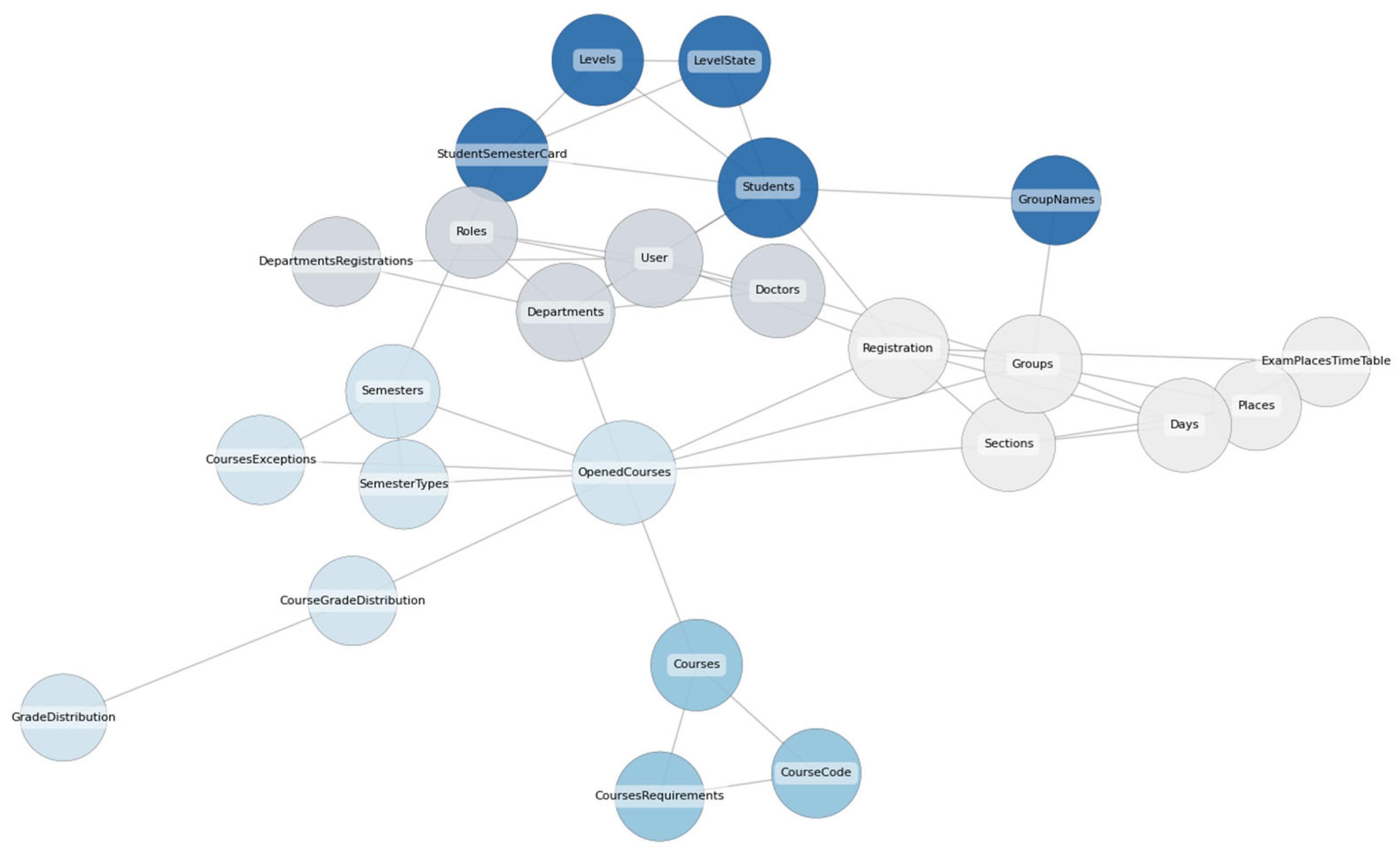

3.2. Service Decomposition

The second phase of the Mono2Micro framework focuses on decomposing the system’s structure by analyzing the relationships among entities to identify potential microservices [33]. The objective of this stage is to uncover cohesive groups of entities that serve as candidates for independently deployable services. A graph-based clustering approach is employed to model and quantify the strength of inter-entity relationships. To improve the accuracy of decomposition and better reflect actual system behavior, the structural graph is enriched with runtime controller-to-entity mappings. This hybrid representation captures both static dependencies and dynamic usage patterns, thereby enabling data-driven partitioning of the monolithic system into functionally coherent service boundaries [34]. The decomposition process consists of the following six steps:

3.2.1. Build Relationship Matrix

Following the extraction phase, each model within the SIS system was identified, along with its associated relationships. The extracted structure includes entities and their relational types, such as has_one, has_many, belongs_to, and belongs_to_many, which collectively form the foundation of the relationship matrix. In this matrix, each entity is represented as a node in a graph, and the relationships are encoded as weighted edges based on their type and relative importance. In addition, the model is extended with controller-to-entity mappings derived from service-layer analysis to reflect real-world usage. A co-access matrix was constructed based on entity co-occurrence within services, capturing functional relationships not evident in static code. This hybrid matrix forms the basis for building a graph that incorporates both architectural and behavioral dimensions of the system.

This matrix shows how SIS models depend on each other and serves as the basis for constructing a weighted undirected graph for the clustering process in subsequent phases.
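The co-access part of the hybrid matrix can be sketched as a pair counter over controller-to-entity mappings. The controller and entity names below are hypothetical, and counting one co-occurrence per controller (rather than per request) is an assumption of this sketch.

```python
from collections import Counter
from itertools import combinations

def co_access_counts(controller_entities):
    """Count how often each unordered entity pair appears in the same controller.

    controller_entities: {controller name: iterable of entity names}.
    Pairs are stored as alphabetically ordered tuples so (A, B) == (B, A).
    """
    counts = Counter()
    for entities in controller_entities.values():
        for a, b in combinations(sorted(set(entities)), 2):
            counts[(a, b)] += 1
    return counts

# Hypothetical mapping extracted from a service layer.
mapping = {
    "EnrollmentController": ["Student", "Course", "Enrollment"],
    "GradeController": ["Student", "Grade", "Course"],
    "ProfileController": ["Student"],
}
co_access = co_access_counts(mapping)
```

Here `Course` and `Student` are touched together by two controllers, so their pair receives the highest count even if no direct ORM relationship links them.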

3.2.2. Preprocess Data

In this step, the hybrid graph was prepared for clustering by applying a custom weighting scheme that accounts for both structural cohesion and functional behavior.

Structural edges were assigned weights according to relationship type, reflecting their relative semantic strength, as follows: belongs_to (1.0), belongs_to_many (0.75), has_many (0.5), and has_one (0.25). Although these weights were assigned empirically, the ordering follows established concepts in software coupling metrics, such as coupling between objects and other concepts established by ORM conventions (e.g., Laravel Eloquent, Hibernate), where ownership relations represent stronger coupling than aggregation or references. To assess the robustness of this choice, a sensitivity analysis was conducted by varying the assigned weights by ±25% and ±50%. The results showed stable clustering structures with only minor metric fluctuations, confirming that the method is not sensitive to moderate changes in weight assignment.

Concurrently, behavioral edges were derived from runtime co-access frequencies. To ensure comparability with structural edges and to mitigate the influence of extreme outliers, the raw co-access frequencies (f) were normalized using a logarithmic transformation followed by percentile-based scaling, in which the 10th and 90th percentiles of all non-zero co-access values serve as the lower and upper bounds. This scaling bounds all values between 0 and 1, mitigates the effect of extreme outliers, and preserves the relative ranking of interaction strengths. A mild quadratic transformation was then applied to further emphasize stronger behavioral connections while reducing the influence of weaker ones.

Then, edges from both sources were adaptively merged through weighted averaging, with equal weight given to the structural and behavioral components. This equal weighting was selected after preliminary experiments demonstrated that it consistently produced higher modularity and silhouette scores than alternative ratios (e.g., 0.6–0.4, 0.7–0.3). To prevent spurious links, new runtime-only edges were added selectively: only when both nodes belonged to the same preliminary Louvain cluster and exceeded a minimum normalized strength. These optimizations preserved the design-time semantics of the structural model while incorporating runtime behavior in a controlled manner. The result is a fully connected, undirected, weighted graph that reflects both structural cohesion and functional interaction and serves as input to the clustering algorithm in the next step.
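The weighting pipeline can be approximated as follows. This is a sketch under several assumptions that the text does not pin down: `log1p` is used as the logarithmic transformation, percentiles are linearly interpolated, the "quadratic transformation" is taken to be squaring the scaled value, and the selective handling of runtime-only edges is left out. The structural weights are the ones listed in the text; the co-access counts are hypothetical.

```python
import math

# Structural weights per relationship type, as listed in the text.
STRUCTURAL_WEIGHTS = {
    "belongs_to": 1.0,
    "belongs_to_many": 0.75,
    "has_many": 0.5,
    "has_one": 0.25,
}

def percentile(sorted_values, q):
    """Linearly interpolated q-th percentile (0..100) of an ascending list."""
    pos = (len(sorted_values) - 1) * q / 100
    lo, hi = math.floor(pos), math.ceil(pos)
    return sorted_values[lo] + (sorted_values[hi] - sorted_values[lo]) * (pos - lo)

def behavioral_weights(co_access):
    """Log-transform, clip-scale between P10 and P90, then square (assumed)."""
    logged = {pair: math.log1p(f) for pair, f in co_access.items() if f > 0}
    if not logged:
        return {}
    ordered = sorted(logged.values())
    p10, p90 = percentile(ordered, 10), percentile(ordered, 90)
    span = p90 - p10
    weights = {}
    for pair, value in logged.items():
        scaled = 1.0 if span == 0 else min(1.0, max(0.0, (value - p10) / span))
        weights[pair] = scaled ** 2
    return weights

def merged_weight(w_struct, w_behav, alpha=0.5):
    """Weighted average of the two sources; alpha=0.5 is the equal weighting."""
    return alpha * w_struct + (1 - alpha) * w_behav

# Hypothetical co-access counts.
bw = behavioral_weights({("A", "B"): 1, ("A", "C"): 5, ("B", "C"): 20, ("C", "D"): 100})
combined = merged_weight(STRUCTURAL_WEIGHTS["has_many"], bw[("C", "D")])
```

Clipping at the 10th and 90th percentiles means the weakest and strongest pairs saturate at 0 and 1, so a single very hot pair cannot compress every other edge toward zero.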

3.2.3. Apply Clustering

After the preprocessing step, a graph-based clustering algorithm was applied to decompose the SIS into structurally cohesive groups. The Louvain community detection algorithm was selected for its efficiency in optimizing modularity and identifying well-defined communities in large entity relationship graphs, constructed from the SIS system’s structural and behavioral data. As the Louvain algorithm is unsupervised, it does not involve a training phase in the conventional sense. Instead, it operates directly on a weighted undirected graph, optimizing modularity to detect cohesive communities, which are interpreted as candidate microservices.

Each node in the graph represents a model entity from the SIS system, and each edge corresponds either to a defined relationship between entities, such as belongs_to, has_many, has_one, or belongs_to_many, or to a runtime co-access behavioral pattern. These relationships are assigned numeric weights based on their semantic strength and frequency, following a custom weighting scheme. If an entity pair shares multiple relationships, the weights are aggregated accordingly. The resulting graph structure encodes the architectural relationships between entities and is used as input for the Louvain algorithm.

The algorithm operates by iteratively maximizing modularity Q, as defined in (7), to enhance intra-cluster edge density while minimizing inter-cluster connections. As a result, entities with strong relationships are grouped into clusters that represent potential microservice boundaries. Each resulting cluster consists of tightly coupled models and serves as a candidate for an independently deployable service.
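For reference, the modularity that Louvain optimizes on a weighted undirected graph is conventionally written as below. The notation is the standard one from the community-detection literature and is assumed, not confirmed, to match the symbols used in the paper's own equation.

```latex
Q = \frac{1}{2m} \sum_{i,j} \left[ A_{ij} - \frac{k_i k_j}{2m} \right] \delta(c_i, c_j),
\qquad
k_i = \sum_{j} A_{ij},
\qquad
m = \frac{1}{2} \sum_{i,j} A_{ij}
```

Here $A_{ij}$ is the weight of the edge between entities $i$ and $j$, $k_i$ is the total weight of edges incident to entity $i$, $m$ is the total edge weight of the graph, $c_i$ is the community assigned to entity $i$, and $\delta(c_i, c_j)$ equals 1 when both entities are in the same community and 0 otherwise.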

3.2.4. Determine Optimal Cluster

Following the clustering process, a validation step is performed to evaluate the coherence and separation of the resulting clusters. Although the Louvain algorithm inherently maximizes modularity, additional analysis is necessary to confirm that the resulting groups correspond to meaningful microservice boundaries. The structural quality of the clusters is assessed using established evaluation metrics, and the results are presented and discussed in Section 4.
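As an illustration of such a validation step, two common structural metrics, modularity and conductance, can be computed directly from a weighted edge list and a community assignment. The sketch below uses the standard textbook definitions; the toy graph (two triangles joined by one edge) and the function names are hypothetical, and the silhouette score is not covered here.

```python
def modularity(edges, community):
    """Weighted modularity of a partition.

    edges: {(u, v): weight} for an undirected graph without self-loops.
    community: {node: community id}.
    """
    m = sum(edges.values())
    degree, internal = {}, {}
    for (u, v), w in edges.items():
        degree[u] = degree.get(u, 0) + w
        degree[v] = degree.get(v, 0) + w
        if community[u] == community[v]:
            internal[community[u]] = internal.get(community[u], 0) + w
    total = {}
    for node, k in degree.items():
        total[community[node]] = total.get(community[node], 0) + k
    # Sum over communities of (internal weight / m) - (degree share)^2.
    return sum(internal.get(c, 0) / m - (total[c] / (2 * m)) ** 2 for c in total)

def conductance(edges, community, cid):
    """Cut weight of community `cid` divided by the smaller side's volume."""
    cut = vol_in = vol_out = 0
    for (u, v), w in edges.items():
        in_u, in_v = community[u] == cid, community[v] == cid
        if in_u != in_v:
            cut += w
        for inside in (in_u, in_v):
            if inside:
                vol_in += w
            else:
                vol_out += w
    denom = min(vol_in, vol_out)
    return cut / denom if denom else 0.0

# Toy graph: two triangles connected by a single bridge edge, unit weights.
edges = {("a", "b"): 1, ("b", "c"): 1, ("a", "c"): 1,
         ("d", "e"): 1, ("e", "f"): 1, ("d", "f"): 1, ("c", "d"): 1}
community = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1, "f": 1}
q = modularity(edges, community)
phi = conductance(edges, community, 0)
```

Higher modularity and lower conductance both indicate that most edge weight stays inside clusters, which is the property a candidate service boundary should have.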

3.2.5. Assign to Microservices

Once optimal clusters are identified, each cluster is interpreted as a candidate microservice. Entities grouped within the same cluster are assumed to exhibit strong structural cohesion and are therefore assigned to a common service boundary. This assignment reflects the principle of high internal cohesion and low external coupling, which is fundamental to microservice-oriented design. Each cluster encapsulates related functionalities, forming the basis for independently deployable and maintainable services within the system architecture.

3.2.6. Visualize Clustering

This step yields a weighted graph of entities and community assignments (Louvain). The corresponding visualization and observations are presented in Section 5.3.

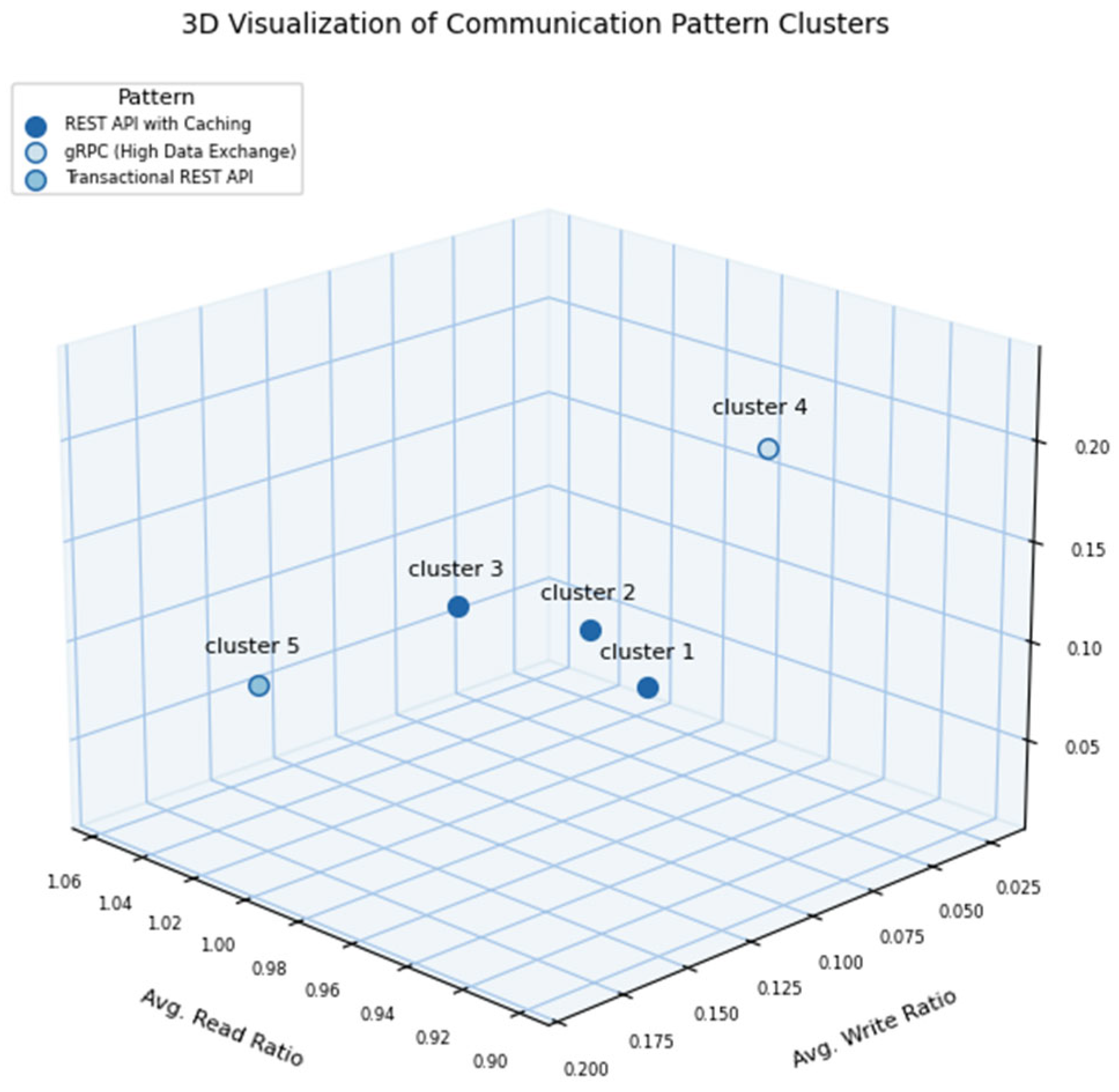

3.3. Communication Patterns

The third phase of the Mono2Micro framework focuses on analyzing the communication behavior between clustered SIS entities to recommend suitable interaction protocols [35]. This phase evaluates how services should exchange data based on aggregated access ratios, join frequencies, and operation intensity. By examining both static relationships and dynamic runtime behavior, the framework aims to assign communication strategies, such as REST APIs, GraphQL, or asynchronous messaging, that align with scalability, responsiveness, and architectural best practices.

These communication strategy recommendations are based on normalized read, write, and join ratios, computed per cluster using query log data. Thresholds are derived from global averages across all entities. Clusters with read or join activity above these thresholds are classified as having frequent interaction, where synchronous protocols such as GraphQL or gRPC are recommended. Clusters with lower interaction intensity are considered loosely coupled and better suited for asynchronous messaging solutions like RabbitMQ or Kafka. These mappings are applied over the same clusters defined in the earlier decomposition phase and serve as experience-driven, data-informed heuristics. These strategies are presented as heuristic guidance rather than strict rules and will be refined through future expert validation.

The communication pattern process is structured into the following key steps:

3.3.1. Aggregate Cluster-Level Access Metrics

To characterize service-level communication behavior, access metrics are aggregated across all entities within each identified cluster. Specifically, the average read, write, and join ratios are calculated by first computing the access ratios for each entity—based on the proportion of each access type to total operations—and then averaging these values across the cluster. This aggregation provides a representative summary of communication behavior for each service, minimizes the influence of outliers, and supports consistent comparisons across clusters.
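The two-stage aggregation (per-entity ratios first, then a per-cluster average) might look like the following. The entity names, counts, and cluster assignment are hypothetical, and the function names are introduced only for this sketch.

```python
from statistics import mean

OPS = ("read", "write", "join")

def entity_ratios(counts):
    """Share of each access type in an entity's total operations."""
    total = sum(counts[op] for op in OPS)
    if total == 0:
        return {op: 0.0 for op in OPS}
    return {op: counts[op] / total for op in OPS}

def cluster_profiles(entity_counts, cluster_of):
    """Average the per-entity ratios within each cluster."""
    by_cluster = {}
    for name, counts in entity_counts.items():
        by_cluster.setdefault(cluster_of[name], []).append(entity_ratios(counts))
    return {
        cid: {op: mean(r[op] for r in ratios) for op in OPS}
        for cid, ratios in by_cluster.items()
    }

# Hypothetical query counts and cluster assignment.
entity_counts = {
    "Student": {"read": 80, "write": 10, "join": 10},
    "Course":  {"read": 60, "write": 20, "join": 20},
    "Invoice": {"read": 10, "write": 80, "join": 10},
}
cluster_of = {"Student": 0, "Course": 0, "Invoice": 1}
profiles = cluster_profiles(entity_counts, cluster_of)
```

Averaging ratios rather than summing raw counts keeps one very busy entity from defining the profile of the whole cluster.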

3.3.2. Establish Global Communication Threshold

Global thresholds are defined to provide a consistent baseline for classifying communication patterns. These thresholds are determined by averaging the normalized read, write, and join ratios across all the entities within the SIS. The resulting values serve as benchmarks to assess whether a cluster exhibits relatively high or low access characteristics. This thresholding mechanism ensures that communication decisions are grounded in system-wide statistical behavior rather than arbitrary values.

3.3.3. Assign Communication Pattern

The global thresholds for read, write, and join ratios were calculated as 0.933, 0.803, and 1.216, respectively, representing the mean normalized values across all entities. For each cluster, the average read, write, and join ratios were computed and compared against these thresholds to guide protocol selection. Clusters with a join ratio above the global join threshold and a read ratio above the global read threshold were assigned GraphQL, as it supports flexible, multi-entity queries. When the join ratio was high, but the read ratio remained below the global average, gRPC was selected for its efficiency in high-volume data exchange. Clusters with a write ratio above the global write threshold (without a high join ratio) were mapped to transactional REST APIs, while those with a high read ratio (without high join or write ratios) were assigned REST APIs with caching. All remaining clusters defaulted to asynchronous messaging solutions (e.g., Kafka, RabbitMQ). This mapping aligns communication styles with workload profiles and architectural goals.
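The decision rules in this paragraph translate directly into a small function. The threshold defaults are the values reported above; the function name, the label strings, and the treatment of values exactly equal to a threshold (counted as not exceeding it) are assumptions of this sketch.

```python
# Global thresholds reported in the text for read, write, and join ratios.
READ_T, WRITE_T, JOIN_T = 0.933, 0.803, 1.216

def assign_protocol(read, write, join,
                    read_t=READ_T, write_t=WRITE_T, join_t=JOIN_T):
    """Pick a communication style from a cluster's average access ratios."""
    if join > join_t:
        # High join activity: GraphQL when reads are also high, otherwise gRPC.
        return "GraphQL" if read > read_t else "gRPC"
    if write > write_t:
        return "REST (transactional)"
    if read > read_t:
        return "REST with caching"
    return "Asynchronous messaging"

# Example: a cluster with high join and high read ratios.
choice = assign_protocol(read=1.0, write=0.5, join=1.3)
```

The order of the checks encodes the priority stated in the text: join intensity is evaluated first, then write intensity, then read intensity, with asynchronous messaging as the default.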

3.3.4. Visualize Communication Classifications

This step generates a three-dimensional scatter plot representing each cluster’s communication profile, with axes corresponding to average read, write, and join ratios. Clusters are color-coded according to their assigned communication pattern. The corresponding visualization and interpretation are reported in Section 5.4.

4. Experiment Evaluation

This section presents the evaluation strategy applied to the three transformation patterns proposed by the Mono2Micro framework. Each pattern was assessed using validation criteria tailored to its specific objectives. Standard evaluation metrics—such as accuracy, precision, recall, F1 score, classification report, confusion matrix, modularity, conductance, and silhouette score—were used to provide a comprehensive and reliable assessment, as detailed in the subsections.

4.1. Database Pattern Evaluation

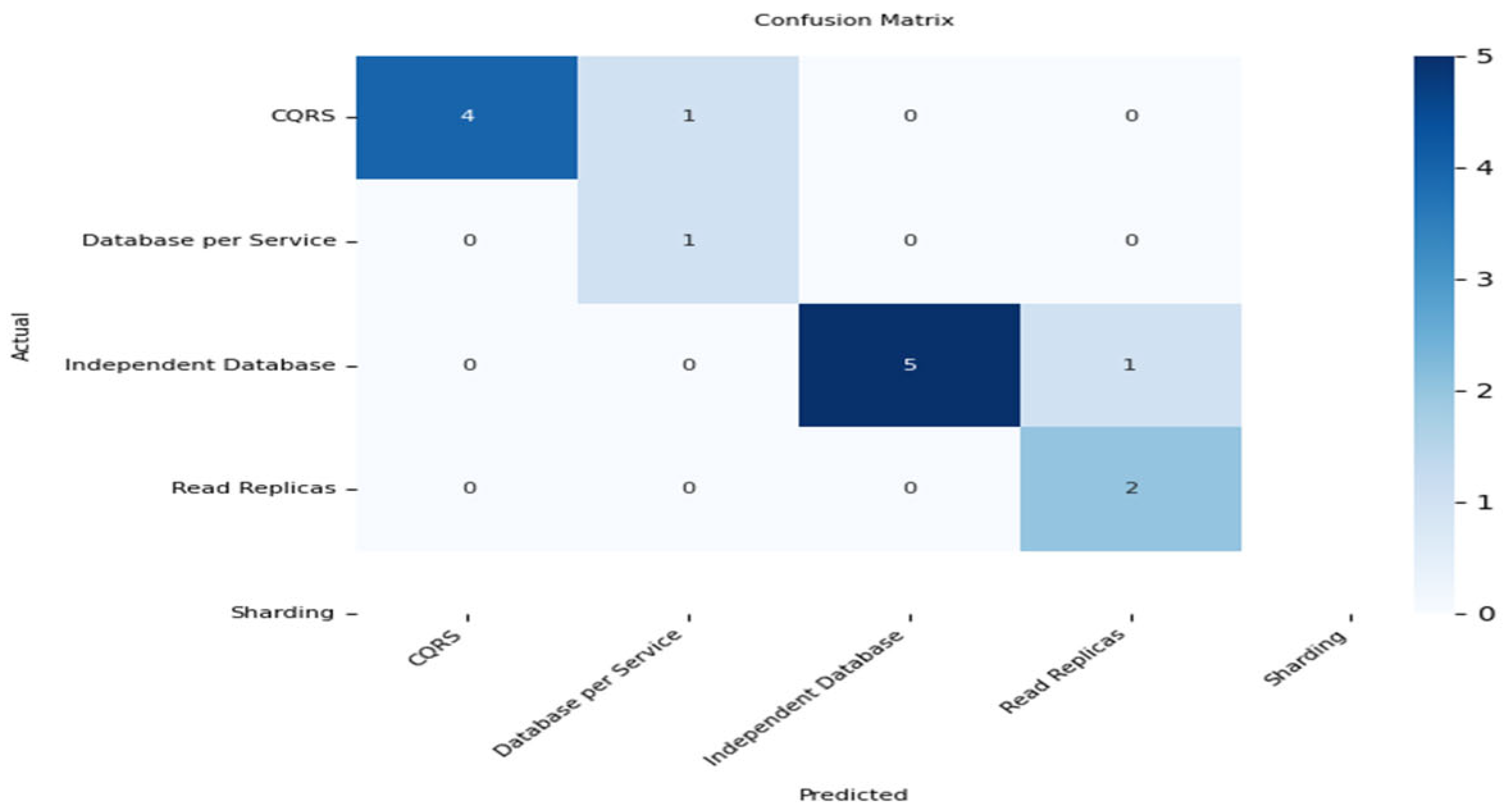

The database pattern recommendations were evaluated by comparing the predictions of the RF classifier against the heuristic-based labels assigned during preprocessing. Evaluation metrics included accuracy, precision, recall, F1 score, and a confusion matrix. These measures assess the classifier’s ability to correctly identify database architectural patterns. Results indicated strong predictive performance and reliable pattern identification. The model’s accuracy was computed by evaluating its predictions on the test subset comprising 40% of the labeled data. Accuracy was defined as the ratio of correct predictions to the total number of test samples. In addition, weighted precision, recall, and F1-score were calculated to assess the model’s ability to correctly classify each architectural pattern. A confusion matrix was also generated to analyze misclassification trends. All evaluation metrics were computed using the Scikit-learn Python library version 1.5.1.

4.1.1. Accuracy

Accuracy is a fundamental metric for evaluating model performance [36]. It measures the proportion of correctly classified instances out of the total number of instances. In this context, accuracy quantifies how often the database pattern classifier produces the correct prediction. It is formally defined as:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TP denotes true positives, TN true negatives, FP false positives, and FN false negatives.

4.1.2. Precision

Precision is a critical metric for evaluating Mono2Micro models, especially in cases where false positives carry a high cost. It measures the proportion of correctly predicted positive observations to the total predicted positive observations [36], reflecting the model’s ability to avoid misclassifying negative samples as positive. A high precision score indicates a low false positive rate. It is defined as:

$$\text{Precision} = \frac{TP}{TP + FP}$$

4.1.3. Recall

Recall, also known as sensitivity or true positive rate (TPR), measures the model’s effectiveness in identifying all relevant positive cases. Mono2Micro uses recall to evaluate how well the classifier captures all relevant database patterns [36]. It is defined as:

$$\text{Recall} = \frac{TP}{TP + FN}$$

4.1.4. F1-Score

The F1 score is the harmonic mean of precision and recall, offering a single measure that balances both concerns. It is especially important in contexts where both false positives and false negatives must be minimized [36]. A high score indicates that the model achieves both high precision and recall. It is defined as:

$$F1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

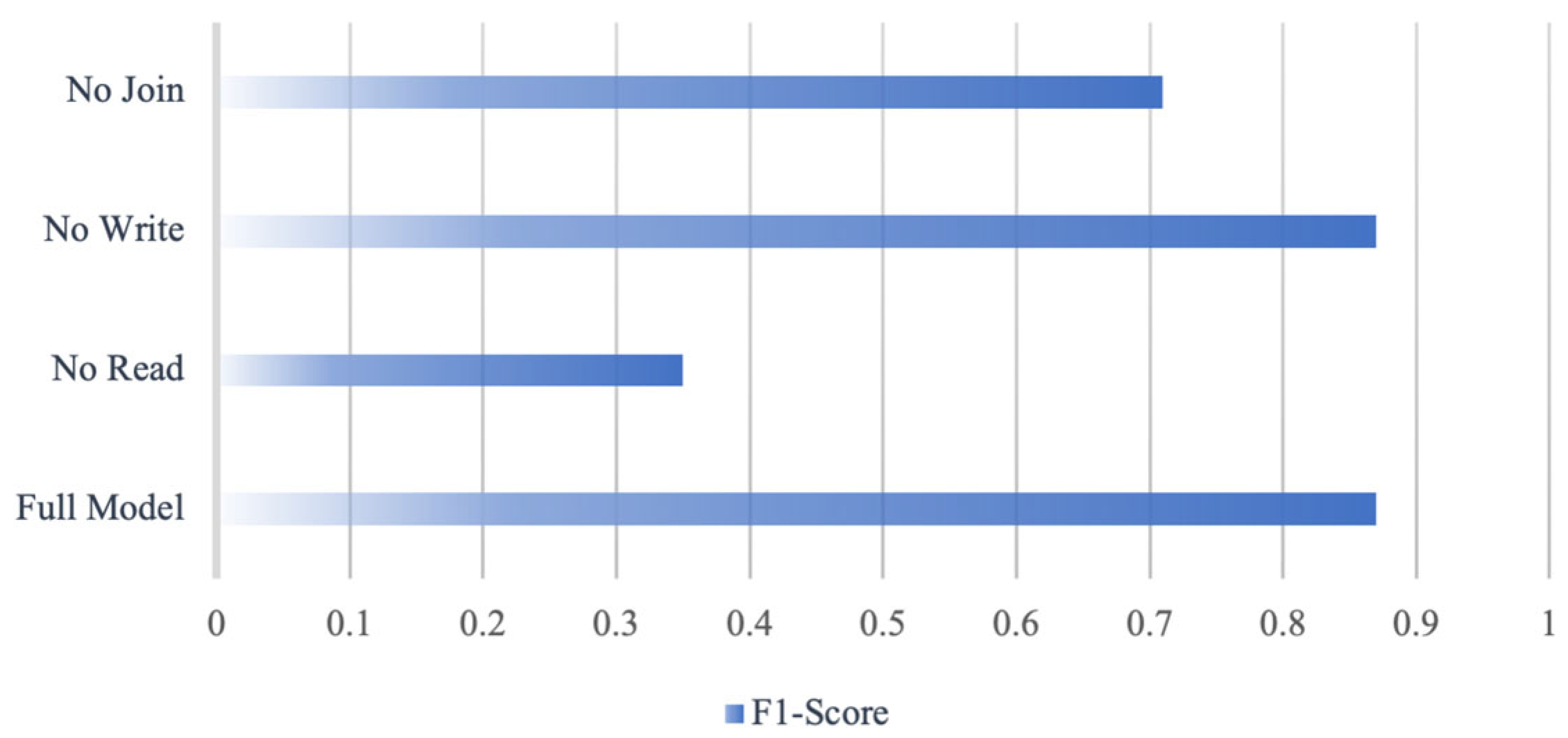

To assess the contribution of each input feature, an ablation study was conducted by independently removing read, write, and join operation metrics from the classifier input. As shown in Figure 2, excluding the read feature caused the largest performance degradation, reducing the F1-score by approximately 60%. Removing join operations resulted in an 18.7% decrease, while excluding write operations had a negligible impact. These results confirm the significance of both read and join metrics in predicting appropriate database architectural patterns.

4.1.5. Confusion Matrix

The confusion matrix summarizes classification performance by presenting a comparison between the actual and predicted labels. It presents the number of true positives, true negatives, false positives, and false negatives in a tabular format, offering insights into class-specific errors.
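The metrics of Section 4.1 can be illustrated with a small, dependency-free sketch. The study reports using Scikit-learn for these computations; the plain-Python functions below (`confusion_matrix`, `weighted_scores`) are hypothetical stand-ins written only to make the definitions concrete, and the pattern labels and predictions are invented for the example.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Rows are actual classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for actual, predicted in zip(y_true, y_pred):
        matrix[index[actual]][index[predicted]] += 1
    return matrix


def weighted_scores(y_true, y_pred, labels):
    """Return accuracy and support-weighted precision, recall, and F1."""
    cm = confusion_matrix(y_true, y_pred, labels)
    n_classes, total = len(labels), len(y_true)
    accuracy = sum(cm[i][i] for i in range(n_classes)) / total

    precision = recall = f1 = 0.0
    for i in range(n_classes):
        tp = cm[i][i]
        fp = sum(cm[r][i] for r in range(n_classes)) - tp  # column sum minus TP
        fn = sum(cm[i]) - tp                               # row sum minus TP
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        f = 2 * p * r / (p + r) if p + r else 0.0
        weight = sum(cm[i]) / total  # class support as a fraction of all samples
        precision += weight * p
        recall += weight * r
        f1 += weight * f
    return accuracy, precision, recall, f1


if __name__ == "__main__":
    # Invented labels and predictions, for illustration only.
    labels = ["CQRS", "Independent Database", "Sharding"]
    y_true = ["CQRS", "CQRS", "Independent Database", "Independent Database", "Sharding"]
    y_pred = ["CQRS", "Independent Database", "Independent Database", "Independent Database", "Sharding"]
    print(confusion_matrix(y_true, y_pred, labels))
    print(weighted_scores(y_true, y_pred, labels))
```

With support weighting, recall always equals accuracy, because each class's recall is multiplied by its share of the test samples.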

4.2. Decomposition Pattern Evaluation

The second pattern evaluation focuses on assessing the quality of the Mono2Micro decomposition step. Several clustering validation metrics were used to measure the effectiveness of the resulting partitions. Specifically, modularity was used to quantify the strength of the division into communities, conductance was applied to evaluate inter-cluster separation, and the silhouette score was calculated to assess cluster cohesion and separation. To better reflect functional behavior, the decomposition graph was augmented with controller-to-entity mappings, capturing dynamic interactions in addition to static dependencies.

As the Louvain algorithm is unsupervised, its performance was not evaluated using classification accuracy. Instead, these structural metrics were used as standard measures for validating graph-based clustering outcomes, offering insight into the cohesion and separation of the identified service clusters. These metrics collectively provide a comprehensive evaluation of the decomposition quality.

4.2.1. Modularity

Modularity measures the density of edges within clusters compared to the density between clusters. A higher modularity value indicates stronger community structures with dense internal connections and sparse external ones [22, 37]. Modularity is defined as:

$$Q = \frac{1}{2m} \sum_{i,j} \left[ A_{ij} - \frac{k_i k_j}{2m} \right] \delta(c_i, c_j)$$

where $A_{ij}$ is the weight of the edge between nodes $i$ and $j$, $k_i$ and $k_j$ are the sums of the weights of the edges attached to nodes $i$ and $j$, $m$ is the total weight of all edges in the graph, and $\delta(c_i, c_j)$ is 1 if $i$ and $j$ are in the same community and 0 otherwise.
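As a worked illustration of the modularity definition, the sketch below evaluates it on a toy graph. It uses the equivalent per-community form (intra-community weight over m, minus the squared share of total node strength) and assumes an undirected weighted graph without self-loops. The function name `modularity` and the six-node graph are hypothetical and unrelated to the SIS data.

```python
def modularity(edges, community):
    """Modularity of a partition.

    edges: list of (u, v, weight) tuples for an undirected graph (no self-loops).
    community: dict mapping each node to its community identifier.
    """
    m = sum(w for _, _, w in edges)  # total edge weight

    # Node strength k_i: sum of the weights of edges attached to node i.
    strength = {}
    for u, v, w in edges:
        strength[u] = strength.get(u, 0.0) + w
        strength[v] = strength.get(v, 0.0) + w

    intra = {}   # weight of edges with both endpoints in the community
    total = {}   # sum of node strengths in the community
    for node, k in strength.items():
        c = community[node]
        total[c] = total.get(c, 0.0) + k
    for u, v, w in edges:
        if community[u] == community[v]:
            c = community[u]
            intra[c] = intra.get(c, 0.0) + w

    return sum(intra.get(c, 0.0) / m - (total[c] / (2 * m)) ** 2 for c in total)


if __name__ == "__main__":
    # Two triangles joined by a single edge, all weights 1 (toy example).
    edges = [("a", "b", 1), ("b", "c", 1), ("a", "c", 1),
             ("d", "e", 1), ("e", "f", 1), ("d", "f", 1),
             ("c", "d", 1)]
    partition = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1, "f": 1}
    print(modularity(edges, partition))
```

Placing all six nodes in a single community gives a modularity of 0, which is why the metric rewards partitions only when they capture more internal weight than expected by chance.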

4.2.2. Conductance

Conductance measures the fraction of edge volume that points outside a cluster relative to the total volume of the cluster. Lower conductance values indicate better-isolated clusters, meaning fewer external connections relative to internal ones [38]. Conductance is defined as:

$$\phi(S) = \frac{\text{cut}(S, \bar{S})}{\text{vol}(S)}$$

where $\text{cut}(S, \bar{S})$ is the sum of weights of edges connecting $S$ to the rest of the graph $\bar{S}$, and $\text{vol}(S)$ is the sum of degrees of all nodes in $S$.
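The conductance of a single cluster can be computed as in the following sketch, which follows the prose definition above (cut weight divided by the volume of the cluster itself). Some formulations divide by the smaller of the cluster's volume and its complement's volume instead; the two coincide whenever the cluster holds at most half of the graph's total volume. The function name `conductance` and the toy graph are hypothetical.

```python
def conductance(edges, cluster):
    """Cut weight of `cluster` divided by its volume (sum of weighted degrees).

    edges: list of (u, v, weight) tuples for an undirected graph.
    cluster: iterable of nodes forming the cluster S.
    """
    members = set(cluster)
    cut = 0.0     # weight of edges leaving the cluster
    volume = 0.0  # sum of weighted degrees of nodes inside the cluster
    for u, v, w in edges:
        u_in, v_in = u in members, v in members
        if u_in != v_in:
            cut += w
        if u_in:
            volume += w
        if v_in:
            volume += w
    return cut / volume if volume else 0.0


if __name__ == "__main__":
    # Two triangles joined by a single edge, all weights 1 (toy example).
    edges = [("a", "b", 1), ("b", "c", 1), ("a", "c", 1),
             ("d", "e", 1), ("e", "f", 1), ("d", "f", 1),
             ("c", "d", 1)]
    print(conductance(edges, {"a", "b", "c"}))  # one cut edge over a volume of 7
```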

4.2.3. Silhouette Score

The silhouette score measures how well an entity fits within its assigned cluster relative to others. It considers cohesion within clusters and separation between clusters. The silhouette score metric is formally defined as:

$$s(i) = \frac{b(i) - a(i)}{\max\{a(i), b(i)\}}$$

where $a(i)$ is the average distance between $i$ and all other points in the same cluster, and $b(i)$ is the lowest average distance between $i$ and all points in any other cluster.
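The following sketch computes the mean silhouette score for a small set of labeled points. The text does not state which distance measure was applied to the SIS entities, so Euclidean distance over numeric feature vectors is an assumption here, as is the convention of scoring singleton clusters as 0. The function name `silhouette_score` is illustrative and does not refer to any library call.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette over all points, using Euclidean distance (assumed)."""
    clusters = {}
    for point, label in zip(points, labels):
        clusters.setdefault(label, []).append(point)
    if len(clusters) < 2:
        raise ValueError("the silhouette score needs at least two clusters")

    scores = []
    for point, label in zip(points, labels):
        own = clusters[label]
        if len(own) == 1:
            scores.append(0.0)  # convention for singleton clusters
            continue
        # a(i): mean distance to the other members (distance to itself is 0).
        a = sum(math.dist(point, q) for q in own) / (len(own) - 1)
        # b(i): lowest mean distance to the members of any other cluster.
        b = min(
            sum(math.dist(point, q) for q in members) / len(members)
            for other, members in clusters.items()
            if other != label
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom else 0.0)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Two well-separated one-dimensional groups (toy example).
    points = [(0.0,), (1.0,), (10.0,), (11.0,)]
    labels = [0, 0, 1, 1]
    print(silhouette_score(points, labels))
```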

4.3. Communication Pattern Evaluation

In the third pattern, the evaluation focuses on validating the correctness of the communication pattern classification among clustered SIS models. Each cluster’s communication behavior was analyzed based on three aggregated metrics: average read ratio, average write ratio, and average join ratio. Communication patterns were assigned based on threshold comparisons derived from the global dataset statistics. These thresholds guided the assignment of patterns such as REST API with Caching, GraphQL (Read-Optimized), gRPC (High Data Exchange), Transactional REST API, or Asynchronous Messaging.

Since the focus in this pattern is on the clustering of services rather than individual entities, traditional quantitative metrics such as accuracy or F1-score were not applied. Instead, a 3D scatter plot visualization was employed to qualitatively assess the separation and accuracy of the communication patterns across service clusters based on their aggregated operational characteristics. The evaluation relied on the following:

Average Read Ratio: Measures the proportion of read operations relative to total operations inside each cluster.

Average Write Ratio: Measures the proportion of write operations relative to total operations inside each cluster.

Average Join Ratio: Measures the frequency of join operations relative to total operations inside each cluster.

Clusters were then visualized in a 3D plot, where each axis represents one of the three metrics, and each cluster was color-coded based on the assigned communication pattern, as discussed in detail in Section 5.4.

5. Results and Discussion

This section presents the experimental results obtained by applying the Mono2Micro framework to the SIS system. The outcomes for each transformation pattern—database architecture, service decomposition, and communication—are illustrated and analyzed. The results are interpreted using the evaluation metrics introduced earlier, offering insights into the framework’s effectiveness, limitations, and practical implications.

5.1. Experimental Setup

The experimental evaluation was conducted on a real-world SIS system, which serves as the core platform for managing student records, course registrations, academic scheduling, and administrative processes. The SIS application comprises over 40 interconnected entities, offering a realistic and sufficiently complex case study for analyzing service decomposition and architectural modernization. Its diverse access patterns, rich entity relationships, and high volume of transactional operations made it a suitable environment for evaluating the Mono2Micro framework across database design, microservice decomposition, and communication strategy patterns.

To support the evaluation, the chosen SIS system was originally developed using the Laravel framework, with MySQL serving as the underlying database management system. The dataset used for experimentation was extracted through a combination of static and dynamic analysis. Static structural information was obtained by parsing the ORM (Eloquent) relationships defined in the Laravel models, while dynamic access patterns were recorded by analyzing real-time SQL query logs captured during production usage.

This comprehensive extraction approach provided a reliable foundation for evaluating the Mono2Micro framework’s predictions across database design, service decomposition, and communication patterns. The following subsections summarize the key results and discuss their implications.

5.2. Database Pattern Results

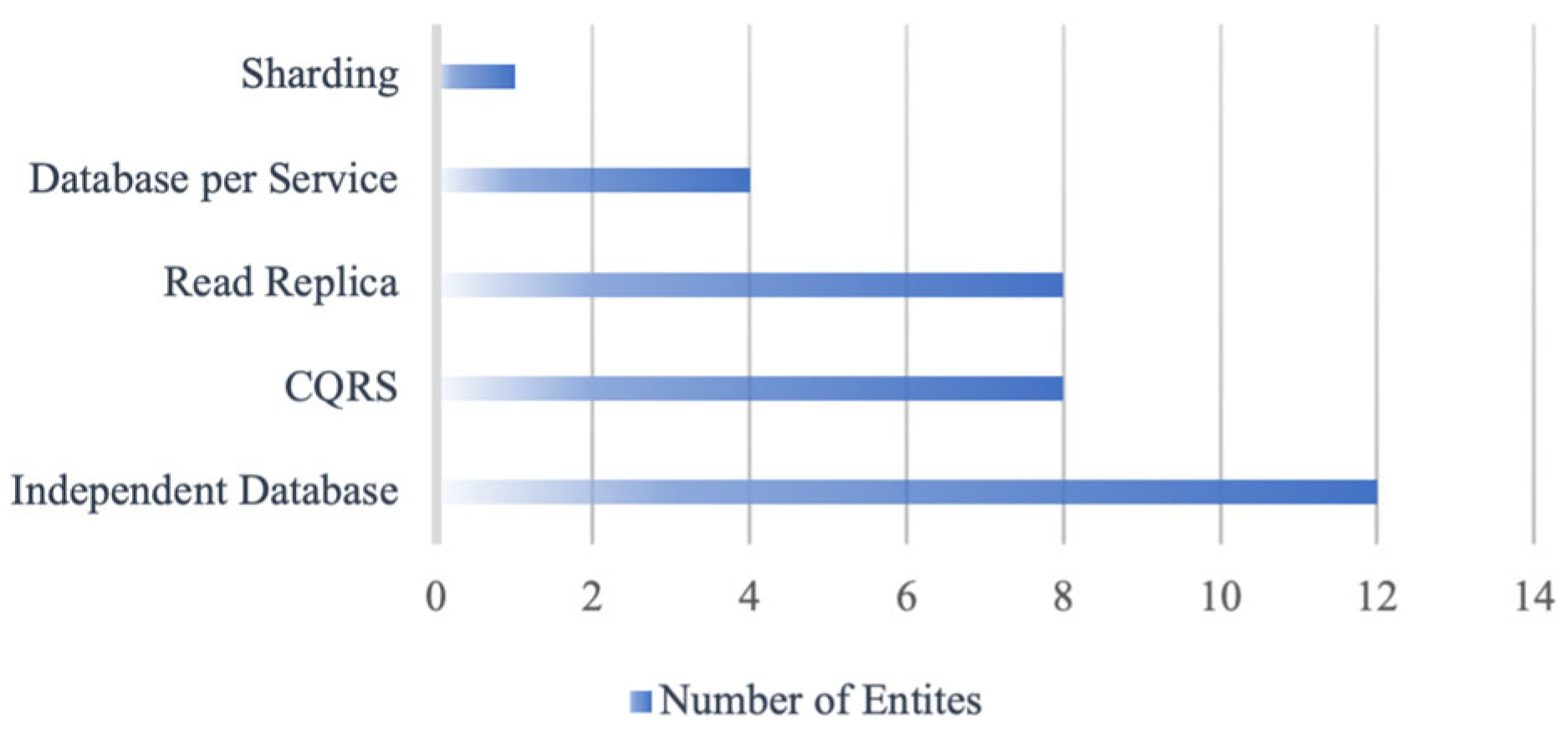

Initially, a bar chart was constructed to illustrate the distribution of recommended architectural patterns across all database entities, as shown in Figure 3. This overview highlights how variations in read, write, and join behaviors influence the assignment of architectural patterns such as CQRS, event sourcing, sharding, and others. In addition to the bar chart, a stacked bar chart was created to represent the composition of access heaviness (read, write, and join) for each entity, as shown in Figure 4. Each bar corresponds to a distinct entity and is segmented according to the presence of specific access behaviors. This visualization demonstrates how various combinations of access operations influence the classification outcomes and the resulting architectural pattern recommendations.

Subsequently, the database pattern classification yielded strong results. The RF classifier demonstrated reliable predictive performance across key evaluation metrics. Specifically, the model achieved an accuracy of 86%, a precision of 91.7%, a recall of 86%, and an F1-score of 85.8%. These results indicate that the system effectively classified entities into their appropriate database architectural patterns.

Although the initial architectural labels were generated using rule-based heuristics, the integration of the RF classifier provided enhanced generalization capabilities. Unlike fixed-threshold rules, the classifier learns soft decision boundaries and captures complex, non-linear interactions among input features. Although the classification dataset is deterministic and not drawn from a probabilistic distribution, a Repeated Stratified K-Fold Cross-Validation (5 folds, 10 repetitions) was conducted to assess the statistical reliability of the model’s performance across multiple data splits. This evaluation procedure resulted in a mean weighted F1-score of 0.8578, accompanied by a 95% confidence interval of [0.8181, 0.8975], indicating the model’s robustness and stable predictive capability across diverse partitions.
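The text does not state how the 95% confidence interval was derived from the 50 cross-validation scores (5 folds, 10 repetitions). One common choice, assumed here purely for illustration, is a normal approximation around the mean using the standard error of the per-fold scores. The function name `mean_confidence_interval` and the sample scores are hypothetical.

```python
import math
import statistics


def mean_confidence_interval(scores, z=1.96):
    """Mean and normal-approximation confidence interval of per-fold scores.

    z=1.96 corresponds to a 95% interval under the normal approximation.
    """
    mean = statistics.fmean(scores)
    standard_error = statistics.stdev(scores) / math.sqrt(len(scores))
    return mean, (mean - z * standard_error, mean + z * standard_error)


if __name__ == "__main__":
    # Invented per-fold weighted F1 scores, not the study's actual values.
    fold_scores = [0.82, 0.88, 0.79, 0.91, 0.86, 0.84, 0.90, 0.83, 0.87, 0.85]
    mean, (low, high) = mean_confidence_interval(fold_scores)
    print(f"mean={mean:.4f}, 95% CI=[{low:.4f}, {high:.4f}]")
```

Scores from repeated cross-validation share training data across folds and are therefore not independent, so an interval computed this way should be read as approximate.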

Furthermore, the ablation study presented in Figure 2 demonstrates the critical role of read and join features in accurate pattern classification. This machine learning-based approach also improves scalability, as new architectural patterns can be incorporated through retraining without modifying existing rules.

The confusion matrix and pattern distribution in Figure 5 demonstrate that the model accurately assigned entities to their respective architectures, with Independent Database and CQRS emerging as dominant patterns. This outcome aligns with the SIS system’s operational characteristics, which emphasize independent data management and read-intensive workloads, as is typical of systems preparing for migration to microservices.

5.3. Decomposition Pattern Results

To enhance interpretability, the final clusters are visualized as a weighted graph, as shown in Figure 6. Each node represents an SIS entity, and edges indicate the relationships between them, scaled by their assigned weights. Clusters are color-coded based on community assignments, while node sizes are proportional to their degree of connectivity. This visual representation provides a clear overview of the SIS’s structural decomposition and highlights how tightly coupled components are grouped. Such visualization supports stakeholders’ understanding of the proposed microservice boundaries and aids in validating the decomposition results.

Subsequently, the decomposition pattern evaluation employed modularity, conductance, and silhouette coefficient metrics. To enrich the analysis and improve decomposition accuracy, the structural graph was augmented with runtime controller-to-entity access data, enabling the graph to reflect both static relationships and dynamic usage patterns. This hybridization enhanced the relevance of the clusters from a functional perspective.

A modularity score of 0.529 was achieved, indicating strong internal density within clusters relative to the overall network structure. This value exceeds the typical range (0.1–0.25) reported in recent studies on microservice decomposition [23], reflecting the effectiveness of the proposed clustering in producing coherent service boundaries. Furthermore, the silhouette score was 0.6448, which lies within the moderate-to-high range considered acceptable given the complexity of real-world systems and the inclusion of runtime behavior. While the silhouette metric is rarely reported in existing microservice decomposition studies, it is included here as part of a more comprehensive and interpretable evaluation framework.

Additionally, conductance values, illustrated in Figure 7, ranged from 0.14 to 0.30, demonstrating that the clusters maintained low external connectivity and acceptable cohesion. Overall, these results affirm the effectiveness of the decomposition phase in generating meaningful service boundaries suitable for migration to microservices.

To assess the effect of incorporating runtime controller-entity mappings, we compared the original static-only clustering with a hybrid model that blends structural and behavioral interactions. Table 5 summarizes the results across four evaluation dimensions. This comparison highlights the improvement in cohesion strength (measured as the sum of intra-cluster edge weights, representing the total internal relationship strength within each cluster) and in functional separation achieved through the integration of runtime controller-to-entity access patterns. While modularity increased only slightly, the silhouette score improved considerably, indicating more coherent and well-separated clusters. In addition, average conductance decreased, reflecting fewer inter-cluster connections and stronger boundaries between services.

In addition, to ensure a fair and rigorous evaluation, the proposed framework was re-executed on the same open-source projects utilized by MIADA, with particular emphasis on the largest and most complex dataset, Spring-Boot-Blog-REST-API. A reliable ground truth was established through manual decompositions provided by five independent domain experts, and the consensus was employed for validation. The results show that our framework consistently achieved higher Adjusted Rand Index (ARI) values compared to MIADA’s reported best score of 0.893, thereby demonstrating superior clustering accuracy. Furthermore, the direct comparison was extended to include internal quality measures that were not addressed in MIADA, namely average conductance (0.32), an average silhouette score of 0.6481, and a modularity score of 0.341, as shown in Table 6. These metrics confirm that the generated clusters exhibit both strong cohesion and clear separation. In addition, our Mono2Micro framework was validated on a Java/Spring Boot project, thereby demonstrating its applicability across heterogeneous technology stacks beyond SIS/Laravel. These results show that the suggested method is more robust and generalizable than MIADA when applied to diverse and complex systems.
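For readers unfamiliar with the Adjusted Rand Index used in this comparison, the sketch below computes it from two label assignments with the standard pair-counting formula. The function name `adjusted_rand_index` and the example partitions are hypothetical; the example does not reproduce the expert ground truth or the reported scores.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_a, labels_b):
    """Adjusted Rand Index between two clusterings of the same items."""
    n = len(labels_a)
    total_pairs = comb(n, 2)
    if total_pairs == 0:
        return 1.0  # fewer than two items: the partitions trivially agree

    # Pairs of items placed together in both clusterings, in A, and in B.
    together_both = sum(comb(c, 2) for c in Counter(zip(labels_a, labels_b)).values())
    together_a = sum(comb(c, 2) for c in Counter(labels_a).values())
    together_b = sum(comb(c, 2) for c in Counter(labels_b).values())

    expected = together_a * together_b / total_pairs  # agreement expected by chance
    maximum = (together_a + together_b) / 2
    if maximum == expected:
        return 1.0  # degenerate case, e.g. both clusterings have one cluster
    return (together_both - expected) / (maximum - expected)


if __name__ == "__main__":
    # Invented expert consensus versus a framework output, for illustration only.
    expert = ["user", "user", "post", "post", "comment", "comment"]
    predicted = [0, 0, 1, 1, 1, 2]
    print(adjusted_rand_index(expert, predicted))
```

The index equals 1 for identical partitions regardless of how the clusters are named, and it is close to 0 for assignments that agree no more often than chance.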

5.4. Communication Pattern Results

To facilitate interpretation and validation, a three-dimensional scatter plot is constructed to represent each cluster’s communication profile, as shown in Figure 8. The axes correspond to average read ratio, write ratio, and join ratio, with clusters color-coded according to their assigned communication pattern. This visualization provides an intuitive overview of behavioral clustering and illustrates the correlation between access intensity and communication strategy. Such graphical analysis enhances transparency and supports the validation of the classification logic.

The communication behavior among the clustered services was evaluated using aggregated access metrics, including average read, write, and join ratios. Based on the calculated thresholds, services were classified into appropriate interaction strategies such as GraphQL (read-optimized), gRPC (high data exchange), REST API with caching, and asynchronous messaging. The classification results were visualized using a 3D scatter plot, shown in Figure 8, which revealed clear separation among service groups according to their operational characteristics. This visualization supports the validity of the communication pattern assignments and highlights how access behavior drives the selection of inter-service communication strategies.

In addition to the threshold-based classification, performance simulations were conducted to evaluate latency and throughput across the proposed communication protocols, thereby reinforcing the validity of the communication interaction patterns. Baseline latency and throughput values were derived from prior benchmarking studies [6, 25, 35] and scaled according to each cluster’s workload profile (read, write, or join-heavy). To ensure that the simulation captured the comparative strengths of the protocols, scaling adjustments were applied to emphasize the well-documented advantages of caching in REST-based systems, the efficiency of GraphQL in join-intensive queries, and the superior throughput characteristics of gRPC in data-intensive workloads. These adjustments were informed by empirical trends reported in the literature rather than fixed rules, and their selection was guided by the need to balance interpretability with realism. Sensitivity analysis further confirmed that moderate variation by ±25% in these adjustments did not alter the overall protocol assignment, thereby supporting the robustness of the simulation.

Figure 9 presents the performance results, which demonstrate that REST API + Caching achieves the lowest latency in read-heavy clusters, GraphQL improves performance in join-heavy scenarios, gRPC provides the highest throughput under data-intensive workloads, and the Transactional REST API remains stable in write-dominated contexts. These results extend the threshold-based classification with simulation-based evidence and support the effectiveness of the selected inter-service communication strategies.

5.5. Summary of Findings

This study employed a combination of machine learning, graph-based clustering, and runtime behavior analysis techniques to support the Mono2Micro migration framework. Specifically, Random Forest classification was applied for database architectural pattern identification, Louvain community detection was used for service decomposition based on both entity relationships and dynamic controller-to-entity mappings, and a threshold-based approach was employed to assign appropriate communication patterns across clustered services. These integrated steps are summarized in Algorithm 1.

The evaluation results demonstrated the effectiveness of the proposed approach across all migration phases. High classification accuracy was achieved for database patterns, strong modularity and conductance scores were observed in service decomposition, and the communication patterns were consistently assigned based on empirical operational characteristics. These outcomes confirm that the framework can effectively automate critical aspects of monolithic system migration preparation. In addition, the comparison with existing tools, such as MIADA [24], confirmed that our framework consistently outperformed MIADA’s reported best ARI and further demonstrated robustness through additional quality measures (Conductance, Silhouette, Modularity). Unlike MIADA and Mono2Micro, which remain restricted to specific environments, our approach proved to be more generalizable and effective across heterogeneous systems. It should also be noted that the ablation study in Section 4 is limited to the supervised classification stage (database patterns), as decomposition and communication phases are based on unsupervised clustering and heuristic mapping, for which feature-level ablation is not directly applicable. Their robustness was instead assessed using structural quality metrics, such as modularity and conductance, which reflect the cohesion and separation of the resulting service clusters.

Algorithm 1. Mono2Micro Framework Classification Process

Input:

- Static Metadata (Entity Models and Relationships).
- Runtime Query Statistics (Read, Write, Join Counts).

Output:

- Database Architectural Patterns.
- Service Clusters.
- Communication Patterns.

Steps:

1: Database Pattern Detection:

- 1.1 Load entity runtime query statistics.
- 1.2 Determine read-heavy, write-heavy, and join-heavy flags using median thresholds.
- 1.3 Apply rule-based classification.
- 1.4 Normalize features using Min-Max scaling.
- 1.5 Train the RF classifier for database prediction.
- 1.6 Evaluate model performance and visualize the results.

2: Service Decomposition:

- 2.1 Load static entity-relationship metadata and runtime controller-to-entity mappings.
- 2.2 Construct a hybrid undirected weighted graph.
- 2.3 Apply the Louvain community detection algorithm to identify service clusters.
- 2.4 Evaluate the model and visualize the results.

3: Communication Pattern Classification:

- 3.1 Aggregate runtime metrics per cluster.
- 3.2 Compare against global thresholds.
- 3.3 Assign communication strategies (REST, gRPC, GraphQL, Messaging).
- 3.4 Visualize cluster communication behavior.
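Steps 1.2 and 1.4 of Algorithm 1 can be sketched as follows. This is a minimal illustration under stated assumptions: an entity is treated as "heavy" for an operation when its count is strictly above the median across entities, and Min-Max scaling maps each feature to the range [0, 1]. The function names, the entity names, and the counts are all hypothetical.

```python
import statistics

OPERATIONS = ("read", "write", "join")


def heavy_flags(stats):
    """Flag each entity as read-, write-, or join-heavy using median thresholds.

    stats: dict mapping entity name -> dict with 'read', 'write', 'join' counts.
    """
    medians = {op: statistics.median(s[op] for s in stats.values()) for op in OPERATIONS}
    return {
        entity: {op: counts[op] > medians[op] for op in OPERATIONS}
        for entity, counts in stats.items()
    }


def min_max_scale(values):
    """Rescale a list of numbers to [0, 1]; constant input maps to all zeros."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


if __name__ == "__main__":
    # Invented query counts for three hypothetical entities.
    stats = {
        "Student": {"read": 900, "write": 100, "join": 50},
        "Enrollment": {"read": 300, "write": 400, "join": 200},
        "Course": {"read": 500, "write": 50, "join": 120},
    }
    print(heavy_flags(stats))
    print(min_max_scale([s["read"] for s in stats.values()]))
```

The resulting flags would feed the rule-based labeling of step 1.3, and the scaled features would serve as classifier input in step 1.5; neither of those steps is reproduced here because their exact rules are not given in this section.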

The research questions guiding this work were addressed as follows:

RQ1: How can AI technologies be effectively utilized to automate the migration process?

The proposed Mono2Micro framework successfully employed AI techniques, specifically the Random Forest classifier and graph-based clustering (Louvain algorithm), to automate the key phases of database pattern identification, service decomposition, and communication pattern assignment. Both the Random Forest model and the Louvain community detection algorithm demonstrated strong performance during evaluation, contributing to accurate and efficient migration outcomes. This approach significantly minimized manual engineering effort and validated the practical applicability of AI in streamlining the migration process.

RQ2: What are the key challenges organizations encountered during the migration process, and how can AI-driven approaches address these challenges?

The Mono2Micro framework addressed major migration challenges: extracting static metadata from ORM models, capturing dynamic runtime behavior through SQL query logs without disrupting the production environment, and accurately grouping entities into services despite hidden couplings and runtime variability. By combining empirical data analysis with machine learning and graph clustering techniques, the framework enabled a scalable and automated migration process.

RQ3: What are the criteria for evaluating the effectiveness and success of AI-driven migration strategies?

The effectiveness of the Mono2Micro-based migration process was validated through a comprehensive set of quantitative metrics. These included accuracy, precision, recall, F1 score, modularity, conductance values, and silhouette coefficients that were employed to rigorously evaluate the classification, decomposition, and communication pattern stages, ensuring the robustness and reliability of the framework.

RQ4: What best practices and guidelines support the integration of AI technologies into software migration processes?

The findings emphasize that combining static metadata analysis with dynamic runtime behavior is essential for building effective AI-driven migration strategies. The Mono2Micro framework demonstrated that static structures alone are insufficient; instead, incorporating real-world system behavior leads to more accurate service boundaries and communication pattern assignments. This integrated approach serves as a best practice for organizations aiming to adopt AI-assisted software modernization, ensuring more reliable, scalable, and context-aware migration outcomes.

6. Threats to Validity

This section examines the validity of the study by analyzing potential internal, external, and construct-related threats, following established evaluation guidelines [39].

Internal validity reflects efforts made to ensure accurate and unbiased results by using real-world production data for both static metadata and dynamic runtime behavior extraction. However, the framework remains sensitive to the quality of SQL logs: any incompleteness, sampling bias, or limited observation period in these logs may result in distorted ratios of read, write, and join operations, which in turn can affect the accuracy of database and communication pattern assignments. To mitigate the risk of biased or incomplete SQL logs, query statistics were collected over operational periods and complemented with static ORM metadata. Furthermore, runtime co-access frequencies were normalized using logarithmic transformation and percentile-based scaling, ensuring that outliers were controlled while preserving the relative ranking of interactions. This normalization reduced the dependency on raw query distributions and improved the robustness of the results across varying workloads. In addition, the manual assignment of relationship weights for structural edges may introduce bias. To address this, we applied sensitivity analysis by varying weights ±25% and ±50%, which confirmed stable clustering with only minor metric fluctuations. Future work will explore automated weight learning and optimization to further reduce dependence on manual rules.
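The normalization of runtime co-access frequencies described above can be sketched as follows. The exact transformation is not specified in this section, so the choices below are assumptions: a `log1p` transform to compress large counts, followed by division by a chosen percentile of the transformed values and clipping at 1. The function name `normalize_coaccess`, the nearest-rank percentile rule, and the default of the 95th percentile are all hypothetical.

```python
import math


def normalize_coaccess(counts, percentile=95):
    """Log-transform co-access counts and scale them by a percentile cap.

    Values above the cap are clipped to 1.0, which limits the influence of
    outliers while keeping the relative order of the remaining values.
    """
    logged = [math.log1p(c) for c in counts]
    ordered = sorted(logged)
    # Nearest-rank percentile of the log-transformed values.
    rank = max(1, math.ceil(percentile / 100 * len(ordered)))
    cap = ordered[rank - 1]
    if cap == 0:
        return [0.0 for _ in logged]
    return [min(value / cap, 1.0) for value in logged]


if __name__ == "__main__":
    # Invented co-access counts with one extreme outlier.
    print(normalize_coaccess([0, 9, 99, 999_999], percentile=75))
```

Because both the logarithm and the scaling are monotone, the ranking of interactions is preserved, and only values above the cap become tied at 1.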

External validity relates to the generalizability of the findings beyond the specific case examined. The proposed Mono2Micro framework was validated on a real-world SIS system developed using Laravel and MySQL and further evaluated on the open-source Java/Spring Boot dataset used in the MIADA study. These experiments confirmed that the framework is applicable across heterogeneous technology stacks, rather than being limited to a single environment. In addition, because the proposed Mono2Micro framework operates at the level of entities and SQL query logs, it can be extended beyond monolithic systems to different architectural styles, such as client-server and service-oriented architectures. While the current evaluation focused on monolith-to-microservices migration, the underlying principles are not restricted to this context, and future work will investigate the additional complexities of applying the framework in more distributed settings.

Ablation analysis was limited to the supervised learning component for database pattern classification, where input features (read, write, join) could be isolated and evaluated using the F1-score. The decomposition component, based on unsupervised Louvain clustering over a structural relationship graph, does not involve labeled outputs, making traditional ablation inapplicable. As part of future work, we plan to extend the framework to support additional patterns and broaden comparisons with other state-of-the-art tools.

Finally, construct validity concerns whether the study accurately measures the intended concepts and objectives. In this study, established metrics such as accuracy, precision, recall, modularity, conductance, and silhouette scores were used to rigorously validate the results of each Mono2Micro phase. The framework’s evaluation approach was aligned with the research questions, reinforcing the validity of the constructs being assessed.

7. Conclusions and Future Work

This study introduced the Mono2Micro framework as a comprehensive, AI-driven solution for facilitating the migration from monolithic architectures to microservices. By integrating machine learning classification, graph-based community detection, and runtime behavior analysis, the framework successfully automated the critical phases of the migration process. It specifically addressed three key dimensions: database architectural pattern identification, service decomposition, and communication strategy assignment. The evaluation results demonstrated that combining static metadata with dynamic execution traces can yield high-accuracy models, coherent service clusters, and context-aware communication protocols, thus enhancing both the precision and practicality of microservice migration.

The Mono2Micro framework was rigorously evaluated using diverse metrics, including classification accuracy, modularity, conductance, and silhouette scores. Each migration phase was assessed independently, providing a multi-perspective validation of the framework’s effectiveness and robustness. The experimental application on a real-world SIS system further underscored the practicality and generalizability of the approach.

The novelty of the proposed Mono2Micro framework lies in its tri-pattern foundation, which is not addressed in combination by any prior work. By applying supervised and unsupervised learning techniques across structural and behavioral layers of the system, Mono2Micro offers a differentiated and comprehensive approach to automated microservices migration.

Future studies will focus on enhancing the scalability and adaptability of the proposed Mono2Micro framework, including the exploration of advanced learning techniques, such as deep learning, for capturing nuanced behavioral patterns, extending the framework to accommodate a broader range of communication protocols, and empirically validating protocol recommendations. Moreover, the framework will be tested on larger-scale enterprise systems to assess its robustness and generalizability across diverse industrial domains. Ultimately, the Mono2Micro framework aims to evolve into an industrial-grade tool, offering practical support for researchers and software engineers in automating and optimizing the migration process from monolithic to microservice architectures.