QiGSAN: A Novel Probability-Informed Approach for Small Object Segmentation in the Case of Limited Image Datasets

Abstract

1. Introduction

- A novel probability-informed ensemble architecture QiGSAN was developed to improve the accuracy of small object semantic segmentation in high-resolution imagery datasets with imbalanced classes and a limited volume of samples;

- New quadtree-informed architectural blocks have been introduced to capture the interconnections between features in images at different spatial resolutions during the graph convolution of superpixel subregions [32];

- A theorem on faster loss reduction in quadtree-informed graph convolutional neural networks was proved;

- Using QiGSAN, the ship segmentation accuracy (F1-score) on high-resolution SAR images (the HRSID [33] and SSDD [34] datasets) increased by up to … compared with SegFormer [35] and LWGANet [36], a new state-of-the-art transformer for UAV (unmanned aerial vehicle) and SAR image processing. QiGSAN also improves on non-informed convolutional architectures, such as ENet [37] and DeepLabV3 [38] (by up to … in F1-score), and on other ensemble implementations with various graph NNs (by up to … in F1-score).
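The idea of linking features "at different spatial resolutions" through a quadtree can be illustrated with a minimal sketch. It assumes one scalar feature per superpixel on a square grid whose side is divisible by 2 at every level, and it uses a hypothetical aggregation rule (each parent is the mean of its 2 × 2 children). The paper's own probability-informed quadtree mechanism is not reproduced here, and the function names are illustrative only.

```python
from typing import List

Grid = List[List[float]]


def build_quadtree_levels(leaf: Grid, height: int) -> List[Grid]:
    """Return grids from finest (index 0) to coarsest.

    Each coarser cell is the mean of its 2x2 children (hypothetical rule).
    """
    levels = [leaf]
    for _ in range(height - 1):
        fine = levels[-1]
        n = len(fine)
        if n % 2:
            raise ValueError("grid side must be divisible by 2 at every level")
        coarse = [
            [
                (fine[2 * i][2 * j] + fine[2 * i][2 * j + 1]
                 + fine[2 * i + 1][2 * j] + fine[2 * i + 1][2 * j + 1]) / 4.0
                for j in range(n // 2)
            ]
            for i in range(n // 2)
        ]
        levels.append(coarse)
    return levels


def multiscale_context(levels: List[Grid], i: int, j: int) -> List[float]:
    """Feature of leaf (i, j) followed by the features of all its ancestors."""
    # The ancestor of (i, j) at level k sits at (i // 2**k, j // 2**k).
    return [grid[i >> k][j >> k] for k, grid in enumerate(levels)]


leaf = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
levels = build_quadtree_levels(leaf, height=3)
print(multiscale_context(levels, 3, 3))
```

Each leaf thus sees a short vector of progressively coarser summaries of its surroundings, which is one simple way a graph layer could be given multi-resolution context.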

2. Related Works

2.1. Small Data in Image Processing

2.2. Graph Neural Network Image Analysis

2.3. Probability-Informed Neural Networks

2.4. Summary

3. Methodology

3.1. Problem Statement

3.2. Quadtree-Informed Graph Self-Attention Networks

3.3. Pre-Processing of Image Features by Convolutional Layers

3.4. Superpixels

3.5. Quadtree Informing

3.6. Graph Self-Attention Operation

4. Experiments

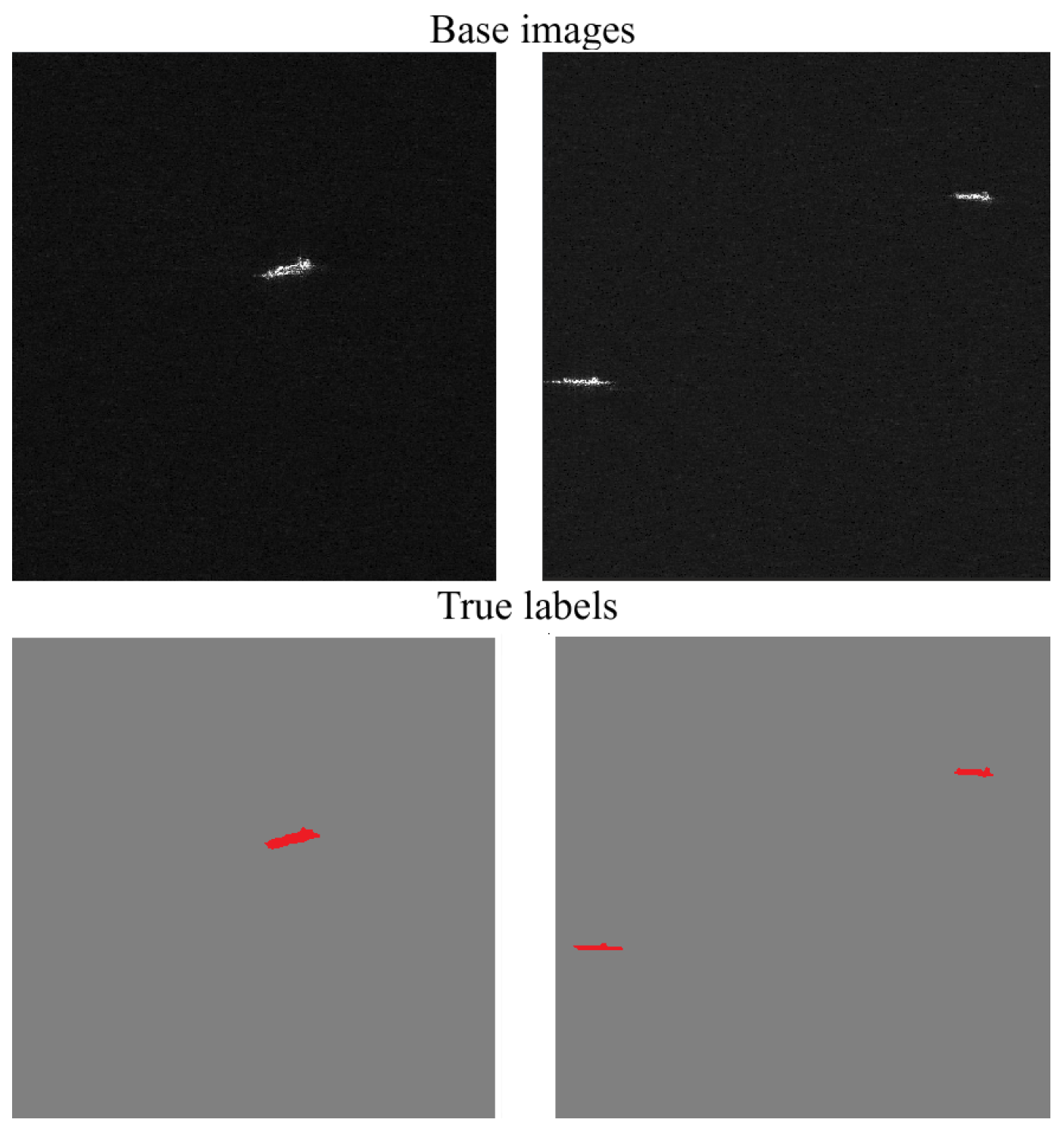

4.1. Datasets

4.2. Training, Metrics, and Hyperparameters

4.3. Variants of the Network

- QiGSAN uses self-attention graph layers;

- GCN-QT and GAT-QT are based on vanilla GCN and GAT, respectively.
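The difference between the vanilla graph layers and self-attention graph layers can be sketched as follows, assuming the superpixel graph is given as a dense 0/1 adjacency matrix without self-loops. A GCN layer mixes neighbor features with fixed, degree-normalized weights, whereas a self-attention layer computes data-dependent weights from query/key projections, restricted here to graph neighbors plus the node itself. This is a generic scaled dot-product formulation chosen for illustration; the function names are hypothetical and the exact QiGSAN layer may differ.

```python
import numpy as np


def gcn_layer(x: np.ndarray, adj: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Vanilla GCN propagation D^-1/2 (A + I) D^-1/2 X W (no nonlinearity)."""
    a_hat = adj + np.eye(adj.shape[0])            # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    norm = d_inv_sqrt[:, None] * a_hat * d_inv_sqrt[None, :]
    return norm @ x @ w


def graph_self_attention(x, adj, w_q, w_k, w_v):
    """Scaled dot-product self-attention masked to neighbors and self."""
    n = x.shape[0]
    mask = (adj > 0) | np.eye(n, dtype=bool)
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = (q @ k.T) / np.sqrt(k.shape[1])
    scores = np.where(mask, scores, -np.inf)      # non-neighbors get zero weight
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    return weights @ v, weights


# Toy path graph 0 - 1 - 2 with random 4-dimensional node features.
rng = np.random.default_rng(0)
adj = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
x = rng.normal(size=(3, 4))
w, w_q, w_k, w_v = (rng.normal(size=(4, 2)) for _ in range(4))
h_gcn = gcn_layer(x, adj, w)
h_att, att = graph_self_attention(x, adj, w_q, w_k, w_v)
```

In this sketch, the rows of `att` sum to one and are zero outside each node's neighborhood, so the attention layer keeps the graph topology while learning how much each neighbor contributes.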

4.4. Main Results on SAR Images

5. Ablation Study

5.1. HRSID

5.2. SSDD

5.3. Comparison of QiGSAN with Other Configurations of Graph Networks

6. Conclusions and Discussion

6.1. Discussion

6.2. Summary

6.3. Further Research Directions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Elhassan, M.A.; Zhou, C.; Khan, A.; Benabid, A.; Adam, A.B.; Mehmood, A.; Wambugu, N. Real-time semantic segmentation for autonomous driving: A review of CNNs, Transformers, and Beyond. J. King Saud Univ.-Comput. Inf. Sci. 2024, 36, 102226.

2. Song, Y.; Xu, F.; Yao, Q.; Liu, J.; Yang, S. Navigation algorithm based on semantic segmentation in wheat fields using an RGB-D camera. Inf. Process. Agric. 2023, 10, 475–490.

3. Lei, P.; Yi, J.; Li, S.; Li, Y.; Lin, H. Agricultural surface water extraction in environmental remote sensing: A novel semantic segmentation model emphasizing contextual information enhancement and foreground detail attention. Neurocomputing 2025, 617, 129110.

4. Kampffmeyer, M.; Salberg, A.B.; Jenssen, R. Semantic Segmentation of Small Objects and Modeling of Uncertainty in Urban Remote Sensing Images Using Deep Convolutional Neural Networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 680–688.

5. Dede, A.; Nunoo-Mensah, H.; Tchao, E.T.; Agbemenu, A.S.; Adjei, P.E.; Acheampong, F.A.; Kponyo, J.J. Deep learning for efficient high-resolution image processing: A systematic review. Intell. Syst. Appl. 2025, 26, 200505.

6. Chen, P.; Liu, Y.; Ren, Y.; Zhang, B.; Zhao, Y. A Deep Learning-Based Solution to the Class Imbalance Problem in High-Resolution Land Cover Classification. Remote Sens. 2025, 17, 1845.

7. Nguyen, Q.D.; Thai, H.T. Crack segmentation of imbalanced data: The role of loss functions. Eng. Struct. 2023, 297, 116988.

8. Genc, A.; Kovarik, L.; Fraser, H.L. A deep learning approach for semantic segmentation of unbalanced data in electron tomography of catalytic materials. Sci. Rep. 2022, 12, 16267.

9. Hossain, M.S.; Betts, J.M.; Paplinski, A.P. Dual Focal Loss to address class imbalance in semantic segmentation. Neurocomputing 2021, 462, 69–87.

10. Chopade, R.; Stanam, A.; Pawar, S. Addressing Class Imbalance Problem in Semantic Segmentation Using Binary Focal Loss. In Proceedings of the Ninth International Congress on Information and Communication Technology, London, UK, 19–22 February 2024; Yang, X.S., Sherratt, S., Dey, N., Joshi, A., Eds.; Springer: Singapore, 2024; pp. 351–357.

11. Saeedizadeh, N.; Jalali, S.M.J.; Khan, B.; Kebria, P.M.; Mohamed, S. A new optimization approach based on neural architecture search to enhance deep U-Net for efficient road segmentation. Knowl.-Based Syst. 2024, 296, 111966.

12. Debnath, R.; Das, K.; Bhowmik, M.K. GSNet: A new small object attention based deep classifier for presence of gun in complex scenes. Neurocomputing 2025, 635, 129855.

13. Liu, W.; Kang, X.; Duan, P.; Xie, Z.; Wei, X.; Li, S. SOSNet: Real-Time Small Object Segmentation via Hierarchical Decoding and Example Mining. IEEE Trans. Neural Netw. Learn. Syst. 2025, 36, 3071–3083.

14. Sang, S.; Zhou, Y.; Islam, M.T.; Xing, L. Small-Object Sensitive Segmentation Using Across Feature Map Attention. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 6289–6306.

15. Kiobya, T.; Zhou, J.; Maiseli, B. A multi-scale semantically enriched feature pyramid network with enhanced focal loss for small-object detection. Knowl.-Based Syst. 2025, 310, 113003.

16. Hou, X.; Bai, Y.; Xie, Y.; Ge, H.; Li, Y.; Shang, C.; Shen, Q. Deep collaborative learning with class-rebalancing for semi-supervised change detection in SAR images. Knowl.-Based Syst. 2023, 264, 110281.

17. Chen, Y.; Li, X.; Luan, C.; Hou, W.; Liu, H.; Zhu, Z.; Xue, L.; Zhang, J.; Liu, D.; Wu, X.; et al. Cross-level interaction fusion network-based RGB-T semantic segmentation for distant targets. Pattern Recognit. 2025, 161, 111218.

18. Liu, Z.; Lv, Q.; Lee, C.H.; Shen, L. Segmenting medical images with limited data. Neural Netw. 2024, 177, 106367.

19. Wang, C.; Gu, H.; Su, W. SAR Image Classification Using Contrastive Learning and Pseudo-Labels with Limited Data. IEEE Geosci. Remote. Sens. Lett. 2022, 19, 4012505.

20. Wang, C.; Luo, S.; Pei, J.; Huang, Y.; Zhang, Y.; Yang, J. Crucial feature capture and discrimination for limited training data SAR ATR. ISPRS J. Photogramm. Remote Sens. 2023, 204, 291–305.

21. Dong, Y.; Li, F.; Hong, W.; Zhou, X.; Ren, H. Land cover semantic segmentation of Port Area with High Resolution SAR Images Based on SegNet. In Proceedings of the 2021 SAR in Big Data Era (BIGSARDATA), Nanjing, China, 22–24 September 2021; pp. 1–4.

22. Chen, Y.; Wang, Z.; Wang, R.; Zhang, S.; Zhang, Y. Limited Data-Driven Multi-Task Deep Learning Approach for Target Classification in SAR Imagery. In Proceedings of the 2024 5th International Conference on Geology, Mapping and Remote Sensing (ICGMRS), Wuhan, China, 12–14 April 2024; pp. 239–242.

23. Lyu, J.; Zhou, K.; Zhong, Y. A statistical theory of overfitting for imbalanced classification. arXiv 2025, arXiv:2502.11323.

24. Li, Z.; Kamnitsas, K.; Glocker, B. Analyzing Overfitting Under Class Imbalance in Neural Networks for Image Segmentation. IEEE Trans. Med. Imaging 2021, 40, 1065–1077.

25. Gorshenin, A.; Kozlovskaya, A.; Gorbunov, S.; Kochetkova, I. Mobile network traffic analysis based on probability-informed machine learning approach. Comput. Netw. 2024, 247, 110433.

26. Karniadakis, G.; Kevrekidis, I.; Lu, L.; Perdikaris, P.; Wang, S.; Yang, L. Physics-Informed Machine Learning. Nat. Rev. Phys. 2021, 3, 422–440.

27. Dostovalova, A. Neural Quadtree and its Applications for SAR Imagery Segmentations. Inform. Primen. 2024, 18, 77–85.

28. Dostovalova, A.; Gorshenin, A. Small sample learning based on probability-informed neural networks for SAR image segmentation. Neural Comput. Appl. 2025, 37, 8285–8308.

29. Pastorino, M.; Moser, G.; Serpico, S.B.; Zerubia, J. Semantic Segmentation of Remote-Sensing Images Through Fully Convolutional Neural Networks and Hierarchical Probabilistic Graphical Models. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5407116.

30. Schmitt, M.; Ahmadi, S.; Hansch, R. There is No Data Like More Data - Current Status of Machine Learning Datasets in Remote Sensing. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2021), Brussels, Belgium, 12–16 July 2021; pp. 1206–1209.

31. Jhaveri, R.H.; Revathi, A.; Ramana, K.; Raut, R.; Dhanaraj, R.K. A Review on Machine Learning Strategies for Real-World Engineering Applications. Mob. Inf. Syst. 2022, 2022, 1833507.

32. Bae, J.H.; Yu, G.H.; Lee, J.H.; Vu, D.T.; Anh, L.H.; Kim, H.G.; Kim, J.Y. Superpixel Image Classification with Graph Convolutional Neural Networks Based on Learnable Positional Embedding. Appl. Sci. 2022, 12, 9176.

33. Wei, S.; Zeng, X.; Qu, Q.; Wang, M.; Su, H.; Shi, J. HRSID: A High-Resolution SAR Images Dataset for Ship Detection and Instance Segmentation. IEEE Access 2020, 8, 120234–120254.

34. Zhang, T.; Zhang, X.; Li, J.; Xu, X.; Wang, B.; Zhan, X.; Xu, Y.; Ke, X.; Zeng, T.; Su, H.; et al. SAR Ship Detection Dataset (SSDD): Official Release and Comprehensive Data Analysis. Remote Sens. 2021, 13, 3690.

35. Spasev, V.; Dimitrovski, I.; Chorbev, I.; Kitanovski, I. Semantic Segmentation of Unmanned Aerial Vehicle Remote Sensing Images Using SegFormer. In Proceedings of the Intelligent Systems and Pattern Recognition. Communications in Computer and Information Science, Istanbul, Turkey, 26–28 June 2024; Volume 2305, pp. 1416–1425.

36. Lu, W.; Chen, S.B.; Ding, C.H.Q.; Tang, J.; Luo, B. LWGANet: A Lightweight Group Attention Backbone for Remote Sensing Visual Tasks. arXiv 2025, arXiv:2501.10040.

37. Paszke, A.; Chaurasia, A.; Kim, S.; Culurciello, E. ENet: A deep neural network architecture for real-time semantic segmentation. arXiv 2016, arXiv:1606.02147.

38. Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking Atrous Convolution for Semantic Image Segmentation. In Proceedings of the 2017 Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

39. Li, J.; Wei, X. Research on efficient detection network method for remote sensing images based on self attention mechanism. Image Vis. Comput. 2024, 142, 104884.

40. Brigato, L.; Iocchi, L. A Close Look at Deep Learning with Small Data. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Virtual, 10–15 January 2021; pp. 2490–2497.

41. Huang, Z.; Pan, Z.; Lei, B. Transfer Learning with Deep Convolutional Neural Network for SAR Target Classification with Limited Labeled Data. Remote Sens. 2017, 9, 907.

42. Zhou, Y.; Jiang, X.; Li, Z.; Liu, X. SAR Target Classification with Limited Data via Data Driven Active Learning. In Proceedings of the IGARSS 2020–2020 IEEE International Geoscience and Remote Sensing Symposium, Virtual, 26 September–2 October 2020; pp. 2475–2478.

43. Yu, J.; Zhou, G.; Zhou, S.; Yin, J. A Lightweight Fully Convolutional Neural Network for SAR Automatic Target Recognition. Remote Sens. 2021, 13, 3029.

44. Wang, W.; Jiang, Z.; Liao, J.; Ying, Z.; Zhai, Y. Explorations of Contrastive Learning in the Field of Small Sample SAR ATR. Procedia Comput. Sci. 2022, 208, 190–195.

45. Chong, Q.; Ni, M.; Huang, J.; Wei, G.; Li, Z.; Xu, J. Let the loss impartial: A hierarchical unbiased loss for small object segmentation in high-resolution remote sensing images. Eur. J. Remote Sens. 2023, 56, 2254473.

46. Chong, Q.; Ni, M.; Huang, J.; Liang, Z.; Wang, J.; Li, Z.; Xu, J. Pos-DANet: A dual-branch awareness network for small object segmentation within high-resolution remote sensing images. Eng. Appl. Artif. Intell. 2024, 133, 107960.

47. Wu, S.; Sun, F.; Zhang, W.; Xie, X.; Cui, B. Graph Neural Networks in Recommender Systems: A Survey. ACM Comput. Surv. 2022, 55, 1–37.

48. Vrahatis, A.G.; Lazaros, K.; Kotsiantis, S. Graph Attention Networks: A Comprehensive Review of Methods and Applications. Future Internet 2024, 16, 318.

49. Tanis, J.H.; Giannella, C.; Mariano, A.V. Introduction to Graph Neural Networks: A Starting Point for Machine Learning Engineers. arXiv 2024, arXiv:2412.19419.

50. Hamilton, W.; Ying, Z.; Leskovec, J. Inductive representation learning on large graphs. Adv. Neural Inf. Process. Syst. 2017, 30, 1025–1035.

51. Jiang, M.; Liu, G.; Su, Y.; Wu, X. Self-attention empowered graph convolutional network for structure learning and node embedding. Pattern Recognit. 2024, 153, 110537.

52. Ihalage, A.; Hao, Y. Formula Graph Self-Attention Network for Representation-Domain Independent Materials Discovery. Adv. Sci. 2022, 9, 2200164.

53. Peng, Y.; Xia, J.; Liu, D.; Liu, M.; Xiao, L.; Shi, B. Unifying topological structure and self-attention mechanism for node classification in directed networks. Sci. Rep. 2025, 15, 805.

54. Ronen, T.; Levy, O.; Golbert, A. Vision Transformers with Mixed-Resolution Tokenization. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Vancouver, BC, Canada, 17–24 June 2023; pp. 4613–4622.

55. Ke, L.; Danelljan, M.; Li, X.; Tai, Y.W.; Tang, C.K.; Yu, F. Mask Transfiner for High-Quality Instance Segmentation. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 4402–4411.

56. Tang, S.; Zhang, J.; Zhu, S.; Tan, P. Quadtree Attention for Vision Transformers. In Proceedings of the International Conference on Learning Representations, Virtual, 25–29 April 2022.

57. Chitta, K.; Álvarez, J.M.; Hebert, M. Quadtree Generating Networks: Efficient Hierarchical Scene Parsing with Sparse Convolutions. In Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020; pp. 2009–2018.

58. Yang, F.; Ma, Z.; Xie, M. Image classification with superpixels and feature fusion method. J. Electron. Sci. Technol. 2021, 19, 100096.

59. Zi, W.; Xiong, W.; Chen, H.; Li, J.; Jing, N. SGA-Net: Self-Constructing Graph Attention Neural Network for Semantic Segmentation of Remote Sensing Images. Remote Sens. 2021, 13, 4201.

60. Cao, P.; Jin, Y.; Ruan, B.; Niu, Q. DIGCN: A Dynamic Interaction Graph Convolutional Network Based on Learnable Proposals for Object Detection. J. Artif. Intell. Res. 2024, 79, 1091–1112.

61. Liu, X.; Li, Y.; Liu, X.; Zou, H. Dark Spot Detection from SAR Images Based on Superpixel Deeper Graph Convolutional Network. Remote Sens. 2022, 14, 5618.

62. Gorshenin, A.; Kuzmin, V. Statistical Feature Construction for Forecasting Accuracy Increase and Its Applications in Neural Network Based Analysis. Mathematics 2022, 10, 589.

63. Gorshenin, A.; Vilyaev, A. Finite Normal Mixture Models for the Ensemble Learning of Recurrent Neural Networks with Applications to Currency Pairs. Pattern Recognit. Image Anal. 2022, 32, 780–792.

64. Gorshenin, A.; Vilyaev, A. Machine Learning Models Informed by Connected Mixture Components for Short- and Medium-Term Time Series Forecasting. AI 2024, 5, 1955–1976.

65. Tyralis, H.; Papacharalampous, G. A review of predictive uncertainty estimation with machine learning. Artif. Intell. Rev. 2024, 57, 94.

66. Wang, Z.; Nakahira, Y. A Generalizable Physics-informed Learning Framework for Risk Probability Estimation. In Proceedings of the 5th Annual Learning for Dynamics and Control Conference, Philadelphia, PA, USA, 15–16 June 2023; Matni, N., Morari, M., Pappas, G.J., Eds.; PMLR: Cambridge, MA, USA, 2023; Volume 211, pp. 358–370.

67. Zhang, Z.; Li, J.; Liu, B. Annealed adaptive importance sampling method in PINNs for solving high dimensional partial differential equations. J. Comput. Phys. 2025, 521, 113561.

68. Zuo, L.; Chen, Y.; Zhang, L.; Chen, C. A spiking neural network with probability information transmission. Neurocomputing 2020, 408, 1–12.

69. Batanov, G.; Gorshenin, A.; Korolev, V.Y.; Malakhov, D.; Skvortsova, N. The evolution of probability characteristics of low-frequency plasma turbulence. Math. Model. Comput. Simulations 2012, 4, 10–25.

70. Batanov, G.; Borzosekov, V.; Gorshenin, A.; Kharchev, N.; Korolev, V.; Sarksyan, K. Evolution of statistical properties of microturbulence during transient process under electron cyclotron resonance heating of the L-2M stellarator plasma. Plasma Phys. Control. Fusion 2019, 61, 075006.

71. Gorshenin, A. Concept of online service for stochastic modeling of real processes. Inform. Primen. 2016, 10, 72–81.

72. Hendrycks, D.; Gimpel, K. Gaussian Error Linear Units (GELUs). arXiv 2023, arXiv:1606.08415.

73. Kang, J.; Liu, R.; Li, Y.; Liu, Q.; Wang, P.; Zhang, Q.; Zhou, D. An Improved 3D Human Pose Estimation Model Based on Temporal Convolution with Gaussian Error Linear Units. In Proceedings of the International Conference on Virtual Rehabilitation, ICVR, Nanjing, China, 26–28 May 2022; pp. 21–32.

74. Satyanarayana, D.; Saikiran, E. Nonlinear Dynamic Weight-Salp Swarm Algorithm and Long Short-Term Memory with Gaussian Error Linear Units for Network Intrusion Detection System. Int. J. Intell. Eng. Syst. 2024, 17, 463–473.

75. Dostovalova, A. Using a Model of a Spatial-Hierarchical Quadtree with Truncated Branches to Improve the Accuracy of Image Classification. Izv. Atmos. Ocean. Phys. 2023, 59, 1255–1262.

76. Xu, K.; Zhang, M.; Jegelka, S.; Kawaguchi, K. Optimization of Graph Neural Networks: Implicit Acceleration by Skip Connections and More Depth. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; PMLR: Cambridge, MA, USA, 2021; Volume 139.

77. Veličković, P.; Casanova, A.; Lió, P.; Cucurull, G.; Romero, A.; Bengio, Y. Graph attention networks. In Proceedings of the 6th International Conference on Learning Representations, ICLR 2018—Conference Track Proceedings, Vancouver, BC, Canada, 30 April–3 May 2018.

78. Qin, F. Blind Single-Image Super Resolution Reconstruction with Gaussian Blur. In Proceedings of the Mechatronics and Automatic Control Systems, Hangzhou, China, 10–11 August 2013; Wang, W., Ed.; Springer: Cham, Switzerland, 2014; pp. 293–301.

79. Kingma, D.P.; Ba, J.L. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015—Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015.

80. Zhou, P.; Xie, X.; Lin, Z.; Yan, S. Towards Understanding Convergence and Generalization of AdamW. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 6486–6493.

81. Zou, F.; Shen, L.; Jie, Z.; Zhang, W.; Liu, W. A sufficient condition for convergences of Adam and RMSProp. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11119–11127.

82. Kullback, S.; Leibler, R.A. On Information and Sufficiency. Ann. Math. Stat. 1951, 22, 79–86.

83. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

84. Zhang, H.; Wu, B.; Yuan, X.; Pan, S.; Tong, H.; Pei, J. Trustworthy Graph Neural Networks: Aspects, Methods, and Trends. Proc. IEEE 2024, 112, 97–139.

85. Chandan, G.; Jain, A.; Jain, H.; Mohana. Real Time Object Detection and Tracking Using Deep Learning and OpenCV. In Proceedings of the International Conference on Inventive Research in Computing Applications, ICIRCA 2018, Coimbatore, India, 11–12 July 2018; pp. 1305–1308.

86. Belmoukari, B.; Audy, J.F.; Forget, P. Smart port: A systematic literature review. Eur. Transp. Res. Rev. 2023, 15, 4.

| Dataset | Number of Images | Image Size (px) | Resolution (m) | Small Objects | Medium Objects | Large Objects |
|---|---|---|---|---|---|---|
| SSDD | 1160 | 190–668 | 1–10 | 1529 | 935 | 76 |
| HRSID (test) | 1962 | 800 × 800 | 0.5–3 | 5176 | 619 | 127 |
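
The object counts in the dataset table make the class imbalance concrete: small objects dominate both datasets. The short sketch below simply recomputes the share of small objects from those counts; the dictionary keys are illustrative names, not identifiers from the paper.

```python
# Object counts by size category, copied from the dataset table.
counts = {
    "SSDD": {"small": 1529, "medium": 935, "large": 76},
    "HRSID (test)": {"small": 5176, "medium": 619, "large": 127},
}


def small_share(c: dict) -> float:
    """Fraction of all annotated objects that fall into the 'small' category."""
    return c["small"] / sum(c.values())


for name, c in counts.items():
    print(f"{name}: {small_share(c):.1%} small objects")
```

Roughly 60% of SSDD objects and 87% of HRSID test objects are small, which is the regime the ensemble is designed for.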

| Hyperparameter | Description | Values |
|---|---|---|
| Encoder | Architecture of the base segmenter | ENet; fully convolutional network (FCN) based on ResNet50 [83]; DeepLabV3 (DLv3); SegFormer; LWGANet |
| Pre-train | Pre-trained weights for base networks | None; PyTorch’s weights for DeepLabV3 and FCN; ImageNet, ADE20K |
| Epochs | Number of training epochs of the base segmenter | 100–300 |
| lr_decay | Learning rate decay | None; 0.5; 0.8 |
| h | Quadtree height | 4; 5 |
| M | Superpixel size | 4; 8; 16 |
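
To give a feel for how the superpixel size M and the quadtree height h affect problem size, the sketch below counts graph nodes under two loudly stated assumptions: superpixels are square M × M pixel blocks tiling a square image (hypothetical; the paper's superpixel construction may differ), and the height h counts the levels of a complete quadtree including the root. The helper names are illustrative.

```python
def superpixel_nodes(image_side: int, m: int) -> int:
    """Graph nodes when a square image is tiled by m x m blocks (assumed)."""
    if image_side % m:
        raise ValueError("image side must be divisible by the superpixel size")
    return (image_side // m) ** 2


def quadtree_nodes(height: int) -> int:
    """Nodes in a complete quadtree with `height` levels: 1 + 4 + ... + 4**(height-1)."""
    return (4 ** height - 1) // 3


for m in (4, 8, 16):
    print(f"M={m}: {superpixel_nodes(800, m)} nodes for an 800 x 800 HRSID image")
for h in (4, 5):
    print(f"h={h}: {quadtree_nodes(h)} quadtree nodes")
```

Under these assumptions, halving M quadruples the number of superpixel nodes, while each extra quadtree level adds a factor of about four to the hierarchy.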

| NN | HRSID Recall | HRSID Precision | HRSID F1-Score | SSDD Recall | SSDD Precision | SSDD F1-Score |
|---|---|---|---|---|---|---|
| ENet | 79.70 ± 10.02 (82.12) | 68.87 ± 10.46 (70.87) | 73.77 ± 9.59 (77.74) | 74.54 ± 7.45 (75.02) | 23.51 ± 18.66 (17.96) | 33.04 ± 19.4 (29.28) |
| DeepLabV3 | 74.68 ± 15.25 (80.1) | 62.84 ± 5.65 (62.75) | 60.45 ± 18.67 (69.97) | 66.93 ± 11.68 (68.72) | 52.76 ± 5.26 (53.58) | 58.35 ± 4.47 (57.81) |
| FCN | 77.82 ± 8.25 (81.06) | 69.55 ± 6.83 (66.99) | 59.87 ± 32.15 (74.13) | 80.54 ± 4.15 (80.55) | 12.70 ± 3.48 (12.71) | 21.87 ± 5.35 (21.86) |
| SegFormer | 73.98 ± 12.84 (78.56) | 76.50 ± 20.42 (85.85) | 74.98 ± 16.59 (82.61) | 57.22 ± 26.57 (68.68) | 38.15 ± 8.43 (39.83) | 44.64 ± 16.05 (50.36) |
| LWGANet | 78.74 ± 12.35 (80.74) | 69.28 ± 16.14 (73.36) | 73.36 ± 13.58 (78.34) | 72.72 ± 9.39 (74.46) | 10.41 ± 2.43 (9.94) | 17.68 ± 3.29 (16.82) |
| Small object-oriented models | | | | | | |
| AFMA DeepLabV3 | 86.02 ± 11.42 (89.33) | 51.61 ± 15.19 (55.06) | 64.05 ± 14.92 (69.14) | 72.85 ± 8.87 (73.01) | 16.48 ± 5.08 (16.48) | 26.47 ± 6.49 (27.22) |
| AFMA U-Net++ | 88.72 ± 8.39 (91.51) | 47.56 ± 9.47 (51.54) | 62.06 ± 10.43 (66.12) | 63.37 ± 11.59 (64.22) | 33.23 ± 11.42 (31.19) | 40.04 ± 6.31 (39.29) |
| Ensembles, specified by the type of graph network in the left column | | | | | | |
| GCN | 73.89 ± 11.72 (76.72) | 74.50 ± 8.39 (75.79) | 73.84 ± 9.56 (76.92) | 74.22 ± 4.64 (72.93) | 78.14 ± 3.21 (79.28) | 76.09 ± 3.48 (75.55) |
| GAT | 33.68 ± 29.03 (34.44) | 54.17 ± 39.36 (65.43) | 68.08 ± 9.02 (71.54) | 72.41 ± 5.64 (71.62) | 78.48 ± 9.99 (80.03) | 74.87 ± 4.01 (76.58) |
| GSAN | 74.77 ± 16.32 (80.1) | 67.03 ± 8.18 (65.79) | 69.38 ± 13.55 (72.22) | 81.87 ± 2.21 (82.11) | 75.95 ± 4.97 (76.74) | 78.71 ± 2.36 (78.38) |
| GCN-QT | 68.58 ± 13.36 (74.12) | 74.78 ± 18.42 (82.91) | 75.27 ± 10.00 (78.72) | 78.01 ± 6.46 (78.92) | 79.66 ± 5.67 (80.98) | 78.64 ± 4.27 (77.98) |
| GAT-QT | 72.57 ± 18.00 (78.38) | 75.95 ± 20.98 (86.11) | 76.53 ± 10.86 (80.36) | 78.14 ± 4.42 (77.92) | 82.52 ± 4.21 (83.89) | 80.46 ± 3.78 (80.27) |
| QiGSAN | 73.44 ± 12.71 (77.90) | 77.84 ± 21.31 (87.55) | 78.60 ± 11.38 (82.87) | 78.27 ± 4.12 (78.72) | 84.79 ± 4.59 (86.29) | 81.61 ± 3.6 (81.76) |
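
The F1-score is the harmonic mean of precision and recall. If, as the ± notation suggests, each cell is an average over several runs, the F1 column need not equal the harmonic mean of the precision and recall columns, because the mean of per-run F1 values differs in general from the F1 of mean precision and recall. The sketch below shows both computations; the two-run example is hypothetical, while 84.79 and 78.27 are QiGSAN's mean SSDD precision and recall from the table.

```python
from typing import List


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (in the same units, e.g. %)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def mean(xs: List[float]) -> float:
    return sum(xs) / len(xs)


# F1 computed from QiGSAN's mean SSDD precision and recall.
print(f"{f1_score(84.79, 78.27):.2f}")

# Hypothetical runs (precision, recall): averaging per-run F1 scores
# gives a different number than the F1 of the averaged precision/recall.
runs = [(90.0, 50.0), (50.0, 90.0)]
mean_of_f1 = mean([f1_score(p, r) for p, r in runs])
f1_of_means = f1_score(mean([p for p, _ in runs]), mean([r for _, r in runs]))
print(f"{mean_of_f1:.2f} vs {f1_of_means:.2f}")
```

This is why, for example, the reported mean SSDD F1 of QiGSAN (81.61) is close to, but not identical with, the F1 of its mean precision and recall.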

| Encoder | Vanilla | GCN-QT | QiGSAN | GAT-QT | GCN | GSAN | GAT |
|---|---|---|---|---|---|---|---|
| ENet | 73.77 ± 9.59 (77.74) | 71.97 ± 9.01 (75.38) | 75.66 ± 14.6 (79.62) | 72.68 ± 6.79 (74.81) | 61.57 ± 17.39 (64.86) | 56.40 ± 5.1 (58.40) | 11.77 ± 10.29 (9.65) |
| DeepLabV3 | 60.45 ± 18.67 (69.97) | 67.23 ± 14.69 (75.32) | 70.03 ± 14.1 (76.97) | 44.18 ± 28.9 (51.15) | 66.28 ± 13.9 (74.44) | 50.91 ± 19.68 (63.00) | 31.46 ± 19.62 (38.41) |
| FCN | 59.87 ± 32.15 (74.13) | 72.77 ± 8.16 (76.02) | 74.94 ± 7.9 (78.47) | 72.64 ± 4.95 (74.45) | 50.65 ± 34.05 (65.58) | 58.15 ± 6.47 (60.09) | 23.65 ± 21.7 (22.49) |
| SegFormer | 74.98 ± 16.59 (82.61) | 71.48 ± 15.74 (78.82) | 75.31 ± 16.89 (82.59) | 71.67 ± 17.70 (78.75) | 65.51 ± 15.19 (66.83) | 41.06 ± 33.14 (44.86) | 51.75 ± 34.72 (66.55) |
| LWGANet | 73.36 ± 13.58 (78.34) | 75.27 ± 10.00 (78.72) | 78.60 ± 11.38 (82.87) | 76.53 ± 10.86 (80.36) | 73.84 ± 9.56 (76.92) | 69.38 ± 13.55 (72.22) | 68.08 ± 9.02 (71.54) |
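
In this per-encoder table, the vanilla column repeats the HRSID F1-scores of the main results table, so its cells appear to be HRSID F1-scores for each encoder paired with each graph network. The sketch below copies the vanilla and QiGSAN means for each encoder and computes the gain in F1 points. It uses means only; given standard deviations of up to 32 points, the gains are indicative rather than a significance test.

```python
# Mean HRSID F1-scores (%): (vanilla encoder, QiGSAN ensemble), copied from the table.
hrsid_f1 = {
    "ENet": (73.77, 75.66),
    "DeepLabV3": (60.45, 70.03),
    "FCN": (59.87, 74.94),
    "SegFormer": (74.98, 75.31),
    "LWGANet": (73.36, 78.60),
}

# Gain of the QiGSAN ensemble over the vanilla encoder, in F1 points.
gains = {enc: round(q - v, 2) for enc, (v, q) in hrsid_f1.items()}
best = max(gains, key=gains.get)

for enc, g in sorted(gains.items(), key=lambda kv: -kv[1]):
    print(f"{enc:10s} +{g:.2f}")
print("largest gain:", best)
```

The largest gains occur for the weakest vanilla encoders (FCN and DeepLabV3), while SegFormer, already the strongest vanilla model on HRSID, changes little.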

| Encoder | Vanilla | GCN-QT | QiGSAN | GAT-QT | GCN | GSAN | GAT |
|---|---|---|---|---|---|---|---|
| ENet | 33.04 ± 19.4 (29.28) | 72.63 ± 5.78 (74.56) | 75.54 ± 8.5 (80.07) | 76.09 ± 3.48 (75.55) | 78.71 ± 2.36 (78.38) | 74.87 ± 4.01 (76.58) | |
| DeepLabV3 | 58.35 ± 4.47 (57.81) | 68.20 ± 7.51 (70.23) | 67.81 ± 3.24 (68.51) | 65.84 ± 7.25 (65.73) | 50.92 ± 34.4 (65.25) | 52.72 ± 5.98 (55.80) | |
| FCN | 21.87 ± 5.35 (21.86) | 78.64 ± 4.27 (77.98) | 72.71 ± 10.8 (76.82) | 74.61 ± 5.62 (74.56) | 74.96 ± 3.92 (73.86) | 71.98 ± 7.63 (73.83) | |
| SegFormer | 44.64 ± 16.05 (50.36) | 55.89 ± 19.80 (63.64) | 58.33 ± 20.75 (65.43) | 50.73 ± 20.4 (56.58) | 59.37 ± 13.14 (60.93) | 54.35 ± 15.10 (59.02) | |
| LWGANet | 17.68 ± 3.29 (16.82) | 65.59 ± 21.89 (74.16) | 80.46 ± 3.78 (80.27) | 73.19 ± 1.44 (73.16) | 69.93 ± 17.53 (78.08) | 72.11 ± 8.99 (75.58) | |

| Encoder | Vanilla | GCN-QT | QiGSAN | GAT-QT | GCN | GSAN | GAT |
|---|---|---|---|---|---|---|---|
| ENet | 25.08 ± 3.83 (25.98) | 75.05 ± 3.08 (75.05) | 77.14 ± 0.41 (77.94) | 73.32 ± 0.88 (73.32) | 78.90 ± 4.53 (78.92) | 71.02 ± 4.3 (73.12) | |
| DeepLabV3 | 51.32 ± 5.80 (50.59) | 63.26 ± 7.54 (63.09) | 66.11 ± 3.75 (63.74) | 62.47 ± 4.80 (60.51) | 55.82 ± 19.73 (62.84) | 50.32 ± 12.56 (51.52) | |
| FCN | 25.69 ± 13.06 (25.37) | 69.78 ± 5.68 (69.38) | 68.24 ± 9.13 (68.14) | 71.06 ± 2.09 (71.16) | 67.35 ± 10.53 (67.75) | 70.41 ± 2.51 (70.85) | |
| SegFormer | 45.32 ± 5.12 (44.47) | 67.19 ± 2.49 (68.09) | 72.95 ± 1.17 (72.75) | 67.28 ± 0.81 (65.18) | 65.04 ± 1.94 (63.74) | 66.2 ± 0.28 (65.9) | |
| LWGANet | 20.34 ± 8.82 (20.34) | 80.41 ± 1.47 (81.91) | 82.83 ± 0.78 (82.22) | 79.61 ± 1.97 (78.06) | 80.01 ± 2.63 (79.11) | 78.16 ± 3.39 (76.55) | |

| Model | ENet | DeepLabV3 | FCN | LWGANet | SegFormer | GCN | GAT | GSAN | QiGCN | QiGAT | QiGSAN |
|---|---|---|---|---|---|---|---|---|---|---|---|
| GFLOPs | 46.6 | 157.74 | 2360 | 196 | 88.7 | 168.16 | 168.08 | 168.08 | 174.84 | 174.72 | 174.76 |
| Params (thousands) | 358.7 | 11029 | 35322 | 12583 | 3755 | 49.8 | 48.5 | 48.5 | 370.3 | 370.1 | 370.1 |
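
The complexity table allows a rough estimate of what quadtree informing costs on top of the plain self-attention ensemble. The sketch below compares GSAN with QiGSAN using the table values. It assumes the Params row is in thousands (ENet's roughly 0.36 M parameters would read as 358.7) and that both columns are measured under the same input size, which the table does not state explicitly.

```python
# GFLOPs and parameter counts copied from the complexity table.
gflops = {"GSAN": 168.08, "QiGSAN": 174.76}
params_k = {"GSAN": 48.5, "QiGSAN": 370.1}  # assumed to be in thousands

extra_gflops = gflops["QiGSAN"] - gflops["GSAN"]
rel_gflops = extra_gflops / gflops["GSAN"]
param_ratio = params_k["QiGSAN"] / params_k["GSAN"]

print(f"+{extra_gflops:.2f} GFLOPs ({rel_gflops:.1%}), {param_ratio:.1f}x parameters")
```

On these numbers, quadtree informing adds about 4% to the compute of the graph ensemble, while its parameter count grows roughly 7.6-fold but remains far below that of any of the base encoders.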

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gorshenin, A.; Dostovalova, A. QiGSAN: A Novel Probability-Informed Approach for Small Object Segmentation in the Case of Limited Image Datasets. Big Data Cogn. Comput. 2025, 9, 239. https://doi.org/10.3390/bdcc9090239
