1. Introduction

It has long been known that the quality, frequency and consistency of training have a direct impact on the perceived preparedness of first responders. According to Xie et al. [1], traditional training methods include classroom instruction, web-based training using presentation materials and realistic exercises. Hsu et al. [2] show that although these forms are widely used and effective for acquiring knowledge, they face significant limitations, particularly in terms of time demands, financial costs and safety risks. The authors also emphasize that during large-scale crisis events, the effectiveness of the response depends not only on acquired skills but also on the psychological state of responders and their prior experience.

As an innovative alternative, technologies based on virtual reality (VR) have gained prominence in recent years. In their study, Kman et al. [3] state that the development of an effective, sustainable and adaptable VR training and assessment system will contribute to preparing first responders for the effective management of skills related to triage and mass casualty incident (MCI) site management, ultimately leading to lives saved. According to Lindner et al. [4], VR-based simulation training with automated feedback can offer long-term learning benefits compared to traditional emergency medicine seminars. Halford et al. [5], in a study on the acceptability of VR training, found that participants expressed a preference for VR-based training over classroom instruction. Due to its ability to simulate realistic and interactive scenarios that are otherwise difficult or dangerous to conduct, such as armed assailant interventions [6], large-scale fires [1] or mass casualty incidents [3], VR offers new opportunities for improving professional training, decision making processes and tactical procedures of first responders. Articles [7] and [8] further state that VR is considered a safe and effective tool for training in high-risk scenarios. In addition to tactical interventions in crisis situations, VR also enables systematic crime scene investigation (SI) training, whether in the form of police forensic examination or fire cause analysis. Evidence collection, marking, documentation and evaluation place high demands on precision, expertise and adherence to established forensic procedures, as noted by LuminousXR [9]. Furthermore, Alketbi [10] points out that even seemingly minor errors, such as contamination of a crime scene or neglecting key evidence, can result in the invalidation of evidence and jeopardize entire criminal proceedings. Document [11] then describes that corresponding VR applications allow practicing the documentation, collection and marking of evidence.

Research on the use of VR technologies and applications for first responder training represents an interdisciplinary field, linking education (new didactic approaches), technology (development of VR platforms, interactive tools and wearable systems) and security applications (police and firefighter training). This study therefore focuses on the use of VR in the security and educational context, with an emphasis on monitoring biomedical data, which can serve as objective indicators for assessing the effectiveness of training. It addresses two main research questions: whether exposure to VR training scenarios induces measurable physiological changes in heart rate and heart rate variability, and whether these responses differ between police and firefighter scenarios. The aim is to demonstrate that combining immersive VR environments with biomedical data monitoring allows for an objective assessment of participants’ physiological and cognitive load. The article is structured as follows: Chapter 2 summarizes the current state of VR applications in training and biomedical data monitoring, Chapter 3 describes the methodology of the pilot study, Chapter 4 presents the results of physiological and performance measurements, Chapter 5 discusses the main findings and their limitations in relation to the literature, and Chapter 6 provides conclusions and recommendations for future research.

2. Current State

Most existing VR applications intended for first responders focus primarily on tactical and crisis scenarios. A concrete example is the study by Xie et al. [1], which describes the development of VR technologies for various training applications, particularly in police and firefighting interventions. The study by Kman et al. [3] subsequently describes VR applications for managing mass casualty incidents. Another study by Zechner et al. [6] focuses on VR applications for improving operational police training, especially in situations such as active shooter incidents. In these types of events, the effectiveness of the intervention and its resolution depend on whether first responders can remain calm and perform their duties according to prescribed procedures. However, in both police and firefighting practice, a significant part of the work also involves systematic and methodical crime scene investigation, whether from the perspective of police criminal investigations (e.g., in cases of homicide, burglary, or violent crimes) or fire cause investigations conducted by firefighters (e.g., suspected arson, technical malfunction, or negligence). These activities are less dynamic than crisis interventions but are crucial for subsequent criminal or administrative proceedings.

VR scenarios for crime scene investigation differ significantly from tactical and crisis simulations in several key aspects. RealityCapture Training in cooperation with the Bavarian LKA [12] emphasizes the need for detail and accuracy through the use of authentic 3D models of real crime scenes. The RiVR Investigate platform [13] provides a wide range of interactive tools and aids that support documentation and evidence collection. The Envision XR system [14] and the DHS report [11] highlight the importance of feedback and evaluation of methodological accuracy. Zbrog and Steele [15] emphasize the repeatability and variability of scenarios, which enable participants to repeatedly train under changing conditions.

In response to the growing demand for effective, safe, and standardized training in crime scene investigation, the use of VR as a tool for realistic simulation of various investigative scenarios has been increasingly promoted in recent years. The following examples illustrate how VR can be used to practice standardized procedures in documenting, collecting, and evaluating evidence at crime scenes. In forensic investigations, VR scenarios focus on simulating violent crimes, burglaries, or traffic accidents. Users can freely move around in an immersive environment, take photo documentation, mark and collect evidence, using tools such as ultraviolet lights, measuring tapes, or markers. A key element of these scenarios is their non-linear structure, which develops critical thinking, decision making, and adaptability to changing conditions. A specific area is the use of VR in firefighter and fire investigation training, where the emphasis is placed primarily on reconstructing fire sites. Advanced functions such as 3D scanning of real scenes are used. VR environments thus faithfully reproduce burn traces, types of equipment damage, and different sources of fire origin. Users can move freely in VR, collect evidence using interactive tools (e.g., gas detectors, photo documentation), mark discovered traces, and the entire process is recorded for later instructor analysis.

Several commercial and academic solutions offer training platforms for teaching crime scene investigation. An example is the VR application developed by Envision Innovative Solutions, which created the modular Envision XR Forensic Crime-Scene Simulator. According to the DHS report [11], this system includes 10 different immersive environments ranging from rural locations to urban areas, both during the day and at night. Users can freely move within the VR scene, photograph the crime scene, collect evidence, and store it in the correct containers. The designed system also supports visual and audio recording and playback of training sessions using the so-called “see-what-I-see” method, which allows instructors to review the complete record, analyze the sequence of steps, training duration, and the amount of evidence collected. Luminous XR, according to the information available on its official website [9], offers a flexible educational platform called Crime Scene Forensic Training Simulation. Scenarios are not pre-programmed but are data-driven, which allows variability such as changing the type and location of evidence and adapting the scene to individual needs. Another example is the VR platform developed by RealityCapture Training, which, according to information on its official website [12], in cooperation with the Bavarian State Criminal Police Office (Bayerisches LKA), created the innovative training system Investigations in Virtual Reality. Training is conducted within a specialized three-day course, “3D Crime Scene & Accident Reconstruction with RealityCapture,” aimed at teaching forensic experts how to acquire evidence from crime scenes using photogrammetry, LiDAR technology, and aerial imaging. VR scenarios are based on authentic 3D models of real crime scenes, including fire and traffic accident sites, recorded in controlled conditions in cooperation with LKA. The scenarios allow for role division among investigators, experts, or observers, and also offer advanced tools for retrospective analysis, including motion tracking, eye-tracking, action timelines, or visualization of individual investigative steps. The system is built on the Unreal Engine platform with an open architecture that supports flexible deployment on various VR devices (e.g., HTC Vive, HP Reverb G2). A commercial example is the RiVR Investigate training system developed by Reality in Virtual Reality, which is described in detail on the manufacturer’s official website [13]. The system includes the VR Monitor module, which allows recording of the entire investigative sequence, its replay, and instructor analysis of each step, including metrics such as timeline, decision accuracy, and methodological compliance. This training system is used, for example, by British firefighters and police, and according to the information in a product case study [16], it meets the current IAAI certification standards for fire investigator training. Another example is the university VR platform for forensic training of students, developed at Wilfrid Laurier University [17], in which users independently decide on the course of the investigation, from entering the crime scene through documentation and evidence collection to final analysis. An interesting feature is the possibility of linking to a physical forensic laboratory, where evidence found in a VR scenario can be exported and further analyzed as part of a follow-up exercise. Through an integrated system, the results are then evaluated and visualized in performance dashboards, which enable instructors to objectively assess performance, identify errors, and provide more targeted feedback.

With the growing use of VR technologies and training platforms, there are also increasing demands for objective evaluation of their contribution to improving performance in real-life situations. As noted in the IEEE Public Safety report [8], systematic measurement and evaluation of VR training effectiveness is a key aspect of implementing immersive technologies into professional preparation, as it allows verification of the actual impact on first responders’ performance and preparedness. Effectiveness is commonly assessed using quantitative and qualitative methods, which include direct measurement of participants’ performance (e.g., reaction times, decision accuracy, compliance with procedures, standardized questionnaires), physiological measurement in VR, as well as subjective perception, confidence, or transferability of acquired skills and experiences. According to the review study by Xie et al. [1], quantitative evaluation most often includes pre- and post-tests, while the study by Kman et al. [3] mentions performance metrics. In the study by Naismith et al. [18], questionnaires play a significant role, allowing the comparison of baseline and achieved participant levels after completing VR scenarios. A major advantage of VR is the ability to automatically record detailed performance data, for example, through integrated performance dashboards, which according to Zbrog and Steele [15] enable instructors to objectively evaluate performance, identify errors, and provide targeted feedback. These systems log, for example, reaction times, task accuracy, number of errors or correct decisions, and other indicators. The study by Kman et al. [3] shows that in triage training, evaluation typically focuses on the accuracy of patient prioritization, sorting speed, and the quality of patient information handover. A similar approach can be applied in police simulations, where according to RealityCapture Training [12], it is possible to evaluate how correctly and quickly responders identified the threat, whether they followed prescribed procedures, etc.

In the context of subjective performance evaluation through questionnaires, Aguilar Reyes et al. [19] report that it is possible to assess factors such as user experience (UX), adverse effects, usability using the System Usability Scale (SUS), cognitive load using the NASA Task Load Index (NASA-TLX), or technology acceptance using the Technology Acceptance Model (TAM). In the study by Halford et al. [5], the authors conducted a questionnaire survey based on TAM to evaluate the benefits of a VR fire scene simulation for forensic training purposes. In another study, Way et al. [20] used questionnaires and two open-ended questions to evaluate a VR mass casualty simulation from the perspective of healthcare workers. The results enabled researchers to comprehensively assess the effectiveness of VR training in terms of benefits, usability, and suggestions for improvement. For the evaluation of specific skills, specialized scales are also used. An example is the DePICT™ scale, designed for police officers to assess de-escalation skills, which was applied by Muñoz et al. [21] to objectively evaluate performance in a VR crisis intervention scenario.

For objective performance evaluation, physiological measurements can also be used, aimed at capturing the body’s biological responses during training. Common approaches include monitoring heart rate (HR) and, specifically, heart rate variability (HRV). In the study by Tovar et al. [22], HRV was used to monitor sympathetic nervous system (SNS) responses in medical trainees and physicians during a VR mass casualty simulation. Other physiological parameters include blood pressure, electrodermal activity (EDA), stress hormone levels, or electroencephalography (EEG). Alexander [23] describes the use of EEG measurement in combination with VR to assess police decision making processes, while Rosa et al. [24] state that in long-duration simulated VR missions, it is possible to monitor not only cognitive performance and fatigue but also participants’ physiological strain. In recent years, only a few studies have focused directly on training and simultaneously monitoring biomedical data in VR. For example, Martaindale et al. [25] combined a VR shooting simulation with EEG recordings to compare police officers’ neural responses when making “shoot/don’t shoot” decisions depending on their training level. Another study by Groer et al. [26] examined police officers’ physiological responses to stress in VR by measuring cortisol levels. The results of this study suggested that VR can induce stress and immune responses. Linssen et al. [27] subsequently monitored physiological stress in soldiers during dynamic VR scenarios using accelerometry and HR measurements. The study by Tovar et al. [22] broadly assessed whether VR simulations can be considered an adequate substitute for in-person training of doctors and paramedics in mass casualty incidents, based on monitoring changes in sympathetic nervous system activity (SNS) via HRV. In the study by Brunyé and Giles [28], the Decision Making under Uncertainty and Stress (DeMUS) methodology was introduced, designed to induce and measure stress responses in VR through physiological indicators (HR, pupil diameter) and biochemical markers (salivary alpha-amylase, cortisol). The ESC/NASPE Task Force [29] defines the standards for HRV measurement and interpretation, while the study by Solhjoo et al. [30] demonstrated that HR and HRV correlate with clinical reasoning and self-reported cognitive load. In the study by Slater et al. [31], HR measurement was used to confirm the illusion of body ownership in VR, showing a slowing of the heart rate in response to a threat to the virtual body. These results demonstrate that VR can elicit real stress and emotional responses. The study by Berntson et al. [32] further states that key psychophysiological indicators of the cardiovascular system include HR and blood pressure, with HRV playing an important role as a sensitive indicator of autonomic regulation and stress load. In the study by Ma and Hommel [33], electrodermal activity (EDA) was measured to verify whether participants physiologically responded to a threat directed at a virtual hand. Brouwer et al. [34] used a VR bomb attack simulation combined with EEG, HRV, and cortisol measurement to objectively capture stress responses. The study confirmed that VR can reliably induce stress and that these physiological indicators can be used to assess participants’ load.

Physiological measurements can be used not only to assess stress load but also to evaluate cognitive load, which can directly affect the ability to learn, make decisions, and manage demanding situations. Its assessment provides valuable feedback on the appropriateness and difficulty of VR scenarios. Mugford et al. [35] describe training strategies based on cognitive load theory for optimizing police training. Allen et al. [36] showed that increased cognitive load impairs the accuracy of behavioral decision making. Pušica et al. [37] demonstrated that the use of EEG and deep learning methods makes it possible to classify levels of cognitive load. Objective monitoring of physiological manifestations can therefore serve not only to detect acute overload, stress, and cognitive load but also as a tool for evaluating individual readiness.

3. Materials and Methods

Based on the findings from the review of the current state of research, a method for evaluating the effectiveness of VR training was proposed and subsequently tested in a pilot measurement. Two types of training scenarios focused on crime scene investigation were compared, both designed to simulate realistic situations requiring a systematic approach, attention to detail, and adherence to standardized procedures. The effectiveness of the training was assessed through participants’ physiological responses using HRV parameters, as well as through the evaluation of cognitive load during the resolution of individual scenarios. In addition, performance metrics were included, specifically the number of pieces of evidence found, the accuracy of scenario identification, and the duration of VR exposure. This chapter provides a complete description of the methodology of the pilot measurement, including participant characteristics, a description of the VR scenarios, the technology used for recording physiological parameters, the selected physiological indicators, the data processing procedure, and the applied statistical analyses.

Measurement procedures

The pilot measurement was conducted on 10 participants. The basic characteristics of the participant sample included an age range of 21–28 years, height of 160–181 cm, and weight of 53–85 kg. To eliminate the influence of unfamiliarity with VR controls, an introductory session was conducted first. This session took place a few days before the start of the so-called “main measurement.” During the familiarization session, participants completed a tutorial in which they learned basic movement within the environment, object manipulation, methods of evidence documentation, etc. This introductory session ensured a uniform level of skills and orientation in the simulated environment for all participants. Subsequently, each participant completed two separate main measurements, conducted approximately one week apart. The first measurement focused on police scenarios, while the second measurement focused on firefighter scenarios. Both types of scenarios were designed to realistically simulate the process of crime scene investigation within the respective professional domain. Each measurement followed an identical structure, consisting of three blocks. Each block represented a different scenario variant. In each block, the participant first underwent a so-called “baseline” (BL) measurement, which served to determine initial physiological values. This measurement was important for establishing reference values, which were then used as a comparison framework against the values obtained during the VR measurement. The duration of the BL measurement was set to 10 min. After the BL measurement, the VR session followed, with a maximum duration of 10 min. This time was based on previous pilot testing of individual scenarios and was considered sufficient to elicit relevant physiological responses without excessive strain. 
Between individual blocks, there was always at least a one-hour break, which was deemed sufficient to ensure rest and the return of physiological parameters to BL levels. This measure prevented potential results from being influenced by cumulative load or fatigue from the previous block. The measurement also included the monitoring of performance indicators (i.e., duration, number of documented pieces of evidence, determination of cause of death) of participants within each scenario. These indicators served as basic metrics for evaluating task completion success and allowed comparison of cognitive performance between individual participants and scenarios. All measurements were carried out in designated laboratory spaces that provided sufficient room for free movement.

Description of the Virtual Reality Scenarios

Both training scenarios (police and firefighter) were designed according to the same principle. Each scenario was divided into three blocks (Block 1–3), which represented different variants of the incident. The structure of the environment remained consistent across blocks, with only the cause of the incident and the type or distribution of forensic evidence changing. The task of the participants in each block was to systematically examine the scene, document all available evidence using an interactive tool (a virtual camera or a smartwatch-like device), and finally determine the cause of the incident. The instructor subsequently recorded not only the correctness of the selected conclusion but also the number of documented pieces of evidence, the time spent in VR, and the approach used to evaluate individual causes. The results were deliberately not disclosed to the participants after completing each block in order to minimize cognitive bias in subsequent scenarios. This ensured that participants were not influenced by their previous success or failure, and their decision making in the following blocks remained independent. At the same time, this approach allowed for an objective comparison of performance across blocks without the confounding effect of learning or strategy adjustment based on prior feedback.

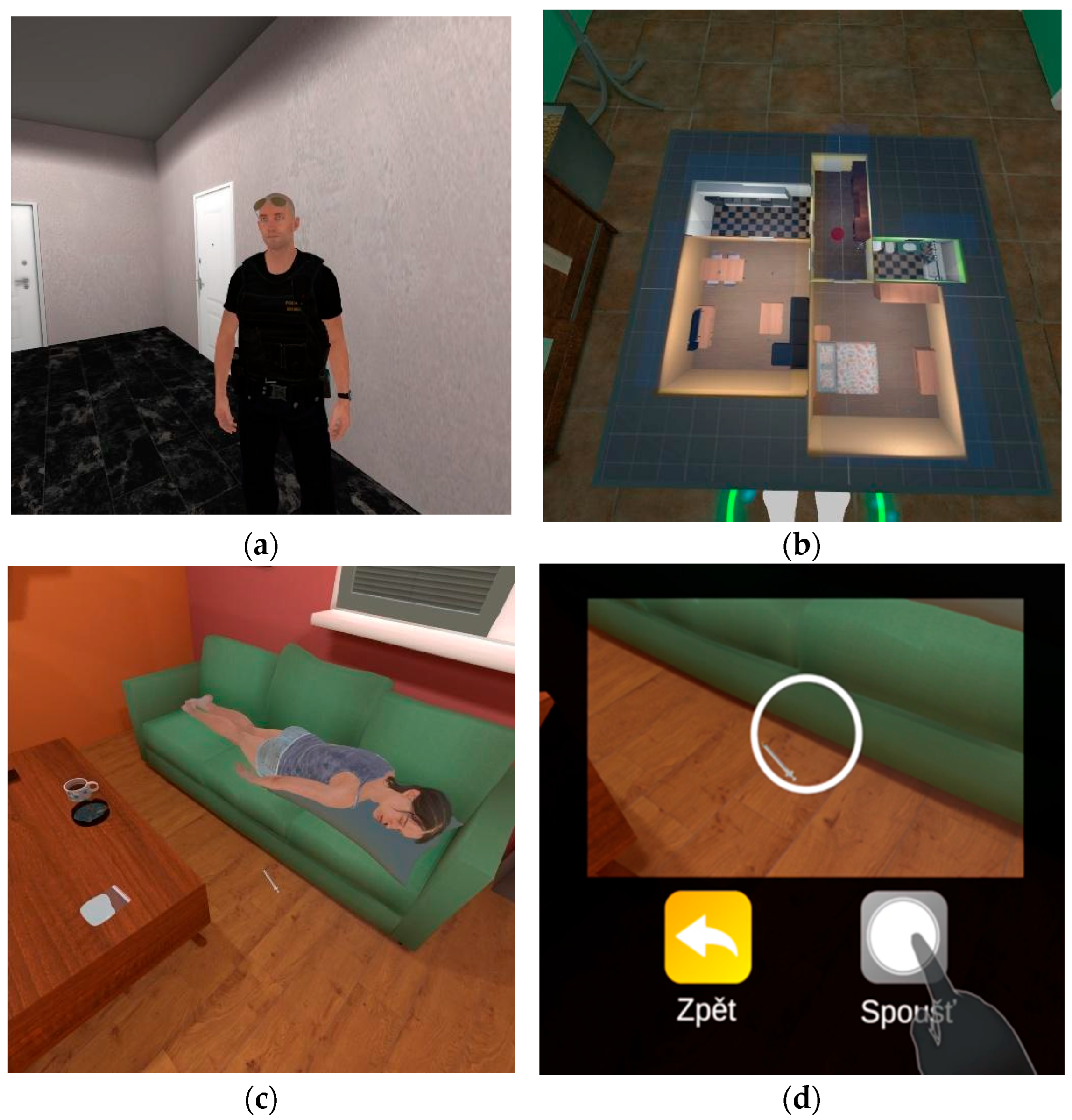

The police scenario involved three causes of death: Block 1: sudden natural death; Block 2: suicide; Block 3: homicide. The key interactive element was the documentation of evidence (e.g., the victim’s body, blood traces, weapons, medications, or letters) using a smartwatch with an integrated camera. An illustration of the VR scene is shown in Figure 1, which demonstrates, based on screenshots from the application, the main elements of user interaction, i.e., the investigator entering the crime scene, the use of an interactive map for navigation, the discovery of the victim, and the documentation of forensic evidence.

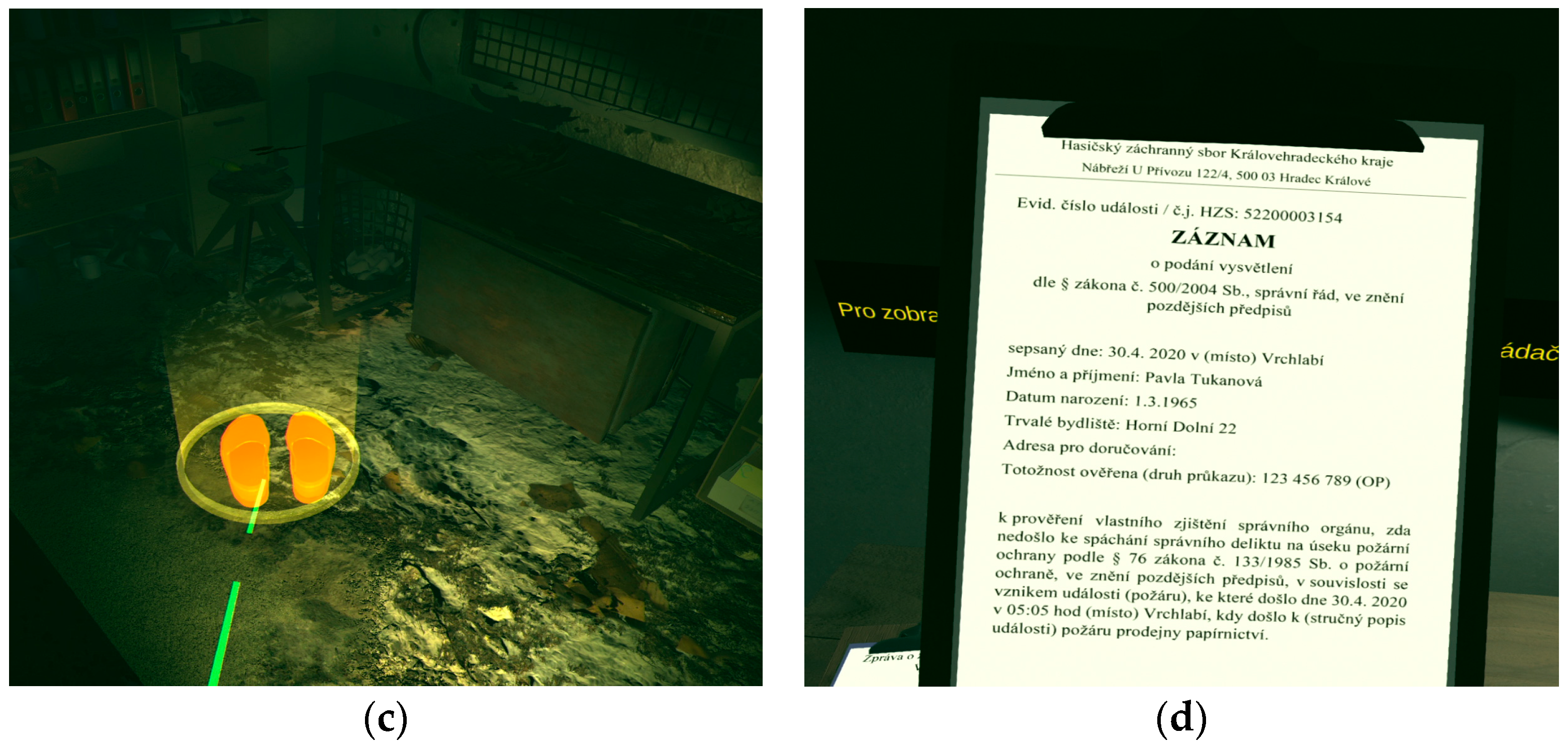

The firefighter scenario simulated the investigation of a fire in a real commercial stationery store. The blocks differed in the cause of the fire: Block 1: technical fault (short circuit in an electrical socket); Block 2: human negligence (a cigarette butt left in an ashtray); Block 3: proximity of flammable textile to a heating unit. The key interactive element was the documentation of evidence (e.g., burn marks, charred electrical appliances, or remnants of the heating unit) using a virtual camera. An illustration of the VR scene is shown in Figure 2, where screenshots from the application present the participants’ key tasks, i.e., working with the camera, collecting evidence, navigating the environment, and accessing the report from the responding firefighters.

VR Technology

Virtual reality in the study was implemented using two types of devices. For the police scenarios, the Meta Quest 2 VR headset (scenario developer and VR system assembly: XR Institute s.r.o., Pilsen, Czech Republic) was used, which operates as a standalone device and does not require connection to an external computer. Interaction with the virtual environment was carried out using hand tracking technology, which detects and recognizes hand movements without the need for controllers. The headset has integrated cameras and sensors for gesture detection and spatial manipulation, enabling natural control (e.g., when documenting evidence or moving between rooms).

For the firefighter scenarios, the more advanced Meta Quest 3 headset (scenario developer and VR system assembly: CPBS Institute z.s., Prague, Czech Republic) was used in combination with standard handheld controllers and an external computer.

Technology for Physiological Measurement

For monitoring physiological parameters, in both cases a Movesense Flash wireless sensor (Movesense Ltd., Vantaa, Finland) was used to record ECG data. The sensor was placed on an elastic chest strap secured around the participant’s chest. Throughout the entire duration of the pilot measurement, ECG signals were continuously recorded. The collected ECG data served as the basis for calculating HRV parameters, which were then used to evaluate the level of cognitive load during interaction with the individual scenarios.

Selected Physiological Indicators

HRV analysis is a commonly used tool for assessing the activity of the autonomic nervous system (ANS) under mental and physical load. For the purposes of this study, three basic indicators were selected—HR and HRV parameters in the time domain, specifically the standard deviation of NN intervals (SDNN) and the root mean square of successive differences (RMSSD). These indicators were chosen for their simplicity and ease of interpretation. Their applicability has also been demonstrated in studies on VR training described in the state-of-the-art section of this paper.

The following description of the selected indicators is based on the methodological standards of HRV analysis outlined in [38,39,40,41]. HR is the most fundamental indicator of cardiac activity. Under increased load, the sympathetic branch of the ANS is activated, which is typically manifested by an increase in HR. In the context of VR exposure, measuring HR therefore makes it possible to track the immediate physiological response of participants to simulated stressful situations. The SDNN parameter reflects the overall variability of heart rate, as it incorporates contributions from both sympathetic and parasympathetic activity. It is defined as the standard deviation of NN intervals, with abnormal beats (e.g., ectopic beats) removed from the analysis. Higher and more irregular HRV results in higher SDNN values, which can thus serve as an indicator of physiological resilience to stress. During higher cognitive load, SDNN tends to decrease, whereas during lower load or relaxation, it may increase. RMSSD reflects short-term changes in heart rate variability between successive beats and is considered the main time-domain indicator reflecting vagally mediated parasympathetic activity. Due to its nature, RMSSD is highly sensitive to changes associated with stress and cognitive load, where under higher load, vagal activity is suppressed, leading to shorter NN intervals and a decrease in RMSSD. Conversely, during lower load or relaxation, RMSSD increases, indicating enhanced parasympathetic activity and vagal tone. For both of these indicators, a minimum recording length of 5 min is recommended.

Since some recordings in this study were shorter than 5 min, logarithmically transformed indicators lnSDNN and lnRMSSD were used for the analysis. These transformed parameters are considered reliable even for short-term and ultra-short-term recordings and were therefore selected to ensure consistency of analysis across all measured blocks. This approach has specifically been applied in the studies of Tanoue et al. [42] and Hung et al. [43].
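
The indicators described above can be illustrated with a minimal sketch that computes HR, SDNN, RMSSD and their natural-log transforms from one segment of NN intervals. The function name `hrv_time_domain`, the millisecond input unit, and the use of the sample standard deviation are assumptions made for illustration; they are not taken from the study's own script.

```python
import math
import statistics


def hrv_time_domain(nn_ms):
    """Compute HR, SDNN, RMSSD and log-transformed values for one segment.

    nn_ms: list of NN intervals in milliseconds (abnormal beats already removed).
    """
    if len(nn_ms) < 3:
        raise ValueError("need at least three NN intervals")

    mean_nn = statistics.fmean(nn_ms)
    hr_bpm = 60000.0 / mean_nn                    # mean heart rate in beats per minute
    sdnn = statistics.stdev(nn_ms)                # sample standard deviation of NN intervals

    # RMSSD: root mean square of successive NN differences
    diffs = [b - a for a, b in zip(nn_ms, nn_ms[1:])]
    rmssd = math.sqrt(statistics.fmean([d * d for d in diffs]))

    return {
        "hr": hr_bpm,
        "sdnn": sdnn,
        "rmssd": rmssd,
        "ln_sdnn": math.log(sdnn),                # natural-log transform (undefined if sdnn == 0)
        "ln_rmssd": math.log(rmssd),
    }


example = hrv_time_domain([800, 810, 790, 805, 795])
```

A perfectly constant segment (SDNN or RMSSD equal to zero) has no defined logarithm, so such segments would need to be excluded or handled separately.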

Data Processing

Heart activity data and HRV parameters were obtained using the Movesense Flash wireless sensor, which continuously recorded the ECG signal throughout the entire duration of the experiment. The collected data were analyzed using a script implemented in the Python 3.10 programming language. The recordings were organized into a directory structure reflecting participant IDs, scenario types, and measurement phases. Based on time stamps, it was possible to precisely reconstruct the course of each block. For the purposes of analysis, the signals were divided into overlapping time segments of 30 s with a 15 s overlap. This approach made it possible to capture the dynamics of changes in heart activity with higher temporal resolution. Each segment was then subjected to preprocessing, which included the removal of artifacts caused, for example, by movement. For this purpose, a fifth-order Butterworth high-pass filter with a cutoff frequency of 0.5 Hz was applied to suppress slow baseline drifts typical for ECG signals recorded during motion. The next step of the analysis was the detection of R-waves using an algorithm based on stationary wavelet transform (SWT) instead of a conventional bandpass filter. SWT is a method of decomposing a signal into uniform frequency bands using a mother wavelet and is used to remove noise and enhance QRS complexes. In the applied algorithm, a third-order Daubechies wavelet was used for SWT. The same ECG signal processing procedure was successfully applied in a previous study [44] focusing on measuring physiological load during various types of physical activities. Subsequently, standard HRV parameters were calculated. Each segment was then classified according to the phase of the experiment in which it was recorded, i.e., as baseline (resting phase prior to measurement) or VR exposure (active block).
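
The segmentation and phase labeling described above could look roughly like the following sketch. It covers only the windowing step (30 s windows, 15 s step) and the baseline/VR labeling; filtering and R-wave detection are not shown. The function names, the single `vr_start_s` boundary, and the choice to drop windows that straddle the boundary are illustrative assumptions rather than details of the study's script.

```python
def sliding_windows(duration_s, window_s=30.0, step_s=15.0):
    """Return (start, end) times in seconds of windows that fit fully in the recording."""
    windows = []
    start = 0.0
    while start + window_s <= duration_s:
        windows.append((start, start + window_s))
        start += step_s                          # 15 s step gives a 15 s overlap
    return windows


def label_phase(window, vr_start_s):
    """Label a window as baseline ('BL') or VR exposure ('VR').

    Windows that straddle the phase boundary return None (assumed to be discarded).
    """
    start, end = window
    if end <= vr_start_s:
        return "BL"
    if start >= vr_start_s:
        return "VR"
    return None


# Hypothetical 60 s recording in which the VR phase starts at 30 s
labels = [label_phase(w, vr_start_s=30.0) for w in sliding_windows(60.0)]
```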

In addition to classical HRV analysis, an alternative approach was tested in parallel, based on cognitive load classification using a deep learning model. After the measurements were completed, the ECG recordings were downloaded and further processed in Python using the Keras library for the implementation of a machine learning model. For this purpose, a neural network classifier trained on a reference dataset containing ECG recordings of individuals exposed to typical forms of cognitive load (e.g., solving mathematical and logical tasks, public speaking, etc.) was used. The output was a probabilistic classification of cognitive load intensity. The recordings were analyzed in 30 s time windows. The applied model achieved 93% accuracy, 98% sensitivity, and 84% specificity.
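
For readers unfamiliar with the reported metrics, the sketch below shows how accuracy, sensitivity, and specificity are derived from a confusion matrix. It assumes a binary simplification (load versus no load), and the counts are hypothetical; they are not the evaluation data behind the figures reported above.

```python
def binary_metrics(tp, fp, tn, fn):
    """Accuracy, sensitivity and specificity from confusion-matrix counts."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,       # share of all windows classified correctly
        "sensitivity": tp / (tp + fn),       # share of true load windows detected
        "specificity": tn / (tn + fp),       # share of true no-load windows detected
    }


# Hypothetical counts for illustration only
metrics = binary_metrics(tp=8, fp=1, tn=9, fn=2)
```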

Statistical Analysis

Descriptive data obtained from ECG recordings (parameters HR, lnSDNN, lnRMSSD) were processed in overlapping segments of 30 s with a 15 s shift within each scenario (police, firefighter), block (Block 1–3), and phase (baseline [BL], VR exposure [VR]). Thus, each block and phase produced several dozen values for each participant. The aggregated results across the entire sample (n = 10) are presented as medians, first quartiles (Q1), and third quartiles (Q3). The tables in the Results section therefore represent the distribution of values for the given parameter across all analyzed segments in each condition.
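The aggregation into medians and quartiles per condition could look roughly as follows. This is a sketch under stated assumptions: the record layout `(scenario, block, phase, value)` and the function names are hypothetical, and the inclusive quartile convention is one of several in common use; the text does not specify which one was applied.

```python
from collections import defaultdict
from statistics import quantiles


def summarize(values):
    """Return Q1, median and Q3 of a list of segment values."""
    # Assumption: inclusive quartile method; other conventions give slightly different Q1/Q3
    q1, med, q3 = quantiles(values, n=4, method="inclusive")
    return {"Q1": q1, "median": med, "Q3": q3}


def summarize_by_condition(records):
    """Group (scenario, block, phase, value) records and summarize each group."""
    groups = defaultdict(list)
    for scenario, block, phase, value in records:
        groups[(scenario, block, phase)].append(value)
    return {key: summarize(vals) for key, vals in groups.items()}


# Illustrative values only, not data from the study
print(summarize([5, 1, 4, 2, 3]))
```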

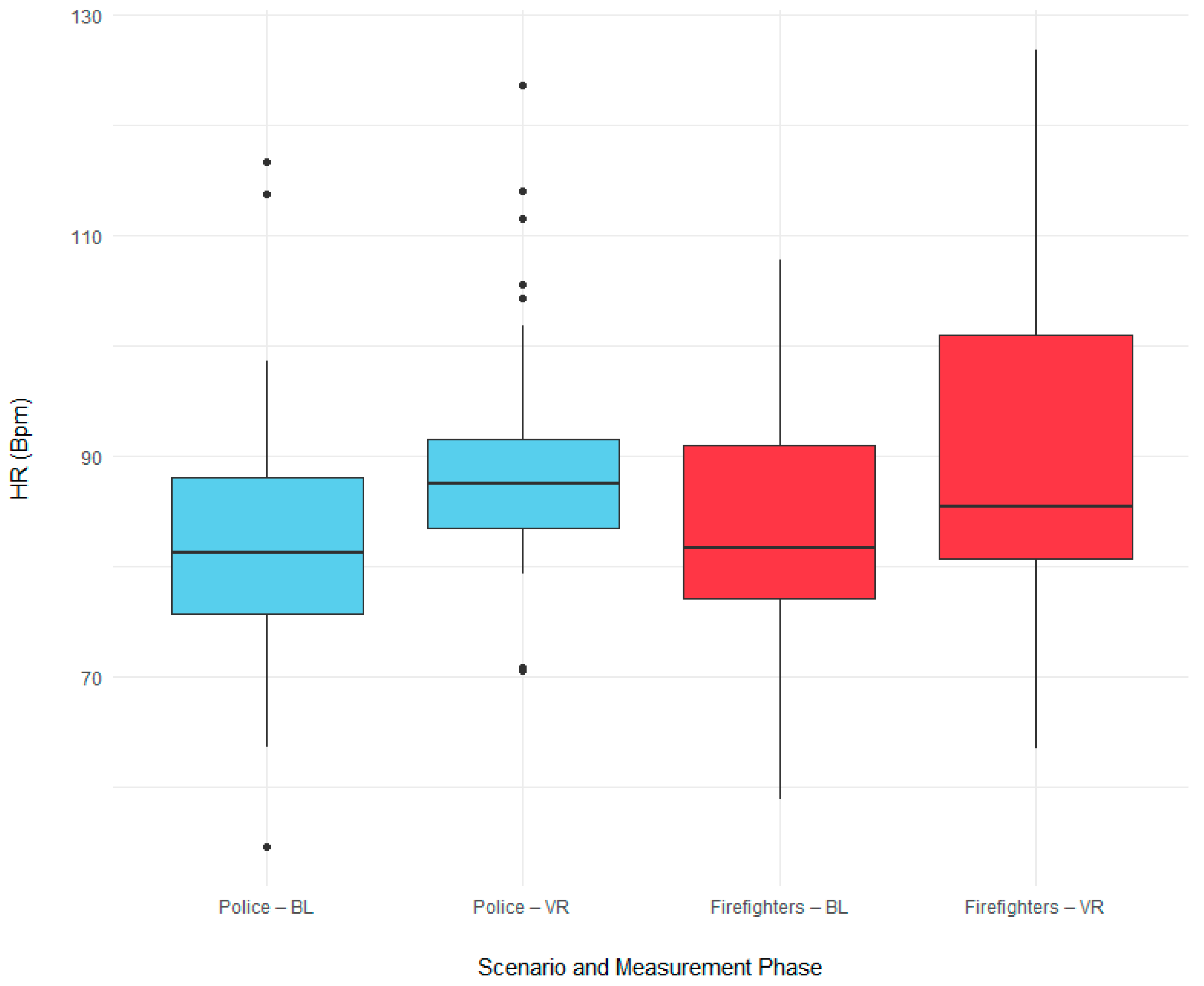

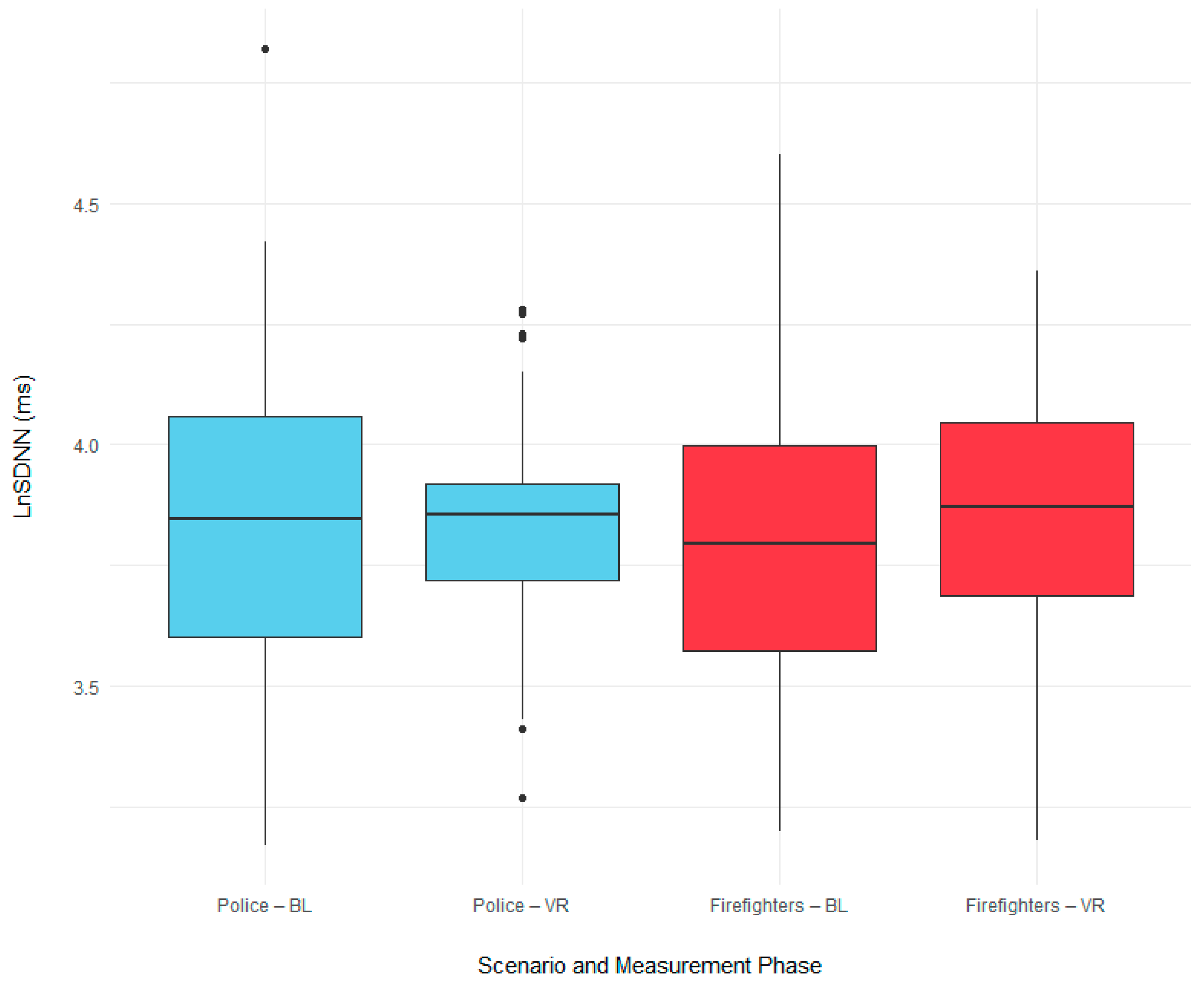

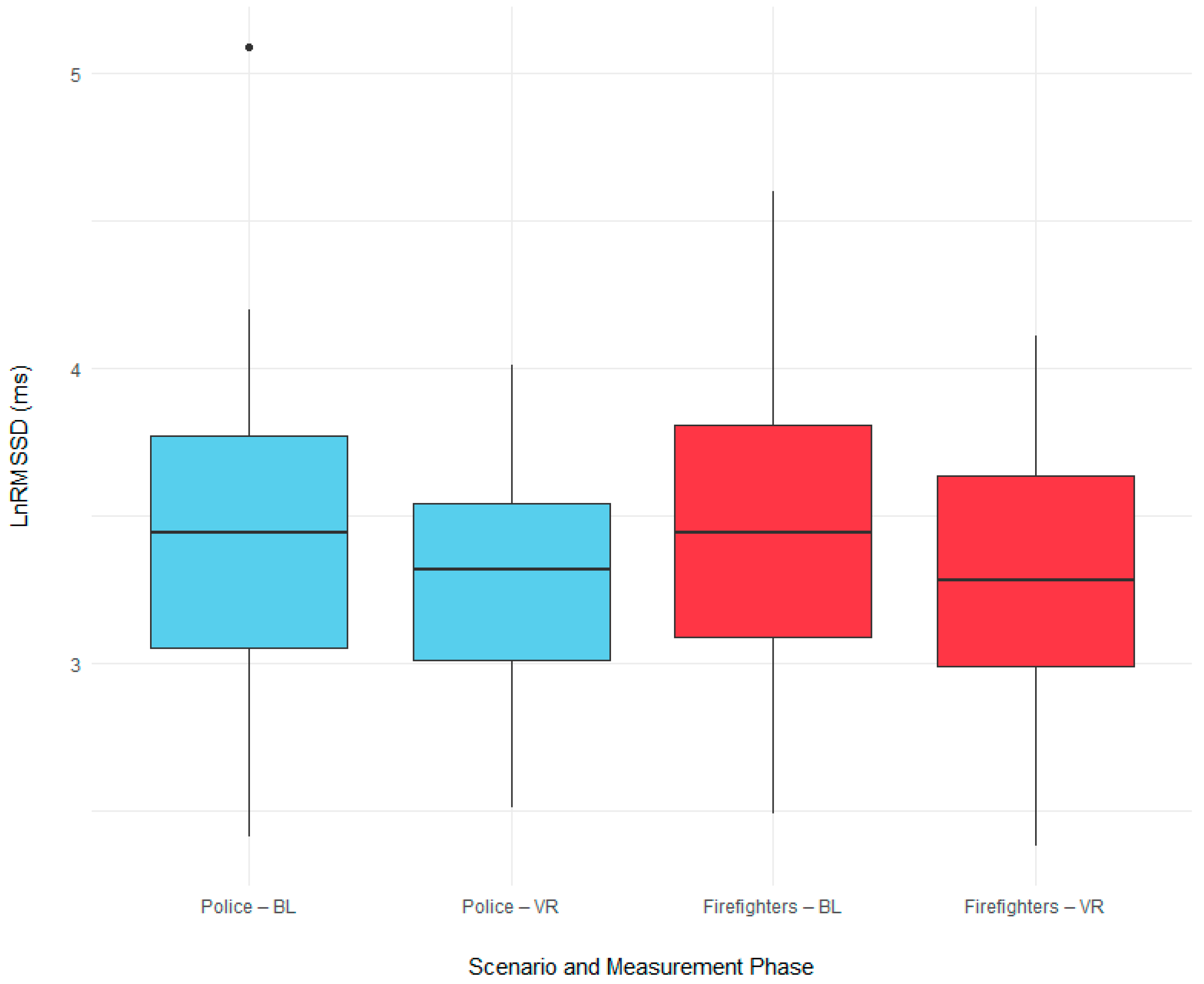

To illustrate overall differences between the BL and VR exposure within individual scenarios, summary boxplots were created. These plots include all measurements across individual blocks (Block 1–Block 3) and all participants (P1–P10). Each box represents an aggregated value distribution (n = 30).

The normality of the data distribution was tested separately for each participant, block, and scenario using the Shapiro–Wilk test. For most HRV parameters, the assumption of normality was not met; therefore, non-parametric methods were used to compare values between phases, specifically the Wilcoxon signed-rank test (applied separately for each block, participant, and scenario). Since the duration of VR and BL phases could differ in some recordings, only the BL segment corresponding to the VR duration in the given block and scenario was analyzed. Statistical significance was evaluated at the p ≤ 0.05 level.
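The phase comparison can be sketched as a paired Wilcoxon signed-rank test on the segment values of one participant, block, and scenario. The version below is a simplified, standard-library illustration and not the script used in the study: it uses the normal approximation without tie or continuity correction, and it assumes that matching the BL and VR durations amounts to truncating both series to the shorter length. The Shapiro–Wilk step is not included, and a dedicated statistics library would normally be preferred, particularly for small samples.

```python
import math


def wilcoxon_signed_rank(baseline, exposure):
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Returns (W, p), where W is the smaller of the positive and negative rank sums.
    """
    n_common = min(len(baseline), len(exposure))  # assumption: truncate to shorter phase
    diffs = [e - b for b, e in zip(baseline[:n_common], exposure[:n_common])]
    diffs = [d for d in diffs if d != 0]          # zero differences carry no sign
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank absolute differences, giving tied values their average rank
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)

    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))          # two-sided p-value
    return w, min(p, 1.0)


# Illustrative values only, not data from the study
w, p = wilcoxon_signed_rank([0] * 10, list(range(1, 11)))
print(w, p <= 0.05)
```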

5. Discussion

The review of the current state of the field shows that VR applications for first responder training are still primarily oriented toward practicing crisis and tactical response procedures. In recent years, however, development has also expanded to include scenarios for systematic crime scene investigation and forensic tasks. Properly designed VR training should allow for realistic practice, with particular emphasis on attention to detail and accuracy, the availability of interactive tools, the provision of feedback and evaluation of methodological correctness, as well as repeatability and variability of scenarios. These aspects are crucial for ensuring the effective transfer of simulated situations into real first responder practice.

Within the pilot measurement, two different types of scenarios were tested depending on the professional domain (police and firefighters). Both scenarios, however, focused on systematic crime scene investigation in a realistic and, at the same time, controlled environment. The police scenario was designed as a series of three cases, in which it was necessary to determine the cause of death based on available forensic evidence and contextual information. The firefighter scenario was thematically focused on analyzing the cause of a fire in a building, where the task was to gradually exclude incorrect causes and determine the most probable one based on documentation of individual traces. In both scenarios, participants had access to a large amount of evidence, which they had to evaluate for relevance. This detailed work with traces corresponds to the requirements of real investigations, where every detail can influence the direction of the case. As emphasized by Luminous XR [11], precision and the ability to document even minor traces are critical for successful forensic investigation.

In the VR environment, participants also had access to interactive tools that supported documentation and orientation. A key element was the camera. In the police scenario, evidence was documented using a smartwatch camera, whereas in the firefighter scenario participants were equipped with a virtual camera. The firefighter scenario also included additional aids such as lamps and measuring tapes. Modern VR simulators for forensic training typically include such interactive elements. For example, RiVR Investigate [13] provides a virtual camera, markers, a flashlight, and a gas detector to enable realistic evidence collection.

The police scenario allowed for the automatic recording of the number of documented items and the selected cause of death, which was evaluated at the end in the form of a summary table. Thus, participants received immediate feedback on their performance. In contrast, in the firefighter scenario, participants were required to complete a form excluding possible fire causes step by step, and the success rate was assessed by the instructor. Therefore, the firefighter scenario did not include automatic performance evaluation and required the presence of an instructor. In the development of advanced VR platforms, such as the Envision XR Forensic Crime Scene Simulator [11] and RiVR Investigate [13], emphasis is placed on detailed monitoring and evaluation of trainees’ performance, with many platforms offering “see what I see” functions for instructors as well as automatically generated reports on the scenario process. Within our pilot measurement, however, we decided not to display interim results or correctness of conclusions directly to participants after completing each block. This approach prevented cognitive bias in subsequent scenarios, ensuring that participants were not influenced by previous success or failure and that their decision making in each block remained independent. This procedure also ensured that individual performances could be objectively compared across scenarios, without the learning effect or strategy adjustments based on prior feedback.

Both tested scenarios allowed for repeatability and variability, which was demonstrated through individual blocks, with each block containing a different scenario variant. The structure of the environment and controls remained consistent across all scenarios, ensuring comparable conditions for all participants and enabling subsequent comparison of results between blocks and participants. From a training perspective, such variability is important for developing adaptability and critical thinking. As highlighted in [45], VR training represents a significant advantage over standard exercises, since the virtual environment can be reset and repeated multiple times without financial and logistical burden.

In summary, both tested scenarios met the established criteria, as they offered detailed and accurate environments with relevant traces, provided participants with necessary tools, enabled measurement and evaluation of their performance, and were designed to be repeatable with situational variations. The main limitations, compared to the most advanced commercial VR applications, were a narrower range of interactive tools and the absence of subsequent evidence handling and packaging, which would contribute to more realistic forensic practice training.

To address the research questions of this study, namely whether exposure to VR training scenarios induces measurable physiological changes in HR and HRV, and whether these responses differ between police and firefighter contexts, an objective evaluation of scenario effectiveness was carried out through pilot testing. The HRV parameters, which reliably reflect autonomic regulation, were statistically assessed. Analysis of the results showed that in both tested scenarios, significant differences were observed in HR and lnRMSSD across all participants and blocks (p ≤ 0.05), see Table 3 and Table 4. The greatest changes were observed in HR. Only in Block 2 were no significant differences recorded, specifically in three of 30 cases in the police scenario (p = 0.017 for P1, p = 0.082 for P4, p = 0.112 for P9) and in two of 30 cases in the firefighter scenario (p = 0.419 for P2, p = 0.369 for P8). Regarding lnRMSSD, significant changes in at least two blocks were observed in eight out of ten participants across both scenarios (p < 0.05). Only participant P8 showed no statistically significant differences in the police scenario. In contrast, for lnSDNN, significant changes in at least two blocks were observed in only four out of ten participants. Across the entire measurement, only participant P6 showed statistically significant differences in all measured parameters across all blocks and both scenarios.

To illustrate overall differences between the two scenarios, summary boxplots were created, including all measurements across blocks and participants for each VR scenario separately (police and firefighter), see Figure 3, Figure 4 and Figure 5. The aim was to compare physiological changes and determine in which type of training environment group changes were more pronounced. In the VR phase of both scenarios, an increase in medians compared to BL is observable, with a more pronounced spread in the police scenario (see Figure 3 and Figure 4). In the firefighter scenario, a slight increase in the median during the VR phase is evident, while in the police scenario the median remained approximately the same. The wider interquartile range and the presence of numerous outliers in the VR phase of the police scenario suggest considerable interindividual variability (see Figure 3 and Figure 4). In the VR phase of both scenarios, a decrease in the median lnRMSSD is observable (see Figure 5). This parameter serves as a sensitive indicator of parasympathetic activity.

During the measurement, cognitive load during the resolution of individual scenarios was also evaluated, see Table 5 and Table 6. This combined approach enables objective assessment of how VR training affects the physiological state of participants and how demanding the individual scenarios are in terms of cognitive load, which is crucial for evaluating the benefits and effectiveness of the training method. Across scenarios and blocks, an increase in cognitive load compared to BL was observed in the VR phase. The highest VR values were recorded in the police scenario, where in several cases the proportion of cognitive load exceeded 90 percent. Some participants (P5, P8, and P9) consistently had high cognitive load across all blocks. In contrast, in the firefighter scenario, the values were generally lower; in some participants (e.g., P1, P2, P3, and P10), the proportion of cognitive load during VR did not exceed 35 percent. However, high values were also recorded in some cases (e.g., 91.7 percent for P5 in the third block). This increased cognitive demand likely affected participants’ performance, see Table 6.
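One way to read the "proportion of cognitive load" reported per phase is as the share of 30 s windows that the classifier labels as cognitively loaded. The sketch below follows that reading; the function name `load_proportion` and the decision threshold of 0.5 are assumptions made for illustration, since the text does not state how the probabilistic output was converted into a proportion.

```python
def load_proportion(window_probabilities, threshold=0.5):
    """Percentage of windows whose predicted load probability reaches the threshold."""
    if not window_probabilities:
        raise ValueError("no windows to evaluate")
    loaded = sum(1 for p in window_probabilities if p >= threshold)
    return 100.0 * loaded / len(window_probabilities)


# Illustrative probabilities only, not model output from the study
print(load_proportion([0.9, 0.8, 0.2, 0.6]))
```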

Higher success rates were observed in the police scenario, where participants correctly identified 80 percent of cases (24 out of 30). In the firefighter scenario, the success rate was markedly lower at 46.7 percent (14 out of 30). The obtained data indicate that higher cognitive load does not necessarily represent a negative factor, as pointed out by Muñoz et al. [21] and Solhjoo et al. [30]. On the contrary, it may reflect effective task engagement. Considerable interindividual variability was also recorded in both exposure duration and the number of collected evidence items. These differences are not surprising and correspond to findings by Muñoz et al. [21], showing that stress responses among responders vary depending on experience, training, and personality characteristics. Therefore, VR training should be able to adaptively account for these differences, providing additional support to individuals experiencing excessive stress, or increasing difficulty for more resilient participants.

The possibility of continuous real-time evaluation of physiological parameters represents a potential tool for developing adaptive VR training systems that can dynamically respond to the participant’s current stress level and adjust the scenario’s course and difficulty accordingly. This approach appears particularly promising for highly dynamic and stressful interventions, such as active shooter simulations, crisis negotiations, or mass casualty responses, rather than for SI-type scenarios. SI scenarios are primarily focused on precise and methodical evidence work, where significant stress responses are not typically expected. In such cases, measured data are more suitable for objectively assessing the difficulty and effectiveness of training.