Explainable AI-Driven Analysis of Construction and Demolition Waste Credit Selection in LEED Projects

Abstract

1. Introduction

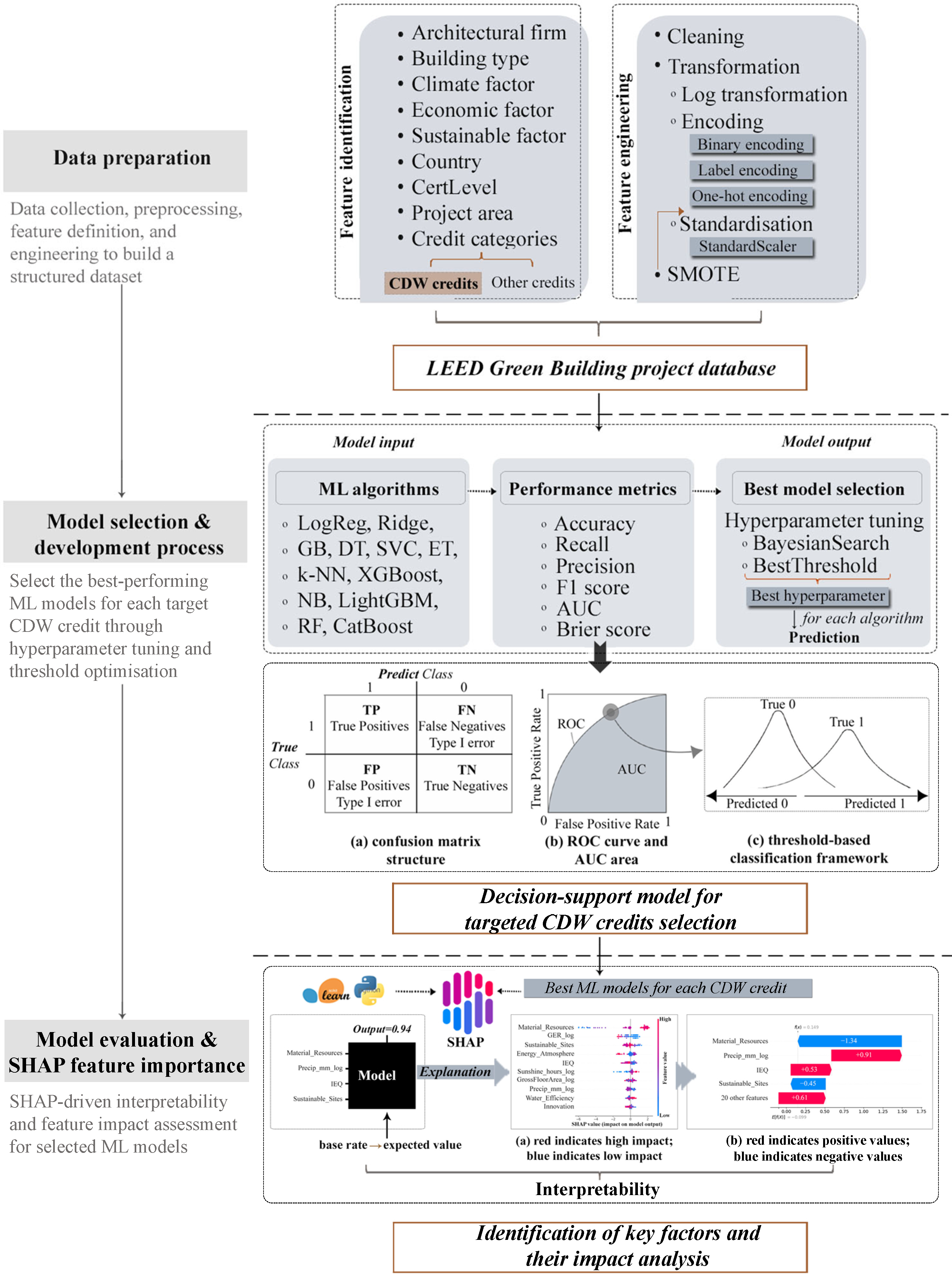

2. Research Methodology

2.1. Research Framework

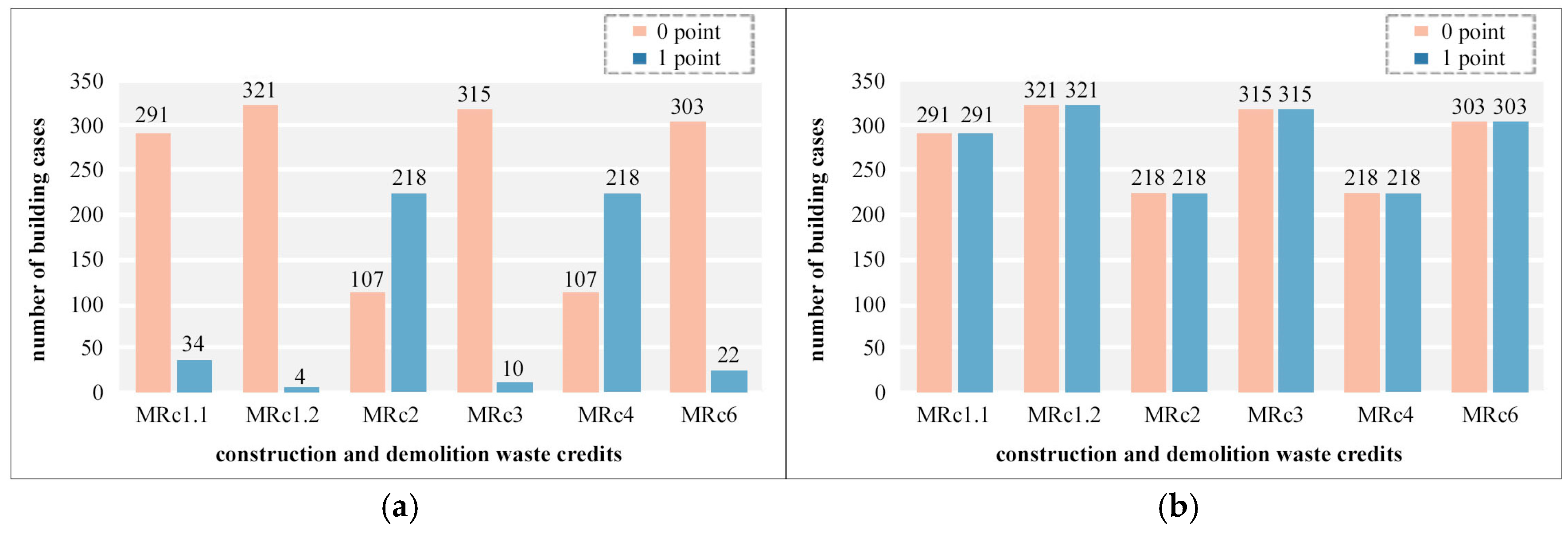

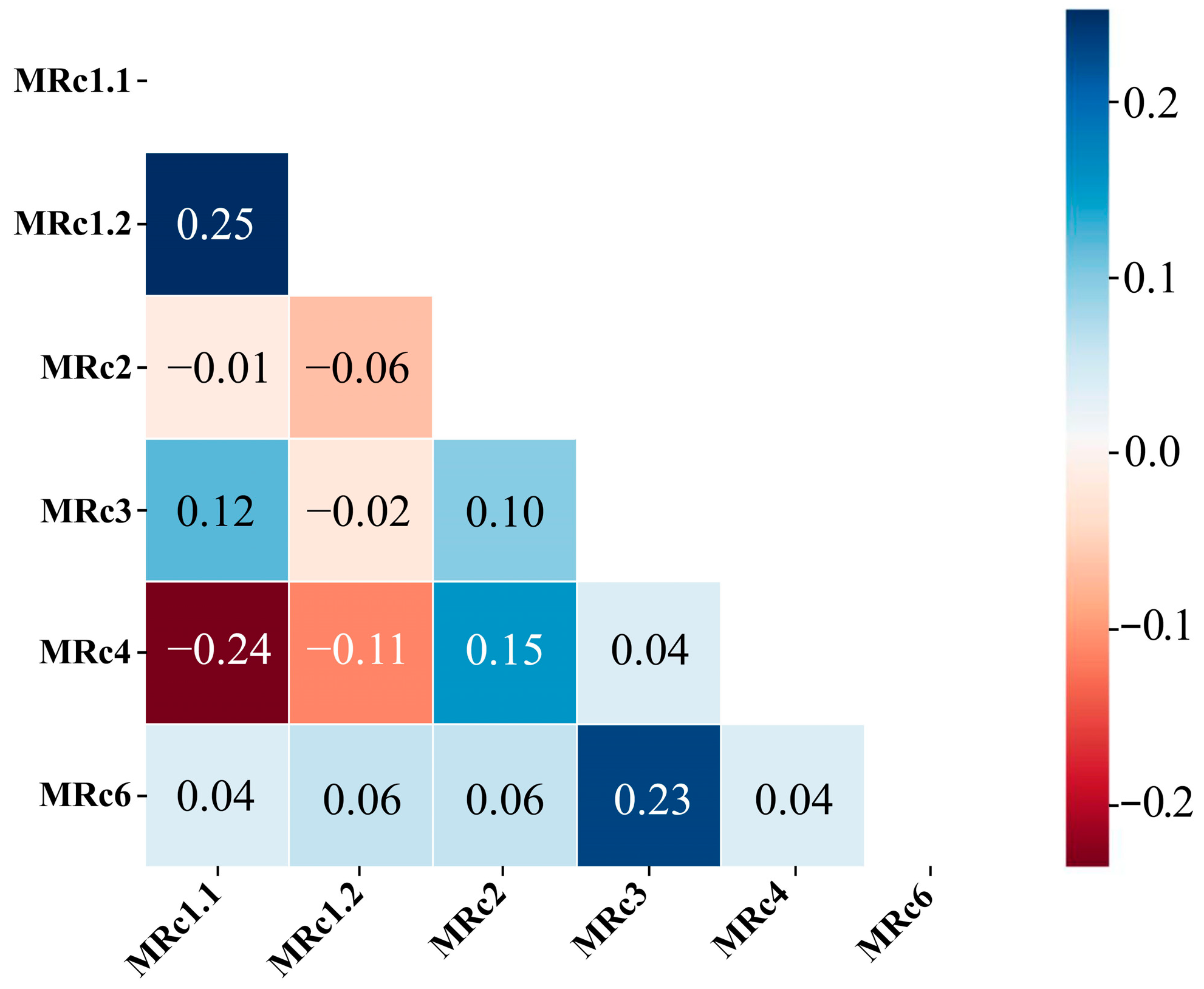

2.2. Data Preparation

2.2.1. Data Preprocessing

2.2.2. Feature Engineering

3. Model Selection and Development Process

3.1. Algorithm Selection and Model Configuration

3.2. Hyperparameter Tuning

3.3. Model Evaluation Metrics

3.4. SHAP Analysis for Explainability

4. Results

4.1. Evaluation Results of ML Models

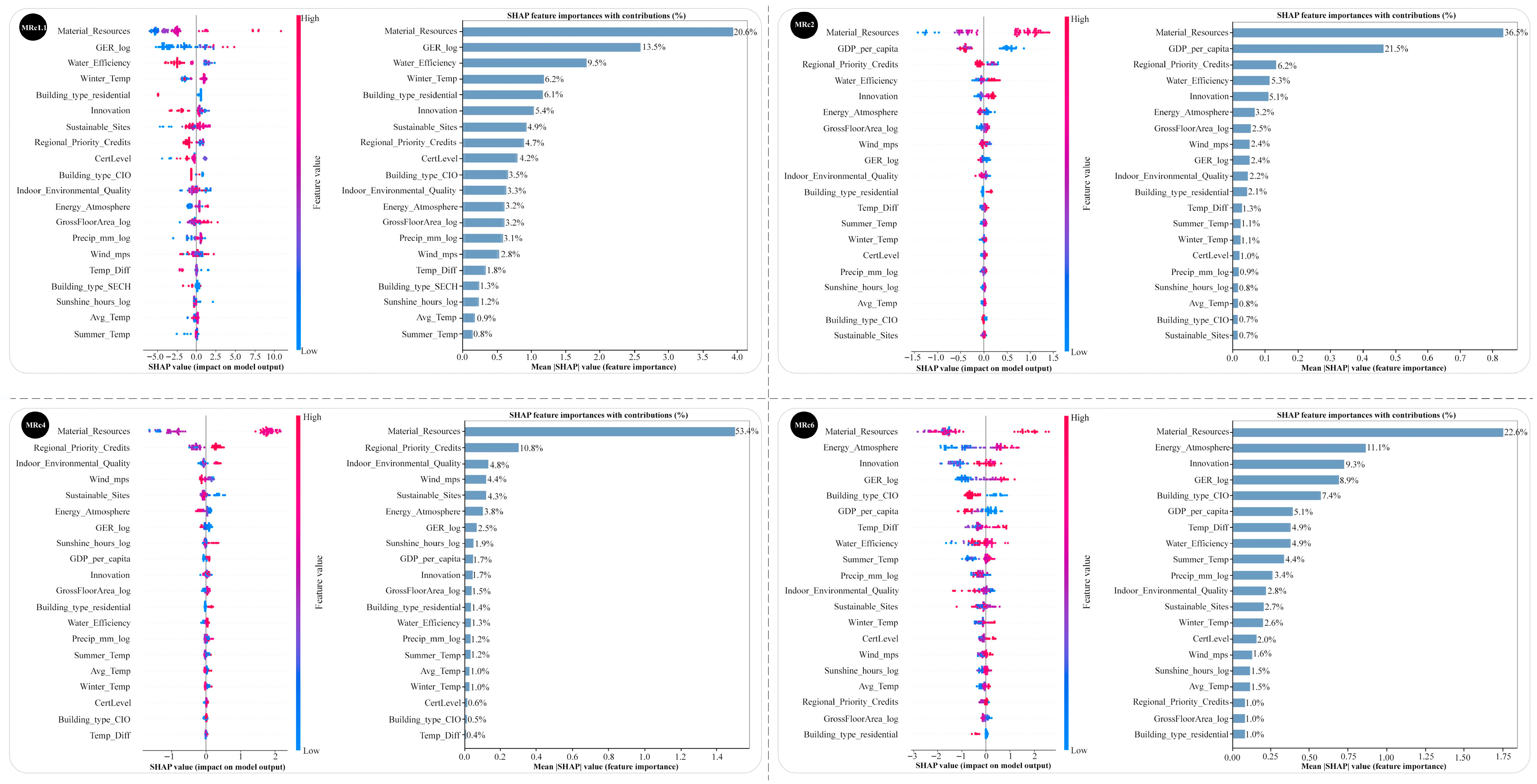

4.2. SHAP Analysis Result

- a. For MRc1.1, which rewards preserving the existing structural shell (walls, floors, and roof), the influence of material resources, GER, and water efficiency highlights the importance of project-level material planning and overall environmental performance. Winter temperature and the residential building type played moderate roles, while GDP per capita and architectural firm features had a negligible effect.

- b. For MRc2, the prominent role of material resources underscores the importance of effective waste-handling strategies. Regional priority credits and indoor environmental quality were also significant, whereas firm-level and building-type characteristics had minimal impact.

- c. For MRc4, material resources and GDP per capita were both key features, pointing to the role of economic capacity in promoting the use of recycled materials. The contributions of innovation, GER, and gross floor area indicate that innovative practice and project scale also play important roles.

- d. Finally, MRc6, achieved in only 27 projects, was influenced mainly by material resources, energy and atmosphere, and innovation. Moderate contributions from GER, temperature difference (Temp_Diff), and water efficiency point to the need for integrated sustainability and climatic adaptability. Once again, architectural firm features and regional priority credits had minimal impact.
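The rankings above summarise SHAP values, which attribute each prediction to the input features using Shapley values from cooperative game theory [46,91]. To make concrete what such an attribution is, the sketch below computes exact Shapley values by enumerating every feature coalition. It assumes, as a simplification that is not necessarily the authors' setup, that a feature "absent" from a coalition is replaced by a single background value. Global rankings like those reported for MRc1.1, MRc2, MRc4, and MRc6 are commonly obtained by averaging absolute SHAP values per feature, which `mean_abs_shap` illustrates. Enumeration is exponential in the number of features, so for tree ensembles an optimised explainer such as `shap.TreeExplainer` in the `shap` package would normally be used instead; the function names and the toy model below are hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(
    predict: Callable[[Sequence[float]], float],
    x: Sequence[float],
    background: Sequence[float],
) -> list[float]:
    """Exact Shapley values for one instance x.

    Features outside a coalition S take their background value, so
    v(S) = predict(x_S combined with background_{not S}).
    """
    n = len(x)

    def value(coalition: frozenset[int]) -> float:
        z = [x[j] if j in coalition else background[j] for j in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def mean_abs_shap(rows: Sequence[Sequence[float]]) -> list[float]:
    """Global importance per feature: mean absolute Shapley value over instances."""
    n_features = len(rows[0])
    return [sum(abs(r[j]) for r in rows) / len(rows) for j in range(n_features)]


if __name__ == "__main__":
    # Hypothetical scorer over three illustrative features (material resources,
    # GDP per capita, gross floor area); the weights are made up.
    def toy_model(z: Sequence[float]) -> float:
        return 0.5 * z[0] + 0.2 * z[1] - 0.1 * z[2]

    phi = shapley_values(toy_model, [8.0, 4.0, 2.0], [6.0, 4.0, 1.0])
    print(phi)       # approximately [1.0, 0.0, -0.1]
    print(sum(phi))  # approximately 0.9 = toy_model(x) - toy_model(background)
```

For a linear model each attribution reduces to w_j (x_j − b_j), and in general the attributions sum to f(x) − f(background) (the efficiency property), which makes a convenient sanity check on any SHAP implementation.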

5. Discussion

5.1. Interpretation of ML Model Results

5.2. Discussion of SHAP-Based Factor Effects

5.2.1. Certification Level

5.2.2. Building Type

5.2.3. Economic, Sustainability, Climatic Factors

5.3. Thresholds and Challenges in CDW Credits

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Model | Metric | MRc1.1 without SMOTE | MRc1.1 with SMOTE | MRc2 without SMOTE | MRc2 with SMOTE | MRc4 without SMOTE | MRc4 with SMOTE | MRc6 without SMOTE | MRc6 with SMOTE |
|---|---|---|---|---|---|---|---|---|---|
| Ridge | Accuracy | 0.890 | 0.866 | 0.780 | 0.854 | 0.720 | 0.683 | 0.939 | 0.780 |
| | Precision | 0.000 | 0.429 | 0.761 | 0.852 | 0.776 | 0.784 | 0.000 | 0.158 |
| | Recall | 0.000 | 0.667 | 0.982 | 0.945 | 0.818 | 0.727 | 0.000 | 0.600 |
| | F1 Score | 0.000 | 0.522 | 0.857 | 0.897 | 0.796 | 0.755 | 0.000 | 0.250 |
| | AUC | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| | Brier Score | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| LogReg | Accuracy | 0.890 | 0.890 | 0.854 | 0.866 | 0.768 | 0.768 | 0.951 | 0.854 |
| | Precision | 0.500 | 0.500 | 0.841 | 0.867 | 0.765 | 0.743 | 1.000 | 0.231 |
| | Recall | 0.778 | 0.778 | 0.964 | 0.945 | 0.945 | 1.000 | 0.200 | 0.600 |
| | F1 Score | 0.609 | 0.609 | 0.898 | 0.904 | 0.846 | 0.853 | 0.333 | 0.333 |
| | AUC | 0.805 | 0.802 | 0.861 | 0.867 | 0.798 | 0.787 | 0.839 | 0.803 |
| | Brier Score | 0.088 | 0.114 | 0.137 | 0.133 | 0.165 | 0.179 | 0.057 | 0.154 |
| DT | Accuracy | 0.878 | 0.829 | 0.866 | 0.890 | 0.780 | 0.805 | 0.841 | 0.939 |
| | Precision | 0.429 | 0.222 | 0.978 | 0.926 | 0.794 | 0.820 | 0.100 | 0.500 |
| | Recall | 0.333 | 0.222 | 0.818 | 0.909 | 0.909 | 0.909 | 0.200 | 0.800 |
| | F1 Score | 0.375 | 0.222 | 0.891 | 0.917 | 0.847 | 0.862 | 0.133 | 0.615 |
| | AUC | 0.644 | 0.506 | 0.907 | 0.899 | 0.773 | 0.815 | 0.542 | 0.951 |
| | Brier Score | 0.103 | 0.181 | 0.106 | 0.127 | 0.169 | 0.164 | 0.159 | 0.145 |
| ET | Accuracy | 0.902 | 0.866 | 0.890 | 0.854 | 0.780 | 0.768 | 0.951 | 0.939 |
| | Precision | 0.571 | 0.438 | 0.960 | 0.864 | 0.753 | 0.750 | 0.667 | 0.500 |
| | Recall | 0.444 | 0.778 | 0.873 | 0.927 | 1.000 | 0.982 | 0.400 | 0.600 |
| | F1 Score | 0.500 | 0.560 | 0.914 | 0.895 | 0.859 | 0.850 | 0.500 | 0.545 |
| | AUC | 0.813 | 0.854 | 0.916 | 0.885 | 0.787 | 0.790 | 0.868 | 0.857 |
| | Brier Score | 0.082 | 0.143 | 0.139 | 0.164 | 0.171 | 0.171 | 0.045 | 0.139 |
| k-NN | Accuracy | 0.890 | 0.854 | 0.695 | 0.780 | 0.756 | 0.744 | 0.951 | 0.854 |
| | Precision | 0.500 | 0.364 | 0.688 | 0.836 | 0.778 | 0.750 | 1.000 | 0.182 |
| | Recall | 0.222 | 0.444 | 1.000 | 0.836 | 0.891 | 0.927 | 0.200 | 0.400 |
| | F1 Score | 0.308 | 0.400 | 0.815 | 0.836 | 0.831 | 0.829 | 0.333 | 0.250 |
| | AUC | 0.605 | 0.715 | 0.711 | 0.731 | 0.699 | 0.706 | 0.621 | 0.740 |
| | Brier Score | 0.103 | 0.165 | 0.201 | 0.213 | 0.199 | 0.232 | 0.066 | 0.139 |
| NB | Accuracy | 0.829 | 0.915 | 0.768 | 0.756 | 0.744 | 0.756 | 0.878 | 0.854 |
| | Precision | 0.353 | 0.667 | 0.810 | 0.754 | 0.750 | 0.746 | 0.273 | 0.267 |
| | Recall | 0.667 | 0.444 | 0.855 | 0.945 | 0.927 | 0.964 | 0.600 | 0.800 |
| | F1 Score | 0.462 | 0.533 | 0.832 | 0.839 | 0.829 | 0.841 | 0.375 | 0.400 |
| | AUC | 0.769 | 0.664 | 0.739 | 0.724 | 0.694 | 0.679 | 0.847 | 0.875 |
| | Brier Score | 0.115 | 0.292 | 0.195 | 0.199 | 0.290 | 0.298 | 0.477 | 0.476 |
| RF | Accuracy | 0.829 | 0.878 | 0.915 | 0.927 | 0.793 | 0.805 | 0.829 | 0.890 |
| | Precision | 0.368 | 0.462 | 0.962 | 0.962 | 0.779 | 0.783 | 0.235 | 0.357 |
| | Recall | 0.778 | 0.667 | 0.909 | 0.927 | 0.964 | 0.982 | 0.800 | 1.000 |
| | F1 Score | 0.500 | 0.545 | 0.935 | 0.944 | 0.862 | 0.871 | 0.364 | 0.526 |
| | AUC | 0.795 | 0.880 | 0.945 | 0.962 | 0.822 | 0.855 | 0.831 | 0.943 |
| | Brier Score | 0.084 | 0.116 | 0.110 | 0.115 | 0.156 | 0.146 | 0.050 | 0.088 |
| SVM | Accuracy | 0.878 | 0.878 | 0.866 | 0.854 | 0.780 | 0.768 | 0.866 | 0.963 |
| | Precision | 0.444 | 0.462 | 0.879 | 0.864 | 0.761 | 0.765 | 0.286 | 1.000 |
| | Recall | 0.444 | 0.667 | 0.927 | 0.927 | 0.982 | 0.945 | 0.800 | 0.400 |
| | F1 Score | 0.444 | 0.545 | 0.903 | 0.895 | 0.857 | 0.846 | 0.421 | 0.571 |
| | AUC | 0.763 | 0.796 | 0.892 | 0.879 | 0.799 | 0.772 | 0.847 | 0.868 |
| | Brier Score | 0.090 | 0.115 | 0.129 | 0.119 | 0.163 | 0.174 | 0.045 | 0.087 |
| GB | Accuracy | 0.793 | 0.890 | 0.902 | 0.841 | 0.805 | 0.805 | 0.939 | 0.939 |
| | Precision | 0.318 | 0.500 | 0.912 | 0.862 | 0.783 | 0.791 | 0.500 | 0.500 |
| | Recall | 0.778 | 0.667 | 0.945 | 0.909 | 0.982 | 0.964 | 0.400 | 0.800 |
| | F1 Score | 0.452 | 0.571 | 0.929 | 0.885 | 0.871 | 0.869 | 0.444 | 0.615 |
| | AUC | 0.817 | 0.848 | 0.952 | 0.907 | 0.834 | 0.848 | 0.782 | 0.958 |
| | Brier Score | 0.110 | 0.152 | 0.095 | 0.123 | 0.150 | 0.142 | 0.068 | 0.050 |
| XGBoost | Accuracy | 0.780 | 0.829 | 0.902 | 0.902 | 0.817 | 0.793 | 0.841 | 0.915 |
| | Precision | 0.304 | 0.381 | 0.961 | 0.927 | 0.845 | 0.764 | 0.250 | 0.400 |
| | Recall | 0.778 | 0.889 | 0.891 | 0.927 | 0.891 | 1.000 | 0.800 | 0.800 |
| | F1 Score | 0.438 | 0.533 | 0.925 | 0.927 | 0.867 | 0.866 | 0.381 | 0.533 |
| | AUC | 0.836 | 0.869 | 0.927 | 0.925 | 0.833 | 0.857 | 0.860 | 0.932 |
| | Brier Score | 0.096 | 0.107 | 0.105 | 0.106 | 0.147 | 0.145 | 0.054 | 0.075 |
| LightGBM | Accuracy | 0.890 | 0.866 | 0.890 | 0.902 | 0.793 | 0.805 | 0.963 | 0.878 |
| | Precision | 0.500 | 0.429 | 0.926 | 0.980 | 0.797 | 0.800 | 1.000 | 0.333 |
| | Recall | 0.667 | 0.667 | 0.909 | 0.873 | 0.927 | 0.945 | 0.400 | 1.000 |
| | F1 Score | 0.571 | 0.522 | 0.917 | 0.923 | 0.857 | 0.867 | 0.571 | 0.500 |
| | AUC | 0.820 | 0.845 | 0.927 | 0.937 | 0.853 | 0.864 | 0.860 | 0.930 |
| | Brier Score | 0.090 | 0.117 | 0.106 | 0.101 | 0.149 | 0.140 | 0.037 | 0.105 |
| CatBoost | Accuracy | 0.890 | 0.878 | 0.902 | 0.927 | 0.805 | 0.817 | 0.963 | 0.951 |
| | Precision | 0.500 | 0.509 | 0.943 | 0.980 | 0.783 | 0.794 | 1.000 | 0.571 |
| | Recall | 0.556 | 0.778 | 0.909 | 0.909 | 0.982 | 0.982 | 0.400 | 0.800 |
| | F1 Score | 0.526 | 0.615 | 0.926 | 0.944 | 0.871 | 0.878 | 0.571 | 0.667 |
| | AUC | 0.884 | 0.874 | 0.945 | 0.958 | 0.854 | 0.871 | 0.938 | 0.958 |
| | Brier Score | 0.093 | 0.125 | 0.099 | 0.088 | 0.168 | 0.136 | 0.035 | 0.050 |
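The appendix compares every model with and without SMOTE [69], which rebalances the training data by synthesising new minority-class examples on the line segments between a minority sample and one of its k nearest minority-class neighbours. Its effect on rare credits is visible in the table: for MRc6, for example, SMOTE raised Logistic Regression recall from 0.200 to 0.600 while precision fell from 1.000 to 0.231. The sketch below is a minimal from-scratch version of the interpolation step, for illustration only; the default k = 5 and the function name are assumptions, and in practice a maintained implementation such as the `SMOTE` class in the `imbalanced-learn` package would normally be used.

```python
import math
import random
from typing import Sequence


def smote(
    minority: Sequence[Sequence[float]],
    n_synthetic: int,
    k: int = 5,
    seed: int = 0,
) -> list[list[float]]:
    """Minimal SMOTE: interpolate between a random minority sample and one of
    its k nearest minority-class neighbours (Euclidean distance)."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        base = minority[i]
        # k nearest minority neighbours of `base`, excluding the sample itself
        neighbours = sorted(
            (j for j in range(len(minority)) if j != i),
            key=lambda j: math.dist(base, minority[j]),
        )[:k]
        nn = minority[rng.choice(neighbours)]
        gap = rng.random()  # uniform in [0, 1): position along the segment
        synthetic.append([b + gap * (n - b) for b, n in zip(base, nn)])
    return synthetic


if __name__ == "__main__":
    # Hypothetical 2-D minority samples lying on the line y = x
    points = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
    for s in smote(points, n_synthetic=3, k=2, seed=42):
        print(s)  # each synthetic point also lies on y = x
```

Oversampling of this kind is normally fitted on the training split only (inside each cross-validation fold), so that synthetic points derived from test projects cannot leak into the evaluation.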

References

- EPA (Environmental Protection Agency). Green Buildings. 2021. Available online: https://www.epa.gov/greeningepa/green-buildings-epa (accessed on 17 February 2025).

- Fowler, K.; Rauch, E. Sustainable Building Rating Systems; Pacific Northwest National Laboratory (PNNL): Richland, WA, USA, 2006. Available online: https://www.pnnl.gov/main/publications/external/technical_reports/pnnl-15858.pdf (accessed on 15 February 2025).

- Khodadadzadeh, T. Green building project management: Obstacles and solutions for sustainable development. J. Proj. Manag. 2016, 1, 21–26. [Google Scholar] [CrossRef]

- World Green Building Council. Annual Report; World Green Building Council: London, UK, 2022; Available online: https://worldgbc.org/wp-content/uploads/2023/08/WorldGBC-Annual-Report-2022_FINAL.pdf (accessed on 12 February 2025).

- Zuo, J.; Zhao, Z.-Y. Green building research–current status and future agenda: A review. Renew. Sustain. Energy Rev. 2014, 30, 271–281. [Google Scholar] [CrossRef]

- Raouf, A.M.; Al-Ghamdi, S.G. Managerial practitioners’ perspectives on quality performance of green-building projects. Buildings 2020, 10, 71. [Google Scholar] [CrossRef]

- Sönmez, N.; Maçka Kalfa, S. Investigation of construction and demolition wastes in the European Union member states according to their directives. Contemp. J. Econ. Financ. 2023, 1, 7–26. [Google Scholar]

- Prowler, D.; Vierra, S. Construction Waste Management. In Whole Building Design Guide; U.S. Army Corps of Engineers, Engineer Research and Development Center, Construction Engineering Research Laboratory: Champaign, IL, USA, 2016; Available online: https://www.wbdg.org/resources/construction-waste-management (accessed on 10 March 2024).

- Ismaeel, W.; Ali, A. Assessment of eco-rehabilitation plans: A case study ‘Richordi Berchet’ palace. J. Clean. Prod. 2020, 259, 120857. [Google Scholar] [CrossRef]

- Wu, Z.; Shen, L.; Yu, A.; Zhang, X. A comparative analysis of waste management requirements between five green building rating systems for new residential buildings. J. Clean. Prod. 2016, 112, 895–902. [Google Scholar] [CrossRef]

- Saradara, S.M.; Khalfan, M.; Rauf, A.; Qureshi, R. On the path towards sustainable construction—The case of the United Arab Emirates: A review. Sustainability 2023, 15, 14652. [Google Scholar] [CrossRef]

- Li, Y.; Li, X.; Ma, D.; Gong, W. Exploring green building certification credit selection: A model based on explainable machine learning. J. Build. Eng. 2024, 95, 110279. [Google Scholar] [CrossRef]

- Carvalho, J.P.; Bragança, L.; Mateus, R. A systematic review of the role of BIM in building sustainability assessment methods. Appl. Sci. 2020, 10, 4444. [Google Scholar] [CrossRef]

- Akbari, S.; Sheikhkhoshkar, M.; Rahimian, F.P.; El Haouzi, H.B.; Najafi, M.; Talebi, S. Sustainability and building information modelling: Integration, research gaps, and future directions. Autom. Constr. 2024, 163, 105420. [Google Scholar] [CrossRef]

- Yu, D.; Tan, H.; Ruan, Y. A future bamboo-structure residential building prototype in China: Life cycle assessment of energy use and carbon emission. Energy Build. 2011, 43, 2638–2646. [Google Scholar] [CrossRef]

- Chi, B.; Lu, W.; Ye, M.; Bao, Z.; Zhang, X. Construction waste minimisation in green building: A comparative analysis of LEED-NC 2009 certified projects in the US and China. J. Clean. Prod. 2020, 256, 120749. [Google Scholar] [CrossRef]

- Daoud, A.O.; Omar, H.; Othman, A.E.; Ebohon, O.J. Integrated framework towards construction waste reduction: The case of Egypt. Int. J. Civ. Eng. 2023, 21, 695–709. [Google Scholar] [CrossRef]

- Li, X.; Feng, W.; Liu, X.; Yang, Y. A comparative analysis of green building rating systems in China and the United States. Sustain. Cities Soc. 2023, 93, 104520. [Google Scholar] [CrossRef]

- Choi, K.; Son, K.; Woods, P.; Park, Y.J. LEED Public Transportation Accessibility and Its Economic Implications. J. Constr. Eng. Manag. 2012, 138, 1095–1102. [Google Scholar] [CrossRef]

- Son, K.; Choi, K.; Woods, P.; Park, Y.J. Urban Sustainability Predictive Model Using GIS: Appraised Land Value versus LEED Sustainable Site Credits. J. Constr. Eng. Manag. 2012, 138, 1107–1112. [Google Scholar] [CrossRef]

- Jayasanka, T.; Darko, A.; Edwards, D.J.; Chan, A.; Jalaei, F. Automating building environmental assessment: A systematic review and future research directions. Environ. Impact Assess. Rev. 2024, 106, 107465. [Google Scholar] [CrossRef]

- Duan, Y.; Edwards, J.S.; Dwivedi, Y.K. Artificial intelligence for decision making in the era of big data–evolution, challenges and research agenda. Int. J. Inf. Manag. 2019, 48, 63–71. [Google Scholar] [CrossRef]

- Wan, J.; Wang, D.; Hoi, S.C.H.; Wu, P.; Zhu, J.; Zhang, Y.; Li, J. Deep Learning for Content-Based Image Retrieval: A Comprehensive Study. In Proceedings of the 22nd ACM International Conference on Multimedia (MM ’14), Orlando, FL, USA, 3–7 November 2014; ACM: New York, NY, USA, 2014; pp. 157–166. [Google Scholar] [CrossRef]

- Zabin, A.; Gonzalez, V.A.; Zou, Y.; Amor, R. Applications of machine learning to BIM: A systematic literature review. Adv. Eng. Inform. 2022, 51, 101474. [Google Scholar] [CrossRef]

- Abioye, S.O.; Oyedele, L.O.; Akanbi, L.; Ajayi, A.; Davila Delgado, J.M.; Bilal, M.; Akinade, O.O.; Ahmed, A. Artificial intelligence in the construction industry: A review of present status, opportunities and future challenges. J. Build. Eng. 2021, 44, 103299. [Google Scholar] [CrossRef]

- Soliman, K.; Naji, K.; Gunduz, M.; Tokdemir, O.B.; Faqih, F.; Zayed, T. BIM-based facility management models for existing buildings. J. Eng. Res. 2022, 10, 21–37. [Google Scholar] [CrossRef]

- Zhao, J.; Lam, K.P. Influential factors analysis on LEED building markets in U.S. East Coast cities by using Support Vector Regression. Sustain. Cities Soc. 2012, 5, 37–43. [Google Scholar] [CrossRef]

- Cheng, J.; Kwok, H.; Li, A.; Tong, J.; Lau, A. BIM-supported sensor placement optimisation based on genetic algorithm for multi-zone thermal comfort and IAQ monitoring. Build. Environ. 2022, 216, 108997. [Google Scholar] [CrossRef]

- Liu, P.; Tønnesen, J.; Caetano, L.; Bergsdal, H.; Alonso, M.J.; Kind, R.; Georges, L.; Mathisen, H.M. Optimizing ventilation systems considering operational and embodied emissions with life cycle-based method. Energy Build. 2024, 325, 115040. [Google Scholar] [CrossRef]

- Cheng, J.C.P.; Ma, L.J. A data-driven study of important climate factors on the achievement of LEED-EB credits. Build. Environ. 2015, 90, 232–244. [Google Scholar] [CrossRef]

- Ma, J.; Cheng, J. Data-driven study on the achievement of LEED credits using percentage of average score and association rule analysis. Build. Environ. 2016, 98, 121–132. [Google Scholar] [CrossRef]

- Bangwal, D.; Kumar, R.; Suyal, J.; Ghouri, A.M. Does AI-technology-based indoor environmental quality impact occupants’ psychological, physiological health, and productivity? Ann. Oper. Res. 2025, 350, 517–535. [Google Scholar] [CrossRef]

- Chokor, A.; El Asmar, M. Data-driven approach to investigate the energy consumption of LEED-certified research buildings in climate zone 2 B. J. Energy Eng. 2017, 143, 2. [Google Scholar] [CrossRef]

- Xu, J.; Cheng, M.; Sun, A. Assessing sustainable practices in architecture: A data-driven analysis of LEED certification adoption and impact in top firms from 2000 to 2023. Front. Archit. Res. 2025, 14, 784–796. [Google Scholar] [CrossRef]

- Mansouri, A.; Naghdi, M.; Erfani, A. Machine Learning for Leadership in Energy and Environmental Design Credit Targeting: Project Attributes and Climate Analysis Toward Sustainability. Sustainability 2025, 17, 2521. [Google Scholar] [CrossRef]

- Payyanapotta, A.; Thomas, A. An analytical hierarchy-based optimization framework to aid sustainable assessment of buildings. J. Build. Eng. 2021, 35, 102003. [Google Scholar] [CrossRef]

- Chen, X.; Lu, W.; Xue, F.; Xu, J. A cost-benefit analysis of green buildings concerning construction waste minimization using big data in Hong Kong. J. Green Build. 2018, 13, 61–76. [Google Scholar] [CrossRef]

- Doan, D.T.; Albsoul, H.; Hoseini, A.G. Enhancing construction waste management in New Zealand: Lessons from Hong Kong and other countries. Environ. Res. Commun. 2023, 5, 102001. [Google Scholar] [CrossRef]

- Illankoon, I.C.; Lu, W. Cost implications of obtaining construction waste management-related credits in green building. Waste Manag. 2020, 102, 722–731. [Google Scholar] [CrossRef]

- Lu, W.; Chen, X.; Peng, Y.; Liu, X. The effects of green building on construction waste minimization: Triangulating ‘big data’ with ‘thick data’. Waste Manag. 2018, 79, 142–152. [Google Scholar] [CrossRef]

- Lu, W.; Chi, B.; Bao, Z.; Zetkulic, A. Evaluating the effects of green building on construction waste management: A comparative study of three green building rating systems. Build. Environ. 2019, 155, 247–256. [Google Scholar] [CrossRef]

- Linardatos, P.; Papastefanopoulos, V.; Kotsiantis, S. Explainable AI: A Review of Machine Learning Interpretability Methods. Entropy 2021, 23, 18. [Google Scholar] [CrossRef]

- Saeed, W.; Omlin, C. Explainable AI (XAI): A systematic meta-survey of current challenges and future opportunities. Knowl.-Based Syst. 2023, 263, 110273. [Google Scholar] [CrossRef]

- Rai, A. Explainable AI: From black box to glass box. J. Acad. Market. Sci. 2020, 48, 137–141. [Google Scholar] [CrossRef]

- Cheong, B.C. Transparency and accountability in AI systems: Safeguarding wellbeing in the age of algorithmic decision-making. Front. Hum. Dyn. 2024, 6, 1421273. [Google Scholar] [CrossRef]

- Lundberg, S.M.; Lee, S.-I. A Unified Approach to Interpreting Model Predictions. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Curran Associates, Inc.: Red Hook, NY, USA, 2017; pp. 4765–4774. [Google Scholar]

- Cakiroglu, C.; Shahjalal, M.; Islam, K.; Mahmood, S.M.F.; Billah, A.H.M.M.; Nehdi, M.L. Explainable ensemble learning data-driven modeling of mechanical properties of fiber-reinforced rubberized recycled aggregate concrete. J. Build. Eng. 2023, 76, 107279. [Google Scholar] [CrossRef]

- Kroell, N.; Thor, E.; Göbbels, L.; Schönfelder, P.; Chen, X. Deep learning-based prediction of particle size distributions in construction and demolition waste recycling using convolutional neural networks on 3D laser triangulation data. Constr. Build. Mater. 2025, 466, 140214. [Google Scholar] [CrossRef]

- Yu, G.; Wang, C.; Zhuo, Q.; Wang, Z.; Huang, M. Machine learning predicting sintering temperature for ceramsite production from multiple solid wastes. Waste Manag. 2025, 203, 114903. [Google Scholar] [CrossRef]

- Ramakrishnan, J.; Seshadri, K.; Liu, T.; Zhang, F.; Yu, R.; Gou, Z. Explainable semi-supervised AI for green performance evaluation of airport buildings. J. Build. Eng. 2023, 79, 107788. [Google Scholar] [CrossRef]

- Ulucan, M.; Yildirim, G.; Alatas, B.; Alyamac, K.E. Modelling and evaluation of mechanical performance and environmental impacts of sustainable concretes using a multi-objective optimization based innovative interpretable artificial intelligence method. J. Environ. Manag. 2024, 372, 123364. [Google Scholar] [CrossRef] [PubMed]

- Jiang, R.; Zeng, S.; Song, Q.; Wu, Z. Deep-Chain Echo State Network with explainable temporal dependence for complex building energy prediction. IEEE Trans. Ind. Inform. 2023, 19, 426–434. [Google Scholar] [CrossRef]

- USGBC. 2024. Available online: https://www.usgbc.org (accessed on 4 March 2024).

- U.S. Green Building Council (USGBC). LEED-NC v2009: New Construction Reference Guide; U.S. Green Building Council: Washington, DC, USA, 2009. [Google Scholar]

- WGBC. 2021. Available online: https://worldgbc.org/ (accessed on 14 February 2021).

- Goodarzi, M.; Shayesteh, A.; Attallah, S. Assessing the consistency between the expected and actual influence of LEED-NC credit categories on the sustainability score. Epic. Ser. Built Environ. 2023, 4, 614–622. [Google Scholar] [CrossRef]

- Goodarzi, M.; Shayesteh, A. Does LEED BD+C for new construction provide a realistic and practical sustainability evaluation system? In Proceedings of the Construction Research Congress, Des Moines, IA, USA, 20–23 March 2024; pp. 505–514. [Google Scholar] [CrossRef]

- Wu, P.; Song, Y.; Shou, W.; Chi, H.; Chong, H.-Y.; Sutrisna, M. A comprehensive analysis of the credits obtained by LEED 2009 certified green buildings. Renew. Sustain. Energy Rev. 2017, 68, 370–379. [Google Scholar] [CrossRef]

- Wu, P.; Mao, C.; Wang, J.; Song, Y.; Wang, X. A decade review of the credits obtained by LEED v2.2 certified green building projects. Build. Environ. 2016, 102, 167–178. [Google Scholar] [CrossRef]

- Ma, J.; Cheng, J.C.P. Selection of target LEED credits based on project information and climatic factors using data mining techniques. Adv. Eng. Inform. 2017, 32, 224–236. [Google Scholar] [CrossRef]

- WGBC. Sustainable and Affordable Housing: Spotlighting Action from Across the World Green Building Council Network; World Green Building Council: London, UK, 2023; Available online: https://worldgbc.org/article/sustainable-and-affordable-housing/ (accessed on 10 October 2025).

- Braulio-Gonzalo, M.; Jorge-Ortiz, A.; Bovea, M.D. How are indicators in Green Building Rating Systems addressing sustainability dimensions and life cycle frameworks in residential buildings? Environ. Impact Assess. Rev. 2022, 95, 106793. [Google Scholar] [CrossRef]

- Lee, P.-H.; Han, Q.; de Vries, B. Advancing a sustainable built environment: A comprehensive review of stakeholder promotion strategies and dual forces. J. Build. Eng. 2024, 95, 110223. [Google Scholar] [CrossRef]

- Jorge-Ortiz, A.; Braulio-Gonzalo, M.; Bovea, M.D. Exploring how waste management is being approached in green building rating systems: A case study. Waste Manag. Res. 2023, 41, 1121–1133. [Google Scholar] [CrossRef]

- Building Design. World Architecture 100 (WA100); Building Design: London, UK, 2025; Available online: https://www.bdonline.co.uk/wa-100 (accessed on 12 February 2025).

- Eurostat. 2025. Available online: https://ec.europa.eu/eurostat (accessed on 12 February 2025).

- NOAA. 2025. Available online: https://www.noaa.gov (accessed on 12 February 2025).

- Pham, D.H.; Kim, B.; Lee, J.; Ahn, Y. An investigation of the selection of LEED version 4 credits for sustainable building projects. Appl. Sci. 2020, 10, 7081. [Google Scholar] [CrossRef]

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357. [Google Scholar] [CrossRef]

- He, H.; Garcia, E.A. Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284. [Google Scholar] [CrossRef]

- Tate, R.F. Correlation Between a Discrete and a Continuous Variable. Point-Biserial Correlation. Ann. Math. Stat. 1954, 25, 603–607. Available online: https://www.jstor.org/stable/2236844 (accessed on 10 October 2025). [CrossRef]

- Gholami, H.; Mohamadifar, A.; Sorooshian, A.; Jansen, J.D. Machine-learning algorithms for predicting land susceptibility to dust emissions: The case of the Jazmurian Basin, Iran. Atmos. Pollut. Res. 2020, 11, 1303–1315. [Google Scholar] [CrossRef]

- Hosmer, D.W.; Lemeshow, S. Applied Logistic Regression, 2nd ed.; John Wiley & Sons, Inc.: New York, NY, USA, 2000. [Google Scholar] [CrossRef]

- Agresti, A. Categorical Data Analysis, 3rd ed.; Wiley: Hoboken, NJ, USA, 2013. [Google Scholar]

- Murphy, K.P. Machine Learning: A Probabilistic Perspective; MIT Press: Cambridge, MA, USA, 2012. [Google Scholar]

- Singh, J.; Anumba, C.J. Real-time pipe system installation schedule generation and optimization using artificial intelligence and heuristic techniques. J. Inf. Technol. Constr. (ITcon) 2022, 27, 173–190. [Google Scholar] [CrossRef]

- Zhao, D.D.; Hu, X.Y.; Xiong, S.W.; Tian, J.; Xiang, J.W.; Zhou, J.; Li, H.H. K-means clustering and kNN classification based on negative databases. Appl. Soft Comput. 2021, 110, 107732. [Google Scholar] [CrossRef]

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Geurts, P.; Ernst, D.; Wehenkel, L. Extremely randomised trees. Mach. Learn. 2006, 63, 3–42. [Google Scholar] [CrossRef]

- Kim, J.; Won, J.; Kim, H.; Heo, J. Machine learning-based prediction of land prices in Seoul, South Korea. Sustainability 2021, 13, 13088. [Google Scholar] [CrossRef]

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297. [Google Scholar] [CrossRef]

- Shi, Y.; Li, J.; Li, Z. Gradient Boosting with Piece-Wise Linear Regression Trees. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence (IJCAI), Stockholm, Sweden, 10–16 August 2019. [Google Scholar]

- Prokhorenkova, L.; Gusev, G.; Vorobev, A.; Dorogush, A.V.; Gulin, A. CatBoost: Unbiased boosting with categorical features. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018; Curran Associates, Inc.: Red Hook, NY, USA, 2018; pp. 6638–6648. [Google Scholar]

- Freund, Y.; Schapire, R. A Decision-Theoretic Generalization of On-Line Learning and an Application to Boosting. J. Comput. Syst. Sci. 1997, 55, 119–139. [Google Scholar] [CrossRef]

- Friedman, J.H. Greedy function approximation: A gradient boosting machine, 1999 Reitz Lecture. Ann. Stat. 2001, 29, 1189–1232. Available online: https://www.jstor.org/stable/2699986 (accessed on 10 October 2025).

- Chen, T.Q.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 2016, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794. [Google Scholar] [CrossRef]

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A highly efficient gradient boosting decision tree. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; pp. 3146–3154. [Google Scholar]

- Ostroumova, L.; Gusev, G.; Vorobev, A.; Dorogush, A.V.; Gulin, A. CatBoost: Unbiased boosting with categorical features. In Proceedings of the 32nd Conference on Neural Information Processing Systems (NeurIPS 2018), Montréal, QC, Canada, 2–8 December 2018. [Google Scholar]

- Berk, J.; Nguyen, V.; Gupta, S.; Rana, S.; Venkatesh, S. Exploration enhanced expected improvement for Bayesian optimization. In Proceedings of the Machine Learning and Knowledge Discovery in Databases, ECML PKDD 2018, Dublin, Ireland, 10–14 September 2018; Berlingerio, M., Bonchi, F., Gärtner, T., Hurley, N., Ifrim, G., Eds.; Lecture Notes in Computer Science. Springer: Cham, Switzerland, 2019; Volume 11052, pp. 621–637. [Google Scholar] [CrossRef]

- Albahri, A.S.; Khaleel, Y.L.; Habeeb, M.A.; Ismael, R.D.; Hameed, Q.A.; Deveci, M.; Albahri, O.S.; Alamoodi, A.H.; Alzubaidi, L. A systematic review of trustworthy artificial intelligence applications in natural disasters. Comput. Electr. Eng. 2024, 118, 109409. [Google Scholar] [CrossRef]

- Shapley, L.S. A value for n-person games. In Contributions to the Theory of Games; Kuhn, H.W., Tucker, A.W., Eds.; Annals of Mathematics Studies; Princeton University Press: Princeton, NJ, USA, 1953; Volume 28, pp. 307–317. [Google Scholar] [CrossRef]

- Rodríguez-Pérez, R.; Bajorath, J. Interpretation of machine learning models using Shapley values: Application to compound potency and multi-target activity predictions. J. Comput. Aided Mol. Des. 2020, 34, 1013–1026. [Google Scholar] [CrossRef]

- Mao, Y.; Duan, Y.; Guo, Y.; Wang, X.; Gao, S. A study on the prediction of the house price index in first-tier cities in China based on heterogeneous integrated learning model. J. Math. 2022, 2022, 2068353. [Google Scholar] [CrossRef]

- Wang, Q.; Xie, X.; Shahrour, I.; Huang, Y. Use of deep learning, denoising technic, and cross-correlation analysis for the prediction of the shield machine slurry pressure in mixed ground conditions. Autom. Constr. 2021, 128, 103741. [Google Scholar] [CrossRef]

- Gilpin, L.; Bau, D.; Yuan, B.Z.; Bajwa, A.; Specter, M.; Kagal, L. Explaining explanations: An overview of the interpretability of machine learning. In Proceedings of the IEEE 5th International Conference on Data Science and Advanced Analytics (DSAA 2018), Turin, Italy, 1–3 October 2018; pp. 80–89. [Google Scholar]

- Guidotti, R.; Monreale, A.; Ruggieri, S.; Turini, F.; Giannotti, F.; Pedreschi, D. A survey of methods for explaining black box models. ACM Comput. Surv. 2018, 51, 5. [Google Scholar] [CrossRef]

- Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 2019, 1, 206–215. [Google Scholar] [CrossRef] [PubMed]

- Häkkinen, T.; Belloni, K. Barriers and drivers for sustainable building. Build. Res. Inf. 2011, 39, 239–255. [Google Scholar] [CrossRef]

- USGBC. LEED 2009 for New Construction and Major Renovations Rating System (Updated October 2010). 2010. Available online: https://www.usgbc.org/resources/leed-new-construction-v2009-current-version (accessed on 15 February 2025).

- USGBC. MR Credit 6: Rapidly Renewable Materials. 2009. Available online: https://s3.amazonaws.com/ao-leedonline-prd/documents/usgbc/leed/content/CreditFormsDownload/nc/mr/mrc6/mrc6_sta.pdf (accessed on 15 February 2025).

| Performance Category (Overall Assessment Framework) | Attainable Points | Credit Related to CDW | Credit Name | Attainable Points |
|---|---|---|---|---|
| Sustainable sites (SS) | 26 | MRp1 | Storage and collection of recyclables | Prerequisite |
| Water efficiency (WE) | 10 | MRc1.1 | Building reuse: maintain existing walls, floors, and roof | 3 |
| Energy & atmosphere (EA) | 35 | MRc1.2 | Building reuse: maintain existing interior non-structural elements | 1 |
| Materials & resources (MR) | 14 | MRc2 | Construction waste management | 2 |
| Indoor environmental quality (IEQ) | 15 | MRc3 | Materials reuse | 2 |
| Innovation (IN) | 6 | MRc4 | Recycled content | 2 |
| Regional priority (PRC) | 4 | MRc6 | Rapidly renewable materials | 1 |
| Total | 110 | | | 11 |
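The point totals in the table can be checked directly: the seven categories sum to 110 points, and the CDW-related credits (MRp1 is a prerequisite and carries no points) account for 11 of the 14 MR points, or 10% of the 110 available. The sketch below encodes those totals and adds the LEED 2009 certification thresholds (Certified 40–49, Silver 50–59, Gold 60–79, Platinum 80 points or more [99]), which define the certification levels used as a feature in the next table. The dictionary and function names are illustrative only.

```python
# Maximum points per LEED 2009 NC category, as listed in the table above.
CATEGORY_POINTS = {"SS": 26, "WE": 10, "EA": 35, "MR": 14, "IEQ": 15, "IN": 6, "PRC": 4}

# CDW-related MR credits; MRp1 is a prerequisite and therefore worth 0 points.
CDW_CREDIT_POINTS = {
    "MRp1": 0, "MRc1.1": 3, "MRc1.2": 1, "MRc2": 2, "MRc3": 2, "MRc4": 2, "MRc6": 1,
}

# LEED 2009 certification thresholds (minimum total points), highest first.
THRESHOLDS = [(80, "Platinum"), (60, "Gold"), (50, "Silver"), (40, "Certified")]


def certification_level(points: int) -> str:
    """Return the LEED 2009 certification level for a project's total points."""
    for minimum, level in THRESHOLDS:
        if points >= minimum:
            return level
    return "Not certified"


if __name__ == "__main__":
    total = sum(CATEGORY_POINTS.values())
    cdw = sum(CDW_CREDIT_POINTS.values())
    print(total, cdw)  # 110 11
    print(f"{cdw / CATEGORY_POINTS['MR']:.1%} of MR, {cdw / total:.1%} of total")
    # 78.6% of MR, 10.0% of total
    print(certification_level(59), certification_level(60))  # Silver Gold
```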

| Category/Factor | Feature | Feature Type | Feature Encoding | Sources |
|---|---|---|---|---|
| Certification level | Certified | Categorical | Label encoding (0/1/2/3) | [53] |
| | Silver | | | |
| | Gold | | | |
| | Platinum | | | |
| Building type | Residential buildings | Categorical | One-hot encoding [1, 0, 0], [0, 1, 0], [0, 0, 1] | Adapted from [12,53,59] |
| | Science, education, culture, and health buildings (SECH) | | | |
| | Commercial, industrial, and office buildings (CIO) | | | |
| Architectural firm | Whether the architectural design firm ranks among the top 100 (WE100) | Categorical | Binary encoding (0/1) | [65] |
| Economic factor | GDP per capita | Numerical | Continuous feature | [66] |
| Sustainability factor | GER (Green Efficiency Ratio) | Numerical | Continuous feature | Derived based on [53] |
| Climate factor | Average temperature | Numerical | Continuous feature | [67] |
| | Summer temperature | | | |
| | Winter temperature | | | |
| | Temperature difference | | | |
| | Annual precipitation | | | |
| | Annual sunshine time | | | |
| | Average wind speed | | | |
| LEED credit categories | Sustainable sites | Numerical | Continuous feature | [53] |
| | Water efficiency | | | |
| | Energy and atmosphere | | | |
| | Material resources | | | |
| | Indoor environmental quality | | | |
| | Innovation | | | |
| | Regional priority credits | | | |
| Project area | Gross floor area | Numerical | Continuous feature | [53] |
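
The three encodings in the table can be illustrated on a single project record. The following Python sketch is a minimal illustration, not the authors' preprocessing code: the function and feature names are hypothetical, and it assumes that the label codes follow the row order (Certified = 0 to Platinum = 3) and that the one-hot columns follow the order Residential, SECH, CIO.

```python
# Ordinal order for certification level (assumed: Certified=0 ... Platinum=3).
CERT_LEVELS = ["Certified", "Silver", "Gold", "Platinum"]
# One-hot column order for building type (assumed to follow the table rows).
BUILDING_TYPES = ["Residential", "SECH", "CIO"]


def encode_project(cert: str, building_type: str, top100_firm: bool,
                   numeric: dict[str, float]) -> list[float]:
    """Encode one project as a flat feature vector (hypothetical helper).

    Categorical features follow the encodings in the table; numerical
    features are passed through unchanged, ordered by name so that every
    project yields the same column order.
    """
    if building_type not in BUILDING_TYPES:
        raise ValueError(f"unknown building type: {building_type}")
    cert_label = CERT_LEVELS.index(cert)  # label encoding; raises ValueError if unknown
    one_hot = [1.0 if building_type == t else 0.0 for t in BUILDING_TYPES]
    firm = 1.0 if top100_firm else 0.0   # binary encoding
    continuous = [float(numeric[name]) for name in sorted(numeric)]
    return [float(cert_label), *one_hot, firm, *continuous]


# Hypothetical project; the numeric values are made up for illustration.
vec = encode_project("Gold", "SECH", False,
                     {"gdp_per_capita": 12000.0, "gross_floor_area": 5400.0})
print(vec)  # [2.0, 0.0, 1.0, 0.0, 0.0, 12000.0, 5400.0]
```

Label encoding keeps the natural ranking of certification levels in a single column, whereas one-hot encoding avoids imposing an artificial order on building types.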

| Abbreviation | Model | Hyperparameter Search Range |
|---|---|---|
| Ridge | Ridge Classifier | alpha 0.01–10.0 (log-uniform) |
| LogReg | Logistic Regression | C 0.01–10.0 (log-uniform) |
| DT | Decision Tree | max_depth 3–10 |
| ET | Extra Trees | n_estimators 50–200, max_depth 3–10 |
| k-NN | K-Nearest Neighbours | n_neighbors 3–15 |
| NB | Gaussian Naive Bayes | var_smoothing 1 × 10⁻⁹ to 1 × 10⁻⁵ (log-uniform) |
| RF | Random Forest | n_estimators 50–200, max_depth 3–10 |
| SVM | Support Vector Machine | C 0.01–10.0 (log-uniform), gamma 0.001–1.0 (log-uniform) |
| GBM | Gradient Boosting Machine | n_estimators 50–200, learning_rate 0.01–0.5 (log-uniform), max_depth 3–7 |
| XGBoost | Extreme Gradient Boosting | n_estimators 50–200, learning_rate 0.01–0.5 (log-uniform), max_depth 3–7 |
| LightGBM | Light Gradient Boosting Machine | n_estimators 50–200, learning_rate 0.01–0.5 (log-uniform), max_depth 3–7 |
| CatBoost | Categorical Boosting | iterations 50–500, learning_rate 0.01–1.0 (log-uniform), depth 3–7, l2_leaf_reg 1–5 (log-uniform), bagging_temperature 0.5–1.5 |
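
Several ranges in the table are marked log-uniform. Under such a prior, every decade is equally likely: a draw from 0.01–10.0 falls in 0.01–0.1 as often as in 1–10, which suits scale parameters such as alpha, C, and learning_rate. The table does not state which procedure drew candidates from these ranges, so the Python sketch below simply assumes plain random sampling for the XGBoost row. The function names are hypothetical, and fitting and cross-validating each candidate is omitted.

```python
import math
import random


def log_uniform(rng: random.Random, low: float, high: float) -> float:
    """Sample x such that log(x) is uniform on [log(low), log(high)]."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))


def sample_xgboost_params(rng: random.Random) -> dict:
    """One random draw from the XGBoost search space listed in the table."""
    return {
        "n_estimators": rng.randint(50, 200),          # integer, both ends inclusive
        "learning_rate": log_uniform(rng, 0.01, 0.5),  # log-uniform
        "max_depth": rng.randint(3, 7),                # integer, both ends inclusive
    }


rng = random.Random(42)  # fixed seed so the candidate list is reproducible
candidates = [sample_xgboost_params(rng) for _ in range(5)]
for params in candidates:
    print(params)
```

Each candidate would then be scored, for instance by cross-validated F1, and the best one retained. A dedicated tuning library could replace this loop without changing the search ranges.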

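| Metric | Formula | Description |
|---|---|---|
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ | The model's overall predictive performance: the proportion of all instances classified correctly |
| Precision | $\frac{TP}{TP + FP}$ | The proportion of predicted positive instances that are actually positive |
| Recall | $\frac{TP}{TP + FN}$ | The proportion of actual positive instances correctly identified |
| F1 Score | $\frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$ | The harmonic mean of precision and recall; useful for imbalanced classes |
| AUC | $\int_{0}^{1} \mathrm{TPR} \, d(\mathrm{FPR})$ | Area under the ROC curve; measures the model's ability to distinguish positive and negative classes |
| Brier Score | $BS = \frac{1}{N} \sum_{i=1}^{N} (p_i - o_i)^2$ | The mean squared difference between predicted probabilities $p_i$ and observed outcomes $o_i \in \{0, 1\}$; lower values indicate more accurate and reliable probability estimates |

TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives; TPR and FPR are the true and false positive rates; $N$ is the number of instances.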
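
The formulas in the table can be computed directly from binary labels and predicted probabilities. The Python sketch below is for illustration only; a real pipeline would more likely call a library such as scikit-learn, and the function names here are hypothetical. Two entries in the results table follow from these definitions. Since F1 = 2TP/(2TP + FP + FN), an F1 of 0.000 (Ridge on MRc1.1 and MRc6 without SMOTE) means the model produced no true positives on the test set. The Brier score also requires predicted probabilities, so a classifier that returns only decision scores has none. This is consistent with the NaN entries for Ridge: scikit-learn's `RidgeClassifier`, for example, provides `decision_function` but not `predict_proba`.

```python
def confusion_counts(y_true: list[int], y_pred: list[int]) -> tuple[int, int, int, int]:
    """Return (TP, TN, FP, FN) for binary labels coded 0/1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def classification_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0  # 0 when nothing is predicted positive
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def brier_score(y_true: list[int], y_prob: list[float]) -> float:
    """Mean squared difference between predicted probability and observed outcome."""
    return sum((p - t) ** 2 for t, p in zip(y_true, y_prob)) / len(y_true)


def roc_auc(y_true: list[int], y_prob: list[float]) -> float:
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count 1/2."""
    pos = [p for t, p in zip(y_true, y_prob) if t == 1]
    neg = [p for t, p in zip(y_true, y_prob) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC is undefined unless both classes are present")
    score = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return score / (len(pos) * len(neg))


# Toy example (made-up labels and probabilities)
y_true = [1, 0, 1, 1, 0, 0]
y_prob = [0.9, 0.2, 0.4, 0.8, 0.6, 0.1]
y_pred = [1 if p >= 0.5 else 0 for p in y_prob]  # 0.5 decision threshold
print(classification_metrics(y_true, y_pred))
print(round(brier_score(y_true, y_prob), 4), round(roc_auc(y_true, y_prob), 4))  # 0.1367 0.8889
```

The pairwise AUC above equals the area under the ROC curve. It is quadratic in the number of samples, which is acceptable for a toy example but not for large test sets.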

| Model | Metric | MRc1.1 without SMOTE | MRc1.1 with SMOTE | MRc2 without SMOTE | MRc2 with SMOTE | MRc4 without SMOTE | MRc4 with SMOTE | MRc6 without SMOTE | MRc6 with SMOTE |
|---|---|---|---|---|---|---|---|---|---|
| Ridge | F1 score | 0.000 | 0.522 | 0.857 | 0.897 | 0.796 | 0.755 | 0.000 | 0.250 |
| | Brier score | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| LogReg | F1 score | 0.609 | 0.609 | 0.898 | 0.904 | 0.846 | 0.853 | 0.333 | 0.333 |
| | Brier score | 0.088 | 0.114 | 0.137 | 0.133 | 0.165 | 0.179 | 0.057 | 0.154 |
| DT | F1 score | 0.375 | 0.222 | 0.891 | 0.917 | 0.847 | 0.862 | 0.133 | 0.615 |
| | Brier score | 0.103 | 0.181 | 0.106 | 0.127 | 0.169 | 0.164 | 0.159 | 0.145 |
| ET | F1 score | 0.500 | 0.560 | 0.914 | 0.895 | 0.859 | 0.850 | 0.500 | 0.545 |
| | Brier score | 0.082 | 0.143 | 0.139 | 0.164 | 0.171 | 0.171 | 0.045 | 0.139 |
| k-NN | F1 score | 0.308 | 0.400 | 0.815 | 0.836 | 0.831 | 0.829 | 0.333 | 0.250 |
| | Brier score | 0.103 | 0.165 | 0.201 | 0.213 | 0.199 | 0.232 | 0.066 | 0.139 |
| NB | F1 score | 0.462 | 0.533 | 0.832 | 0.839 | 0.829 | 0.841 | 0.375 | 0.400 |
| | Brier score | 0.115 | 0.292 | 0.195 | 0.199 | 0.290 | 0.298 | 0.477 | 0.476 |
| RF | F1 score | 0.500 | 0.545 | 0.935 | 0.944 | 0.862 | 0.871 | 0.364 | 0.526 |
| | Brier score | 0.084 | 0.116 | 0.110 | 0.115 | 0.156 | 0.146 | 0.050 | 0.088 |
| SVM | F1 score | 0.444 | 0.545 | 0.903 | 0.895 | 0.857 | 0.846 | 0.421 | 0.571 |
| | Brier score | 0.091 | 0.115 | 0.129 | 0.119 | 0.163 | 0.174 | 0.045 | 0.087 |
| GBM | F1 score | 0.452 | 0.571 | 0.929 | 0.885 | 0.871 | 0.869 | 0.444 | 0.615 |
| | Brier score | 0.110 | 0.152 | 0.095 | 0.123 | 0.150 | 0.142 | 0.068 | 0.050 |
| XGBoost | F1 score | 0.438 | 0.533 | 0.925 | 0.927 | 0.867 | 0.866 | 0.381 | 0.533 |
| | Brier score | 0.096 | 0.107 | 0.105 | 0.106 | 0.147 | 0.145 | 0.054 | 0.075 |
| LightGBM | F1 score | 0.571 | 0.522 | 0.917 | 0.923 | 0.857 | 0.867 | 0.571 | 0.500 |
| | Brier score | 0.090 | 0.117 | 0.106 | 0.101 | 0.149 | 0.140 | 0.037 | 0.105 |
| CatBoost | F1 score | 0.526 | 0.615 | 0.926 | 0.944 | 0.871 | 0.878 | 0.571 | 0.667 |
| | Brier score | 0.093 | 0.125 | 0.099 | 0.088 | 0.168 | 0.136 | 0.035 | 0.050 |
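
The results table compares each model with and without SMOTE (Synthetic Minority Over-sampling Technique). SMOTE balances the classes by synthesising new minority-class samples rather than duplicating existing ones. This section does not show how SMOTE was configured, so the Python sketch below illustrates only the core interpolation step, using a hypothetical `smote` function. In practice a library implementation such as imbalanced-learn's `SMOTE` would normally be used, with oversampling applied to the training data only so that test scores are not inflated.

```python
import math
import random


def smote(minority: list[list[float]], n_new: int, k: int = 5,
          seed: int = 0) -> list[list[float]]:
    """Create n_new synthetic minority-class samples by SMOTE-style interpolation."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)  # cannot use more neighbours than exist
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # k nearest minority-class neighbours of the base sample (Euclidean),
        # excluding the base sample itself
        others = [m for j, m in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda m: math.dist(base, m))[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # random position on the segment base -> neighbour
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


# Toy minority class lying on the line y = 2x (made-up points)
minority = [[float(x), 2.0 * x] for x in range(6)]
new_points = smote(minority, n_new=4, k=2, seed=3)
for p in new_points:
    print([round(v, 3) for v in p])
```

Every synthetic point lies on a segment between two minority samples, so the toy points stay on the line y = 2x. For a class with very few positives, such as MRc6, the new samples can only fill the space spanned by those few projects.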

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sönmez, N.; Kuruoğlu, M.; Maçka Kalfa, S.; Tokdemir, O.B. Explainable AI-Driven Analysis of Construction and Demolition Waste Credit Selection in LEED Projects. Architecture 2025, 5, 123. https://doi.org/10.3390/architecture5040123