Journal Description

Econometrics is an international, peer-reviewed, open access journal on econometric modeling and forecasting, as well as new advances in econometrics theory, and is published quarterly online by MDPI.

- Open Access: free for readers, with article processing charges (APC) paid by authors or their institutions.

- High Visibility: indexed within Scopus, ESCI (Web of Science), EconLit, EconBiz, RePEc, and other databases.

- Rapid Publication: manuscripts are peer-reviewed and a first decision is provided to authors approximately 35.4 days after submission; acceptance to publication is undertaken in 6.5 days (median values for papers published in this journal in the second half of 2025).

- Recognition of Reviewers: reviewers who provide timely, thorough peer-review reports receive vouchers entitling them to a discount on the APC of their next publication in any MDPI journal, in appreciation of the work done.

Impact Factor: 1.4 (2024); 5-Year Impact Factor: 1.2 (2024)

Latest Articles

Using Subspace Algorithms for the Estimation of Linear State Space Models for Over-Differenced Processes

Econometrics 2026, 14(1), 12; https://doi.org/10.3390/econometrics14010012 (registering DOI) - 28 Feb 2026

Abstract

►

Show Figures

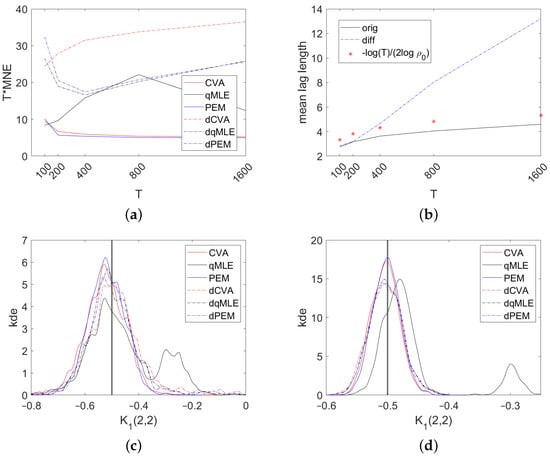

Subspace algorithms like canonical variate analysis (CVA) are regression-based methods for the estimation of linear dynamic state space models. They have been shown to deliver accurate (consistent and asymptotically equivalent to quasi-maximum likelihood estimation using the Gaussian likelihood) estimators for stably invertible stationary

[...] Read more.

Subspace algorithms like canonical variate analysis (CVA) are regression-based methods for the estimation of linear dynamic state space models. They have been shown to deliver accurate (consistent and asymptotically equivalent to quasi-maximum likelihood estimation using the Gaussian likelihood) estimators for stably invertible stationary autoregressive moving average (ARMA) processes. These results use the assumption that there are no zeros of the spectral density on the unit circle corresponding to the state space system. In this technical study, we consider vector processes made stationary by applying differencing to all variables, ignoring potential co-integrating relations. This leads to spectral zeros violating the above-mentioned assumptions. We show consistency for the CVA estimators, closing a gap in the literature. However, a simulation exercise shows that over-differencing (while leading to consistent estimation of the transfer function) also complicates inference for CVA estimators, not just maximum likelihood-based estimators. This is also demonstrated in a real-world data example. The result also applies to seasonal differencing. The present paper hence suggests working with original data, not working in differences.
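The spectral-zero issue can be illustrated with a scalar toy case. The sketch below is an editorial illustration, not the paper's CVA setup: it assumes white noise that is differenced once, which gives an MA(1) process with coefficient -1, and the function name `ma1_spectral_density` is hypothetical.

```python
import cmath
import math


def ma1_spectral_density(theta: float, omega: float, sigma2: float = 1.0) -> float:
    """Spectral density of x_t = e_t + theta * e_{t-1} at frequency omega."""
    transfer = 1.0 + theta * cmath.exp(-1j * omega)
    return sigma2 / (2.0 * math.pi) * abs(transfer) ** 2


# Differencing white noise gives x_t = e_t - e_{t-1}, i.e. theta = -1,
# so the density vanishes at frequency zero.
over_differenced_at_zero = ma1_spectral_density(-1.0, 0.0)

# An invertible MA(1) (|theta| < 1) keeps the density strictly positive.
invertible_at_zero = ma1_spectral_density(-0.5, 0.0)

print(over_differenced_at_zero, invertible_at_zero)
```

The zero at frequency zero is the scalar analogue of the violated assumption described in the abstract; the vector case with ignored co-integrating relations is more involved.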

Open Access Article

Graph Attention Networks in Exchange Rate Forecasting

by

Joanna Landmesser-Rusek and Arkadiusz Orłowski

Econometrics 2026, 14(1), 11; https://doi.org/10.3390/econometrics14010011 - 25 Feb 2026

Abstract

Exchange rate forecasting is an important issue in financial market analysis. Currency rates form a dynamic network of connections that can be efficiently modeled using graph neural networks (GNNs). The key mechanism of GNNs is the message passing between nodes, allowing for better modeling of currency interactions. Each node updates its representation by aggregating features from its neighbors and combining them with its own. In graph convolutional networks (GCNs), all neighboring nodes are treated equally, but in reality, some may have a greater influence than others. To account for this changing importance of neighbors, graph attention networks (GAT) have been introduced. The aim of the study was to evaluate the effectiveness of GAT in forecasting exchange rates. The analysis covered time series of major world currencies from 2020 to 2024. The forecasting results obtained using GAT were compared with those obtained from benchmark models such as ARIMA, GARCH, MLP, GCN, and LSTM-GCN. The study showed that GAT networks outperform numerous methods. The results may have practical applications, supporting investors and analysts in decision-making.
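The attention-weighted aggregation described in this abstract can be sketched as a single-head update in plain Python. This is an editorial illustration of the generic graph attention mechanism, not the authors' model: the function `gat_layer`, the currency labels, and all numbers are made up, and the output nonlinearity that usually follows the aggregation is omitted.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def gat_layer(features, neighbors, weight, attn):
    """Single-head graph attention update.

    features:  dict node -> list of floats (input dimension d)
    neighbors: dict node -> list of neighbor nodes (include the node itself for a self-loop)
    weight:    projection matrix as a list of rows (d_out x d)
    attn:      attention vector of length 2 * d_out
    """
    # Linear projection W h_j for every node.
    projected = {
        node: [sum(w * x for w, x in zip(row, vec)) for row in weight]
        for node, vec in features.items()
    }
    updated, attention = {}, {}
    for i, nbrs in neighbors.items():
        # Unnormalized score: LeakyReLU(a^T [W h_i || W h_j]).
        scores = [
            leaky_relu(sum(a * z for a, z in zip(attn, projected[i] + projected[j])))
            for j in nbrs
        ]
        # Softmax over the neighborhood (shifted by the max for numerical stability).
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        alphas = [e / total for e in exps]
        attention[i] = dict(zip(nbrs, alphas))
        # Attention-weighted sum of the projected neighbor features.
        updated[i] = [
            sum(alpha * projected[j][k] for alpha, j in zip(alphas, nbrs))
            for k in range(len(projected[i]))
        ]
    return updated, attention


# Toy inputs (hypothetical values, for illustration only).
features = {"EUR": [0.2, -0.1], "USD": [0.0, 0.3], "JPY": [-0.4, 0.1]}
neighbors = {"EUR": ["EUR", "USD", "JPY"], "USD": ["USD", "EUR"], "JPY": ["JPY", "EUR"]}
weight = [[1.0, 0.0], [0.0, 1.0]]
attn = [0.5, -0.5, 1.0, 1.0]
updated, attention = gat_layer(features, neighbors, weight, attn)
```

With an all-zero attention vector every neighbor receives the same weight, which corresponds to the equal treatment of neighbors that the abstract contrasts with attention.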

Open Access Article

Application of Resolution Regression and Resolution Graphs in Evaluating Probability Forecasts Generated Using Binary Choice Models

by

Senarath Dharmasena, David A. Bessler and Oral Capps, Jr.

Econometrics 2026, 14(1), 10; https://doi.org/10.3390/econometrics14010010 - 24 Feb 2026

Abstract

►▼

Show Figures

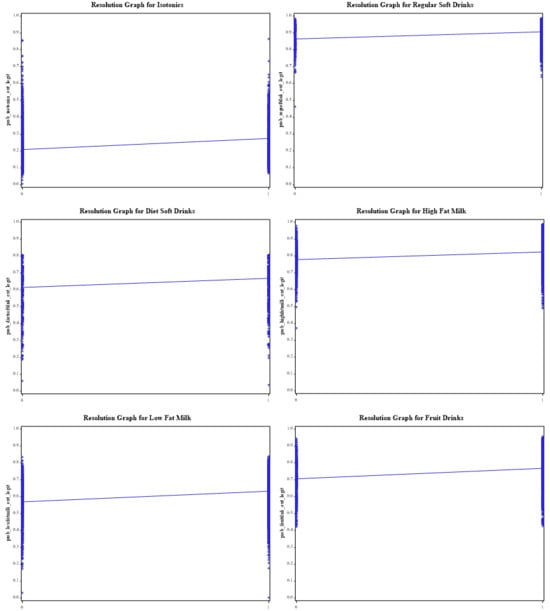

Binary choice models are widely used in econometric modeling when the dependent variable corresponds to discrete outcomes. With appropriate decision rules, these models provide predictions of binary choices generated from predicted probabilities. The accuracy of these predictions in terms of classifying probabilities to

[...] Read more.

Binary choice models are widely used in econometric modeling when the dependent variable corresponds to discrete outcomes. With appropriate decision rules, these models provide predictions of binary choices generated from predicted probabilities. The accuracy of these predictions in terms of classifying probabilities to events that occurred versus those that did not is a key issue. Expectation-prediction success is currently the standard method used to assess the accuracy of these predictions. However, this method is limited in its ability to correctly classify probabilities in the absence of appropriate predetermined cut-off levels. We propose alternative methods to classify probabilities generated through binary choice models, namely resolution graphs and resolution regressions that measure the ability to sort predicted probabilities against observed outcomes. Using probabilities generated from logit models applied to purchasing decisions of various non-alcoholic beverages made by U.S. households, we compare probability sorting power using expectation-prediction success as well as resolution graphs and resolution regressions. Based on expectation-prediction success, the logit models performed better at classifying outcomes related to purchasing isotonic drinks, regular soft drinks, diet drinks, bottled water, and tea. Based on resolution regressions, the null hypothesis of perfect sorting of probabilities was rejected for all non-alcoholic beverages. Although the logit models generated upward-sloping resolution graphs as expected, they were relatively flat compared to the 45-degree perfect sorting line. Going forward, we recommend using resolution regression and resolution graphs to capture sorting of probabilities in addition to the conventional metrics used in ascertaining the ability of binary choice models to predict out-of-sample behavior.

Open Access Article

Econometric Analysis and Forecasts on Exports of Emerging Economies from Central and Eastern Europe

by

Liviu Popescu, Mirela Găman, Laurențiu Stelian Mihai, Cristian Ovidiu Drăgan, Daniel Militaru and Ion Buligiu

Econometrics 2026, 14(1), 9; https://doi.org/10.3390/econometrics14010009 - 14 Feb 2026

Abstract

This study examines the evolution, heterogeneity, and short-term prospects of export performance in seven Central and Eastern European (CEE) economies—Croatia, Czech Republic, Hungary, Poland, Romania, Bulgaria, and Slovakia—over the period 1995–2024. Using annual World Bank data, exports are modeled as a share of GDP to ensure cross-country comparability and to capture differences in trade dependence. The analysis combines descriptive and inferential statistics with Augmented Dickey–Fuller tests, non-parametric comparisons, Granger causality analysis, and country-specific ARIMA models to investigate export dynamics, the role of foreign direct investment (FDI), and future export trajectories. The results reveal a common long-term upward trend in export intensity across all countries, driven by European integration and structural transformation, but with pronounced cross-country differences in export dependence and volatility. Highly open economies such as Slovakia, Hungary, and the Czech Republic exhibit strong export performance alongside greater exposure to external shocks, while larger domestic markets such as Poland and Romania display lower export intensity and greater stabilization. Granger causality tests indicate that FDI contributes to export growth in several economies, often with multi-year lags, highlighting the importance of absorptive capacity and institutional quality in translating investment inflows into export competitiveness. ARIMA-based forecasts for 2025–2027 suggest continued export expansion and relative stabilization despite recent global disruptions. This study’s primary contribution lies in integrating comparative export analysis, causality testing, and short-term forecasting within a unified econometric framework, offering policy-relevant insights into export-led growth and economic convergence in post-transition European economies.

Open Access Article

Posterior Probabilities of Dominance for Wealth Distributions

by

William Griffiths and Duangkamon Chotikapanich

Econometrics 2026, 14(1), 8; https://doi.org/10.3390/econometrics14010008 - 12 Feb 2026

Abstract

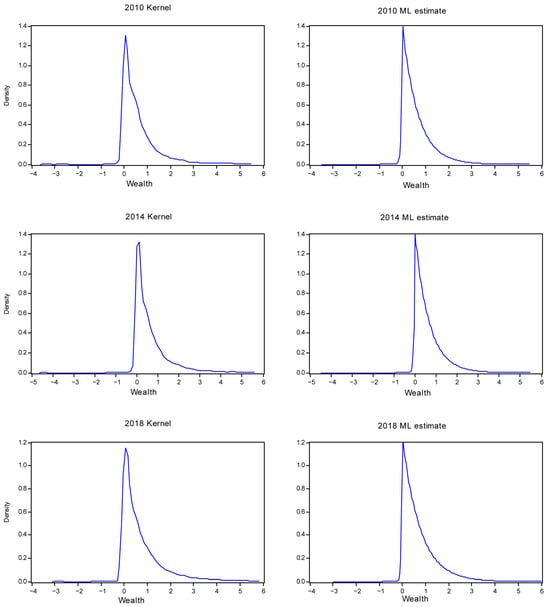

Probability distributions, which are typically used to describe income distributions, are not suitable to describe a population’s distribution of wealth because of the existence of negative observations and a large concentration of values close to zero. To overcome these problems, we describe how

[...] Read more.

Probability distributions that are typically used to describe income distributions are not suitable for describing a population’s distribution of wealth because of the existence of negative observations and a large concentration of values close to zero. To overcome these problems, we describe how the asymmetric Laplace distribution can be used for modelling wealth distributions and illustrate how it can be used to compute the posterior probabilities of first- and second-order stochastic dominance. Stochastic dominance concepts are useful for comparing wealth distributions and assessing whether welfare in society has increased or decreased. We use three distributions to make two such comparisons. The results are such that, in one comparison, one distribution clearly dominates the other. There is more uncertainty about dominance in the other comparison, with no dominance being the most likely outcome.
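A posterior probability of first-order dominance can be approximated as the share of posterior draws in which one CDF lies on or below the other over a grid. The sketch below is an editorial illustration under simplifying assumptions: it uses a symmetric Laplace CDF as a stand-in for the asymmetric Laplace distribution, checks weak dominance on a finite grid, and all function names and parameter draws are hypothetical.

```python
import math


def first_order_dominates(cdf_a, cdf_b, grid, tol=1e-12):
    """True if A weakly first-order dominates B on the grid: F_A(x) <= F_B(x) everywhere."""
    return all(cdf_a(x) <= cdf_b(x) + tol for x in grid)


def posterior_prob_dominance(draws_a, draws_b, make_cdf, grid):
    """Share of paired posterior draws in which A dominates B."""
    hits = sum(
        first_order_dominates(make_cdf(pa), make_cdf(pb), grid)
        for pa, pb in zip(draws_a, draws_b)
    )
    return hits / len(draws_a)


def laplace_cdf(params):
    """Symmetric Laplace CDF for (location, scale); a simplified stand-in."""
    loc, scale = params

    def cdf(x):
        z = (x - loc) / scale
        return 0.5 * math.exp(z) if z < 0 else 1.0 - 0.5 * math.exp(-z)

    return cdf


# Hypothetical posterior draws of (location, scale) for two wealth distributions.
draws_a = [(1.0, 1.0), (0.5, 1.0), (-0.2, 1.0)]
draws_b = [(0.0, 1.0), (0.0, 1.0), (0.0, 1.0)]
grid = [x / 10 for x in range(-50, 51)]

prob_a_dominates_b = posterior_prob_dominance(draws_a, draws_b, laplace_cdf, grid)
print(prob_a_dominates_b)
```

Because the support includes negative values, the same check works for wealth data with negative observations. Second-order dominance would compare integrated CDFs instead.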

(This article belongs to the Special Issue Innovations in Bayesian Econometrics: Theory, Techniques, and Economic Analysis)

Open Access Article

Social Security Transfers and Fiscal Sustainability in Turkey: Evidence from 1984–2024

by

Huriye Gonca Diler, Nurgül E. Barın, Ercan Özen and Simon Grima

Econometrics 2026, 14(1), 7; https://doi.org/10.3390/econometrics14010007 - 31 Jan 2026

Abstract

Social security systems constitute a structurally significant component of public finance in developing economies and often generate persistent fiscal pressures through budgetary transfers. Demographic transformation, widespread informality in labor markets, and weaknesses in contribution-based financing increase the dependence of social security systems on public resources. The objective of this study is to examine whether budget transfers to the social security system affect fiscal sustainability in Turkey by analyzing their relationship with the budget deficit and the public sector borrowing requirement. The analysis employs annual data for Turkey covering the period of 1984–2024. A comprehensive time-series econometric framework is adopted, incorporating conventional and structural-break unit root tests, the ARDL bounds testing approach with error correction modeling, and the Toda–Yamamoto causality method. The empirical findings provide evidence of a stable long-run relationship among the variables. The results indicate that social security budget transfers exert a statistically significant and persistent effect on the public sector borrowing requirement, while no direct long-run effect on the headline budget deficit is detected. Causality results further confirm that fiscal pressures associated with social security financing materialize primarily through borrowing dynamics rather than short-term budgetary imbalances. By explicitly modelling social security budget transfers as an independent fiscal channel over a long historical horizon, this study contributes to the literature by offering new empirical insights into the fiscal sustainability implications of social security financing in Turkey. The findings also provide policy-relevant evidence for developing economies facing similar institutional, demographic, and fiscal challenges.

Open Access Article

Binance USD Delisting and Stablecoins Repercussions: A Local Projections Approach

by

Papa Ousseynou Diop and Julien Chevallier

Econometrics 2026, 14(1), 6; https://doi.org/10.3390/econometrics14010006 - 16 Jan 2026

Abstract

The delisting of Binance USD (BUSD) constitutes a major regulatory intervention in the stablecoin market and provides a unique opportunity to examine how targeted regulation affects liquidity allocation, market concentration, and short-run systemic risk in crypto-asset markets. Using daily data for 2023 and a linear and nonlinear Local Projections event-study framework, this paper analyzes the dynamic market responses to the BUSD delisting across major stablecoins and cryptocurrencies. The results show that liquidity displaced from BUSD is reallocated primarily toward USDT and USDC, leading to a measurable increase in stablecoin market concentration, while decentralized and algorithmic stablecoins absorb only a limited share of the shock. At the same time, Bitcoin and Ethereum experience temporary liquidity contractions followed by a relatively rapid recovery, suggesting conditional resilience of core crypto-assets. Overall, the findings document how a regulatory-induced exit of a major stablecoin reshapes short-run market dynamics and concentration patterns, highlighting potential trade-offs between regulatory enforcement and market structure. The paper contributes to the literature by providing the first empirical analysis of the BUSD delisting and by illustrating the usefulness of Local Projections for studying regulatory shocks in cryptocurrency markets.

Open Access Article

Shock Next Door: Geographic Spillovers in FinTech Lending After Natural Disasters

by

David Kuo Chuen Lee, Weibiao Xu, Jianzheng Shi, Yue Wang and Ding Ding

Econometrics 2026, 14(1), 5; https://doi.org/10.3390/econometrics14010005 - 15 Jan 2026

Abstract

We examine geographic spillovers in digital credit markets by studying how natural disasters affect borrowing behavior in adjacent, physically undamaged regions. Using granular loan-level data from Indonesia’s largest FinTech lender (2021–2023) and leveraging quasi-random variation in disaster timing and location, we estimate fixed-effects specifications that incorporate spatially lagged disaster exposure (an SLX-type spatial approach) to quantify spillovers. Disasters generate economically significant spillovers in neighboring provinces: a 1% increase in disaster frequency raises local borrowing by 0.036%, approximately 20% of the direct effect. Spillovers vary sharply with geographic connectivity: land-connected provinces experience effects about 6.6 times larger than sea-connected provinces. These results highlight that digital lending platforms can transmit geographically proximate risks beyond directly affected areas through channels that differ from traditional banking networks. The systematic nature of these spillovers suggests that disaster-response strategies may be more effective when they consider adjacent regions, and that platform risk management can be strengthened by integrating spatial disaster exposure and connectivity into credit monitoring and decision rules.
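In an SLX-type specification, the spillover regressor is the spatial lag of the exposure variable, i.e. a weighted average of neighbors' exposure. The sketch below shows only that construction and is an editorial illustration: the abstract does not state how the weight matrix is built, so the row-normalized 0/1 adjacency, the three provinces, and the disaster counts are all assumptions.

```python
def row_normalize(adjacency):
    """Scale each row of a 0/1 adjacency matrix to sum to one (isolated rows stay zero)."""
    normalized = []
    for row in adjacency:
        total = sum(row)
        normalized.append([v / total if total else 0.0 for v in row])
    return normalized


def spatial_lag(weights, exposure):
    """Return W x: the weighted average of neighbors' exposure for each region."""
    return [sum(w * x for w, x in zip(row, exposure)) for row in weights]


# Hypothetical map: province 0 borders provinces 1 and 2; 1 and 2 border only 0.
adjacency = [
    [0, 1, 1],
    [1, 0, 0],
    [1, 0, 0],
]
disasters = [4.0, 0.0, 2.0]  # made-up disaster counts per province

weights = row_normalize(adjacency)
neighbor_exposure = spatial_lag(weights, disasters)
print(neighbor_exposure)  # [1.0, 4.0, 4.0]
```

The lagged series would then enter a fixed-effects regression next to own-province exposure, so that its coefficient captures the spillover. Separate weight matrices for land and sea links would be one way to compare connectivity types.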

Open Access Article

A Theory-Based Formal-Econometric Interpretation of an Econometric Model

by

Bernt Petter Stigum

Econometrics 2026, 14(1), 4; https://doi.org/10.3390/econometrics14010004 - 6 Jan 2026

Abstract

The references of most of the observations that econometricians have are ill defined. To use such data in an empirical analysis, the econometrician in charge must find a way to give them economic meaning. In this paper, I have data and an econometric model, and I set out to show how economic theory can be used to interpret the variables and parameters of my econometric model. According to Ragnar Frisch, that is a difficult task. Economic theories reside in a Model World and the econometrician’s data reside in the Real World; the rational laws in the model world are fundamentally different from the empirical laws in the real world; and between the two worlds there is a gap that can never be bridged. To accomplish my task, I build a bridge between Frisch’s two worlds with applied formal-econometric arguments, invent a pertinent model-world economic theory, walk the bridge with the invented theory, and use it to give economic meaning to the variables and parameters of my econometric model. At the end I demonstrate that the invented theory and the bridge I use in my analysis are empirically relevant in the empirical context of my econometric model.

Open Access Article

Bayesian Panel Variable Selection Under Model Uncertainty for High-Dimensional Data

by

Pathairat Pastpipatkul and Htwe Ko

Econometrics 2026, 14(1), 3; https://doi.org/10.3390/econometrics14010003 - 4 Jan 2026

Abstract

Selecting the relevant covariates in high-dimensional panel data remains a central challenge in applied econometrics. Conventional fixed effects and random effects models are not designed for systematic variable selection under model uncertainty. In addition, many existing models such as LASSO in machine learning or Bayesian approaches like model averaging, Bayesian Additive Regression Trees, and Bayesian Variable Selection with Shrinking and Diffusing Priors have been primarily developed for time series analysis. This paper develops and applies Bayesian Panel Variable Selection (BPVS) models to simulation and empirical applications. These models are designed to assist researchers in identifying which input covariates matter most, while also determining whether their effects should be treated as fixed or random through Bayesian hierarchical modeling and posterior inference, which jointly accounts for variable importance ranking. Both the simulation studies and the empirical application to socioeconomic determinants of subjective well-being show that Bayesian panel models outperform classical models, especially in terms of convergence stability, predictive accuracy, and reliable variable selection. Classical panel models, in contrast, remain attractive for their computational efficiency and simplicity. The Hausman test is used as a robustness check. The study adds an econometric approach for dealing with model uncertainty in high-dimensional panel analysis and offers open-source R 4.5.1 code to support future applications.

Open Access Article

I(2) Cointegration in Macroeconometric Modelling: Tourism Price and Inflation Dynamics

by

Sergej Gričar, Štefan Bojnec and Bjørnar Karlsen Kivedal

Econometrics 2026, 14(1), 2; https://doi.org/10.3390/econometrics14010002 - 4 Jan 2026

Abstract

This study enhances macroeconometric modelling by utilising an I(2) cointegration framework to analyse the dynamic link between tourism prices and inflation in Slovenia and the Eurozone. Using monthly data from 2000 to 2017, we estimate cointegrated VAR models that capture long-run equilibria, short-run adjustments, and persistent deviations inherent in I(2) processes. The results reveal strong spillover effects from Slovenian tourism and input prices to Eurozone inflation and hospitality prices in the short run, while Eurozone-wide shocks dominate the long-run dynamics. By explicitly accounting for nonstationarity, structural breaks, and seasonal patterns, the I(2) model provides a more reliable framework than traditional I(1)-based approaches, which are often prone to misspecification when higher-order integration and persistent deviations are ignored. The findings contribute to macroeconometric theory by demonstrating the value of I(2) cointegration in modelling complex price systems and offer policy insights into inflation management and competitiveness in tourism-dependent economies.

(This article belongs to the Special Issue Advancements in Macroeconometric Modeling and Time Series Analysis)

Open Access Article

Complexity-Aware Vector-Valued Machine Learning of State-Level Bond Returns: Evidence on South African Trade Spillovers Under SALT and OBBBA

by

Gordon Dash, Nina Kajiji, Domenic Vonella and Helper Zhou

Econometrics 2026, 14(1), 1; https://doi.org/10.3390/econometrics14010001 - 23 Dec 2025

Abstract

This study examines the impact of international trade shocks from South Africa and recent U.S. federal tax reforms on state-level municipal bond returns within the United States. Employing a unique transaction-level dataset comprising more than 50 million municipal bond trades from 2020 to 2024, the empirical approach integrates machine learning estimators with econometric volatility models to examine daily nonlinear spillovers and structural complexity across twenty U.S. states. The study introduces and extends the application of a vector radial basis function neural network framework, leveraging its universal approximation capacity to jointly model multiple state-level outcomes and uncover complex response patterns. The empirical results reveal substantial cross-state heterogeneity in bond-return resilience, influenced by variation in state tax regimes, economic complexity, and differential exposure to external financial forces. States exhibiting higher economic adaptability demonstrate faster recovery and weaker shock amplification, whereas structurally rigid states experience persistent volatility and slower mean reversion. These findings demonstrate that complexity-aware predictive modeling, when combined with granular fiscal and trade-linkage data, provides valuable insight into the pathways through which global and domestic shocks propagate into U.S. municipal bond markets and shape subnational financial stability.

Open Access Article

Econometric and Python-Based Forecasting Tools for Global Market Price Prediction in the Context of Economic Security

by

Dmytro Zherlitsyn, Volodymyr Kravchenko, Oleksiy Mints, Oleh Kolodiziev, Olena Khadzhynova and Oleksandr Shchepka

Econometrics 2025, 13(4), 52; https://doi.org/10.3390/econometrics13040052 - 15 Dec 2025

Abstract

Debate persists over whether classical econometric or modern machine learning (ML) approaches provide superior forecasts for volatile monthly price series. Despite extensive research, no systematic cross-domain comparison exists to guide model selection across diverse asset types. In this study, we compare traditional econometric models with classical ML baselines and hybrid approaches across financial assets, futures, commodities, and market index domains. Universal Python-based forecasting tools include month-end preprocessing, automated ARIMA order selection, Fourier terms for seasonality, circular terms, and ML frameworks for forecasting and residual corrections. Performance is assessed via anchored rolling-origin backtests with expanding windows and a fixed 12-month horizon. MAPE comparisons show that ARIMA-based models provide stable, transparent benchmarks but often fail to capture the nonlinear structure of high-volatility series. ML tools can enhance accuracy in these cases, but they are susceptible to instability and overfitting on monthly histories. The most accurate and reliable forecasts come from models that combine ARIMA-based methods with Fourier transformation and a slight enhancement using machine learning residual correction. ARIMA-based approaches achieve about 30% lower forecast errors than pure ML (18.5% vs. 26.2% average MAPE and 11.6% vs. 16.8% median MAPE), with hybrid models offering only marginal gains (0.1 pp median improvement) at significantly higher computational cost. This work demonstrates the domain-specific nature of model performance, clarifying when hybridization is effective and providing reproducible Python pipelines suited for economic security applications.
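The evaluation scheme named in this abstract, an anchored rolling-origin backtest with expanding windows and a fixed 12-month horizon scored by MAPE, can be sketched as follows. This is an editorial illustration, not the authors' pipeline: the function names are hypothetical, and a naive last-value forecast stands in for the ARIMA, ML, and hybrid models.

```python
def mape(actual, forecast):
    """Mean absolute percentage error, in percent (actual values must be nonzero)."""
    return 100.0 * sum(abs((a - f) / a) for a, f in zip(actual, forecast)) / len(actual)


def naive_forecast(history, horizon):
    """Placeholder model: repeat the last observed value over the horizon."""
    return [history[-1]] * horizon


def rolling_origin_backtest(series, min_train, horizon=12, step=1, model=naive_forecast):
    """Anchored backtest: every training window starts at index 0 and expands."""
    scores = []
    origin = min_train
    while origin + horizon <= len(series):
        train = series[:origin]                   # expanding window
        test = series[origin:origin + horizon]    # fixed-length holdout
        scores.append(mape(test, model(train, horizon)))
        origin += step
    return scores


# Made-up monthly series: 36 observations with a linear trend.
prices = [100.0 + i for i in range(36)]
scores = rolling_origin_backtest(prices, min_train=12)
print(len(scores), sum(scores) / len(scores))
```

Any model with the same `(history, horizon)` signature can be passed as `model`. The reported figures are consistent with the "about 30%" summary: 1 - 18.5/26.2 is roughly 0.29 for average MAPE and 1 - 11.6/16.8 is roughly 0.31 for median MAPE.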

Open Access Article

Credit Rationing, Its Determinants and Non-Performing Loans: An Empirical Analysis of Credit Markets in Polish Banking Sector

by

Cenap Mengü Tunçay and Elżbieta Grzegorczyk-Akın

Econometrics 2025, 13(4), 51; https://doi.org/10.3390/econometrics13040051 - 8 Dec 2025

Cited by 1

Abstract

When the number of non-performing loans (NPLs) increases, lenders may raise interest rates to compensate for potential losses, and the amount of credit granted in the market may decrease, leading to credit rationing. Such actions can have significant consequences for the economy, entrepreneurs, and consumers, which makes this topic extremely important. Using an empirical VAR analysis, this study seeks to determine whether credit rationing by banks operating in the Polish banking sector is driven by risky loans (which are the main determinant of credit rationing and are represented by the ratio of NPLs to total loans). The results show that the credit rationing response of Polish banks is not statistically significant when the risk in the credit market rises due to non-performing loans. Therefore, the risky structure of the credit market due to NPLs may not be one of the determinants of credit rationing in the Polish banking sector. The low sensitivity of the Polish banking sector to the risky structure of the credit market may result from the relatively low share of loans in total assets compared to debt instruments. Furthermore, restrictive lending policies and the predominance of mortgage loans secured directly by real estate limit portfolio risk, which may reduce the need for a risk-sensitive lending strategy.

Open Access Article

Exploring Poverty and SDG Indicators in Italy: An Identity Spline Approach to Partial Least Squares Regression

by

Rosaria Lombardo, Jean-François Durand, Ida Camminatiello and Corrado Cuccurullo

Econometrics 2025, 13(4), 50; https://doi.org/10.3390/econometrics13040050 - 8 Dec 2025

Abstract

Poverty is a complex global issue, closely linked to economic and social inequalities. It encompasses not only a lack of financial resources but also disparities in access to education, healthcare, employment, and social participation. In alignment with the United Nations’ Sustainable Development Goals—specifically SDGs 3 (Good Health and Well-being), 4 (Quality Education), and 8 (Decent Work and Economic Growth)—this study investigates the relationship between poverty and a set of socioeconomic indicators across Italy’s 20 regions. To explore how poverty levels respond to different predictors, we apply an identity spline transformation to simulate controlled changes in the poverty indicator. The resulting scenarios are analyzed using partial least squares regression, enabling the identification of the most influential variables. The findings offer insights into regional disparities and contribute to evidence-based strategies aimed at reducing poverty and promoting inclusive, sustainable development.

Open Access Article

Choosing Right Bayesian Tools: A Comparative Study of Modern Bayesian Methods in Spatial Econometric Models

by

Yuheng Ling and Julie Le Gallo

Econometrics 2025, 13(4), 49; https://doi.org/10.3390/econometrics13040049 - 4 Dec 2025

Abstract

We compare three modern Bayesian approaches, Hamiltonian Monte Carlo (HMC), Variational Bayes (VB), and Integrated Nested Laplace Approximation (INLA), for two classic spatial econometric specifications: the spatial lag model and spatial error model. Our Monte Carlo experiments span a range of sample sizes and spatial neighborhood structures to assess accuracy and computational efficiency. Overall, posterior means exhibit minimal bias for most parameters, with precision improving as sample size grows. VB and INLA deliver substantial computational gains over HMC, with VB typically fastest at small and moderate samples and INLA showing excellent scalability at larger samples. However, INLA can be sensitive to dense spatial weight matrices, showing elevated bias and error dispersion for variance and some regression parameters. Two empirical illustrations underscore these findings: a municipal expenditure reaction function for Île-de-France and a hedonic price for housing in Ames, Iowa. Our results yield actionable guidance. HMC remains a gold standard for accuracy when computation permits; VB is a strong, scalable default; and INLA is attractive for large samples provided the weight matrix is not overly dense. These insights help practitioners select Bayesian tools aligned with data size, spatial neighborhood structure, and time constraints.

(This article belongs to the Special Issue Innovations in Bayesian Econometrics: Theory, Techniques, and Economic Analysis)

Open Access Article

Construction and Applications of a Composite Model Based on Skew-Normal and Skew-t Distributions

by

Jingjie Yuan and Zuoquan Zhang

Econometrics 2025, 13(4), 48; https://doi.org/10.3390/econometrics13040048 - 2 Dec 2025

Abstract

Financial return distributions often exhibit central asymmetry and heavy-tailed extremes, challenging standard parametric models. We propose a novel composite distribution integrating a skew-normal center with skew-t tails, partitioning the support into three regions with smooth junctions. The skew-normal component captures moderate central asymmetry, while the skew-t tails model extreme events with power-law decay, with tail weights determined by continuity constraints and thresholds selected via Hill plots. Monte Carlo simulations show that the composite model achieves superior global fit, lower-tail KS statistics, and stable parameter estimation compared with skew-normal and skew-t benchmarks. We further conduct simulation-based and empirical backtesting of risk measures, including Value-at-Risk (VaR) and Expected Shortfall (ES), using generated datasets and 2083 TSLA daily log returns (2017–2025), demonstrating accurate tail risk capture and reliable risk forecasts. Empirical fitting also yields improved log-likelihood and diagnostic measures (P–P, Q–Q, and negative log P–P plots). Overall, the proposed composite distribution provides a flexible, theoretically grounded framework for modeling asymmetric and heavy-tailed financial returns, with practical advantages in risk assessment, extreme event analysis, and financial risk management.

Open Access Article

Robust Learning of Tail Dependence

by

Omid M. Ardakani

Econometrics 2025, 13(4), 47; https://doi.org/10.3390/econometrics13040047 - 20 Nov 2025

Cited by 1

Abstract

Accurate estimation of tail dependence is difficult due to model misspecification and data contamination. This paper introduces a class of minimum f-divergence estimators for the tail dependence coefficient that unifies robust estimation with extreme value theory. I establish strong consistency and derive the semiparametric efficiency bound for estimating extremal dependence, the extremal Cramér–Rao bound. I show that the estimator achieves this bound if and only if the second derivative of its generating function at unity equals one, formally characterizing the trade-off between robustness and asymptotic efficiency. An empirical application to systemic risk in the US banking sector shows that the robust Hellinger estimator provides stability during crises, while the efficient maximum likelihood estimator offers precision during normal periods.

Open Access Article

A Model of the Impact of Government Revenue and Quality of Governance on the Pupil/Teacher Ratio for Every Country in the World

by

Stephen G. Hall and Bernadette O’Hare

Econometrics 2025, 13(4), 46; https://doi.org/10.3390/econometrics13040046 - 19 Nov 2025

Abstract

This study explores the relationship between government revenue per capita, governance quality, and the supply of teachers—an indicator under Sustainable Development Goal 4 (Target 4.c). Using annual data from 217 countries spanning 1980 to 2022, we apply a non-linear panel model with a logistic function that incorporates country-specific governance measures. Our findings reveal that increased government revenue is positively associated with teacher supply, and that improvements in governance amplify this effect. The model provides predictive insights into how changes in revenue may influence progress toward education-related SDG targets at the country level.

Open Access Article

Dynamic Volatility Spillovers Among G20 Economies During the Global Crisis Periods—A TVP VAR Analysis

by

Himanshu Goel, Parminder Bajaj, Monika Agarwal, Abdallah AlKhawaja and Suzan Dsouza

Econometrics 2025, 13(4), 45; https://doi.org/10.3390/econometrics13040045 - 14 Nov 2025

Abstract

►▼

Show Figures

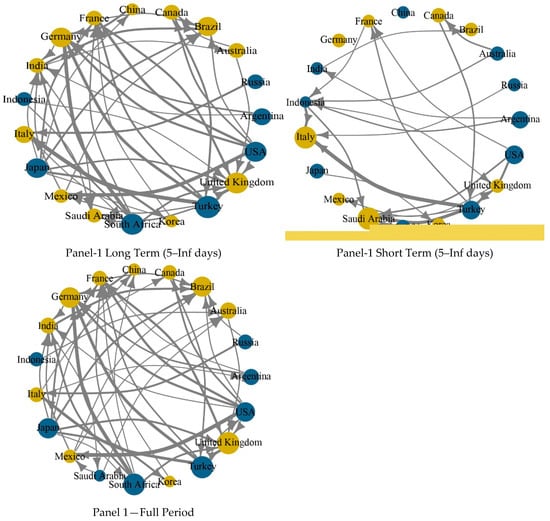

Previous research on financial contagion has mostly looked at volatility spillovers using static or fixed parameter models. These models don’t always take into account how inter-market links change and depend on frequency during big crises. This study fills in that gap by looking

[...] Read more.

Previous research on financial contagion has mostly examined volatility spillovers using static or fixed-parameter models. These models do not always account for how inter-market links change and depend on frequency during major crises. This study fills that gap by examining volatility spillovers among G20 equity markets during four major global events: the global financial crisis (GFC) of 2008, the European debt crisis (EDC), the COVID-19 pandemic, and the Russia-Ukraine war. The study uses a Time-Varying Parameter Vector Autoregression (TVP VAR) framework along with the Baruník-Křehlík frequency domain spillover measure to examine how connectedness changes over short-term (1–5 days) and long-term (5–Inf days) horizons. The results show that systemic connectedness changes considerably during crises. For example, the Total Connectedness Index (TCI) was 24–25 percent during the GFC and EDC, 34 percent during COVID-19, and 60 percent during the Russia-Ukraine war. During the global financial crisis and the Russia-Ukraine war, the US consistently emerged as the largest transmitter. During the European debt crisis, by contrast, emerging markets like Turkey, South Africa, and Japan acted as net transmitters. Across all crisis periods, short-term spillovers dominate, which underscores the importance of high-frequency volatility transmission. This study differs from others in combining time-varying and frequency domain perspectives, which gives a clearer picture of how crises change global financial linkages. The results matter for policymakers and investors because they highlight the need to coordinate risk management, improve market safety, and strengthen systemic stress testing in a globalized financial system.

Topics

Topic in

Econometrics, Economies, JRFM, Forecasting

Modern Challenges and Innovations in Financial Econometrics

Topic Editors: Agnieszka Szmelter-Jarosz, Hamed Nozari

Deadline: 31 August 2027

Special Issues

Special Issue in

Econometrics

Innovations in Bayesian Econometrics: Theory, Techniques, and Economic Analysis

Guest Editor: Deborah Gefang

Deadline: 31 May 2026

Special Issue in

Econometrics

Labor Market Dynamics and Wage Inequality: Econometric Models of Income Distribution

Guest Editor: Marc K. Chan

Deadline: 25 June 2026

Special Issue in

Econometrics

Advancements in Macroeconometric Modeling and Time Series Analysis

Guest Editor: Julien Chevallier

Deadline: 31 October 2026