Abstract

This paper introduces a new econometric framework for modeling fractional outcomes bounded between zero and one. We propose the Fractional Probit with Cross-Sectional Volatility (FPCV), which specifies the conditional mean through a probit link and allows the conditional variance to depend on observable heterogeneity. The model extends heteroskedastic probit methods to fractional responses and unifies them with existing approaches for proportions. Monte Carlo simulations demonstrate that the FPCV estimator achieves lower bias, more reliable inference, and superior predictive accuracy compared with standard alternatives. The framework is particularly suited to empirical settings where fractional outcomes display systematic variability across units, such as participation rates, market shares, health indices, financial ratios, and vote shares. By modeling both mean and variance, FPCV provides interpretable measures of volatility and offers a robust tool for empirical analysis and policy evaluation.

1. Introduction

Fractional dependent variables, defined on the unit interval, arise in a wide range of economic applications. Examples include participation rates in labor markets, market shares in industrial organization, bounded indices in health economics, financial ratios, and vote shares in political economy. Standard linear regression models are inappropriate in these contexts because they may predict values outside the admissible range and fail to account for heteroskedasticity.

To address these issues, Papke and Wooldridge (1996) introduced the fractional logit and probit models, providing quasi-maximum likelihood estimators that are robust and widely used for modeling proportions and rates. However, the conditional variance in these models is constrained to the Bernoulli form, which can be too restrictive when the dispersion of outcomes varies across observational units. Ferrari and Cribari-Neto (2004) proposed beta regression, which parameterizes the variance indirectly through a precision parameter, allowing greater flexibility in modeling bounded data. While this approach accommodates varying dispersion, its indirect variance specification limits interpretability, particularly when heterogeneity arises from observable characteristics.

Subsequent research has expanded these frameworks in several directions. () and () provided comprehensive discussions of estimation and testing strategies for fractional regressions. Smithson and Verkuilen (2006) extended beta regression to incorporate mean–variance relationships for bounded psychological and behavioral outcomes, while () explored nonlinear fractional models in applied finance. More recent studies have improved inference and robustness: Guedes et al. (2021) developed Bartlett-corrected tests for varying-precision beta regressions; Ribeiro and Ferrari (2023) proposed robust estimation via maximum Lq-likelihood; Rahmashari and Srisodaphol (2025) introduced outlier detection methods for improving robustness; and Mai (2025) addressed bounded responses in high dimensions using Bayesian shrinkage priors. In addition, Stasinopoulos et al. (2017) and Rigby et al. (2019) advanced generalized additive models for location, scale, and shape (GAMLSS), offering flexible semiparametric tools for modeling both the mean and variance of bounded outcomes. Despite these advances, most existing fractional response models either impose homoskedasticity or employ indirect variance parameterizations. Yet in many empirical contexts, the variance of fractional outcomes differs systematically across cross-sectional units, reflecting observable heterogeneity. In parallel, the heteroskedastic probit model introduced by () and () established a tractable variance-function approach for binary data, allowing the error variance to depend explicitly on covariates.

This paper develops a Fractional Probit with Cross-Sectional Volatility (FPCV) to fill this gap. The model specifies the conditional mean via a probit link and introduces a multiplicative variance function that scales dispersion by observable characteristics. This structure directly unifies the variance-function tradition of heteroskedastic probits with the bounded-outcome framework of fractional and beta regressions.

Our contributions are threefold. First, we extend the heteroskedastic probit beyond binary outcomes to fractional responses. Second, we propose a tractable and interpretable variance specification that links volatility directly to observable heterogeneity. Third, through Monte Carlo simulations, we show that the FPCV estimator reduces bias, improves coverage, and achieves superior predictive accuracy relative to standard alternatives. Collectively, these contributions fill a long-standing gap in the econometric analysis of bounded outcomes with heteroskedasticity.

2. Materials and Methods

2.1. Model Specification

Let $\{(y_i, x_i, z_i)\}_{i=1}^{n}$ denote a random sample, where $y_i \in [0,1]$ is a fractional response, $x_i$ is a $k \times 1$ vector of covariates entering the conditional mean, and $h_i = h(z_i)$ is a positive scalar function of an $m \times 1$ vector $z_i$ of covariates determining the conditional variance.

The conditional mean is specified by the probit link

$$E(y_i \mid x_i) = \Phi(x_i'\beta), \tag{1}$$

where $\Phi(\cdot)$ denotes the standard normal cumulative distribution function and $\beta$ is a finite-dimensional parameter vector.

The observed response is represented as

$$y_i = \Phi(x_i'\beta) + \varepsilon_i, \qquad \varepsilon_i \mid x_i, z_i \sim N(0, \sigma_i^2), \tag{2}$$

with a multiplicative heteroskedastic variance structure,

$$\sigma_i^2 = \operatorname{Var}(y_i \mid x_i, z_i) = \sigma^2 h_i, \tag{3}$$

where $\sigma$ is a positive scale parameter. In practice, $h_i$ can be parameterized as $h_i = \exp(z_i'\gamma)$, where $z_i$ is an $m \times 1$ vector of observable covariates.

Equation (1) ensures that the conditional mean lies strictly within the $(0,1)$ interval, while (3) allows the conditional variance to depend systematically on observable heterogeneity. Although the normality assumption in (2) implies unbounded support, it serves here as a working quasi-likelihood rather than a literal distribution for $y_i$. The proposed model therefore follows the quasi-maximum likelihood framework ( (), (), and ()), where consistency and asymptotic normality hold under the correct specification of the conditional mean even if the true distribution of $y_i$ is non-normal. This approach extends the heteroskedastic probit model of () to the case of fractional responses by incorporating cross-sectional heteroskedasticity directly into the variance function.

2.2. Likelihood Function

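Given (1)–(3), the conditional distribution of $y_i$ is

$$y_i \mid x_i, z_i \sim N\big(\Phi(x_i'\beta),\ \sigma^2 h_i\big). \tag{4}$$

Hence the conditional density is

$$f(y_i \mid x_i, z_i) = \frac{1}{\sigma\sqrt{h_i}}\,\phi\!\left(\frac{y_i - \Phi(x_i'\beta)}{\sigma\sqrt{h_i}}\right), \tag{5}$$

where $\phi(\cdot)$ denotes the standard normal p.d.f.

We define the standardized residual as

$$u_i = \frac{y_i - \Phi(x_i'\beta)}{\sigma\sqrt{h_i}}. \tag{6}$$

Using (6), the sample log-likelihood is

$$\ell(\beta, \sigma) = \sum_{i=1}^{n}\left[-\tfrac{1}{2}\log(2\pi) - \log\sigma - \tfrac{1}{2}\log h_i - \tfrac{1}{2}u_i^2\right]. \tag{7}$$

Equation (7) is maximized with respect to $(\beta, \sigma)$ to obtain the QMLE of the model.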
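As a minimal sketch, the Gaussian working log-likelihood described in this subsection could be evaluated as follows. The function name `fpcv_loglik`, the parameter packing `(beta, log_sigma, gamma)`, and the variance form $\sigma^2 \exp(z_i'\gamma)$ are assumptions made for illustration; working with $\log\sigma$ is only a convenience that keeps the scale positive. The synthetic data are made up and are not clipped to the unit interval.

```python
import numpy as np
from scipy.stats import norm


def fpcv_loglik(theta, y, X, Z):
    """Gaussian working log-likelihood with mean Phi(X @ beta) and
    variance sigma^2 * exp(Z @ gamma) (assumed parameterization)."""
    k = X.shape[1]
    beta, log_sigma, gamma = theta[:k], theta[k], theta[k + 1:]
    mu = norm.cdf(X @ beta)                  # probit-link conditional mean
    log_var = 2.0 * log_sigma + Z @ gamma    # log(sigma^2 * h_i)
    u = (y - mu) / np.exp(0.5 * log_var)     # standardized residual
    return np.sum(-0.5 * np.log(2.0 * np.pi) - 0.5 * log_var - 0.5 * u**2)


# Synthetic illustration (all parameter values are hypothetical).
rng = np.random.default_rng(0)
n = 200
X = np.column_stack([np.ones(n), rng.normal(size=n)])
Z = rng.normal(size=(n, 1))                  # no intercept in Z
beta_true = np.array([0.2, 0.5])
sigma_true = 0.05
gamma_true = np.array([0.4])

sd = sigma_true * np.exp(0.5 * (Z @ gamma_true))
y = norm.cdf(X @ beta_true) + sd * rng.normal(size=n)

theta_true = np.concatenate([beta_true, [np.log(sigma_true)], gamma_true])
theta_shifted = theta_true + np.array([1.0, 0.0, 0.0, 0.0])

ll_true = fpcv_loglik(theta_true, y, X, Z)
ll_shifted = fpcv_loglik(theta_shifted, y, X, Z)
```

In this sketch, moving the intercept far from its generating value lowers the log-likelihood, which is the behavior a maximizer relies on.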

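Score functions. Let $\mu_i = \Phi(x_i'\beta)$ and note that $\partial\mu_i/\partial\beta = \phi(x_i'\beta)\,x_i$. The derivatives of the standardized residual are

$$\frac{\partial u_i}{\partial\beta} = -\frac{\phi(x_i'\beta)}{\sigma\sqrt{h_i}}\,x_i, \qquad \frac{\partial u_i}{\partial\sigma} = -\frac{u_i}{\sigma}.$$

Differentiating (7), the score with respect to $\beta$ is

$$\frac{\partial\ell}{\partial\beta} = \sum_{i=1}^{n}\frac{u_i\,\phi(x_i'\beta)}{\sigma\sqrt{h_i}}\,x_i,$$

and the score with respect to $\sigma$ is

$$\frac{\partial\ell}{\partial\sigma} = \frac{1}{\sigma}\sum_{i=1}^{n}\left(u_i^2 - 1\right).$$

Variance parameterization. If the variance scaling factor is parameterized as $h_i = \exp(z_i'\gamma)$, with $z_i$ known covariates and $\gamma$ unknown, then

$$\frac{\partial\ell}{\partial\gamma} = \frac{1}{2}\sum_{i=1}^{n}\left(u_i^2 - 1\right)z_i.$$

This nests Harvey-type log-variance functions in the fractional setting.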
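One way to check analytic scores of this kind is to compare them with central finite differences of the log-likelihood. The sketch below assumes a Gaussian working likelihood with mean $\Phi(x_i'\beta)$ and variance $\sigma^2\exp(z_i'\gamma)$; the function names and the test point are hypothetical.

```python
import numpy as np
from scipy.stats import norm


def loglik(beta, sigma, gamma, y, X, Z):
    h = np.exp(Z @ gamma)
    u = (y - norm.cdf(X @ beta)) / (sigma * np.sqrt(h))
    return np.sum(-0.5 * np.log(2 * np.pi) - np.log(sigma)
                  - 0.5 * np.log(h) - 0.5 * u**2)


def scores(beta, sigma, gamma, y, X, Z):
    """Analytic scores for (beta, sigma, gamma) under the assumed form."""
    h = np.exp(Z @ gamma)
    xb = X @ beta
    u = (y - norm.cdf(xb)) / (sigma * np.sqrt(h))
    s_beta = X.T @ (u * norm.pdf(xb) / (sigma * np.sqrt(h)))
    s_sigma = np.sum(u**2 - 1.0) / sigma
    s_gamma = 0.5 * (Z.T @ (u**2 - 1.0))
    return np.concatenate([s_beta, [s_sigma], s_gamma])


def numerical_grad(f, theta, eps=1e-6):
    """Central-difference gradient of a scalar function."""
    g = np.zeros_like(theta)
    for j in range(theta.size):
        step = np.zeros_like(theta)
        step[j] = eps
        g[j] = (f(theta + step) - f(theta - step)) / (2 * eps)
    return g


rng = np.random.default_rng(1)
n, k = 50, 2
X = np.column_stack([np.ones(n), rng.normal(size=n)])
Z = rng.normal(size=(n, 1))
y = rng.uniform(0.05, 0.95, size=n)        # arbitrary fractional values
theta = np.array([0.1, -0.3, 0.2, 0.5])    # (beta_0, beta_1, sigma, gamma)

f = lambda t: loglik(t[:k], t[k], t[k + 1:], y, X, Z)
analytic = scores(theta[:k], theta[k], theta[k + 1:], y, X, Z)
numeric = numerical_grad(f, theta)
```

Agreement between `analytic` and `numeric` at an arbitrary point is a quick guard against sign or scaling errors before the scores are passed to an optimizer.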

To ensure model identifiability, we follow standard practice in heteroskedastic probit models () by excluding an intercept term from $z_i$. This normalization prevents confounding between the global scale parameter $\sigma$ and the intercept component of $h_i$, ensuring that $\sigma$ uniquely captures the overall scale while $\gamma$ governs relative cross-sectional heteroskedasticity.

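Information matrix. Under correct specification, $u_i$ is $N(0,1)$ conditional on $(x_i, z_i)$, so $E(u_i) = 0$, $E(u_i^2) = 1$, and $E(u_i^3) = 0$. It follows that the mean and variance parameters are orthogonal, i.e.,

$$E\left[\frac{\partial^2\ell}{\partial\beta\,\partial\sigma}\right] = 0.$$

The Fisher information blocks are

$$\mathcal{I}_{\beta\beta} = \sum_{i=1}^{n}\frac{\phi(x_i'\beta)^2}{\sigma^2 h_i}\,x_i x_i', \qquad \mathcal{I}_{\sigma\sigma} = \frac{2n}{\sigma^2},$$

and, if $h_i = \exp(z_i'\gamma)$,

$$\mathcal{I}_{\gamma\gamma} = \frac{1}{2}\sum_{i=1}^{n} z_i z_i'.$$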

2.3. Estimation and Asymptotic Properties

Estimation. The parameters are estimated by quasi-maximum likelihood, that is, by maximizing the log-likelihood function in (7). Optimization is implemented via the Broyden–Fletcher–Goldfarb–Shanno (BFGS) algorithm, a quasi-Newton method that iteratively updates an approximation to the Hessian matrix until convergence. This approach provides stable and efficient optimization performance for nonlinear likelihood models (). Standard errors are computed from the inverse observed information matrix or, more generally, from heteroskedasticity-robust sandwich estimators that remain valid under mild misspecification.
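The estimation step can be sketched with SciPy's BFGS routine and a numerically differentiated sandwich covariance. This is an illustration under stated assumptions and not the authors' implementation: the variance form $\sigma^2\exp(z_i'\gamma)$, the helper names (`obs_loglik`, `num_jac`, `fit_fpcv`), the starting-value heuristic, and the synthetic data are all hypothetical.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.stats import norm


def obs_loglik(theta, y, X, Z):
    """Per-observation Gaussian working log-likelihood (assumed form)."""
    k = X.shape[1]
    beta, log_sigma, gamma = theta[:k], theta[k], theta[k + 1:]
    log_var = 2.0 * log_sigma + Z @ gamma          # log(sigma^2 * h_i)
    u = (y - norm.cdf(X @ beta)) / np.exp(0.5 * log_var)
    return -0.5 * np.log(2.0 * np.pi) - 0.5 * log_var - 0.5 * u**2


def num_jac(fun, theta, eps=1e-4):
    """Central-difference Jacobian of a vector-valued function."""
    cols = []
    for j in range(theta.size):
        step = np.zeros_like(theta)
        step[j] = eps
        cols.append((fun(theta + step) - fun(theta - step)) / (2.0 * eps))
    return np.column_stack(cols)


def fit_fpcv(y, X, Z):
    m = Z.shape[1]
    # Starting values: OLS on the probit-transformed response (a heuristic).
    b0 = np.linalg.lstsq(X, norm.ppf(np.clip(y, 0.01, 0.99)), rcond=None)[0]
    s0 = np.std(y - norm.cdf(X @ b0))
    start = np.concatenate([b0, [np.log(s0)], np.zeros(m)])

    ll_i = lambda t: obs_loglik(t, y, X, Z)
    res = minimize(lambda t: -np.sum(ll_i(t)), start, method="BFGS")

    S = num_jac(ll_i, res.x)                                    # n x p scores
    H = num_jac(lambda t: num_jac(ll_i, t).sum(axis=0), res.x)  # p x p Hessian
    A_inv = np.linalg.inv(-H)
    cov = A_inv @ (S.T @ S) @ A_inv                             # sandwich form
    return res.x, np.sqrt(np.diag(cov))


# Synthetic data with hypothetical parameter values.
rng = np.random.default_rng(42)
n = 500
X = np.column_stack([np.ones(n), rng.normal(size=n)])
Z = rng.normal(size=(n, 1))
sd = 0.05 * np.exp(0.25 * Z[:, 0])                 # sigma = 0.05, gamma = 0.5
y = norm.cdf(X @ np.array([0.3, 0.6])) + sd * rng.normal(size=n)

theta_hat, se = fit_fpcv(y, X, Z)   # order: beta_0, beta_1, log(sigma), gamma
```

Here the reported standard error for the scale refers to $\log\sigma$; a delta-method step would be needed to express it on the $\sigma$ scale.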

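Asymptotic properties. Let $(\hat\beta, \hat\sigma)$ (or $(\hat\beta, \hat\sigma, \hat\gamma)$ when applicable) denote the maximizers of the log-likelihood function. Under standard regularity conditions (including the full column rank of $X$, strictly positive $h_i$, interior support of $\Phi(x_i'\beta)$, and i.i.d. sampling), the QMLE satisfies

$$\hat\beta \overset{a}{\sim} N\!\left(\beta_0,\ \mathcal{I}_{\beta\beta}^{-1}\right),$$

with a corresponding result for $\hat\gamma$ when the variance function is parameterized.

More generally, under the M-estimation framework (; ; ; ), the estimator is consistent and asymptotically normal:

$$\hat\theta \overset{a}{\sim} N\!\left(\theta_0,\ \mathcal{I}(\theta_0)^{-1}\right),$$

where $\theta = (\beta', \sigma, \gamma')'$ and $\mathcal{I}(\theta)$ denotes the Fisher information matrix. Because the mean and variance parameters are orthogonal, the information matrix is block-diagonal, simplifying inference and enabling efficient estimation.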

Finally, the model nests familiar specifications as special cases: the standard fractional probit when $h_i = 1$ and the heteroskedastic probit when the dependent variable is binary. This nesting highlights the generality of the FPCV framework as a unified structure for modeling both fractional and discrete bounded outcomes.

3. Simulation Study

3.1. Competing Approaches

To evaluate the finite-sample performance of the proposed FPCV model, we conducted Monte Carlo simulations and compared the model with two benchmark estimators: the standard fractional probit (FP) and beta regression (BR) models. All estimators were applied to data generated from the same underlying processes to ensure a fair and meaningful comparison. The purpose of this exercise was not to suggest that these competing models solve different problems but rather to examine how well each performs when confronted with identical fractional data, particularly when cross-sectional heteroskedasticity is present in the true data-generating process. In this way, the simulation assessed both robustness and efficiency under varying variance structures, illustrating how the FPCV model improves estimation and prediction when the standard assumptions of homoskedasticity are violated.

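- Fractional Probit (FP) (Papke & Wooldridge, 1996): $E(y_i \mid x_i) = \Phi(x_i'\beta)$, with variance restricted to the Bernoulli form: $\operatorname{Var}(y_i \mid x_i) = \Phi(x_i'\beta)\,[1 - \Phi(x_i'\beta)]$. Estimation proceeds by quasi-maximum likelihood using a Bernoulli log-likelihood for $y_i$.

- Beta Regression (BR) (Ferrari & Cribari-Neto, 2004): $y_i \mid x_i, z_i \sim \text{Beta}\big(\mu_i\phi_i,\ (1-\mu_i)\phi_i\big)$, with link functions for the mean and precision parameters, $g(\mu_i) = x_i'\beta$ and $\log\phi_i = z_i'\delta$. The conditional variance is given by $\operatorname{Var}(y_i \mid x_i, z_i) = \mu_i(1-\mu_i)/(1+\phi_i)$.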

The three models differ primarily in their variance structure: FP fixes variance to the Bernoulli form, BR uses an indirect precision parameterization, and FPCV specifies a direct multiplicative variance function.
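The contrast between the three variance structures can be made concrete with a small numerical sketch. The Bernoulli form and the beta-regression variance $\mu(1-\mu)/(1+\text{precision})$ are standard; the FPCV form $\sigma^2\exp(z'\gamma)$ and all numerical values below are assumptions chosen for illustration.

```python
import numpy as np


def var_fp(mu):
    """Bernoulli-form variance used by the fractional probit QMLE."""
    return mu * (1.0 - mu)


def var_br(mu, precision):
    """Beta-regression variance for mean mu and a given precision."""
    return mu * (1.0 - mu) / (1.0 + precision)


def var_fpcv(sigma, z, gamma):
    """Multiplicative variance sigma^2 * exp(z'gamma) (assumed form)."""
    return sigma**2 * np.exp(z @ gamma)


mu = np.array([0.2, 0.5, 0.8])
z = np.array([[-1.0], [0.0], [1.0]])   # one variance covariate per unit

v_fp = var_fp(mu)
v_br = var_br(mu, precision=9.0)
v_fpcv = var_fpcv(sigma=0.1, z=z, gamma=np.array([0.5]))
```

In the first two cases the variance is tied to the mean and is symmetric around $\mu = 0.5$, whereas in the third it moves with the variance covariate independently of the mean.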

3.2. Design of Experiments

We consider two classes of data-generating processes (DGPs):

Homoskedastic case.

Heteroskedastic case.

where varies systematically with covariates.

Covariates were generated as , and the parameters were set such that for all $i$. Following the evaluation framework suggested by Hubrich and West (2010), our simulation design compares predictive performance across multiple sample sizes. We considered sample sizes , and each experiment was repeated 1000 times.
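For orientation only, a homoskedastic and a heteroskedastic design of this general kind could be simulated as below. Nothing here reproduces the paper's actual design: the additive normal error, the standard normal covariates, the parameter values, and the function name `simulate` are all assumptions, and the draws are not restricted to the unit interval.

```python
import numpy as np
from scipy.stats import norm


def simulate(n, beta, sigma, gamma=None, seed=0):
    """Draw (y, X, z) with mean Phi(X @ beta) and error sd sigma * sqrt(h).
    h = 1 in the homoskedastic case, h = exp(gamma * z) otherwise."""
    rng = np.random.default_rng(seed)
    X = np.column_stack([np.ones(n), rng.normal(size=n)])
    z = rng.normal(size=n)
    h = np.ones(n) if gamma is None else np.exp(gamma * z)
    y = norm.cdf(X @ beta) + sigma * np.sqrt(h) * rng.normal(size=n)
    return y, X, z


beta = np.array([0.0, 0.5])                  # hypothetical mean parameters
y_hom, X_hom, z_hom = simulate(5000, beta, sigma=0.05)
y_het, X_het, z_het = simulate(5000, beta, sigma=0.05, gamma=1.0)

# Residual spread by sign of z: roughly equal under homoskedasticity,
# larger for z >= 0 when the variance increases with z.
resid_hom = y_hom - norm.cdf(X_hom @ beta)
resid_het = y_het - norm.cdf(X_het @ beta)
spread_low = resid_het[z_het < 0].std()
spread_high = resid_het[z_het >= 0].std()
```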

The performance of competing estimators is evaluated along several dimensions. First, we compute the finite-sample bias and root mean squared error (RMSE) of the parameter estimates across Monte Carlo replications, which provide direct measures of estimator accuracy and efficiency. Second, we examine the coverage probabilities of nominal 95% confidence intervals to assess the validity of asymptotic inference. Third, we compare overall model fit using standard information criteria, namely the Akaike Information Criterion (AIC) and the Bayesian Information Criterion (BIC). Finally, we evaluate out-of-sample predictive accuracy by reporting the mean squared prediction error (MSPE) on a holdout sample. These complementary metrics provide a comprehensive assessment of both estimation and prediction performance.
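The first two criteria can be computed from the replication-level estimates and standard errors as sketched below. The function name `mc_summary` and the toy inputs are hypothetical; the interval uses the usual normal critical value for a nominal 95% level.

```python
import numpy as np


def mc_summary(estimates, std_errors, true_value, z_crit=1.959964):
    """Bias, RMSE, and coverage of Wald intervals across replications."""
    estimates = np.asarray(estimates, dtype=float)
    std_errors = np.asarray(std_errors, dtype=float)
    bias = estimates.mean() - true_value
    rmse = np.sqrt(np.mean((estimates - true_value) ** 2))
    lower = estimates - z_crit * std_errors
    upper = estimates + z_crit * std_errors
    coverage = np.mean((lower <= true_value) & (true_value <= upper))
    return {"bias": bias, "rmse": rmse, "coverage": coverage}


# Four made-up replications of an estimator of a parameter equal to 1.0.
est = np.array([0.9, 1.0, 1.1, 1.4])
se = np.array([0.1, 0.1, 0.1, 0.1])
summary = mc_summary(est, se, true_value=1.0)
```

In this toy case three of the four intervals contain the true value, so the empirical coverage is 0.75.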

3.3. Results: Bias, Coverage, and MSPE

Table 1 reports the finite-sample bias and RMSE of the estimated parameters for sample sizes . The results highlight the superior performance of FPCV, particularly under moderate and large samples, where both bias and RMSE are substantially smaller than those of competing methods.

Table 1.

Bias and RMSE of parameter estimates across models and sample sizes.

Table 2 reports the empirical coverage probabilities of nominal 95% confidence intervals for the regression parameters across sample sizes. Ideally, coverage should be close to 95%. The results show that the fractional probit (FP) systematically undercovers, with coverage rates as low as 82% for when and remaining below the nominal level even at . Beta regression (BR) improves upon FP, delivering coverage rates between 87–93%, but still falls short of the nominal level, particularly for the variance parameter. By contrast, the proposed FPCV achieves coverage rates much closer to 95% across all parameters and sample sizes. For example, with , coverage is 96% for , 95% for , and 95% for , which is essentially on target. These findings indicate that accounting explicitly for cross-sectional heteroskedasticity not only reduces bias but also yields more reliable interval estimates.

Table 2.

Coverage probability of 95% confidence intervals.

Table 3 reports the mean squared prediction error (MSPE) across replications. FPCV consistently delivers the lowest MSPE for all sample sizes, with relative gains most pronounced in small samples. The fractional probit exhibits the weakest predictive performance, while beta regression improves upon FP but remains less accurate than FPCV. These results confirm the predictive advantage of explicitly modeling cross-sectional heteroskedasticity.
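A holdout MSPE for a probit-link mean can be computed as in the following sketch. The function name `mspe` and the two-observation holdout set are illustrative assumptions.

```python
import numpy as np
from scipy.stats import norm


def mspe(y_holdout, X_holdout, beta_hat):
    """Mean squared prediction error using the fitted mean Phi(X @ beta_hat)."""
    pred = norm.cdf(X_holdout @ beta_hat)
    return np.mean((y_holdout - pred) ** 2)


# With beta_hat = 0 every prediction equals Phi(0) = 0.5.
X_new = np.array([[1.0, 0.0], [1.0, 1.0]])
y_new = np.array([0.4, 0.7])
value = mspe(y_new, X_new, beta_hat=np.zeros(2))
```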

Table 3.

Predictive fit: Mean squared prediction error (MSPE) of .

Table 4 presents the log-likelihood, AIC, and BIC values for each estimator. Across all sample sizes, FPCV achieves the highest log-likelihood and the lowest AIC and BIC, indicating superior model fit. While BR improves upon FP, the gains are smaller than those achieved by FPCV. The results confirm that incorporating an explicit variance function improves both in-sample fit and penalized likelihood criteria, strengthening the case for the proposed estimator.
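Both criteria follow directly from the maximized log-likelihood, the number of estimated parameters, and the sample size. The sketch below uses the standard definitions; the numerical inputs are made up.

```python
import math


def information_criteria(loglik, n_params, n_obs):
    """Return (AIC, BIC) for a fitted model; lower values indicate better fit."""
    aic = -2.0 * loglik + 2.0 * n_params
    bic = -2.0 * loglik + n_params * math.log(n_obs)
    return aic, bic


aic, bic = information_criteria(loglik=120.0, n_params=4, n_obs=100)
```

Because BIC penalizes each parameter by $\log n$ and AIC by 2, BIC is the stricter criterion whenever $n > e^2 \approx 7.4$, which matters when comparing FPCV with its more parsimonious competitors.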

Table 4.

Model fit comparison using log-likelihood, AIC, and BIC.

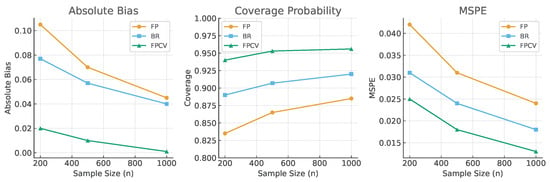

Figure 1 provides a graphical summary of the finite-sample properties of the competing estimators. Absolute bias (left panel) declines with sample size for all methods, but FPCV consistently achieves the smallest bias, approaching zero already at , while FP remains systematically biased. Coverage probabilities (center panel) highlight the undercoverage of FP and the partial improvement of BR, in contrast to FPCV which attains values essentially indistinguishable from the nominal 95% across all sample sizes. Predictive performance, measured by the MSPE (right panel), follows the same ranking: FP performs the worst, BR improves on FP, and FPCV dominates with the lowest prediction error in every case. Taken as a whole, the figure clearly demonstrates the efficiency and robustness gains of the proposed FPCV relative to standard alternatives.

Figure 1.

Monte Carlo results: average absolute bias (left), coverage probability (center), and MSPE (right) as functions of sample size.

4. Example of Real Data

To facilitate comparability with earlier studies, we employ the same 401(k) dataset analyzed by Papke and Wooldridge (1996). This benchmark dataset has been widely used to illustrate fractional response models, making it an appropriate platform for evaluating methodological advances. While Papke and Wooldridge (1996) focused on mean estimation using the fractional probit and logit under homoskedasticity, our FPCV model explicitly allows the conditional variance to depend on observable covariates. Applying the FPCV specification to the same data provides a direct conceptual comparison: our estimates yield similar marginal effects on the mean participation probability but reveal systematic cross-sectional variation in volatility linked to firm size and plan characteristics. This finding highlights the additional information captured by modeling heteroskedasticity directly, demonstrating the empirical advantage of the proposed approach over conventional homoskedastic fractional models.

Table 5 shows that, consistent with economic intuition, all three models (the fractional logit, beta regression, and the proposed FPCV) yield qualitatively similar covariate effects. Participation increases significantly with the employer match rate (mrate), the age of the plan (age), and when the 401(k) is the sole retirement option (sole), whereas firm size (totemp1) is associated with lower participation rates. These signs and magnitudes align closely with the benchmark findings of Papke and Wooldridge (1996), confirming the validity of the FPCV mean specification.

Table 5.

Comparison of fractional models: coefficient estimates with standard errors and model fit.

The models differ, however, in their treatment of cross-sectional variability. The beta regression estimates a relatively high precision parameter (), which affects the variance only indirectly through the precision term, limiting its interpretability. By contrast, the FPCV model introduces a directly interpretable volatility component () that explicitly links dispersion to observable heterogeneity. This specification produces noticeably tighter standard errors for the slope coefficients, improving estimation efficiency.

Model comparison metrics reinforce these gains. FPCV achieves a log-likelihood of , substantially higher than the values for the fractional logit () and beta regression (), and correspondingly lower AIC and BIC values. Collectively, these results confirm established evidence of 401(k) participation while demonstrating that explicitly modeling cross-sectional volatility yields a more efficient and better-fitting specification for fractional outcomes.

5. Conclusions

This paper has proposed a Fractional Probit with Cross-Sectional Volatility, a new framework that integrates the variance-function approach of heteroskedastic probit models with the bounded-outcome setting of fractional response models. By specifying the conditional mean through a probit link and allowing the variance to scale multiplicatively with observable heterogeneity, the model provides a tractable and interpretable characterization of cross-sectional volatility in proportions. Monte Carlo experiments demonstrate that the estimator achieves lower bias, more accurate inference, and superior predictive performance relative to existing alternatives. An empirical illustration using 401(k) participation data further confirms that FPCV delivers both improved model fit and economically meaningful measures of dispersion. Taken together, the results suggest that FPCV offers a statistically robust, policy-relevant, and broadly applicable tool for the analysis of fractional outcomes.

Future research could extend the framework to panel structures, Bayesian estimation, and zero-one inflated settings, further broadening its scope in applied econometric work. The FPCV model is also not limited to cross-sectional data: it can be adapted to long time series or panel data whenever the dependent variable is defined on a fractional scale between 0 and 1. Moreover, the framework could be combined with machine learning algorithms that emphasize predictive accuracy, such as hybrid ensemble or deep learning models, to capture more complex nonlinearities and improve forecasting performance (Alahmadi & Yilmaz, 2025; Kayit & Ismail, 2025).

Author Contributions

Conceptualization, S.S. and A.W.; methodology, S.S.; software, W.Y.; validation, S.S., W.Y. and A.W.; formal analysis, S.S.; investigation, W.Y.; data curation, W.Y. and J.S.; writing—original draft preparation, A.W. and S.S.; writing—review and editing, A.W. and W.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received funding from the Center of Excellence in Econometrics, Faculty of Economics, Chiang Mai University, and the Faculty of Commerce and Accountancy, Thammasat University. Grant Number: CEE2025.

Data Availability Statement

The simulation study uses generated data. The example data are available from http://fmwww.bc.edu/repec/bocode/k/k401.dta (accessed on 17 September 2025).

Acknowledgments

The authors are grateful to the Center of Excellence in Econometrics, Faculty of Economics, Chiang Mai University, and the Faculty of Commerce and Accountancy, Thammasat University, for financial support and encouragement.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Alahmadi, M. F., & Yilmaz, M. T. (2025). Prediction of IPO performance from prospectus using multinomial logistic regression, a machine learning model. Data Science in Finance and Economics, 5(1), 105–135.

- Amemiya, T. (1985). Advanced econometrics. Harvard University Press.

- Baum, C. F. (2006). Econometrics of fractional response variables. Stata Press.

- Davidson, R., & MacKinnon, J. G. (1984). Convenient specification tests for logit and probit models. Journal of Econometrics, 25(3), 241–262.

- Davidson, R., & MacKinnon, J. G. (2004). Econometric theory and methods. Oxford University Press.

- Ferrari, S., & Cribari-Neto, F. (2004). Beta regression for modelling rates and proportions. Journal of Applied Statistics, 31(7), 799–815.

- Gourieroux, C., Monfort, A., & Trognon, A. (1984). Pseudo maximum likelihood methods: Theory. Econometrica, 52(3), 681–700.

- Guedes, A. C., Cribari-Neto, F., & Espinheira, P. L. (2021). Bartlett-corrected tests for varying precision beta regressions with application to environmental biometrics. PLoS ONE, 16(6), e0253349.

- Harvey, A. C. (1976). Estimating regression models with multiplicative heteroscedasticity. Econometrica, 44(3), 461–465.

- Huber, P. J. (1967). The behavior of maximum likelihood estimates under nonstandard conditions. In Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability (Vol. 1, No. 1, pp. 221–233). Mathematics Statistics Library.

- Hubrich, K., & West, K. D. (2010). Forecast evaluation of small nested model sets. Journal of Applied Econometrics, 25(4), 574–594.

- Kayit, A. D., & Ismail, M. T. (2025). Leveraging hybrid ensemble models in stock market prediction: A data-driven approach. Data Science in Finance and Economics, 5(3), 355–386.

- Kieschnick, R., & McCullough, B. D. (2003). Regression analysis of variates observed on (0, 1): Percentages, proportions and fractions. Statistical Modelling, 3(3), 193–213.

- Mai, T. T. (2025). Handling bounded response in high dimensions: A Horseshoe prior Bayesian Beta regression approach. arXiv, arXiv:2505.22211.

- Papke, L. E., & Wooldridge, J. M. (1996). Econometric methods for fractional response variables with an application to 401(k) plan participation rates. Journal of Applied Econometrics, 11(6), 619–632.

- Rahmashari, O. D., & Srisodaphol, W. (2025). Advanced outlier detection methods for enhancing beta regression robustness. Decision Analytics Journal, 14, 100557.

- Ramalho, E. A., Ramalho, J. J., & Murteira, J. M. (2011). Alternative estimating and testing empirical strategies for fractional regression models. Journal of Economic Surveys, 25(1), 19–68.

- Ribeiro, T. K., & Ferrari, S. L. (2023). Robust estimation in beta regression via maximum Lq-likelihood. Statistical Papers, 64(1), 321–353.

- Rigby, R. A., Stasinopoulos, M. D., Heller, G. Z., & De Bastiani, F. (2019). Distributions for modeling location, scale, and shape: Using GAMLSS in R. Chapman and Hall/CRC.

- Smithson, M., & Verkuilen, J. (2006). A better lemon squeezer? Maximum-likelihood regression with beta-distributed dependent variables. Psychological Methods, 11(1), 54–71.

- Stasinopoulos, M. D., Rigby, R. A., Heller, G. Z., Voudouris, V., & De Bastiani, F. (2017). Flexible regression and smoothing: Using GAMLSS in R. CRC Press, Taylor & Francis Group.

- White, H. (1982). Maximum likelihood estimation of misspecified models. Econometrica, 50(1), 1–25.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).