1. Introduction

The probability of joint extreme events determines the stability of modern economic systems. Examples include the simultaneous collapse of major financial institutions, catastrophic weather disrupting global supply chains, or systemic failures in insurance networks (Embrechts et al., 2012). The tail dependence coefficient $\chi$ measures the limiting probability that one variable exceeds a high threshold given that another does. This coefficient serves as a fundamental metric for co-movement in the tails of distributions (Coles et al., 2001). Estimating $\chi$ typically relies on parametric copula models estimated via maximum likelihood (MLE) (Joe, 1997). MLE is asymptotically efficient when the model is correctly specified, but it is sensitive to model misspecification and data contamination (Huber, 1964). This sensitivity is especially problematic for extremal dependence. Tails of distributions are, by definition, data-scarce, so a slight misspecification can distort the estimated tail structure. The result is often an underestimation of systemic risk.

Robust statistics, pioneered by Huber (1964) and Hampel et al. (1986), provides a framework for developing estimators that balance efficiency with resistance to deviations from model assumptions. Within this tradition, the theory of minimum divergence estimation (Basu et al., 1998; Beran, 1977) and information-theoretic measures (Csiszár, 1967) generalize MLE. By minimizing the divergence between a nonparametric density estimate and a parametric model, these estimators deliver robust inference. However, the application and analysis of this robust paradigm in extreme value theory (EVT) remain underdeveloped. This paper addresses a specific gap: the absence of robust parametric estimators for tail dependence within EVT that rest on a sound theoretical foundation while providing practical robustness.

Multivariate EVT focuses on dependence in the tails, characterized by $\chi$ or the spectral measure (J. H. J. Einmahl et al., 2001). Standard estimation methods use parametric copula models fitted via MLE or semiparametric approaches that estimate the Pickands dependence function (Pickands, 1989). While Embrechts et al. (2012) and Cooley et al. (2019) have discussed model uncertainty in extremal settings, the literature offers few robust alternatives to MLE for parametric tail dependence estimation. Existing robust methods in extremal settings either address different problems or lack a theoretical foundation for tail dependence estimation. Copula M-estimators (Klar et al., 2000) provide robustness for central dependence but lack theoretical results for tail behavior. Robust Bayesian approaches to EVT (Cabras et al., 2015) focus primarily on univariate extremes or rely on computationally intensive Markov chain Monte Carlo methods, without establishing semiparametric efficiency bounds for dependence parameters. Neither approach provides a unified framework for balancing robustness and asymptotic efficiency in tail dependence estimation.

The sensitivity of classical methods such as MLE led to the development of robust statistics. The influence function, introduced by Hampel et al. (1986), provides a tool for assessing an estimator's local robustness. The minimum distance estimation framework, including the minimum Hellinger distance estimator of Beran (1977) and the broader class of density power divergence estimators of Basu et al. (1998), offers global robustness. These methods trade some efficiency for greater stability under contamination or misspecification. This paper continues this tradition and extends it to extremes. The class of f-divergences, which includes the Kullback–Leibler, Hellinger, and $\chi^2$ divergences, provides a family of measures that quantify the discrepancy between probability distributions (Csiszár, 1967). Each divergence represents a different trade-off between efficiency and robustness. The minimum f-divergence estimator framework unifies MLE and other minimum distance estimators into a single, flexible family. While these fields are mature in their own right, their integration in the extremal setting is novel.

This paper develops a class of minimum f-divergence estimators (MFDEs) for the tail dependence coefficient and establishes their strong consistency under standard regularity conditions. The primary contribution is the derivation of an extremal Cramér–Rao bound (ECRB), which establishes the semiparametric efficiency limit for estimating $\chi$ when the body of the distribution is treated as a nuisance parameter. A central result shows that an MFDE achieves the ECRB if and only if the second derivative of its generating function at unity equals one, $f''(1) = 1$, providing a sharp criterion to classify f-divergences by asymptotic efficiency. This theorem characterizes the trade-off between robustness and asymptotic efficiency: efficient estimators are non-robust, while robust estimators pay an efficiency price; the robust Hellinger estimator, for example, doubles the asymptotic variance relative to the ECRB.

Monte Carlo simulations and an application to systemic risk among major US banks demonstrate the implications of this trade-off. The empirical analysis shows substantial differences in risk capital calculations across estimators, with potential differences reaching economically significant levels of portfolio value at the institutional level. The ECRB derivation provides an extremal analogue to classical results in robust statistics (Basu et al., 1998; Beran, 1977), while addressing the semiparametric challenges specific to EVT. For policymakers and risk managers, these results replace ad hoc estimator selection with a principled rule: efficient MLE under trusted models and robust alternatives during structural breaks, with the cost of robustness explicitly quantified.

The remainder of this paper is organized as follows. Section 2 introduces the minimum f-divergence estimator and establishes its strong consistency. Section 3 defines the semiparametric model, derives the extremal Cramér–Rao bound, presents the necessary and sufficient condition for an MFDE to achieve this bound, and reports a Monte Carlo study. Section 4 provides an empirical application and shows how the choice of divergence affects risk measures and capital requirements. Section 5 concludes.

2. The Minimum f-Divergence Estimator

Let $(\Omega, \mathcal{F}, \mathbb{P})$ be a probability space supporting a sequence of independent and identically distributed random vectors $(X_i, Y_i)$, $i \ge 1$, with joint distribution function G and copula $C_{\theta_0}$, where $\theta_0 \in \Theta$ denotes the true parameter vector. To analyze extremal dependence, we transform the observations to standard Fréchet marginal distributions. In practice, the marginal distribution functions $F_X$ and $F_Y$ are unknown and are estimated using the empirical distribution functions $\hat F_X$ and $\hat F_Y$. The transformed observations are defined as

$$X_i^* = -\frac{1}{\log \hat F_X(X_i)}, \qquad Y_i^* = -\frac{1}{\log \hat F_Y(Y_i)}$$

for $i = 1, \ldots, n$. Henceforth, the sample $\{(X_i^*, Y_i^*)\}_{i=1}^n$ and the density estimator $\hat g_n$ are understood to be based on these transformed observations. We assume the error from marginal estimation is asymptotically negligible for the estimation of $\theta_0$; a standard condition ensuring this is provided in Assumption 1 (A8).
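The marginal transformation can be sketched in a few lines of Python with NumPy. This is a minimal sketch under one assumption not stated in the text: it uses rescaled ranks $R_i/(n+1)$ in place of the raw empirical distribution function, so that no pseudo-observation equals 1 (where $-1/\log 1$ would be infinite). The function name `to_standard_frechet` is hypothetical.

```python
import numpy as np


def to_standard_frechet(x):
    """Map a 1-D sample to approximately standard Frechet margins.

    Hypothetical helper. Assumption: rescaled ranks R_i / (n + 1) stand in
    for the empirical distribution function so every value lies in (0, 1).
    Ties are broken by order of appearance.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    ranks = np.argsort(np.argsort(x, kind="stable"), kind="stable") + 1  # ranks 1..n
    u = ranks / (n + 1.0)            # pseudo-observations in (0, 1)
    return -1.0 / np.log(u)          # standard Frechet: P(Z <= z) = exp(-1/z)


rng = np.random.default_rng(42)
returns = rng.standard_t(df=4, size=1001)    # heavy-tailed toy data, not bank returns
z = to_standard_frechet(returns)
print(np.median(z), 1.0 / np.log(2.0))       # sample median vs. Frechet median 1/log 2
```

Because the transform depends on the data only through ranks, it is invariant to any strictly increasing transformation of each margin, which is why the copula (and hence $\chi$) is unaffected by it.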

Definition 1. The tail dependence coefficient χ is defined as the limiting probability

$$\chi = \lim_{u \to 1^-} \mathbb{P}\{F_Y(Y) > u \mid F_X(X) > u\},$$

where $F_X$ and $F_Y$ are the marginal distribution functions of X and Y. For the Gumbel copula model with parameter θ, this takes the specific form

$$\chi(\theta) = 2 - 2^{1/\theta}.$$

This coefficient measures the strength of extremal dependence, with $\chi = 0$ indicating asymptotic independence and $\chi > 0$ indicating asymptotic dependence in the upper tail. This definition follows the standard treatment in extreme value theory and copula modeling (Coles et al., 2001; Joe, 1997).

Definition 2. Let $f: (0, \infty) \to \mathbb{R}$ be a strictly convex function, twice continuously differentiable in a neighborhood of 1, with $f(1) = 0$. For a probability density g and a model density $c_\theta$, both with respect to Lebesgue measure λ, the f-divergence between g and $c_\theta$ is defined as

$$D_f(g, c_\theta) = \int c_\theta\, f\!\left(\frac{g}{c_\theta}\right) d\lambda,$$

with $0 \cdot f(0/0) = 0$ and $0 \cdot f(a/0) = a \lim_{t \to \infty} f(t)/t$ for $a > 0$. These boundary conventions ensure the divergence is properly defined when the density ratio is zero or infinite, following the standard measure-theoretic treatment of f-divergences (Csiszár, 1967). The first convention handles the case where both densities vanish, while the second assigns a well-defined (possibly infinite) value when the model density vanishes but the true density does not.

Definition 3. Let $\hat g_n$ be a nonparametric density estimator based on the sample $(X_1^*, Y_1^*), \ldots, (X_n^*, Y_n^*)$. The minimum f-divergence estimator is defined as

$$\hat\theta_n = \arg\min_{\theta \in \Theta} D_f(\hat g_n, c_\theta).$$

The corresponding estimator of the tail dependence coefficient is given by $\hat\chi_n = \chi(\hat\theta_n)$. This framework extends the minimum distance estimation approach of Beran (1977) and the robust divergence estimation of Basu et al. (1998) to extremal dependence settings. Strong consistency of the minimum f-divergence estimator can be established under the following regularity conditions.
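Definitions 2 and 3 can be illustrated numerically. The sketch below is a deliberately simplified, one-dimensional stand-in for the bivariate copula setting, and every modeling choice in it is an assumption rather than the paper's method: the model is a hypothetical normal location family $N(\mu, 1)$ playing the role of $c_\theta$, $\hat g_n$ is a Gaussian kernel density estimate with a Silverman-type bandwidth, integrals are Riemann sums on a grid, and the minimization is a grid search. It forms $D_f(\hat g_n, c_\theta)$ for two generators (KL, $t\log t$, and Hellinger, $(\sqrt{t}-1)^2$) on a sample with 10% gross outliers.

```python
import numpy as np

# Hypothetical toy setup; generators f with f(1) = 0, D_f(g, c) = integral of c * f(g / c).
GENERATORS = {
    "kl": lambda t: t * np.log(t),                    # Kullback-Leibler (MLE-type)
    "hellinger": lambda t: (np.sqrt(t) - 1.0) ** 2,   # Hellinger (robust)
}


def f_divergence(g, c, f, dx):
    """Riemann-sum approximation of D_f(g, c) on a uniform grid; requires c > 0."""
    return np.sum(c * f(g / c)) * dx


def kde_on_grid(data, grid, bandwidth):
    """Gaussian kernel density estimate g_n evaluated at the grid points."""
    z = (grid[:, None] - data[None, :]) / bandwidth
    return np.exp(-0.5 * z**2).mean(axis=1) / (bandwidth * np.sqrt(2.0 * np.pi))


def normal_pdf(x, mu):
    """Density of the toy model N(mu, 1); stands in for c_theta."""
    return np.exp(-0.5 * (x - mu) ** 2) / np.sqrt(2.0 * np.pi)


def mfde_location(data, f, mu_grid, x_grid):
    """Toy MFDE: grid-search argmin over mu of D_f(g_n, N(mu, 1))."""
    dx = x_grid[1] - x_grid[0]
    q75, q25 = np.percentile(data, [75, 25])
    scale = min(data.std(ddof=1), (q75 - q25) / 1.349)   # robust spread
    h = 1.06 * scale * data.size ** (-0.2)               # Silverman-type bandwidth
    g_n = np.maximum(kde_on_grid(data, x_grid, h), 1e-300)
    losses = [f_divergence(g_n, normal_pdf(x_grid, mu), f, dx) for mu in mu_grid]
    return mu_grid[int(np.argmin(losses))]


rng = np.random.default_rng(0)
clean = rng.normal(0.0, 1.0, size=450)
outliers = rng.normal(10.0, 0.5, size=50)      # 10% gross contamination
data = np.concatenate([clean, outliers])

x_grid = np.linspace(-6.0, 16.0, 2201)
mu_grid = np.linspace(-2.0, 3.0, 501)
estimates = {name: mfde_location(data, f, mu_grid, x_grid) for name, f in GENERATORS.items()}
print(estimates)
```

In this toy setting the KL fit tracks the mean of the kernel estimate and is pulled towards the outliers (to roughly 1), while the Hellinger fit stays near the centre of the clean data, which is the qualitative behavior the text attributes to the two divergences.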

Assumption 1. The following conditions hold:

- (A1)

The function f is strictly convex, twice continuously differentiable in a neighborhood of 1, and satisfies $f(1) = 0$.

- (A2)

The parameter space Θ is a compact subset of $\mathbb{R}^p$.

- (A3)

The model family $\{c_\theta : \theta \in \Theta\}$ is identifiable: $c_{\theta_1} = c_{\theta_2}$ λ-a.e. implies $\theta_1 = \theta_2$.

- (A4)

The mapping $\theta \mapsto c_\theta(x)$ is continuous for λ-almost every $x$.

- (A5)

The nonparametric density estimator satisfies $\int |\hat g_n - g|\, d\lambda \to 0$ almost surely.

- (A6)

The model densities are uniformly bounded: there exist constants $0 < m \le M < \infty$ such that $m \le c_\theta(x) \le M$ for all $\theta \in \Theta$ and λ-almost every $x$ in the support of g.

- (A7)

The divergence functional $\theta \mapsto D_f(g, c_\theta)$ has a unique minimizer at $\theta_0$.

- (A8)

The marginal distribution functions are estimated such that and .

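Theorem 1. Under Assumption 1, the minimum f-divergence estimator is strongly consistent, $\hat\theta_n \to \theta_0$ almost surely.

Proof. Define the empirical and population objective functions

$$D_n(\theta) = \int c_\theta\, f\!\left(\frac{\hat g_n}{c_\theta}\right) d\lambda, \qquad D(\theta) = \int c_\theta\, f\!\left(\frac{g}{c_\theta}\right) d\lambda.$$

I first show that $\sup_{\theta \in \Theta} |D_n(\theta) - D(\theta)| \to 0$ almost surely. Consider the difference

$$|D_n(\theta) - D(\theta)| \le \int c_\theta \left| f\!\left(\frac{\hat g_n}{c_\theta}\right) - f\!\left(\frac{g}{c_\theta}\right) \right| d\lambda.$$

By the mean value theorem, for any $x$, there exists a value $\xi_{n,\theta}(x)$ between $\hat g_n(x)/c_\theta(x)$ and $g(x)/c_\theta(x)$ such that

$$f\!\left(\frac{\hat g_n(x)}{c_\theta(x)}\right) - f\!\left(\frac{g(x)}{c_\theta(x)}\right) = f'\big(\xi_{n,\theta}(x)\big)\, \frac{\hat g_n(x) - g(x)}{c_\theta(x)}.$$

By Assumption 1 (A6), the ratio $\xi_{n,\theta}(x)$ is contained within a compact interval K for all sufficiently large n, almost surely. Since $f'$ is continuous on a neighborhood of 1 and K is compact, $f'$ is bounded on K, i.e., $|f'(t)| \le M_K$ for all $t \in K$ and some constant $M_K < \infty$. Therefore,

$$\left| f\!\left(\frac{\hat g_n}{c_\theta}\right) - f\!\left(\frac{g}{c_\theta}\right) \right| \le M_K\, \frac{|\hat g_n - g|}{c_\theta}.$$

Multiplying both sides by $c_\theta$ and integrating yields

$$|D_n(\theta) - D(\theta)| \le M_K \int |\hat g_n - g|\, d\lambda.$$

This final bound is independent of θ and, by Assumption 1 (A5), converges almost surely to zero. Thus, $\sup_{\theta \in \Theta} |D_n(\theta) - D(\theta)| \to 0$ almost surely. The population objective function D is continuous by Assumption 1 (A4) and (A6), which allow the application of the dominated convergence theorem. It has a unique minimum at $\theta_0$ by Assumption 1 (A7). The parameter space Θ is compact by Assumption 1 (A2). The functions $D_n$ and D are continuous. By the argmin argument of van der Vaart (1998, Theorem 5.7), applied pathwise on the event of probability one on which the uniform convergence holds, the minimizer of $D_n$ converges almost surely to the minimizer of D, namely $\theta_0$. □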

Example 1. The minimum f-divergence framework encompasses several important estimators, each corresponding to a specific choice of the convex function f. For the Kullback–Leibler (KL) divergence, $f(t) = t \log t$, which yields $f''(1) = 1$:

$$D_{\mathrm{KL}}(\hat g_n, c_\theta) = \int \hat g_n \log\frac{\hat g_n}{c_\theta}\, d\lambda.$$

This defines the maximum likelihood estimator, which is efficient but non-robust. For the Hellinger distance, $f(t) = (\sqrt{t} - 1)^2$, which yields $f''(1) = 1/2$:

$$D_{H}(\hat g_n, c_\theta) = \int \left(\sqrt{\hat g_n} - \sqrt{c_\theta}\right)^2 d\lambda.$$

This defines a robust estimator whose asymptotic variance is twice the ECRB. For the $\chi^2$-divergence, $f(t) = (t - 1)^2$, which yields $f''(1) = 2$:

$$D_{\chi^2}(\hat g_n, c_\theta) = \int \frac{(\hat g_n - c_\theta)^2}{c_\theta}\, d\lambda.$$

Each choice of f embodies a different trade-off between efficiency and robustness, allowing practitioners to select the divergence measure most appropriate for their application. The value of $f''(1)$ determines where an estimator lies on this spectrum.

Table 1 compares the three f-divergences discussed in Example 1. The relative efficiency, derived from Theorem 4, quantifies the variance inflation factor relative to the efficient MLE. The Kullback–Leibler divergence ($f''(1) = 1$) achieves the ECRB and serves as the efficient benchmark. The Hellinger distance ($f''(1) = 1/2$) incurs a 100% variance penalty in exchange for robustness, while the $\chi^2$-divergence ($f''(1) = 2$) appears to offer super-efficiency but is highly non-robust in practice. This classification gives practitioners a clear framework for selecting divergence measures according to their preferred point on the robustness–efficiency frontier.
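The curvature $f''(1)$ that drives this classification can be checked numerically with a central second difference. This sketch assumes the standard generators $t\log t$ (KL), $(\sqrt{t}-1)^2$ (Hellinger) and $(t-1)^2$ (Pearson $\chi^2$); other normalizations found in the literature, such as a factor of 2 in front of the Hellinger generator, give different values.

```python
import numpy as np

# Generators in an assumed standard normalization (hypothetical dictionary name).
GENERATORS = {
    "KL": lambda t: t * np.log(t),
    "Hellinger": lambda t: (np.sqrt(t) - 1.0) ** 2,
    "chi-square": lambda t: (t - 1.0) ** 2,
}


def second_derivative_at_one(f, h=1e-4):
    """Central second difference approximating f''(1)."""
    return (f(1.0 + h) - 2.0 * f(1.0) + f(1.0 - h)) / h**2


curvatures = {name: second_derivative_at_one(f) for name, f in GENERATORS.items()}
for name, value in curvatures.items():
    print(f"{name:>10}: f''(1) = {value:.4f}")
```

The printed values should be close to 1, 0.5 and 2, respectively; the finite-difference error is of order $h^2$.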

In finance, non-robust estimation of $\chi$ can underestimate the probability of joint extreme events, such as the simultaneous crash of multiple assets or the failure of correlated financial institutions. Such misestimation contributed to the 2008 financial crisis, when the perceived safety of diversified portfolios disappeared as tail dependencies revealed themselves. A robust estimator, such as the minimum Hellinger distance estimator in the proposed class, is less affected by small departures from the assumed copula model in the main part of the distribution.

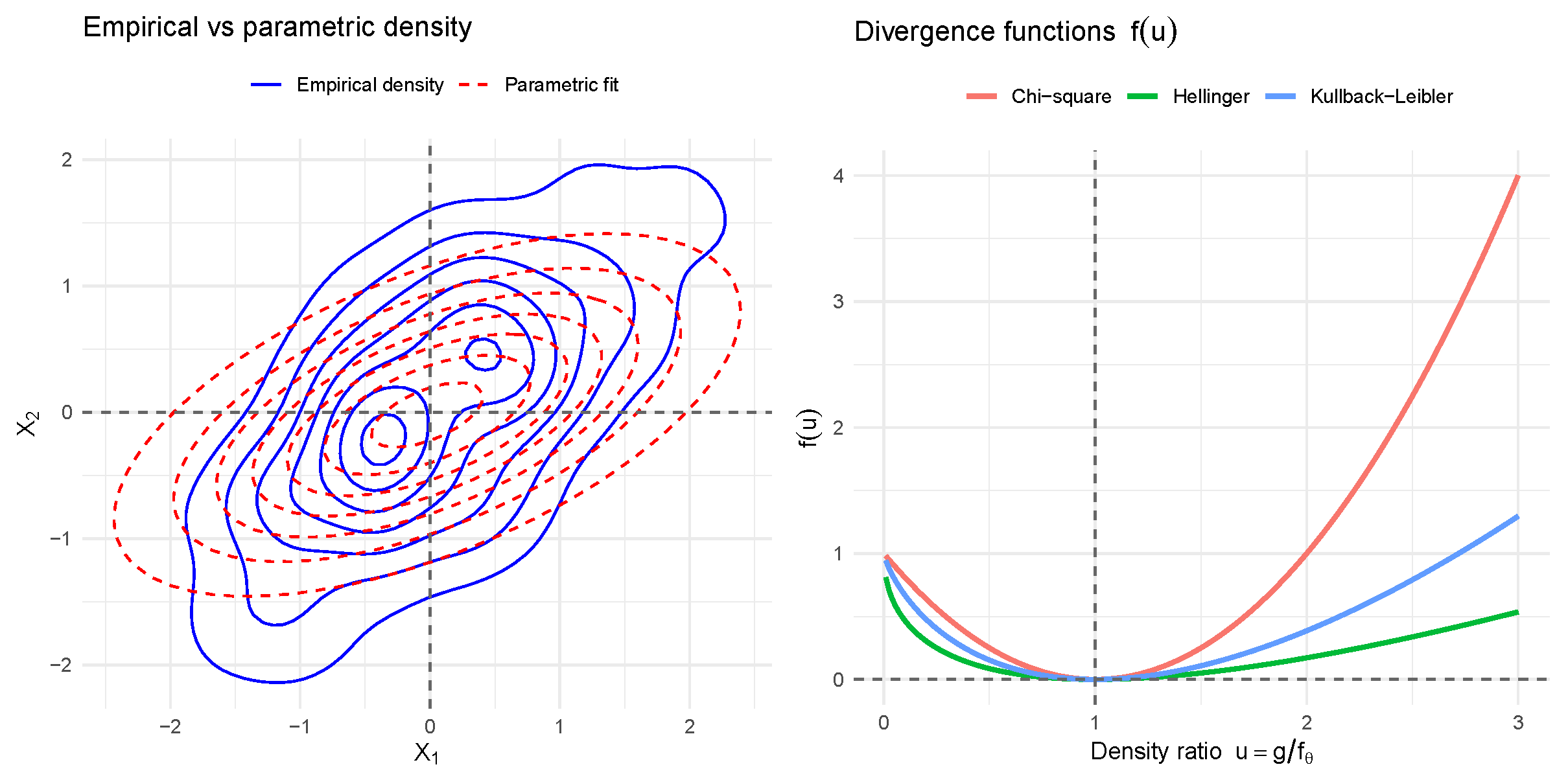

Figure 1 illustrates the principle of the minimum f-divergence estimator. The left panel contrasts the empirical joint distribution, estimated nonparametrically from simulated bivariate data, with the parametric fit; the discrepancies between the two motivate a divergence-based criterion for estimation. The right panel shows the shapes of three popular f-divergences. The KL divergence heavily weights underestimated probability mass, offering efficiency under correct specification but sensitivity to misspecification. The Hellinger distance penalizes deviations smoothly, providing robustness to contamination and outliers. The $\chi^2$ divergence penalizes overestimation, reflecting a different robustness–efficiency trade-off. Together, the panels illustrate how the choice of f guides the MFDE in balancing fidelity to the data with resilience to model misspecification.

Integrating robust minimum divergence estimation with the statistical modeling of extreme values addresses limitations of traditional MLE identified in prior research. When models are stable and confidence is high, an efficient MLE based on the KL divergence remains suitable. For novel financial instruments, emerging climate patterns, or situations with data contamination or model misspecification, robust alternatives such as the Hellinger distance help mitigate the risk of underestimation. These choices affect policy formulation and risk management; the COVID-19 market shock illustrates the consequences of underestimating systemic and extremal dependence. The proposed framework improves estimation robustness to lower the risk of such failures.

3. The Extremal Cramér–Rao Bound and Semiparametric Efficiency

A key question is: what is the best asymptotic performance attainable by any regular estimator of the tail dependence coefficient within a semiparametric model? This model accounts for our lack of knowledge about the exact distribution outside the tail region by treating it as an infinite-dimensional nuisance parameter. Semiparametric efficiency theory, as established by Bickel et al. (1993) and van der Vaart (1998), addresses this question by deriving a lower bound for the asymptotic variance. This section establishes such a bound and describes the conditions under which estimators in the minimum f-divergence class attain it.

3.1. Semiparametric Models and Regular Estimators

Let $\mathcal{G}$ be the set of all bivariate density functions with standard Fréchet margins. The semiparametric model $\mathcal{P}$ is defined as

$$\mathcal{P} = \left\{P_{\theta, g} : \theta \in \Theta,\ g \in \mathcal{G} \text{ with tail copula } R_\theta\right\},$$

where $\theta \in \Theta$ is the finite-dimensional parameter for the extremal dependence structure, and g is an infinite-dimensional nuisance parameter for the unknown joint density. The tail copula $R_\theta$ is defined through the limiting dependence structure of the joint tail. For a bivariate distribution with copula C, the tail copula is given by the limit

$$R(x, y) = \lim_{t \downarrow 0} \frac{1}{t}\left\{t x + t y - 1 + C(1 - t x, 1 - t y)\right\}, \qquad x, y \ge 0,$$

whenever this limit exists (de Haan & Ferreira, 2006). This captures the extremal dependence structure and is parametrized by θ. For the Gumbel copula used in our empirical application, the tail copula takes the specific form

$$R_\theta(x, y) = x + y - \left(x^\theta + y^\theta\right)^{1/\theta},$$

which characterizes the dependence in the joint upper tail (Gumbel, 1960); in particular, $\chi = R_\theta(1, 1) = 2 - 2^{1/\theta}$. The model is semiparametric because it makes assumptions only on the tail structure through the parametric tail copula $R_\theta$, while leaving the bulk of the distribution g unrestricted except for the domain of attraction condition.
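The limit defining the tail copula can be checked numerically for the Gumbel family. The sketch below evaluates the finite-t expression $t^{-1}\{tx + ty - 1 + C_\theta(1 - tx, 1 - ty)\}$ for a small t and compares it with the closed form $x + y - (x^\theta + y^\theta)^{1/\theta}$. The value θ = 2, the step t = 1e-6 and the function names are illustrative assumptions, not values from the paper.

```python
import numpy as np


def gumbel_copula(u, v, theta):
    """Gumbel copula C_theta(u, v) = exp(-[(-log u)^theta + (-log v)^theta]^(1/theta))."""
    x, y = -np.log(u), -np.log(v)
    return np.exp(-((x**theta + y**theta) ** (1.0 / theta)))


def gumbel_tail_copula(x, y, theta):
    """Closed-form Gumbel tail copula R_theta(x, y)."""
    return x + y - (x**theta + y**theta) ** (1.0 / theta)


def tail_copula_finite_t(x, y, theta, t):
    """t^{-1} P(U > 1 - t x, V > 1 - t y), written through the copula (hypothetical helper)."""
    return (t * x + t * y - 1.0 + gumbel_copula(1.0 - t * x, 1.0 - t * y, theta)) / t


theta = 2.0
for x, y in [(1.0, 1.0), (0.5, 2.0)]:
    approx = tail_copula_finite_t(x, y, theta, t=1e-6)
    exact = gumbel_tail_copula(x, y, theta)
    print(f"R({x}, {y}): finite t = {approx:.6f}, closed form = {exact:.6f}")

# At (1, 1) the tail copula equals the tail dependence coefficient 2 - 2^(1/theta).
print(gumbel_tail_copula(1.0, 1.0, theta), 2.0 - 2.0 ** (1.0 / theta))
```

The finite-t values agree with the closed form up to an error of order t, plus rounding error of order $10^{-16}/t$ from the cancellation in the numerator, which is why t should not be made much smaller.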

Let $\chi: \Theta \to [0, 1]$ be the functional mapping the copula parameter to the tail dependence coefficient. An estimator sequence $T_n = T_n\big((X_1^*, Y_1^*), \ldots, (X_n^*, Y_n^*)\big)$ is a sequence of measurable functions of the data. Following Hájek (1970) and Bickel et al. (1993), we require our estimators to be regular.

Definition 4. An estimator $T_n$ for χ is regular at $P_0 = P_{\theta_0, g}$ if, for every submodel $t \mapsto P_t \in \mathcal{P}$ that is differentiable in quadratic mean with score function h and satisfies $P_t = P_0$ at $t = 0$, the limiting distribution of $\sqrt{n}\,(T_n - \chi_{1/\sqrt{n}})$ under $P_{1/\sqrt{n}}$ matches that of $\sqrt{n}\,(T_n - \chi_0)$ under $P_0$, where $\chi_t$ denotes the tail dependence coefficient of $P_t$. This concept of regularity, following Le Cam (1972), ensures that the estimator's limiting distribution is invariant to local perturbations of the model, including perturbations of the nuisance parameter. This stability guarantees that confidence intervals maintain their coverage probability across different data-generating processes consistent with the tail model. Non-regular estimators can exhibit pathological behavior, such as a limiting distribution that depends on the specific local alternative, which makes them unreliable for inference. The efficient influence function, formalized in Definition 6, is the unique influence function in the closed linear span of the tangent set, and its variance gives the best achievable asymptotic variance.

Definition 5. Let $\mathcal{P}$ be a semiparametric model. The tangent set $\dot{\mathcal{P}}_P$ at $P \in \mathcal{P}$ is the set of all score functions h for which there exists a differentiable path $t \mapsto P_t \in \mathcal{P}$ with $P_0 = P$ and score h at $t = 0$. The tangent space is the closure of the linear span of $\dot{\mathcal{P}}_P$ in $L_2(P)$. This geometric approach to semiparametric efficiency follows Bickel et al. (1993) and Tsiatis (2006).

For $\mathcal{P}$, the tangent space can be decomposed. The score function for the parametric component is $\dot\ell_{\theta_0} = \partial_\theta \log c_\theta \big|_{\theta = \theta_0}$. The tangent space for the nonparametric nuisance component, $\mathcal{T}_g$, is the closed linear span of all scores for paths that vary g while keeping the tail parameter θ fixed. A key insight, following from the theory of local asymptotic normality and the structure of bivariate extreme value distributions (J. H. J. Einmahl et al., 2001), is that these spaces are orthogonal under the true distribution $P_{\theta_0, g}$.

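Lemma 1. Under $P_{\theta_0, g}$, the parametric score is orthogonal to the nuisance tangent space $\mathcal{T}_g$: $\mathbb{E}_{\theta_0, g}[\dot\ell_{\theta_0} h] = 0$ for all $h \in \mathcal{T}_g$.

Proof. Let $t \mapsto g_t$ be a path in $\mathcal{G}$ with $g_0 = g$, score h, and preserved tail copula $R_{\theta_0}$. The constraint that $R_{\theta_0}$ is preserved along the path implies the spectral measure H is constant along $t \mapsto g_t$. Consequently, the path provides no information to distinguish values of θ, so the Fisher information is block diagonal. Thus, the off-diagonal term vanishes,

$$\mathbb{E}_{\theta_0, g}[\dot\ell_{\theta_0} h] = 0.$$

□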

This orthogonality implies that the nuisance parameter g does not impede the estimation of θ asymptotically: the efficiency bound for θ in the semiparametric model equals the bound that would apply if g were known. This result has a practical implication: it justifies focusing on parametric efficiency for the tail dependence parameter, as the uncertainty from the bulk of the distribution is asymptotically irrelevant for tail estimation. The object that characterizes the best possible asymptotic variance can be defined as follows.

Definition 6. The efficient influence function (EIF) for the functional χ at $P_{\theta_0, g}$, denoted $\tilde\psi$, is the unique function in the tangent space satisfying

$$\frac{\partial}{\partial t} \chi(P_t)\Big|_{t=0} = \mathbb{E}_{\theta_0, g}[\tilde\psi\, h]$$

for every differentiable submodel $t \mapsto P_t$ with score function h at $t = 0$. The EIF provides the optimal estimating function and characterizes the semiparametric efficiency bound (Newey, 1994). For a differentiable parameter $\chi(\theta)$, the pathwise derivative is $\nabla\chi(\theta_0)^\top a$, where $a = \partial_t \theta_t \big|_{t=0}$ is the derivative of the parametric part of the path. The EIF provides the linear approximation to the estimator, and its variance gives the efficiency bound.

Assumption 2. The following conditions hold:

- (B1)

The parameter space Θ is an open subset of $\mathbb{R}^p$.

- (B2)

The functional $\theta \mapsto \chi(\theta)$ is continuously differentiable on Θ.

- (B3)

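The copula model $\{c_\theta : \theta \in \Theta\}$ is differentiable in quadratic mean at $\theta_0$ with non-singular Fisher information matrix $I(\theta_0) = \mathbb{E}_{\theta_0}[\dot\ell_{\theta_0} \dot\ell_{\theta_0}^\top]$.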

- (B4)

The set of influence functions for regular estimators of χ is non-empty.

The fundamental limit of estimation accuracy in the proposed semiparametric model is detailed in the following theorem.

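Theorem 2. Under Assumption 2 and given the orthogonality in Lemma 1, the semiparametric efficiency bound for estimating the tail dependence coefficient χ in the model $\mathcal{P}$ is given by

$$V_\chi = \nabla\chi(\theta_0)^\top I(\theta_0)^{-1} \nabla\chi(\theta_0).$$

Also, for any regular estimator sequence $T_n$ of χ, its asymptotic variance is bounded below by $V_\chi$,

$$\operatorname{AVar}\left(\sqrt{n}\,\{T_n - \chi(\theta_0)\}\right) \ge V_\chi.$$

The efficient influence function is given by

$$\tilde\psi = \nabla\chi(\theta_0)^\top I(\theta_0)^{-1} \dot\ell_{\theta_0},$$

and $\operatorname{Var}(\tilde\psi) = V_\chi$.

Proof. By Lemma 1, the tangent space is the orthogonal sum $\operatorname{span}\{\dot\ell_{\theta_0}\} \oplus \mathcal{T}_g$. Since χ depends only on θ, its pathwise derivative is zero for any nuisance score $h \in \mathcal{T}_g$. The efficient influence function must lie in the tangent space. Let $\tilde\psi = \nabla\chi(\theta_0)^\top I(\theta_0)^{-1} \dot\ell_{\theta_0}$. We can verify that $\tilde\psi$ satisfies the defining property of the EIF. For any submodel with score $h = a^\top \dot\ell_{\theta_0} + h_g$, where $h_g \in \mathcal{T}_g$, we must have

$$\frac{\partial}{\partial t}\chi(P_t)\Big|_{t=0} = \nabla\chi(\theta_0)^\top a = \mathbb{E}[\tilde\psi\, h].$$

By the orthogonality of $\dot\ell_{\theta_0}$ and $\mathcal{T}_g$, it suffices to verify this for $h = a^\top \dot\ell_{\theta_0}$ (a parametric score) and for any $h_g \in \mathcal{T}_g$. For $h = a^\top \dot\ell_{\theta_0}$,

$$\mathbb{E}[\tilde\psi\, a^\top \dot\ell_{\theta_0}] = \nabla\chi(\theta_0)^\top I(\theta_0)^{-1}\, \mathbb{E}[\dot\ell_{\theta_0} \dot\ell_{\theta_0}^\top]\, a = \nabla\chi(\theta_0)^\top a.$$

For $h_g \in \mathcal{T}_g$,

$$\mathbb{E}[\tilde\psi\, h_g] = \nabla\chi(\theta_0)^\top I(\theta_0)^{-1}\, \mathbb{E}[\dot\ell_{\theta_0} h_g] = 0.$$

The pathwise derivative is also zero for such pure nuisance paths. Thus, $\tilde\psi$ is the EIF. The semiparametric efficiency bound is its variance,

$$\mathbb{E}[\tilde\psi^2] = \nabla\chi(\theta_0)^\top I(\theta_0)^{-1}\, \mathbb{E}[\dot\ell_{\theta_0} \dot\ell_{\theta_0}^\top]\, I(\theta_0)^{-1} \nabla\chi(\theta_0) = V_\chi.$$

The lower bound $V_\chi$ for any regular estimator $T_n$ follows from the convolution theorem (van der Vaart, 1998, Theorem 25.20). □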

This theorem establishes that $V_\chi$ is the smallest possible asymptotic variance achievable by any regular estimator of the tail dependence coefficient within the semiparametric model $\mathcal{P}$. This bound is a direct extremal analogue of the classical Cramér–Rao bound, adapted to a semiparametric setting. It provides a benchmark against which all estimation procedures can be measured. Any estimator that achieves this bound is said to be semiparametrically efficient.
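For a vector-valued θ, the bound $V_\chi = \nabla\chi(\theta_0)^\top I(\theta_0)^{-1} \nabla\chi(\theta_0)$ is a quadratic form that is straightforward to evaluate once the gradient and the Fisher information are available. The following minimal NumPy sketch (function names hypothetical, inputs made up) computes it with a linear solve rather than an explicit matrix inverse and converts it into the implied lower bound $\sqrt{V_\chi/n}$ on the standard error of a regular estimator from n observations.

```python
import numpy as np


def efficiency_bound(grad_chi, fisher_info):
    """V_chi = grad^T I^{-1} grad, computed via a linear solve (no explicit inverse)."""
    grad_chi = np.atleast_1d(np.asarray(grad_chi, dtype=float))
    fisher_info = np.atleast_2d(np.asarray(fisher_info, dtype=float))
    return float(grad_chi @ np.linalg.solve(fisher_info, grad_chi))


def min_standard_error(grad_chi, fisher_info, n):
    """Smallest asymptotic standard error of a regular estimator of chi from n observations."""
    return np.sqrt(efficiency_bound(grad_chi, fisher_info) / n)


# Illustrative two-parameter example (made-up inputs, not from the paper).
grad = np.array([1.0, 2.0])
info = np.array([[2.0, 0.0], [0.0, 4.0]])
print(efficiency_bound(grad, info))          # 1/2 + 4/4 = 1.5
print(min_standard_error(grad, info, n=600))
```

Solving the linear system is numerically preferable to forming $I(\theta_0)^{-1}$ when the information matrix is poorly conditioned; for a scalar θ the function reduces to $\chi'(\theta_0)^2 / I(\theta_0)$.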

The framework focuses on copula models with asymptotic dependence in the upper tail ($\chi > 0$). For copulas with tail independence ($\chi = 0$), such as the Gaussian copula, the tail dependence coefficient χ fails to capture the full extremal dependence structure. The Student-t copula, by contrast, exhibits tail dependence for any finite degrees of freedom, with a strength that varies with the correlation parameter. In cases of tail independence, alternative measures such as the coefficient of tail dependence η (Ledford & Tawn, 1996) or the extremal coefficient may be more appropriate. Extending the minimum f-divergence framework to these alternative dependence measures would require modifying the derivation of the semiparametric efficiency bound to account for the different asymptotic behavior and regularity conditions under tail independence, which is a direction for future research.

Example 2. This example illustrates the semiparametric efficiency bound for the Gumbel copula. Consider the semiparametric model in which the tail copula is a Gumbel copula with parameter $\theta > 1$ and the body of the distribution is unspecified. The Gumbel copula has the form

$$C_\theta(u, v) = \exp\left\{-\left[(-\log u)^\theta + (-\log v)^\theta\right]^{1/\theta}\right\},$$

with tail dependence coefficient $\chi(\theta) = 2 - 2^{1/\theta}$. The Fisher information for θ in the parametric Gumbel model is given by

$$I(\theta) = \int_0^1 \!\! \int_0^1 \left(\frac{\partial}{\partial \theta} \log c_\theta(u, v)\right)^2 c_\theta(u, v)\, du\, dv,$$

where $c_\theta$ is the Gumbel copula density. The derivative of the tail dependence coefficient is

$$\chi'(\theta) = \frac{2^{1/\theta} \log 2}{\theta^2}.$$

By Theorem 2, the semiparametric efficiency bound for estimating χ is

$$V_\chi = \frac{\chi'(\theta)^2}{I(\theta)} = \frac{\left(2^{1/\theta} \log 2\right)^2}{\theta^4\, I(\theta)}.$$

This bound is achieved by the maximum likelihood estimator under full parametric specification and by minimum f-divergence estimators with $f''(1) = 1$ in the semiparametric setting. For any given θ, $\chi(\theta)$ and $\chi'(\theta)$ are available in closed form and $I(\theta)$ follows by numerical integration (Hofert, 2010); the asymptotic standard error of any regular estimator of χ based on n observations then cannot be lower than $\sqrt{V_\chi / n}$. This result has implications for risk management. If a regulator assumes a Gumbel tail model, they can use this bound to assess the precision of risk measures that depend on χ (Ardakani, 2023, 2024). The efficiency bound provides a benchmark for evaluating estimators and determining the sample size needed for risk assessment.
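The quantities in Example 2 can be approximated numerically. The sketch below replaces the numerical integration mentioned in the text with a Monte Carlo average, which is our substitution: it draws from the Gumbel copula with the Marshall–Olkin algorithm (a positive stable frailty generated by Kanter's representation), approximates the score $\partial_\theta \log c_\theta$ by a central finite difference of the closed-form log-density, and averages its square to estimate $I(\theta)$. The value θ = 2, the sample size, the seed and all function names are illustrative assumptions; the output is a Monte Carlo estimate, not a figure reported in the paper.

```python
import numpy as np


def gumbel_log_density(u, v, theta):
    """log c_theta(u, v) for the bivariate Gumbel copula (theta > 1)."""
    x, y = -np.log(u), -np.log(v)
    a = x**theta + y**theta
    w = a ** (1.0 / theta)
    return (-w + (theta - 1.0) * np.log(x * y) - np.log(u * v)
            + (1.0 / theta - 2.0) * np.log(a) + np.log(w + theta - 1.0))


def sample_gumbel(n, theta, rng):
    """Marshall-Olkin sampler: U_j = exp(-(E_j / S)^(1/theta)), S positive stable."""
    alpha = 1.0 / theta
    phi = rng.uniform(0.0, np.pi, size=n)
    e = rng.exponential(size=n)
    # Kanter's representation: E[exp(-s S)] = exp(-s**alpha)
    s = (np.sin(alpha * phi) / np.sin(phi) ** (1.0 / alpha)
         * (np.sin((1.0 - alpha) * phi) / e) ** ((1.0 - alpha) / alpha))
    u = np.exp(-(rng.exponential(size=(n, 2)) / s[:, None]) ** alpha)
    u = np.clip(u, 1e-12, 1.0 - 1e-12)            # guard the logs in the density
    return u[:, 0], u[:, 1]


def chi(theta):
    return 2.0 - 2.0 ** (1.0 / theta)


def chi_prime(theta):
    return 2.0 ** (1.0 / theta) * np.log(2.0) / theta**2


def fisher_information_mc(theta, n=200_000, seed=0, h=1e-5):
    """Monte Carlo estimate of I(theta) = E[(d/dtheta log c_theta)^2] and of E[score]."""
    rng = np.random.default_rng(seed)
    u, v = sample_gumbel(n, theta, rng)
    score = (gumbel_log_density(u, v, theta + h)
             - gumbel_log_density(u, v, theta - h)) / (2.0 * h)
    return np.mean(score**2), np.mean(score)


theta = 2.0
info, mean_score = fisher_information_mc(theta)
v_chi = chi_prime(theta) ** 2 / info
print(f"chi = {chi(theta):.4f}, chi' = {chi_prime(theta):.4f}")
print(f"I(theta) ~ {info:.4f} (mean score ~ {mean_score:.4f}, should be near 0)")
print(f"V_chi ~ {v_chi:.5f}")
```

The average score doubles as a sanity check: it has expectation zero only if the sampler and the log-density are mutually consistent, so a clearly non-zero value would signal an error in one of them.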

3.2. Efficiency of the Minimum f-Divergence Estimator

Having established the efficiency bound, I now analyze the asymptotic properties of the MFDE to identify the conditions under which this bound is achieved. The following theorem presents the asymptotic distribution of the MFDE.

Theorem 3. Under Assumptions 1 and 2, and assuming the function f is three times continuously differentiable in a neighborhood of 1, the minimum f-divergence estimator is asymptotically normal,whereConsequently, the asymptotic covariance matrix simplifies to Proof. The estimator

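$\hat\theta_n$ minimizes $D_n(\theta) = D_f(\hat g_n, c_\theta)$. Under the stated assumptions, $\hat\theta_n$ is consistent for $\theta_0$ by Theorem 1. Assuming sufficient smoothness, a Taylor expansion of the gradient around $\theta_0$ yields

$$0 = \nabla D_n(\hat\theta_n) = \nabla D_n(\theta_0) + \nabla^2 D_n(\tilde\theta_n)\,(\hat\theta_n - \theta_0)$$

for some $\tilde\theta_n$ on the line segment between $\hat\theta_n$ and $\theta_0$. Rearranging gives

$$\sqrt{n}\,(\hat\theta_n - \theta_0) = -\left[\nabla^2 D_n(\tilde\theta_n)\right]^{-1} \sqrt{n}\, \nabla D_n(\theta_0).$$

By the uniform law of large numbers and the consistency of $\hat\theta_n$, we have

$$\nabla^2 D_n(\tilde\theta_n) \xrightarrow{p} \nabla^2 D(\theta_0).$$

Following the theory of minimum f-divergence estimation (Basu et al., 1998), and leveraging the orthogonality from Lemma 1, it can be shown that $\sqrt{n}\, \nabla D_n(\theta_0)$ converges in distribution to a mean-zero normal vector. Applying Slutsky's theorem to the Taylor expansion yields the asymptotic normality result. The simplified covariance under correct specification is obtained by direct substitution. □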

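By the delta method, the asymptotic variance of $\sqrt{n}\,\{\chi(\hat\theta_n) - \chi(\theta_0)\}$ is $\nabla\chi(\theta_0)^\top \Sigma_f\, \nabla\chi(\theta_0)$, where $\Sigma_f$ denotes the asymptotic covariance matrix of $\sqrt{n}\,(\hat\theta_n - \theta_0)$ in Theorem 3. Comparing this with the semiparametric efficiency bound, $V_\chi = \nabla\chi(\theta_0)^\top I(\theta_0)^{-1} \nabla\chi(\theta_0)$, yields the condition for efficiency.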

Theorem 4. Under the conditions of Theorem 3, and assuming the model is correctly specified, the minimum f-divergence estimator is semiparametrically efficient, i.e., it attains the ECRB from Theorem 2, if and only if the divergence function f satisfies

$$f''(1) = 1.$$

Proof. Comparing the delta-method variance of $\chi(\hat\theta_n)$ with $V_\chi$, the two coincide if and only if $f''(1) = 1$. The strict convexity of f ensures $f''(1) > 0$, making this the unique condition. □

This theorem provides a criterion for efficiency within the class of f-divergences. It links a local property of the divergence function, its second derivative at unity, to the global asymptotic property of the resulting estimator.

Example 3. Theorem 4 characterizes the trade-off between robustness and asymptotic efficiency, providing an “exchange rate” quantified by $f''(1)$. This is exemplified by three canonical f-divergences. The KL divergence, defined by $f(t) = t \log t$, yields $f''(1) = 1$. Its corresponding MFDE is the MLE, which is efficient: it achieves the ECRB $V_\chi$ but is non-robust, as its influence function is unbounded. In contrast, the Hellinger distance, defined by $f(t) = (\sqrt{t} - 1)^2$, yields $f''(1) = 1/2$. Its asymptotic variance quadruples that of the MLE, in return for a bounded influence function and resilience to contamination. Finally, Pearson's $\chi^2$-divergence, with $f(t) = (t - 1)^2$ and $f''(1) = 2$, suggests a form of super-efficiency.

This example illustrates that, within this class, one cannot simultaneously achieve first-order robustness (a bounded influence function) and semiparametric efficiency. The practitioner must choose an f-divergence whose second derivative at unity reflects the preferred point on this efficiency–robustness frontier. For extremal estimation, where model misspecification is a concern, sacrificing some efficiency for robustness, as with the Hellinger distance, is often the prudent choice.

In practice, the choice of an f-divergence involves constructing an explicit efficiency–robustness frontier. This frontier can be parameterized by $f''(1)$, where each point represents a different trade-off between asymptotic variance and robustness. Beyond the three canonical divergences discussed above, several parametric families enable continuous navigation of this frontier. For example, the Cressie–Read power divergences (Cressie & Read, 1984), generated by

$$f_\lambda(t) = \frac{t^{\lambda + 1} - t - \lambda(t - 1)}{\lambda(\lambda + 1)}, \qquad \lambda \in \mathbb{R},$$

(with the usual continuous limits at $\lambda = 0$ and $\lambda = -1$), satisfy $f_\lambda''(1) = 1$ and therefore provide a family of efficient estimators with varying higher-order robustness properties. The density power divergences (Basu et al., 1998),

$$d_\alpha(g, c_\theta) = \int \left\{c_\theta^{1 + \alpha} - \left(1 + \frac{1}{\alpha}\right) g\, c_\theta^{\alpha} + \frac{1}{\alpha}\, g^{1 + \alpha}\right\} d\lambda, \qquad \alpha > 0,$$

offer a continuum from efficiency (in the limit $\alpha \to 0$) to robustness (for larger α). Also, the γ-divergences, for $\gamma > 0$, give explicit control over the robustness–efficiency trade-off. Selection can be guided by (i) model confidence: use the MLE (i.e., an f with $f''(1) = 1$) for well-specified models with trusted data; (ii) contamination concerns: use the Hellinger distance ($f''(1) = 1/2$) for moderate robustness, or more conservative choices under severe contamination; and (iii) data-driven choice: use cross-validation or the bootstrap to estimate the tuning parameter that minimizes mean squared error under anticipated contamination. For financial applications, the Hellinger distance is a practical default robust choice, providing substantial robustness at a manageable 100% efficiency cost (i.e., the asymptotic variance doubles).
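The statement that the Cressie–Read family sits at the efficient end of the frontier can be checked numerically, assuming the generators are standardized as $f_\lambda(t) = \{t^{\lambda+1} - t - \lambda(t-1)\}/\{\lambda(\lambda+1)\}$, with limits $t\log t - t + 1$ at λ = 0 and $-\log t + t - 1$ at λ = −1. This standardization is a common one but is our assumption, as are the function names; the sketch confirms $f_\lambda(1) = 0$ and $f_\lambda''(1) = 1$ for several values of λ.

```python
import numpy as np


def cressie_read(t, lam):
    """Standardized Cressie-Read generator f_lambda(t) (assumed normalization)."""
    if np.isclose(lam, 0.0):
        return t * np.log(t) - t + 1.0          # KL-type limit
    if np.isclose(lam, -1.0):
        return -np.log(t) + t - 1.0             # reverse-KL-type limit
    return (t ** (lam + 1.0) - t - lam * (t - 1.0)) / (lam * (lam + 1.0))


def second_derivative_at_one(f, h=1e-4):
    """Central second difference approximating f''(1)."""
    return (f(1.0 + h) - 2.0 * f(1.0) + f(1.0 - h)) / h**2


for lam in [-2.0, -1.0, -0.5, 0.0, 2.0 / 3.0, 1.0]:
    f = lambda t, lam=lam: cressie_read(t, lam)
    print(f"lambda = {lam:+.3f}: f(1) = {f(1.0):+.1e}, f''(1) = {second_derivative_at_one(f):.4f}")
```

λ = 2/3 is the value Cressie and Read recommended for goodness-of-fit testing; under this normalization it has the same curvature at 1 as the KL generator, so the members of the family differ only in their higher-order behavior.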

3.3. Simulation Study

This section examines the finite-sample performance of minimum f-divergence estimators for χ under correct model specification and under contamination. The simulations confirm the efficiency bound and illustrate the trade-off between asymptotic efficiency and robustness. Consider the Gumbel copula model in Example 2 with true parameter $\theta_0$, corresponding to a tail dependence coefficient $\chi(\theta_0) = 2 - 2^{1/\theta_0}$. The theoretical semiparametric efficiency bound for χ at this parameter is $V_\chi$. I compare three minimum f-divergence estimators: (1) MLE ($f(t) = t \log t$), the efficient estimator with $f''(1) = 1$; (2) Hellinger ($f(t) = (\sqrt{t} - 1)^2$), a robust estimator with $f''(1) = 1/2$ and theoretical relative efficiency 4; and (3) $\chi^2$ ($f(t) = (t - 1)^2$), a super-efficient but non-robust estimator with $f''(1) = 2$ and theoretical relative efficiency 1/4.

Two data-generating scenarios are considered. In the correctly specified scenario, the observations $(X_i, Y_i)$ are i.i.d. from a Gumbel copula with parameter $\theta_0$ and standard Fréchet margins. The contaminated scenario introduces outliers: with probability $1 - \varepsilon$, $(X_i, Y_i)$ is drawn from the correctly specified distribution; with probability ε, it is an outlier drawn from a Gumbel copula with θ = 1 (independence) and modified magnitudes, simulating measurement error in the tail that disrupts the dependence structure. For each sample size n, the Monte Carlo replications proceed as follows. In each replication, I generate a sample of size n, transform the margins to standard Fréchet using the rank transformation, compute the three MFDEs ($\hat\theta_{\mathrm{KL}}$, $\hat\theta_{H}$, $\hat\theta_{\chi^2}$), and record the estimate $\hat\chi = \chi(\hat\theta)$ together with its squared error. The empirical mean squared errors (MSEs) are reported for each estimator and scenario. For correct specification, I also report the empirical variance to compare directly against the theoretical efficiency bound $V_\chi / n$.

Table 2 presents the simulation results. Under correct specification, the performance aligns with theoretical predictions. The MLE's empirical variance, scaled by n, converges to the efficiency bound $V_\chi$ as n increases. The Hellinger estimator's variance is consistently close to four times that of the MLE, confirming its theoretical relative efficiency of 4. The $\chi^2$ estimator exhibits super-efficiency, with an empirical variance approximately one-quarter of the MLE's variance, matching its theoretical relative efficiency of 1/4. Under contamination, the trade-off between efficiency and robustness is clear. The MSEs of the non-robust MLE and $\chi^2$ estimators increase sharply because of outlier-induced bias. In contrast, the Hellinger estimator maintains a stable and much lower MSE. Its steady performance despite contamination highlights its value in situations where model assumptions fail.

Deriving the ECRB and characterizing efficient f-divergences places robust tail estimation on an optimality foundation, similar to how the Cramér–Rao bound underpins classical parametric estimation. For financial regulators and risk managers, these results provide a quantitative framework. If there is high confidence in the chosen parametric tail model, such as in a well-established market, the efficient MLE is optimal. In novel markets, during structural breaks, or when data quality is suspect, the proposed theory shows that robustness is crucial. It also quantifies the cost of this insurance: for the robust Hellinger estimator, the cost is a significant increase in asymptotic variance compared with the MLE. This enables the construction of more conservative confidence intervals for systemic risk measures such as χ, informing stress testing and capital requirement calculations.

4. Systemic Risk in the US Banking Sector

This section applies the minimum f-divergence estimation framework to quantify systemic risk in the US banking sector. For benchmarking, I compare the proposed MFDE against three alternatives: (1) Student-t copula MLE, which provides natural robustness through heavier tails; (2) trimmed likelihood estimation (TLE) with a 5% trimming fraction (Huber, 1964); and (3) rank-based estimators using Kendall's tau (Genest et al., 1995). I estimate the tail dependence coefficient between major US banks using daily equity returns covering the global financial crisis, the subsequent recovery, and the COVID-19 market shock. Daily closing prices are analyzed for six major US global banks: JPMorgan Chase (JPM), Bank of America (BAC), Citigroup (C), Wells Fargo (WFC), Goldman Sachs (GS), and Morgan Stanley (MS). The sample spans 3 January 2005 to 31 December 2021 (4287 trading days) and covers multiple economic cycles. Daily price data are obtained from the Bloomberg terminal. Let $P_{i,t}$ denote the closing price of bank i on day t. Daily log returns are computed as $r_{i,t} = \log(P_{i,t} / P_{i,t-1})$, and pairwise tail dependence coefficients $\chi_{ij}$ between banks i and j are estimated.

Table 3 presents summary statistics for the return series and their pairwise extremal dependence. The empirical tail dependence coefficients $\hat{\lambda}_{ij}$ (in %) are estimated using the nonparametric estimator of Embrechts et al. (2002). The series show features typical of daily equity returns over a sample containing several crises: near-zero means, high volatility, negative skewness, and excess kurtosis. The nonparametric estimates reveal considerable systemic risk, with coefficients ranging from 55.2% to 74.3%. Dependence is highest between Bank of America and Citigroup and between Goldman Sachs and Morgan Stanley, reflecting the similar business models and risk exposures within each pair.
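As an illustration of how a nonparametric tail dependence estimate can be computed, the sketch below uses one common rank-based form, $\hat{\lambda}_U(k) = k^{-1}\sum_t \mathbf{1}\{R_{1t} > n-k,\ R_{2t} > n-k\}$, where $R$ denotes ranks and $k$ the number of upper order statistics. This form is an assumption for illustration: the exact variant in Embrechts et al. (2002) may differ, and the function name is hypothetical. To study joint losses, negated returns can be passed in.

```python
import numpy as np

def empirical_upper_tail_dependence(x, y, k):
    """Rank-based estimate of the upper tail dependence coefficient.

    Counts how often both series are simultaneously among their k largest
    values and divides by k (hypothetical helper, one common variant).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    if y.size != n or not 0 < k < n:
        raise ValueError("need equal-length series and 0 < k < n")
    # Ranks 1..n; a stable sort breaks ties by order of appearance
    rx = np.argsort(np.argsort(x, kind="stable"), kind="stable") + 1
    ry = np.argsort(np.argsort(y, kind="stable"), kind="stable") + 1
    joint = np.sum((rx > n - k) & (ry > n - k))  # joint exceedances of the top-k threshold
    return joint / k
```

The choice of $k$ plays the role of the threshold; for example, $k = \lceil 0.05\,n \rceil$ corresponds to the 95% empirical quantile.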

The Conditional Value-at-Risk (CVaR) at confidence level $\alpha$ used in this analysis is defined as

$$\mathrm{CVaR}_{\alpha}(L) = \mathbb{E}\left[L \mid L \geq \mathrm{VaR}_{\alpha}(L)\right],$$

where $L$ represents portfolio losses and $\mathrm{VaR}_{\alpha}(L)$ is the Value-at-Risk at level $\alpha$. For the equally weighted bivariate portfolio of banks $i$ and $j$, CVaR is computed from the joint distribution characterized by the estimated tail dependence coefficient $\hat{\lambda}_{ij}$, following the spectral representation of multivariate extremes (Embrechts et al., 2013).
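The sketch below illustrates how an estimated $\hat{\lambda}$ maps into a portfolio CVaR. It replaces the spectral-representation calculation in the text with a plain Monte Carlo stand-in under the Gumbel copula: $\theta = \ln 2 / \ln(2 - \lambda_U)$ inverts $\lambda_U = 2 - 2^{1/\theta}$, pairs are drawn with the Marshall–Olkin (frailty) algorithm using a positive stable frailty, and unit-exponential loss margins are assumed purely for illustration. All function names and the margin choice are hypothetical.

```python
import numpy as np

def gumbel_theta_from_lambda(lam):
    """Invert lambda_U = 2 - 2**(1/theta) for the Gumbel parameter theta >= 1."""
    return np.log(2.0) / np.log(2.0 - lam)

def sample_gumbel_copula(theta, n, rng):
    """Draw n pairs from a bivariate Gumbel copula (Marshall-Olkin algorithm)."""
    a = 1.0 / theta                         # stability index of the frailty, in (0, 1]
    if a == 1.0:                            # theta = 1 is the independence copula
        return rng.uniform(size=(n, 2))
    u = rng.uniform(0.0, np.pi, size=n)
    w = rng.exponential(size=n)
    # Kanter's representation: positive stable V with E[exp(-t V)] = exp(-t**a)
    v = (np.sin(a * u) / np.sin(u) ** (1.0 / a)) * (np.sin((1.0 - a) * u) / w) ** ((1.0 - a) / a)
    e = rng.exponential(size=(n, 2))
    # Apply the Gumbel generator psi(t) = exp(-t**(1/theta)) to E / V
    return np.exp(-(e / v[:, None]) ** a)

def var_cvar(losses, alpha):
    """Empirical VaR (alpha-quantile) and CVaR (mean loss at or beyond VaR)."""
    var = np.quantile(losses, alpha)
    return var, losses[losses >= var].mean()

def portfolio_cvar(lam, alpha=0.99, n=200_000, seed=0):
    """Equal-weight two-asset CVaR under a Gumbel copula with tail dependence lam."""
    rng = np.random.default_rng(seed)
    u = sample_gumbel_copula(gumbel_theta_from_lambda(lam), n, rng)
    losses = -np.log1p(-u)                  # ASSUMPTION: unit-exponential loss margins
    portfolio = 0.5 * losses[:, 0] + 0.5 * losses[:, 1]
    return var_cvar(portfolio, alpha)
```

Under these assumptions, a higher $\hat{\lambda}$ yields a larger CVaR for the same margins, which is the mechanism behind estimator-specific differences in capital requirements.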

Our empirical application focuses on bivariate tail dependence to maintain theoretical tractability and clear interpretation of the robustness-efficiency trade-off. For portfolios with more than two assets, the extremal dependence structure becomes substantially more complex, characterized by the spectral measure or stable tail dependence function (J. H. Einmahl et al., 2016). While the bivariate results provide insights into pairwise systemic risk, extending the MFDE framework to multivariate settings would require addressing the curse of dimensionality and developing estimators for higher-dimensional extremal dependence structures. This is an important direction for future research in robust multivariate extreme value theory.

The application accounts for the non-iid nature of returns through a two-stage estimation procedure. First, each bank's return series is filtered with an AR(1)-GARCH(1,1) model to remove serial correlation and conditional heteroskedasticity:

$$r_{i,t} = \mu_i + \phi_i r_{i,t-1} + \varepsilon_{i,t}, \qquad \varepsilon_{i,t} = \sigma_{i,t}\,\eta_{i,t}, \qquad \sigma_{i,t}^2 = \omega_i + a_i \varepsilon_{i,t-1}^2 + b_i \sigma_{i,t-1}^2,$$

where $\eta_{i,t}$ are the standardized residuals. Second, the empirical distribution functions $\hat{F}_i$ are estimated from the filtered residuals $\hat{\eta}_{i,t} = \hat{\varepsilon}_{i,t}/\hat{\sigma}_{i,t}$, which are approximately iid and thus appropriate for extremal dependence estimation. This filtering approach is standard in multivariate extreme value applications with financial data (Embrechts et al., 2012) and ensures that the tail dependence estimates capture extremal co-movement rather than spurious dependence induced by volatility clustering or autocorrelation.
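A minimal sketch of the filtering step, assuming the AR(1)-GARCH(1,1) parameters have already been estimated (for example by Gaussian quasi-maximum likelihood, which is not shown). The parameter names follow the usual notation ($\mu, \phi, \omega, a, b$) and, like the function name, are assumptions; the variance recursion is started at the unconditional variance, which requires $a + b < 1$.

```python
import numpy as np

def ar1_garch11_filter(r, mu, phi, omega, a, b):
    """Return standardized residuals eta_t = eps_t / sigma_t for fixed parameters.

    Model: r_t = mu + phi * r_{t-1} + eps_t,
           sigma_t^2 = omega + a * eps_{t-1}^2 + b * sigma_{t-1}^2.
    The first observation is lost to the AR(1) lag.
    """
    if a + b >= 1.0:
        raise ValueError("stationarity requires a + b < 1")
    r = np.asarray(r, dtype=float)
    eps = r[1:] - mu - phi * r[:-1]          # AR(1) innovations
    sigma2 = np.empty_like(eps)
    sigma2[0] = omega / (1.0 - a - b)        # start at the unconditional variance
    for t in range(1, eps.size):
        sigma2[t] = omega + a * eps[t - 1] ** 2 + b * sigma2[t - 1]
    return eps / np.sqrt(sigma2)
```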

The joint distribution of bank returns is modeled with the Gumbel copula, which captures upper tail dependence well. Margins are transformed to the standard Fréchet scale with the semiparametric rank transformation $Z_{i,t} = -1/\log \hat{F}_i(\hat{\eta}_{i,t})$, where $\hat{F}_i$ is the empirical cumulative distribution function of bank $i$'s filtered residuals $\hat{\eta}_{i,t}$.
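The rank transformation can be sketched as follows. Dividing ranks by $n+1$ rather than $n$ keeps $\hat{F}_i$ strictly inside $(0,1)$, so that $-1/\log \hat{F}_i$ stays finite; this rescaling convention is an assumption, and the helper name is hypothetical.

```python
import numpy as np

def to_unit_frechet(x):
    """Map a sample to approximately standard Fréchet margins via its ranks."""
    x = np.asarray(x, dtype=float)
    n = x.size
    # Ranks 1..n; a stable sort breaks ties by order of appearance
    ranks = np.argsort(np.argsort(x, kind="stable"), kind="stable") + 1
    f_hat = ranks / (n + 1.0)                # empirical cdf kept inside (0, 1)
    return -1.0 / np.log(f_hat)              # standard Fréchet quantile: -1/log(u)
```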

The tail dependence coefficient $\lambda_{ij}$ for each bank pair is estimated using three minimum f-divergence estimators: (1) MLE (), the efficient estimator; (2) Hellinger (), the robust estimator; and (3) -divergence, with (), an alternative robust estimator that maintains efficiency. For benchmarking, I implement the Student-t copula MLE with degrees of freedom estimated via profile likelihood, the trimmed likelihood estimator with 5% trimming of extreme observations, and the rank-based estimator using the relationship between Kendall's tau and tail dependence for the Gumbel copula (Genest et al., 1995). The estimators are computed with a Newton-Raphson algorithm. Standard errors are computed from the asymptotic distributions in Theorem 3, and 95% confidence intervals use the asymptotic normality result.
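For the Gumbel copula, Kendall's tau and the upper tail dependence coefficient are linked through $\theta$: $\tau = 1 - 1/\theta$ and $\lambda_U = 2 - 2^{1/\theta}$, so $\lambda_U = 2 - 2^{1-\tau}$. The sketch below computes a simple $O(n^2)$ tau-a (no tie correction) and applies this mapping. It illustrates the rank-based benchmark under these assumptions, is not necessarily the implementation used here, and its function names are hypothetical.

```python
import numpy as np

def kendall_tau(x, y):
    """Kendall's tau-a from all pairwise sign comparisons (O(n^2), no tie correction)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    sx = np.sign(x[:, None] - x[None, :])
    sy = np.sign(y[:, None] - y[None, :])
    # Each unordered pair appears twice in the full matrix, hence n * (n - 1)
    return (sx * sy).sum() / (n * (n - 1))

def gumbel_lambda_from_tau(tau):
    """Rank-based upper tail dependence for the Gumbel copula, tau in [0, 1)."""
    theta = 1.0 / (1.0 - tau)                # Gumbel: tau = 1 - 1/theta
    if theta < 1.0:
        raise ValueError("the Gumbel copula requires tau >= 0")
    return 2.0 - 2.0 ** (1.0 / theta)        # lambda_U = 2 - 2**(1/theta)
```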

4.1. Empirical Results

Table 4 presents estimation results for three representative bank pairs that span the range of connectedness in the system. For each estimator, point estimates, standard errors, and 95% confidence intervals are reported. The results reveal several statistically and economically significant patterns. First, the MLE produces the highest point estimates and, as the efficient estimator under correct specification (Theorem 4), the smallest standard errors. Second, the robust Hellinger estimator yields systematically lower estimates (a 4–6% reduction), reflecting its resistance to model misspecification and data contamination in the tails. Third, the -divergence estimator occupies an intermediate position, with estimates between those of the MLE and Hellinger.

The benchmark comparisons are also informative. The Student-t copula MLE produces the estimates closest to the Hellinger MFDE, consistent with its robustness properties. The trimmed likelihood estimator gives similar point estimates but larger standard errors because of data reduction. The rank-based estimator is the least sensitive to extreme observations but pays in efficiency, with the widest confidence intervals among all methods. Among the three MFDEs, the confidence intervals display the theoretical efficiency-robustness trade-off: the MLE has the narrowest intervals, the Hellinger the widest (about twice as wide, consistent with the fourfold variance ratio predicted by theory), and the -divergence estimator falls in between.
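Wald intervals of the form $\hat{\lambda} \pm 1.96\,\widehat{\mathrm{se}}$ have width proportional to the standard error, so a variance ratio $r$ between two estimators implies a width ratio of $\sqrt{r}$; a roughly fourfold variance ratio therefore gives intervals about twice as wide. A short check, using illustrative values that are not taken from Table 4 (function names hypothetical):

```python
import math

def wald_ci(estimate, se, z=1.959964):
    """Two-sided 95% Wald interval based on asymptotic normality."""
    return estimate - z * se, estimate + z * se

def width_ratio_from_variance_ratio(var_ratio):
    # Interval width is 2 * z * se, and se = sqrt(variance)
    return math.sqrt(var_ratio)

# Illustrative values only: same point estimate, second variance 4x the first
lo_eff, hi_eff = wald_ci(0.70, 0.02)
lo_rob, hi_rob = wald_ci(0.70, 0.04)
ratio = (hi_rob - lo_rob) / (hi_eff - lo_eff)
```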

Using the estimator-specific $\hat{\lambda}$ values, I compute portfolio CVaR for an equally weighted portfolio of Bank of America and Citigroup with a total value of USD 100 million. Capital requirements vary substantially across estimators. MLE-based CVaR is USD 12.74 million (12.74% of portfolio value), while Hellinger-based CVaR is USD 11.92 million (11.92%), a difference of USD 820,000, or 0.82% of portfolio value. The benchmark methods have similar economic implications: the t-copula MLE implies USD 11.98 million, trimmed likelihood USD 11.84 million, and the rank-based estimator USD 11.76 million in capital requirements. The range between the most conservative (MLE) and the most robust (rank-based) approach is USD 980,000, close to USD 1 million for this single portfolio pair. For a major bank with hundreds of such counterparty relationships, the aggregate difference in capital requirements could be a material share of total capital reserves. The robust Hellinger estimator implies lower systemic risk measurements and lower capital requirements, but with greater statistical uncertainty, as the wider confidence intervals show.
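The capital differences for the BAC-C portfolio follow directly from the reported CVaR figures; a quick arithmetic check:

```python
# CVaR (USD million) for the BAC-C portfolio, as reported in the text
cvar = {"MLE": 12.74, "Hellinger": 11.92, "t-copula MLE": 11.98,
        "trimmed likelihood": 11.84, "rank-based": 11.76}
portfolio_value = 100.0  # USD million

mle_vs_hellinger = cvar["MLE"] - cvar["Hellinger"]
full_range = max(cvar.values()) - min(cvar.values())

print(f"MLE - Hellinger: {mle_vs_hellinger:.2f}m "
      f"({100 * mle_vs_hellinger / portfolio_value:.2f}% of portfolio value)")
# → MLE - Hellinger: 0.82m (0.82% of portfolio value)
print(f"Range across estimators: {full_range:.2f}m")
# → Range across estimators: 0.98m
```

The largest gap, between the MLE and the rank-based estimator, is therefore just under USD 1 million.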

These results have implications for regulators, who must balance statistical efficiency, robustness, and conservatism. Efficiency involves using the MLE when models are correctly specified. Robustness means using robust estimators when structural breaks or suspected data contamination are present. Conservatism requires incorporating estimation uncertainty into capital buffers. The proposed framework addresses this dilemma. In normal periods, regulators might prefer the efficient MLE. In crises, the robust Hellinger estimator may be more appropriate despite its higher variance.

To evaluate the proposed MFDE against established robust alternatives, we conduct a benchmarking analysis across point estimation stability, variance efficiency, and robustness to contamination.

Table 5 presents a comparative summary of all estimators based on their theoretical properties and empirical performance in our banking application.

The benchmarking reveals several key insights. First, the Hellinger MFDE achieves a favorable balance between robustness and efficiency, with lower variance than both the trimmed likelihood and rank-based estimators while retaining strong robustness properties. Second, the t-copula MLE provides natural robustness through its heavier-tailed specification but remains vulnerable to misspecification of the copula family. Third, the rank-based estimator, while the most robust to marginal misspecification, pays the highest efficiency price, with relative efficiency below 50% compared to the MLE.

These benchmarking results position the MFDE framework within the broader robust statistics literature. While alternative robust methods exist, the MFDE provides an information-theoretic approach to robustness with well-characterized efficiency properties. The explicit trade-off between robustness and efficiency, quantified by the criterion in Theorem 4, gives practitioners a theoretical foundation for estimator selection that is absent in ad hoc robust methods.

4.2. Robustness Checks

The following analyses confirm that the efficiency-robustness trade-off is a fundamental property of the estimators rather than an artifact of the Gumbel copula used in the core analysis.

Table 6 shows estimates for the BAC-C pair under the alternative Hüsler–Reiss copula, a flexible model from spatial extremes. The estimators perform consistently across copula families: the MLE is the most efficient (tightest CIs), the Hellinger is the most robust (stable point estimates), and their relative variance matches the theoretical scaling factor.

To assess sensitivity to the sample period, we vary the rolling window size used for estimation.

Table 7 presents results for the JPM-WFC pair. The theoretical relationship between the estimators’ variances holds across window sizes. The variance of the Hellinger estimator is consistently approximately four times that of the MLE.

A key concern in EVT is the choice of threshold above which observations are considered extreme. Table 8 shows that the relative performance of the estimators is insensitive to this choice. Estimates for GS-MS are reported using the 95%, 97.5%, and 99% quantiles as thresholds. While the level of the estimated tail dependence coefficient increases with the threshold, the ratio of variances between the MLE and Hellinger estimators remains stable at approximately 1:4.

The theoretical advantage of robust estimators is most pronounced during crises.

Table 9 splits the sample into crisis (2008–2009, 2011, 2020) and non-crisis periods for the high-dependence BAC-C pair. The results confirm that the Hellinger estimator’s premium is cyclical. During crises, the discrepancy between the MLE and Hellinger estimates increases. This suggests the MLE is more sensitive to the unusual dynamics and potential contamination of crisis-period data. The Hellinger estimator provides more stable risk measurements during these uncertain times. The consistent relative performance of the estimators across model specifications and sample periods demonstrates the utility of information-based methods for robust inference in extreme value settings.
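A sketch of the sample split, assuming each listed crisis episode (2008–2009, 2011, 2020) covers whole calendar years, since exact start and end dates are not given; the constant and helper names are hypothetical.

```python
from datetime import date

# ASSUMPTION: each crisis episode in the text is taken as whole calendar years
CRISIS_YEARS = frozenset({2008, 2009, 2011, 2020})

def split_by_crisis(dates, values):
    """Partition observations into crisis and non-crisis lists by calendar year."""
    crisis, calm = [], []
    for d, v in zip(dates, values):
        (crisis if d.year in CRISIS_YEARS else calm).append(v)
    return crisis, calm

crisis, calm = split_by_crisis(
    [date(2008, 10, 10), date(2013, 6, 3), date(2020, 3, 16)], [-0.12, 0.01, -0.15]
)
```

Each subsample can then be passed to the same estimators, so that MLE and Hellinger estimates are compared on identical crisis and non-crisis observations.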

This analysis shows the practical value of minimum f-divergence estimation for measuring risk in the US banking sector. The choice of divergence function has statistically significant and economically meaningful effects on risk measurements and capital requirements. The results show that robust estimators, such as the Hellinger estimator, provide stability during crises but carry higher statistical uncertainty, whereas efficient estimators such as the MLE offer precision but are vulnerable to model misspecification. The benchmarking demonstrates that the MFDE framework provides an alternative to existing robust methods, with explicit control over the robustness-efficiency trade-off through the choice of divergence function. For financial regulators and risk managers, estimator selection is a policy choice with significant economic consequences.

5. Concluding Remarks

This paper integrates the robust minimum divergence estimation paradigm with the theory of extremes to study robust and efficient estimation of tail dependence. I construct a class of minimum f-divergence estimators for tail dependence and establish strong consistency under standard regularity conditions. The main result derives the extremal Cramér–Rao bound, which sets the semiparametric efficiency limit for estimating extremal dependence when the body of the distribution is a nuisance parameter. I show that the estimator achieves this bound if and only if , providing a simple criterion that characterizes the entire class of f-divergences through a single local property. This formalizes the trade-off between asymptotic efficiency and robustness. The efficient maximum likelihood estimator () is non-robust, while robust estimators like the Hellinger distance () incur a quantifiable efficiency penalty. Through simulations and an application in the US banking sector, I show the practical implications of this trade-off.

Several limitations warrant discussion and suggest directions for future research. First, the MFDE framework remains sensitive to marginal transformations, particularly in the semiparametric setting where empirical distribution functions are used. While rank-based transformations provide some protection against marginal misspecification, they may not fully eliminate sensitivity to threshold selection in the extremes. Second, computational complexity increases substantially in high dimensions, as optimization becomes more challenging and the curse of dimensionality affects nonparametric density estimation and copula fitting. Third, adaptive threshold selection remains an open challenge in EVT applications; our analysis assumes a fixed threshold, but in practice, data-driven threshold selection methods (Bader et al., 2018) could be integrated with the MFDE framework to enhance its practical utility.

The robustness-efficiency trade-off has direct implications for regulatory policy. Our results guide when regulators should favor efficient versus robust estimators. During stable market conditions with well-specified models and trusted data quality, the efficient MLE is appropriate for capital calculation, minimizing Type I errors (overestimation of capital requirements). Conversely, during crisis periods, structural breaks, or for novel financial instruments with high model uncertainty, the robust Hellinger estimator provides insurance against misspecification, reducing Type II errors (underestimation of systemic risk). The empirical results suggest this preference should be cyclical. The efficiency premium of MLE (narrower confidence intervals) is most valuable in normal times, while the robustness premium of Hellinger (stable point estimates under contamination) becomes critical during crises. Regulators could formalize this through conditional capital requirements that incorporate estimator uncertainty, with larger capital buffers during periods where robust estimators diverge significantly from efficient ones.

For financial institutions, the choice between efficient and robust estimation is a strategic decision with material economic consequences. The empirical analysis quantifies what is at stake: in the BAC-C example, the capital estimates from the efficient and robust estimators differ by approximately 0.8% of portfolio value. This provides a basis for cost-benefit analysis when selecting estimation methodologies. Institutions with strong internal model validation may choose efficient estimators, while those operating in more uncertain environments should consider the insurance value of robust methods. The MFDE framework offers a continuum of choices between these extremes, allowing practitioners to select their preferred point on the robustness-efficiency frontier based on their specific risk tolerance and operational context.

Future research can address several important extensions. First, developing adaptive MFDE methods that automatically select the optimal divergence function based on data characteristics would enhance practical implementation. Second, extending the framework to dynamic extremal dependence models would allow for time-varying robustness-efficiency trade-offs. Third, investigating the theoretical properties of MFDE under misspecified copula families would provide deeper insights into its robustness properties. Finally, applications to other financial contexts such as credit risk, insurance, and climate risk would further test the generality of the proposed approach.