A Novel Method to Compute the Contact Surface Area Between an Organ and Cancer Tissue

Sowing, Monitoring, Detecting: A Possible Solution to Improve the Visibility of Cropmarks in Cultivated Fields

A Method for Estimating Fluorescence Emission Spectra from the Image Data of Plant Grain and Leaves Without a Spectrometer

SAVE: Self-Attention on Visual Embedding for Zero-Shot Generic Object Counting

Journal Description

Journal of Imaging is an international, multi/interdisciplinary, peer-reviewed, open access journal of imaging techniques published online monthly by MDPI.

- Open Access: free for readers, with article processing charges (APC) paid by authors or their institutions.

- High Visibility: indexed within Scopus, ESCI (Web of Science), PubMed, PMC, dblp, Inspec, Ei Compendex, and other databases.

- Journal Rank: CiteScore - Q1 (Radiology, Nuclear Medicine and Imaging)

- Rapid Publication: manuscripts are peer-reviewed and a first decision is provided to authors approximately 18.3 days after submission; acceptance to publication is undertaken in 3.3 days (median values for papers published in this journal in the second half of 2024).

- Recognition of Reviewers: reviewers who provide timely, thorough peer-review reports receive vouchers entitling them to a discount on the APC of their next publication in any MDPI journal, in appreciation of the work done.

Impact Factor: 2.7 (2023); 5-Year Impact Factor: 3.0 (2023)

Latest Articles

Dose Reduction in Scintigraphic Imaging Through Enhanced Convolutional Autoencoder-Based Denoising

J. Imaging 2025, 11(6), 197; https://doi.org/10.3390/jimaging11060197 (registering DOI) - 14 Jun 2025

Abstract

Objective: This study proposes a novel deep learning approach for enhancing low-dose bone scintigraphy images using an Enhanced Convolutional Autoencoder (ECAE), aiming to reduce patient radiation exposure while preserving diagnostic quality, as assessed by both expert-based quantitative image metrics and qualitative evaluation. Methods: A supervised learning framework was developed using real-world paired low- and full-dose images from 105 patients. Data were acquired using standard clinical gamma cameras at the Nuclear Medicine Department of the University General Hospital of Alexandroupolis. The ECAE architecture integrates multiscale feature extraction, channel attention mechanisms, and efficient residual blocks to reconstruct high-quality images from low-dose inputs. The model was trained and validated using quantitative metrics—Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM)—alongside qualitative assessments by nuclear medicine experts. Results: The model achieved significant improvements in both PSNR and SSIM across all tested dose levels, particularly between 30% and 70% of the full dose. Expert evaluation confirmed enhanced visibility of anatomical structures, noise reduction, and preservation of diagnostic detail in denoised images. In blinded evaluations, denoised images were preferred over the original full-dose scans in 66% of all cases, and in 61% of cases within the 30–70% dose range. Conclusion: The proposed ECAE model effectively reconstructs high-quality bone scintigraphy images from substantially reduced-dose acquisitions. This approach supports dose reduction in nuclear medicine imaging while maintaining—or even enhancing—diagnostic confidence, offering practical benefits in patient safety, workflow efficiency, and environmental impact.

(This article belongs to the Special Issue Clinical and Pathological Imaging in the Era of Artificial Intelligence: New Insights and Perspectives—2nd Edition)
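
The PSNR metric named in the abstract above can be sketched in a few lines. This is a minimal, standard-library illustration of the usual definition, PSNR = 10 log10(MAX² / MSE), and not the authors' evaluation code; the function name `psnr`, the 8-bit peak value of 255, and the tiny 2×2 example images are assumptions made for illustration only.

```python
import math


def psnr(reference, test, max_value=255.0):
    """Peak Signal-to-Noise Ratio in dB between two equally sized 2D images.

    `reference` and `test` are lists of rows of pixel intensities.
    `max_value` is the assumed peak intensity (255 for 8-bit images).
    """
    ref_flat = [p for row in reference for p in row]
    test_flat = [p for row in test for p in row]
    if not ref_flat or len(ref_flat) != len(test_flat):
        raise ValueError("images must be non-empty and have the same size")
    # Mean squared error over all pixels.
    mse = sum((r - t) ** 2 for r, t in zip(ref_flat, test_flat)) / len(ref_flat)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical pixel values, not data from the study.
full_dose = [[100, 120], [130, 150]]
denoised = [[101, 119], [131, 149]]
print(f"PSNR: {psnr(full_dose, denoised):.2f} dB")
```

Higher values indicate that the test image is closer to the reference; SSIM, the second metric in the abstract, additionally compares local structure and is not covered by this sketch.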

Open Access Article

Generation of Synthetic Non-Homogeneous Fog by Discretized Radiative Transfer Equation

by Marcell Beregi-Kovacs, Balazs Harangi, Andras Hajdu and Gyorgy Gat

J. Imaging 2025, 11(6), 196; https://doi.org/10.3390/jimaging11060196 - 13 Jun 2025

Abstract

The synthesis of realistic fog in images is critical for applications such as autonomous navigation, augmented reality, and visual effects. Traditional methods based on Koschmieder’s law or GAN-based image translation typically assume homogeneous fog distributions and rely on oversimplified scattering models, limiting their physical realism. In this paper, we propose a physics-driven approach to fog synthesis by discretizing the Radiative Transfer Equation (RTE). Our method models spatially inhomogeneous fog and anisotropic multi-scattering, enabling the generation of structurally consistent and perceptually plausible fog effects. To evaluate performance, we construct a dataset of real-world foggy, cloudy, and sunny images and compare our results against both Koschmieder-based and GAN-based baselines. Experimental results show that our method achieves a lower Fréchet Inception Distance (

(This article belongs to the Section Image and Video Processing)

Open Access Article

Optical Coherence Tomography (OCT) Findings in Post-COVID-19 Healthcare Workers

by Sanela Sanja Burgić, Mirko Resan, Milka Mavija, Saša Smoljanović Skočić, Sanja Grgić, Daliborka Tadić and Bojan Pajic

J. Imaging 2025, 11(6), 195; https://doi.org/10.3390/jimaging11060195 - 12 Jun 2025

Abstract

Recent evidence suggests that SARS-CoV-2 may induce subtle anatomical changes in the retina, detectable through advanced imaging techniques. This retrospective case–control study utilized optical coherence tomography (OCT) to assess medium-term retinal alterations in 55 healthcare workers, including 25 individuals with PCR-confirmed COVID-19 and 30 non-COVID-19 controls, all of whom had worked in COVID-19 clinical settings. Comprehensive ophthalmological examinations, including OCT imaging, were conducted six months after infection. The analysis considered demographic variables, comorbidities, COVID-19 severity, risk factors, and treatments received. Central macular thickness (CMT) was significantly increased in the post-COVID-19 group (p < 0.05), with a weak but statistically significant positive correlation between CMT and disease severity (r = 0.245, p < 0.05), suggesting potential post-inflammatory retinal responses. No significant differences were observed in retinal nerve fiber layer (RNFL) or ganglion cell complex (GCL + IPL) thickness. However, mild negative trends in inferior RNFL and average GCL+IPL thickness may indicate early neurodegenerative changes. Notably, patients with comorbidities exhibited a significant reduction in superior and inferior RNFL thickness, pointing to possible long-term neurovascular impairment. These findings underscore the value of OCT imaging in identifying subclinical retinal alterations following COVID-19 and highlight the need for continued surveillance in recovered patients, particularly those with pre-existing systemic conditions.

(This article belongs to the Special Issue Learning and Optimization for Medical Imaging)

Open Access Article

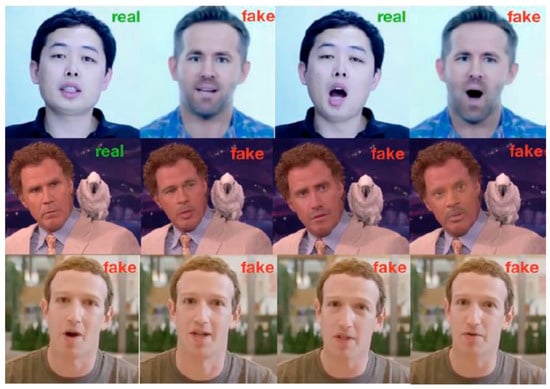

Evaluating Features and Variations in Deepfake Videos Using the CoAtNet Model

by Eman Alattas, John Clark, Arwa Al-Aama and Salma Kammoun Jarraya

J. Imaging 2025, 11(6), 194; https://doi.org/10.3390/jimaging11060194 - 12 Jun 2025

Abstract

Deepfake video detection has emerged as a critical challenge in the realm of artificial intelligence, given its implications for misinformation and digital security. This study evaluates the generalisation capabilities of the CoAtNet model—a hybrid convolution–transformer architecture—for deepfake detection across diverse datasets. Although CoAtNet has shown exceptional performance in several computer vision tasks, its potential for generalisation in cross-dataset scenarios remains underexplored. Thus, in this study, we explore CoAtNet’s generalisation ability by conducting an extensive series of experiments with a focus on discovering features and variations in deepfake videos. These experiments involve training the model using various input and processing configurations, followed by evaluating its performance on widely recognised public datasets. To the best of our knowledge, our proposed approach outperforms state-of-the-art models in terms of intra-dataset performance, with an AUC between 81.4% and 99.9%. Our model also achieves outstanding results in cross-dataset evaluations, with an AUC equal to 78%. This study demonstrates that CoAtNet achieves the best AUC for both intra-dataset and cross-dataset deepfake video detection, particularly on Celeb-DF, while also showing strong performance on DFDC.

(This article belongs to the Special Issue Advancements in Deepfake Technology, Biometry System and Multimedia Forensics)

Open Access Article

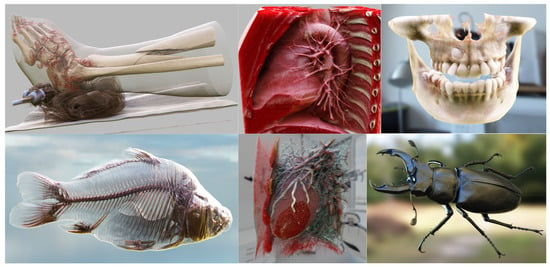

Efficient Multi-Material Volume Rendering for Realistic Visualization with Complex Transfer Functions

by Chunxiao Xu, Xinran Xu, Jiatian Zhang, Yiheng Cao and Lingxiao Zhao

J. Imaging 2025, 11(6), 193; https://doi.org/10.3390/jimaging11060193 - 11 Jun 2025

Abstract

Physically based realistic direct volume rendering (DVR) is a critical area of research in scientific data visualization. The prevailing realistic DVR methods are primarily rooted in outdated theories of participating media rendering and often lack comprehensive analyses of their applicability to realistic DVR scenarios. As a result, the fidelity of material representation in the rendered output is frequently limited. To address these challenges, we present a novel multi-material radiative transfer model (MM-RTM) designed for realistic DVR, grounded in recent advancements in light transport theories. Additionally, we standardize various transfer function techniques and propose five distinct forms of transfer functions along with proxy volumes. This comprehensive approach enables our DVR framework to accommodate a wide range of complex transfer function techniques, which we illustrate through several visualizations. Furthermore, to enhance sampling efficiency, we develop a new multi-hierarchical volumetric acceleration method that supports multi-level searches and volume traversal. Our volumetric accelerator also facilitates real-time structural updates when applying complex transfer functions in DVR. Our MM-RTM, the unified representation of complex transfer functions, and the acceleration structure for real-time updates are complementary components that collectively establish a comprehensive framework for realistic multi-material DVR. Evaluation from a user study indicates that the rendering results produced by our method demonstrate the most realistic effects among various publicly available state-of-the-art techniques.

(This article belongs to the Section Visualization and Computer Graphics)

Open Access Article

SADiff: Coronary Artery Segmentation in CT Angiography Using Spatial Attention and Diffusion Model

by Ruoxuan Xu, Longhui Dai, Jianru Wang, Lei Zhang and Yuanquan Wang

J. Imaging 2025, 11(6), 192; https://doi.org/10.3390/jimaging11060192 - 11 Jun 2025

Abstract

Coronary artery disease (CAD) is a highly prevalent cardiovascular disease and one of the leading causes of death worldwide. The accurate segmentation of coronary arteries from CT angiography (CTA) images is essential for the diagnosis and treatment of coronary artery disease. However, due to small vessel diameters, large morphological variations, low contrast, and motion artifacts, conventional segmentation methods, including classical image processing (such as region growing and level sets) and early deep learning models with limited receptive fields, are unsatisfactory. We propose SADiff, a hybrid framework that integrates a dilated attention network (DAN) for ROI extraction, a diffusion-based subnet for noise suppression in low-contrast regions, and a striped attention network (SAN) to refine tubular structures affected by morphological variations. Experiments on the public ImageCAS dataset show that it has a Dice score of 83.48% and a Hausdorff distance of 19.43 mm, which is 6.57% higher than U-Net3D in terms of Dice. The cross-dataset validation on the private ImageLaPP dataset verifies its generalizability with a Dice score of 79.42%. This comprehensive evaluation demonstrates that SADiff provides a more efficient and versatile method for coronary segmentation and shows great potential for improving the diagnosis and treatment of CAD.

(This article belongs to the Section Computer Vision and Pattern Recognition)
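
The Dice score reported in the abstract above measures overlap between a predicted mask and a reference mask: Dice = 2|P ∩ T| / (|P| + |T|). The sketch below is a minimal standard-library illustration of that definition, not the SADiff evaluation code; the function name `dice_score`, the convention of returning 1.0 for two empty masks, and the small 2D masks are assumptions for illustration.

```python
def dice_score(pred, truth):
    """Dice coefficient between two binary masks given as lists of rows."""
    pred_flat = [bool(v) for row in pred for v in row]
    truth_flat = [bool(v) for row in truth for v in row]
    if len(pred_flat) != len(truth_flat):
        raise ValueError("masks must have the same size")
    # Pixels labelled foreground in both masks.
    intersection = sum(p and t for p, t in zip(pred_flat, truth_flat))
    total = sum(pred_flat) + sum(truth_flat)
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * intersection / total


# Hypothetical masks, not data from the study.
predicted = [[1, 1, 0],
             [0, 1, 0]]
reference = [[1, 0, 0],
             [0, 1, 1]]
print(f"Dice: {dice_score(predicted, reference):.4f}")
```

For thin tubular structures such as coronary arteries, a few misplaced voxels change the overlap considerably, which is one reason a boundary metric like the Hausdorff distance is usually reported alongside Dice.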

Open Access Article

Prediction of PD-L1 and CD68 in Clear Cell Renal Cell Carcinoma with Green Learning

by Yixing Wu, Alexander Shieh, Steven Cen, Darryl Hwang, Xiaomeng Lei, S. J. Pawan, Manju Aron, Inderbir Gill, William D. Wallace, C.-C. Jay Kuo and Vinay Duddalwar

J. Imaging 2025, 11(6), 191; https://doi.org/10.3390/jimaging11060191 - 10 Jun 2025

Abstract

Clear cell renal cell carcinoma (ccRCC) is the most common type of renal cancer. Extensive efforts have been made to utilize radiomics from computed tomography (CT) imaging to predict tumor immune microenvironment (TIME) measurements. This study proposes a Green Learning (GL) framework for approximating tissue-based biomarkers from CT scans, focusing on the PD-L1 expression and CD68 tumor-associated macrophages (TAMs) in ccRCC. Our approach includes radiomic feature extraction, redundancy removal, and supervised feature selection through a discriminant feature test (DFT), a relevant feature test (RFT), and least-squares normal transform (LNT) for robust feature generation. For the PD-L1 expression in 52 ccRCC patients, treated as a regression problem, our GL model achieved a 5-fold cross-validated mean squared error (MSE) of 0.0041 and a Mean Absolute Error (MAE) of 0.0346. For the TAM population (CD68+/PanCK+), analyzed in 78 ccRCC patients as a binary classification task (at a 0.4 threshold), the model reached a 10-fold cross-validated Area Under the Receiver Operating Characteristic (AUROC) of 0.85 (95% CI [0.76, 0.93]) using 10 LNT-derived features, improving upon the previous benchmark of 0.81. This study demonstrates the potential of GL in radiomic analyses, offering a scalable, efficient, and interpretable framework for the non-invasive approximation of key biomarkers.

(This article belongs to the Special Issue Imaging in Healthcare: Progress and Challenges)
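
The AUROC quoted in the abstract above can be read as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The sketch below computes it by direct pairwise comparison. It is an illustration of the metric only, not the study's Green Learning pipeline or its cross-validation procedure; the function name `auroc` and the labels and scores are assumptions.

```python
def auroc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positives and negatives.

    `labels` holds 0/1 class labels and `scores` the classifier outputs.
    Runs in O(n_pos * n_neg), which is fine for small cohorts.
    """
    positives = [s for l, s in zip(labels, scores) if l == 1]
    negatives = [s for l, s in zip(labels, scores) if l == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(positives) * len(negatives))


# Hypothetical labels and scores, not data from the study.
example_labels = [0, 0, 1, 1]
example_scores = [0.1, 0.4, 0.35, 0.8]
print(f"AUROC: {auroc(example_labels, example_scores):.2f}")
```

A value of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation of the two classes.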

Open Access Article

Insulator Surface Defect Detection Method Based on Graph Feature Diffusion Distillation

by Shucai Li, Na Zhang, Gang Yang, Yannong Hou and Xingzhong Zhang

J. Imaging 2025, 11(6), 190; https://doi.org/10.3390/jimaging11060190 - 10 Jun 2025

Abstract

To address the scarcity of surface defect samples for power insulators, the irregular morphology of defects, and insufficient pixel-level localization accuracy, this paper proposes a defect detection method based on graph feature diffusion distillation, named GFDD. The feature bias problem is alleviated by constructing a dual-division teachers architecture with graph feature consistency constraints, while the cross-layer feature fusion module is utilized to dynamically aggregate multi-scale information to reduce redundancy; the diffusion distillation mechanism is designed to break through the traditional single-layer feature transfer limitation, and the global context modeling capability is enhanced by fusing deep semantics and shallow details through channel attention. On the self-built dataset, GFDD achieves 96.6% Pi.AUROC, 97.7% Im.AUROC and 95.1% F1-score, which is 2.4–3.2% higher than the existing optimal methods; it maintains excellent generalization and robustness in multiple public dataset tests. The method provides a high-precision solution for automated inspection of insulator surface defects and has certain engineering value.

(This article belongs to the Special Issue Self-Supervised Learning for Image Processing and Analysis)

Open Access Article

SAM for Road Object Segmentation: Promising but Challenging

by Alaa Atallah Almazroey, Salma Kammoun Jarraya and Reem Alnanih

J. Imaging 2025, 11(6), 189; https://doi.org/10.3390/jimaging11060189 - 10 Jun 2025

Abstract

Road object segmentation is crucial for autonomous driving, as it enables vehicles to perceive their surroundings. While deep learning models show promise, their generalization across diverse road conditions, weather variations, and lighting changes remains challenging. Different approaches have been proposed to address this limitation. However, these models often struggle with the varying appearance of road objects under diverse environmental conditions. Foundation models such as the Segment Anything Model (SAM) offer a potential avenue for improved generalization in complex visual tasks. Thus, this study presents a pioneering comprehensive evaluation of the SAM for zero-shot road object segmentation, without explicit prompts. This study aimed to determine the inherent capabilities and limitations of the SAM in accurately segmenting a variety of road objects under the diverse and challenging environmental conditions encountered in real-world autonomous driving scenarios. We assessed the SAM’s performance on the KITTI, BDD100K, and Mapillary Vistas datasets, encompassing a wide range of environmental conditions. Using a variety of established evaluation metrics, our analysis revealed the SAM’s capabilities and limitations in accurately segmenting various road objects, particularly highlighting challenges posed by dynamic environments, illumination changes, and occlusions. These findings provide valuable insights for researchers and developers seeking to enhance the robustness of foundation models such as the SAM in complex road environments, guiding future efforts to improve perception systems for autonomous driving.

(This article belongs to the Section Computer Vision and Pattern Recognition)

Open Access Article

CSANet: Context–Spatial Awareness Network for RGB-T Urban Scene Understanding

by Ruixiang Li, Zhen Wang, Jianxin Guo and Chuanlei Zhang

J. Imaging 2025, 11(6), 188; https://doi.org/10.3390/jimaging11060188 - 9 Jun 2025

Abstract

Semantic segmentation plays a critical role in understanding complex urban environments, particularly for autonomous driving applications. However, existing approaches face significant challenges under low-light and adverse weather conditions. To address these limitations, we propose CSANet (Context Spatial Awareness Network), a novel framework that effectively integrates RGB and thermal infrared (TIR) modalities. CSANet employs an efficient encoder to extract complementary local and global features, while a hierarchical fusion strategy is adopted to selectively integrate visual and semantic information. Notably, the Channel–Spatial Cross-Fusion Module (CSCFM) enhances local details by fusing multi-modal features, and the Multi-Head Fusion Module (MHFM) captures global dependencies and calibrates multi-modal information. Furthermore, the Spatial Coordinate Attention Mechanism (SCAM) improves object localization accuracy in complex urban scenes. Evaluations on benchmark datasets (MFNet and PST900) demonstrate that CSANet achieves state-of-the-art performance, significantly advancing RGB-T semantic segmentation.

(This article belongs to the Topic Transformer and Deep Learning Applications in Image Processing)

Open Access Article

Biomechanics of Spiral Fractures: Investigating Periosteal Effects Using Digital Image Correlation

by Ghaidaa A. Khalid, Ali Al-Naji and Javaan Chahl

J. Imaging 2025, 11(6), 187; https://doi.org/10.3390/jimaging11060187 - 7 Jun 2025

Abstract

Spiral fractures are a frequent clinical manifestation of child abuse, particularly in non-ambulatory infants. Approximately 50% of fractures in children under one year of age are non-accidental, yet differentiating between accidental and abusive injuries remains challenging, as no single fracture type is diagnostic in isolation. The objective of this study is to investigate the biomechanics of spiral fractures in immature long bones and the role of the periosteum in modulating fracture behavior under torsional loading. Methods: Paired metatarsal bone specimens from immature sheep were tested using controlled torsional loading at two angular velocities (90°/s and 180°/s). Specimens were prepared through potting, application of a base coat, and painting of a speckle pattern suitable for high-speed digital image correlation (HS-DIC) analysis. Both periosteum-intact and periosteum-removed groups were included. Results: Spiral fractures were successfully induced in over 85% of specimens. Digital image correlation revealed localized diagonal tensile strain at the fracture initiation site, with opposing compressive zones. Notably, bones with intact periosteum exhibited broader tensile stress regions before and after failure, suggesting a biomechanical role in constraining deformation. Conclusion: This study presents a novel integration of high-speed digital image correlation (DIC) with paired biomechanical testing to evaluate the periosteum’s role in spiral fracture formation—an area that remains underexplored. The findings offer new insight into the strain distribution dynamics in immature long bones and highlight the periosteum’s potential protective contribution under torsional stress.

(This article belongs to the Section Medical Imaging)

Open Access Article

NCT-CXR: Enhancing Pulmonary Abnormality Segmentation on Chest X-Rays Using Improved Coordinate Geometric Transformations

by Abu Salam, Pulung Nurtantio Andono, Purwanto, Moch Arief Soeleman, Mohamad Sidiq, Farrikh Alzami, Ika Novita Dewi, Suryanti, Eko Adhi Pangarsa, Daniel Rizky, Budi Setiawan, Damai Santosa, Antonius Gunawan Santoso, Farid Che Ghazali and Eko Supriyanto

J. Imaging 2025, 11(6), 186; https://doi.org/10.3390/jimaging11060186 - 5 Jun 2025

Abstract

Medical image segmentation, especially in chest X-ray (CXR) analysis, encounters substantial problems such as class imbalance, annotation inconsistencies, and the necessity for accurate pathological region identification. This research aims to improve the precision and clinical reliability of pulmonary abnormality segmentation by developing NCT-CXR, a framework that combines anatomically constrained data augmentation with expert-guided annotation refinement. NCT-CXR applies carefully calibrated discrete-angle rotations (±5°, ±10°) and intensity-based augmentations to enrich training data while preserving spatial and anatomical integrity. To address label noise in the NIH Chest X-ray dataset, we further introduce a clinically validated annotation refinement pipeline using the OncoDocAI platform, resulting in multi-label pixel-level segmentation masks for nine thoracic conditions. YOLOv8 was selected as the segmentation backbone due to its architectural efficiency, speed, and high spatial accuracy. Experimental results show that NCT-CXR significantly improves segmentation precision, especially for pneumothorax (0.829 and 0.804 for ±5° and ±10°, respectively). Non-parametric statistical testing (Kruskal–Wallis, H = 14.874, p = 0.0019) and post hoc Nemenyi analysis (p = 0.0138 and p = 0.0056) confirm the superiority of discrete-angle augmentation over mixed strategies. These findings underscore the importance of clinically constrained augmentation and high-quality annotation in building robust segmentation models. NCT-CXR offers a practical, high-performance solution for integrating deep learning into radiological workflows.

(This article belongs to the Special Issue Clinical and Pathological Imaging in the Era of Artificial Intelligence: New Insights and Perspectives—2nd Edition)

Open Access Article

Comparison of Imaging Modalities for Left Ventricular Noncompaction Morphology

by Márton Horváth, Dorottya Kiss, István Márkusz, Márton Tokodi, Anna Réka Kiss, Zsófia Gregor, Kinga Grebur, Kristóf Farkas-Sütő, Balázs Mester, Flóra Gyulánczi, Attila Kovács, Béla Merkely, Hajnalka Vágó and Andrea Szűcs

J. Imaging 2025, 11(6), 185; https://doi.org/10.3390/jimaging11060185 - 4 Jun 2025

Abstract

Left ventricular noncompaction (LVNC) is characterized by excessive trabeculation, which may impair left ventricular function over time. While cardiac magnetic resonance imaging (CMR) is considered the gold standard for evaluating LV morphology, the optimal modality for follow-up remains uncertain. This study aimed to assess the correlation and agreement among two-dimensional transthoracic echocardiography (2D_TTE), three-dimensional transthoracic echocardiography (3D_TTE), and CMR by comparing volumetric and strain parameters in LVNC patients and healthy individuals. Thirty-eight LVNC subjects with preserved ejection fraction and thirty-four healthy controls underwent all three imaging modalities. Indexed end-diastolic, end-systolic, and stroke volumes, ejection fraction, and global longitudinal and circumferential strains were evaluated using Pearson correlation and Bland–Altman analysis. In the healthy group, volumetric parameters showed strong correlation and good agreement across modalities, particularly between 3D_TTE and CMR. In contrast, agreement in the LVNC group was moderate, with lower correlation and higher percentage errors, especially for strain parameters. Functional data exhibited weak or no correlation, regardless of group. These findings suggest that while echocardiography may be suitable for volumetric follow-up in LVNC after baseline CMR, deformation parameters are not interchangeable between modalities, likely due to trabecular interference. Further studies are warranted to validate modality-specific strain assessment in hypertrabeculated hearts.

(This article belongs to the Section Medical Imaging)
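
Bland–Altman analysis, used in the abstract above to assess agreement between modalities, summarizes paired measurements by the mean difference (bias) and the 95% limits of agreement, conventionally bias ± 1.96 standard deviations of the differences. The sketch below shows that calculation with the standard library only. It is not the study's analysis code; the function name `bland_altman` and the paired volume values are assumptions for illustration.

```python
import statistics


def bland_altman(method_a, method_b):
    """Return (bias, lower_limit, upper_limit) for paired measurements.

    bias is the mean of the pairwise differences (a - b); the limits of
    agreement are bias +/- 1.96 times the sample standard deviation.
    """
    if len(method_a) != len(method_b) or len(method_a) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [a - b for a, b in zip(method_a, method_b)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Hypothetical paired volumes from two modalities, not data from the study.
volumes_a = [10.0, 12.0, 14.0, 16.0]
volumes_b = [9.0, 12.0, 13.0, 16.0]
bias, lower, upper = bland_altman(volumes_a, volumes_b)
print(f"bias = {bias:.3f}, limits of agreement = [{lower:.3f}, {upper:.3f}]")
```

Unlike Pearson correlation, which only measures how linearly related two sets of readings are, the bias and limits show how far the two modalities can differ on an individual measurement, which is why the two analyses are typically reported together.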

Open Access Article

COSMICA: A Novel Dataset for Astronomical Object Detection with Evaluation Across Diverse Detection Architectures

by Evgenii Piratinskii and Irina Rabaev

J. Imaging 2025, 11(6), 184; https://doi.org/10.3390/jimaging11060184 - 4 Jun 2025

Abstract

Accurate and efficient detection of celestial objects in telescope imagery is a fundamental challenge in both professional and amateur astronomy. Traditional methods often struggle with noise, varying brightness, and object morphology. This paper introduces COSMICA, a novel, curated dataset of manually annotated astronomical images collected from amateur observations. COSMICA enables the development and evaluation of real-time object detection systems intended for practical deployment in observational pipelines. We investigate three modern YOLO architectures, YOLOv8, YOLOv9, and YOLOv11, and two additional object detection models, EfficientDet-Lite0 and MobileNetV3-FasterRCNN-FPN, to assess their performance in detecting comets, galaxies, nebulae, and globular clusters. All models are evaluated using consistent experimental conditions across multiple metrics, including mAP, precision, recall, and inference speed. YOLOv11 demonstrated the highest overall accuracy and computational efficiency, making it a promising candidate for real-world astronomical applications. These results support the feasibility of integrating deep learning-based detection systems into observational astronomy workflows and highlight the importance of domain-specific datasets for training robust AI models.

(This article belongs to the Section Computer Vision and Pattern Recognition)

Open Access Article

Platelets Image Classification Through Data Augmentation: A Comparative Study of Traditional Imaging Augmentation and GAN-Based Synthetic Data Generation Techniques Using CNNs

by

Itunuoluwa Abidoye, Frances Ikeji, Charlie A. Coupland, Simon D. J. Calaminus, Nick Sander and Eva Sousa

J. Imaging 2025, 11(6), 183; https://doi.org/10.3390/jimaging11060183 - 4 Jun 2025

Abstract

Platelets play a crucial role in diagnosing and detecting various diseases, influencing the progression of conditions and guiding treatment options. Accurate identification and classification of platelets are essential for these purposes. The present study aims to create a synthetic database of platelet images using Generative Adversarial Networks (GANs) and validate its effectiveness by comparing it with datasets of increasing sizes generated through traditional augmentation techniques. Starting from an initial dataset of 71 platelet images, the dataset was expanded to 141 images (Level 1) using random oversampling and basic transformations and further to 1463 images (Level 2) through extensive augmentation (rotation, shear, zoom). Additionally, a synthetic dataset of 300 images was generated using a Wasserstein GAN with Gradient Penalty (WGAN-GP). Eight pre-trained deep learning models (DenseNet121, DenseNet169, DenseNet201, VGG16, VGG19, InceptionV3, InceptionResNetV2, and AlexNet) and two custom CNNs were evaluated across these datasets. Performance was measured using accuracy, precision, recall, and F1-score. On the extensively augmented dataset (Level 2), InceptionV3 and InceptionResNetV2 reached 99% accuracy and 99% precision/recall/F1-score, while DenseNet201 closely followed, with 98% accuracy, precision, recall and F1-score. GAN-augmented data further improved DenseNet’s performance, demonstrating the potential of GAN-generated images in enhancing platelet classification, especially where data are limited. These findings highlight the benefits of combining traditional and GAN-based augmentation techniques to improve classification performance in medical imaging tasks.

(This article belongs to the Topic Machine Learning and Deep Learning in Medical Imaging)

Open Access Article

A Structured and Methodological Review on Multi-View Human Activity Recognition for Ambient Assisted Living

by

Fahmid Al Farid, Ahsanul Bari, Abu Saleh Musa Miah, Sarina Mansor, Jia Uddin and S. Prabha Kumaresan

J. Imaging 2025, 11(6), 182; https://doi.org/10.3390/jimaging11060182 - 3 Jun 2025

Abstract

Ambient Assisted Living (AAL) leverages technology to support the elderly and individuals with disabilities. A key challenge in these systems is efficient Human Activity Recognition (HAR). However, no study has systematically compared single-view (SV) and multi-view (MV) Human Activity Recognition approaches. This review addresses this gap by analyzing the evolution from single-view to multi-view recognition systems, covering benchmark datasets, feature extraction methods, and classification techniques. We examine how activity recognition systems have transitioned to multi-view architectures using advanced deep learning models optimized for Ambient Assisted Living, thereby improving accuracy and robustness. Furthermore, we explore a wide range of machine learning and deep learning models—including Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, Temporal Convolutional Networks (TCNs), and Graph Convolutional Networks (GCNs)—along with lightweight transfer learning methods suitable for environments with limited computational resources. Key challenges such as data remediation, privacy, and generalization are discussed, alongside potential solutions such as sensor fusion and advanced learning strategies. This study offers comprehensive insights into recent advancements and future directions, guiding the development of intelligent, efficient, and privacy-compliant Human Activity Recognition systems for Ambient Assisted Living applications.

(This article belongs to the Section Computer Vision and Pattern Recognition)

Open Access Article

3D Echocardiographic Assessment of Right Ventricular Involvement of Left Ventricular Hypertrabecularization from a New Perspective

by

Márton Horváth, Kristóf Farkas-Sütő, Flóra Klára Gyulánczi, Alexandra Fábián, Bálint Lakatos, Anna Réka Kiss, Kinga Grebur, Zsófia Gregor, Balázs Mester, Attila Kovács, Béla Merkely and Andrea Szűcs

J. Imaging 2025, 11(6), 181; https://doi.org/10.3390/jimaging11060181 - 3 Jun 2025

Abstract

Right ventricular (RV) involvement in left ventricular hypertrabeculation (LVNC) remains under investigation. Due to its complex anatomy, assessing RV function is challenging, but 3D transthoracic echocardiography (3D_TTE) offers valuable insights. We aimed to evaluate volumetric, functional, and strain parameters of both ventricles in LVNC patients with preserved left ventricular ejection fraction (EF) and compare findings to a control group. This study included 37 LVNC patients and 37 age- and sex-matched controls. 3D_TTE recordings were analyzed using TomTec Image Arena (v. 4.7) and reVISION software to assess volumes, EF, and global/segmental strains. RV EF was further divided into longitudinal (LEF), radial (REF), and antero-posterior (AEF) components. LV volumes were significantly higher in the LVNC group, while RV volumes were comparable. EF and strain values were lower in both ventricles in LVNC patients. RV movement analysis showed significantly reduced LEF and REF, whereas AEF remained normal. These findings suggest subclinical RV dysfunction in LVNC, emphasizing the need for follow-up, even with preserved EF.

(This article belongs to the Section Medical Imaging)

Open Access Brief Report

Photon-Counting Detector CT Scan of Dinosaur Fossils: Initial Experience

by

Tasuku Wakabayashi, Kenji Takata, Soichiro Kawabe, Masato Shimada, Takeshi Mugitani, Takuya Yachida, Rikiya Maruyama, Satomi Kanai, Kiyotaka Takeuchi, Tomohiro Kotsuji, Toshiki Tateishi, Hideki Hyodoh and Tetsuya Tsujikawa

J. Imaging 2025, 11(6), 180; https://doi.org/10.3390/jimaging11060180 - 2 Jun 2025

Abstract

Beyond clinical areas, photon-counting detector (PCD) CT is innovatively applied to study paleontological specimens. This study presents a preliminary investigation into the application of PCD-CT for imaging large dinosaur fossils, comparing it with standard energy-integrating detector (EID) CT. The left dentary of Tyrannosaurus and the skull of Camarasaurus were imaged using PCD-CT in ultra-high-resolution mode and EID-CT. The image quality of the PCD-CT and EID-CT scans of the fossils was assessed visually. Compared with EID-CT, PCD-CT yielded higher-resolution anatomical images free of image deterioration, achieving a better definition of the Tyrannosaurus mandibular canal and the three semicircular canals of Camarasaurus. PCD-CT clearly depicts the internal structure and morphology of large dinosaur fossils without damaging them and also provides spectral information, thus allowing researchers to gain insights into fossil mineral composition and the preservation state in the future.

(This article belongs to the Section Computational Imaging and Computational Photography)

Open Access Article

Optimizing Remote Sensing Image Retrieval Through a Hybrid Methodology

by

Sujata Alegavi and Raghvendra Sedamkar

J. Imaging 2025, 11(6), 179; https://doi.org/10.3390/jimaging11060179 - 28 May 2025

Abstract

The contemporary challenge in remote sensing lies in the precise retrieval of increasingly abundant and high-resolution remotely sensed images (RS image) stored in expansive data warehouses. The heightened spatial and spectral resolutions, coupled with accelerated image acquisition rates, necessitate advanced tools for effective data management, retrieval, and exploitation. The classification of large-sized images at the pixel level generates substantial data, escalating the workload and search space for similarity measurement. Semantic-based image retrieval remains an open problem due to limitations in current artificial intelligence techniques. Furthermore, on-board storage constraints compel the application of numerous compression algorithms to reduce storage space, intensifying the difficulty of retrieving substantial, sensitive, and target-specific data. This research proposes an innovative hybrid approach to enhance the retrieval of remotely sensed images. The approach leverages multilevel classification and multiscale feature extraction strategies to enhance performance. The retrieval system comprises two primary phases: database building and retrieval. Initially, the proposed Multiscale Multiangle Mean-shift with Breaking Ties (MSMA-MSBT) algorithm selects informative unlabeled samples for hyperspectral and synthetic aperture radar images through an active learning strategy. Addressing the scaling and rotation variations in image capture, a flexible and dynamic algorithm, modified Deep Image Registration using Dynamic Inlier (IRDI), is introduced for image registration. Given the complexity of remote sensing images, feature extraction occurs at two levels. Low-level features are extracted using the modified Multiscale Multiangle Completed Local Binary Pattern (MSMA-CLBP) algorithm to capture local contexture features, while high-level features are obtained through a hybrid CNN structure combining pretrained networks (Alexnet, Caffenet, VGG-S, VGG-M, VGG-F, VGG-VDD-16, VGG-VDD-19) and a fully connected dense network. Fusion of low- and high-level features facilitates final class distinction, with soft thresholding mitigating misclassification issues. A region-based similarity measurement enhances matching percentages. Results, evaluated on high-resolution remote sensing datasets, demonstrate the effectiveness of the proposed method, outperforming traditional algorithms with an average accuracy of 86.66%. The hybrid retrieval system exhibits substantial improvements in classification accuracy, similarity measurement, and computational efficiency compared to state-of-the-art scene classification and retrieval methods.

(This article belongs to the Topic Computational Intelligence in Remote Sensing: 2nd Edition)

Open Access Article

High-Throughput ORB Feature Extraction on Zynq SoC for Real-Time Structure-from-Motion Pipelines

by

Panteleimon Stamatakis and John Vourvoulakis

J. Imaging 2025, 11(6), 178; https://doi.org/10.3390/jimaging11060178 - 28 May 2025

Abstract

This paper presents a real-time system for feature detection and description, the first stage in a structure-from-motion (SfM) pipeline. The proposed system leverages an optimized version of the ORB algorithm (oriented FAST and rotated BRIEF) implemented on the Digilent Zybo Z7020 FPGA board equipped with the Xilinx Zynq-7000 SoC. The system accepts real-time video input (60 fps, 1920 × 1080 resolution, 24-bit color) via HDMI or a camera module. In order to support high frame rates for full-HD images, a double-data-rate pipeline scheme was adopted for Harris functions. Gray-scale video with features identified in red is exported through a separate HDMI port. Feature descriptors are calculated inside the FPGA by Zynq’s programmable logic and verified using Xilinx’s ILA IP block on a connected computer running Vivado. The implemented system achieves a latency of 192.7 microseconds, which is suitable for real-time applications. The proposed architecture is evaluated in terms of repeatability, matching retention and matching accuracy in several image transformations. It meets satisfactory accuracy and performance considering that there are slight changes between successive frames. This work paves the way for future research on the implementation of the remaining stages of a real-time SfM pipeline on the proposed hardware platform.

(This article belongs to the Special Issue Recent Techniques in Image Feature Extraction)

Topics

Topic in

Applied Sciences, Computation, Entropy, J. Imaging, Optics

Color Image Processing: Models and Methods (CIP: MM)

Topic Editors: Giuliana Ramella, Isabella Torcicollo

Deadline: 30 July 2025

Topic in

Applied Sciences, Bioengineering, Diagnostics, J. Imaging, Signals

Signal Analysis and Biomedical Imaging for Precision Medicine

Topic Editors: Surbhi Bhatia Khan, Mo Saraee

Deadline: 31 August 2025

Topic in

Animals, Computers, Information, J. Imaging, Veterinary Sciences

AI, Deep Learning, and Machine Learning in Veterinary Science Imaging

Topic Editors: Vitor Filipe, Lio Gonçalves, Mário Ginja

Deadline: 31 October 2025

Topic in

Applied Sciences, Electronics, MAKE, J. Imaging, Sensors

Applied Computer Vision and Pattern Recognition: 2nd Edition

Topic Editors: Antonio Fernández-Caballero, Byung-Gyu Kim

Deadline: 31 December 2025

Special Issues

Special Issue in

J. Imaging

Data-Centric Computer Vision for Image Processing

Guest Editors: Yue Yao, Tom Gedeon

Deadline: 30 June 2025

Special Issue in

J. Imaging

Advanced Imaging in Autonomous Vehicle and Intelligent Driving

Guest Editors: Wenzhao Zheng, Linqing Zhao

Deadline: 30 June 2025

Special Issue in

J. Imaging

Imaging and Color Vision, 2nd Edition

Guest Editors: Rafael Huertas, Samuel Morillas

Deadline: 30 June 2025

Special Issue in

J. Imaging

Advancements in Deepfake Technology, Biometry System and Multimedia Forensics

Guest Editors: Luca Guarnera, Alessandro Ortis, Giulia Orrù

Deadline: 30 June 2025