- Article

BigchainDB for Precision Agriculture Data Sharing: A Feasibility Study

- Željko Džafić
- Branko Milosavljević
- Slobodanka Pavlović
- + 1 author

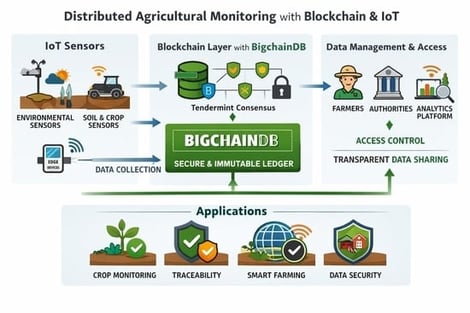

Centralized agricultural data platforms raise concerns about data ownership, provenance, and vendor lock-in, which motivates decentralized alternatives. This study evaluates BigchainDB, a blockchain-database hybrid, for owner-controlled sharing of precision agriculture data. We address three research questions: (1) functional feasibility for data integrity, access control, and heterogeneous sensor integration; (2) integration patterns that bridge IoT data ingestion and blockchain consensus; and (3) operational trade-offs relative to centralized alternatives. A proof-of-concept implementation comprising a sensor simulator, FastAPI middleware, and a three-node BigchainDB cluster demonstrates end-to-end data flow with cryptographic provenance. Key contributions include the identification of three integration patterns (message queue buffering for high-throughput ingestion, hierarchical asset modeling, and dual-key access control), a comparative analysis against five blockchain-database alternatives, and a characterization of deployment complexity. Results show that BigchainDB satisfies the functional requirements for data integrity and access control but incurs greater operational overhead than single-node databases. The architecture is viable when the benefits of multi-party governance outweigh the need for operational simplicity, although production deployments require further scalability validation, including detailed performance benchmarking.
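The abstract names message queue buffering and hierarchical asset modeling without describing them. The following is a minimal, self-contained Python sketch of how the two patterns might combine, under explicit assumptions: readings are buffered in a standard-library `queue.Queue` and flushed in fixed-size batches, and each batch is modeled as a child of a sensor asset, which in turn sits under field and farm assets. The names `AssetNode`, `BatchingBuffer`, and `canonical_digest` are hypothetical, and the content-derived IDs are only a local stand-in for ledger-assigned identifiers. The sketch does not call BigchainDB or its driver and is not the authors' implementation.

```python
import hashlib
import json
import queue
from dataclasses import dataclass, field
from typing import Any, Optional


def canonical_digest(payload: dict) -> str:
    """SHA-256 over canonical JSON (sorted keys, no whitespace), so identical
    content always yields the same digest and any change alters it."""
    encoded = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


@dataclass
class AssetNode:
    """Hypothetical hierarchical asset (farm -> field -> sensor -> batch).
    Each node stores its parent's ID so provenance can be walked upward."""
    kind: str
    data: dict
    parent_id: Optional[str] = None
    asset_id: str = field(init=False)

    def __post_init__(self) -> None:
        # Assumption: a content-derived ID stands in for a ledger-assigned one.
        self.asset_id = canonical_digest(
            {"kind": self.kind, "data": self.data, "parent": self.parent_id}
        )


class BatchingBuffer:
    """Hypothetical ingestion buffer: readings queue up and are flushed in
    fixed-size batches, so the ledger sees one write per batch rather than
    one write per reading."""

    def __init__(self, sensor: AssetNode, batch_size: int) -> None:
        self.sensor = sensor
        self.batch_size = batch_size
        self._queue: "queue.Queue[dict]" = queue.Queue()
        self.committed: list = []  # batches that would have been written

    def ingest(self, reading: dict) -> None:
        self._queue.put(reading)
        if self._queue.qsize() >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        readings = []
        while not self._queue.empty():
            readings.append(self._queue.get_nowait())
        if not readings:
            return
        batch = AssetNode(
            kind="batch",
            data={"readings": readings, "digest": canonical_digest({"readings": readings})},
            parent_id=self.sensor.asset_id,
        )
        # A real deployment would submit a signed ledger transaction here;
        # this sketch only records the batch locally.
        self.committed.append(batch)


# Usage: build a farm -> field -> sensor chain and stream 7 readings.
farm = AssetNode("farm", {"name": "demo-farm"})
plot = AssetNode("field", {"name": "north-plot"}, parent_id=farm.asset_id)
sensor = AssetNode("sensor", {"type": "soil_moisture"}, parent_id=plot.asset_id)

buf = BatchingBuffer(sensor, batch_size=3)
for i in range(7):
    buf.ingest({"seq": i, "moisture": round(0.30 + i * 0.01, 2)})
buf.flush()  # push out the final partial batch

print([len(b.data["readings"]) for b in buf.committed])  # [3, 3, 1]
```

Batching trades latency for throughput in this sketch: a reading is only anchored once its batch flushes, so the batch size bounds how much unanchored data can accumulate, and the per-batch digest lets any later modification of a stored reading be detected by recomputing it.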

27 February 2026