Abstract

Driven by the exponential growth of parameters and training data in AI applications and Large Language Models, a single GPU is no longer sufficient in terms of computing power and storage capacity. Building high-performance multi-GPU systems or GPU clusters via vertical scaling (scale-up) has thus become an effective approach to breaking this bottleneck and has emerged as a key research focus. Because traditional inter-GPU communication technologies fail to meet the requirements of GPU interconnection in vertical scaling, a variety of high-performance inter-GPU communication protocols tailored for the scale-up domain have been proposed recently. Notably, because these demands and technologies have emerged only recently, academic research in this field remains scarce. Motivated by this gap, this article identifies the challenges and requirements of a scale-up network, analyzes the bottlenecks of traditional technologies such as PCIe in a scale-up network, and surveys the emerging scale-up-targeted technologies, including NVLink, OISA, UALink, SUE, and other X-Links. We then conduct an in-depth comparison and discussion and present our insights into protocol design and related technologies. We also highlight that the emerging protocols and technologies still face limitations, with certain technical mechanisms requiring further exploration. Finally, this article presents future research directions and opportunities. As the first review article fully focusing on intra-node GPU interconnection in a scale-up network, this article aims to provide insights and guidance for future research in this emerging field and to establish a foundation for subsequent studies.

1. Introduction and Background

In recent years, Artificial Intelligence (AI) has achieved groundbreaking progress across diverse domains, from natural language processing (NLP) and computer vision (CV) to scientific computing, driven by the continuous evolution of model architectures and the exponential growth of model parameters and training data. To pursue higher accuracy and broader applicability, large-scale deep learning models such as Large Language Models (LLMs) and Mixtures of Experts (MoE) have emerged. Further fueled by the development of generative AI, these models now feature exponentially growing parameter scales and increasingly complex computational graphs.

This trend toward model scaling, while enabling unprecedented performance leaps, has also drastically escalated the demand for both computational power and memory resources. On the one hand, the computational power demanded by complex training iterations and high-throughput inference tasks frequently surpasses the on-chip processing capability of a single AI processor, such as a Graphics Processing Unit (GPU). (For simplicity, this paper uses “GPU” as a generic term encompassing AI accelerators and AI chips, including but not limited to General-Purpose Computing on Graphics Processing Units (GPGPUs), Neural Processing Units (NPUs), Tensor Processing Units (TPUs), and other domain-specific AI accelerators, collectively referred to as XPUs.) Distributed parallel training on large-scale GPU clusters has become inevitable. For example, Meta’s LLaMA-2 70B (70 billion parameters), a dense model, was trained for approximately 35 days and consumed a total of 1.72 million NVIDIA A100 GPU hours [], and the pretraining of LLaMA-3 took approximately 54 days with 16K H100-80 GB GPUs on Meta’s production cluster [,]. Similarly, DeepSeek-V3, a 671B-parameter MoE model, was trained on 14.8 trillion high-quality tokens with 2048 NVIDIA H800 GPUs; its full training lasted about 54 days and required 2.788 million H800 GPU hours []. On the other hand, the parameter scale of LLMs has undergone explosive and continuous expansion, a trend that directly drives sharp growth in their demand for storage resources and far exceeds the on-chip storage capacity of a GPU. During training, additional storage is required for gradients and optimizer states, intermediate activation values, and training data, pushing the total memory requirement to several terabytes (TB). By contrast, the memory of a single GPU is typically 24 GB (gigabytes) to 80 GB, and even a high-end GPU struggles to handle this load. For instance, in Moonshot Kimi-K2, storing the model parameters in BF16 (Brain Floating Point 16) and their gradient accumulation buffer in FP32 (Single-Precision Floating Point 32) requires approximately 6 TB of GPU memory, distributed over a model-parallel group of 256 H800 GPUs []. Given the inherent limitations of a single GPU in meeting the requirements of trillion-parameter model training or high-performance inference, developing an efficiently coordinated multi-GPU system or high-performance GPU cluster has become an inevitable solution to break through these constraints.
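
The 6 TB figure above can be reproduced with back-of-the-envelope arithmetic. The sketch below is only illustrative: it assumes a parameter count of roughly one trillion (a value not stated in the text above), decimal units (1 TB = 10^12 bytes), and an even split across the model-parallel group. The function names are hypothetical.

```python
# Bytes per element for the two number formats named in the text.
BYTES_BF16 = 2  # model parameters
BYTES_FP32 = 4  # gradient accumulation buffer


def training_state_bytes(num_params: int) -> int:
    """Bytes needed for BF16 parameters plus an FP32 gradient accumulation buffer."""
    return num_params * (BYTES_BF16 + BYTES_FP32)


def per_gpu_gigabytes(total_bytes: int, num_gpus: int) -> float:
    """Share of each GPU in decimal GB, assuming a perfectly even split."""
    return total_bytes / num_gpus / 1e9


if __name__ == "__main__":
    # ASSUMPTION: about 1e12 parameters; chosen only to illustrate the arithmetic.
    num_params = 10**12
    total = training_state_bytes(num_params)
    print(f"total state: {total / 1e12:.1f} TB")
    print(f"per GPU over 256 GPUs: {per_gpu_gigabytes(total, 256):.1f} GB")
```

Under these assumptions each GPU already holds a sizeable share of its memory before optimizer states and activations are counted, which is why the state must be sharded over many GPUs.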

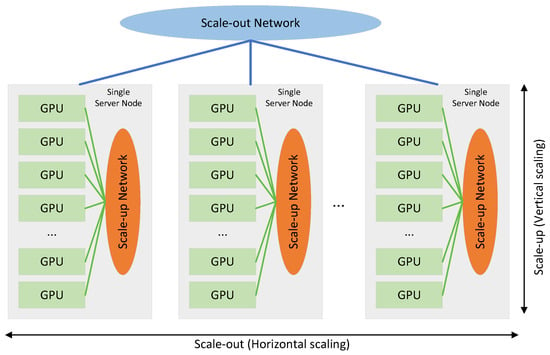

As Figure 1 shows, multi-GPU or GPU cluster system implementations can be categorized into two primary paradigms: scale-up and scale-out [,]. Scale-up, a vertical scaling approach, integrates multiple GPU cards within a single server; scale-out, by contrast, adopts horizontal scaling to efficiently interconnect multiple servers. More specifically, scale-up enhances a server’s computational and memory capabilities by integrating as many GPU cards as possible, leveraging intra-node GPU interconnects through point-to-point (P2P) communication. It employs memory semantics (e.g., load/store operations) and targets scenarios with smaller transaction sizes within the server node, requiring high memory bandwidth and low-latency interconnection between multiple GPUs in the same server node. Essentially, a multi-GPU system expanded via scale-up can be regarded as an integrated “super GPU” or “giant GPU” with ultra-high performance. Scale-out, on the other hand, improves the overall performance of GPU clusters by enabling efficient inter-node communication across multiple server nodes. It is primarily used in extremely large-scale deployments or data center scenarios, catering to large-scale data transmission between server nodes, and adopts network or message semantics such as send/receive or RDMA (Remote Direct Memory Access) [].

Figure 1. Overview of scale-up and scale-out.

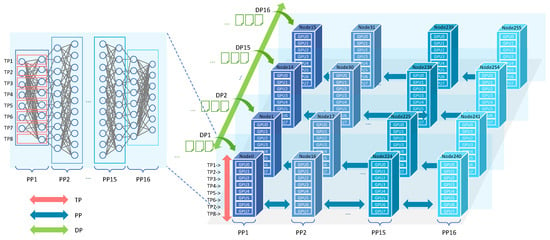

Although multi-GPU systems are the critical infrastructure for overcoming memory and computing power limitations and enabling efficient training or inference, the total effective computing power of a cluster is not simply the product of the single-GPU computing power and the cluster scale. As Figure 2 shows, the distributed training of LLMs relies on parallelization strategies such as tensor parallelism (TP), data parallelism (DP), and pipeline parallelism (PP), which partition model parameter tensors, training data, and model layers across GPUs for parallel execution [,]. Meanwhile, extensive parameter synchronization and data transmission are required among GPUs during distributed training or inference, placing extremely high demands on communication efficiency and network performance, for both the intra-node GPU interconnection within a single server node and the inter-node interconnection across multiple server nodes. Additionally, in MoE models, a typical approach is to distribute the experts across different GPUs, as a single GPU cannot store all experts []. The execution of MoE layers therefore also requires intensive data exchange among GPUs. In the forward pass of several popular MoE models, the communication among devices accounts for 47% of the total execution time on average []. Due to the substantial communication overhead introduced by distributed training, the cluster’s computing power cannot increase linearly with its size. The interconnect technology becomes the key to breaking through this bottleneck, and the performance of GPU interconnection directly determines the overall performance of the GPU cluster. In this paper, we mainly focus on the intra-node GPU interconnection within the scale-up domain.

Figure 2. Overview of three-dimensional (3D) parallelism in distributed LLM training, including tensor parallelism (TP), data parallelism (DP), and pipeline parallelism (PP).
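
To make the 3D partitioning concrete, the following minimal sketch maps a global GPU rank to its (DP, PP, TP) coordinates. It assumes a TP-fastest rank ordering, so that a tensor-parallel group occupies consecutive ranks and can be placed inside one scale-up domain; this ordering and all names here are illustrative assumptions, not a description of any particular framework.

```python
from typing import List, NamedTuple


class Coord(NamedTuple):
    dp: int  # data-parallel replica index
    pp: int  # pipeline stage index
    tp: int  # tensor-parallel shard index


def rank_to_coord(rank: int, tp_size: int, pp_size: int, dp_size: int) -> Coord:
    """Decompose a global rank, assuming TP varies fastest, then PP, then DP."""
    world_size = tp_size * pp_size * dp_size
    if not 0 <= rank < world_size:
        raise ValueError(f"rank {rank} outside world of size {world_size}")
    tp = rank % tp_size
    pp = (rank // tp_size) % pp_size
    dp = rank // (tp_size * pp_size)
    return Coord(dp=dp, pp=pp, tp=tp)


def tp_group(rank: int, tp_size: int) -> List[int]:
    """Ranks that share this rank's tensor-parallel group (consecutive ranks)."""
    start = rank - rank % tp_size
    return list(range(start, start + tp_size))


if __name__ == "__main__":
    # Hypothetical layout: 8-way TP, 4 pipeline stages, 2 data-parallel replicas.
    print(rank_to_coord(13, tp_size=8, pp_size=4, dp_size=2))
    print(tp_group(13, tp_size=8))
```

With eight GPUs per node and `tp_size=8`, each tensor-parallel group in this layout maps to exactly one node, so the most communication-intensive traffic stays on the intra-node interconnect.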

Unfortunately, traditional interconnection technologies fail to meet the emerging scale-up network requirements in the high-bandwidth domain (HBD), with large-scale multi-GPU deployments hindered by bottlenecks rooted in conventional architectures. The Peripheral Component Interconnect Express (PCIe) technology is limited by its master–slave architecture and tree topology, which center on the Central Processing Unit (CPU), resulting in low peer-to-peer communication efficiency and a restricted interconnection scale []. Moreover, the escalating imbalance between computational power and storage capacity has exposed critical flaws in the conventional PCIe-based “CPU + GPU” interconnection architecture when scaling the performance of multi-GPU systems, exacerbating the “memory wall” problem, a key obstacle to scaling multi-GPU systems and improving overall performance []. Specifically, while GPUs outperform CPUs in computational power by a large margin, their memory capacity is typically an order of magnitude lower than that of CPUs. Compounding this, the rapid advancements in CPU and GPU computing performance have far outpaced improvements in memory bandwidth and latency. Compute Express Link (CXL) significantly enhances GPU memory access efficiency by reducing remote memory access latency and improving bandwidth utilization through cache coherence protocols and memory pooling technology, but the technology is still developing, and its commercial solutions and ecosystem have yet to mature [,]. Moreover, RDMA-based or traditional Ethernet-based solutions suffer from large protocol header overhead and high end-to-end latency, failing to meet fine-grained communication needs. Proprietary interconnection protocols, due to their closed ecosystems, limit flexibility in hardware selection, and many of them lack supporting switch chips. Overall, although these interconnection technologies can technically interconnect multiple GPUs in a server node, they are ill-suited for high-performance, large-scale scale-up deployments, constraining efficiency (e.g., throughput, latency) and scalability, and failing to meet modern multi-GPU demands.

Against this backdrop, traditional interconnect technologies can no longer keep up with current requirements, prompting the successive proposal of a series of new scale-up protocols that aim to establish a large-scale, high-performance scale-up domain. NVIDIA proposed the NVLink technology in 2014 and has applied it in its GPU products with continuous evolution []. AMD has also used its Infinity Fabric Link technology in its products since 2017 to enhance the interconnection capability between GPU computing units [,]. Notably, in recent years, alongside the exponential growth in the scale of large models and the new requirements for scale-up interconnection posed by model training and inference, the development of intra-node GPU interconnection technologies has entered a new phase. Apart from the proprietary interconnection protocols, open interconnection protocols are also continuously being introduced. China Mobile proposed the Omnidirectional Intelligent Sensing Express Architecture (OISA) in 2023 and recently published its newest version, 2.0 []; the UALink Consortium Promoter Group proposed Ultra Accelerator Link (UALink) in 2024 and published its specification in 2025 []; and Broadcom publicly introduced its Scale-up Ethernet (SUE) protocol in 2025 [].

1.1. Literature Review and Our Motivations

This work is a review that aims to comprehensively explore intra-node GPU interconnection technologies for scale-up networks while ensuring transparency and reproducibility.

During the research process, we noticed there are many survey papers for RDMA (e.g., [,,]) and systematic research for data center networks (e.g., [,,]) or other hot scale-out technical topics. In contrast, the discussions on intra-node GPU interconnection technologies for scale-up are relatively limited, especially in academia. Instead, the development of these technologies is mostly led by GPU and switch manufacturers in the industry. This scarcity arises from the recent emergence of core demands. The explosive growth of trillion-scale parameter LLMs and AI applications has only taken place in the past few years, rendering the urgent need for ultra-high-performance intra-node interconnection to overcome computing and storage bottlenecks a newly emerging research focus. With the gradual opening of some interconnection protocols and the continuous clarification of application demands, the academic community has begun active participation in related research, but it is still in its early stages.

As shown in Table 1, ref. [] focuses on the evolution of PCIe technology; refs. [,] center their research on CXL technology; refs. [,] focus on RDMA-related technical fields; and ref. [] mainly analyzes and discusses Ultra Ethernet and UALink technologies. All these references are limited to the exploration and review of a single technology. Refs. [,] investigate technologies such as PCIe, NVLink, and GPUDirect and perform practical tests of these technologies on NVIDIA GPU products. Ref. [] pays more attention to the internal interconnection technologies of multi-GPU systems than the aforementioned surveys, covering not only PCIe and NVLink but also other proprietary X-Link interconnection protocols (such as AMD Infinity Fabric Link, Intel Xe Link, and BLink). However, given the many new open interconnection protocols that have emerged in the past two years, its content needs further updating and supplementing. Compared with existing surveys, this survey fully focuses on intra-node GPU interconnection and conducts a comprehensive and systematic review and analysis of traditional solutions such as PCIe, CXL, and Ethernet-based approaches, as well as various representative emerging interconnection protocols and technologies.

Table 1. Comparison of the existing surveys.

Given the relative scarcity of academic reviews on emerging intra-node GPU interconnection technologies, we prioritized both publicly accessible academic papers and official industry technical specifications or whitepapers, focusing on works related to intra-node GPU hardware interconnection technologies. The search was conducted between January 2025 and August 2025. We conducted a systematic search across core academic databases, official technical platforms, and authoritative industry organization websites, including IEEE Xplore, ACM Digital Library, arXiv, Google Scholar, SpringerLink, ScienceDirect, and MDPI, as well as official technical portals of key technology providers (e.g., NVIDIA, UALink Consortium, etc.) and relevant standardization groups. Key search terms covered “GPU scale-up”, “multi-GPU system”, “intra-node GPU interconnection”, “GPU-GPU communication”, “PCIe”, “CXL”, “RDMA”, “NVLink/NVSwitch”, “UALink”, “Scale-up Ethernet (SUE)”, “OISA”, and other technical keywords, ensuring no critical public research or open specifications were omitted.

The literature selection strategy considers both temporal relevance and technical alignment. On the one hand, from the temporal perspective, we prioritized works published over the past decade to balance novelty and evolutionary completeness while also incorporating foundational literature and specifications to clarify the technical development context. On the other hand, from the perspective of technical relevance, we strictly included studies focusing on intra-node GPU interconnection technologies and excluded works on pure software optimization, inter-node networking, etc.

This survey focuses on the following core research objectives and scope:

- Based on the ultra-high-performance inter-GPU interconnection demands of the new AI era, a comprehensive review of intra-node GPU interconnection technologies in the GPU scale-up domain is conducted, systematically surveying and analyzing representative traditional and emerging technologies or protocols.

- The existing performance bottlenecks of these technologies are analyzed, and future optimization directions are identified.

- The academic gap in this understudied field is filled, providing a clear reference framework for relevant researchers.

- In-depth exchanges and discussions between academia and industry are encouraged, thereby facilitating collaborative innovation across the two sectors.

1.2. Contributions

This survey focuses on the intra-node GPU interconnection in the scale-up domain. The key contributions of this work are as follows:

- We introduced and analyzed in depth the limitations of traditional interconnection technologies such as PCIe in the AI era and in the scale-up domain, and discussed the design ideas in these technologies that remain worth referencing.

- We introduced and compared representative and influential emerging interconnect technologies targeting the scale-up high-bandwidth domain, with particular attention to the key issues and core considerations in their design.

- We presented our insights and perspectives on the future challenges and opportunities and the development trends in the field of scale-up interconnection.

As far as we know, this paper is the first comprehensive survey fully focusing on GPU scale-up networks, discussing emerging interconnection technologies and delivering insightful perspectives.

The remainder of this paper is organized as follows. Section 2 analyzes the requirements, challenges, and status of intra-node GPU interconnection in a scale-up network. Section 3 outlines traditional inter-GPU interconnect solutions including PCIe, CXL, and Ethernet-based approaches and analyzes the bottlenecks they encounter. Section 4 surveys emerging representative scale-up protocols, such as NVIDIA NVLink, OISA, UALink, SUE, and other X-Links. Section 5 compares these emerging protocols, discusses their core design considerations, and shares our perspectives and insights across various dimensions. Section 6 assesses the current challenges for emerging methods and explores technical trends and future research directions in the field. Finally, Section 7 concludes the survey.

2. Requirements, Challenges, and Status of GPU Interconnection in Scale-Up Network

2.1. Requirements

Driven by current model and application requirements, GPU interconnection in a scale-up system, where multiple GPUs collaborate within a single node, must deliver high performance, lossless transmission, and efficient memory access in order to realize a “Giant GPU”, that is, a cohesive multi-GPU system.

Extremely High Bandwidth: A scale-up network strives to build a high-bandwidth domain (HBD), which is indispensable for coping with the massive data transfer demands in scenarios such as LLM training and high-performance computing (HPC) [,,,]. Frequent exchanges of parameters, intermediate calculation results, and other data between GPUs depend on sufficient bandwidth; otherwise, the interconnect becomes a bottleneck that leaves the computing power of GPUs underutilized [,]. Compared to the bandwidth of scale-out networks or traditional data center networks, the bandwidth requirement of scale-up networks is an order of magnitude higher []. Specifically, such demands reach 400 Gb/s or even 800 Gb/s per GPU port and hundreds of gigabytes per second, or even terabytes per second, per GPU, in order to keep pace with the speed of high-bandwidth memory [,,].
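
Because port speeds are quoted in gigabits per second while per-GPU bandwidth is quoted in gigabytes per second, a small conversion helps relate the two. The sketch below uses raw line rates and ignores encoding and protocol overhead; the 1000 GB/s per-GPU target in the usage example is a hypothetical value chosen only for illustration.

```python
import math


def gbps_to_gigabytes_per_s(gbps: float) -> float:
    """Convert a raw line rate from gigabits per second to gigabytes per second."""
    return gbps / 8.0


def ports_needed(target_gigabytes_per_s: float, port_gbps: float) -> int:
    """Number of ports needed to reach a per-GPU target, ignoring all overhead."""
    per_port = gbps_to_gigabytes_per_s(port_gbps)
    return math.ceil(target_gigabytes_per_s / per_port)


if __name__ == "__main__":
    for port_gbps in (400, 800):
        per_port = gbps_to_gigabytes_per_s(port_gbps)
        # ASSUMPTION: a 1000 GB/s (1 TB/s) per-GPU target, for illustration only.
        n = ports_needed(1000, port_gbps)
        print(f"{port_gbps} Gb/s port = {per_port:.0f} GB/s; {n} ports for 1000 GB/s")
```

Even at 800 Gb/s per port, reaching terabyte-per-second per-GPU bandwidth takes many parallel ports, which ties the bandwidth requirement to the port-count limits discussed in Section 2.2.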

Ultra-low Latency: Within the HBD, latency requirements are equally stringent []. The single-hop forwarding latency of a switch must be capped at just a few hundred nanoseconds, while the Round-Trip Time (RTT) between any two GPUs should be maintained within a few microseconds, to ensure global memory access at a near-local speed in the “Giant GPU” [,]. This is crucial for tasks with intensive synchronization needs and the overall performance of GPU clusters []. On the one hand, operations like gradient synchronization in distributed training and collaborative instruction interactions among GPUs are extremely sensitive to latency. Reducing latency can remarkably shorten the overall execution cycle of tasks and enhance real-time performance in inference scenarios. On the other hand, reducing the communication latency between GPUs can prevent the waste and idleness of computing resources caused by GPUs waiting for data to arrive, which is important for the release of cluster performance [].
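
A rough latency budget shows how the per-hop and round-trip targets relate. The sketch below is a simplified model under stated assumptions: a fixed per-endpoint processing delay, a fixed per-switch forwarding delay, and a propagation delay of about 5 ns per meter of cable. All parameter values in the usage example are hypothetical.

```python
def one_way_latency_ns(
    switch_hops: int,
    switch_ns: float,
    endpoint_ns: float,
    cable_m: float,
    ns_per_m: float = 5.0,
) -> float:
    """One-way latency: two endpoints, the switches on the path, and the cable."""
    return 2 * endpoint_ns + switch_hops * switch_ns + cable_m * ns_per_m


def round_trip_us(
    switch_hops: int,
    switch_ns: float,
    endpoint_ns: float,
    cable_m: float,
    ns_per_m: float = 5.0,
) -> float:
    """Round-trip time in microseconds, assuming a symmetric return path."""
    one_way = one_way_latency_ns(switch_hops, switch_ns, endpoint_ns, cable_m, ns_per_m)
    return 2 * one_way / 1000.0


if __name__ == "__main__":
    # ASSUMPTION: 300 ns per switch, 250 ns per endpoint, 4 m of cable in total.
    for hops in (1, 3):
        rtt = round_trip_us(hops, switch_ns=300, endpoint_ns=250, cable_m=4)
        print(f"{hops} switch hop(s): RTT = {rtt:.2f} us")
```

In this model every additional switch tier adds its forwarding delay twice per round trip, which is why the single-hop switch latency must stay in the range of a few hundred nanoseconds for the RTT to remain within a few microseconds.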

Scalability: Scalability is essential to accommodate the growing number of GPUs per node, a trend driven by today’s complex workloads [,,]. As computing power demands surge, a single node must integrate more GPUs to form a high-performance computing unit, making it imperative for interconnection technologies to support larger scale-up domains while preserving performance. An overly small scale-up domain results in a large amount of inter-server communication and protocol conversion. As exemplified by DeepSeek-V3 [], which was trained on NVIDIA H800 servers with 8 GPUs per node, the constrained scale-up scope leads to substantial waste of computational resources (e.g., Streaming Multiprocessors, SMs) and excessive programming overhead for protocol conversion and communication guarantees []. Expanding the scale-up domain converts some scale-out communications into scale-up domain interactions. Leveraging the high bandwidth, low latency, and unified addressing capabilities of the scale-up domain, this approach improves the efficiency of applications such as model training and inference.

Lossless: Lossless transmission serves as the cornerstone of GPU collaborative computing within the scale-up domain, as reliable data delivery directly underpins the stability and efficiency of multi-GPU coordinated tasks. At its core is the establishment of a robust network infrastructure, designed to guarantee the accuracy and integrity of data transmission within the high-bandwidth domain. This requires avoiding packet loss and errors during data transmission and demands rapid recovery if such issues occur [,]. Otherwise, the system performance will be affected due to data retransmission or packet loss []. Therefore, scale-up networks must incorporate key supporting technologies, including flow control (to regulate data flow and prevent congestion-induced packet loss) and retransmission (to recover lost or corrupted packets promptly), to fully uphold lossless transmission standards and lay a stable foundation for GPU-to-GPU data interaction.
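
As one illustration of how flow control can prevent congestion-induced loss, the sketch below models credit-based flow control, a common lossless link technique. It is a toy model, not the mechanism of any specific protocol surveyed here: the sender may transmit only while it holds credits, and each credit corresponds to one free receive buffer slot. The class and method names are hypothetical.

```python
from collections import deque
from typing import Deque, List


class CreditLink:
    """Toy model of a link whose sender is throttled by receiver buffer credits."""

    def __init__(self, rx_buffer_slots: int) -> None:
        self.credits = rx_buffer_slots      # credits currently held by the sender
        self.rx_queue: Deque[str] = deque()  # packets buffered at the receiver
        self.stalls = 0                      # send attempts refused for lack of credit

    def try_send(self, packet: str) -> bool:
        """Send only if a credit is available, so the receiver can never overflow."""
        if self.credits == 0:
            self.stalls += 1
            return False
        self.credits -= 1
        self.rx_queue.append(packet)
        return True

    def receiver_drain(self, count: int = 1) -> List[str]:
        """Receiver consumes packets and returns one credit per freed slot."""
        drained = []
        for _ in range(min(count, len(self.rx_queue))):
            drained.append(self.rx_queue.popleft())
            self.credits += 1
        return drained


if __name__ == "__main__":
    link = CreditLink(rx_buffer_slots=2)
    for name in ("a", "b", "c"):
        print(name, "sent" if link.try_send(name) else "stalled (no credit)")
    link.receiver_drain(1)
    print("c", "sent" if link.try_send("c") else "stalled (no credit)")
```

The sender stalls instead of dropping, which trades a short wait for guaranteed delivery; a retransmission mechanism is still needed for packets corrupted on the wire, which this sketch does not model.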

Unified Memory Addressing: Unified memory addressing (UMA) stands as another core requirement for scale-up networks, mandated to resolve critical bottlenecks in collaborative computing efficiency, specifically those stemming from storage resource isolation and the “memory wall” phenomenon [,].

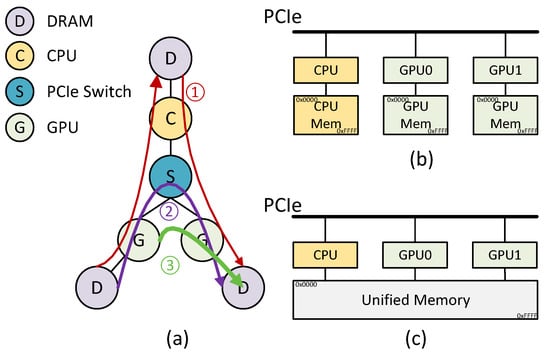

Figure 3 shows three ways for different GPUs within a server node to access memory. Path ① indicates that if a GPU wants to write data to another GPU, it first needs to copy the data from its own memory to the host’s memory and then write it to the storage of the other GPU. Path ② indicates that through the PCIe switch, GPUs can directly transfer data between each other. In Path ③, based on the new interconnection technology’s memory semantic mechanism and UMA mechanism, the unified memory pool is established and GPUs can directly access each other’s storage.

Figure 3. (a) Three conceptual models of GPU memory access. (b) Memory spaces without UMA. (c) Unified memory based on UMA.

Fulfilling the unified memory addressing requirement delivers multi-dimensional benefits for scale-up systems. It enables seamless cross-GPU memory access, allowing each GPU to treat remote memory as if it were local and eliminating the overhead of explicit data copying that often wastes bandwidth and time. It also simplifies the programming model for multi-GPU applications, reducing the complexity of memory management for developers and lowering the barrier to multi-GPU software development. Additionally, it lays a critical hardware foundation for unified memory pooling and dynamic scheduling, supporting flexible allocation of memory resources across the entire scale-up system to match real-time computing demands.
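
The idea of a unified address space can be sketched as a mapping between a global address and a (GPU, local offset) pair. The layout below, in which each GPU owns one contiguous, equally sized window, is a hypothetical simplification for illustration; real protocols define their own address formats and translation mechanisms.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class UnifiedAddressMap:
    """Hypothetical flat layout: GPU i owns [i * bytes_per_gpu, (i + 1) * bytes_per_gpu)."""

    num_gpus: int
    bytes_per_gpu: int

    def encode(self, gpu_id: int, offset: int) -> int:
        """Build a global address from a GPU index and a local offset."""
        if not 0 <= gpu_id < self.num_gpus:
            raise ValueError(f"gpu_id {gpu_id} out of range")
        if not 0 <= offset < self.bytes_per_gpu:
            raise ValueError(f"offset {offset} out of range")
        return gpu_id * self.bytes_per_gpu + offset

    def decode(self, global_addr: int) -> Tuple[int, int]:
        """Split a global address into (owning GPU, local offset)."""
        if not 0 <= global_addr < self.num_gpus * self.bytes_per_gpu:
            raise ValueError(f"address {global_addr} out of range")
        return divmod(global_addr, self.bytes_per_gpu)


if __name__ == "__main__":
    # ASSUMPTION: 8 GPUs with 80 GiB each, as an illustrative configuration.
    amap = UnifiedAddressMap(num_gpus=8, bytes_per_gpu=80 * 2**30)
    addr = amap.encode(gpu_id=3, offset=4096)
    print(hex(addr), amap.decode(addr))
```

With such a mapping, a load or store to any global address can be routed to the owning GPU by hardware, so software does not need to issue explicit copies between separate address spaces.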

2.2. Challenges

Building an ultra-high-performance GPU scale-up network entails multiple challenges, with core hurdles centering on performance bottlenecks, interconnect protocol limitations, interconnection topologies, efficiency, reliability, and power consumption. These challenges are not isolated; they are deeply intertwined and mutually reinforcing, creating a more complex barrier than any single issue alone. From our current perspective, the two most prominent challenges are (1) interconnect protocol design and (2) hardware limits and physical constraints.

Protocol Design: Designing an efficient, feasible interconnect protocol is extremely challenging, as the protocol directly shapes efficiency and performance in the scale-up domain.

The protocol must strike a delicate balance between simplicity and reliability, a dual requirement that adds further complexity to the design [,,]. Firstly, the protocol must prioritize simplicity in packet format and mechanisms to ensure efficient transmission. On the one hand, large protocol headers consume a significant share of bandwidth, leaving a link’s actual throughput far below its physical capacity, especially in scenarios where GPUs frequently exchange massive numbers of small packets [,,]. In such cases, the header overhead becomes disproportionately large relative to the small payloads. For example, a 32-byte header attached to a 128-byte data payload results in a total packet size of 160 bytes, so the header consumes 20% of the link’s capacity. This waste accumulates in multi-GPU clusters, where large numbers of such small packets are exchanged every second, turning the per-packet overhead into a systemic bottleneck that throttles the entire collaborative computing workflow. On the other hand, overly redundant mechanisms, such as complex handshakes or duplicate error checks, must be avoided, as they introduce unnecessary processing delays that undermine the low-latency demands of GPU collaboration. Meanwhile, scale-up interconnect protocols must not overlook transmission reliability. They must dynamically regulate transmission speeds via flow control to avoid congestion or packet loss while also integrating recovery logic to address packet loss or transmission errors.
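
The header overhead example above generalizes to a simple formula, efficiency = payload / (payload + header). The sketch below applies it to a few payload sizes; the 800 Gb/s line rate and the payload sizes other than 128 bytes are illustrative assumptions.

```python
def link_efficiency(payload_bytes: int, header_bytes: int) -> float:
    """Fraction of transmitted bytes that carry payload rather than header."""
    if payload_bytes < 0 or header_bytes < 0 or payload_bytes + header_bytes == 0:
        raise ValueError("sizes must be non-negative and not both zero")
    return payload_bytes / (payload_bytes + header_bytes)


def goodput_gbps(line_rate_gbps: float, payload_bytes: int, header_bytes: int) -> float:
    """Payload throughput on a link of the given raw rate, headers being the only overhead."""
    return line_rate_gbps * link_efficiency(payload_bytes, header_bytes)


if __name__ == "__main__":
    header = 32  # header size from the example in the text
    # ASSUMPTION: 800 Gb/s raw line rate; payload sizes chosen for illustration.
    for payload in (64, 128, 256, 4096):
        eff = link_efficiency(payload, header)
        print(f"payload {payload:5d} B: efficiency {eff:.1%}, "
              f"goodput {goodput_gbps(800, payload, header):.0f} Gb/s")
```

The smaller the payload, the larger the share of capacity lost to the header, which is why compact packet formats matter most for the fine-grained memory-semantic traffic typical of scale-up networks.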

Moreover, protocol design must account for scalability, a requirement deeply intertwined with interconnect topology and transmission distance as the GPU scale-up domain expands [,]. As the number of GPUs in a scale-up system grows, the protocol must adapt to the evolution of the network topology from simple direct point-to-point connections to complex switch-based fully connected architectures. Traditional protocols like PCIe, designed for small-scale, fixed-topology peripheral connections, are ill-suited for large-scale GPU interconnection []. Transmission distance adds another layer of complexity. GPU interconnections range from short on-board links (centimeters) to rack-level (meters) or cross-rack (tens of meters) connections, and signals degrade differently across these ranges. The protocol should be flexible enough to balance reliability and efficiency across them.

Overall, protocol design is crucial yet highly challenging in large-scale GPU interconnect systems. It needs to integrate multiple attributes, including efficiency, reliability, scalability, etc. However, traditional protocols like PCIe and RoCE (RDMA over Converged Ethernet), as they were not purpose-built for large-scale intra-node GPU interconnect scenarios, struggle to meet these comprehensive requirements, ultimately becoming a key bottleneck that limits the improvement in system performance.

Hardware Limits and Physical Constraints: The realization of high performance and scalability is constrained by hardware capabilities and physical boundaries [,,,].

As the primary gateway for data transmission, SerDes (Serializer/Deserializer) at the physical layer poses severe constraints on both speed and density. Designing and implementing high-rate SerDes (e.g., 112 Gbps and 224 Gbps) is extremely challenging due to issues like signal integrity, circuit area, and power consumption []. Even with targeted optimizations, the number of SerDes ports that can be integrated for parallel transmission remains limited by the physical area and power budget of GPU dies or switch chips, directly restricting the maximum direct bandwidth a single device can provide.

Moreover, the scalability and interconnection topology of scale-up networks are tied to hardware limits, particularly the port counts of GPUs or switch chips [,,]. When the number of interconnected GPUs in the scale-up domain exceeds the port capacity of individual GPUs or switches, multiple switches must be deployed, inevitably introducing higher latency, more complex network management, and other complications. Together, these hardware limits and physical constraints form an interconnected set of challenges that directly restrict the scale, throughput, and efficiency of GPU scale-up networks.
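
The relationship between switch port count and domain size can be sketched with a simplified model. It assumes that each GPU attaches with one port per switch plane, that a single switch can connect as many GPUs as it has ports, and that a second tier is built as a non-blocking leaf-spine fabric in which each leaf uses half of its ports for GPUs. These are modeling assumptions for illustration, not properties of any specific product.

```python
def max_gpus_single_switch(radix: int) -> int:
    """One switch plane: every switch port connects one GPU."""
    return radix


def max_gpus_two_tier(radix: int) -> int:
    """Non-blocking leaf-spine: radix leaves, each with radix // 2 GPU-facing ports."""
    return (radix // 2) * radix


def switches_on_worst_path(num_gpus: int, radix: int) -> int:
    """Switches traversed between the two most distant GPUs in this model."""
    if num_gpus <= max_gpus_single_switch(radix):
        return 1  # GPU -> switch -> GPU
    if num_gpus <= max_gpus_two_tier(radix):
        return 3  # GPU -> leaf -> spine -> leaf -> GPU
    raise ValueError("more than two tiers needed; not modeled in this sketch")


if __name__ == "__main__":
    radix = 64  # ASSUMPTION: a hypothetical 64-port switch chip
    for gpus in (8, 64, 72, 1024):
        hops = switches_on_worst_path(gpus, radix)
        print(f"{gpus} GPUs -> {hops} switch(es) on the worst-case path")
```

Crossing the single-switch limit triples the number of switches on the worst-case path in this model, which illustrates why exceeding the port capacity of one switch directly raises latency and management complexity.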

2.3. The Development of GPU Scale-Up

From the perspective of development stages, we think that the evolution of GPU scale-up interconnection technology in recent years can be divided into the following three stages.

The first stage is the initial stage, in which the industry generally relied on traditional PCIe or CXL technologies to achieve GPU interconnection [,,,]. However, such traditional solutions soon became a performance bottleneck owing to their shortcomings in bandwidth, latency, and interconnection scale [,,].

The second stage is the exploration stage of proprietary protocols, in which GPU manufacturers began to develop their own interconnection protocols based on PCIe or Ethernet technologies. However, except for NVIDIA’s NVLink, most proprietary protocols can only achieve an interconnection scale of eight cards due to the lack of supporting switch chips, making it difficult to support larger-scale computing clusters [,,].

The third stage is the large-scale collaborative innovation stage. As the demand for GPU interconnection has become clear, protocol designs have become increasingly mature. GPU manufacturers and switch manufacturers have launched joint research and development to propose new solutions supporting large-scale interconnection [,,]. On the one hand, they generally define new open or standardized protocols to achieve interoperability among devices from different vendors, adopt a fully connected topology centered on switches to expand the interconnection scale, and leverage high-speed SerDes technology to boost data rates. On the other hand, they integrate technologies such as Chiplet, IO Die, and optical interconnection to further reduce the power consumption and area of chips while achieving efficient interconnection, adapting to the needs of larger-scale computing clusters [].

3. Traditional Solutions

3.1. PCIe

Peripheral Component Interconnect Express (PCIe) was first proposed by Intel in 2001 and is currently developed and maintained by the Peripheral Component Interconnect Special Interest Group (PCI-SIG) []. Over more than two decades of development, it has maintained a consistent iteration rhythm: a major version update approximately every three years, with backward compatibility preserved across iterations. Functionally, PCIe has long served as a universal interface for interconnecting CPUs with GPUs, storage devices, Network Interface Cards (NICs), Data Processing Units (DPUs), Field Programmable Gate Array (FPGA) cards, and so on []. PCIe 6.0 was officially released in 2022, the PCIe 7.0 specification was made available to PCI-SIG members in 2025, and PCI-SIG aims to release the PCIe 8.0 specification in 2028.

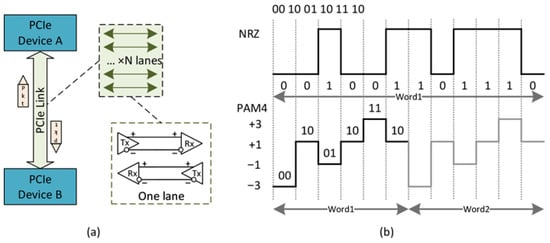

PCIe is a high-speed serial point-to-point dual-channel bus designed for high-bandwidth transmission. As shown in Figure 4a, a PCIe link comprises multiple lanes, and each lane consists of two differential signal pairs (one for transmission, one for reception), enabling full-duplex communication. The PCIe link can be flexibly configured as ×N (where N denotes the number of lanes, e.g., ×1, ×4, and ×16) to balance cost and performance requirements.

Figure 4.

(a) Overview of PCIe link. (b) Comparison of NRZ and PAM4.

Largely driven by the continuous increase in per-lane bit rate across successive versions, PCIe has achieved an approximate doubling of bandwidth every three years. Beyond this, other key factors collectively boost its performance. Its integration has shifted from external chipsets into the CPU itself, with support for more lanes. Meanwhile, encoding schemes have been upgraded to reduce transmission overhead and enhance reliability, and mechanisms like Forward Error Correction (FEC) have been introduced to balance latency and bit error rate (BER). The signaling upgrade stands out. Starting from PCIe 6.0, 4-Level Pulse Amplitude Modulation (PAM4) has replaced the earlier Non-Return-to-Zero (NRZ) signaling. As shown in Figure 4b, unlike NRZ, which transmits 1 bit per symbol, PAM4 uses four signal levels to send 2 bits per symbol. This upgrade doubles the data rate to 64 GT/s while keeping the same Nyquist frequency as PCIe 5.0’s NRZ signaling. Because PAM4 needs only half the frequency that NRZ would require at 64 GT/s, channel loss is contained and PCIe 6.0’s transmission distance stays comparable to PCIe 5.0’s. Detailed parameters of different PCIe versions are presented in Table 2.

Table 2.

Specifications of each PCIe generation.

Although PCIe has achieved great success as an I/O bus, and its bidirectional bandwidth reaches up to 256 GB/s (×16) as of version 6.0, it still faces multiple constraints that limit its application in AI scenarios and the GPU scale-up HBD.
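The 256 GB/s figure can be reproduced from the per-lane transfer rate and lane count. The following is a minimal sketch, not a reproduction of Table 2: the per-generation rates and line-encoding ratios are the commonly cited values, and the PCIe 6.0 entry is treated as a raw rate that ignores FLIT, FEC, and CRC overhead (an assumption made here for simplicity). The function and table names are illustrative.

```python
# Per-lane transfer rate (GT/s) and line-encoding efficiency per PCIe generation.
# Assumption: the 6.0 entry uses 1.0 (raw rate), ignoring FLIT/FEC/CRC overhead.
PCIE_GENERATIONS = {
    "1.0": (2.5, 8 / 10),      # 8b/10b encoding
    "2.0": (5.0, 8 / 10),      # 8b/10b encoding
    "3.0": (8.0, 128 / 130),   # 128b/130b encoding
    "4.0": (16.0, 128 / 130),
    "5.0": (32.0, 128 / 130),
    "6.0": (64.0, 1.0),        # PAM4 signaling, raw rate
}


def pcie_bandwidth_gbytes(gen, lanes=16, bidirectional=False):
    """Approximate link bandwidth in GB/s for a given generation and lane count."""
    rate_gt_per_s, encoding_efficiency = PCIE_GENERATIONS[gen]
    # One transfer carries one bit per lane; divide by 8 to convert bits to bytes.
    per_direction = rate_gt_per_s * encoding_efficiency * lanes / 8
    return per_direction * (2 if bidirectional else 1)


if __name__ == "__main__":
    for gen in PCIE_GENERATIONS:
        bw = pcie_bandwidth_gbytes(gen, lanes=16, bidirectional=True)
        print(f"PCIe {gen} x16 bidirectional: {bw:.1f} GB/s")
```

Under these assumptions, a ×16 PCIe 6.0 link yields 128 GB/s per direction, which matches the 256 GB/s bidirectional figure quoted above.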

Firstly, the topology and architecture of PCIe-based interconnection hinder the efficiency and scalability of GPU communication. PCIe’s master–slave root complex (RC) architecture and tree topology impede direct P2P communication between GPUs. To maintain backward compatibility with PCI software, PCIe only allows a tree-based hierarchical architecture that centers on the CPU, with external devices mounted on the PCIe bus, and prohibits loops or other complex topologies []. Inter-GPU communication must therefore be relayed via the root complex or multi-level PCIe switches, with no direct path available. In GPU-intensive scale-up scenarios, this routing through the CPU or a central PCIe switch introduces unnecessary latency and wastes CPU resources. Even when P2P communication is enabled, the interconnection scale is restricted by the limited number of lanes on the CPU or PCIe switch, and even with PCIe switch cascading, large-scale, high-efficiency expansion remains challenging.

Secondly, PCIe struggles with bandwidth mismatches and inefficient memory access. As the proportion of GPUs in multi-GPU systems grows, PCIe bandwidth remains far lower than the bandwidth between CPUs/GPUs and their respective memories. This gap is particularly pronounced in large-scale data movement across GPUs, where high throughput is critical. For current mainstream GPUs designed for AI workloads, the local memory bandwidth between the GPU core and on-board DRAM (Dynamic Random Access Memory) such as GDDR (Graphics Double Data Rate) or HBM (High Bandwidth Memory) far outpaces PCIe. For example, the local memory bandwidth of the NVIDIA H100 SXM5 GPU (with HBM3 memory) reaches 3350 GB/s [], and that of the AMD Instinct MI300X (with HBM3) exceeds 5000 GB/s []. By contrast, cross-GPU memory access over PCIe is extremely inefficient in terms of both bandwidth and latency.
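To give a rough sense of this gap, the sketch below compares the time needed to stream the same buffer at the H100 HBM3 bandwidth quoted above and over a PCIe 5.0 ×16 link. The PCIe figure (about 64 GB/s raw per direction) and the 10 GB buffer size are assumptions chosen for illustration, and latency is ignored entirely.

```python
HBM3_H100_GB_PER_S = 3350.0   # local memory bandwidth quoted in the text
PCIE5_X16_GB_PER_S = 64.0     # assumption: raw per-direction rate of PCIe 5.0 x16


def transfer_time_ms(size_gb, bandwidth_gb_per_s):
    """Idealized streaming time in milliseconds (bandwidth only, no latency)."""
    return size_gb / bandwidth_gb_per_s * 1000.0


buffer_gb = 10.0  # hypothetical tensor buffer size
local_ms = transfer_time_ms(buffer_gb, HBM3_H100_GB_PER_S)
pcie_ms = transfer_time_ms(buffer_gb, PCIE5_X16_GB_PER_S)
bandwidth_ratio = HBM3_H100_GB_PER_S / PCIE5_X16_GB_PER_S

if __name__ == "__main__":
    print(f"local HBM3: {local_ms:.2f} ms, PCIe 5.0 x16: {pcie_ms:.2f} ms")
    print(f"bandwidth ratio: {bandwidth_ratio:.1f}x")
```

Under these assumptions the local memory path is roughly fifty times faster than the PCIe path, before any protocol or software overhead is counted.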

Furthermore, PCIe has not established a true shared memory pool for multi-GPU setups and lacks a common coherence mechanism. Each GPU’s memory is independent with an isolated address space, precluding unified memory addressing. When GPUs access shared data, coherence issues arise, requiring manual software-managed synchronization that further reduces efficiency.

These factors make it difficult for traditional PCIe interconnection technology to support efficient interconnection and expansion of the scale-up high-bandwidth domain. Nonetheless, PCIe remains an indispensable core protocol and the most widely adopted interface standard for communication between GPUs and CPUs in current computing systems. Its mature ecosystem, backward compatibility, and cost-effectiveness ensure it continues to play a key role in the scale-up domain.

3.2. CXL

Due to PCIe’s limitations in storage expansion and memory coherency, Compute Express Link (CXL) was proposed in 2019, with its Version 3.0 protocol officially released in 2022. As a high-speed cache-coherent interconnect protocol, CXL is designed for processors, memory expansion, and accelerators, with a core goal of addressing key bottlenecks in high-performance computing, including constrained memory capacity, insufficient memory bandwidth, and high I/O latency. CXL is built on top of the PCIe physical layer, which not only leverages the mature PCIe hardware ecosystem but also enables reliable cache and memory coherency across devices. By tapping into the widespread availability of PCIe interfaces, CXL further enables seamless memory sharing across a diverse set of hardware components, such as CPUs, NICs, DPUs, GPUs, other accelerators, and memory devices [].

CXL provides a rich set of protocols that include I/O semantics similar to PCIe (i.e., CXL.io), caching protocol semantics (i.e., CXL.cache), and memory access semantics (i.e., CXL.mem) over a discrete or on-package link []. CXL leverages the PCIe physical data link to map devices, referred to as endpoints (EPs), to a cacheable memory space accessible by the host. This architecture allows for compute units to directly access EPs via standard memory requests [], while CXL memory further supports cache-coherent access through standard load/store instructions.

Theoretical analysis and memory simulation or emulation suggest that CXL is a promising memory interface technology for addressing PCIe’s shortcomings in memory access. However, many tests on real CXL memory expansion hardware show that it struggles to meet the memory demands of the AI era. For example, the study in [] shows that the performance gains from tensor offloading based on CXL memory are very limited. This is because tensor copies between CXL memory and GPU memory have to traverse a longer data path than direct memory access from the CPU, and this path is also bottlenecked by the PCIe physical layer.

Although CXL can support certain AI scenarios, it is designed for disaggregated memory access in general computing rather than as a specialized AI network solution. Its aim is to let GPUs access shared or pooled memory efficiently, not to directly enhance point-to-point data transmission between GPUs. Furthermore, because it is built on and compatible with PCIe, it is rather complex and heavyweight and fails to meet the demands of the scale-up HBD network.

3.3. Ethernet-Based Solutions

Ethernet-based approaches, particularly RDMA over Ethernet (e.g., RoCE, RDMA over Converged Ethernet), have also been widely adopted. However, neither RoCE nor standard Ethernet is designed for GPU scale-up networks, and both face critical limitations stemming from inefficient communication workflows and excessive protocol overhead. RoCE exemplifies these challenges.

On the one hand, RoCE relies on message semantics, with communication workflows involving multi-stage interactions between Work Queue Elements (WQEs), doorbell signals, and NICs [,]. Before data is finally transmitted, GPU cores must first copy data, store WQEs, await acknowledgments, and trigger doorbells, each step incurring microsecond-scale latency. Compounding this, the workflow is tightly bound to the host-side PCIe bus, whose bandwidth and latency constraints further degrade communication efficiency as GPU counts scale. While parallelizing requests can partially offset latency, it introduces prohibitive memory overhead (e.g., inflated send buffers), undermining scalability.

On the other hand, packet protocol overhead poses significant challenges for scale-up networks. Ethernet’s inherent frame structure (including preamble, header, and FCS fields) introduces baseline overhead, and RDMA’s control headers (for addressing, Queue Pair management, etc.) further expand packet size []. Although RoCEv2 excels at large data transfers in scale-out networks, it incurs non-negligible overhead in scale-up scenarios. Here, frequent small transactions and fine-grained parameter exchanges during multi-GPU synchronization exacerbate the header-to-payload ratio, wasting bandwidth and increasing end-to-end latency.
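The header-to-payload effect can be made concrete with a short calculation. The sketch below assumes a RoCEv2 RDMA WRITE packet carried over IPv4 without options and without a VLAN tag, using the standard field sizes for Ethernet, IPv4, UDP, and the InfiniBand transport headers; the inter-frame gap is counted because it occupies wire time. The dictionary and function names are illustrative.

```python
# Per-packet bytes on the wire that are not payload.
# Assumptions: IPv4 without options, no VLAN tag, RDMA WRITE with a RETH header.
ROCEV2_OVERHEAD_BYTES = {
    "preamble_sfd": 8,       # Ethernet preamble + start-of-frame delimiter
    "ethernet_header": 14,   # destination MAC, source MAC, EtherType
    "ipv4_header": 20,
    "udp_header": 8,
    "ib_bth": 12,            # InfiniBand Base Transport Header
    "ib_reth": 16,           # RDMA Extended Transport Header
    "ib_icrc": 4,            # invariant CRC
    "ethernet_fcs": 4,       # frame check sequence
    "inter_frame_gap": 12,   # idle time between frames, counted in bytes
}


def wire_efficiency(payload_bytes):
    """Fraction of wire bytes that carry payload for a single packet."""
    overhead = sum(ROCEV2_OVERHEAD_BYTES.values())
    return payload_bytes / (payload_bytes + overhead)


if __name__ == "__main__":
    for size in (64, 256, 1024, 4096):
        print(f"{size:5d} B payload -> {wire_efficiency(size):.1%} efficiency")
```

Under these assumptions, a 64-byte transaction spends more wire time on headers than on data, whereas a 4096-byte transfer is close to fully efficient, which is why the overhead matters far more in scale-up traffic than in bulk scale-out transfers.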

Table 3 compares these three traditional solutions. None fully meets the demands of current large-scale intra-node GPU interconnection: PCIe is limited in scalability, CXL’s ecosystem is still developing, and RoCE suffers from higher latency, which makes it more suitable for cross-node interconnection. Together, their inherent constraints highlight the need for more optimized interconnect solutions for large-scale GPU clusters. Consequently, for the evolving and highly specialized AI landscape, new intra-node GPU interconnection solutions designed specifically for scale-up are urgently needed.

Table 3.

Comparison of intra-node GPU interconnect technologies.

4. Emerging Representative Solutions

As demands for computing, memory, and cross-GPU communication continue to rise, the aforementioned traditional technologies can no longer meet the requirements of GPU interconnection. Therefore, a series of new protocols and technologies have emerged, which are reshaping the scale-up HBD. The scale-up protocol and its core technologies have now become a key enabler for breaking through performance bottlenecks and supporting complex AI scenarios. Among these emerging protocols, several have already gained considerable industry influence, backed by robust technical specifications and product support systems.

In this section, we briefly introduce some representative scale-up technologies and protocols, including NVLink, OISA, UALink, SUE, and other X-Links, covering the protocol stack structure, packet format, interconnection topology, and other key technical features. In the next section, we explore and discuss the design ideas of these emerging scale-up interconnection technologies.

4.1. NVLink

As an advanced interconnect technology and communication protocol, NVLink is redefining the paradigms of communication and cooperation between GPUs, and even between CPUs and GPUs []. NVIDIA first announced NVLink in 2014, and NVLink 1.0 was introduced with its P100 GPU in 2016. By 2024, NVLink had evolved to its fifth generation, deployed in the GB200/GB300 GPUs featuring the Blackwell architecture [,]. Notably, starting with NVLink 2.0, the NVLink Switch (NVSwitch) was integrated into intra-node GPU interconnections, marking a key breakthrough in expanding the scale of GPU interconnects and laying the foundation for more efficient data transmission. The combination of NVLink and NVSwitch brings significantly higher communication bandwidth and lower transmission latency, which in turn substantially enhances the overall performance and efficiency of the multi-GPU system.

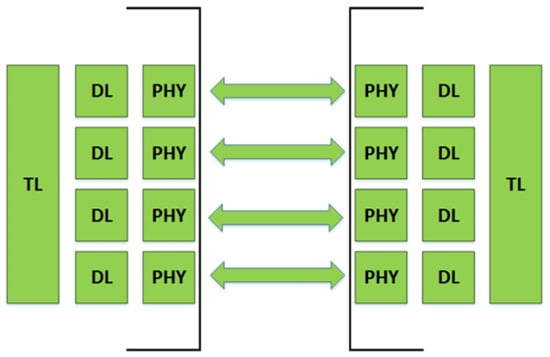

As Figure 5 shows, the NVLink protocol architecture is divided into the transaction layer (TL), data layer (DL), and physical layer (PHY). NVLink adopts a P2P architecture and serial transmission to implement GPU-GPU communication. Similar to the PCIe link structure, each NVLink link comprises a pair of sub-links; each sub-link handles one transmission direction and contains eight differential signal pairs. As listed in Table 4, NVLink has undergone iterative improvements in lane speed, lane count, and signaling mode, with each generation approximately doubling the link speed. Overall, the P2P bidirectional bandwidth between GPUs has increased from 160 GB/s in NVLink 1.0 to 1800 GB/s in NVLink 5.0 [].

Figure 5.

Architecture of NVLink Protocol [].

Table 4.

Specifications of each generation of NVIDIA NVLink and NVLink Switch.

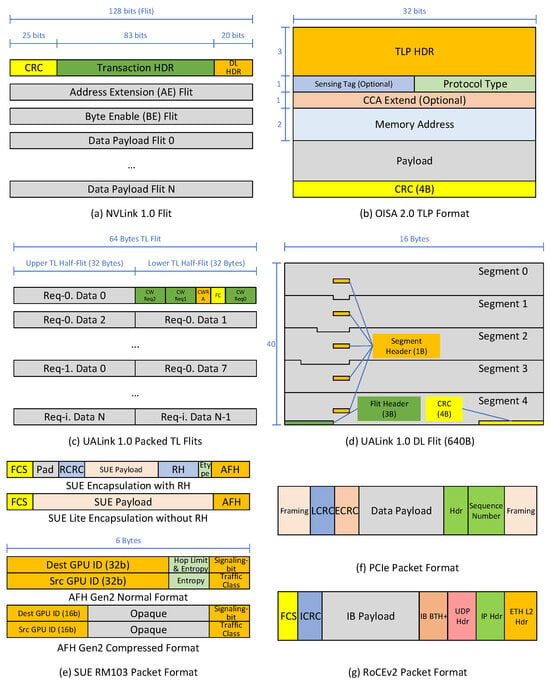

As shown in Figure 6a, NVLink organizes its data for transmission in flits (flow control digits). Each NVLink packet consists of 1 to 18 flits, each of which carries 128 bits. This design enables flexible transmission of variable-sized data. In addition to the header flit, an address extension (AE) flit and a byte enable (BE) flit are used to improve transmission efficiency by retaining the static bits and transmitting only the bits that change. The header flit is structured with a 25-bit cyclic redundancy check (CRC) field, an 83-bit transaction field, and a 20-bit data link field []. The transaction field includes critical information such as transaction type, address, flow control bit, and tag identifier, while the data link field carries packet length, application number labels, and acknowledgment identifiers.

Figure 6.

The packet format overview of (a) NVLink 1.0 Flit, (b) OISA 2.0 TLP, (c) UALink 1.0 Packed TL Flits, (d) UALink 1.0 DL Flit (640B), (e) SUE RM103 Packet, (f) PCIe Packet, (g) RoCEv2 Packet.

In practice, NVIDIA has deployed different generations of NVLink across its GPU product lines, paired with evolving interconnection topologies. Early systems like the P100-DGX-1 (NVLink 1.0) [] and V100-DGX-1 (NVLink 2.0) [] used a hybrid cube-mesh topology for GPU interconnection. While these implementations significantly boosted peer-to-peer (P2P) bandwidth, scalability was constrained by the number of links per GPU, limiting the interconnection scale. To address this, NVIDIA introduced the NVSwitch chip starting with the NVLink 2.0-based DGX-2 system [], enabling a switch-based fully connected topology.

The introduction of NVSwitch has brought a critical breakthrough to GPU interconnection at the topological level. First, with its increased number of NVLink ports, it overcomes the previous dependence on the number of links per GPU, enabling the construction of a non-blocking fully connected topology. This design allows all GPUs within a server to interconnect directly without routing through intermediate GPUs or CPUs, delivering a qualitative improvement in system scalability and aggregate bandwidth. As shown in Table 4, the latest NVSwitch (Gen 4.0) combined with NVLink 5.0 fully connects up to 72 GPUs in the NVL72 products and targets fully connecting up to 576 GPUs, breaking the scale constraints of early topologies. Meanwhile, it achieves a P2P bandwidth of 1800 GB/s and a total aggregate bandwidth of 130 TB/s, further validating the practical value of this scalability innovation. Building on this foundation, NVSwitch has further driven optimization of system performance. On the one hand, it can bypass traditional CPU-based resource allocation and scheduling mechanisms, and by integrating technologies such as Unified Virtual Addressing (UVA) [], it gives each GPU’s Graphics Processing Clusters (GPCs) the capability to directly access both local and cross-GPU HBM. This enables direct data exchange between GPUs without redundant data copying, significantly reducing the latency and resource consumption caused by CPU-mediated transfers. On the other hand, its integration of NVIDIA SHARP technology provides hardware-level acceleration for collective communication operations such as All-Gather, Reduce-Scatter, and Broadcast Atomics, further reducing latency and boosting throughput in multi-GPU collaboration scenarios [,,].
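The aggregate bandwidth quoted for the 72-GPU configuration follows from multiplying the per-GPU P2P bandwidth by the GPU count. A minimal check, with an illustrative function name and decimal units (1 TB = 1000 GB) assumed:

```python
def aggregate_bandwidth_tb_per_s(num_gpus, per_gpu_gb_per_s):
    """Total bidirectional bandwidth of a non-blocking, fully connected domain."""
    return num_gpus * per_gpu_gb_per_s / 1000.0


nvl72_aggregate = aggregate_bandwidth_tb_per_s(num_gpus=72, per_gpu_gb_per_s=1800)

if __name__ == "__main__":
    print(f"NVL72 aggregate: {nvl72_aggregate:.1f} TB/s")
```

The product is 129.6 TB/s, which rounds to the 130 TB/s figure. The calculation holds only because the switch fabric is non-blocking, so every GPU can drive its full NVLink bandwidth at the same time.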

In general, NVLink addresses the bottlenecks of traditional PCIe in scale-up GPU interconnection and significantly enhances the interconnect capability between multiple GPUs. NVIDIA has also rolled out a series of SuperPod server products based on NVLink technology, further validating the success of this technology. Additionally, NVIDIA has introduced NVLink Fusion [], which allows CPUs or switches from other manufacturers to be integrated as hardware products within rack enclosures via NVLink C2C (Chip-to-Chip) IP (Intellectual Property) or NVLink IP. However, the specific technical details of NVLink remain undisclosed. Against this backdrop, a range of alternative interconnect technologies have been developed.

4.2. OISA

Omnidirectional Intelligent Sensing Express Architecture (OISA) [] was proposed by China Mobile in 2024 and evolved to version 2.0 in 2025. It aims to build an efficient, intelligent, flexible, and open GPU intra-node interconnection system that supports data-intensive AI applications such as large model training, inference, and high-performance computing.

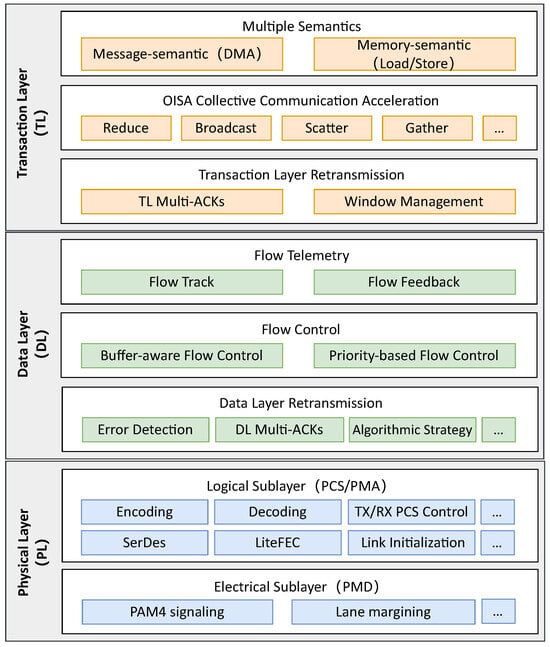

As Figure 7 shows, the protocol stack of OISA comprises three functional layers: the transaction layer (TL), the data layer (DL), and the physical layer (PL). Positioned at the top of the protocol stack, the transaction layer interfaces with the GPU’s NOC (Network on Chip). Upon receiving transaction-related signals from the GPU NOC, such as the transaction type, target GPU, address information, and transaction data, the TL maps and encapsulates this information into OISA transaction layer packets (TLPs), which are subsequently forwarded to the data layer. The DL inserts CRC fields into the received TLPs to enable error detection. OISA’s physical layer adopts an Ethernet-based implementation, leveraging mature Ethernet physical-layer technologies like SerDes to support reliable and high-speed data transmission between GPU devices.

Figure 7.

Protocol stack of OISA.

OISA defines three independent memory access packet modes: Extreme-performance Access (EPA) mode, Intelligent Sensing Access (ISA) mode, and Collective Communication Acceleration (CCA) mode.

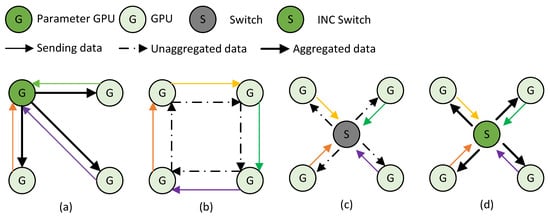

The EPA mode takes minimum single-hop forwarding latency and maximum payload efficiency as its core objectives. In the switching topology, switch chips can adopt a streamlined processing pipeline that executes only essential steps such as packet parsing, address lookup, and port forwarding to achieve line-speed, low-latency forwarding. This mode is primarily designed for latency-sensitive memory semantic communication transactions.

Beyond this, OISA also emphasizes intelligent collaboration between GPUs, and between GPUs and switches, in the scale-up domain. In the ISA mode, the OISA processing engine (OPE) of the sender GPU inserts an intelligent sensing tag after the protocol field, allowing critical state information of the switch and the destination GPU to be sensed and collected along the transmission path. The receiver GPU provides feedback as needed, based on pre-configured alert mechanisms and feedback packet formats, reporting key state information and bottleneck locations so that the source GPU can adjust subsequent data transmission strategies, for example by reducing the sending rate or changing the forwarding path.

In the CCA mode, OISA offloads a subset of computing tasks to the switch chip, which then performs data calculations and collective communication operations like AllReduce and returns results directly to the GPUs. In this mode, switch chips no longer serve merely as simple packet forwarding nodes but become integral components of the computing system. This approach reduces the communication traffic among GPU nodes generated by exchanging computing data and intermediate results while also shortening overall task processing latency, which ultimately boosts the overall performance of the GPU cluster.
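The traffic reduction from in-switch reduction can be illustrated with a toy model. The sketch below is not OISA's CCA implementation: it simulates a switch that sums one contribution per GPU and returns the result to all of them, and compares the bytes each GPU must send against the well-known ring AllReduce cost of 2(N-1)/N times the message size. All names are hypothetical.

```python
def switch_allreduce(contributions):
    """Toy in-switch AllReduce: the switch sums element-wise and returns a copy to every GPU."""
    reduced = [sum(values) for values in zip(*contributions)]
    return [list(reduced) for _ in contributions]


def ring_allreduce_bytes_sent_per_gpu(num_gpus, message_bytes):
    """Bytes each GPU sends in a ring AllReduce (reduce-scatter followed by all-gather)."""
    return 2 * (num_gpus - 1) * message_bytes / num_gpus


def in_switch_allreduce_bytes_sent_per_gpu(message_bytes):
    """Bytes each GPU sends when the switch performs the reduction: one copy of its data."""
    return message_bytes


if __name__ == "__main__":
    gpu_buffers = [[1, 2], [3, 4], [5, 6]]
    print(switch_allreduce(gpu_buffers))
    print(ring_allreduce_bytes_sent_per_gpu(8, 1024))
    print(in_switch_allreduce_bytes_sent_per_gpu(1024))
```

In this model each GPU sends its buffer once instead of nearly twice, and the result arrives after a single round trip to the switch rather than after 2(N-1) ring steps, which is the source of both the traffic and the latency savings described above.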

The packet format of OISA is shown in Figure 6b. It retains the protocol type field at the 13th and 14th bytes to ensure compatibility with Ethernet forwarding, with other functional fields arranged in the regions preceding and following this field. The core components of an OISA packet are its TLP header, protocol type field, memory address, data payload, and checksum field. The TLP header contains two categories of key information: forwarding-related fields, such as the source GPU identifier (SRC GPU ID), destination GPU identifier (DEST GPU ID), and virtual channel (VC), and transaction-processing fields, such as the transaction type, Transaction Tag ID, and packet length. In contrast to flit-mode designs, OISA imposes no specific constraints on the payload length. Instead, it supports a variable-length payload whose exact size is indicated explicitly by the packet length field in the header. Notably, unlike other protocols, OISA balances standard forwarding between GPU chips and switch chips from different vendors against the architectural and transactional differences among GPUs: it reserves several user-defined fields (UDFs) in the TLP header so that users can implement customized extensions. Based on the EPA packet format, OISA defines the ISA packet and the CCA packet with optional extension fields (the sensing tag fields and the CCA Extend fields, respectively), and dedicated protocol types or signaling bits indicate these variable packet structures so that ISA and CCA packets can be recognized. To further increase the data payload ratio, OISA proposes aggregating multiple small transaction packets of the same type at the transaction layer. The aggregated TLP carries multiple small data packets with a single header, thereby spreading the fixed protocol overhead across multiple payloads and further boosting network bandwidth utilization.
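The benefit of aggregation can be expressed as a simple amortization formula: with a fixed per-packet overhead h and k payloads of p bytes each, the payload ratio is k·p / (h + k·p). The sketch below evaluates it with hypothetical numbers (a 32-byte fixed overhead and 64-byte transactions); these values are assumptions for illustration only and are not taken from the OISA specification.

```python
def payload_ratio(num_transactions, payload_bytes, fixed_overhead_bytes):
    """Payload share of one aggregated packet carrying `num_transactions` payloads."""
    payload_total = num_transactions * payload_bytes
    return payload_total / (fixed_overhead_bytes + payload_total)


# Hypothetical sizes, chosen only to illustrate the trend.
HYPOTHETICAL_OVERHEAD_BYTES = 32
HYPOTHETICAL_PAYLOAD_BYTES = 64

if __name__ == "__main__":
    for k in (1, 2, 4, 8):
        ratio = payload_ratio(k, HYPOTHETICAL_PAYLOAD_BYTES, HYPOTHETICAL_OVERHEAD_BYTES)
        print(f"{k} transaction(s) per TLP -> {ratio:.1%} payload")
```

With these example sizes the payload ratio rises from about 67% for a single transaction to about 94% when eight transactions share one header, with diminishing returns as k grows.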

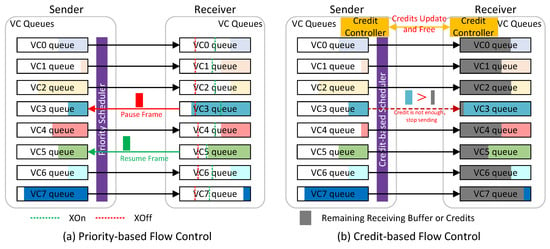

In terms of interconnect topology, OISA supports multiple topological structures, including direct interconnection via full-mesh topology and various interconnect topologies based on switching chips. Considering scalability and interconnect efficiency, it primarily adopts a switch-based single-layer fully connected topology, with forwarding and routing based on GPU ID. By establishing a unified memory space within the scale-up domain, OISA enables efficient memory access among GPUs. Regarding communication modes, OISA mainly supports two types of memory semantics: synchronous memory semantics based on load/store and asynchronous memory semantics based on direct memory access (DMA), adapting to different granularities and requirements. To establish a lossless scale-up network, OISA introduces a flow-tracking mechanism to sense the state of the network, priority-based flow control (PFC) and buffer-aware flow control mechanisms for traffic control, and data-layer retransmission (DLR) at the data link layer, collectively ensuring lossless and efficient data transmission. Furthermore, at the physical layer, OISA leverages Ethernet-based SerDes to enable high-speed transmission. It employs Lite-FEC (RS272) with fewer interleaving ways to minimize latency, thus forming an efficient physical link layer.

In summary, as a GPU interconnect protocol designed for scale-up scenarios, OISA achieves efficient data transmission under GPU expansion of different scales through multi-dimensional technical optimizations. It not only focuses on efficient packet design and packet aggregation to reduce transmission overhead but also places particular emphasis on in-depth collaboration between GPUs, and between GPUs and switches, specifically in terms of traffic control and data computing. Meanwhile, while maintaining compatibility with the Ethernet physical layer, OISA exhibits flexibility in its upper protocol layers, enabling it to break through bandwidth and latency bottlenecks and adapt to various scenarios of multi-GPU clusters.

4.3. UALink

Ultra Accelerator Link (UALink) is an emerging standard for high-performance scale-up interconnects targeting next-generation AI workloads. It was first proposed in 2024, and the UALink 200G 1.0 specification was officially released in April 2025 []. The specification was initially developed by the UALink Consortium Promoter Group, whose members include leading enterprises spanning the technology, cloud computing, semiconductor, and system solution sectors, such as Alibaba, AMD, Astera Labs, and Intel. It is now also supported and adopted by more than 70 contributor and adopter members [,].

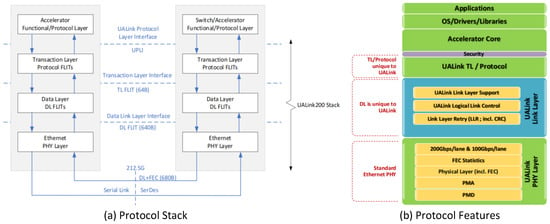

As Figure 8 shows, UALink 200G (UAL200) employs a layered architecture comprising the UALink Protocol Layer (UPL), transaction layer (TL), data layer (DL), and Ethernet PHY layer (PL), and provides symmetric sending and receiving paths. The first three layers (UPL, TL, and DL) are specific to UALink, while the PHY layer is compatible with the standard Ethernet ecosystem. Each layer has distinct functions, and the layers work in coordination to achieve low-latency and high-reliability data transmission between accelerators. At the protocol layer, the UALink Protocol Layer Interface (UPLI) serves as the interface of the UALink stack. It consists of two pairs of Originator and Completer components across inbound and outbound channels, linking accelerators to the TL. The TL bridges the UPLI and DL and is responsible for the conversion between UPLI messages and TL flits, and between TL flits and DL flits. The DL sits between the TL and the PL, providing core functions such as the message service between link partners (including rate advertisement, device and port ID query, etc.), DL flit packing, link-level replay, and CRC computation and validation. The UAL200 physical layer (PL) is based on the IEEE P802.3dj and IEEE 802.3 physical layer specifications []. The PL supports multiple channels and multiple rates and sends DL flits after serialization and coding.

Figure 8.

(a) UALink Protocol Stack, (b) UALink Protocol Features [].

Unlike traditional Ethernet-based packets with a hierarchical header structure, or the NVLink TLP flit mode, UALink carefully designs a set of flit-based packet formats, and the packet format varies across layers, especially in the TL and DL. In the UPLI, a set of channels transmits the original transaction information. In the transaction layer, the transactions are packed as TL flits. As shown in Figure 6c, each TL flit has a fixed length of 64 bytes, and a TL half-flit is fixed at 32 bytes. Based on UPLI signal types, TL flits are categorized into control half-flits and data flits. The former contain control information such as requests, responses, and flow control (FC), while the latter carry the payload data and the byte mask. There may be one or multiple request, response, or FC fields in each TL control half-flit, and the corresponding data flits are arranged in order after the control half-flit. In the data layer, multiple 64-byte TL flits are repackaged into 640-byte fixed-size DL flits (Figure 6d), enabling accurate alignment with the RS(544,514) codeword in the PHY. Meanwhile, a 4-byte CRC field and a 3-byte DL header are added to each DL flit, with five 1-byte segment headers inserted at fixed positions within the DL flit.

In addition to flit length, UALink imposes specific requirements on the placement of control half-flits and data half-flits, as well as on the maximum number of requests in each half-flit and the number of data flits in each transaction. For example, a single write request or read response supports a maximum payload length of 256 bytes, equivalent to four TL flits or eight half-flits. Moreover, requests or responses in a control half-flit can be compressed, and requests or responses to multiple destinations can be packed into the same flit. Benefiting from header compression and efficient packing, UALink achieves extremely high data transmission efficiency. In TL flits, when 20 out of 21 flits are data flits, the data efficiency can reach up to 95.2% []. In the data layer, only 12 bytes (3 bytes for the flit header, 5 bytes for the segment headers, and 4 bytes for the CRC) are used for DL functions, enabling a data link efficiency of up to 98.125% (628/640) [].
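The two efficiency figures above can be reproduced from the flit sizes given in the text. In the sketch below the constant names are illustrative, and the combined figure simply multiplies the two layer efficiencies, which is an assumption made here and not a number from the specification.

```python
TL_FLIT_BYTES = 64
TL_HALF_FLIT_BYTES = 32
DL_FLIT_BYTES = 640
DL_HEADER_BYTES = 3
DL_SEGMENT_HEADER_BYTES = 5   # five 1-byte segment headers
DL_CRC_BYTES = 4
MAX_TRANSACTION_PAYLOAD_BYTES = 256

# Transaction layer: 20 data flits out of every 21 flits in the best case.
tl_efficiency = 20 / 21

# Data layer: bytes left for TL flits after the DL header, segment headers, and CRC.
dl_overhead_bytes = DL_HEADER_BYTES + DL_SEGMENT_HEADER_BYTES + DL_CRC_BYTES
dl_efficiency = (DL_FLIT_BYTES - dl_overhead_bytes) / DL_FLIT_BYTES

# Assumption: treat the two layers as independent and multiply their efficiencies.
combined_efficiency = tl_efficiency * dl_efficiency

# A maximum-size write request or read response in TL flits and half-flits.
max_payload_flits = MAX_TRANSACTION_PAYLOAD_BYTES // TL_FLIT_BYTES
max_payload_half_flits = MAX_TRANSACTION_PAYLOAD_BYTES // TL_HALF_FLIT_BYTES

if __name__ == "__main__":
    print(f"TL: {tl_efficiency:.1%}, DL: {dl_efficiency:.3%}, combined: {combined_efficiency:.1%}")
    print(max_payload_flits, max_payload_half_flits)
```

Under that multiplication assumption, roughly 93% of the bits handed to the physical layer would be payload in the best case, before FEC overhead is counted.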

Based on UALink switches, UALink adopts a single-layer multi-plane switching architecture to build a large-scale HBD, which supports up to 1024 GPUs in the scale-up domain and up to 800 Gbps per port. The number of switch planes scales with the bandwidth of the GPU for flexible expansion, and the port rate can be adapted as needed. In the established scale-up domain, UALink enables direct communication among GPUs with memory sharing. From a memory semantic perspective, UALink uses small packets for low-latency load/store/atomic memory accesses and finely interleaves memory channels (e.g., with 256 B granularity) to maximize bandwidth to both local and peer GPU memory.
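Fine-grained channel interleaving can be sketched with a simple modulo mapping. The example below is a generic illustration of address interleaving at 256 B granularity, not the mapping defined by UALink or by any particular GPU; the eight-channel configuration and the function names are assumptions.

```python
INTERLEAVE_BYTES = 256  # granularity mentioned in the text


def channel_for_address(addr, num_channels, granularity=INTERLEAVE_BYTES):
    """Map a byte address to a memory channel using block-wise round-robin interleaving."""
    return (addr // granularity) % num_channels


def channels_touched(start, length, num_channels, granularity=INTERLEAVE_BYTES):
    """Sorted list of channels hit by a contiguous access of `length` bytes."""
    first_block = start // granularity
    last_block = (start + length - 1) // granularity
    return sorted({block % num_channels for block in range(first_block, last_block + 1)})


if __name__ == "__main__":
    print(channel_for_address(0, 8), channel_for_address(256, 8))
    print(channels_touched(0, 2048, 8))
    print(channels_touched(128, 256, 8))
```

With eight hypothetical channels, a contiguous 2 KiB access is spread across all of them, so even a moderately sized transfer can draw on the full memory bandwidth instead of queuing on one channel.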

Furthermore, UALink introduces multiple key mechanisms to enable efficient and reliable data transmission []. To prevent congestion or packet loss, it employs ready-valid handshake, credit-based flow control (CBFC), and rate pacing/adaptation, ensuring smooth data interaction. Meanwhile, UALink provides end-to-end protection for both control and data traffic from the source GPU to the destination GPU, with parity checking, CRC, and FEC employed at its TL, DL, and PL, respectively. If packet loss or data corruption occurs on the link, Link Layer Retransmission (LLR) immediately retransmits the missing or corrupted data flits to guarantee a lossless network. Moreover, UALink simplifies the Ethernet-based FEC interleaving to reduce latency in the PHY.
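The idea behind credit-based flow control can be shown with a small simulation. The sketch below is a generic CBFC model under simplifying assumptions (one credit per flit, credits returned immediately when the receiver drains its buffer) and does not reproduce UALink's credit accounting; all class and function names are hypothetical.

```python
from collections import deque


class CreditedReceiver:
    """Receiver with a fixed number of buffer slots; it must never overflow."""

    def __init__(self, buffer_slots):
        self.buffer_slots = buffer_slots
        self.buffer = deque()
        self.peak_occupancy = 0

    def accept(self, flit):
        if len(self.buffer) >= self.buffer_slots:
            raise OverflowError("flit arrived without a free buffer slot")
        self.buffer.append(flit)
        self.peak_occupancy = max(self.peak_occupancy, len(self.buffer))

    def drain(self, max_flits):
        """Consume up to `max_flits` flits and report how many slots were freed."""
        freed = 0
        while self.buffer and freed < max_flits:
            self.buffer.popleft()
            freed += 1
        return freed


class CreditedSender:
    """Sender that transmits only while it holds credits."""

    def __init__(self, initial_credits):
        self.credits = initial_credits

    def try_send(self, receiver, flit):
        if self.credits == 0:
            return False            # stall: no guaranteed buffer space downstream
        self.credits -= 1
        receiver.accept(flit)
        return True

    def return_credits(self, count):
        self.credits += count


def simulate(num_flits, buffer_slots, send_per_step, drain_per_step):
    receiver = CreditedReceiver(buffer_slots)
    sender = CreditedSender(initial_credits=buffer_slots)
    sent = stalls = delivered = 0
    while delivered < num_flits:
        for _ in range(send_per_step):
            if sent >= num_flits:
                break
            if sender.try_send(receiver, sent):
                sent += 1
            else:
                stalls += 1
        freed = receiver.drain(drain_per_step)
        delivered += freed
        sender.return_credits(freed)
    return {"sent": sent, "stalls": stalls, "delivered": delivered,
            "peak_occupancy": receiver.peak_occupancy}


if __name__ == "__main__":
    print(simulate(num_flits=6, buffer_slots=2, send_per_step=2, drain_per_step=1))
```

In this model a sender that is faster than the receiver stalls instead of dropping flits, and the receiver buffer never exceeds its configured size, which is the property that makes the link lossless under congestion.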

Overall, through multiple technologies including packet design, switch-based topology, and physical layer optimization, UALink enables high-performance, low-latency data transmission and provides an open solution for building a large-scale HBD for GPU interconnection. In particular, by leveraging refined flit design and packing rules in the transaction layer and data layer, it achieves extremely high packet transmission efficiency, and the DL flit size is precisely aligned with the FEC encoding in the physical layer. However, the specialized protocol stack interfaces may have compatibility problems with the existing interfaces of some GPUs. Additionally, although the flit design and efficient packing improve overall data transmission performance, the sophisticated flit design, which packs requests and responses (even across multiple destinations) into the same flit, increases the difficulty of the flit parser and its chip-level implementation.

4.4. SUE

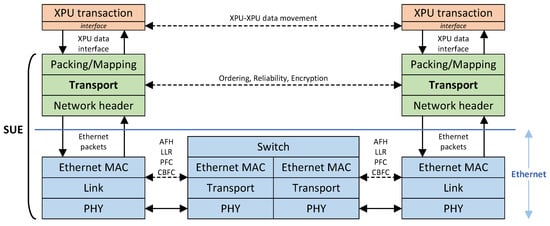

Scale-Up Ethernet (SUE) is an Ethernet-based GPU interconnection framework proposed by Broadcom Corporation. Its core goal is to provide low-latency, high-bandwidth connectivity for GPU clusters from the rack level to the cross-rack level, support efficient transfer of in-memory transactions (e.g., put, get, and atomic operations) between GPUs, and meet the parallel processing requirements of complex workloads such as machine learning and AI inference []. The initial specification version of SUE, Scale-Ethernet-RM100, was released in April 2025 and is still being updated. The discussion in this article is based on the latest specification (RM103) and its AFH (AI forwarding header) Gen2 packet format.

SUE runs over standard Ethernet but is simplified and optimized for AI scenarios and intra-node GPU interconnection, enabling efficient memory transaction transfer between GPUs in the scale-up domain. As Figure 9 shows, SUE has a layered protocol stack. At the top of the stack, a duplex data and control interface connects SUE with the NoC to receive commands and data. The mapping and packing layer maps and packs transactions from the GPU upper layer to form the core payload, the SUE Protocol Data Unit (PDU). The transport layer adds the reliability header (RH) and a 32-bit reliability CRC (RCRC) to the PDU. Together, the PDU, RH, and RCRC constitute the core carrier for transmission; they implement sequence control and ACK/NACK feedback and ensure the integrity of the PDU in transit. The network layer encapsulates the SUE PDU with the data link header (SUE AFH) and forwards packets by destination GPU ID. The bottom of the protocol stack is the Ethernet-based physical layer, which allows SUE to reuse Ethernet’s high-speed SerDes and other mature technologies.

Figure 9.

Overview of the SUE stack []. (The SUE Lite stack does not have a transport layer.)
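The layering described above can be illustrated with a short Python sketch of the encapsulation order. Only the 12-byte AFH length and the 32-bit width of the RCRC come from the text; the field layout inside the AFH, the 4-byte sequence-only RH, the CRC coverage (RH plus PDU), and the use of `zlib.crc32` as a stand-in polynomial are all assumptions made for illustration and should not be read as the actual SUE wire format.

```python
import struct
import zlib

AFH_LEN = 12  # normal AFH Gen2 length stated in the text


def build_packet(dst_gpu: int, src_gpu: int, seq: int, pdu: bytes,
                 lite: bool = False) -> bytes:
    # Hypothetical AFH layout: 2-byte dst GPU ID, 2-byte src GPU ID, 8 pad bytes.
    afh = struct.pack(">HH8x", dst_gpu, src_gpu)
    if lite:
        # SUE Lite has no transport layer, so no RH and no RCRC are added.
        return afh + pdu
    # Hypothetical RH: a 4-byte sequence number only.
    rh = struct.pack(">I", seq)
    # zlib.crc32 stands in for the 32-bit RCRC, computed here over RH + PDU.
    rcrc = struct.pack(">I", zlib.crc32(rh + pdu))
    return afh + rh + pdu + rcrc


def check_packet(packet: bytes) -> bool:
    """Receiver-side integrity check for the standard (non-Lite) format."""
    body, rcrc = packet[AFH_LEN:-4], packet[-4:]
    return struct.unpack(">I", rcrc)[0] == zlib.crc32(body)


pkt = build_packet(dst_gpu=3, src_gpu=1, seq=7, pdu=b"payload")
lite_pkt = build_packet(dst_gpu=3, src_gpu=1, seq=7, pdu=b"payload", lite=True)
# Flip the last payload byte to emulate corruption in transit.
corrupted = pkt[:-5] + bytes([pkt[-5] ^ 0xFF]) + pkt[-4:]
```

Under these assumptions, `check_packet(pkt)` succeeds and `check_packet(corrupted)` fails, which is the point at which a real receiver would send NACK feedback and trigger retransmission.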

Besides the standard mode, SUE also offers a lite encapsulation mode called SUE Lite. SUE and SUE Lite share the same hierarchical stack architecture but differ in content composition, packet format, and header length. SUE Lite simplifies the protocol stack by removing the reliable transport layer: it eliminates the reliability header to reduce hardware overhead and transmission latency and retains only the AI forwarding header for data forwarding. The two modes thus target different scenarios, with SUE meeting high-reliability requirements and SUE Lite meeting lightweight deployment requirements.

The length and position of the AFH are compatible with the MAC protocol, but it redefines the destination and source MAC address fields, replacing them with GPU IDs and other content. SUE offers a variety of packet formats based on its AFH header, and the AFH Gen2 header is further divided into normal and compressed formats. As shown in Figure 6e, the compressed format removes the hop count and entropy fields and halves the GPU ID length. This shortens the AFH header from 12 bytes (in the normal format) to 6 bytes, which significantly reduces transmission overhead; the remaining fields are opaque to the data link layer during packet forwarding. In terms of PDU length, the maximum size of SUE’s packed PDU is 4096 bytes, while that of SUE Lite’s packed PDU is only 1024 bytes. SUE Lite’s overall packet length is notably smaller than SUE’s, making it more suitable for lightweight scenarios, such as edge-side GPU interconnection, where requirements for hardware resource consumption and transmission latency are more stringent.
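The effect of the compressed AFH can be estimated with simple arithmetic. The sketch below uses only the 12-byte and 6-byte AFH lengths given above; the payload sizes are illustrative, and the calculation deliberately ignores every other source of overhead (RH, RCRC, Ethernet preamble, FCS, and inter-packet gap), so it is a rough indication rather than a measured efficiency.

```python
AFH_NORMAL = 12      # bytes, from the text
AFH_COMPRESSED = 6   # bytes, from the text


def afh_overhead(payload_bytes: int, afh_bytes: int) -> float:
    """Fraction of (AFH + payload) bytes that is spent on the AFH."""
    return afh_bytes / (afh_bytes + payload_bytes)


# Illustrative payload sizes, up to the 4096-byte maximum packed PDU.
for payload in (64, 256, 1024, 4096):
    normal = afh_overhead(payload, AFH_NORMAL)
    compressed = afh_overhead(payload, AFH_COMPRESSED)
    print(f"{payload:5d} B payload: normal {normal:.2%}, compressed {compressed:.2%}")
```

For a 64-byte payload the AFH share falls from roughly 15.8% to roughly 8.6%, whereas for a 4096-byte PDU it is below 0.3% in either format, so the compressed header matters most for small memory transactions.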

SUE enables efficient memory semantics for scale-up GPU clusters through a unified shared memory model and enhanced Ethernet transmission. At the memory layer, SUE builds a unified virtual global memory, encapsulating distributed memory resources (including local host memory, GPU memory, remote node memory, etc.) into a single virtual address space. The unified virtual address is packed into the SUE packet along with the GPU IDs and the memory operations. Working with the scale-up network and the address translation units (ATUs) or memory management units (MMUs) in GPUs, a GPU can directly access the memory of other devices within the same scale-up domain without CPU-GPU data copying.
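As a rough illustration of how a single virtual address space can cover memory on several devices, the following Python sketch models a page-granular translation table. The class `ToyATU`, the 4096-byte page size, and the device names are hypothetical; the actual address format and translation hardware are defined by each GPU vendor and are not specified here.

```python
PAGE_SIZE = 4096  # assumed page size, for illustration only


class ToyATU:
    """Maps pages of one global virtual address space to (device, local page)."""

    def __init__(self):
        self.page_table = {}

    def map_page(self, virt_page: int, device: str, local_page: int) -> None:
        self.page_table[virt_page] = (device, local_page)

    def translate(self, virt_addr: int):
        # Split the virtual address into page number and in-page offset.
        virt_page, offset = divmod(virt_addr, PAGE_SIZE)
        device, local_page = self.page_table[virt_page]
        return device, local_page * PAGE_SIZE + offset


atu = ToyATU()
atu.map_page(0, "gpu0", 10)  # local GPU memory
atu.map_page(1, "gpu1", 3)   # remote GPU memory in the same scale-up domain
atu.map_page(2, "host", 7)   # host memory

target = atu.translate(1 * PAGE_SIZE + 0x20)
```

Here a load or store to a virtual address in page 1 resolves to `gpu1`, so the request would be sent over the scale-up network to that device instead of being staged through host memory.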

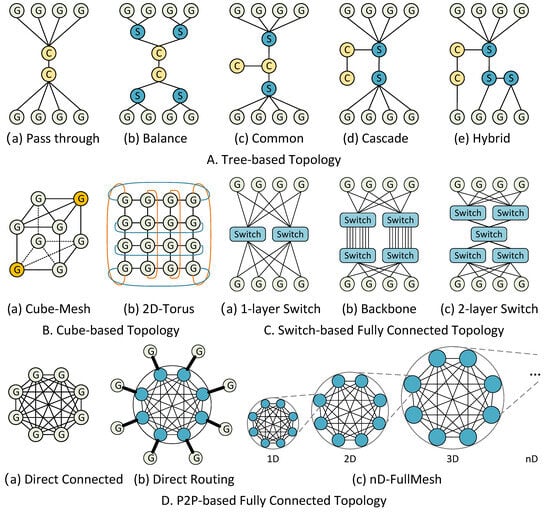

SUE features flexible deployment capabilities. In terms of topology, SUE supports single-layer switching topologies as shown in Figure 10(Ca), multi-layer switching topologies as in Figure 10(Cc), and direct mesh topologies as in Figure 10(Da), while accommodating varying port configuration rates to meet the needs of both large-scale clusters (up to 1024 or 4096 GPUs) and small-scale low-latency scenarios. Based on 112G/224G SerDes, SUE can achieve single-port transmission rates from 100 Gbps to 800 Gbps, and each SUE instance can support multiple port configurations. Additionally, to address requirements for data transmission isolation and resource security in multi-tenant scenarios, SUE reserves a 10-bit field in its RH specifically for multi-tenant isolation. Combined with VC priority partitioning and independent scheduling mechanisms, this ensures that traffic from different tenants or services does not interfere with each other. Moreover, SUE provides two command interfaces, an AXI4-based interface and a signal-based interface, to adapt to different GPU NoCs.

Figure 10.

Topology overview (C: CPU, G: GPU, and S: Switch). (A) Tree-based topology: (a) pass through, (b) balance, (c) common, (d) cascade, and (e) hybrid [,]. (B) Cube-based topology: (a) cube-mesh []; (b) 2D-Torus []. (C) Switch-based topology: (a) 1-layer switch [,,,], (b) backbone [,], and (c) 2-layer switch [,]. (D) P2P-based directly fully connected topology: (a) directly connected [], (b) directing routing [], and (c) nD-FullMesh [].

Beyond the above design, SUE improves transmission efficiency and reliability through several other technical mechanisms. To improve bandwidth utilization and the payload ratio, SUE relies on an opportunistic transaction packing strategy in the mapping and packing layer. Unlike UALink’s TL flit packing rules, SUE only packs transactions of the same type targeting the same GPU into a single PDU within the length limit, and packing is not mandatory. At the link layer, SUE integrates IEEE 802.1Qbb-based PFC and the CBFC defined in the Ultra Ethernet Consortium (UEC) specification [], enabling fine-grained traffic control for different scenarios. Moreover, Link-Level Retry (LLR) improves reliability beyond FEC by retransmitting dropped or corrupted packets. At the physical layer, SUE adopts a low-latency, lightweight FEC mechanism (such as RS-272) and supports non-interleaved or less interleaved modes of FEC blocks across lanes, balancing error correction capability against processing latency. Additionally, SUE uses a two-level load balancing mechanism to optimize traffic distribution: based on congestion status, it distributes transaction data across the different SUE instances on the GPU and across the different ports of these instances.
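The packing rule described above (same transaction type, same destination GPU, bounded PDU length, never mandatory) can be sketched as a greedy pass over a transmit queue. This is a simplified illustration rather than Broadcom's algorithm: the `Transaction` type, the per-transaction `size` accounting, and the choice to group only adjacent queue entries are assumptions, and each transaction is assumed to fit within the limit on its own.

```python
from dataclasses import dataclass

MAX_PDU_BYTES = 4096  # maximum packed PDU size for standard SUE, from the text


@dataclass
class Transaction:
    kind: str     # e.g. "put", "get", "atomic"
    dst_gpu: int
    size: int     # bytes this transaction occupies in the PDU (assumed)


def pack_opportunistically(queue, max_bytes=MAX_PDU_BYTES):
    """Greedily group adjacent same-type, same-destination transactions."""
    pdus, current, used = [], [], 0
    for txn in queue:
        fits = (
            bool(current)
            and txn.kind == current[0].kind
            and txn.dst_gpu == current[0].dst_gpu
            and used + txn.size <= max_bytes
        )
        if not fits and current:
            # Close the open PDU; nothing waits for a better packing chance.
            pdus.append(current)
            current, used = [], 0
        current.append(txn)
        used += txn.size
    if current:
        pdus.append(current)
    return pdus


queue = [
    Transaction("put", 1, 2048),
    Transaction("put", 1, 2048),
    Transaction("put", 1, 64),    # would exceed 4096 bytes, starts a new PDU
    Transaction("get", 1, 16),    # different type, starts a new PDU
    Transaction("put", 2, 128),
    Transaction("put", 2, 128),   # same type and destination, packed together
]
pdus = pack_opportunistically(queue)
```

With this queue the sketch produces four PDUs holding 2, 1, 1, and 2 transactions. Because grouping is opportunistic, a transaction that cannot be combined is simply sent in its own PDU.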

In summary, SUE enables efficient memory semantics and interconnection for GPU clusters based on Ethernet, integrating the universality of the Ethernet ecosystem with the performance advantages of NVLink or UALink and improving the coordination efficiency of heterogeneous computing resources while ensuring compatibility. Meanwhile, Broadcom’s ultra-high-performance and ultra-low-latency switch chips, such as Tomahawk Ultra (51.2 Tb/s throughput; 250 ns forwarding latency) [], further support the implementation and high-performance deployment of SUE.

4.5. Others

In addition to the new protocols introduced earlier, many GPU and switch chip manufacturers are also actively exploring and making breakthroughs in intra-node GPU interconnection, with examples including AMD Infinity Fabric Link [,], MTLink [] from Moor Threads, and Blink [] from Biren Technology. These protocols feature low protocol overhead, high transmission efficiency, and high bandwidth. Taking the BR100 [] GPU from Biren Technology as an example, it implements CPU-GPU interconnection with PCIe Gen5 x16 and adopts Blink to interconnect GPUs. It achieves a single-card interconnection bandwidth of up to 448 GB/s and supports full interconnection of eight cards in a single node with the topology shown in Figure 10(Da). The MTT S4000 [] GPU from Moor Threads achieves a single-card interconnection bandwidth of up to 240 GB/s through MTLink.

However, these protocols have not been publicly released and have not yet had a significant, large-scale impact on the industry. Moreover, due to the limited scalability of their interconnection topologies and the absence of dedicated switch chips supporting them, most proprietary protocols are difficult to apply in larger-scale HBDs. Therefore, we do not discuss them further in this article.

5. Comparisons, Discussions, and Insights

In this section, we compare and discuss these interconnect technologies across key dimensions. Although the protocols differ in their packet formats and in how specific technologies are implemented, their core design ideas and overall directions are gradually converging.

Overall, we believe that no protocol design is inherently good or bad. Each protocol and its internal mechanisms have their own applicable scenarios and considerations. Table 5 provides a multi-dimensional comparison of these emerging protocols. Since many GPU chips or switch chip products associated with emerging protocols are still in the design or manufacturing stage, many protocols cannot yet be fully and quantitatively compared in specific aspects. Hence, we do not limit our analysis to a superficial comparison of specific parameters, protocol features, or the granular implementation details discussed earlier; instead, we analyze in depth critical technologies such as packet design, topology, flow control, retransmission, and network-computing coordination. Through this comparison and discussion, we aim to explore the underlying design principles and philosophies while articulating our technical insights.

Table 5.

Comparison of scale-up protocols.

5.1. Evaluation Parameters

Given the emerging nature of intra-node GPU interconnection in scale-up networks, there is no industry-unified evaluation standard yet. While different protocols or interconnection technologies vary in their design objectives, clarifying key assessment dimensions is crucial for technical analysis and scenario adaptation evaluation. From the perspective of requirements and applications, the key parameters of an interconnection technology or protocol should include the port bandwidth it supports, end-to-end latency, scalability, protocol overhead, and transmission efficiency, as well as the deployment costs (such as area and power consumption) of the protocol engine on the chip. Among these, some of the former parameters may be related to specific demand scenarios, while the latter are likely associated with hardware implementation and manufacturing processes.

5.2. Protocol Scope

Interconnect protocols are designed around specific scenarios and application requirements, leading to distinct differences in their connected objects, scope, and core pursuits, which are presented in Table 6.

Table 6.

Comparison of protocol scope.

PCIe is engineered for general-purpose interconnection, enabling communication between diverse devices within a server. It primarily targets CPU-peripheral connections, including CPU-GPU, CPU-NIC, CPU-Memory, etc., and it covers short-range domains from on-board to in-rack. Its core pursuit is versatility, ensuring compatibility with various peripheral types. In contrast, RoCE is tailored to the long-range network-level interconnection with RDMA-enabled devices, which is better suited for scale-out scenarios. To support scalability across hosts, racks, and data centers, it leverages Ethernet infrastructure to deliver flexible coverage.

The new scale-up technologies are tailored for high-performance intra-node GPU interconnection and collaboration within the scale-up domain. They make GPU-to-GPU data sharing more efficient and direct, enabling a single GPU to directly access the high-bandwidth memory (HBM) of other GPUs in the same system. These GPU-to-GPU links relieve bandwidth pressure on each CPU’s PCIe uplink and eliminate the need to route data through system memory or cross-CPU links. With fully connected topologies, all GPU-to-GPU traffic is carried as P2P communication over direct links such as NVLink. While these new interconnect technologies provide high-speed channels between GPUs, PCIe-based heterogeneous connections remain essential: the scale-up domain currently still relies on PCIe to connect GPUs to CPUs, supporting critical operations such as configuration, task distribution, control, and maintenance.

5.3. Communication Semantics

Message semantics and memory semantics are two core paradigms for inter-device communication in HPC and AI systems. These two semantics represent two fundamentally different communication philosophies in parallel computing and have important applications in GPU clusters and data center interconnection. As illustrated in Table 7, both message and memory semantics have their own pros and cons, distinguished by their design logic and application scenarios. Although some GPUs used message semantics in the earlier stages, currently the majority of GPUs and new protocols mainly adopt the memory semantics model.

Table 7.

Comparison between message semantics and memory semantics.

Message semantics relies on explicit message passing, where GPUs exchange data by sending and receiving discrete packets of messages via RDMA or TCP encapsulation. It transfers data in complete message units, favoring large data blocks (typically a few KB to several MB), requiring pre-allocated send or receive buffers, and each message has clear start and end boundaries. This paradigm enables flexible scale-out expansion for distributed independent server nodes like Ethernet-based AI clusters but introduces additional protocol overhead and microsecond-level communication latency. It requires programmers to explicitly invoke send/receive functions to drive communication, and each computing unit maintains an independent memory address space. In addition, it supports non-blocking asynchronous communication, making it well-suited for loosely coupled tasks under distributed memory architectures, such as data parallelism in AI training.