Research on a Blockchain Adaptive Differential Privacy Mechanism for Medical Data Protection

Abstract

1. Introduction

2. Related Work

2.1. Dynamic Differential Privacy

2.2. Blockchain and Smart Contracts

2.3. Verifiable Computation and Zero-Knowledge Proofs

2.4. Fairness and Incentive Compatibility

2.5. Characteristics of Medical Data and Privacy Threat Models

- (1) Curious users: infer individual privacy through frequent queries or correlation analysis;

- (2) Malicious nodes: falsify or tamper with data, undermining system fairness;

- (3) Colluding adversaries: multiple participants jointly analyze data to increase the likelihood of privacy breaches.

3. Problem Formulation and Framework Design

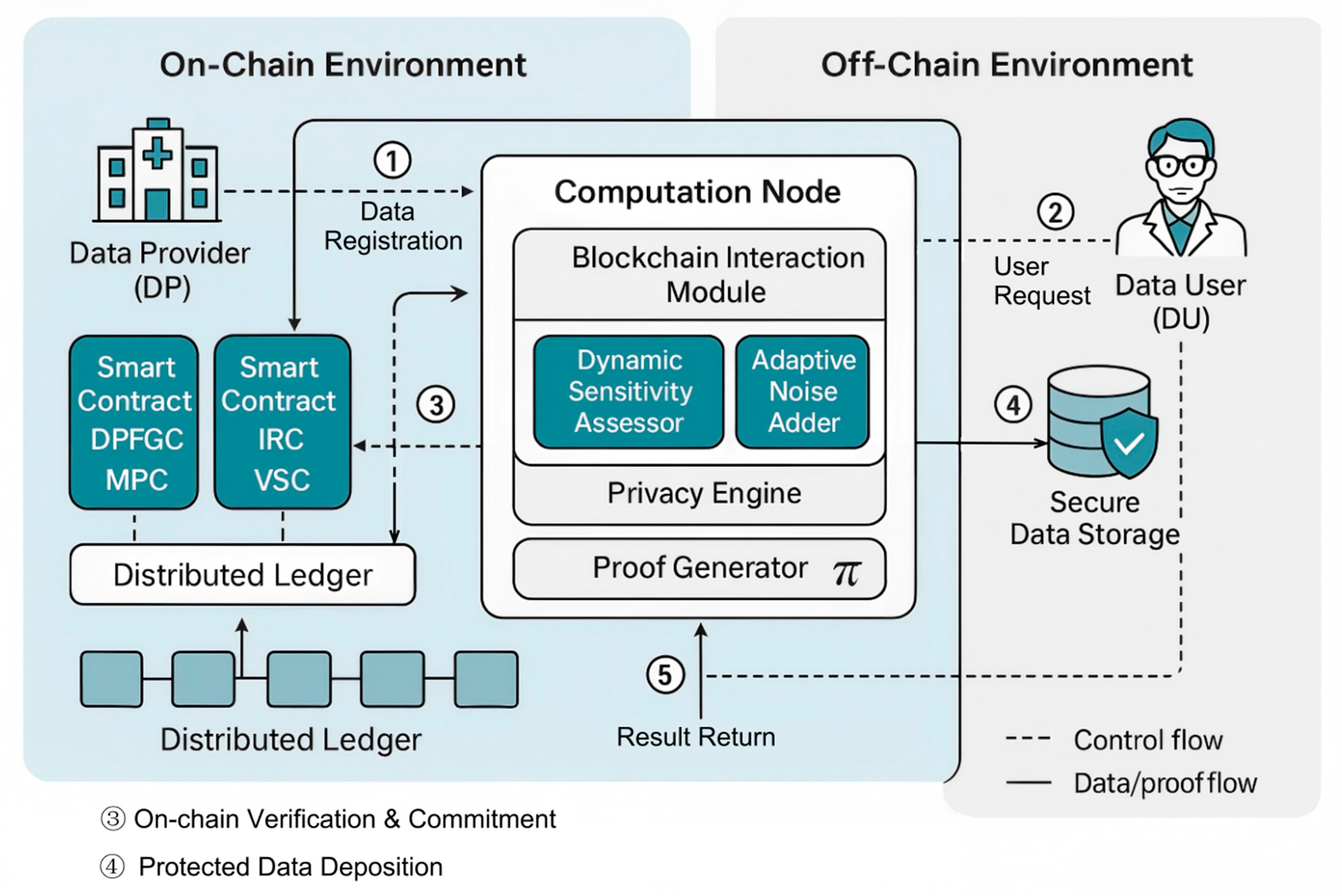

3.1. System Model

3.2. Threat Model

3.3. Design Goals and Requirements

3.4. Overall Architecture of the BFAV-DP Framework

4. Core Mechanism Design

4.1. Adaptive Differential Privacy Mechanism

4.1.1. Temporal Sensitivity Estimation

| Algorithm 1: Dynamic Sensitivity Evaluation (DSE) |
|---|
| Input: Query info, data attributes, historical patterns, risk signals |
| Output: Dynamic sensitivity |
| 1: Estimate baseline sensitivity using query type and attribute ranges |
| 2: Apply local and smoothed sensitivity estimation |
| 3: Perform frequency-domain regularization for stability |
| 4: Adjust sensitivity with historical patterns and risk signals |
| 5: Return final sensitivity |
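The five steps of Algorithm 1 can be illustrated with a minimal Python sketch. The outline does not give the concrete formulas, so everything below is an assumption made for illustration: neighbouring datasets differ by replacing one record, step 2 uses the standard smooth-sensitivity bound (the maximum over k of exp(-βk) times the local sensitivity at distance k), step 3 is a plain low-pass filter on the discrete Fourier transform of recent sensitivities, and step 4 is a multiplicative risk factor. The names `beta`, `gamma`, and `keep` are hypothetical parameters.

```python
import cmath
import math


def baseline_sensitivity(query: str, lower: float, upper: float, n: int) -> float:
    """Step 1: global sensitivity of a bounded query (replace-one-record neighbours)."""
    width = upper - lower
    if query == "count":
        return 1.0
    if query == "sum":
        return width
    if query == "mean":
        return width / n
    raise ValueError(f"unsupported query type: {query}")


def lowpass(series: list[float], keep: int) -> list[float]:
    """Step 3: keep only DFT components whose frequency index is below `keep`."""
    n = len(series)
    coeffs = [
        sum(x * cmath.exp(-2j * cmath.pi * k * t / n) for t, x in enumerate(series))
        for k in range(n)
    ]
    kept = [c if min(k, n - k) < keep else 0.0 for k, c in enumerate(coeffs)]
    return [
        (sum(c * cmath.exp(2j * cmath.pi * k * t / n) for k, c in enumerate(kept)) / n).real
        for t in range(n)
    ]


def dynamic_sensitivity(
    base: float,
    local_at_distance: list[float],
    history: list[float],
    risk: float,
    beta: float = 0.1,
    gamma: float = 0.5,
    keep: int = 2,
) -> float:
    # Step 2: smooth sensitivity, never larger than the global bound.
    smooth = min(base, max(ls * math.exp(-beta * k) for k, ls in enumerate(local_at_distance)))
    # Step 3: stabilise against the recent history of sensitivities.
    stabilised = lowpass(history + [smooth], keep)[-1]
    # Step 4: never fall below the smooth bound; inflate under elevated risk (risk in [0, 1]).
    return max(smooth, stabilised) * (1.0 + gamma * risk)


if __name__ == "__main__":
    base = baseline_sensitivity("mean", 0.0, 100.0, 50)
    print(dynamic_sensitivity(base, [0.5, 1.0, 2.0], [1.0, 1.2, 1.1], risk=0.4))
```

Taking the maximum of the smooth bound and the filtered value is a conservative design choice in this sketch: smoothing may only raise the sensitivity, so the noise calibrated from it is never weaker than the unsmoothed estimate would require.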

4.1.2. Adaptive Budget Allocation

| Algorithm 2: Reputation-Aware Budget Allocation (RABA) |
|---|
| Input: Total budget, query states, user reputation, sensitivity |
| Output: Per-query budget |
| 1: Initialize total remaining budget |
| 2: For each query: |
| 3: Observe query state, historical patterns, and risk indicators |
| 4: Allocate budget using sensitivity, reputation, and risk weights |
| 5: Update remaining budget |
| 6: Return all allocated budgets |
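A minimal sketch of the allocation loop in Algorithm 2 follows. The scoring rule is an assumption, since the outline only names the inputs: here a query receives more budget when the user's reputation is high and less when the risk indicator or the sensitivity is high. `Query`, `allocate_budgets`, and `per_query_cap` are hypothetical names. The only property the sketch relies on is basic sequential composition: the per-query budgets add up, so the loop never hands out more than the remaining total.

```python
from dataclasses import dataclass


@dataclass
class Query:
    sensitivity: float  # e.g. the output of a sensitivity estimator such as Algorithm 1
    reputation: float   # in [0, 1]; higher means a more trusted user (assumed scale)
    risk: float         # in [0, 1]; higher means a more suspicious query (assumed scale)


def allocate_budgets(total_budget: float, queries: list[Query], per_query_cap: float = 0.5) -> list[float]:
    remaining = total_budget            # step 1
    allocated = []
    for q in queries:                   # step 2
        # Steps 3-4: illustrative score in [0, 1] built from the observed signals.
        score = q.reputation * (1.0 - q.risk) / (1.0 + q.sensitivity)
        eps = min(remaining, per_query_cap * score)
        allocated.append(eps)
        remaining -= eps                # step 5
    return allocated                    # step 6


if __name__ == "__main__":
    qs = [Query(1.0, 0.9, 0.1), Query(2.0, 0.5, 0.5), Query(0.5, 1.0, 0.0)]
    print(allocate_budgets(1.0, qs))
```

Once the remaining budget reaches zero, every later query is allocated zero, which a caller would treat as a refusal to answer.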

4.1.3. Noise Calibration and Perturbation Generation

4.2. Fairness Assurance Mechanism

4.2.1. Fairness Model

4.2.2. Rule Design Based on Smart Contracts

4.2.3. Incentive Analysis for Participants

1. Computation Nodes (CN)

2. Data Users (DU)

3. Data Providers (DP)

4.3. Verifiability Mechanism Design

4.3.1. Verification Objectives and Constraint Construction

4.3.2. Selection and Design of an Efficient Verifiable Scheme

1. Off-chain nodes perform DP computation and generate a proof;

2. The smart contract uses the verification key to complete proof verification efficiently;

3. Upon successful verification, results are written to the ledger and corresponding incentives or penalties are triggered.

| Algorithm 3: Verifiable DP Proof (VDP-SNARK) |
|---|
| Input: Public commitments, query parameters, private data and noise |
| Output: Zero-knowledge proof π_t |
| 1: Bind input data commitment and query parameters |
| 2: Verify sensitivity and noise parameters meet DP constraints |
| 3: Generate zero-knowledge proof for computation correctness |
| 4: Submit proof to blockchain for verification |
| 5: Return proof π_t |
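Steps 1 and 2 of Algorithm 3 can be illustrated without a proving system. The sketch below is not a zero-knowledge proof and does not implement steps 3 to 5, which would require a zk-SNARK toolchain. It only shows, under stated assumptions, how a salted SHA-256 hash commitment can bind the private input to the public query parameters, and what a noise constraint might look like if the mechanism were Laplace with scale b ≥ Δ/ε. The Laplace choice, the JSON statement layout, and all function names are hypothetical.

```python
import hashlib
import json
import secrets


def commit(value: bytes, nonce: bytes) -> str:
    """Salted hash commitment: hiding rests on the random nonce, binding on SHA-256."""
    return hashlib.sha256(nonce + value).hexdigest()


def build_statement(data_commitment: str, query_params: dict) -> str:
    """Step 1: bind the data commitment and query parameters into one public digest."""
    payload = json.dumps({"commitment": data_commitment, "query": query_params}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def dp_constraints_hold(sensitivity: float, epsilon: float, laplace_scale: float) -> bool:
    """Step 2 (assumed Laplace mechanism): the noise scale must be at least sensitivity / epsilon."""
    return sensitivity > 0 and epsilon > 0 and laplace_scale >= sensitivity / epsilon


if __name__ == "__main__":
    nonce = secrets.token_bytes(32)
    c = commit(b"patient-records-batch", nonce)
    statement = build_statement(c, {"type": "mean", "epsilon": 0.5})
    print(statement, dp_constraints_hold(sensitivity=2.0, epsilon=0.5, laplace_scale=4.0))
```

In a real deployment the constraint in `dp_constraints_hold` would be encoded inside the proof circuit, so the verifier learns only that it holds and never sees the witness values.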

5. System Implementation and Security Analysis

5.1. Implementation Details of the Framework

5.2. Formal Security Analysis and Proof

5.3. The Necessity of zk-SNARKs in a Permissioned Blockchain Environment

- (i) Verifies that noise is generated according to the allocated budget and has not been weakened;

- (ii) Verifies that sensitivity estimation follows the prescribed process;

- (iii) Verifies that budget consumption has not been skipped;

- (iv) Verifies that the final output is consistent with the DP computation;

- (v) Ensures that no private data or noise details are leaked throughout the process.

6. Experiments and Analysis

6.1. Experiment Preparation

6.1.1. Dataset Setup

- (1) HeartDisease is sourced from the UCI Machine Learning Repository [39] and contains multidimensional clinical examination indicators along with binary heart disease diagnostic labels. The dataset is of medium scale with a moderate number of features.

- (2) Diabetes (Pima Indians Diabetes) also comes from the UCI repository [40].

- (3) CardioTrain uses the open-source cardiovascular disease prediction dataset from Kaggle [41]. It has a larger sample size and higher feature dimensionality, closely reflecting the statistical structure of real-world clinical health examination data.

- (i) Perform rank transformation and Gaussianization of numerical features within each class;

- (ii) Estimate the covariance matrix within each class and apply ridge regularization;

- (iii) Save the empirical quantile functions for inverse transformation;

- (iv) Sample categorical features independently according to conditional multinomial distributions.
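Steps (i) to (iv) describe what is commonly called a Gaussian copula synthesizer. A minimal, standard-library-only sketch for a single class is given below. It is an illustration under assumptions, not the authors' implementation: the ridge value, the rank-to-probability rule `rank / (n + 1)`, and the function names are hypothetical, and the categorical feature is drawn from its empirical frequencies within the class.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()


def gaussianize(column: list[float]) -> list[float]:
    """Step (i): rank-transform a column and map ranks to standard normal scores."""
    n = len(column)
    order = sorted(range(n), key=lambda i: column[i])
    z = [0.0] * n
    for rank, i in enumerate(order, start=1):
        z[i] = STD_NORMAL.inv_cdf(rank / (n + 1))
    return z


def ridge_covariance(z_cols: list[list[float]], ridge: float) -> list[list[float]]:
    """Step (ii): sample covariance of the normal scores plus ridge * identity."""
    d, n = len(z_cols), len(z_cols[0])
    means = [sum(c) / n for c in z_cols]
    cov = [
        [sum((z_cols[a][i] - means[a]) * (z_cols[b][i] - means[b]) for i in range(n)) / (n - 1)
         for b in range(d)]
        for a in range(d)
    ]
    for a in range(d):
        cov[a][a] += ridge
    return cov


def cholesky(m: list[list[float]]) -> list[list[float]]:
    """Lower-triangular Cholesky factor of a positive definite matrix."""
    d = len(m)
    low = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(m[i][i] - s) if i == j else (m[i][j] - s) / low[j][j]
    return low


def sample_class(columns, categories, n_samples, ridge=1e-3, seed=0):
    """Draw synthetic rows for one class from numeric columns and one categorical column."""
    rng = random.Random(seed)
    quantiles = [sorted(c) for c in columns]                      # step (iii)
    cov = ridge_covariance([gaussianize(c) for c in columns], ridge)
    low = cholesky(cov)
    d = len(columns)
    rows = []
    for _ in range(n_samples):
        g = [rng.gauss(0.0, 1.0) for _ in range(d)]
        z = [sum(low[i][k] * g[k] for k in range(i + 1)) for i in range(d)]
        numeric = []
        for j in range(d):
            u = STD_NORMAL.cdf(z[j] / math.sqrt(cov[j][j]))      # back to a probability
            idx = min(int(u * len(quantiles[j])), len(quantiles[j]) - 1)
            numeric.append(quantiles[j][idx])                     # inverse empirical quantile
        rows.append((numeric, rng.choice(categories)))            # step (iv)
    return rows
```

Calling `sample_class` once per class label and concatenating the results would give a synthetic dataset that keeps each class's marginal distributions and rank correlations approximately intact.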

- (1) Syn-Base: replicates the original data distribution;

- (2) Syn-Skew: sets a sensitive attribute (e.g., gender) ratio to 0.2/0.8, creating a pronounced imbalance scenario;

- (3) Syn-Scale: scales the sample size to different magnitudes.
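The Syn-Skew and Syn-Scale variants can both be produced by resampling with replacement. The sketch below is one possible way to do so and is not taken from the paper; `resample_to_ratio`, its parameters, and the row layout (tuples with the sensitive attribute at a known index) are assumptions.

```python
import random


def resample_to_ratio(rows, attr_index, minority_value, minority_share, n_out, seed=0):
    """Resample rows with replacement so that `minority_value` makes up `minority_share` of the output."""
    rng = random.Random(seed)
    minority = [r for r in rows if r[attr_index] == minority_value]
    majority = [r for r in rows if r[attr_index] != minority_value]
    if not minority or not majority:
        raise ValueError("both groups must be present in the source rows")
    n_min = round(minority_share * n_out)
    sample = rng.choices(minority, k=n_min) + rng.choices(majority, k=n_out - n_min)
    rng.shuffle(sample)
    return sample


if __name__ == "__main__":
    base = [("F", 52), ("M", 61), ("M", 47), ("F", 39), ("M", 58)]
    skewed = resample_to_ratio(base, 0, "F", 0.2, n_out=100)   # Syn-Skew style 0.2/0.8 split
    print(sum(1 for r in skewed if r[0] == "F"))
```

Varying `n_out` while keeping `minority_share` at the source ratio gives the size scaling described for Syn-Scale.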

6.1.2. Experimental Assumptions and Application Levels

1. Threat Model and Trust Boundaries: All participants are considered honest-but-curious and cannot access raw medical data in plaintext. Blockchain nodes can record and verify statistics processed under differential privacy as well as zero-knowledge proofs. Differential privacy mechanisms provide individual-level privacy guarantees, while homomorphic encryption ensures that intermediate values on-chain remain confidential.

2. Application Level and Statistical Consistency Goals: BFAV-DP targets aggregate-level medical analyses (e.g., risk assessment, cohort studies). It does not require per-sample consistency for individual predictions but emphasizes maintaining statistical trend consistency under noise addition [44]. That is, metrics such as ACC and MSE should follow stable patterns as the privacy budget varies and preserve their relative ordering across different models.

3. Model Configuration: Based on the characteristics of structured medical data, standard models such as logistic regression and decision trees are employed in this section to highlight the impact of the privacy mechanisms themselves. The applicability of deep learning models is further discussed in Section 6.5.

6.1.3. Baseline Schemes

- (1) Central-DP: A purely centralized differential privacy model, where performance only includes the local execution time of the core algorithm.

- (2) StaticDP-Chain: Builds upon Central-DP by incorporating simulated blockchain-related latency and costs.

- (3) AdaDP-NoBC: The adaptive differential privacy algorithm proposed in this study, without blockchain or zero-knowledge proof overhead.

- (4) BFAV-DP-NoVer: A key ablation version of the proposed BFAV-DP framework, including the execution time of adaptive and fairness algorithms and the blockchain model overhead, but excluding zero-knowledge proof costs. This version isolates the performance impact of the verifiability mechanism.

6.1.4. Reference Algorithm Selection

- (1) AG-PLD: A traditional baseline differential privacy method relying on Gaussian noise perturbation, capable of balancing data utility and privacy protection to a certain extent [42].

- (2) LDP-OLH/OUE: Optimized Local Differential Privacy (LDP) protocols with hashing schemes, offering good communication efficiency and privacy protection, widely applied in industrial scenarios [43].

- (3) StaticDP-Chain: Introduces blockchain into a differential privacy mechanism to implement static budget allocation and verifiable storage, combining data protection with on-chain traceability [45].

- (4) zkDP-Chain: Employs zero-knowledge proof (ZKP) technology to integrate privacy protection with verifiable computation, enabling model verification and on-chain auditing without privacy leakage [46].

- (5) BFAV-DP (proposed method): The method proposed in this work, combining fair budget allocation and verifiable on-chain storage. It integrates data partition perturbation, multi-round averaging, homomorphic encryption, and blockchain collaboration, ensuring privacy protection while also achieving fairness, verifiability, and high data utility.
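To make the LDP reference point concrete, the following is a minimal sketch of Optimized Unary Encoding (OUE) frequency estimation as described in [43]: each user one-hot encodes a value, keeps the true bit with probability p = 1/2, sets every other bit with probability q = 1/(e^ε + 1), and the aggregator debiases the bit counts as (count − n·q)/(p − q). The function names and the toy population are assumptions for illustration; the paper's experimental configuration is not reproduced here.

```python
import math
import random


def oue_perturb(value: int, domain_size: int, epsilon: float, rng: random.Random) -> list[int]:
    """Client side: perturbed one-hot report for `value` in range(domain_size)."""
    q = 1.0 / (math.exp(epsilon) + 1.0)
    return [
        1 if rng.random() < (0.5 if i == value else q) else 0
        for i in range(domain_size)
    ]


def oue_estimate(reports: list[list[int]], epsilon: float) -> list[float]:
    """Server side: unbiased estimate of how many users hold each value."""
    n = len(reports)
    p, q = 0.5, 1.0 / (math.exp(epsilon) + 1.0)
    return [
        (sum(r[i] for r in reports) - n * q) / (p - q)
        for i in range(len(reports[0]))
    ]


if __name__ == "__main__":
    rng = random.Random(7)
    true_values = [0] * 2800 + [1] * 1200          # toy population over a domain of size 3
    reports = [oue_perturb(v, 3, 2.0, rng) for v in true_values]
    print([round(c) for c in oue_estimate(reports, 2.0)])
```

Because every user randomizes locally, the estimation error grows with the square root of the number of users, which is why local protocols typically need larger populations than centralized mechanisms to reach the same accuracy.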

6.2. Evaluation Metrics

6.2.1. BFAV-DP Specific Metrics

- (1) EPUA: Enhanced Privacy–Utility Advantage

- (2) ASC: Adaptive Stability Coefficient

- (3) FAE: Fairness Advantage Evaluation

- (4) VLE: Verification Latency Efficiency

- (5) BIE: Blockchain Impact Elasticity

6.2.2. General Evaluation Metrics

6.3. Experimental Results and Analysis

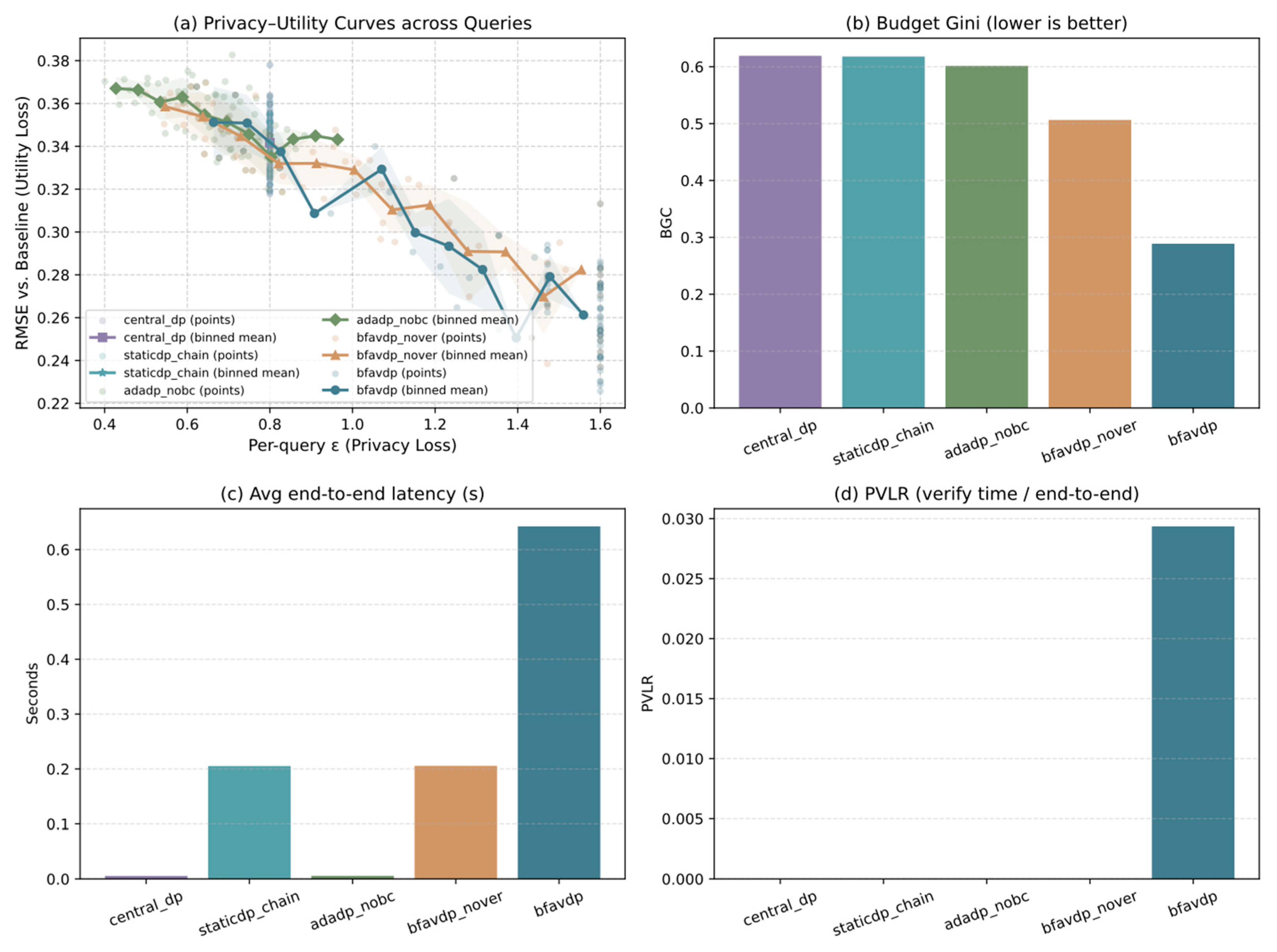

6.3.1. Comprehensive Comparison with Baseline Schemes

- (1)

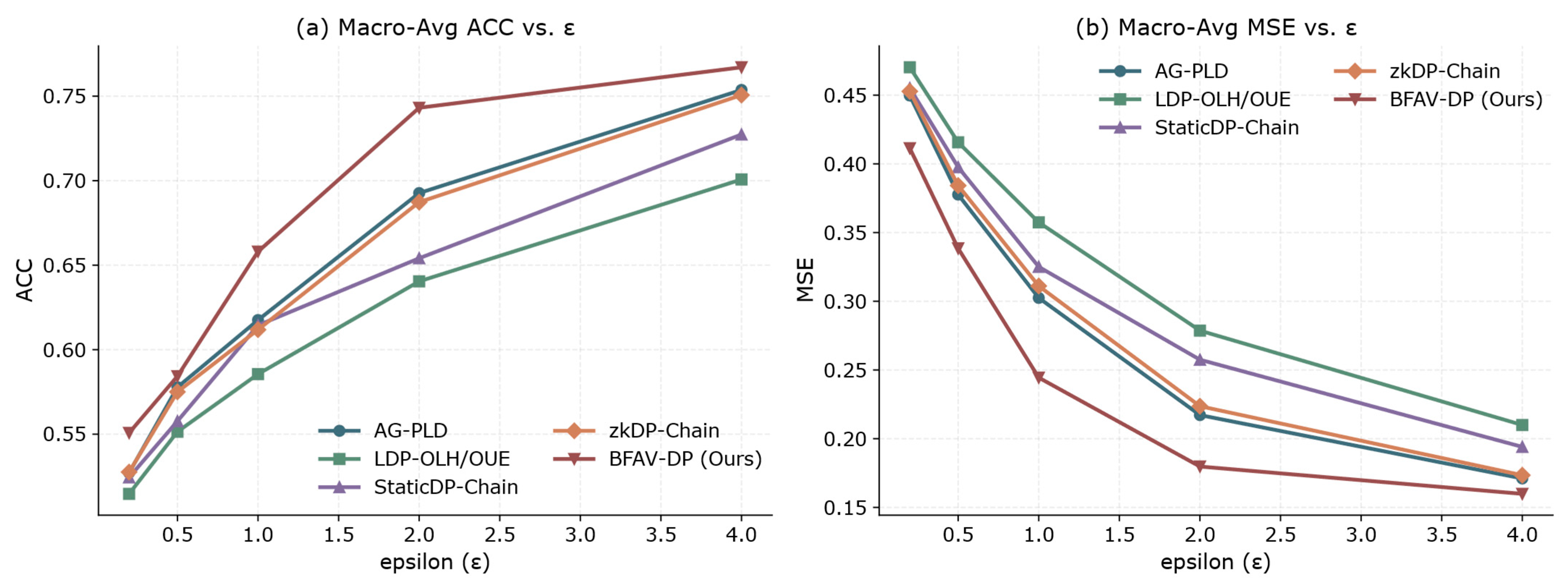

- Privacy-Utility Trade-off Analysis

- (2)

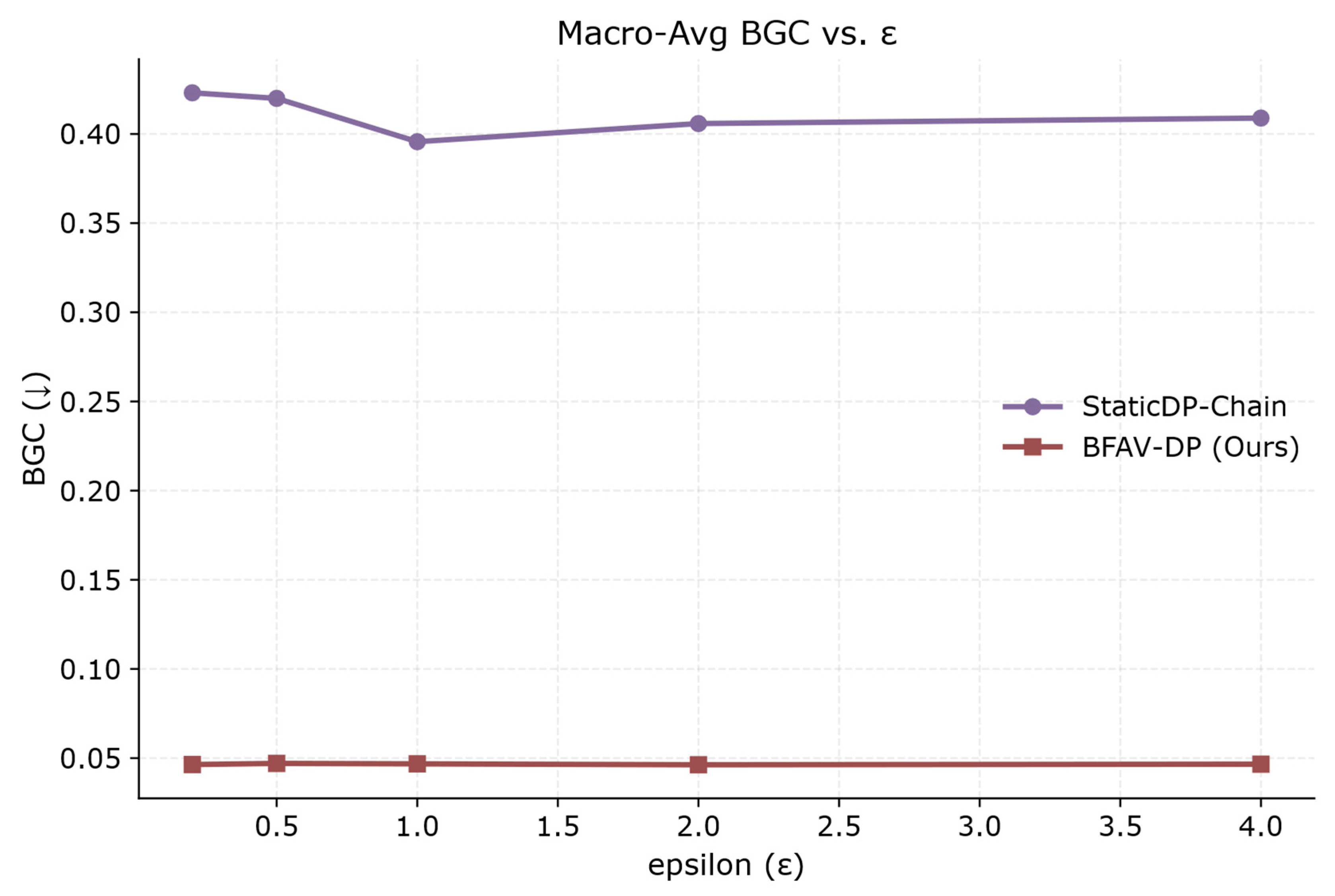

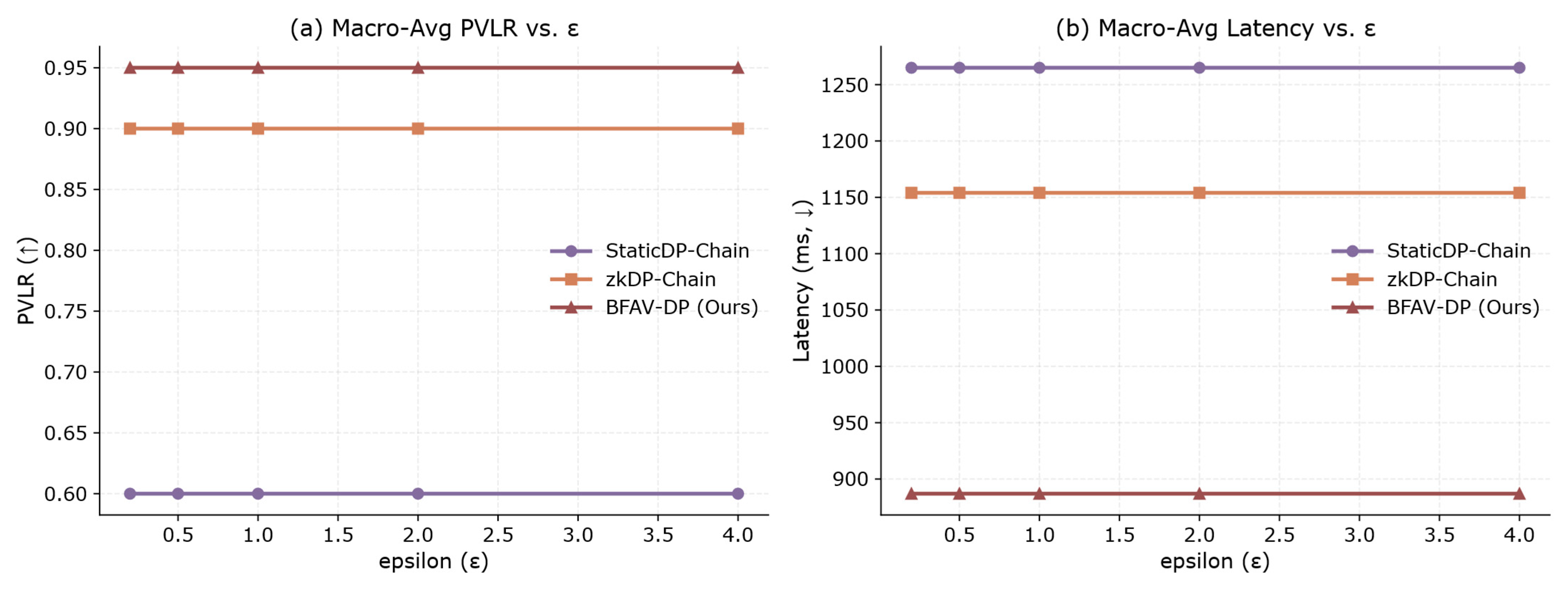

- Budget Allocation Fairness

- (3)

- System Performance Overhead

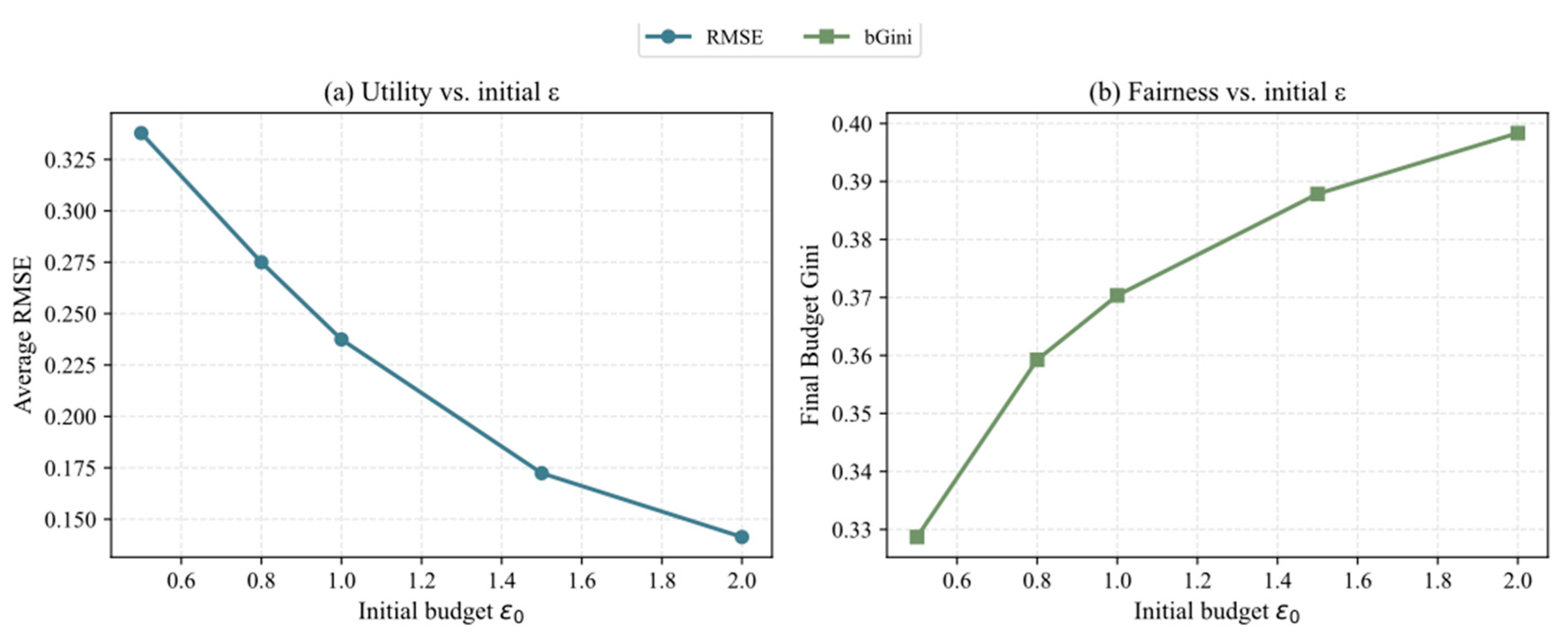

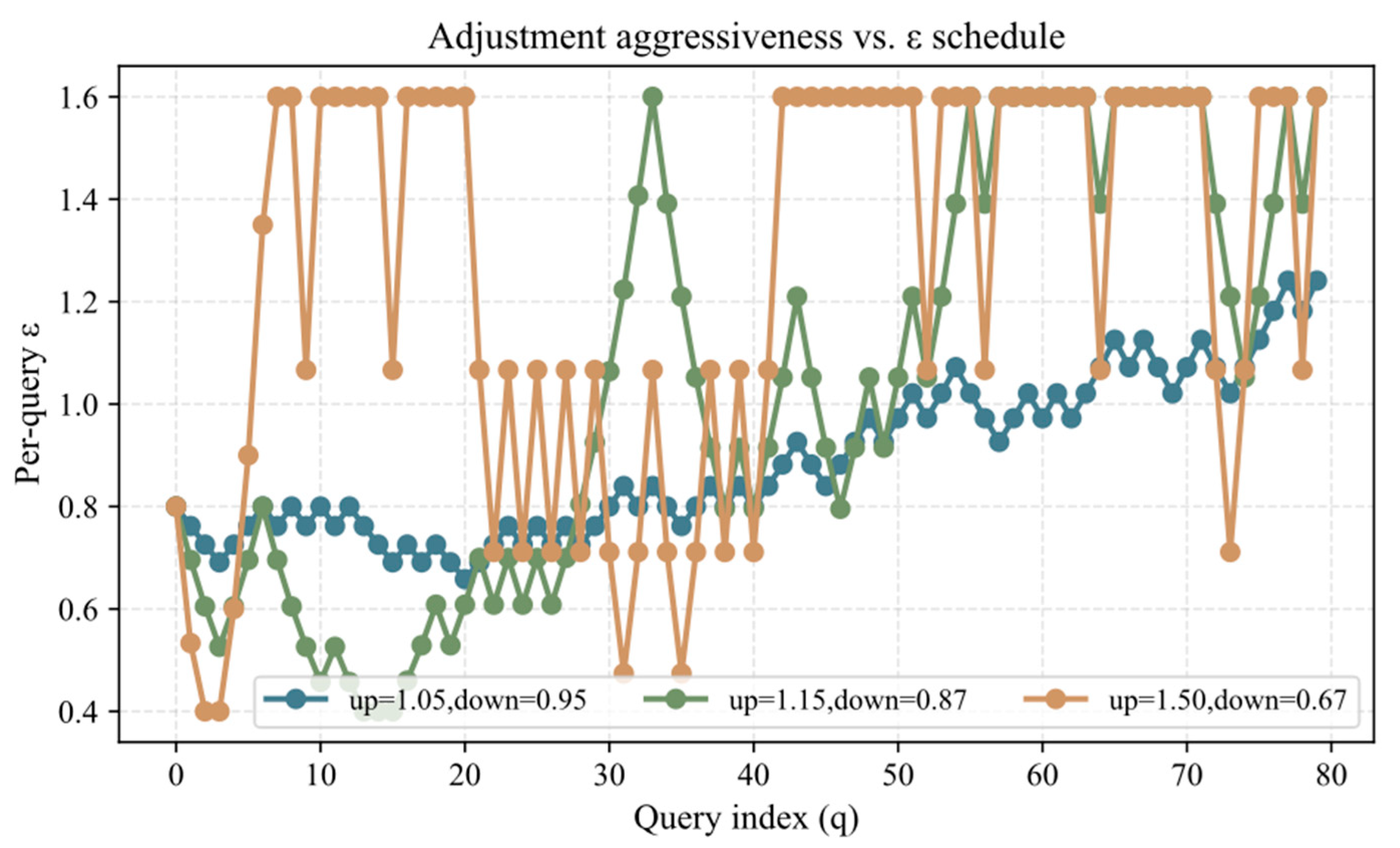

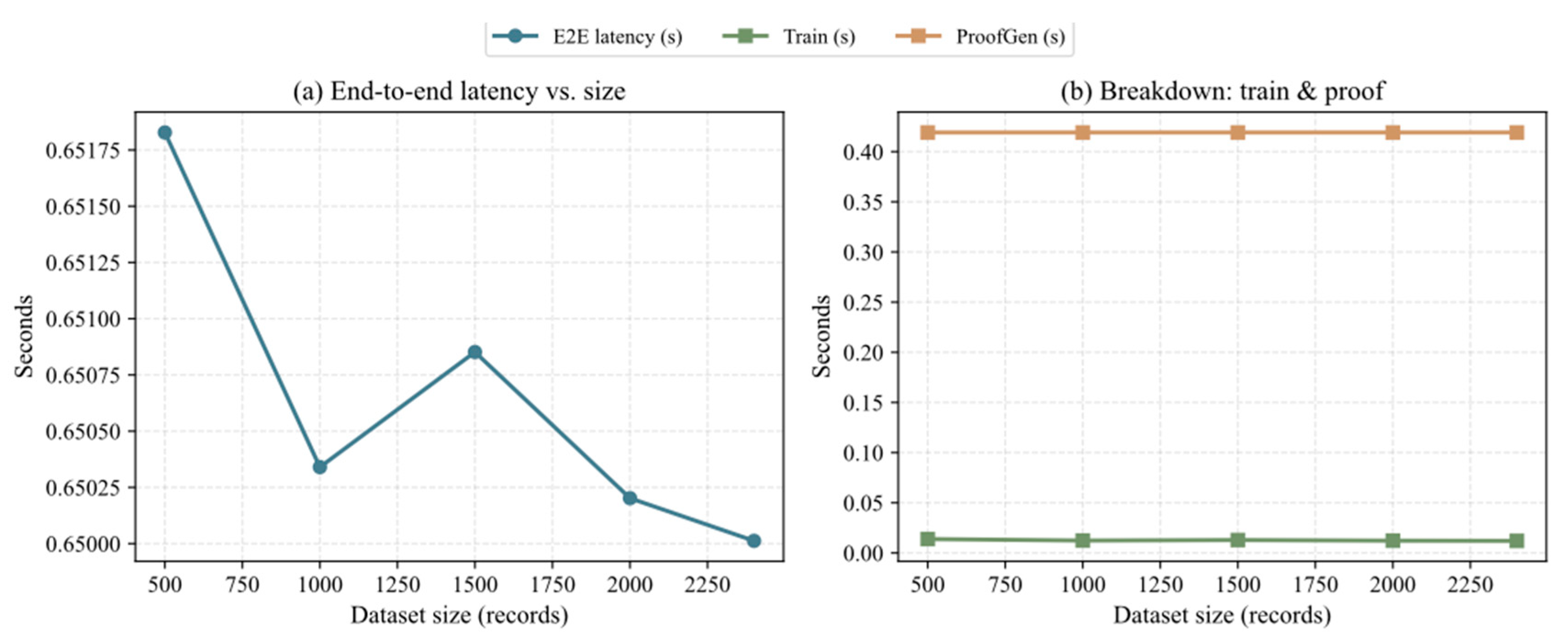

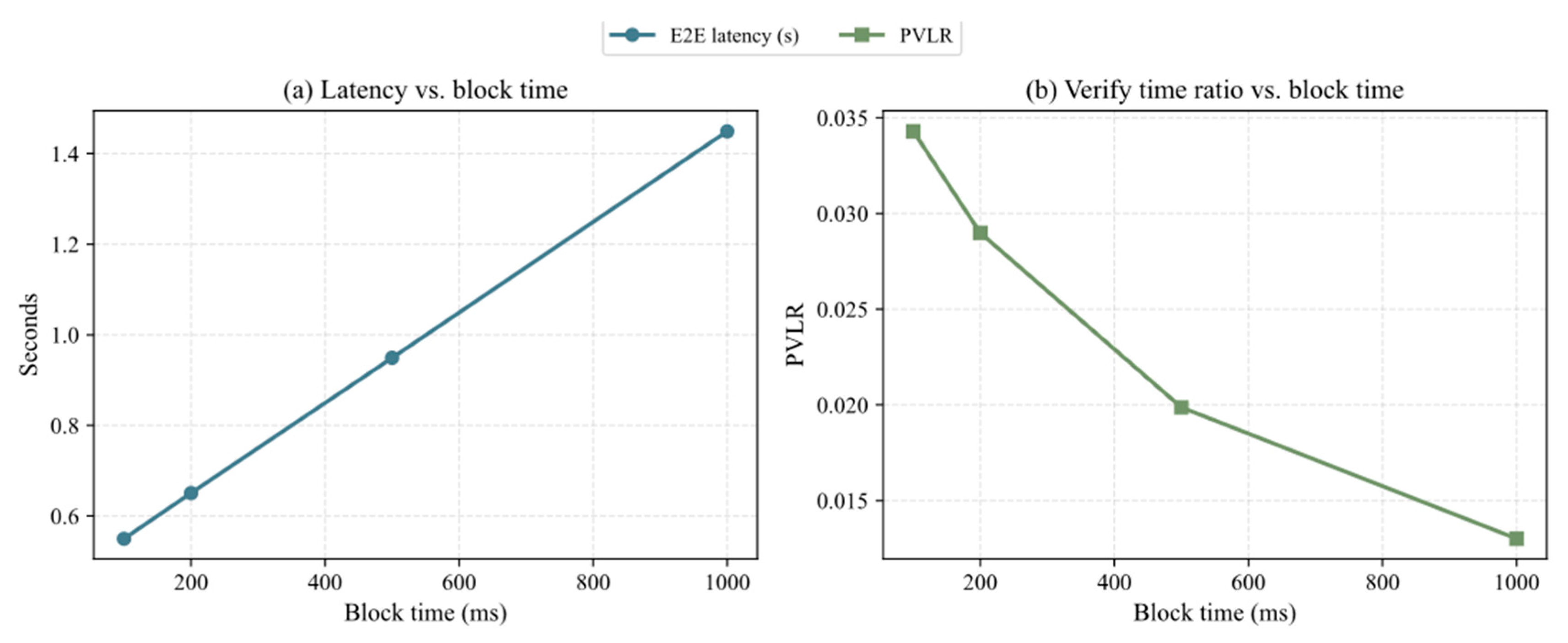

6.3.2. Analysis of Key Parameter Impacts

- (1) Impact of Initial Budget

- (2) Impact of Dynamic Adjustment Aggressiveness

- (3) Impact of Dataset Size

- (4) Impact of Blockchain Configuration (Block Time)

6.4. Comparative Experiments and Analysis

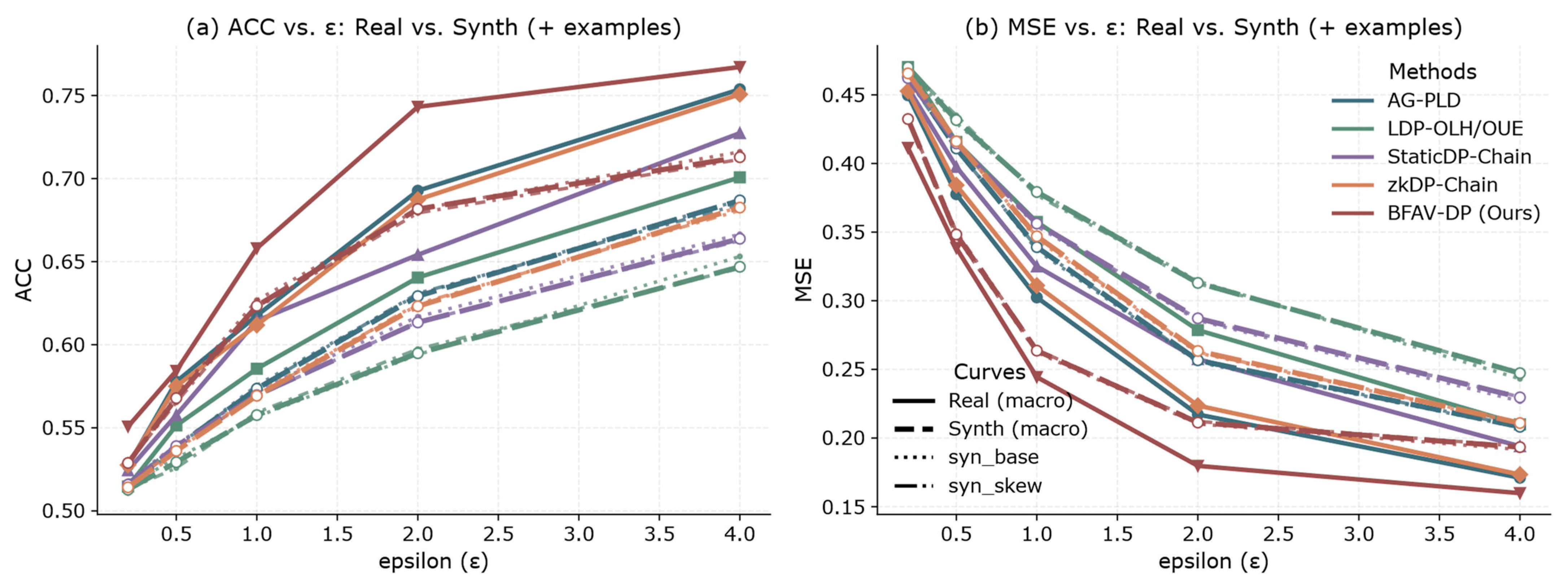

6.4.1. Utility Comparison

6.4.2. Fairness Comparison

6.4.3. Verifiability Comparison

6.4.4. Runtime Comparison

6.4.5. Comparative Trends Between Real and Synthetic Datasets

6.5. Applicability Discussion in Deep Learning Scenarios

7. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Gymrek, M.; McGuire, A.L.; Golan, D.; Halperin, E.; Erlich, Y. Identifying personal genomes by surname inference. Science 2013, 339, 321–324.

- Rieke, N.; Hancox, J.; Li, W.; Milletari, F.; Roth, H.R.; Albarqouni, S.; Bakas, S.; Galtier, M.N.; Landman, B.A.; Maier-Hein, K.; et al. The future of digital health with federated learning. NPJ Digit. Med. 2020, 3, 119.

- Dwork, C. Differential privacy. In International Colloquium on Automata, Languages, and Programming; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1–12.

- Zhu, L.; Song, H.; Chen, X. Dynamic privacy budget allocation for enhanced differential privacy in federated learning. Clust. Comput. 2025, 28, 999.

- Errounda, F.Z.; Liu, Y. Adaptive differential privacy in vertical federated learning for mobility forecasting. Future Gener. Comput. Syst. 2023, 149, 531–546.

- Zhang, R.; Xue, R.; Liu, L. Security and privacy for healthcare blockchains. IEEE Trans. Serv. Comput. 2021, 15, 3668–3686.

- Garg, S.; Goel, A.; Jain, A.; Policharla, G.V.; Sekar, S. zkSaaS: Zero-Knowledge SNARKs as a Service. In Proceedings of the 32nd USENIX Security Symposium (USENIX Security 23), Anaheim, CA, USA, 9–11 August 2023; pp. 4427–4444.

- Bontekoe, T.; Karastoyanova, D.; Turkmen, F. Verifiable privacy-preserving computing. arXiv 2023, arXiv:2309.08248.

- Xuan, S.; Zheng, L.; Chung, I.; Wang, W.; Man, D.; Du, X.; Yang, W.; Guizani, M. An incentive mechanism for data sharing based on blockchain with smart contracts. Comput. Electr. Eng. 2020, 83, 106587.

- Xu, Z.; Zheng, E.; Han, H.; Dong, X.; Dang, X.; Wang, Z. A secure healthcare data sharing scheme based on two-dimensional chaotic mapping and blockchain. Sci. Rep. 2024, 14, 23470.

- Zhu, D.; Li, Y.; Zhou, Z.; Zhao, Z.; Kong, L.; Wu, J.; Zheng, J. Blockchain-Based Incentive Mechanism for Electronic Medical Record Sharing Platform: An Evolutionary Game Approach. Sensors 2025, 25, 1904.

- Cao, Y.; Yoshikawa, M.; Xiao, Y.; Xiong, L. Quantifying differential privacy under temporal correlations. In Proceedings of the 2017 IEEE 33rd International Conference on Data Engineering (ICDE), San Diego, CA, USA, 19–22 April 2017; pp. 821–832.

- Nakamoto, S. Bitcoin: A Peer-to-Peer Electronic Cash System. Available online: https://ssrn.com/abstract=3440802 (accessed on 25 October 2025).

- Crosby, M.; Pattanayak, P.; Verma, S.; Kalyanaraman, V. Blockchain technology: Beyond bitcoin. Appl. Innov. 2016, 2, 71.

- Zheng, Z.; Xie, S.; Dai, H.; Chen, X.; Wang, H. An overview of blockchain technology: Architecture, consensus, and future trends. In Proceedings of the 2017 IEEE International Congress on Big Data (BigData Congress), Honolulu, HI, USA, 25–30 June 2017; pp. 557–564.

- Jiang, S.; Cao, J.; Wu, H.; Yang, Y.; Ma, M.; He, J. Blochie: A blockchain-based platform for healthcare information exchange. In Proceedings of the 2018 IEEE International Conference on Smart Computing (Smartcomp), Sicily, Italy, 18–20 June 2018; pp. 49–56.

- Azaria, A.; Ekblaw, A.; Vieira, T.; Lippman, A. Medrec: Using blockchain for medical data access and permission management. In Proceedings of the 2016 2nd International Conference on Open and Big Data (OBD), Vienna, Austria, 22–24 August 2016; pp. 25–30.

- Qu, Y.; Ma, L.; Ye, W.; Zhai, X.; Yu, S.; Li, Y.; Smith, D. Towards privacy-aware and trustworthy data sharing using blockchain for edge intelligence. Big Data Min. Anal. 2023, 6, 443–464.

- Goldwasser, S.; Micali, S.; Rackoff, C. The knowledge complexity of interactive proof-systems. In Providing Sound Foundations for Cryptography: On the Work of Shafi Goldwasser and Silvio Micali; Association for Computing Machinery: New York, NY, USA, 2019; pp. 203–225.

- Ben-Sasson, E.; Chiesa, A.; Genkin, D.; Tromer, E.; Virza, M. SNARKs for C: Verifying program executions succinctly and in zero knowledge. In Proceedings of the Annual Cryptology Conference, Santa Barbara, CA, USA, 18–22 August 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 90–108.

- Groth, J. On the size of pairing-based non-interactive arguments. In Annual International Conference on the Theory and Applications of Cryptographic Techniques, Vienna, Austria, 8–12 May 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 305–326.

- Yang, M.; Feng, H.; Wang, X.; Jiao, X.; Wu, X.; Qin, S. Fair Incentive Allocation for Secure Data Sharing in Blockchain Systems: A Shapley Value Based Mechanism. In International Conference on Autonomous Unmanned Systems, Nanjing, China, 9–11 September 2023; Springer: Singapore, 2023; pp. 460–469.

- Li, H.; Zhu, L.; Shen, M.; Gao, F.; Tao, X.; Liu, S. Blockchain-based data preservation system for medical data. J. Med. Syst. 2018, 42, 141.

- Commey, D.; Hounsinou, S.; Crosby, G.V. Securing health data on the blockchain: A differential privacy and federated learning framework. arXiv 2024, arXiv:2405.11580.

- Zhang, R.; Xue, R.; Liu, L. Security and privacy on blockchain. ACM Comput. Surv. (CSUR) 2019, 52, 51.

- Dwork, C.; Roth, A. The algorithmic foundations of differential privacy. Found. Trends® Theor. Comput. Sci. 2014, 9, 211–407.

- Mallat, S. A Wavelet Tour of Signal Processing; Elsevier: Amsterdam, The Netherlands, 1999.

- Ghosh, A.; Kleinberg, R. Optimal contest design for simple agents. ACM Trans. Econ. Comput. (TEAC) 2016, 4, 22.

- Mishra, N.; Thakurta, A. (Nearly) optimal differentially private stochastic multi-arm bandits. In Proceedings of the Thirty-First Conference on Uncertainty in Artificial Intelligence, Amsterdam, The Netherlands, 12–16 July 2015; pp. 592–601.

- Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, H.B.; Mironov, I.; Talwar, K.; Zhang, L. Deep learning with differential privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 308–318.

- Theil, H. Economics and Information Theory; North-Holland Publishing Company: Amsterdam, The Netherlands, 1967.

- Mironov, I. Rényi differential privacy. In Proceedings of the 2017 IEEE 30th Computer Security Foundations Symposium (CSF), Santa Barbara, CA, USA, 21–25 August 2017; pp. 263–275.

- Atkinson, A.B. On the measurement of inequality. J. Econ. Theory 1970, 2, 244–263.

- Friedler, S.A.; Scheidegger, C.; Venkatasubramanian, S.; Choudhary, S.; Hamilton, E.P.; Roth, D. A comparative study of fairness-enhancing interventions in machine learning. In Proceedings of the Conference on Fairness, Accountability, and Transparency, Atlanta, Georgia, 29–31 January 2019; pp. 329–338.

- Myerson, R.B. Optimal auction design. Math. Oper. Res. 1981, 6, 58–73.

- Zhou, W.; Zhang, D.; Han, G.; Zhu, W.; Wang, X. A Blockchain-Based Privacy-Preserving and Fair Data Transaction Model in IoT. Appl. Sci. 2023, 13, 12389.

- Narayan, A.; Feldman, A.; Papadimitriou, A.; Haeberlen, A. Verifiable differential privacy. In Proceedings of the Tenth European Conference on Computer Systems, New York, NY, USA, 21–24 April 2015; pp. 1–14.

- Biswas, A.; Cormode, G. Verifiable differential privacy. arXiv 2022, arXiv:2208.09011.

- Dua, D.; Graff, C. UCI machine learning repository. Irvine, CA: University of California, School of Information and Computer Science. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 1, 1–29. Available online: http://archive.ics.uci.edu/ml (accessed on 25 October 2025).

- Smith, J.W.; Everhart, J.E.; Dickson, W.C.; Knowler, W.C.; Johannes, R.S. Using the ADAP learning algorithm to forecast the onset of diabetes mellitus. In Proceedings of the Annual Symposium on Computer Application in Medical Care, Washington, DC, USA, 6–9 November 1988; p. 261.

- Ruiz, S.A. Cardiovascular Disease. Dataset. Kaggle. 2019. Available online: https://www.kaggle.com/datasets/sulianova/cardiovascular-disease-dataset (accessed on 25 October 2025).

- Koskela, A.; Tobaben, M.; Honkela, A. Individual privacy accounting with Gaussian differential privacy. arXiv 2022, arXiv:2209.15596.

- Wang, T.; Blocki, J.; Li, N.; Jha, S. Locally differentially private protocols for frequency estimation. In Proceedings of the 26th USENIX Security Symposium (USENIX Security 17), Vancouver, BC, Canada, 16–18 August 2017; pp. 729–745.

- Liu, W.; Zhang, Y.; Yang, H.; Meng, Q. A survey on differential privacy for medical data analysis. Ann. Data Sci. 2024, 11, 733–747.

- Lee, J.S.; Chew, C.J.; Liu, J.Y.; Chen, Y.C.; Tsai, K.Y. Medical blockchain: Data sharing and privacy preserving of EHR based on smart contract. J. Inf. Secur. Appl. 2022, 65, 103117.

- Anusuya, R.; Karthika Renuka, D.; Ghanasiyaa, S.; Harshini, K.; Mounika, K.; Naveena, K.S. Privacy-preserving blockchain-based EHR using ZK-Snarks. In Proceedings of the International Conference on Computational Intelligence, Cyber Security, and Computational Models, Coimbatore, India, 16–18 December 2021; Springer International Publishing: Cham, Switzerland, 2021; pp. 109–123.

- Chen, V.X.; Hooker, J.N. A guide to formulating fairness in an optimization model. Ann. Oper. Res. 2023, 326, 581–619.

- Pan, K.; Ong, Y.-S.; Gong, M.; Li, H.; Qin, A.; Gao, Y. Differential privacy in deep learning: A literature survey. Neurocomputing 2024, 589, 127663.

- Liu, Y.; Acharya, U.R.; Tan, J.H. Preserving privacy in healthcare: A systematic review of deep learning approaches for synthetic data generation. Comput. Methods Programs Biomed. 2024, 260, 108571.

| Method | 0.2 | 0.5 | 1.0 | 2.0 | 4.0 |
|---|---|---|---|---|---|
| AG-PLD | 472.77 | 272.82 | 180.00 | 118.75 | 78.34 |
| LDP-OLH/OUE | 525.30 | 303.14 | 200.00 | 131.95 | 87.05 |
| BFAV-DP (Ours) | 577.83 | 333.45 | 220.00 | 145.14 | 95.76 |
| StaticDP-Chain | 656.63 | 378.92 | 250.00 | 164.93 | 108.81 |
| zkDP-Chain | 709.16 | 409.24 | 270.00 | 178.13 | 117.52 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Feier, W.; Rongzuo, G. Research on a Blockchain Adaptive Differential Privacy Mechanism for Medical Data Protection. Future Internet 2025, 17, 539. https://doi.org/10.3390/fi17120539
