Abstract

Blockchain technology, originally designed as a secure and immutable ledger, has expanded its applications across various domains. However, its scalability remains a fundamental bottleneck, limiting throughput, specifically Transactions Per Second (TPS) and increasing confirmation latency. Among the many proposed solutions, sharding has emerged as a promising Layer 1 approach by partitioning blockchain networks into smaller, parallelized components, significantly enhancing processing efficiency while maintaining decentralization and security. In this paper, we have conducted a systematic literature review, resulting in a comprehensive review of sharding. We provide a detailed comparative analysis of various sharding approaches and emerging AI-assisted sharding approaches, assessing their effectiveness in improving TPS and reducing latency. Notably, our review is the first to incorporate and examine the standardization efforts of the ITU-T and ETSI, with a particular focus on activities related to blockchain sharding. Integrating these standardization activities allows us to bridge the gap between academic research and practical standardization in blockchain sharding, thereby enhancing the relevance and applicability of our review. Additionally, we highlight the existing research gaps, discuss critical challenges such as security risks and inter-shard communication inefficiencies, and provide insightful future research directions. Our work serves as a foundational reference for researchers and practitioners aiming to optimize blockchain scalability through sharding, contributing to the development of more efficient, secure, and high-performance decentralized networks. Our comparative synthesis further highlights that while Bitcoin and Ethereum remain limited to 7–15 TPS with long confirmation delays, sharding-based systems such as Elastico and OmniLedger have reported significant throughput improvements, demonstrating sharding’s clear advantage over traditional Layer 1 enhancements. In contrast to other state-of-the-art scalability techniques such as block size modification, consensus optimization, and DAG-based architectures, sharding consistently achieves higher transaction throughput and lower latency, indicating its position as one of the most effective Layer 1 solutions for improving blockchain scalability.

1. Introduction

Blockchain is a series of blocks of information securely linked to each other that grows chronologically and has timestamps with dates or times. It can be divided into two types: public blockchain and consortium blockchain. A public blockchain is one where the network is accessible to everyone, while a consortium blockchain is restricted to a group of organizations within the network. But this technology was mentioned in 1991 by Stuart Haber and W. Scott Stornetta, who introduced a digital document system that had a time-stamping feature; thus, it is not a new idea [1]. It gained popularity with the rise of Bitcoin as a cryptocurrency and has since expanded to incorporate smart contracts, which are self-executing agreements where code automatically enforces predefined conditions.

When smart contracts are incorporated, they help execute code and digitize contracts that may be applied to smart city services like voting [2], bills [3], identity management [4,5,6,7], and governance [8,9], enhancing security through immutability, integrity, and auditability [10].

Despite what blockchain may offer, blockchain faces scalability challenges, a critical issue highlighted in literature across domains like Smart City [9,11,12,13], Software-defined Networking [14,15,16], Identity Management [10,17,18], and Metaverse [19]. Scalability concerns arise with the increase in the number of nodes and transactions in a blockchain network, impacting performance, which is generally measured as Transactions Per Second (TPS) and latency (TPS measures the number of transactions a blockchain can process in a second, while latency refers to the delay before a transaction is confirmed and recorded). In the mainstream blockchain technology, Bitcoin processes 7 TPS with a 10 min confirmation time [20], whereas Ethereum improves on this with 15 TPS and a 19 s confirmation time [21]. However, these figures are low when compared to a network platform like VISA, which can perform up to 24,000 TPS with instant confirmation [20]. In order to make blockchain a widely adopted technology, the blockchain performance needs to be on par with VISA’s by improving its scalability [22]. Compared with these baseline figures, early static sharding systems such as Elastico [23] demonstrated improvements beyond the performance of Bitcoin and Ethereum, and subsequent layered and dynamic sharding designs [24,25,26] continued this trend. These comparative results indicate that sharding achieves higher throughput and lower latency than consensus modifications or block size adjustments, reinforcing its position as a state-of-the-art Layer 1 scalability technique. Therefore, improving blockchain scalability has been an interesting research avenue for many researchers.

Addressing scalability involves examining blockchain’s three-layer architecture, with each layer offering potential scalability enhancement opportunities [20,22,27,28]. When blockchain processes are separated into different layers as depicted in Figure 1, it is easier to understand how blockchain architecture or structure interacts with different elements. In blockchain, Layer 0 underpins data transportation [29], Layer 1 constitutes the blockchain architecture itself (on-chain) [27], and Layer 2 operates externally to relieve the on-chain system (off-chain) [27]. Among all the layers, the room for performance improvement is fundamental in Layer 1 [30,31,32,33,34].

Figure 1.

Blockchain layers.

Various approaches exist in Layer 1 to enhance the scalability performance, such as introducing new consensus mechanisms (e.g., Proof-of-Authority (PoA) [35], Proof-of-Burn [35] and Bitcoin-NG [33]), manipulation of block size (e.g., [30,36,37,38]), applying Directed Acyclic Graph (DAG) (e.g., [39,40]) and adopting sharding approaches (e.g., [41,42,43,44]). Consensus allows for transaction validation by nodes, which is related to scalability via adjustments in block size. However, altering consensus mechanisms and block sizes might lack long-term viability and adaptability in a blockchain network [29]. Therefore, implementing DAG and sharding provide more versatile alternatives for handling blockchain transactions. DAG necessitates a comprehensive redesign of blockchain architecture, enabling concurrent block generation by allowing multiple vertices to connect to a previous one, thus increasing transaction capacity [45,46]. In contrast, sharding segments transactions into “shards” for parallel processing, reducing individual workload. This makes sharding a compelling Layer 1 approach [22,47], potentially better addressing the blockchain trilemma of security, scalability, and decentralization than other Layer 1-based approaches for scalability improvement in blockchain. Alongside these advancements, recent studies have explored the incorporation of Artificial Intelligence (AI) to assist in blockchain sharding processes, such as shard management, node coordination, and consensus configuration (e.g., [48,49]). The timeline infographic presented in Figure 2 shows the evolution of sharding technology starting from 2016. Standardization activities have also advanced in parallel. For example, Recommendation ITU-T F.751.19 [50] defines a framework and technical requirements for sharded distributed ledger technology, specifying key components and requirements for scalability and security.

Figure 2.

Timeline of blockchain sharding development from Static (2016) to Dynamic (2020) and Layered (2021) approaches, alongside ITU-T’s 2024 standardization framework and recent AI integration. Sources: Luu et al. [23]; Hong et al. [24]; Tao et al. [25]; ITU-T Recommendation F.751.19 [50]; Kokoris-Kogias et al. [51]; R. Chen et al. [52]; Tennakoon and Gramoli [53]; Liu et al. [54]; Song et al. [55].

The structure of the paper, illustrated in Figure 3, offers a high-level overview of the organization of this review. Section 2 reviews prior studies on blockchain scalability, ranging from broad examinations of scalability challenges to surveys focused specifically on sharding, and identifies gaps that frame the rationale for our contribution. Section 3 outlines the specific contributions of this work. Section 4 describes in detail the systematic literature review methodology adopted in this study. Section 5 provides an overview of sharding and includes a detailed explanation of various sharding techniques. Section 6 highlights the existing standardization efforts exist in blockchain sharding introduced by major standardization bodies. Section 7 details the key features of these sharding techniques. Section 8 is dedicated to discussing these features further, while Section 9 explores the lessons learned, the open research issues and future directions. The paper concludes with Section 10, summarizing the work.

Figure 3.

High-level structure of our review.

2. Related Reviews

The rapid growth of blockchain applications has motivated numerous review studies that examine scalability challenges and solutions across different layers of the blockchain stack. However, these studies vary in scope and depth, with some focusing broadly on general scalability mechanisms while others target specific techniques such as sharding. A structured review of these works is therefore essential to situate our contribution within the broader research landscape, identify what prior surveys have addressed, and highlight the research gaps that remain unresolved. In the following subsections, we first summarize general blockchain scalability reviews and then narrow the focus to sharding-specific surveys, before synthesizing the comparative gaps that provide the rationale for our contributions.

2.1. General Blockchain Scalability Reviews

Although numerous surveys or reviews have examined blockchain scalability across different architectural layers, none of these focus primarily on sharding techniques and their classifications. For example, the reviews on general blockchain scalability are delivered in [20,22,27,28,29]. Nasir et al. in [20] provide an extensive review of blockchain scalability, addressing enhancements across various layers and discussing issues like block size limitations and consensus mechanism constraints. Nevertheless, only few works on sharding are explored in [20].

The survey presented in [22] is oriented more on the classification of existing scalability solutions, evaluating the trade-offs between security and performance, particularly with a focus on sharding. Hafid et al. in [22] offer a comparative analysis of various protocols, emphasizing metrics such as TPS and latency. However, the discussion remains conceptual and lacks in-depth empirical validation in [22]. Zhou et al. in [27] focus on potential scalability solutions classified within the three blockchain layers: Layer 2 (off-chain approaches), Layer 1 (on-chain approaches including sharding), and Layer 0 (data propagation techniques). They offer a detailed comparison of these solutions, noting their effects on TPS and latency, while it does not reflect more recent developments.

The survey in [29] shifts focus to scalability challenges in time-sensitive applications, particularly examining Layer 1 approaches such as increasing block size and modifying consensus mechanisms. However, the work in [29] lacks the critical depth on technical and implementation challenges. Meanwhile, Sanka and Cheung in [28] introduce a five-layer conceptual model consisting of the platform, network, consensus, data, and application layers. Their survey in [28] provides a comprehensive analysis of the performance and scalability of existing Layer 1 blockchain approaches. Although one analysis provides a classification based on write, read, and storage performance, it lacks empirical validation for its claims. This reflects a broader gap in the literature, where surveys often address general blockchain scalability without offering a thorough classification or detailed discussion of specific sharding techniques.

2.2. Sharding-Focused Reviews

There exist few survey works focusing on sharding techniques introduced in the existing literature [47,56,57,58,59]. Li et al. in [47] review state-of-the-art sharding-based blockchains for both permissioned and permissionless networks. Their work covers Static, Dynamic, and Layered Sharding approaches, addressing key components, performance, and security aspects. It also outlines common attack surfaces and countermeasures. However, the analysis remains literature-based without experimental validation.

The surveys in [56,57] further explore sharding mechanisms and emphasize their importance for blockchain scalability. For instance, Yu et al. in [56] provide a systematic overview of major sharding mechanisms, highlighting both efficiency and security limitations. Their detailed comparison and evaluation offer valuable insights into the features, limitations, and theoretical TPS upper bounds of these mechanisms, serving as critical benchmarks for understanding the scalability potential of blockchain technology.

Huang et al. in [57] review various sharding approaches, identifying factors to enhance blockchain performance that provides a detailed review of sharding approaches, emphasizing their role in achieving horizontal scaling by dividing the blockchain into shards for increased TPS. They have thoroughly highlighted the specific challenges, features, and limitations of sharding through a comprehensive analysis. However, both surveys in [56,57] fall short in discussing practical implementation details and offer only minimal coverage of evolving trends.

Meanwhile, Hashim et al. in [59] review various consensus mechanisms for sharding, suggesting improvements in areas such as cross-shard communication and shard formation. The review meticulously examines the scalability challenges in blockchain technology and the approaches offered by database sharding to mitigate these issues. It includes an in-depth analysis of various sharding consensus mechanisms and provides valuable insights into current strategies. Furthermore, they outline significant unresolved challenges, such as effective shard formation, node assignment to shards, any possibilities of shard takeovers, and determination of the optimal number of shards in the blockchain network, yet their review in [59] does not critically assess more recent methodologies or technological advancements.

Then, the survey in [58] breaks down sharding into functional components and presents a modular architecture for sharding approaches. While the survey offers valuable insights into the underlying models and components by detailing key functions such as Node Selection, Epoch Randomness, Node Assignment, Intra-shard Consensus, Cross-shard Transaction Processing, Shard Reconfiguration, and Motivation Mechanisms and by emphasizing their integration into a cohesive approach, it does not provide a comparative evaluation of practical implementations or real-world approaches.

While recent studies have concentrated on blockchain sharding technologies, their analyses offer specific insights. For instance, Xiao et al. in [60] introduce a categorization of sharding methods into network, transaction, and state sharding, and discusses intra-slice and inter-slice consensus mechanisms. However, their analysis lacks comprehensive comparative metrics, benchmarks, and detailed application insights. Similarly, Zhang et al. in [61] analyze classical sharding mechanisms by thoroughly examining key components such as shard formation, reshuffle processes, intra-shard consensus, and cross-shard communication. While highlighting the advantages and limitations of existing scalability approaches, this study does not offer detailed empirical performance evaluations and practical guidance. In contrast, Yang et al. in [62] provides a comprehensive overview, integrating theoretical perspectives with experimental evaluations. Although their analysis captures current advancements and future trends across diverse blockchain ecosystems, it lacks a structured comparative framework for deeper analysis.

The survey in [63] offers a more structured and comprehensive review by systematically classifying and evaluating existing sharding approaches for both permissionless and permissioned blockchains. It introduces a robust evaluation framework based on scalability (latency, TPS, communication overhead), applicability (e.g., Trusted Execution Environment dependence, smart contract compatibility, universality, privacy), and reliability (randomness and fault tolerance). The study reviews existing sharding approaches and presents its findings through detailed classification tables and critical thematic analysis. Unlike prior works, it provides a unified comparative structure, though it remains literature-based and does not aggregate or analyze quantitative performance data from simulations or testbeds.

2.3. Comparative Summary and Research Gap

To summarize, none of the surveys described above specifically focuses on a comprehensive classification of sharding techniques, as one can observe from the comparison between our work and previous surveys presented in Table 1. While Liu et al. in [58] introduce some features of sharding, it focuses on modular architecture rather than classifying sharding methods comprehensively. Our review fills this gap by providing a structured classification of sharding techniques into Static, Dynamic, and Layered approaches, along with a detailed analysis of features and implications for blockchain scalability. In addition, it is the first to incorporate and examine standardization efforts, such as those from ITU-T (e.g., [50]), and ETSI (e.g., [64]), offering a broader perspective that has not been considered in previous surveys and reviews. Furthermore, our review also identifies AI-assisted sharding approaches and maps them to relevant features such as Consensus Selection and Epoch Randomness. Most of these approaches are discussed in context but are not included within our sharding classifications, as they often span multiple functional aspects and remain in the early stages of development.

Table 1.

Comparison of the contributions of our review with previous reviews (✓ represents a criterion is met). Here, BC and Stand. indicate Blockchain and Standardization, respectively.

While Table 1 summarizes the scope of prior surveys across performance, sharding types, AI-assisted approaches, and standardization activities, our review extends the comparison dimensions by integrating both technical features (e.g., Trust Establishment, Consensus Selection, Epoch Randomness, Cross-shard Algorithm, Cross-shard Capacity, DAG, and Availability Enhancement) and practical considerations such as standardization. This multidimensional approach allows for a more holistic evaluation framework compared to earlier works, capturing both the technical and practical dimensions of blockchain scalability and sharding techniques.

3. Contributions

The previous section reveals that, to date, there is no comprehensive review focusing specifically on sharding classifications within blockchain technologies. Thus, we offer a unique and thorough review of the research centered on advancements in sharding methods, an area that has not yet been reviewed in detail. Our review specifically addresses the gaps in existing studies, aiming to refine blockchain methodologies through an in-depth exploration of sharding. The main contributions of our review are outlined as follows:

- Our review introduces a structured classification that highlights three distinct sharding techniques, primarily concentrating on Layer 1. This classification provides a foundation for understanding how each sharding technique enhances blockchain scalability within the context of secure and decentralized shard-based blockchain systems.

- To provide readers with a broad overview of standardization efforts in blockchain sharding, we review the initiatives introduced by major standardization bodies (e.g., ITU-T, ISO, IEEE, and ETSI) and key industry consortia. We also highlight the operational guidelines and technical frameworks proposed by these organizations for sharding.

- We conduct a systematic literature review, through which we delve into various sharding techniques to assess their characteristics and limitations. This includes a thorough examination of key features such as Trust Establishment, Consensus Selection, Epoch Randomness, Cross-shard Algorithm, Cross-shard Capacity, DAG Block Structure, and Availability Enhancement, along with recent developments in AI-assisted sharding approaches, considered within these key feature areas to provide a comprehensive understanding of each technique and its underlying mechanisms.

- Through a comparative analysis, our review focuses specifically on the performance outcomes of these sharding techniques, with a particular emphasis on their ability to increase TPS and reduce transaction latency. This review aims to offer insights into the effectiveness and efficiency of the different sharding approaches in improving blockchain performance.

- Based on this review, we derive important insights, identify the major challenges and provide important future research directions.

4. Methodology

Our review addresses the following questions by concentrating on blockchain scalability concerns. As illustrated in the process in Figure 4, this focus allows for the identification of specific issues from past literature that form the foundation of this review.

Figure 4.

Workflow of the systematic mapping process for blockchain sharding review.

To ensure rigor, reproducibility, and comprehensiveness, we adopted a systematic literature review methodology, following established guidelines in software engineering and information systems research [65]. A systematic approach was chosen because blockchain sharding is a relatively new research area (first introduced in 2016 with Elastico [23]), and studies are dispersed across multiple venues. This methodology allows us to minimize bias, justify inclusion/exclusion decisions, and provide a transparent, replicable process for identifying the most relevant works.

- What are the fundamental obstacles to blockchain scalability, and what strategies have researchers developed to overcome them?

- What are the methods or techniques used in blockchain sharding along with its advantages and disadvantages?

- How do different sharding methods adapt to the existing challenges?

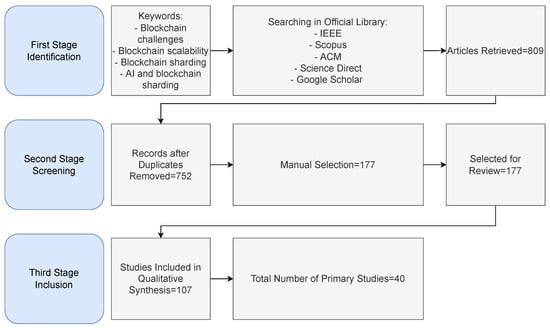

In the first phase of reviewing articles, sources were obtained from IEEE, Scopus, ACM, ScienceDirect, and Google Scholar. Keywords were used for the search to extract the relevant articles: “blockchain challenges”, “blockchain scalability”, “blockchain sharding” and “AI and blockchain sharding“. After conducting a consolidated search using all keywords, a total of 809 articles were identified. These include journal and conference papers. As blockchain sharding research only started in 2016, the number of related publications remains relatively small. Moreover, to further filter the articles, these two criteria need to be taken into account which are:

- Exclusion: Papers published before 2016, uncited works, duplicates, abstracts only, and non-English publications.

- Inclusion: English language articles published after 2016, with citations, and directly addressing the research questions.

Publications were chosen for this review based on their relevance to the research questions discussed earlier. Most pertinent publications are categorized based on the title, authors, year of publication, and proposed ideas. Followed by the manual filtering process where each of the chosen publications underwent a detailed analysis.

In the second phase of our methodology, 752 publications remained after duplicate removal. From these, 177 distinct papers were selected for initial review. These publications focused on the defined keywords, including blockchain scalability, sharding, and challenges, including the application of AI in blockchain sharding, as well as broader topics like cryptocurrency and smart contracts.

The third phase involved a refined relevance assessment based on predefined inclusion criteria, which focused on methodological depth, technical contribution, applicability to sharding-based scalability, and novelty. This process resulted in the selection of 107 papers that were deemed thematically and methodologically relevant. These comprised surveys, review articles, conceptual frameworks, system models, and foundational theoretical studies.

From the 107 papers, a final set of 40 primary studies was chosen for in-depth analysis. The selection was guided by factors such as citation impact, methodological rigor, system implementation clarity, and representativeness of different sharding techniques. This final set consisted of 6 survey articles, 5 review papers, 2 standardization papers, 8 papers on AI-assisted sharding, and 19 papers describing sharding-based blockchain projects.

To strengthen the reliability and reproducibility of our synthesis, we applied a consistent set of evaluation dimensions across all selected studies, focusing primarily on throughput (TPS), latency, and cross-shard efficiency. Although the underlying experiments varied, for example AWS EC2 simulations in Kronos [66], large-scale node experiments in DL-Chain [67], and protocol-specific testbeds in DYNASHARD [68], our classification framework (Figure 3) ensured that results were interpreted within these shared benchmarks. This uniform lens enhances comparability across heterogeneous systems. Nevertheless, differences in simulation environments, parameter choices, and node configurations can introduce variability that may limit strict reproducibility across versions of framework components. Highlighting these divergences is essential to provide a transparent assessment of robustness and to caution against overgeneralization of performance claims.

5. Overview of Sharding and Its Techniques

In this section, we provide an overview of sharding in blockchain technology and outline its three primary techniques: Static, Dynamic, and Layered Sharding. Each technique offers distinct approaches to partitioning blockchain data, which is important for enhancing the scalability and efficiency of transaction processing across different blockchain network conditions.

5.1. Sharding Overview

The concept of sharding, originally derived from database management, involves dividing a larger database into numerous smaller datasets across various nodes [56]. In the context of blockchain, sharding was first introduced in [23], subsequently leading to the development of various sharding approaches (e.g., [24,51,52]).

A blockchain network is divided into multiple shards (say, n number of shards). Each shard consists of a number of nodes (say, m). This defines the shard size (i.e., the number of nodes within each shard determines the size of a shard). Then, the total number of nodes in the blockchain network is . Figure 5 illustrates the concept of sharding in a blockchain network. Each shard maintains its own independent blockchain, processing its own sequence of blocks (e.g., Block 1 to Block x of Shard 1 in Figure 5) and transactions without any interactions with other shards (i.e., transactions are processed in parallel within their respective shards unless a cross-shard communication mechanism is implemented).

Figure 5.

Sharding architecture illustrates a sharding approach with n shards (Sn specifically denotes the nth shard), each containing m nodes. It demonstrates parallel transaction processing, where each shard independently processes transactions within its own sequence of blocks — S1 Block 1 (Shard 1), S2 Block 1 (Shard 2), and Sn Block 1 (Shard n). Block x and Transaction x represent the respective block number and transaction number within each shard.

When a transaction involves multiple shards communicating with each other, a cross-shard transaction occurs. Figure 6 illustrates an example process of cross-shard transactions involving shard 1 and shard 2, where transaction 2 in shard 1 requires communication with transaction 4 in shard 2 to complete the transaction process. Cross-shard transactions are more complex because they cause higher latency due to communication between shards, add coordination overhead to manage transaction steps across shards, and create security challenges in maintaining consistent and secure data across multiple shards.

Figure 6.

Cross-shard transaction involves interaction between different shards, such as a smart contract transaction from Node 1 in Shard 1 to Node 2 in Shard 2 as shown here. If both accounts are in the same shard, it is a normal sharding transaction. Else, Shard 1 sends transaction 2 to Shard 2, and Shard 2 verifies and records it as transaction 4 to complete.

The discussion on sharding raises questions about the number of shards, shard size, and their optimal values, which appear to be inconsistent across different usage contexts. This has led to the evolution of sharding techniques, including Static (e.g., Elastico [23]), Dynamic (e.g., User Distribution [69]), and Layered Sharding (e.g., Pyramid [24]).

5.2. Techniques of Sharding

This subsection provides a brief overview of each sharding technique.

5.2.1. Static Sharding

Static Sharding is a technique that determines the shard size and number of shards based on the number of nodes that will be allocated equally across different shards. Various predetermined numbers of shards and shard sizes have been tested to determine the optimal configuration that maximize transaction processing speed and minimize latency. However, conclusions about the optimal number of shards and shard sizes to enhance sharding effectiveness are still inconclusive. Static Sharding approaches in public blockchains include Elastico [23], OmniLedger [51], RapidChain [70], Monoxide [42], Chainspace [43], DL-Chain [67], and Kronos [66]. Notably, Chainspace [43] integrates smart contracts, while Meepo [71] represents a consortium blockchain within Static Sharding.

5.2.2. Dynamic Sharding

Compared to Static Sharding, Dynamic Sharding facilitates dynamic resource sharing to cope with demand. That may allocate the nodes across different shards and dynamically change the shard sizes and number of shards. Due to the weakness of cross-shard communication, such as the one discussed in [72], which could exhaust the network and reduce the scalability benefits of sharding, there is a high cross-shard communication overhead. An alternative Dynamic Sharding approach is investigated, as mentioned in the sharding overview section (see Section 5.1). Dynamic Sharding is one of the alternatives to increase the performance (TPS) of blockchain network that supports smart contracts as it may help reduce the overhead introduced by cross-shard communication overhead as discussed in [25]. There are eight different existing research efforts in Dynamic Sharding to the best of our knowledge, particularly in public blockchain: On Sharding Open Blockchains [25], User Distribution [69], Dynamic Blockchain Sharding [53], Effective Sharding Consensus Mechanism [52], DYNASHARD [68], AEROChain [55], SkyChain [73] and one consortium blockchain: Dynamic Sharding Protocol Design [72].

5.2.3. Layered Sharding

Since all the approaches in sharding employ complete sharding, this significantly adds additional overhead in ensuring cross-shard transaction atomicity and consistency, which in turn negatively affects the sharding performance [24]. To overcome this challenge, Layered Sharding is introduced. In this sharding technique, when a shard(s) is occupied with transactions, any subsequent transactions of the shard are assigned to a shard having sufficient processing capability, thereby avoiding the communications overhead that cross-sharding transaction imposes. Three notable works have investigated the Layered Sharding technique: Pyramid [24], OverlapShard [54] and SPRING [74]. Unlike complete sharding, in a Layered Sharding blockchain, the shards can validate and execute cross-shard transactions directly and efficiently. Though these sharding techniques leverage blockchain employing cross-shard transactions, there is a big room to further improve the blockchain performance by further improving cross-shard techniques.

6. Standardization Efforts in Blockchain Sharding

The evolution of blockchain technology has led to increased efforts by international and industry standards organizations to address scalability challenges, particularly through the standardization of sharding mechanisms. Organizations including ITU-T, ETSI, ISO, IEEE, and several national bodies have each addressed these challenges from distinct perspectives. While ISO, IEEE, and W3C primarily reference sharding in broad architectural frameworks or performance metrics, it is ITU-T and ETSI that have produced the most concrete and operational standards for sharding, with ETSI also pioneering guidelines on AI-assisted sharding.

ITU-T Recommendation F.751.19 [50] specifies a structured framework and technical requirements for sharded blockchains, delineating an “on-chain sharding” layer composed of five core functional components: shard management, sharding strategy management, intra-shard processing, cross-shard distribution, and cross-shard coordination. Each component is defined with mandatory capabilities, and for instance, shard management is required to oversee the lifecycle of shards and maintain data integrity during auto-scaling and migration, while sharding strategy management is responsible for mapping transactions to shards to facilitate near-linear scalability. Nevertheless, the recommendation in [50] primarily describes the objectives to be achieved and leaves substantial implementation challenges to system developers. These challenges include the design of flexible strategies and the management of distributed deadlocks. Furthermore, F.751.19 [50] mandates features to address critical risks, such as random validator allocation and dynamic reallocation, but does not prescribe the technical specifics necessary to ensure robust implementation. The voluntary and high-level nature of the recommendation in [50] may therefore result in inconsistent or suboptimal deployments, which highlights the ongoing need for more prescriptive standards and deeper technical analysis as sharding adoption matures.

In parallel, ETSI through its Industry Specification Group on Permissioned Distributed Ledger, has introduced explicit operational guidance on AI-driven sharding. ETSI GR PDL-032 V1.1.1 (2025-04) in [64], “Artificial Intelligence for PDL,” recommends the use of predictive models such as Long Short-Term Memory neural networks to forecast transaction loads and dynamically adjust shard boundaries. The report in [64] documents reductions of up to 23% in cross-shard interactions compared to Static Sharding. The specification further details adaptive shard allocation, intelligent cross-shard transaction management, and AI-based optimization of key DLT functions including security, consensus, and resource allocation. While this group report marks a significant advance in standardizing AI integration with blockchain sharding, its guidelines remain advisory, highlighting the ongoing need for more prescriptive, implementation-oriented standards to support broader industry adoption.

Other standardization organizations, such as ISO, particularly ISO/TR 24332:2025 [75], the IEEE, which develops blockchain and distributed ledger technology standards through the IEEE Standards Association [76], and the W3C with its Decentralized Identifier Resolution (DID Resolution) v0.3 [77], primarily address blockchain scalability and interoperability through high-level reference documents. For example, ISO/TR 24332:2025 [75] mentions sharding within its frameworks but does not define specific operational models. IEEE provides a broad set of standards covering interoperability, the Internet of Things (IoT), and cryptocurrencies, but does not prescribe sharding-specific techniques. Meanwhile, W3C focuses on ensuring interoperability for decentralized identity solutions, leaving the technical implementation details to individual platform developers.

In summary, while the landscape of blockchain standardization is broad, only ITU-T in [50] and ETSI [64] have advanced detailed, operational frameworks and guidelines for sharding, with ETSI further pioneering AI-based sharding management. Within the ITU-T framework, Recommendation F.75-1.19 in [50] provides a focused set of requirements for blockchain sharding, defining core functional components. While ITU-T’s Recommendation F.751.19 [50] and ETSI GR PDL-032 V1.1.1 (2025-04) [64] are valuable, their voluntary status means they provide limited technical depth and lack prescriptive implementation guidance. This highlights the need for more rigorous technical specifications, comprehensive analysis of implementation challenges and trade-offs, and a balanced assessment of risks and benefits as these technologies mature and converge. Meanwhile, the broader standards community continues to provide essential but more generic frameworks that support ongoing innovation and convergence in this rapidly evolving field.

7. Features in Existing Sharding Techniques

There has been a large body of research devoted in introducing efficient sharding techniques to date. Each sharding technique has its respective features to enhance blockchain performance. Beyond classifying sharding into Static, Dynamic, and Layered, our review improves the comparison by linking these techniques to seven key features, giving a clearer and more comprehensive framework for evaluation. This section aims at providing an extensive review of the works contributing to improving the different features of the sharding techniques along with the use of AI in relevant features (e.g., [66]). Although features such as Trust Establishment and Consensus Selection are foundational to blockchain technology in general, they require substantial re-engineering in sharded architectures to address decentralized shard formation, shard-specific security, and cross-shard transactional consistency.

The relationship between these features and key functional phases in sharded blockchain systems is illustrated in Figure 7, further reinforcing their importance as critical design pillars. Specifically, Trust Establishment relates to the Initialization phase, ensuring secure and decentralized formation of nodes into shards. Consensus Selection and DAG Block Structures contribute to Consensus and Block Formation, addressing intra-shard and cross-shard transaction ordering. Epoch Randomness underpins Security Enhancement by introducing unpredictability to mitigate shard takeover attacks. Cross-shard Algorithms and Cross-shard Capacity are integral to Cross-Shard Coordination, facilitating efficient and scalable inter-shard transaction handling. Lastly, Availability Enhancement supports Resiliency, ensuring system robustness even under partial shard failures. Although different systems may prioritize certain features differently, these aspects together form the core foundations that support the scalability, security, and resilience of sharded blockchain ecosystems.

Figure 7.

Features related to blockchain sharding architectures and their responsibilities.

7.1. Trust Establishment

Trust Establishment is the process of verifying a node’s or user’s identity to enable secure transactions within a blockchain. Within Static Sharding, the work in [23] introduces unique features, such as the Identity Establishment and Overlay Setup for Committees. Here, each processor autonomously generates an identity composed of a Proof-of-Work (PoW), IP address, and public key. During the Committee Formation phase, processors verify each other’s identities by solving a PoW problem with publicly verifiable solutions. Sharding typically employs a specialized method for identity verification, which can enhance overall security. However, the reliance on PoW introduces significant scalability challenges, as its computational demands become increasingly burdensome with network expansion.

Significant differences are observed in how various blockchain architectures implement Trust Establishment. For instance, Elastico [23] explores a two-stage Trust Establishment process, potentially enhancing security through multiple validations but complicating the consensus process and potentially slowing down transactions. Conversely, OmniLedger [51] and RapidChain [70] emphasize a single primary stage to enhance speed and simplicity, though this may make them more vulnerable to coordinated attacks. These differences underscore the crucial trade-off between security and efficiency in blockchain design, with each approach offering its own strengths and limitations.

Approaches such as Monoxide [42] and Chainspace [43] do not introduce novel methods for establishing trust, but instead integrate trust directly into their consensus mechanisms. Although this streamlined approach could enhance process efficiency, it also exposes to risks if the consensus mechanism itself is compromised. A notable drawback is that the absence of separate Trust Establishment feature could hinder these technologies’ ability to adapt to emergent security threats.

An interesting case is Meepo [71], a consortium blockchain, where Trust Establishment is inherently assured due to the closed nature of its approach, eliminating the need for new Trust Establishment methods. This built-in trust simplifies management and keeps transactions private within the group, but it also restricts the network’s openness and may not perform well in larger, more decentralized environments, which is a significant limitation in its design.

Liu et al. in [66] propose Kronos, which establishes trust through a secure shard configuration mechanism that may utilize PoW, Proof-of-Stake (PoS), or public randomness to assign nodes to shards and resist Sybil attacks at the entry point. This process ensures that nodes cannot cheaply fabricate multiple identities during initialization or periodic reconfiguration. Simulations across thousands of nodes on AWS EC2 validate the effectiveness of Kronos under different network models (synchronous, partially synchronous, and asynchronous). However, trust is statically assigned: once a node is verified, no ongoing trust reassessment is performed. Thus, if a node initially behaves correctly but later colludes or becomes compromised, the work in [66] has no built-in mechanisms to detect or expel it. The security model also critically depends on the honest majority assumption within shards, which may not hold under economically incentivized attacks. No behavioral monitoring or epochal re-verification is embedded, posing long-term scalability risks for highly dynamic public deployments.

Lin et al. in [67] introduce DL-Chain, a sharding system that establishes trust and node assignment at the start of each epoch using epoch randomness generated by Verifiable Random Functions (VRFs) combined with Verifiable Delay Functions (VDFs). Each node is randomly assigned to a Proposer Shard and a Finalizer Committee based on this process, ensuring fair and unpredictable allocation. This approach prevents adversaries from concentrating malicious nodes within a single shard, thereby enhancing security while avoiding the computational overhead of PoW-based approaches. Experimental results with up to 2550 nodes demonstrate that the work in [67] random assignment strategy maintains negligible failure probability and resists targeted shard capture. However, DL-Chain treats Trust Establishment as a static process within each epoch. There are no mechanisms for dynamic reassignment or behavior-based penalties during an epoch. As a result, malicious nodes admitted at epoch formation persist throughout the epoch without recertification or removal. While this work assumes the underlying randomness process is bias-resistant and publicly verifiable, it does not explicitly discuss the risks of potential collusion in VRF/VDF generation.

In Dynamic Sharding, Trust Establishment remains an essential process. However, the works in [52,53] do not introduce novel approaches. For example, the work in [69] employs the Louvain Algorithm to facilitate Trust Establishment, yet it uses a well-established method rather than presenting an innovative approach. However, this reliance on conventional methods may limit the potential for significant improvements in security and system flexibility.

Liu et al. [68] propose DYNASHARD, which utilizes a secure random process for managing committee selection to promote fairness and reduce the risk of collusion. While the protocol ensures committees are selected via internal randomness, it does not explicitly specify periodic or epoch-based reshuffling of committees, nor does it present simulation results quantifying committee diversity or capture rates over time. The Trust Establishment mechanism in DYNASHARD is solely randomness-driven, without incorporating historical node behavior or penalization for misbehavior in the committee selection process. Furthermore, the protocol does not describe any external or decentralized randomness beacon for committee selection, nor does it detail how the randomness source is made publicly verifiable. This lack of external verifiability could limit transparency and potentially undermine trust in adversarial scenarios.

AEROChain [55] establishes trust implicitly through its dual-shard architecture, where each node belongs to a physical shard for transaction validation and to a logical shard for account migration coordination. Both layers use Practical Byzantine Fault Tolerance (PBFT) for consensus, embedding trust into repeated voting among nodes. However, the approach lacks mechanisms for behavioral scoring or identity revalidation across epochs. Trust is static once nodes are assigned, which makes the work in [55] vulnerable to long-term adversarial drift. The scope in [55] focuses on scalable and balanced account migration, not direct trust modeling. No trust-specific simulations are presented, and findings center on the Deep Reinforcement Learning (DRL) based migration model.

SkyChain [73] proposes periodic re-sharding to dynamically reassign nodes across committees, aiming to balance performance and security in a dynamic blockchain environment. Their method leverages an adaptive ledger protocol and a DRL-based sharding mechanism to adjust shard configurations based on observed network state. However, identity establishment is handled through Sybil-resistant PoW puzzles, with no additional cryptographic attestation or behavioral scoring mechanisms in place. The re-sharding process is explicitly adaptive, driven by the DRL policy rather than fixed or deterministic intervals. The primary focus of SkyChain is on scalable dynamic re-sharding, with contributions centered around optimizing performance and security trade-offs. Findings are reported from simulation-based evaluations, focusing on TPS, latency, and safety metrics, without any explicit trust or reputation evaluation.

Similarly, in Layered Sharding, while trust is inherently addressed in every approach, one work in [24] adopts an Assignment System for Trust Establishment, whereas another work in [54] does not propose any specific new method. But inconsistency in addressing trust challenges underscores the need for more innovative solutions that can better meet the evolving security demands of blockchain networks.

SPRING [74] implements a Trust Establishment mechanism where nodes undergo a one-time PoW process at registration. The last bits of the PoW solution directly determine the initial shard assignment. The protocol employs a reconfiguration phase in which consensus nodes are regularly shuffled among shards for security, using VRF to generate unpredictable, bias-resistant randomness for node redistribution and leader selection. This ongoing reconfiguration mechanism is explicitly designed to prevent persistent collusion or the formation of static shard compositions. While the initial entry barrier is minimal compared to protocols with ongoing reputation or slashing, the security model relies on periodic node rotation and PBFT consensus within shards, not on dynamic behavioral trust assessment. Therefore, the risk of persistent malicious behavior is mitigated by the enforced protocol-level reshuffling of nodes, maintaining the integrity and unpredictability of shard compositions over time.

TBDD [78] introduces a promising response to these limitations through a trust-based and DRL-driven framework. It integrates multi-layered trust evaluation using historical, direct, and indirect feedback to generate local and global trust scores. These scores are used by a decentralized TBDD Committee (TC) to guide re-sharding through DRL, classifying nodes into risk levels and reallocating them to enhance security and reduce cross-shard transactions. Empirical results demonstrate up to 13% improvements in TPS and significantly better risk distribution under adversarial loads. However, maintaining accurate trust scores requires frequent updates and cross-node communication, posing challenges in high-churn environments like IoT. Additionally, aggregated trust metrics may lag behind behavioral shifts, and the committee structure introduces a potential point of failure if compromised. Bootstrapping new or low-activity nodes remains a vulnerability, and the centralized coordination role of the TC raises concerns about long-term decentralization.

Trust Establishment is a fundamental component of Static, Dynamic, and Layered Sharding. Static Sharding, leveraging innovative Trust Establishment methods, often achieves demonstrably superior security. In contrast, Dynamic and Layered Sharding, reliant on conventional techniques, face inherent limitations in security enhancement and adaptive system reconfiguration. This reliance not only impedes immediate progress but also creates a potential bottleneck for future advancements in resilience and agile scaling. While recent AI-driven frameworks such as TBDD introduce layered trust scoring and DRL-based re-sharding, they depend on centralized committee coordination and continuous cross-node communication, which may limit scalability in high-churn environments. Several approaches, including SPRING [74] and DL-Chain [67], treat trust as a static entry event without behavioral reassessment or epochal refresh, which increases vulnerability to long-term collusion. Furthermore, trust metrics in many models are not verifiably auditable or resistant to subtle manipulation, especially under adversarial conditions.

7.2. Consensus Selection

The selection of consensus mechanisms is critical for ensuring the integrity and efficiency of the networks. Various approaches have been developed to enhance the consensus process [79,80] across different blockchain architectures, each with its unique advantages and limitations.

Within Static Sharding, Elastico [23] demonstrates a model that leverages PoW alongside PBFT to establish secure consensus within committees. This hybrid consensus mechanism allows for a Final Consensus Broadcast after achieving agreement within a committee using a conventional Byzantine Agreement protocol. The final committee aggregates the results from all committees and uses a Byzantine consensus process to finalize the outcome then broadcast it to the entire network. The merging of PoW and PBFT is unique, but handling many committees and combining outcomes may slow down the process and increase overhead, especially as the network scales.

OmniLedger [51] employs ByzcoinX for its consensus mechanism. ByzcoinX extends the classical Byzantine consensus by forming a communication tree, where validators are organized hierarchically to reduce messaging overhead. For example, instead of every node broadcasting to all others, leaders in each subgroup aggregate votes and forward them upward, improving efficiency and reducing latency under high transaction loads. In OmniLedger [51], this enhances the traditional roles of PoW and PoS, which in this context do not directly contribute to transaction validation but rather to the representation of validators in the Identity Block Creation process. ByzcoinX addresses the need for more resilient communication within shards to manage transaction dependencies and improve block parallelization, even in scenarios where some validators fail. ByzcoinX enhances shard communication, but its reliance on validators for identifying block production could lead to inefficiencies or vulnerabilities if coordination fails.

RapidChain [70] offers an Intra-committee Consensus (PBFT) that leverages a unique gossiping protocol suitable for handling large blocks and achieving high TPS through block pipelining. This setup includes a two-tier validation process where a smaller group of validators processes transactions quickly, which are then re-verified by a larger, slower tier for enhanced security. Even though speed and security are increased by this two-tier method, delays may be introduced by the second validation phase.

Monoxide [42] proposes Asynchronous Consensus Zones to scale the block-chain network linearly without compromising on security or decentralization. Its consensus mechanism, Chu-Ko-Nu mining, also functions as a Trust Establishment tool by ensuring uniform mining power across zones and introducing Epoch Randomness. Chu-ko-nu mining, a novel proof-of-work scheme, is employed in Asynchronous Consensus Zones to enhance security. This allows miners to use a single PoW solution to create multiple blocks simultaneously, one per zone, ensuring that mining power is evenly distributed across all zones. As a result, attacking a single zone is as difficult as attacking the entire network. Asynchronous Consensus Zones of Monoxide [42] provide linear scalability, but the challenge of synchronizing randomization and coordinating consensus across multiple zones may lead to increased overhead and latency, particularly in larger networks.

Chainspace [43] utilizes the Sharded Byzantine Atomic Commit (S-BAC) protocol, a combination of Atomic Commit and Byzantine Agreement protocols, to ensure consistency across transactions that involve multiple shards. This method guarantees that a transaction must be unanimously approved by all shards it touches before it can be committed, thus maintaining transaction integrity. Although the S-BAC protocol maintains high integrity in cross-shard transactions, the need for unanimous clearance may introduce inefficiencies or delays, especially as the number of shards increases. In practice, S-BAC works like a two-phase commit extended to sharded environments. For instance, if a transaction spans Shard A and Shard B, both shards first enter a ‘prepare’ phase and lock the resources. Only if both confirm in the ‘commit’ phase is the transaction finalized; otherwise, both shards roll back. This ensures atomicity across shards, though at the cost of higher communication overhead.

Meepo [71], on the other hand, does not introduce a new consensus mechanism but rather employs a non-modified PoA, relying on the existing trust model inherent in its consortium blockchain framework. The blockchain’s flexibility and openness are restricted by Meepo’s use of PoA, which makes it less suitable for decentralized or permissionless networks, even though it simplifies the consensus process in a regulated setting.

Kronos [66] distinguishes between “happy” and “unhappy” paths in cross-shard transaction processing. In normal operations, transactions are processed or rejected with minimal overhead using standard intra-shard Byzantine Fault Tolerance (BFT), while the unhappy path invokes additional rollback mechanisms to ensure atomicity and shard consistency. AWS experiments demonstrate that Kronos achieves high TPS and low latency under various network synchrony and Byzantine fault conditions. The protocol’s transaction integrity and atomicity guarantees are established through formal security analysis presented in this work, rather than through empirical experiments. However, the rollback mechanism introduces significant communication overhead and increases finalization latency under persistent fault conditions. Moreover, the protocol assumes low rollback frequency, and frequent rollbacks in highly adversarial environments could lead to severe TPS degradation. Kronos also does not dynamically adapt committee memberships or quorum thresholds based on real-time fault rates, limiting flexibility.

DL-Chain [67] modularizes consensus by dividing transaction proposal and finalization into two distinct layers. Proposer shards are responsible for assembling and processing transactions, while finalizer committees independently validate and finalize these transactions. This architectural separation enhances parallelism and fault isolation, resulting in significant TPS improvements as demonstrated in experimental results. Node assignment to proposer shards and finalizer committees is performed at the beginning of each epoch using a randomness process, and these assignments remain fixed throughout the epoch. Consequently, DL-Chain does not support dynamic reassignment or migration of workloads during an epoch. In scenarios with highly variable or uneven transaction loads, some proposer shards may become bottlenecks due to the absence of intra-epoch load balancing mechanisms. If a finalizer committee leader becomes non-responsive due to validator churn or failure, DL-Chain employs a view change protocol within the Fast Byzantine Fault Tolerance (FBFT) consensus algorithm to replace the faulty leader and restore liveness within the committee.

In Dynamic sharding, DYNASHARD [68] adopts a hybrid consensus architecture, utilizing BFT for intra-shard transaction validation and a combination of Multiparty Computation (MPC) and threshold signature schemes for global coordination. This design ensures that transaction commitments, both within and across shards, are achieved with strong atomicity and security guarantees. The protocol’s evaluation demonstrates resilience to adversarial behaviors, including collusion and double-spending, through comprehensive security analysis and simulation-based validation. Nonetheless, the use of threshold signature aggregation and MPC introduces non-trivial computational and bandwidth overheads. These cryptographic protocols require each participant to compute and exchange partial signatures or intermediate values in multiple rounds, significantly increasing the computational workload and network traffic compared to traditional consensus mechanisms. As transaction volume and committee size scale, these overheads may impact TPS and latency, making DYNASHARD less suitable for high-frequency or latency-sensitive applications (e.g., [51]). Additionally, DYNASHARD does not incorporate early commitment or fast-finality optimizations, leaving it potentially susceptible to synchronization delays during periods of peak system concurrency.

AEROChain [55] uses PBFT at two levels: physical shards (validate local transactions) while the logical shard (handles migration proposals during the reconfiguration phase). The contribution lies in separating state migration from transaction consensus. This layered PBFT structure provides modularity but introduces coordination overhead and potential bottlenecks during high migration volumes. The approach was validated as part of the full AEROChain simulation, but no consensus-specific benchmarks were isolated. The absence of fast-path execution or rollback handling limits resilience to stalled consensus rounds.

SkyChain [73] uses standard BFT protocols for intra-shard consensus without modifications or enhancements. Its novelty lies in DRL-powered re-sharding, not consensus innovation. Their method assumes fewer than one-third faulty nodes but lacks rollback, fast-track, or speculative commit techniques. No simulations were presented to test consensus scalability. Thus, while structurally sound, its consensus mechanism is basic, and no fallback mechanisms are discussed.

While in Layered Sharding, SPRING [74] employs PBFT as the intra-shard consensus protocol for both A-Shard and T-Shard. The protocol operates under the partial synchrony assumption common to BFT systems. SPRING’s DRL agent dynamically assigns new addresses to shards in order to balance transaction load and minimize cross-shard transactions. In parallel, the protocol includes a periodic reconfiguration phase in which consensus nodes are reshuffled across shards to maintain security and prevent persistent collusion. While workload imbalance among shards may still arise due to the power-law distribution of transaction activity, SPRING’s design seeks to balance TPS and fairness without requiring dynamic committee resizing or more advanced consensus adaptations. Experimental results show that SPRING reduces cross-shard transaction ratio and improves TPS, but all evaluated consensus groups are periodically rotated and operate under standard PBFT assumptions.

In Dynamic (e.g., [25,52,53]) and Layered Sharding (e.g., [24,54]), a key challenge is designing consensus protocols that efficiently coordinate how blocks are generated and verified across shards. These approaches typically rely on existing, non-modified consensus mechanisms such as PoW, PBFT, or byzantine-based techniques, which may not introduce new consensus mechanisms but are essential for the proper functioning of these sharded techniques. As the demand for more effective and scalable approaches grows, reliance on pre-existing consensus processes may limit the flexibility and scalability of Dynamic and Layered Sharding systems.

Recent efforts have explored the integration of AI into sharding and consensus mechanisms to enhance blockchain scalability, fairness, and energy efficiency. El Mezouari and Omary in [81] present a hybrid consensus framework that combines PoS for block creation with AI-enhanced sharding for transaction validation. In their approach, decision tree algorithms dynamically allocate tasks to shards based on network load and historical node behavior. Entropy measures and Haversine distance metrics are used to optimize shard load distribution and minimize cross-shard communication overhead. El Mezouari and Omary in [81] argue that this model mitigates the centralization risks of pure PoS while improving decentralization, TPS, and energy efficiency compared to traditional PoW models. However, incorporating AI-driven shard management introduces challenges related to algorithmic transparency, susceptibility to model drift, and dependency on high-quality, unbiased training datasets. Additionally, the operational complexity of coordinating between PoS and intelligent sharding logic could pose risks to system stability if not rigorously optimized.

Similarly, Chen et al. in [82] propose Proof-of-Artificial Intelligence (PoAI) as an alternative to traditional PoW and PoS consensus mechanisms. In PoAI, nodes are classified into “super nodes” and “random nodes” using Convolutional Neural Networks (CNNs) trained on metrics such as transaction volume, network reliability, and security posture. Validators are dynamically selected based on capability scores rather than hash power or stake, aiming to reduce resource consumption and promote fairer node rotation. While PoAI offers improvements in efficiency and energy conservation, it introduces concerns about model explainability, fairness, and vulnerability to adversarial attacks. The criteria defining “super nodes” could inadvertently concentrate power among high-resource participants, undermining decentralization goals, particularly if CNN biases are not properly mitigated.

To address the limitations observed in conventional sharding and consensus mechanism designs, emerging AI-augmented mechanisms offer promising alternatives. Consensus mechanisms like PoAI [82] leverage machine learning models to intelligently assign validator roles, thereby improving transaction confirmation speed and reducing energy consumption. Similarly, hybrid approaches that integrate PoS with AI-based shard reconfiguration [81] provide dynamic adaptability to evolving network conditions. However, despite demonstrating measurable performance improvements, these AI-driven designs introduce new challenges related to transparency, fairness, and adversarial resilience. Future work must critically address these issues, including enhancing model interpretability, safeguarding against manipulative behaviors, and developing lightweight validation protocols, before widespread deployment in permissionless blockchain environments can be realized.

Li et al. in [83] focuses on improving TPS in sharded blockchain systems through optimization of consensus-layer parameters, such as block size, shard count, and time interval. It introduces Model-Based Policy Optimization for Blockchain Sharding (MBPOBS), a Reinforcement Learning (RL) framework that uses Gaussian Process Regression to model blockchain performance and guides parameter optimization via the Cross-Entropy Method. The DRL component is used to predict performance outcomes and select optimal configuration policies in a sample-efficient manner. Simulation results show that MBPOBS yields substantial TPS improvements (1.1× to 1.26×) compared to model-free RL baselines (Batch Deep Q-learning and Deep Q-Network with Successor Representation). The primary strength of this work lies in its statistically grounded, sample-efficient method for optimizing consensus-related parameters. However, the study is limited to consensus performance and does not incorporate aspects such as trust, shard reliability, or cross-shard fault tolerance. Additionally, all evaluation is performed in a simulated environment, though the model’s robustness is tested under varying rates of malicious nodes (adversarial settings).

In conclusion, while these various consensus mechanisms provide effective approaches for managing blockchain transactions across different systems, they also present challenges related to scalability, reliability, and complex implementation. A thorough evaluation of each approach is essential to determine the best strategy for maintaining system performance and security. The scalability and adaptability of blockchain networks, especially in large or dynamic environments, are at risk due to bottlenecks or inefficiencies, whether from traditional consensus mechanisms or newer, more complex methods that could cause new complications. Although AI-based models such as PoAI, hybrid PoS-AI designs, and model-based optimization frameworks have shown measurable improvements in simulation settings, most have not been validated under real-world or adversarial conditions. In addition, many of these approaches do not address Trust Establishment, cross-shard fault tolerance, or model explainability, limiting their practical assessment for deployment in decentralized environments.

7.3. Epoch Randomness

The integration of Epoch Randomness within various sharding techniques significantly enhances the security and fairness by introducing unpredictability in node and shard assignments. This feature is crucial for preventing manipulation and ensuring equitable distribution of network load and responsibilities. Epoch Randomness enhances security, but its application across different sharding techniques presents challenges in balancing operational efficiency with increased complexity.

In Static Sharding, Epoch Randomness is implemented through distinct methods in several approaches. Elastico [23] employs a Distributed Commit-and-XOR method, which creates a biased yet constrained set of random values that directly influence the PoW challenges in the subsequent epoch. This method ensures that randomness plays a role in the mining process, which helps strengthen security by making it harder for attackers to predict or manipulate the mining outcomes. Although the Commit-and-XOR method improves security, its complexity could result in excessive overhead, which may impact network efficiency as the network scales. OmniLedger [51] uses a combination of VRF [84,85] and RandHound [26], which ensures the randomness is both unbiased and unpredictable. RandHound’s approach, which involves dividing servers into smaller groups and using a commit-then-reveal protocol, ensures that the randomness includes contributions from at least one honest participant, thus maintaining integrity. The reliance on multiple server groups and protocols may slow down the process, especially in larger networks, potentially impacting overall performance. RapidChain [70] opts for a Distributed Random Generation protocol optimized by a brief reconfiguration protocol based on the Cuckoo Rule [86], allowing for rapid and unbiased randomness generation essential for its operational efficiency. Although RapidChain’s method speeds up transaction processes, it may not be able to expand when more complex random choices are required. As the need for sophisticated approaches grows, scalability issues may arise within blockchain networks.

Kronos [66] generates epoch randomness for shard assignment using public randomness, which can be derived from PoW, PoS, or other secure sources. This process provides non-predictable, deterministic validator assignment. The protocol is designed to ensure consistent shard diversity and resilience against validator collusion through its random assignment process, assuming the underlying randomness is secure. However, if the randomness generation relies on PoW or PoS outputs rather than a publicly verifiable randomness beacon, it may be vulnerable if those outputs become skewed or dominated by a colluding miner or staker group.

DL-Chain [67] generates randomness at each epoch using outputs VRFs, which provide unpredictability and allow local proof verification. The work in [67] claims that the assignment process based on this randomness is bias-resistant, attributing this property to the use of VRF and VDF technologies as established in prior work. However, DL-Chain does not include simulation studies or experiments specifically evaluating the bias-resistance or security of its own randomness mechanism. Additionally, the protocol does not incorporate decentralized randomness aggregation or zero-knowledge proofs for randomness generation. This absence could, in principle, allow adversaries who control VRF private keys to subtly bias role allocations, a limitation that this work does not explicitly address.

Dynamic Sharding also explores Epoch Randomness but with varying emphases and integration depths. For example, the work in [52] significantly focuses on incorporating Epoch Randomness within its cross-shard transaction process to enhance security. Other Dynamic Sharding approaches, such as those introduced in [25,53], recognize the importance of randomness but do not delve as deeply into its systematic integration as seen in Static Sharding. The integration of certain mechanism in Dynamic Sharding, such as those proposed in [69], lacks proper organization, making them potentially vulnerable to manipulation or attacks. This risk is heightened in complex networks with high transaction volumes, and as the system scales, the threat becomes more evident.

DYNASHARD [68] selects committees through an internal secure random process (in this context, committee selection refers to the random assignment of validators to serve as consensus groups for individual shards, responsible for transaction validation and consensus within the protocol). However, the protocol does not describe the frequency of reseeding or provide simulation evidence of committee diversity across epochs. Moreover, the randomness source is not publicly auditable, and in a permissionless adversarial setting, compromised entropy could bias committee selection without detection. This risk could be mitigated by adopting decentralized or externally verifiable randomness commitment protocols.

AEROChain [55] introduces a single shared random seed per epoch, which governs both node reassignment and migration transaction determinism. This ensures synchronization without external coordination overhead. However, this randomness is not generated through a verifiable process such as VRFs or public randomness beacons. Its centralization could lead to vulnerabilities if the seed is manipulated. The scope is to enable deterministic AERO policy execution, validated indirectly through simulation-based performance improvements but not through cryptographic robustness tests.

SkyChain [73] supports epoch-based reconfiguration, but the source and security of its randomness are unspecified. While re-sharding intervals and block size are adjusted based on DRL policies, the randomness mechanism remains opaque. As a result, it lacks public verifiability or resistance to seed manipulation. Its randomness approach is implicit and not evaluated independently.

Layered Sharding (e.g., Pyramid [24], and OverlapShard [54]), meanwhile, incorporates Epoch Randomness into the Cross-shard Algorithm process, ensuring that transactions across different layers of shards maintain unpredictability and security. This method is important for preventing targeted attacks and ensuring a fair distribution of transaction loads across the network. The multiple layers in this approach likely add to its complexity, which could lead to inefficiencies and affect overall performance. These risks are more likely to arise in large-scale implementations.

On the other hand, SPRING [74] assigns nodes to shards at registration based on the last bits of the PoW solution string. The protocol incorporates a periodic reconfiguration phase in which consensus nodes are regularly shuffled among shards using VRF-generated randomness, ensuring that shard compositions remain unpredictable and resistant to long-term adversarial planning or validator collusion.

Traditional approaches relying on static randomness in sharding risk inefficiency as workloads become increasingly dynamic and predictable over time. AI-Shard introduced in [49] addresses this by using a Graph Convolutional Network–Generative Adversarial Network (GCN-GAN) model to generate predictive node interaction matrices, enabling time-sensitive reshuffling that optimizes shard configurations based on anticipated workloads. This method demonstrably reduces cross-shard transactions and improves throughput in dynamic IoT environments. Wang et al. [49] present prediction-based sharding as superior to static randomization, it inherently trades pure randomness for workload-driven optimization. From a security perspective, reliance on historical data and model predictions could potentially introduce patterns susceptible to adversarial exploitation if model errors or biases occur—though such risks are not discussed by the authors. Simulation results confirm AI-Shard’s performance advantages, but the ultimate security and adaptability of the framework would depend on the ongoing accuracy and robustness of its predictive models. To further enhance adaptability, Wang et al. [49] introduce a dual-layer architecture with DRL-based parameter control (via Double Deep Q-Network), allowing for continuous reconfiguration in response to environmental changes. While this work highlights significant computational cost and challenges in real-world deployment, it lacks additional considerations such as robustness and model explainability.

Epoch Randomness holds an essential place in keeping blockchain networks safe and fair. Different sharding techniques use randomness in unique ways. Static Sharding uses solid and direct techniques. On the other hand, Dynamic and Layered Sharding are still evolving to improve randomness methods. Current research highlights the importance of randomness for the integrity and efficiency of blockchain tasks. It also identifies areas where these methods may not yet be fully effective. However, several approaches lack cryptographic verifiability, such as the use of non-transparent seed generation in AEROChain [55] and SkyChain [73], which do not provide public randomness proofs or resistance to manipulation. In AI-based approaches like AI-Shard [49], shard assignments are decided by model predictions rather than by secure random numbers. This means that if the model makes mistakes or is biased, attackers might find and use patterns in how nodes are assigned. Moreover, approaches such as SPRING [74] and DYNASHARD [68] do not incorporate decentralized or auditable randomness sources, raising concerns about long-term entropy integrity in adversarial environments.

7.4. Cross-Shard Algorithm

Cross-shard Algorithm helps optimize transaction processing across different shards, which plays an important role in improving the overall resilience of a blockchain network.

Within Static Sharding technique, the Two-Phase Commit, as utilized in OmniLedger [51], represents a fundamental approach where transactions affecting multiple shards are handled atomically. This method employs a bias-resistant public-randomness approach to select large, statistically representative shards, ensuring fair and efficient transaction execution. The cross-shard Atomix in [51] extends this concept by ensuring that transactions are either fully completed or entirely aborted, maintaining consistency across shards in a Byzantine environment. The Two-Phase Commit process can cause delays, especially in busy networks, leading to slower transaction speeds and a noticeable impact on overall performance.

Al-Bassam et al. in [43] implemented S-BAC further contributes to these robust cross-shard mechanisms by detailing a five-phase process starting from the Initial Broadcast to the Final Process Accept. This structure helps in mitigating issues such as rogue BFT-Initiators by implementing a two-phase procedure that waits for a timeout before taking action, thus safeguarding the integrity of transaction processing. Although S-BAC enhances security, its five-phase procedure is likely to cause unnecessary delays, particularly in time-sensitive situations, making transaction execution more difficult.