Journal Description

Entropy

Entropy is an international and interdisciplinary peer-reviewed open access journal of entropy and information studies, published monthly online by MDPI. The International Society for the Study of Information (IS4SI) and the Spanish Society of Biomedical Engineering (SEIB) are affiliated with Entropy, and their members receive a discount on the article processing charge.

- Open Access: free for readers, with article processing charges (APC) paid by authors or their institutions.

- High Visibility: indexed within Scopus, SCIE (Web of Science), Inspec, PubMed, PMC, Astrophysics Data System, and other databases.

- Journal Rank: JCR - Q2 (Physics, Multidisciplinary) / CiteScore - Q1 (Mathematical Physics)

- Rapid Publication: manuscripts are peer-reviewed and a first decision is provided to authors approximately 21.8 days after submission; acceptance to publication is undertaken in 2.6 days (median values for papers published in this journal in the first half of 2025).

- Recognition of Reviewers: reviewers who provide timely, thorough peer-review reports receive vouchers entitling them to a discount on the APC of their next publication in any MDPI journal, in appreciation of the work done.

- Testimonials: See what our editors and authors say about Entropy.

- Companion journals for Entropy include: Foundations, Thermo and Complexities.

Impact Factor: 2.0 (2024); 5-Year Impact Factor: 2.2 (2024)

Latest Articles

Time-Varying Autoregressive Models: A Novel Approach Using Physics-Informed Neural Networks

Entropy 2025, 27(9), 934; https://doi.org/10.3390/e27090934 - 4 Sep 2025

Abstract

Time series models are widely used to examine temporal dynamics and uncover patterns across diverse fields. A commonly employed approach for modeling such data is the (Vector) Autoregressive (AR/VAR) model, in which each variable is represented as a linear combination of its own and others’ lagged values. However, the traditional (V)AR framework relies on the key assumption of stationarity, that autoregressive coefficients remain constant over time, which is often violated in practice, especially in systems affected by structural breaks, seasonal fluctuations, or evolving causal mechanisms. To overcome this limitation, Time-Varying (Vector) Autoregressive (TV-AR/TV-VAR) models have been developed, enabling model parameters to evolve over time and thus better capturing non-stationary behavior. Conventional approaches to estimating such models, including generalized additive modeling and kernel smoothing techniques, often require strong assumptions about basis functions, which can restrict their flexibility and applicability. To address these challenges, we introduce a novel framework that leverages physics-informed neural networks (PINN) to model TV-AR/TV-VAR processes. The proposed method extends the PINN framework to time series analysis by reducing reliance on explicitly defined physical structures, thereby broadening its applicability. Its effectiveness is validated through simulations on synthetic data and an empirical study of real-world health-related time series.

Open Access Article

Ascertaining Susceptibilities in Smart Contracts: A Quantum Machine Learning Approach

by

Amulyashree Sridhar, Kalyan Nagaraj, Shambhavi Bangalore Ravi and Sindhu Kurup

Entropy 2025, 27(9), 933; https://doi.org/10.3390/e27090933 - 4 Sep 2025

Abstract

The current research aims to discover applications of QML approaches in realizing liabilities within smart contracts. These contracts are essential commodities of the blockchain interface and are also decisive in developing decentralized products. But liabilities in smart contracts could result in unfamiliar system failures. Presently, static detection tools are utilized to discover accountabilities. However, they could result in instances of false narratives due to their dependency on predefined rules. In addition, these policies can often be superseded, failing to generalize on new contracts. The detection of liabilities with ML approaches, correspondingly, has certain limitations with contract size due to storage and performance issues. Nevertheless, employing QML approaches could be beneficial as they do not necessitate any preconceived rules. They often learn from data attributes during the training process and are employed as alternatives to ML approaches in terms of storage and performance. The present study employs four QML approaches, namely, QNN, QSVM, VQC, and QRF, for discovering susceptibilities. Experimentation revealed that the QNN model surpasses other approaches in detecting liabilities, with a performance accuracy of 82.43%. To further validate its feasibility and performance, the model was assessed on a several-partition test dataset, i.e., SolidiFI data, and the outcomes remained consistent. Additionally, the performance of the model was statistically validated using McNemar’s test.
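
As a rough illustration of the statistical validation mentioned in this abstract, the sketch below computes an exact two-sided McNemar p-value from the discordant counts of two classifiers evaluated on the same test set. The function name `mcnemar_exact` and the example counts are illustrative assumptions; they are not taken from the article.

```python
from math import comb


def mcnemar_exact(b: int, c: int) -> float:
    """Exact two-sided McNemar test on discordant pairs.

    b: cases classifier A got right and classifier B got wrong
    c: cases classifier A got wrong and classifier B got right
    Under the null hypothesis, min(b, c) follows Binomial(b + c, 0.5).
    """
    n = b + c
    if n == 0:
        # No discordant pairs: no evidence of any difference.
        return 1.0
    k = min(b, c)
    # One-sided binomial tail P(X <= k), then doubled for a two-sided test.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)


# Hypothetical counts: the two models disagree on 10 samples,
# and the second model is right on all of them.
print(mcnemar_exact(0, 10))  # 0.001953125
```

A small p-value here would suggest that the two classifiers' error rates differ; concordant pairs (both right or both wrong) do not enter the test.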

(This article belongs to the Section Information Theory, Probability and Statistics)

Open Access Article

Channel Estimation for Intelligent Reflecting Surface Empowered Coal Mine Wireless Communication Systems

by

Yang Liu, Kaikai Guo, Xiaoyue Li, Bin Wang and Yanhong Xu

Entropy 2025, 27(9), 932; https://doi.org/10.3390/e27090932 - 4 Sep 2025

Abstract

The confined space of coal mines characterized by curved tunnels with rough surfaces and a variety of deployed production equipment induces severe signal attenuation and interruption, which significantly degrades the accuracy of conventional channel estimation algorithms applied in coal mine wireless communication systems. To address these challenges, we propose a modified Bilinear Generalized Approximate Message Passing (mBiGAMP) algorithm enhanced by intelligent reflecting surface (IRS) technology to improve channel estimation accuracy in coal mine scenarios. Due to the presence of abundant coal-carrying belt conveyors, we establish a hybrid channel model integrating both fast-varying and quasi-static components to accurately model the unique propagation environment in coal mines. Specifically, the fast-varying channel captures the varying signal paths affected by moving conveyors, while the quasi-static channel represents stable direct links. Since this hybrid structure necessitates an augmented factor graph, we introduce two additional factor nodes and variable nodes to characterize the distinct message-passing behaviors and then rigorously derive the mBiGAMP algorithm. Simulation results demonstrate that the proposed mBiGAMP algorithm achieves superior channel estimation accuracy in dynamic conveyor-affected coal mine scenarios compared with other state-of-the-art methods, showing significant improvements in both separated and cascaded channel estimation. Specifically, when the NMSE is

(This article belongs to the Special Issue Wireless Communications: Signal Processing Perspectives, 2nd Edition)

Open Access Review

Sherlock Holmes Doesn’t Play Dice: The Mathematics of Uncertain Reasoning When Something May Happen, That You Are Not Even Able to Figure Out

by

Guido Fioretti

Entropy 2025, 27(9), 931; https://doi.org/10.3390/e27090931 - 4 Sep 2025

Abstract

While Evidence Theory (also known as Dempster–Shafer Theory, or Belief Functions Theory) is being increasingly used in data fusion, its potential in the Social and Life Sciences is often obscured by a lack of awareness of its distinctive features. In particular, in this paper I stress that an extended version of Evidence Theory can express the uncertainty deriving from the fear that events may materialize that one is not even able to figure out. By contrast, Probability Theory must limit itself to the possibilities that a decision-maker is currently envisaging. I compare this extended version of Evidence Theory to cutting-edge extensions of Probability Theory, such as imprecise and sub-additive probabilities, as well as unconventional versions of Information Theory that are employed in data fusion and transmission of cultural information. A possible application to creative usage of Large Language Models is outlined, along with further extensions to multi-agent interactions.

(This article belongs to the Section Information Theory, Probability and Statistics)

►▼

Show Figures

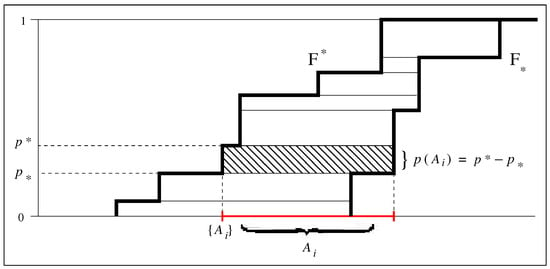

Figure 1

Open Access Article

Optimized Generalized LDPC Convolutional Codes

by

Li Deng, Kai Tao, Zhiping Shi, You Zhang, Yinlong Shi, Jian Wang, Tian Liu and Yongben Wang

Entropy 2025, 27(9), 930; https://doi.org/10.3390/e27090930 - 4 Sep 2025

Abstract

In this paper, some optimized encoding and decoding schemes are proposed for the generalized LDPC convolutional codes (GLDPC–CCs). In terms of the encoding scheme, a flexible doping method is proposed, which replaces multiple single parity check (SPC) nodes with one generalized check (GC) node. Different types of BCH codes can be selected as the GC node by adjusting the number of SPC nodes to be replaced. Moreover, by fine-tuning the truncated bits and the extended parity check bits, or by reasonably adjusting the GC node distribution, the performance of GLDPC–CCs can be further improved. In terms of the decoding scheme, a hybrid layered normalized min-sum (HLNMS) decoding algorithm is proposed, where the layered normalized min-sum (LNMS) decoding is used for SPC nodes, and the Chase–Pyndiah decoding is adopted for GC nodes. Based on an analysis of the decoding convergence of GC nodes and SPC nodes, an adaptive weight factor is designed for GC nodes that changes with the decoding iterations, aiming to further improve the decoding performance. In addition, an early stop decoding strategy is also proposed based on the minimum amplitude threshold of mutual information in order to reduce the decoding complexity. The simulation results have verified the superiority of the proposed scheme for GLDPC–CCs over the prior art, which has great application potential in optical communication systems.

(This article belongs to the Special Issue LDPC Codes for Communication Systems)

Open Access Article

Comprehensive Examination of Unrolled Networks for Solving Linear Inverse Problems

by

Yuxi Chen, Xi Chen, Arian Maleki and Shirin Jalali

Entropy 2025, 27(9), 929; https://doi.org/10.3390/e27090929 - 3 Sep 2025

Abstract

Unrolled networks have become prevalent in various computer vision and imaging tasks. Although they have demonstrated remarkable efficacy in solving specific computer vision and computational imaging tasks, their adaptation to other applications presents considerable challenges. This is primarily due to the multitude of design decisions that practitioners working on new applications must navigate, each potentially affecting the network’s overall performance. These decisions include selecting the optimization algorithm, defining the loss function, and determining the deep architecture, among others. Compounding the issue, evaluating each design choice requires time-consuming simulations to train, fine-tune the neural network, and optimize its performance. As a result, the process of exploring multiple options and identifying the optimal configuration becomes time-consuming and computationally demanding. The main objectives of this paper are (1) to unify some ideas and methodologies used in unrolled networks to reduce the number of design choices a user has to make, and (2) to report a comprehensive ablation study to discuss the impact of each of the choices involved in designing unrolled networks and present practical recommendations based on our findings. We anticipate that this study will help scientists and engineers to design unrolled networks for their applications and diagnose problems within their networks efficiently.

(This article belongs to the Special Issue Advances in Information Theory and Machine Learning for Computational Imaging)

Open Access Article

Sensor Fusion for Target Detection Using LLM-Based Transfer Learning Approach

by

Yuval Ziv, Barouch Matzliach and Irad Ben-Gal

Entropy 2025, 27(9), 928; https://doi.org/10.3390/e27090928 - 3 Sep 2025

Abstract

This paper introduces a novel sensor fusion approach for the detection of multiple static and mobile targets by autonomous mobile agents. Unlike previous studies that rely on theoretical sensor models, which are considered as independent, the proposed methodology leverages real-world sensor data, which is transformed into sensor-specific probability maps using object detection estimation for optical data and converting averaged point-cloud intensities for LIDAR based on a dedicated deep learning model before being integrated through a large language model (LLM) framework. We introduce a methodology based on LLM transfer learning (LLM-TLFT) to create a robust global probability map enabling efficient swarm management and target detection in challenging environments. The paper focuses on real data obtained from two types of sensors, light detection and ranging (LIDAR) sensors and optical sensors, and it demonstrates significant improvement in performance compared to existing methods (Independent Opinion Pool, CNN, GPT-2 with deep transfer learning) in terms of precision, recall, and computational efficiency, particularly in scenarios with high noise and sensor imperfections. The significant advantage of the proposed approach is the possibility to interpret a dependency between different sensors. In addition, a model compression using knowledge-based distillation was performed (distilled TLFT), which yielded satisfactory results for the deployment of the proposed approach to edge devices.

(This article belongs to the Special Issue Informational Coordinative and Teleological Control of Distributed and Multi Agent Systems)

Open Access Article

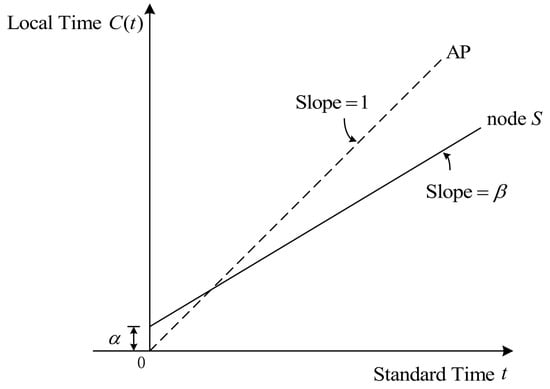

Noise-Robust-Based Clock Parameter Estimation and Low-Overhead Time Synchronization in Time-Sensitive Industrial Internet of Things

by

Long Tang, Fangyan Li, Zichao Yu and Haiyong Zeng

Entropy 2025, 27(9), 927; https://doi.org/10.3390/e27090927 - 3 Sep 2025

Abstract

Time synchronization is critical for task-oriented and time-sensitive Industrial Internet of Things (IIoT) systems. Nevertheless, achieving high-precision synchronization with low communication overhead remains a key challenge due to the constrained resources of IIoT devices. In this paper, we propose a single-timestamp time synchronization scheme that significantly reduces communication overhead by utilizing the mechanism of AP to periodically collect sensor device data. The reduced communication overhead alleviates network congestion, which is essential for achieving low end-to-end latency in synchronized IIoT networks. Furthermore, to mitigate the impact of random delay noise on clock parameter estimation, we propose a noise-robust-based Maximum Likelihood Estimation (NR-MLE) algorithm that jointly optimizes synchronization accuracy and resilience to random delays. Specifically, we decompose the collected timestamp matrix into two low-rank matrices and use gradient descent to minimize reconstruction error and regularization, approximating the true signal and removing noise. The denoised timestamp matrix is then used to jointly estimate clock skew and offset via MLE, with the corresponding Cramér–Rao Lower Bounds (CRLBs) being derived. The simulation results demonstrate that the NR-MLE algorithm achieves a higher clock parameter estimation accuracy than conventional MLE and exhibits strong robustness against increasing noise levels.
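
The denoising step described in this abstract (factor a matrix into two low-rank matrices, then minimize reconstruction error plus regularization by gradient descent) can be sketched generically as follows. This is not the authors' NR-MLE algorithm: the squared-error loss with an L2 penalty, the function name `low_rank_denoise`, and all hyperparameters (`rank`, `lam`, `lr`, `steps`) are assumptions chosen for illustration.

```python
import random


def low_rank_denoise(T, rank=1, lam=0.01, lr=0.01, steps=2000, seed=0):
    """Approximate T by U @ V^T using plain gradient descent.

    Assumed loss: 0.5 * ||T - U V^T||_F^2 + 0.5 * lam * (||U||_F^2 + ||V||_F^2)
    T is a list of equal-length rows; the returned matrix has the same shape.
    """
    rng = random.Random(seed)
    n, m = len(T), len(T[0])
    # Small random initialisation of the two factors.
    U = [[rng.uniform(-0.1, 0.1) for _ in range(rank)] for _ in range(n)]
    V = [[rng.uniform(-0.1, 0.1) for _ in range(rank)] for _ in range(m)]

    def reconstruct():
        return [[sum(U[i][k] * V[j][k] for k in range(rank)) for j in range(m)]
                for i in range(n)]

    for _ in range(steps):
        approx = reconstruct()
        # Residual R = T - U V^T
        R = [[T[i][j] - approx[i][j] for j in range(m)] for i in range(n)]
        # Gradients of the assumed loss with respect to U and V.
        gU = [[-sum(R[i][j] * V[j][k] for j in range(m)) + lam * U[i][k]
               for k in range(rank)] for i in range(n)]
        gV = [[-sum(R[i][j] * U[i][k] for i in range(n)) + lam * V[j][k]
               for k in range(rank)] for j in range(m)]
        U = [[U[i][k] - lr * gU[i][k] for k in range(rank)] for i in range(n)]
        V = [[V[j][k] - lr * gV[j][k] for k in range(rank)] for j in range(m)]

    return reconstruct()


# Hypothetical example: a rank-1 matrix with small additive noise.
noise = random.Random(1)
clean = [[float(r) for _ in range(3)] for r in (1, 2, 3)]
noisy = [[x + noise.gauss(0.0, 0.05) for x in row] for row in clean]
denoised = low_rank_denoise(noisy, rank=1)
```

The step size and iteration count are tuned only for this toy matrix; larger or differently scaled data would need its own settings.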

(This article belongs to the Special Issue Task-Oriented Communications in Industrial IoT: Age of Information and Beyond)

Open Access Article

Benchmarking Static Analysis for PHP Applications Security

by

Jiazhen Zhao, Kailong Zhu, Canju Lu, Jun Zhao and Yuliang Lu

Entropy 2025, 27(9), 926; https://doi.org/10.3390/e27090926 - 3 Sep 2025

Abstract

PHP is the most widely used server-side programming language, but it remains highly susceptible to diverse classes of vulnerabilities. Static Application Security Testing (SAST) tools are commonly adopted for vulnerability detection; however, their evaluation lacks systematic criteria capable of quantifying information loss and uncertainty in analysis. Existing approaches, often based on small real-world case sets or heuristic sampling, fail to control experimental entropy within test cases. This uncontrolled variability makes it difficult to measure the information gain provided by different tools and to accurately differentiate their performance under varying levels of structural and semantic complexity. In this paper, we have developed a systematic evaluation framework for PHP SAST tools, designed to provide accurate and comprehensive assessments of their vulnerability detection capabilities. The framework explicitly isolates key factors influencing data flow analysis, enabling evaluation over four progressive dimensions with controlled information diversity. Using a benchmark instance, we validate the framework’s feasibility and show how it reduces evaluation entropy, enabling the more reliable measurement of detection capabilities. Our results highlight the framework’s ability to reveal the limitations in current SAST tools, offering actionable insights for their future improvement.

Open Access Article

Structural Complexity as a Directional Signature of System Evolution: Beyond Entropy

by

Donglu Shi

Entropy 2025, 27(9), 925; https://doi.org/10.3390/e27090925 - 3 Sep 2025

Abstract

We propose a universal framework for understanding system evolution based on structural complexity, offering a directional signature that applies across physical, chemical, and biological domains. Unlike entropy, which is constrained by its definition in closed, equilibrium systems, we introduce Kolmogorov Complexity (KC) and Fractal Dimension (FD) as quantifiable, scalable metrics that capture the emergence of organized complexity in open, non-equilibrium systems. We examine two major classes of systems: (1) living systems, revisiting Schrödinger’s insight that biological growth may locally reduce entropy while increasing structural order, and (2) irreversible natural processes such as oxidation, diffusion, and material aging. We formalize a Universal Law, expressed as a non-decreasing function Ω(t) = α·KC(t) + β·FD(t), which parallels the Second Law of Thermodynamics but tracks the rise in algorithmic and geometric complexity. This framework integrates principles from complexity science, providing a robust, mathematically grounded lens for describing the directional evolution of systems across scales, from crystals to cognition.

(This article belongs to the Section Complexity)

Open Access Article

Learnable Convolutional Attention Network for Unsupervised Knowledge Graph Entity Alignment

by

Weishan Cai and Wenjun Ma

Entropy 2025, 27(9), 924; https://doi.org/10.3390/e27090924 - 3 Sep 2025

Abstract

The success of current entity alignment (EA) tasks largely depends on the supervision information provided by labeled data. Considering the cost of labeled data, most supervised methods are challenging to apply in practical scenarios. Therefore, an increasing number of works based on contrastive learning, active learning, or other deep learning techniques have been developed, to solve the performance bottleneck caused by the lack of labeled data. However, existing unsupervised EA methods still face certain limitations; either their modeling complexity is high or they fail to balance the effectiveness and practicality of alignment. To overcome these issues, we propose a learnable convolutional attention network for unsupervised entity alignment, named LCA-UEA. Specifically, LCA-UEA performs convolution operations before the attention mechanism, ensuring the acquisition of structural information and avoiding the superposition of redundant information. Then, to efficiently filter out invalid neighborhood information of aligned entities, LCA-UEA designs a relation structure reconstruction method based on potential matching relations, thereby enhancing the usability and scalability of the EA method. Notably, a similarity function based on consistency is proposed to better measure the similarity of candidate entity pairs. Finally, we conducted extensive experiments on three datasets of different sizes and types (cross-lingual and monolingual) to verify the superiority of LCA-UEA. Experimental results demonstrate that LCA-UEA significantly improved alignment accuracy, outperforming 25 supervised or unsupervised methods, and improving by 6.4% in Hits@1 over the best baseline in the best case.

(This article belongs to the Special Issue Entropy in Machine Learning Applications, 2nd Edition)

Open Access Article

Simulating Public Opinion: Comparing Distributional and Individual-Level Predictions from LLMs and Random Forests

by

Fernando Miranda and Pedro Paulo Balbi

Entropy 2025, 27(9), 923; https://doi.org/10.3390/e27090923 - 2 Sep 2025

Abstract

Understanding and modeling the flow of information in human societies is essential for capturing phenomena such as polarization, opinion formation, and misinformation diffusion. Traditional agent-based models often rely on simplified behavioral rules that fail to capture the nuanced and context-sensitive nature of human decision-making. In this study, we explore the potential of Large Language Models (LLMs) as data-driven, high-fidelity agents capable of simulating individual opinions under varying informational conditions. Conditioning LLMs on real survey data from the 2020 American National Election Studies (ANES), we investigate their ability to predict individual-level responses across a spectrum of political and social issues in a zero-shot setting, without any training on the survey outcomes. Using Jensen–Shannon distance to quantify divergence in opinion distributions and F1-score to measure predictive accuracy, we compare LLM-generated simulations to those produced by a supervised Random Forest model. While performance at the individual level is comparable, LLMs consistently produce aggregate opinion distributions closer to the empirical ground truth. These findings suggest that LLMs offer a promising new method for simulating complex opinion dynamics and modeling the probabilistic structure of belief systems in computational social science.
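
For readers unfamiliar with the Jensen–Shannon distance used in this abstract to compare opinion distributions, a minimal sketch follows. It is the square root of the Jensen–Shannon divergence, taken here with base-2 logarithms so the result lies in [0, 1]. The function name `js_distance` and the example response shares are hypothetical and are not drawn from the article or from ANES data.

```python
import math


def js_distance(p, q, base=2.0):
    """Jensen-Shannon distance between two discrete distributions.

    p and q are sequences of non-negative weights over the same categories;
    they are normalised to sum to 1 before comparison.
    """
    if len(p) != len(q):
        raise ValueError("distributions must have the same number of categories")
    sp, sq = sum(p), sum(q)
    p = [x / sp for x in p]
    q = [x / sq for x in q]
    # Mixture distribution M = (P + Q) / 2
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(a, b):
        # Kullback-Leibler divergence; terms with a_i = 0 contribute 0.
        return sum(x * math.log(x / y, base) for x, y in zip(a, b) if x > 0)

    jsd = 0.5 * kl(p, m) + 0.5 * kl(q, m)
    # Guard against tiny negative values caused by floating-point rounding.
    return math.sqrt(max(jsd, 0.0))


# Hypothetical shares of answers to one survey item (agree / neutral / disagree).
survey = [0.50, 0.20, 0.30]
simulated = [0.45, 0.25, 0.30]
distance = js_distance(survey, simulated)
```

A distance of 0 means the simulated and observed distributions coincide; a distance of 1 (in base 2) means they share no probability mass.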

(This article belongs to the Section Multidisciplinary Applications)

Open Access Article

On the Relativity of Quantumness as Implied by Relativity of Arithmetic and Probability

by

Marek Czachor

Entropy 2025, 27(9), 922; https://doi.org/10.3390/e27090922 - 2 Sep 2025

Abstract

A hierarchical structure of isomorphic arithmetics is defined by a bijection

(This article belongs to the Special Issue Quantum Measurement)

Open Access Article

A Graph Contrastive Learning Method for Enhancing Genome Recovery in Complex Microbial Communities

by

Guo Wei and Yan Liu

Entropy 2025, 27(9), 921; https://doi.org/10.3390/e27090921 - 31 Aug 2025

Abstract

Accurate genome binning is essential for resolving microbial community structure and functional potential from metagenomic data. However, existing approaches—primarily reliant on tetranucleotide frequency (TNF) and abundance profiles—often perform sub-optimally in the face of complex community compositions, low-abundance taxa, and long-read sequencing datasets. To address these limitations, we present MBGCCA, a novel metagenomic binning framework that synergistically integrates graph neural networks (GNNs), contrastive learning, and information-theoretic regularization to enhance binning accuracy, robustness, and biological coherence. MBGCCA operates in two stages: (1) multimodal information integration, where TNF and abundance profiles are fused via a deep neural network trained using a multi-view contrastive loss, and (2) self-supervised graph representation learning, which leverages assembly graph topology to refine contig embeddings. The contrastive learning objective follows the InfoMax principle by maximizing mutual information across augmented views and modalities, encouraging the model to extract globally consistent and high-information representations. By aligning perturbed graph views while preserving topological structure, MBGCCA effectively captures both global genomic characteristics and local contig relationships. Comprehensive evaluations using both synthetic and real-world datasets—including wastewater and soil microbiomes—demonstrate that MBGCCA consistently outperforms state-of-the-art binning methods, particularly in challenging scenarios marked by sparse data and high community complexity. These results highlight the value of entropy-aware, topology-preserving learning for advancing metagenomic genome reconstruction.

(This article belongs to the Special Issue Network-Based Machine Learning Approaches in Bioinformatics)

Open Access Article

Entropy-Fused Enhanced Symplectic Geometric Mode Decomposition for Hybrid Power Quality Disturbance Recognition

by

Chencheng He, Wenbo Wang, Xuezhuang E, Hao Yuan and Yuyi Lu

Entropy 2025, 27(9), 920; https://doi.org/10.3390/e27090920 - 30 Aug 2025

Abstract

Electrical networks face operational challenges from power quality-affecting disturbances. Since disturbance signatures directly affect classifier performance, optimized feature selection becomes critical for accurate power quality assessment. The pursuit of robust feature extraction inevitably constrains the dimensionality of the discriminative feature set, but the complexity of the recognition model will be increased and the recognition speed will be reduced if the feature vector dimension is too high. Building upon the aforementioned requirements, in this paper, we propose a feature extraction framework that combines improved symplectic geometric mode decomposition, refined generalized multiscale quantum entropy, and refined generalized multiscale reverse dispersion entropy. Firstly, based on the intrinsic properties of power quality disturbance (PQD) signals, the embedding dimension of symplectic geometric mode decomposition and the adaptive mode component screening method are improved, and the PQD signal undergoes tri-band decomposition via improved symplectic geometric mode decomposition (ISGMD), yielding distinct high-frequency, medium-frequency, and low-frequency components. Secondly, utilizing the enhanced symplectic geometric mode decomposition as a foundation, the perturbation features are extracted by the combination of refined generalized multiscale quantum entropy and refined generalized multiscale reverse dispersion entropy to construct high-precision and low-dimensional feature vectors. Finally, a double-layer composite power quality disturbance model is constructed by a deep extreme learning machine algorithm to identify power quality disturbance signals. After analysis and comparison, the proposed method is found to be effective even in a strong noise environment with a single interference, and the average recognition accuracy across different noise environments is 97.3%. 
Under the complex conditions involving multiple types of mixed perturbations, the average recognition accuracy is maintained above 96%. Compared with the existing CNN + LSTM method, the recognition accuracy of the proposed method is improved by 3.7%. In addition, its recognition accuracy in scenarios with small data samples is significantly better than that of traditional methods, such as single CNN models and LSTM models. The experimental results show that the proposed strategy can accurately classify and identify various power quality interferences and that it is better than traditional methods in terms of classification accuracy and robustness. The experimental results of the simulation and measured data show that the combined feature extraction methodology reliably extracts discriminative feature vectors from PQD. The double-layer combined classification model can further enhance the model’s recognition capabilities. This method has high accuracy and certain noise resistance. In the 30 dB white noise environment, the average classification accuracy of the model is 99.10% for the simulation database containing 63 PQD types. Meanwhile, for the test data based on a hardware platform, the average accuracy is 99.03%, and the approach’s dependability is further evidenced by rigorous validation experiments.

Open Access Article

A Novel Co-Designed Multi-Domain Entropy and Its Dynamic Synapse Classification Approach for EEG Seizure Detection

by

Guanyuan Feng, Jiawen Li, Yicheng Zhong, Shuang Zhang, Xin Liu, Mang I Vai, Kaihan Lin, Xianxian Zeng, Jun Yuan and Rongjun Chen

Entropy 2025, 27(9), 919; https://doi.org/10.3390/e27090919 - 30 Aug 2025

Abstract

Automated electroencephalography (EEG) seizure detection is meaningful in clinical medicine. However, current approaches often lack comprehensive feature extraction and are limited by generic classifier architectures, which limit their effectiveness in complex real-world scenarios. To overcome this traditional coupling between feature representation and classifier development, this study proposes DySC-MDE, an end-to-end co-designed framework for seizure detection. A novel multi-domain entropy (MDE) representation is constructed at the feature level based on amplitude-sensitive permutation entropy (ASPE), which adopts entropy-based quantifiers to characterize the nonlinear dynamics of EEG signals across diverse domains. Specifically, ASPE is extended into three distinct variants, refined composite multiscale ASPE (RCMASPE), discrete wavelet transform-based hierarchical ASPE (HASPE-DWT), and time-shift multiscale ASPE (TSMASPE), to represent various temporal and spectral dynamics of EEG signals. At the classifier level, a dynamic synapse classifier (DySC) is proposed to align with the structure of the MDE features. Particularly, DySC includes three parallel and specialized processing pathways, each tailored to a specific entropy variant. These outputs are then adaptively fused through a dynamic synaptic gating mechanism, which can enhance the model’s ability to integrate heterogeneous information sources. To fully evaluate the effectiveness of the proposed method, extensive experiments are conducted on two public datasets using cross-validation. For the binary classification task, DySC-MDE achieves an accuracy of 97.50% and 98.93% and an F1-score of 97.58% and 98.87% in the Bonn and CHB-MIT datasets, respectively. Moreover, in the three-class task, the proposed method maintains a high F1-score of 96.83%, revealing its strong discriminative performance and generalization ability across different categories. 
Consequently, these impressive results demonstrate that the joint optimization of nonlinear dynamic feature representations and structure-aware classifiers can further improve the analysis of complex epileptic EEG signals, which opens a novel direction for robust seizure detection.

(This article belongs to the Special Issue Entropy Analysis of ECG and EEG Signals)

Open Access Article

The Hungry Daemon: Does an Energy-Harvesting Active Particle Have to Obey the Second Law of Thermodynamics?

by

Simon Bienewald, Diego M. Fieguth and James R. Anglin

Entropy 2025, 27(9), 918; https://doi.org/10.3390/e27090918 - 30 Aug 2025

Abstract

Thought experiments like Maxwell’s Demon or the Smoluchowski–Feynman Ratchet can help in pursuing the microscopic origin of the Second Law of Thermodynamics. Here we present a more sophisticated mechanical system than a ratchet, consisting of a Hamiltonian (non-Brownian) active particle which can harvest energy from an environment which may be in thermal equilibrium at a single temperature. We show that while a phenomenological description would seem to allow the system to operate as a Perpetual Motion Machine of the Second Kind, a full mechanical analysis confirms that this is impossible, and that perpetual energy harvesting within a mechanical system can only occur if the environment has an energetic population inversion similar to a lasing medium.

(This article belongs to the Special Issue Modern Perspectives on the Second Law of Thermodynamics and Infodynamics)

Open Access Review

Constructing Hetero-Microstructures in Additively Manufactured High-Performance High-Entropy Alloys

by

Yuanshu Zhao, Zhibin Wu, Yongkun Mu, Yuefei Jia, Yandong Jia and Gang Wang

Entropy 2025, 27(9), 917; https://doi.org/10.3390/e27090917 - 29 Aug 2025

Abstract

High-entropy alloys (HEAs) have shown great promise for applications in extreme service environments due to their exceptional mechanical properties and thermal stability. However, traditional alloy design often struggles to balance multiple properties such as strength and ductility. Constructing heterogeneous microstructures has emerged as an effective strategy to overcome this challenge. With the rapid advancement of additive manufacturing (AM) technologies, their unique ability to fabricate complex, spatially controlled, and non-equilibrium microstructures offers unprecedented opportunities for tailoring heterostructures in HEAs with high precision. This review highlights recent progress in utilizing AM to engineer heterogeneous microstructures in high-performance HEAs. It systematically examines the multiscale heterogeneities induced by the thermal cycling effects inherent to AM techniques such as selective laser melting (SLM) and electron beam melting (EBM). The review further discusses the critical role of these heterostructures in enhancing the synergy between strength and ductility, as well as improving work-hardening behavior. AM enables the design-driven fabrication of tailored microstructures, signaling a shift from traditional “performance-driven” alloy design paradigms toward a new model centered on “microstructural control”. In summary, additive manufacturing provides an ideal platform for constructing heterogeneous HEAs and holds significant promise for advancing high-performance alloy systems. Its integration into alloy design represents both a valuable theoretical framework and a practical pathway for developing next-generation structural materials with multiple performance attributes.

(This article belongs to the Special Issue Recent Advances in High Entropy Alloys)

Open Access Article

A Criterion for Distinguishing Temporally Different Dynamical Systems

by

Evgeny Kagan

Entropy 2025, 27(9), 916; https://doi.org/10.3390/e27090916 - 29 Aug 2025

Abstract

The paper presents a method for distinguishing dynamical systems with respect to their behavior. The suggested criterion is interpreted as the internal time of an ergodic dynamical system, which is a time generated by the system itself and differs from the external global or reference time. The paper includes a formal definition of the internal time of a dynamical system in the form of an entropy ratio, considers its basic properties, and gives examples of the analysis of dynamical systems.

(This article belongs to the Special Issue Informational Coordinative and Teleological Control of Distributed and Multi Agent Systems)

Open Access Article

Modeling the Evolution of Dynamic Triadic Closure Under Superlinear Growth and Node Aging in Citation Networks

by

Li Liang, Hao Liu and Shi-Cai Gong

Entropy 2025, 27(9), 915; https://doi.org/10.3390/e27090915 - 29 Aug 2025

Abstract

Citation networks are fundamental for analyzing the mechanisms and patterns of knowledge creation and dissemination. While most studies focus on pairwise attachment between papers, they often overlook compound relational structures, such as co-citation. Combining two key empirical features, superlinear node inflow and the temporal decay of node influence, we propose the Triangular Evolutionary Model of Superlinear Growth and Aging (TEM-SGA). The fitting results demonstrate that the TEM-SGA reproduces key structural properties of real citation networks, including degree distributions, generalized degree distributions, and average clustering coefficients. Further structural analyses reveal that the impact of aging varies with structural scale and depends on the interplay between aging and growth, one manifestation of which is that, as growth accelerates, it increasingly offsets aging-related disruptions. This motivates a degenerate model, the Triangular Evolutionary Model of Superlinear Growth (TEM-SG), which excludes aging. A theoretical analysis shows that its degree and generalized degree distributions follow a power law. By modeling interactions among triadic closure, dynamic expansion, and aging, this study offers insights into citation network evolution and strengthens its theoretical foundation.

(This article belongs to the Topic Computational Complex Networks)

News

3 September 2025

Join Us at the MDPI at the University of Toronto Career Fair, 23 September 2025, Toronto, ON, Canada

1 September 2025

MDPI INSIGHTS: The CEO’s Letter #26 – CUJS, Head of Ethics, Open Peer Review, AIS 2025, Reviewer Recognition

Topics

Topic in

Entropy, Algorithms, Computation, Fractal Fract

Computational Complex Networks

Topic Editors: Alexandre G. Evsukoff, Yilun Shang

Deadline: 30 September 2025

Topic in

AI, Energies, Entropy, Sustainability

Game Theory and Artificial Intelligence Methods in Sustainable and Renewable Energy Power Systems

Topic Editors: Lefeng Cheng, Pei Zhang, Anbo Meng

Deadline: 31 October 2025

Topic in

Entropy, Environments, Land, Remote Sensing

Bioterraformation: Emergent Function from Systemic Eco-Engineering

Topic Editors: Matteo Convertino, Jie Li

Deadline: 30 November 2025

Topic in

Applied Sciences, Electronics, Entropy, Mathematics, Symmetry, Technologies, Chips

Quantum Information and Quantum Computing, 2nd Volume

Topic Editors: Durdu Guney, David Petrosyan

Deadline: 6 January 2026

Special Issues

Special Issue in

Entropy

Entropy in Quantum Systems and Quantum Field Theory (QFT): Gravity, 3rd Edition

Guest Editor: Ignazio Licata

Deadline: 8 September 2025

Special Issue in

Entropy

25 Years of Sample Entropy

Guest Editors: J Randall Moorman, Joshua Richman

Deadline: 9 September 2025

Special Issue in

Entropy

Coding for Aeronautical Telemetry

Guest Editor: Michael Rice

Deadline: 10 September 2025

Special Issue in

Entropy

Black Hole Information Problem: Challenges and Perspectives

Guest Editors: Qingyu Cai, Baocheng Zhang, Christian Corda

Deadline: 10 September 2025

Topical Collections

Topical Collection in

Entropy

Algorithmic Information Dynamics: A Computational Approach to Causality from Cells to Networks

Collection Editors: Hector Zenil, Felipe Abrahão

Topical Collection in

Entropy

Foundations of Statistical Mechanics

Collection Editor: Antonio M. Scarfone

Topical Collection in

Entropy

Feature Papers in Information Theory

Collection Editors: Raúl Alcaraz, Luca Faes, Leandro Pardo, Boris Ryabko