Learnable Convolutional Attention Network for Unsupervised Knowledge Graph Entity Alignment

Abstract

1. Introduction

- A relation-structure reconstruction method based on potential matching relations is designed, which improves alignment accuracy while reducing the computational cost of model training.

- A learnable GNN model that applies convolution before the attention mechanism is introduced to learn entity features, capturing structural information while avoiding the accumulation of redundant information.

- A novel consistency-based similarity function is proposed, enabling more accurate measurement of the similarity between candidate entity pairs.

- Extensive experiments on three well-known benchmark datasets demonstrate that LCA-UEA not only significantly outperforms 25 state-of-the-art models but also exhibits strong scalability and robustness.

2. Related Work

2.1. EA Based on Translation Model

2.2. EA Based on Relation Structures

2.3. EA Based on Auxiliary Information

2.4. Self-Supervised or Unsupervised EA Methods

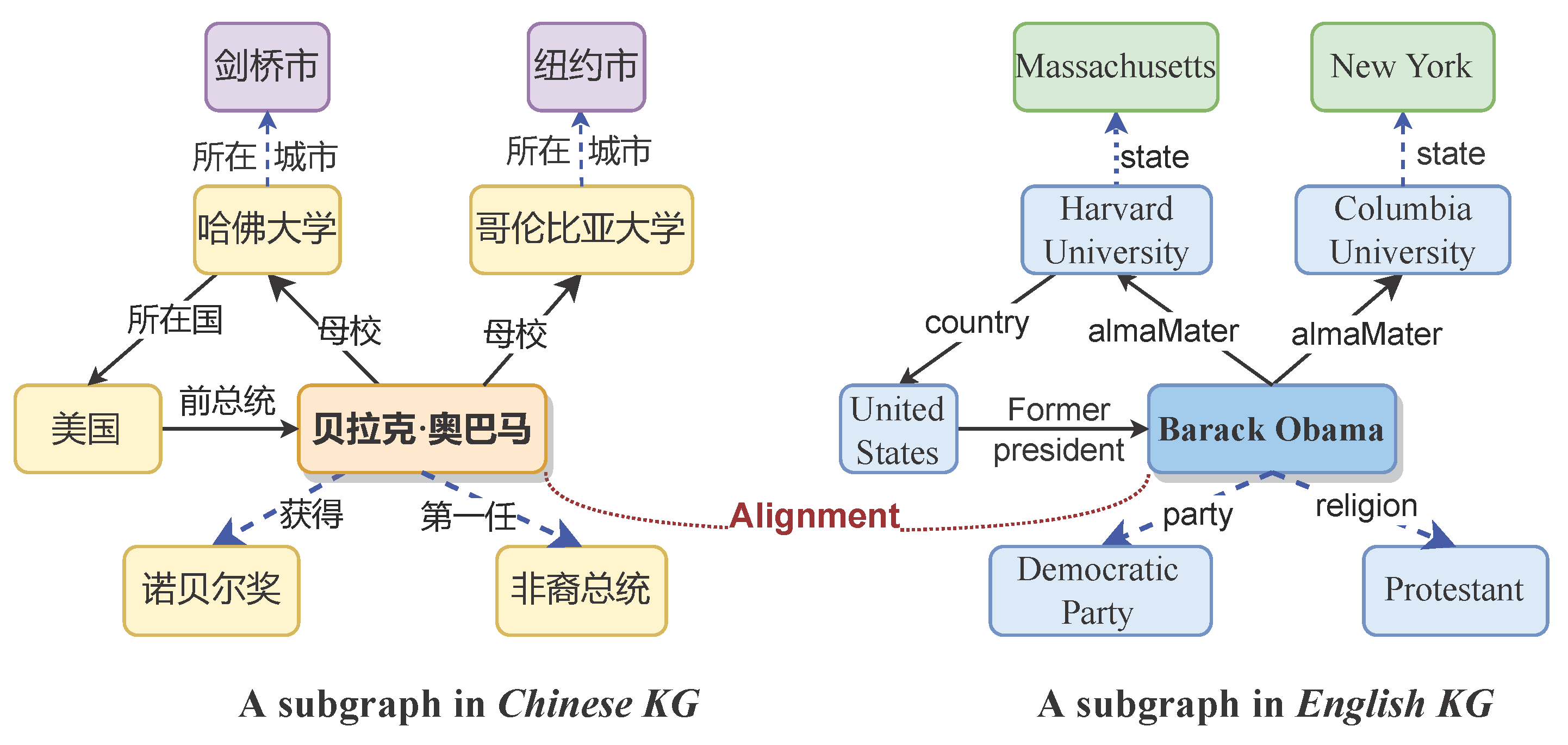

3. Preliminaries

4. Methodology

- The textual feature module extends traditional entity name embedding by introducing entity-context embedding, thereby enhancing the extraction of entity name information.

- The relation-structure reconstruction module improves model efficiency and alignment performance by filtering out, during data pre-processing, neighborhood information that is irrelevant to aligned entities.

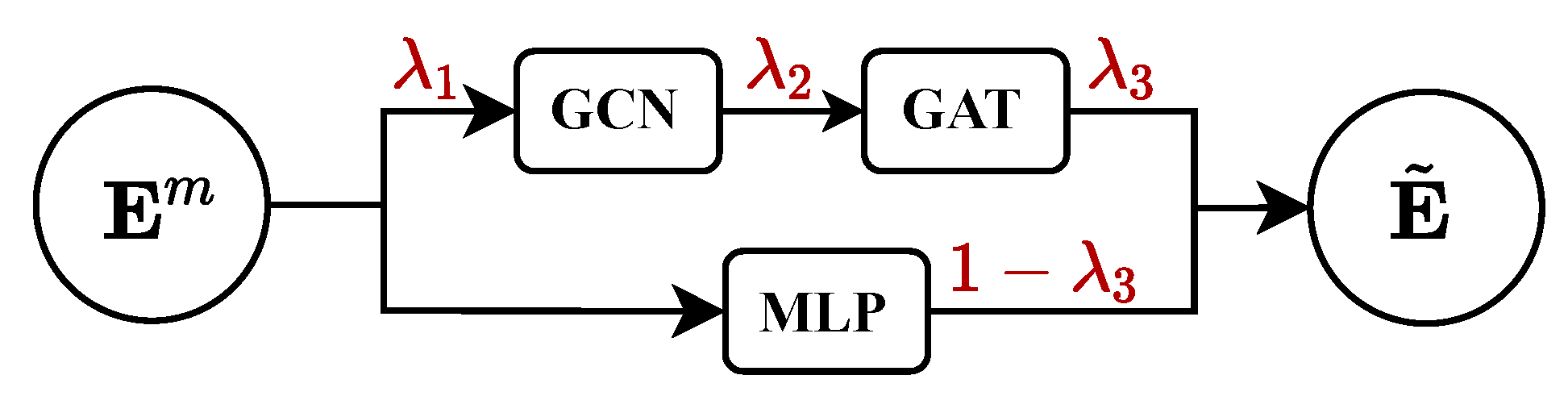

- The LCAT-based neighborhood aggregator module employs a simple yet effective method to extract graph relation structures for entities.

- The contrastive learning module enables LCA-UEA to operate in an unsupervised manner, eliminating the reliance on alignment seeds.

- The alignment with consistency similarity module introduces a novel consistency-based similarity function, which measures the similarity of candidate entity pairs more effectively.
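The idea of convolving before attending, used by the LCAT-based aggregator, can be illustrated with a small NumPy sketch. It loosely follows the learnable convolutional attention formulation of [15]; the function name `conv_attention_layer`, the interpolation parameters `lam1` and `lam2`, and the GAT-style scoring vectors `a_src` and `a_dst` are illustrative assumptions, not necessarily the exact layer used in LCA-UEA.

```python
import numpy as np

def masked_softmax(scores, mask):
    """Row-wise softmax restricted to the entries where mask is True."""
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    return weights / weights.sum(axis=1, keepdims=True)

def conv_attention_layer(H, A, W, a_src, a_dst, lam1=1.0, lam2=1.0):
    """One convolution-then-attention layer (assumed, simplified form).

    H: (n, d_in) entity features, A: (n, n) adjacency matrix,
    W: (d_in, d_out) projection, a_src / a_dst: (d_out,) scoring vectors.
    """
    A = np.array(A, dtype=float)          # copy so the caller's matrix is untouched
    np.fill_diagonal(A, 0.0)              # neighbors only, self handled separately
    n = A.shape[0]
    mask = (A + np.eye(n)) > 0            # attention runs over neighbors plus self
    deg = A.sum(axis=1, keepdims=True)

    Z = H @ W
    # Convolution step: blend each node with its neighbors before scoring.
    Z_conv = (Z + lam2 * (A @ Z)) / (1.0 + lam2 * deg)
    # Attention step: additive GAT-style scores on the convolved features.
    e = (Z_conv @ a_src)[:, None] + (Z_conv @ a_dst)[None, :]
    e = np.where(e > 0, e, 0.2 * e)       # LeakyReLU with slope 0.2
    alpha = masked_softmax(lam1 * e, mask)
    return alpha @ Z
```

Setting `lam1 = 0` makes the attention uniform, so the layer reduces to mean aggregation over each neighborhood, while `lam2 = 0` skips the convolution and scores the raw projected features as a plain GAT would.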

4.1. Textual Feature

4.2. Reconstruction of Relation Structure

4.3. LCAT-Based Neighborhood Aggregator

Algorithm 1. Procedure of reconstruction of relation structure.

Input: …, …, textual features. Output: new relation triples.

1. Generate pseudo-labels.

2. Generate matching relations.

3. Generate new relation structure.
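The three steps of Algorithm 1 can be sketched as follows. This is only one plausible reading of the step names: the mutual-nearest-neighbor criterion for pseudo-labels, the co-occurrence rule for matching relations, the `min_support` threshold, and all function names are assumptions made for illustration and are not taken from the algorithm itself.

```python
import numpy as np
from collections import Counter

def pseudo_labels(X1, X2):
    """Step 1 (assumed): mutual nearest neighbors under cosine similarity."""
    X1 = X1 / np.linalg.norm(X1, axis=1, keepdims=True)
    X2 = X2 / np.linalg.norm(X2, axis=1, keepdims=True)
    S = X1 @ X2.T
    best12 = S.argmax(axis=1)   # best target for each source entity
    best21 = S.argmax(axis=0)   # best source for each target entity
    return {i: int(j) for i, j in enumerate(best12) if best21[j] == i}

def matching_relations(T1, T2, labels, min_support=1):
    """Step 2 (assumed): relation pairs linking pseudo-aligned heads and tails."""
    index2 = {}
    for h, r, t in T2:
        index2.setdefault((h, t), set()).add(r)
    support = Counter()
    for h, r, t in T1:
        if h in labels and t in labels:
            for r2 in index2.get((labels[h], labels[t]), ()):
                support[(r, r2)] += 1
    return {pair for pair, count in support.items() if count >= min_support}

def reconstruct(T1, T2, matches):
    """Step 3 (assumed): keep only triples whose relation has a potential match."""
    keep1 = {r1 for r1, _ in matches}
    keep2 = {r2 for _, r2 in matches}
    return ([t for t in T1 if t[1] in keep1],
            [t for t in T2 if t[1] in keep2])

# Toy usage with hypothetical entities (integer ids) and relation names.
X = np.eye(3)
T1 = [(0, "born_in", 1), (1, "capital_of", 2), (0, "likes", 2)]
T2 = [(0, "birthPlace", 1), (1, "capital", 2), (2, "misc", 0)]
labels = pseudo_labels(X, X)
matches = matching_relations(T1, T2, labels)
new_T1, new_T2 = reconstruct(T1, T2, matches)
```

In the toy example, the triples with relations `likes` and `misc` are dropped because no relation in the other graph connects the corresponding pseudo-aligned entities, which is the kind of pre-processing filter the module description suggests.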

4.4. Contrastive Learning
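As a rough illustration of how a contrastive objective removes the need for alignment seeds, the following sketch computes an InfoNCE-style loss [16] between query-encoder and key-encoder outputs. It assumes that row `i` of both matrices describes the same entity (the positive pair) and that all other rows in the batch act as negatives; the function name `info_nce` and the temperature value are hypothetical choices, not settings reported here.

```python
import numpy as np

def info_nce(Q, K, tau=0.08):
    """InfoNCE loss with in-batch negatives (assumed setup).

    Q: (n, d) query-encoder outputs, K: (n, d) key-encoder outputs.
    Row i of Q and row i of K form the positive pair.
    """
    Q = Q / np.linalg.norm(Q, axis=1, keepdims=True)
    K = K / np.linalg.norm(K, axis=1, keepdims=True)
    logits = (Q @ K.T) / tau
    # Subtract the row maximum for numerical stability before the log-softmax.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Positives sit on the diagonal of the similarity matrix.
    return float(-np.mean(np.diag(log_prob)))
```

The loss is small when each query is closest to its own key and grows when queries are closer to keys of other entities, so minimizing it pulls the two views of an entity together without any labeled pairs.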

4.5. Alignment with Consistency Similarity

5. Experiments

5.1. Experiment Settings

- DBP-15K [18] is one of the most widely used datasets in the literature. It consists of three cross-lingual subsets derived from multilingual DBpedia: Chinese–English, Japanese–English, and French–English. Each subset contains 15,000 aligned entity pairs but varies in the number of relation triples.

- WK31-15K [45] is designed to evaluate model performance on sparse and dense datasets. It comprises four subsets, two English–German and two English–French, each in a V1 and a V2 version. The V1 subsets are sparse graphs obtained using the IDS algorithm, while the V2 subsets are approximately twice as dense as the corresponding V1 subsets.

- DWY-100K [21] is a large-scale dataset suitable for evaluating model scalability. It includes two monolingual subsets: DBpedia–Wikidata (DBP-WD) and DBpedia–YAGO3 (DBP-YG). Each subset contains 100,000 aligned entity pairs and nearly one million triples.

- Supervised methods with auxiliary information: These methods use both the relation structure and auxiliary information (e.g., attribute information or images). JAPE [18], GCN-Align [48], MRAEA [27], AttrGNN [12], MHNA [13], and SDEA [33] use attribute or descriptive information, whereas MMEA-cat [49] and GEEA [31] use image information.

- Unsupervised methods: These methods use no training data, although some rely on auxiliary information: attribute information (MultiKE [20], AttrE [19], ICLEA [36], UDCEA [41]), descriptive information (ICLEA [36]), or images (EVA [37]). SEU [40] and SelfKG [39] use only the original relation structures.
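The result tables report Hits@1, Hits@10, and MRR as percentages. A minimal sketch of how these ranking metrics are commonly computed from a similarity matrix is given below; the function name `ranking_metrics` and the convention that `gold[i]` holds the index of the true counterpart of source entity `i` are assumptions for illustration.

```python
import numpy as np

def ranking_metrics(S, gold, ks=(1, 10)):
    """Hits@k and MRR (in percent) from a similarity matrix.

    S[i, j]: similarity between source entity i and target entity j.
    gold[i]: index of the true counterpart of source entity i.
    """
    S = np.asarray(S, dtype=float)
    gold = np.asarray(gold)
    gold_scores = S[np.arange(len(gold)), gold]
    # Rank = 1 + number of candidates scored strictly higher than the gold one.
    ranks = 1 + (S > gold_scores[:, None]).sum(axis=1)
    metrics = {f"Hits@{k}": 100.0 * float(np.mean(ranks <= k)) for k in ks}
    metrics["MRR"] = 100.0 * float(np.mean(1.0 / ranks))
    return metrics

# Toy example: three source entities, three candidates each.
S = [[0.9, 0.1, 0.0],
     [0.2, 0.3, 0.5],
     [0.1, 0.8, 0.4]]
result = ranking_metrics(S, gold=[0, 1, 2], ks=(1, 2))
```

In the toy example the gold ranks are 1, 2, and 2, so Hits@1 is one third of the entities and MRR is the mean of 1, 0.5, and 0.5.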

5.2. Overall Results on DBP-15K and WK31-15K

5.3. Overall Results on DWY-100K

5.4. Ablation Experiments

- w/o ECE+RRS: The modules for entity-context embedding and reconstruction of relation structure were removed;

- w/o ECE: The module for entity-context embedding was removed;

- w Cosine: The similarity function based on consistency was replaced with the cosine function;

- w GAT: The LCAT model was replaced with a simple GAT model;

- w GCN+GAT: The LCAT model was replaced with a stacked network of GCN+GAT.
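For reference, the cosine baseline used in the "w Cosine" variant can be sketched as below: embeddings are L2-normalized, the similarity matrix is their inner product, and each source entity is matched to its highest-scoring target. The function names and the greedy nearest-neighbor matching rule are assumptions for illustration; the consistency-based similarity function itself is not reproduced here.

```python
import numpy as np

def cosine_similarity_matrix(E1, E2, eps=1e-12):
    """Pairwise cosine similarity between rows of E1 and rows of E2."""
    E1 = np.asarray(E1, dtype=float)
    E2 = np.asarray(E2, dtype=float)
    # Guard against zero-norm rows with a small floor on the norm.
    E1n = E1 / np.maximum(np.linalg.norm(E1, axis=1, keepdims=True), eps)
    E2n = E2 / np.maximum(np.linalg.norm(E2, axis=1, keepdims=True), eps)
    return E1n @ E2n.T

def align_by_nearest_neighbor(S):
    """Greedy alignment: pick the most similar target for each source entity."""
    return S.argmax(axis=1)

# Toy example with two entities per graph.
S = cosine_similarity_matrix([[1.0, 0.0], [0.0, 2.0]],
                             [[0.0, 3.0], [5.0, 0.0]])
pairs = align_by_nearest_neighbor(S)
```

Because cosine similarity ignores vector length, the second source entity (norm 2) and the first target entity (norm 3) still receive a similarity of 1.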

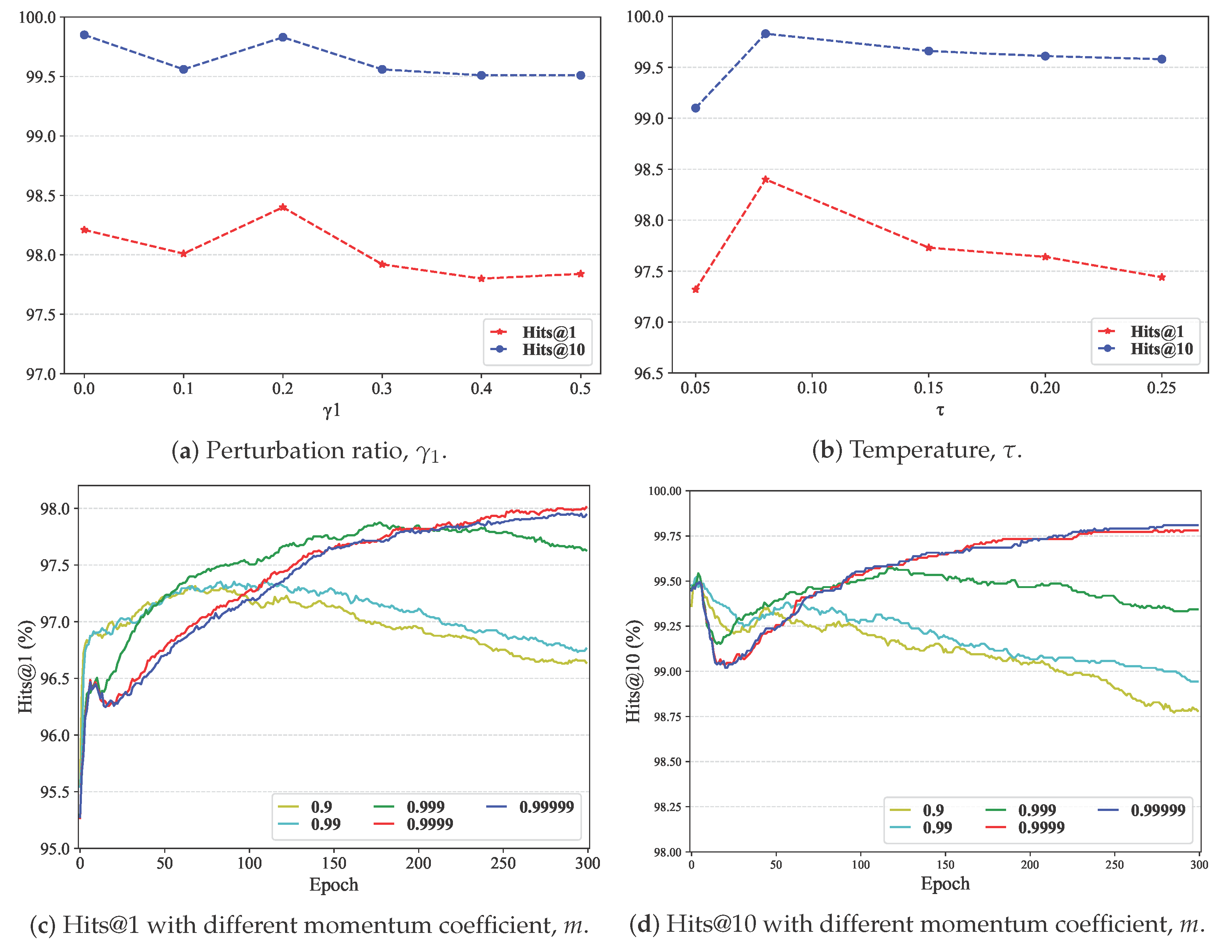

5.5. Additional Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

1. Zhao, J.; Zhang, P.; Wang, Y.; Xin, R.; Lu, X.; Li, R.; Lyu, S.; Ou, Z.; Song, M. TQAgent: Enhancing Table-Based Question Answering with Knowledge Graphs and Tree-Structured Reasoning. Appl. Sci. 2025, 15, 3788.

2. Chen, F.; Yin, G.; Dong, Y.; Li, G.; Zhang, W. KHGCN: Knowledge-Enhanced Recommendation with Hierarchical Graph Capsule Network. Entropy 2023, 25, 697.

3. Xia, Y.; Luo, J.; Zhou, G.; Lan, M.; Chen, X.; Chen, J. DT4KGR: Decision Transformer for Fast and Effective Multi-Hop Reasoning over Knowledge Graphs. Inf. Process. Manag. 2024, 61, 103648.

4. Li, M.; Qiao, Y.; Lee, B. Multi-View Intrusion Detection Framework Using Deep Learning and Knowledge Graphs. Information 2025, 16, 377.

5. Bordes, A.; Usunier, N.; García-Durán, A.; Weston, J.; Yakhnenko, O. Translating Embeddings for Modeling Multi-relational Data. In Proceedings of the 26th International Conference on Neural Information Processing Systems (NeurIPS), Lake Tahoe, NV, USA, 5–8 December 2013; pp. 2787–2795.

6. Kipf, T.N.; Welling, M. Semi-supervised Classification with Graph Convolutional Networks. In Proceedings of the 5th International Conference on Learning Representations (ICLR), Toulon, France, 24–26 April 2017; pp. 1–14.

7. Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph Attention Networks. In Proceedings of the 6th International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018; pp. 1–12.

8. Cai, W.; Ma, W.; Zhan, J.; Jiang, Y. Entity Alignment with Reliable Path Reasoning and Relation-aware Heterogeneous Graph Transformer. In Proceedings of the 31st International Joint Conference on Artificial Intelligence (IJCAI), Vienna, Austria, 23–29 July 2022; Volume 3, pp. 1930–1937.

9. Cai, W.; Ma, W.; Wei, L.; Jiang, Y. Semi-supervised Entity Alignment via Relation-based Adaptive Neighborhood Matching. IEEE Trans. Knowl. Data Eng. (TKDE) 2023, 35, 8545–8558.

10. Tang, W.; Su, F.; Sun, H.; Qi, Q.; Wang, J.; Tao, S.; Yang, H. Weakly Supervised Entity Alignment with Positional Inspiration. In Proceedings of the 16th ACM International Conference on Web Search and Data Mining, Singapore, 27 February–3 March 2023; pp. 814–822.

11. Yang, H.W.; Zou, Y.; Shi, P.; Lu, W.; Lin, J.; Sun, X. Aligning Cross-Lingual Entities with Multi-Aspect Information. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 4430–4440.

12. Liu, Z.; Cao, Y.; Pan, L.; Li, J.; Liu, Z.; Chua, T.S. Exploring and Evaluating Attributes, Values, and Structures for Entity Alignment. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 6355–6364.

13. Cai, W.; Wang, Y.; Mao, S.; Zhan, J.; Jiang, Y. Multi-heterogeneous Neighborhood-aware for Knowledge Graphs Alignment. Inf. Process. Manag. 2022, 59, 102790.

14. Zhu, B.; Bao, T.; Han, R.; Cui, H.; Han, J.; Liu, L.; Peng, T. An Effective Knowledge Graph Entity Alignment Model based on Multiple Information. Neural Netw. 2023, 162, 83–98.

15. Javaloy, A.; Martin, P.S.; Levi, A.; Valera, I. Learnable Graph Convolutional Attention Networks. In Proceedings of the 11th International Conference on Learning Representations (ICLR), Kigali, Rwanda, 1–5 May 2023; pp. 1–35.

16. Oord, A.v.d.; Li, Y.; Vinyals, O. Representation Learning with Contrastive Predictive Coding. arXiv 2018, arXiv:1807.03748.

17. Chen, M.; Tian, Y.; Yang, M.; Zaniolo, C. Multilingual Knowledge Graph Embeddings for Cross-lingual Knowledge Alignment. In Proceedings of the 26th International Joint Conference on Artificial Intelligence (IJCAI), Melbourne, Australia, 19–25 August 2017; pp. 1511–1517.

18. Sun, Z.; Hu, W.; Li, C. Cross-Lingual Entity Alignment via Joint Attribute-Preserving Embedding. In Proceedings of the 17th International Semantic Web Conference (ISWC), Vienna, Austria, 21–25 October 2017; pp. 628–644.

19. Trisedya, B.D.; Qi, J.; Zhang, R. Entity Alignment between Knowledge Graphs Using Attribute Embeddings. In Proceedings of the 33rd AAAI Conference on Artificial Intelligence (AAAI), Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 297–304.

20. Zhang, Q.; Sun, Z.; Hu, W.; Chen, M.; Guo, L.; Qu, Y. Multi-view Knowledge Graph Embedding for Entity Alignment. In Proceedings of the 28th International Joint Conference on Artificial Intelligence (IJCAI), Macao, China, 10–16 August 2019; pp. 5429–5435.

21. Sun, Z.; Hu, W.; Zhang, Q.; Qu, Y. Bootstrapping Entity Alignment with Knowledge Graph Embedding. In Proceedings of the 27th International Joint Conference on Artificial Intelligence (IJCAI), Stockholm, Sweden, 13–19 July 2018; pp. 4396–4402.

22. Zhu, Q.; Zhou, X.; Wu, J.; Tan, J.; Guo, L. Neighborhood-Aware Attentional Representation for Multilingual Knowledge Graphs. In Proceedings of the 28th International Joint Conference on Artificial Intelligence (IJCAI), Macao, China, 10–16 August 2019; pp. 1943–1949.

23. Wu, Y.; Liu, X.; Feng, Y.; Wang, Z.; Yan, R.; Zhao, D. Relation-Aware Entity Alignment for Heterogeneous Knowledge Graphs. In Proceedings of the 28th International Joint Conference on Artificial Intelligence (IJCAI), Macao, China, 10–16 August 2019; pp. 5278–5284.

24. Sun, Z.; Wang, C.; Hu, W.; Chen, M.; Dai, J.; Zhang, W.; Qu, Y. Knowledge Graph Alignment Network with Gated Multi-Hop Neighborhood Aggregation. In Proceedings of the 34th AAAI Conference on Artificial Intelligence (AAAI), New York, NY, USA, 7–12 February 2020; Volume 34, pp. 222–229.

25. Huang, Z.; Li, X.; Ye, Y.; Zhang, B.; Xu, G.; Gan, W. Multi-view Knowledge Graph Fusion via Knowledge-aware Attentional Graph Neural Network. Appl. Intell. 2023, 53, 3652–3671.

26. Mao, X.; Wang, W.; Wu, Y.; Lan, M. Boosting the Speed of Entity Alignment 10×: Dual Attention Matching Network with Normalized Hard Sample Mining. In Proceedings of the World Wide Web Conference (WWW), Ljubljana, Slovenia, 19–23 April 2021; pp. 821–832.

27. Mao, X.; Wang, W.; Xu, H.; Lan, M.; Wu, Y. MRAEA: An Efficient and Robust Entity Alignment Approach for Cross-lingual Knowledge Graph. In Proceedings of the 13th International Conference on Web Search and Data Mining (WSDM), Houston, TX, USA, 3–7 February 2020; pp. 420–428.

28. Zeng, W.; Zhao, X.; Tang, J.; Fan, C. Reinforced Active Entity Alignment. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management (CIKM), New York, NY, USA, 1–5 November 2021; pp. 2477–2486.

29. Guo, L.; Han, Y.; Zhang, Q.; Chen, H. Deep Reinforcement Learning for Entity Alignment. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2022, Dublin, Ireland, 22–27 May 2022; pp. 2754–2765.

30. Qian, Y.; Pan, L. Variety-Aware GAN and Online Learning Augmented Self-Training Model for Knowledge Graph Entity Alignment. Inf. Process. Manag. 2023, 60, 103472.

31. Guo, L.; Chen, Z.; Chen, J.; Fang, Y.; Zhang, W.; Chen, H. Revisit and Outstrip Entity Alignment: A Perspective of Generative Models. In Proceedings of the 12th International Conference on Learning Representations (ICLR), Vienna, Austria, 7–11 May 2024; pp. 1–18.

32. Sun, Z.; Hu, W.; Wang, C.; Wang, Y.; Qu, Y. Revisiting Embedding-based Entity Alignment: A Robust and Adaptive Method. IEEE Trans. Knowl. Data Eng. 2022, 35, 8461–8475.

33. Zhong, Z.; Zhang, M.; Fan, J.; Dou, C. Semantics Driven Embedding Learning for Effective Entity Alignment. In Proceedings of the IEEE 38th International Conference on Data Engineering (ICDE), Kuala Lumpur, Malaysia, 9–12 May 2022; pp. 2127–2140.

34. Su, F.; Xu, C.; Yang, H.; Chen, Z.; Jing, N. Neural Entity Alignment with Cross-Modal Supervision. Inf. Process. Manag. 2023, 60, 103174.

35. Li, Q.; Ji, C.; Guo, S.; Liang, Z.; Wang, L.; Li, J. Multi-modal Knowledge Graph Transformer Framework for Multi-modal Entity Alignment. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; pp. 987–999.

36. Zeng, K.; Dong, Z.; Hou, L.; Cao, Y.; Hu, M.; Yu, J.; Lv, X.; Cao, L.; Wang, X.; Liu, H.; et al. Interactive Contrastive Learning for Self-supervised Entity Alignment. In Proceedings of the 31st ACM International Conference on Information and Knowledge Management (CIKM), Atlanta, GA, USA, 17–21 October 2022; pp. 2465–2475.

37. Liu, F.; Chen, M.; Roth, D.; Collier, N. Visual Pivoting for (Unsupervised) Entity Alignment. In Proceedings of the 35th AAAI Conference on Artificial Intelligence (AAAI), Online, 2–9 February 2021; Volume 35, pp. 4257–4266.

38. Li, J.; Song, D. Uncertainty-aware Pseudo Label Refinery for Entity Alignment. In Proceedings of the ACM Web Conference 2022, Virtual Event, Lyon, France, 25–29 April 2022; pp. 829–837.

39. Liu, X.; Hong, H.; Wang, X.; Chen, Z.; Kharlamov, E.; Dong, Y.; Tang, J. SelfKG: Self-supervised Entity Alignment in Knowledge Graphs. In Proceedings of the 2022 World Wide Web Conference (WWW), Virtual Event, Lyon, France, 25–29 April 2022; pp. 860–870.

40. Mao, X.; Wang, W.; Wu, Y.; Lan, M. From Alignment to Assignment: Frustratingly Simple Unsupervised Entity Alignment. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online and Punta Cana, Dominican Republic, 7–11 November 2021; pp. 2843–2853.

41. Jiang, C.; Qian, Y.; Chen, L.; Gu, Y.; Xie, X. Unsupervised Deep Cross-Language Entity Alignment. In Proceedings of the Machine Learning and Knowledge Discovery in Databases: Research Track: European Conference (ECML PKDD), Turin, Italy, 18–22 September 2023; pp. 3–19.

42. Wang, C.; Huang, Z.; Wan, Y.; Wei, J.; Zhao, J.; Wang, P. FuAlign: Cross-Lingual Entity Alignment via Multi-view Representation Learning of Fused Knowledge Graphs. Inf. Fusion 2023, 89, 41–52.

43. Feng, F.; Yang, Y.; Cer, D.; Arivazhagan, N.; Wang, W. Language-agnostic BERT Sentence Embedding. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (ACL), Dublin, Ireland, 22–27 May 2022; pp. 878–891.

44. Fountoulakis, K.; Levi, A.; Yang, S.; Baranwal, A.; Jagannath, A. Graph Attention Retrospective. J. Mach. Learn. Res. 2023, 24, 1–52.

45. Sun, Z.; Zhang, Q.; Hu, W.; Wang, C.; Chen, M.; Akrami, F.; Li, C. A Benchmarking Study of Embedding-based Entity Alignment for Knowledge Graphs. In Proceedings of the VLDB Endowment (PVLDB), Tokyo, Japan, 31 August–4 September 2020; pp. 2326–2340.

46. Liu, B.; Lan, T.; Hua, W.; Zuccon, G. Dependency-aware Self-training for Entity Alignment. In Proceedings of the 16th ACM International Conference on Web Search and Data Mining, Singapore, 27 February–3 March 2023; pp. 796–804.

47. Liu, B.; Scells, H.; Hua, W.; Zuccon, G.; Zhao, G.; Zhang, X. Guiding Neural Entity Alignment with Compatibility. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing (EMNLP), Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 491–504.

48. Wang, Z.; Lv, Q.; Lan, X.; Zhang, Y. Cross-lingual Knowledge Graph Alignment via Graph Convolutional Networks. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing (EMNLP), Brussels, Belgium, 31 October–4 November 2018; pp. 349–357.

49. Wang, M.; Shi, Y.; Yang, H.; Zhang, Z.; Lin, Z.; Zheng, Y. Probing the Impacts of Visual Context in Multimodal Entity Alignment. Data Sci. Eng. 2023, 8, 124–134.

50. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

| Notation | Description |
|---|---|
| | Text embedding matrix of entities. |
| | Context embedding matrix of entities. |
| | Output embedding matrix of the textual feature layer. |
| | Output embedding matrix of the LCAT layer. |
| | Output embedding matrix of the query encoder. |
| | Output embedding matrix of the key encoder. |
| ⊕ | Superposition operation. |
| ‖ | Vector concatenation operation. |
| · | Dot product operation. |

| Datasets | Subsets | KGs | Entities | Rel. | Rel. Triples |
|---|---|---|---|---|---|
| DBP-15K | Japanese–English | Japanese | 65,744 | 2043 | 164,373 |
| | | English | 95,680 | 2096 | 233,319 |
| | French–English | French | 66,858 | 1379 | 192,191 |
| | | English | 105,889 | 2209 | 278,590 |
| | Chinese–English | Chinese | 66,469 | 2830 | 153,929 |
| | | English | 98,125 | 2317 | 237,674 |
| WK31-15K | English–German (V1) | English | 15,000 | 215 | 47,676 |
| | | German | 15,000 | 131 | 50,419 |
| | English–German (V2) | English | 15,000 | 169 | 84,867 |
| | | German | 15,000 | 96 | 92,632 |
| | English–French (V1) | English | 15,000 | 267 | 47,334 |
| | | French | 15,000 | 210 | 40,864 |
| | English–French (V2) | English | 15,000 | 193 | 96,318 |
| | | French | 15,000 | 166 | 80,112 |
| DWY-100K | DBP-WD | DBpedia | 100,000 | 330 | 463,294 |
| | | Wikidata | 100,000 | 220 | 448,774 |
| | DBP-YG | DBpedia | 100,000 | 302 | 428,952 |
| | | YAGO3 | 100,000 | 21 | 502,563 |

| Datasets | Chinese–English | | | Japanese–English | | | French–English | | |
|---|---|---|---|---|---|---|---|---|---|
| Models | Hits@1 | Hits@10 | MRR | Hits@1 | Hits@10 | MRR | Hits@1 | Hits@10 | MRR |
| MTransE [17] | 30.8 | 61.4 | 36.4 | 27.9 | 57.5 | 34.9 | 24.4 | 55.6 | 33.5 |
| BootEA [21] | 62.9 | 84.8 | 70.3 | 62.2 | 85.4 | 70.1 | 65.3 | 87.4 | 73.1 |
| RDGCN ‡ [23] | 70.8 | 84.6 | 74.9 | 76.7 | 89.5 | 81.2 | 88.6 | 95.7 | 90.8 |
| AliNet [24] | 53.9 | 82.6 | 62.8 | 54.9 | 83.1 | 64.5 | 55.2 | 85.2 | 65.7 |
| EMEA * [47] | 78.2 | 93.3 | 84.2 | 77.1 | 95.0 | 83.7 | 80.1 | 96.6 | 86.3 |
| RPR-RHGT ‡ [8] | 69.3 | 86.9 | 75.4 | 88.6 | 95.5 | 91.2 | 88.9 | 97.0 | 91.0 |
| PEEA *† [10] | 76.1 | 91.5 | 81.6 | 77.2 | 92.5 | 82.1 | 80.6 | 94.5 | 85.8 |
| RANM ‡ [9] | 77.6 | 88.1 | 81.3 | 90.5 | 95.2 | 92.3 | 90.9 | 95.8 | 92.7 |
| KAGNN ‡ [25] | 73.6 | 87.3 | 78.6 | 79.4 | 91.1 | 83.7 | 92.0 | 97.6 | 94.1 |
| JAPE [18] | 41.2 | 74.5 | 49.0 | 36.3 | 68.5 | 47.6 | 32.4 | 66.7 | 43.0 |
| GCN-Align [48] | 41.3 | 74.4 | 54.9 | 39.9 | 74.5 | 54.6 | 37.3 | 74.5 | 53.2 |
| MRAEA [27] | 75.7 | 92.9 | 82.7 | 75.7 | 93.3 | 82.6 | 78.0 | 94.8 | 84.9 |
| AttrGNN † [12] | 79.6 | 92.9 | 84.5 | 78.3 | 92.0 | 83.4 | 91.8 | 97.7 | 91.0 |
| MHNA * [13] | 60.3 | 80.5 | 65.7 | 87.6 | 94.4 | 90.3 | 87.8 | 95.0 | 90.5 |
| MMEA-cat ‡ [49] | 62.4 | 84.5 | 70.2 | 64.1 | 86.9 | 72.3 | 72.5 | 91.4 | 79.3 |
| GEEA ‡ [31] | 76.1 | 94.6 | 82.7 | 75.5 | 95.3 | 82.7 | 77.6 | 96.2 | 84.4 |
| MultiKE [20] | 43.7 | 51.6 | 46.6 | 57.0 | 64.2 | 59.6 | 71.4 | 76.0 | 73.3 |
| AttrE [19] | 26.3 | 43.6 | 32.2 | 38.1 | 61.5 | 47.5 | 62.3 | 79.3 | 68.6 |
| ICLEA † [36] | 80.4 | 91.4 | - | 87.3 | 93.1 | - | 97.3 | 99.5 | - |
| EVA ‡ [37] | 75.2 | 89.5 | 80.4 | 73.7 | 89.0 | 79.1 | 73.1 | 90.9 | 79.2 |
| SEU *† [40] | 80.8 | 92.1 | 85.2 | 87.1 | 94.6 | 89.8 | 97.0 | 99.6 | 98.3 |
| SelfKG *† [39] | 73.8 | 86.0 | 77.1 | 81.5 | 91.3 | 84.9 | 94.2 | 98.8 | 97.2 |
| UDCEA *† [41] | 81.1 | 92.2 | 85.5 | 84.7 | 93.5 | 87.8 | 98.1 | 99.5 | 98.7 |
| LCA-UEA (ours) † | 81.5 | 91.5 | 85.1 | 87.5 | 94.6 | 90.1 | 98.4 | 99.8 | 99.0 |

| Datasets | | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Models | Hits@1 | Hits@10 | MRR | Hits@1 | Hits@10 | MRR | Hits@1 | Hits@10 | MRR | Hits@1 | Hits@10 | MRR |
| MTransE [17] | 24.6 | 56.2 | 35.0 | 24.4 | 52.8 | 34.0 | 30.9 | 61.2 | 40.9 | 19.6 | 43.3 | 27.7 |
| BootEA [21] | 50.3 | 78.6 | 59.7 | 66.1 | 90.8 | 74.7 | 67.1 | 86.6 | 73.7 | 84.9 | 94.5 | 88.3 |
| RDGCN [23] | 75.4 | 87.9 | 79.9 | 84.8 | 93.4 | 88.1 | 82.4 | 91.3 | 85.5 | 84.0 | 90.9 | 86.6 |
| AliNet [24] | 35.8 | 67.1 | 46.4 | 54.2 | 86.0 | 65.6 | 59.3 | 81.3 | 66.4 | 79.8 | 92.3 | 84.4 |
| EMEA * [47] | 63.8 | 91.1 | 73.3 | 85.5 | 98.3 | 90.5 | 75.1 | 94.1 | 81.9 | 87.6 | 98.0 | 94.4 |
| RPR-RHGT [8] | 90.9 | 96.6 | 93.0 | 94.9 | 98.5 | 96.3 | 92.1 | 97.2 | 94.0 | 93.8 | 97.8 | 95.3 |
| STEA [46] | 72.8 | 92.9 | 79.8 | 92.6 | 99.0 | 95.0 | 81.1 | 95.3 | 86.0 | 96.0 | 99.2 | 97.2 |
| PEEA † [10] | 76.6 | 92.0 | 80.4 | 88.9 | 98.2 | 92.5 | 78.7 | 95.4 | 84.5 | 95.7 | 99.0 | 97.0 |
| RANM [9] | 92.5 | 97.0 | 94.1 | 97.0 | 98.4 | 97.7 | 94.9 | 97.8 | 96.2 | 96.6 | 98.0 | 97.5 |
| JAPE [18] | 26.6 | 59.4 | 37.4 | 29.4 | 62.3 | 40.4 | 27.4 | 59.6 | 38.1 | 15.9 | 39.4 | 24.0 |
| GCN-Align [48] | 33.4 | 66.9 | 44.6 | 41.8 | 80.1 | 54.5 | 48.0 | 75.3 | 57.1 | 54.1 | 78.6 | 62.6 |
| MRAEA * [27] | 40.6 | 72.2 | 51.1 | 78.9 | 96.9 | 85.8 | 53.3 | 78.7 | 62.1 | 75.7 | 92.2 | 81.6 |
| MHNA * [13] | 92.9 | 96.4 | 94.5 | 96.1 | 98.4 | 97.2 | 94.1 | 97.4 | 95.5 | 95.7 | 98.2 | 96.9 |
| SDEA *† [33] | 97.1 | 98.9 | 97.8 | 97.6 | 99.2 | 98.1 | 97.2 | 99.0 | 97.9 | 97.7 | 99.4 | 98.3 |
| MultiKE [20] | 74.2 | 83.6 | 77.6 | 86.1 | 92.3 | 88.4 | 75.3 | 82.9 | 78.1 | 75.7 | 83.7 | 78.6 |
| AttrE [19] | 48.9 | 73.7 | 57.6 | 53.2 | 80.0 | 62.7 | 53.6 | 75.8 | 61.4 | 64.3 | 85.6 | 71.9 |
| SEU *† [40] | 97.5 | 99.3 | 98.6 | 95.1 | 99.3 | 96.5 | 97.2 | 99.0 | 97.9 | 95.4 | 97.9 | 96.3 |
| SelfKG *† [39] | 97.0 | 99.4 | 97.9 | 97.1 | 99.5 | 98.0 | 96.7 | 99.0 | 97.5 | 96.2 | 98.8 | 97.1 |
| UDCEA *† [41] | 97.6 | 99.4 | 98.2 | 97.8 | 99.1 | 98.2 | 96.6 | 98.6 | 97.4 | 94.8 | 98.0 | 96.0 |
| LCA-UEA (ours) † | 98.6 | 99.8 | 99.1 | 98.8 | 99.7 | 99.2 | 97.7 | 99.4 | 98.3 | 96.8 | 98.8 | 97.5 |

| Datasets | DBP-WD | | | DBP-YG | | |
|---|---|---|---|---|---|---|
| Models | Hits@1 | Hits@10 | MRR | Hits@1 | Hits@10 | MRR |
| MTransE [17] | 28.1 | 52.0 | 36.3 | 25.2 | 49.3 | 33.4 |
| BootEA [21] | 74.8 | 89.8 | 80.1 | 76.1 | 89.4 | 80.8 |
| AliNet [24] | 69.0 | 90.8 | 76.6 | 78.6 | 94.3 | 84.1 |
| EMEA * [47] | 83.6 | 95.2 | 88.9 | 86.2 | 97.3 | 90.4 |
| RPR-RHGT [8] | 99.2 | 99.8 | 99.5 | 96.5 | 98.8 | 97.4 |
| STEA * [46] | 90.6 | 97.8 | 93.2 | 89.3 | 96.5 | 91.9 |
| RANM [9] | 99.3 | 99.8 | 99.5 | 97.2 | 99.4 | 98.0 |
| JAPE [18] | 31.8 | 58.9 | 41.1 | 23.6 | 48.4 | 32.0 |
| GCN-Align [48] | 50.6 | 77.2 | 57.7 | 59.7 | 83.8 | 68.6 |
| MRAEA [27] | 65.5 | 88.6 | 73.4 | 77.5 | 94.2 | 83.4 |
| AttrGNN † [12] | 96.0 | 98.8 | 97.2 | 99.8 | 99.9 | 99.9 |
| MHNA * [13] | 99.3 | 99.9 | 99.4 | 99.9 | 100.0 | 100.0 |
| MultiKE [20] | 91.8 | 96.2 | 93.5 | 88.0 | 95.3 | 90.6 |
| SEU *† [40] | 95.7 | 99.4 | 97.2 | 99.9 | 100.0 | 99.9 |
| SelfKG *† [39] | 98.0 | 99.8 | 98.9 | 99.8 | 100.0 | 99.9 |
| LCA-UEA (ours) † | 98.3 | 99.8 | 98.9 | 100.0 | 100.0 | 100.0 |

| Datasets | Chinese–English | | | Japanese–English | | | French–English | | |
|---|---|---|---|---|---|---|---|---|---|
| Models | Hits@1 | Hits@10 | MRR | Hits@1 | Hits@10 | MRR | Hits@1 | Hits@10 | MRR |
| w/o ECE+RRS | 79.4 | 89.8 | 83.1 | 85.6 | 93.3 | 88.4 | 98.1 | 99.6 | 98.7 |
| w/o ECE | 79.6 | 89.6 | 83.2 | 86.0 | 93.5 | 88.7 | 98.2 | 99.7 | 98.8 |
| w Cosine | 77.0 | 89.1 | 81.4 | 83.8 | 93.3 | 87.3 | 96.2 | 99.6 | 97.6 |
| w GAT | 74.3 | 90.2 | 80.1 | 81.6 | 93.7 | 86.1 | 97.7 | 99.6 | 98.5 |
| w GCN+GAT | 76.5 | 91.8 | 82.2 | 87.0 | 95.6 | 90.2 | 97.8 | 99.9 | 98.8 |
| LCA-UEA (ours) | 81.5 | 91.5 | 85.1 | 87.5 | 94.6 | 90.1 | 98.4 | 99.8 | 99.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cai, W.; Ma, W. Learnable Convolutional Attention Network for Unsupervised Knowledge Graph Entity Alignment. Entropy 2025, 27, 924. https://doi.org/10.3390/e27090924
