- Article

Artificial Intelligence-Based Models for Predicting Disease Course Risk Using Patient Data

Rafiqul Chowdhury, Wasimul Bari, Minhajur Rahman, and 2 other authors

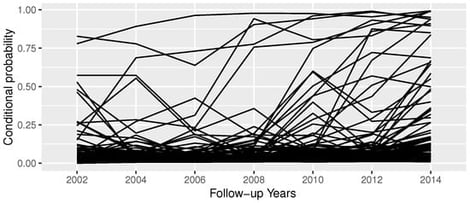

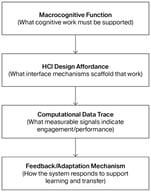

Longitudinal data are now common; they are typically large, high-dimensional, and complex, collected by various methods, and include repeated outcomes. For example, the growing elderly population experiences health deterioration, including limitations in Instrumental Activities of Daily Living (IADLs), which increases demand for long-term care. Understanding the risk of repeated IADL limitations, and estimating the risk trajectory by identifying significant predictors, can support effective care planning. Analyzing such data requires a complex modeling framework. We illustrate a regressive modeling framework that applies statistical and machine learning (ML) models to the Health and Retirement Study data to predict the trajectory of IADL risk as a function of predictors. The regressive logistic regression (RLR) and Decision Tree (DT) models showed the highest prediction accuracy: 0.90 to 0.93 for follow-ups 1–6 and, for follow-up 7, 0.89 (RLR) and 0.90 (DT). The Receiver Operating Characteristic (ROC) curves and the Area Under the Curve (AUC) showed similar findings. Depression score, mobility score, large muscle score, and Difficulties of Activities of Daily Living (ADLs) score were significantly and positively associated with IADLs (p < 0.05). The proposed modeling framework simplifies the analysis and risk prediction of repeated outcomes from complex datasets and could be automated by leveraging Artificial Intelligence (AI).
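To make the regressive idea concrete, the following is a minimal sketch, not the authors' implementation. It assumes that "regressive" means each follow-up outcome is modeled conditionally on the predictors and on all earlier outcomes, so that the probability of a whole trajectory is the product of those conditional probabilities. The data are synthetic (not the Health and Retirement Study), the single predictor stands in for something like a standardized mobility score, and every function name here is hypothetical.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(w, xi):
    # w = [intercept, coef_1, ..., coef_p]; xi = feature list of length p.
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], xi)))


def fit_logistic(X, y, lr=0.1, epochs=300):
    """Plain batch gradient descent on the logistic log-loss."""
    n, p = len(X), len(X[0])
    w = [0.0] * (p + 1)
    for _ in range(epochs):
        grad = [0.0] * (p + 1)
        for xi, yi in zip(X, y):
            err = predict_proba(w, xi) - yi
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        w = [wj - lr * gj / n for wj, gj in zip(w, grad)]
    return w


def simulate(n=400, waves=3, seed=0):
    """Synthetic repeated binary outcomes (assumption: NOT the HRS data)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)  # hypothetical standardized predictor
        ys, prev = [], 0
        for _ in range(waves):
            # Each outcome depends on x and on the previous outcome.
            p = sigmoid(-1.5 + 1.0 * x + 2.0 * prev)
            prev = 1 if rng.random() < p else 0
            ys.append(prev)
        rows.append((x, ys))
    return rows


def fit_regressive(rows, waves):
    """One model per follow-up: outcome j on x plus all earlier outcomes."""
    models = []
    for j in range(waves):
        X = [[x] + ys[:j] for x, ys in rows]
        y = [ys[j] for _, ys in rows]
        models.append(fit_logistic(X, y))
    return models


def trajectory_probability(models, x, path):
    """Joint probability of an outcome path as a product of conditionals."""
    prob = 1.0
    for j, (w, yj) in enumerate(zip(models, path)):
        pj = predict_proba(w, [x] + list(path[:j]))
        prob *= pj if yj == 1 else 1.0 - pj
    return prob


rows = simulate()
models = fit_regressive(rows, waves=3)
```

With the fitted models, `trajectory_probability(models, x=1.0, path=[0, 1, 1])` gives the estimated risk of that particular sequence of outcomes for a person with predictor value 1.0. Because the joint probability is built from conditional models, the probabilities of all possible paths sum to one for any predictor value.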

6 February 2026