Abstract

This paper presents a novel system for the automated monitoring and maintenance of gravel runways in remote airports, particularly in Northern Canada, using Unmanned Aerial Vehicles (UAVs) and computer vision technologies. Due to the geographic isolation and harsh weather conditions, these airports face unique challenges in runway maintenance. Our approach integrates advanced deep learning algorithms and UAV technology to provide a cost-effective, efficient, and accurate means of detecting runway defects, such as water pooling, vegetation encroachment, and surface irregularities. We developed a hybrid approach combining the vision transformer model with image filtering and thresholding algorithms, applied on high-resolution UAV imagery. This system not only identifies various types of defects but also evaluates runway smoothness, contributing significantly to the safety and reliability of air transport in these areas. Our experiments, conducted across multiple remote airports, demonstrate the effectiveness of our approach in real-world scenarios, offering significant improvements over traditional manual inspection methods.

1. Introduction

In Northern Canada’s vast, remote landscapes, air transportation serves as a lifeline, connecting isolated communities and facilitating essential services. Gravel runways at remote airports are fundamental to this transportation network, with 94 out of Canada’s 117 remote airports relying on gravel runways as their primary landing strips [1]. Ensuring the safety and integrity of these runways is vital to the reliability of air transportation in the region.

Monitoring and maintaining the condition of gravel runways presents unique challenges due to their remote locations and harsh environmental conditions. Traditionally, gravel runways are inspected and maintained through manual, periodic inspections [2]. Yet these inspections are often infrequent and labor-intensive, requiring specialized teams to travel to remote locations at significant expense. Furthermore, manual inspections are constrained by weather conditions and accessibility, hindering the timely detection and repair of runway defects. However, with the introduction of systems like DJI Dock 2, these inspections can now be conducted remotely, even during brief operational windows, enhancing our ability to respond promptly.

Several studies have explored automation to address the difficulties of monitoring runways manually. Various approaches to gathering and handling datasets have been used to develop systems that assist in the automated monitoring of asphalt and concrete runways. For example, Zhai et al. proposed an automatic segmentation and enhancement method for airport pavements based on 3D images [3]. Ambalam et al. developed an automated object detection framework based on YOLOv5 that integrates Unmanned Aerial Vehicles (UAVs) into surveillance operations to precisely identify Foreign Object Debris (FOD) on airport runways [4].

The automation and integration of AI-assisted UAV operation is a new trend [5]. However, to the best of our knowledge, there has not been any work to automate the identification of issues with gravel runways at remote airports, primarily due to the harsh climate conditions and the expense of collecting data. We design and implement an automated system using UAV imagery and computer vision methods to overcome these challenges and enhance maintenance efficiency for gravel runways at remote airports. UAVs have significant advantages in periodically collecting image data of remote airports, including reaching otherwise inaccessible areas, resilience to harsh conditions, and cost-effectiveness. The project begins by collecting baseline datasets from remote airports using drones with advanced sensor payloads. These drones capture high-resolution imagery, ensuring precision and depth in data acquisition.

Meanwhile, deep learning algorithms in computer vision have recently made significant advancements. They are ideal tools to replace manual inspection processes [6,7,8,9]. The vision transformer algorithm has been trained on image data to analyze UAV images and thoroughly detect potential runway defects, such as surface water pooling and vegetation close to the runway [10]. Throughout this process, it was observed that detecting specific defects on the runway, such as loss of material, segregation, and rutting, poses significant challenges due to the complexity of categorization. While deep learning methods are powerful, they often require a substantial labeled training dataset for model development. However, in the context of operational airports, it is nearly impossible to obtain many images showing runways with various evident defects and label them in bulk. To address this limitation, our approach involves evaluating the smoothness of airport runways using image filtering and thresholding algorithms with high-resolution UAV imagery.

The following are the main contributions of this paper.

- Approach: We have developed a hybrid approach combining the vision transformer model, image filtering, and thresholding algorithms with high-resolution UAV imagery to accurately evaluate gravel runways’ conditions. Our system can effectively detect and segment areas with defects such as surface water pooling and vegetation, generating comprehensive evaluation reports that include numerical data and visual representations.

- Experimentation: To facilitate our experimentation, we collect diverse high-resolution aerial images of airport gravel runways from multiple airports, utilizing drones as our primary data acquisition tool. This dataset is a crucial asset, forming the backbone of our model’s training and testing phases. With this extensive dataset at our disposal, we can thoroughly evaluate and validate the performance of our model across a wide range of real-world airport environments, ensuring its robustness and effectiveness.

- Evaluation: Our approach’s overall model performance involves assessing its effectiveness in detecting airport gravel runway defects and evaluating the runway’s smoothness. Here are some key details about the overall model performance:

  - Accuracy in defect detection: this involves evaluating the model’s ability to identify and classify these defects correctly.

  - Intersection over Union (IoU) metrics: we use IoU metrics to measure the overlap between the model’s predicted and ground truth defect regions.

  - Precision and recall: we assess the precision and recall of the model to evaluate its ability to minimize false positives and false negatives, respectively, and also to determine the overall efficiency of the model.

The paper is structured as follows: Section 2 reviews the existing literature. Section 3 describes the core problem to be solved and breaks it down into four key subproblems. Section 4 describes the sliding window technique used to run the detection model directly on orthogonal images. Sections 5 and 6 discuss the detection and smoothness evaluation algorithms used in our research. We finish by analyzing and summarizing our experimental results in Sections 7 and 8.

2. Related Works on Airport Runway Defects Detection

We conducted a literature review to provide an in-depth discussion of the progress achieved in airport runway defect detection, particularly in the context of the growing applications of UAV technology and image segmentation algorithms.

2.1. Ground-Based Imagery

Deep learning offers essential techniques for detecting runway defects, including convolutional neural networks (CNNs), recurrent neural networks (RNNs), and deep belief networks (DBNs). Guo et al. proposed an Airport Road Surface Intelligent Inspection System. Employing computer vision and deep learning, the system utilizes MobileNet-SSD and Mask R-CNN for target detection and semantic segmentation of airport pavement anomalies [11]. Nhat-Duc et al. developed an intelligent model combining image processing and machine learning algorithms to automatically detect and classify asphalt pavement cracks [12]. The model utilizes multiclass support vector machines and artificial bee colony optimization to classify pavement cracks, with features derived from image projective integral analysis, enhancing prediction performance, with classification accuracy exceeding 96%.

Li et al. contributed to the field with an algorithm designed for the crack segmentation of airport runway pavement [13]. Their deep learning-based approach employs an encoder–decoder network structure, integrating VGG19 for feature extraction. The model introduces spatial pyramid pooling and multiloss supervision, enhancing crack segmentation capabilities, particularly on complex background airport pavements.

In exploring airport pavement damage detection, Zhang et al. proposed an automatic segmentation algorithm, AM-Mask R-CNN. Addressing challenges in small target areas and low light conditions, the algorithm integrates attention mechanisms [14]. The experimental results highlight the model’s effectiveness, with a high average F1-score of 0.9489, a mean Intersection over Union (mIoU) of 0.9388, and an average segmentation speed of 11.8 FPS.

The datasets used in these studies are collected from ground-based devices or sensors, such as vehicle-mounted cameras, laser sensors, or cameras carried by pavement inspection robots, rather than aerial imagery obtained from Unmanned Aerial Vehicles (UAVs) or satellites.

2.2. UAVs Integrated Computer Vision Methods for Pavement Inspection

In recent years, there has been a surge in studies focused on the automation of pavement crack detection and evaluation, driven by the need for efficient road maintenance and safety improvement. Much of this research is dedicated to utilizing Unmanned Aerial Vehicles (UAVs) and advanced image segmentation algorithms. The introduction of UAV technology has revolutionized the field of runway defect detection, providing a more comprehensive and timely approach to assessing road conditions [15].

The integration of Unmanned Aerial Vehicles (UAVs) and deep learning networks has emerged as a potent approach in the domain of highway crack detection [16]. UAVs with deep learning models, such as YOLOv4 and YOLOv5, have demonstrated their potential to automate this critical task. These systems utilize various image preprocessing techniques to enhance dataset quality, ultimately improving deep learning algorithms’ detection performance and generalization ability.

In the quest for timely and effective road crack detection, a pixel-level approach based on ARD-Unet has been proposed, combining U-Net with innovative techniques [17]. This method achieves impressive results using UAV remote sensing images, with a 76.41% mean Intersection over Union (mIoU) and a 74.24% F1-Score on a self-made dataset. Moreover, ARD-Unet is integrated with UAV technology to create a road crack detection UAV Internet of Things (IoT) system, which has demonstrated excellent performance in practical applications.

Conventional methods for monitoring pavement health have been criticized for their inefficiency, time-consuming nature, and destructive impact on pavements. Recent research suggests that deep learning network models offer a potential solution [18]. Low-altitude UAVs, equipped with high-resolution multispectral imaging capabilities, have been employed to collect detailed pavement data. These UAV-captured images are processed to extract and classify pavement defects using a combination of convolutional neural networks (CNNs) and support vector machine (SVM) classifiers. The findings indicate a significant enhancement in the accuracy of asphalt pavement aging and damage detection, addressing the limitations of conventional methods.

Another paper introduces a detailed airport pavement inspection approach, leveraging remote sensing UAV technology and AI to automate distress identification and measurement [19]. It claims significant cost savings (88%) and a substantial increase in data collection speed (1000 times faster) compared to traditional methods, with reproducible results. However, further discussion on potential challenges, such as the initial investment and operational expertise required for UAV usage, regulatory and safety considerations, and potential limitations of AI-based distress detection, would enhance the paper’s completeness and applicability in real-world airport management.

These studies collectively emphasize the growing significance of UAV technology and the integration of advanced algorithms in automating the detection and evaluation of pavement defects.

2.3. Gravel Pavement Surface Inspection

Given the distinct characteristics of our study areas in Northern Canada, where airport runways are predominantly constructed with unsurfaced gravel material, it is essential to understand the unique health conditions associated with gravel pavement surfaces. Unlike asphalt and concrete surfaces, the definitions of gravel pavement surfaces’ health conditions vary significantly. Numerous studies have discussed the conditions and potential defects specific to gravel surfaces.

Unsurfaced gravel roads constitute a significant portion of road networks in Brazil and other countries, necessitating substantial budget allocations for maintenance [20]. However, despite these investments, construction and maintenance efforts often fall short due to a lack of specialized technological knowledge among personnel. Contributing to improved practices, the following paper analyzed distress mechanisms in unsurfaced gravel roads based on soil mechanics. The comprehensive study encompasses laboratory tests, 30-month performance monitoring, and surveys addressing distress evolution and road roughness.

Another study evaluated the performance of the runway and taxiway at Cambridge Bay airport, highlighting the superior ride quality on the taxiway but recommending corrective action for the runway based on International Roughness Index (IRI) and Riding Comfort Index (RCI) values [2]. The lightweight deflectometer (LWD) test revealed stiffness variations along the runway, suggesting potential design considerations for new surface technologies. Condition inspections identified gravel quality issues impacting operational and maintenance costs, emphasizing the need for standardized procedures. The study recommended a hydraulic penetrometer and LWD for California Bearing Ratio (CBR) testing in Arctic airports with financial constraints. These metrics have been used for decades. They are manual measures, highly subjective, and limited to specific devices. We will introduce a more comprehensive metric that is more accurate, adaptable to diverse situations, and incorporates objective data for a reliable evaluation.

In managing vital gravel airstrips crucial for general aviation, emergency evacuations, and forest fire-fighting, the Yukon Department of Highways and Public Works faces unique challenges [21]. Despite existing systems for highways and major airports, a gap exists concerning an inspection and rating system for these low-traffic airstrips. The paper outlines the development of a rating system, focusing on distress monitoring and introducing a general condition index. Emphasizing the distinctive roles of these community airports, the study underscores their specific needs, such as medical evacuations and forest fire-fighting support, necessitating a tailored management approach.

2.4. Research Gap

Our goal is to create a next-generation automated gravel runway inspection system, especially for airports in remote areas. Existing studies on runway inspection have primarily focused on asphalt surfaces, with works such as [13,22,23,24]. Gravel runway inspection still relies mainly on manual methods [2].

Our research adopts a robust approach that combines drones and computer vision to address the inspection challenges of gravel runways. We provide a comprehensive tool for this purpose, which incorporates identifying critical elements like water pooling and vegetation, which are potential runway defects. Additionally, the tool detects irregularities on the runway surface using a bilateral image-filtering technique, a novel application of image filtering in this field. Overall, our research represents the first of its kind, an end-to-end automated system designed to inspect gravel runways.

3. Problem Description

Traditional manual inspections of gravel runways at remote airports in Northern Canada are inadequate due to infrequency, high labor costs, and challenges posed by the harsh environment. While automated monitoring approaches have been explored for asphalt and concrete runways, limited work exists for gravel surfaces at remote sites.

We have learned from Advisory Circular 300-004 [10] issued by Transport Canada that the following common types of defects are observed on gravel runways:

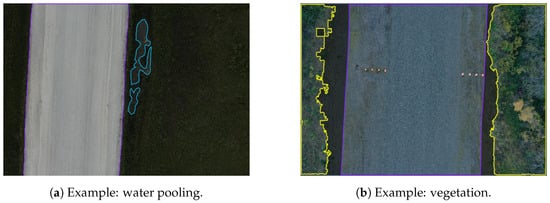

Figure 1. Gravel runway defects example.

- Surface water pooling (as shown in the blue area of Figure 1a): poor drainage around the runway creates a risk of water intrusion into the runway.

- Vegetation (as shown in the yellow area of Figure 1b): vegetation that grows uncontrollably can gradually cover the surface or edges of a gravel runway, potentially caused by inadequate drainage systems or the buildup of organic soils over time [10].

- Smoothness (as shown in the runway area in Figure 1c): in this category, numerous foreign objects, such as large rocks and areas of frost heave (as shown in Figure 1d), are scattered across the runway surface.

The primary objective of this research is to develop a comprehensive automated system specifically designed for remote airports to monitor and detect irregularities and defects in gravel runways, thereby enhancing safety measures and operational efficiency in airport environments. We tested our approach using datasets based on Canadian remote gravel airports.

In conjunction with the existing literature concerning airport runway defect detection, this study aspires to address four pivotal subproblems:

- Characterizing and Classifying Runway Issues: Establishing standardized criteria for characterizing and classifying defects in airport runways to enable the systematic assessment of surface smoothness and structural integrity.

- POI Detection Algorithms: Selecting and utilizing the most efficient deep learning algorithms capable of accurately detecting and segmenting the potential defect areas on the gravel runway.

- Smoothness Evaluation Algorithms: Integrating image filtering and image thresholding technologies with a comprehensive metric to accurately assess the smoothness of gravel runway surfaces.

- Automate Analysis System: Formulating an automated, streamlined approach with the above airport runway inspection and maintenance methods. This system aims to simplify the inspection process, visualize the inspection results, and improve the accuracy and efficiency of airport condition analysis.

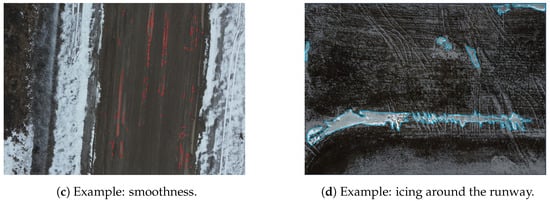

The complete methodology steps are illustrated in the flowchart below (see Figure 2). In the methodology section, we will detail each subproblem, providing solutions and insights derived from our research.

Figure 2. Methodology flowchart.

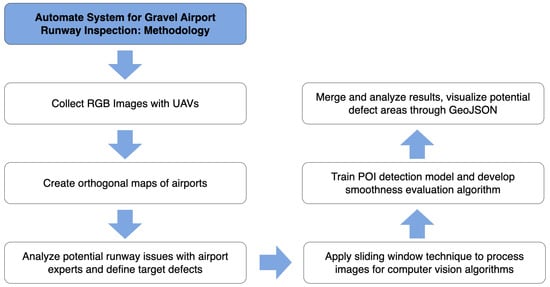

4. Sliding Window Technique

After collecting airport images using UAVs, a stitched large geotagged orthogonal image will be generated using external software (this is not the focus of our study). To run the detection model over the orthogonal image, we apply a sliding window technique, which enables us to load and read large images using computer vision models, perform segmentation, and associate results with real geocoordinates. The process of the sliding window technique is visualized in Figure 3.

Figure 3. Sliding window technique.

We start by iterating through the large orthogonal image using a sliding window, starting from the top left corner and then moving the window horizontally and vertically according to the specified stride. As the window shifts to a new position, it captures a portion of the image within its boundaries. We apply our POI detection and smoothness evaluation methods for each sliced image. Afterward, we combine the results of processing each subregion and assign specific labels and georeferences.
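The iteration described above can be sketched as follows. This is a minimal illustration, not the system's actual implementation: the function names, the choice to add a final window flush with the far border, and the north-up geotransform (`origin_x`, `origin_y`, `pixel_size`) are all assumptions made for the example.

```python
from typing import Iterator, List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def sliding_windows(width: int, height: int, window: int, stride: int) -> Iterator[Box]:
    """Yield window boxes from the top-left corner, row by row."""

    def starts(size: int) -> List[int]:
        if size <= window:
            return [0]
        positions = list(range(0, size - window + 1, stride))
        if positions[-1] != size - window:
            # Assumption: add one extra window so the far edge is covered.
            positions.append(size - window)
        return positions

    for top in starts(height):
        for left in starts(width):
            yield left, top, min(left + window, width), min(top + window, height)


def pixel_to_geo(col: float, row: float, origin_x: float, origin_y: float,
                 pixel_size: float) -> Tuple[float, float]:
    """Map a pixel position to map coordinates for a north-up image (assumed)."""
    return origin_x + col * pixel_size, origin_y - row * pixel_size
```

Each yielded box would be cropped from the orthogonal image, passed to the detection and smoothness steps, and its results shifted by `(left, top)` before conversion with `pixel_to_geo`.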

5. Point of Interest (POI) Detection Algorithms

To ensure the accurate detection of potential defects on the runway, we have explored a wide range of object detection algorithms, including R-CNN [25] and YOLO [26]. However, as object detection only localizes targets coarsely with bounding boxes, we incorporated image segmentation algorithms to identify the targets’ outlines, yielding much better results.

We conducted tests and comparisons on three distinct segmentation algorithms: Mask R-CNN [27], PointRend [28], and Mask2Former [29]. Among these, Mask2Former demonstrated superior performance, outperforming the other two algorithms as discussed later in the paper.

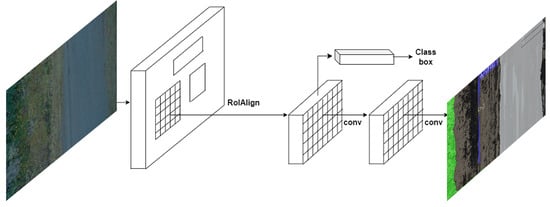

5.1. Mask R-CNN

Mask R-CNN is a computer vision algorithm well known for its excellent image segmentation performance. Developed by the Facebook AI Research team, Mask R-CNN expands upon the Faster R-CNN architecture to detect objects and generate segmentation masks for each instance simultaneously [27].

This is achieved by adding a mask branch (as shown in Figure 4) to each Region of Interest (RoI), which predicts segmentation masks alongside the existing branches for classification and bounding box regression. The mask branch utilizes a small Fully Convolutional Network (FCN) applied to each RoI to predict segmentation masks on a pixel-by-pixel basis. Mask R-CNN is straightforward to implement and train within the Faster R-CNN framework, allowing for flexible architecture designs. Additionally, the mask branch adds only a small computational overhead, and the complete system runs at about 5 fps, which supports rapid experimentation.

Figure 4. Mask R-CNN framework for instance segmentation.

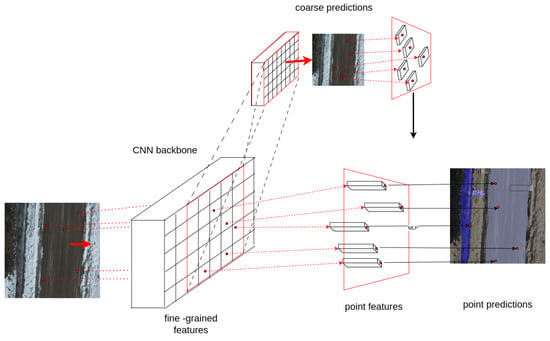

5.2. PointRend

The PointRend algorithm [28] performs point-based segmentation predictions at adaptively selected locations using an iterative subdivision algorithm. At its core, PointRend intelligently selects a subset of points within an initial coarse segmentation map based on the uncertainty of predictions at those locations, often at or near object edges. These points are chosen through an adaptive subdivision process that zeroes in on areas with high prediction entropy. For each selected point, PointRend extracts high-resolution features from multiple layers of the CNN, providing a rich, detailed context for making predictions. These features are then processed by a specialized, lightweight neural network module known as the Point Head, which makes final class predictions for each point. This process may be iterated several times, with each cycle further refining the segmentation by focusing on areas of uncertainty. The outcome is a segmentation map that significantly improves upon the initial output, offering sharper and more accurate object boundaries without a proportional increase in computational demand. PointRend achieves this by efficiently directing computational resources to where they are most needed as shown in Figure 5, optimizing the trade-off between detail enhancement and overall computational efficiency.
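The point selection step can be illustrated with a small sketch. For a binary mask, one common uncertainty measure is how close the predicted foreground probability is to 0.5; the function below uses that measure on a coarse probability map. The function name and the plain nested-list representation are assumptions for illustration, and the feature extraction and Point Head stages are not shown.

```python
from typing import List, Sequence, Tuple


def most_uncertain_points(prob_map: Sequence[Sequence[float]],
                          num_points: int) -> List[Tuple[int, int]]:
    """Return (row, col) of the cells whose probability is closest to 0.5."""
    scored = [
        (abs(p - 0.5), r, c)  # small value = high uncertainty
        for r, row in enumerate(prob_map)
        for c, p in enumerate(row)
    ]
    scored.sort()
    return [(r, c) for _, r, c in scored[:num_points]]


# Coarse 3x3 foreground probabilities: the middle column straddles an object edge.
coarse = [
    [0.02, 0.10, 0.95],
    [0.05, 0.48, 0.90],
    [0.01, 0.60, 0.99],
]
selected = most_uncertain_points(coarse, 2)
```

Only the selected locations would be re-predicted at higher resolution, which is how the method avoids spending computation on confidently classified interior regions.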

Figure 5. PointRend architecture.

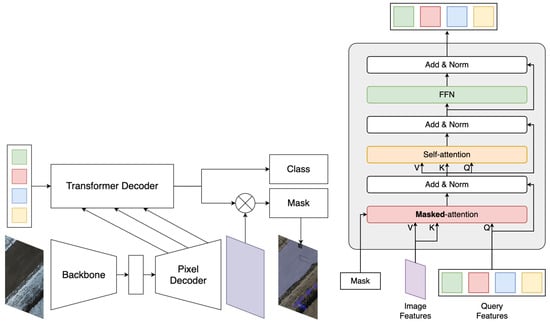

5.3. Mask2Former

Mask2Former is a unified vision transformer architecture developed by researchers at Facebook AI Research (FAIR) and the University of Illinois Urbana-Champaign for universal image segmentation tasks like panoptic, instance, and semantic segmentation [29].

As Figure 6 illustrates, its key innovation is the masked attention mechanism that extracts localized features by constraining cross-attention within predicted mask regions. Unlike earlier architectures that were specialized for each task, Mask2Former reduces research effort by at least three times while outperforming state-of-the-art methods across popular datasets [29]. Our project necessitates high accuracy in segmentation tasks due to the critical nature of airport maintenance. Mask2Former, leveraging the latest vision transformer model and masked attention mechanism, is therefore a strong candidate for our POI detection task.
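To make the masked attention idea concrete, the sketch below computes attention weights for a single query over a flattened set of image locations, restricting the softmax to locations inside the mask predicted by the previous decoder layer. It is a simplified, single-query illustration in plain Python; the function name and the fallback for an empty mask are assumptions of this sketch, and the 0.5 threshold follows the binarization described in [29].

```python
import math
from typing import List, Sequence


def masked_attention_weights(logits: Sequence[float],
                             prev_mask: Sequence[float],
                             threshold: float = 0.5) -> List[float]:
    """Softmax over attention logits, restricted to the previous mask region."""
    keep = [m >= threshold for m in prev_mask]
    if not any(keep):
        # Assumed guard: if the mask is empty, fall back to attending everywhere.
        keep = [True] * len(logits)
    peak = max(l for l, k in zip(logits, keep) if k)  # for numerical stability
    exps = [math.exp(l - peak) if k else 0.0 for l, k in zip(logits, keep)]
    total = sum(exps)
    return [e / total for e in exps]


# Four locations; only the first two lie inside the previously predicted mask.
weights = masked_attention_weights([2.0, 1.0, 0.0, 3.0], [0.9, 0.8, 0.1, 0.2])
```

Locations outside the mask receive exactly zero weight, even when their raw logit is the largest, which is what keeps the extracted features local to the predicted region.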

Figure 6. Mask2Former architecture.

6. Smoothness Evaluation Algorithm

The smoothness of a runway is determined by quantifying the irregularities on the runway surface. This involves detecting these irregularities while distinguishing them from the runway’s normal texture. For this purpose, we chose to use the bilateral filter algorithm. This approach allowed us to identify irregularities by highlighting the differences from the original image while preserving the edges. To refine the results, we used the Ramer–Douglas–Peucker algorithm and morphological operations, which helped retain only the relevant irregularities. In the end, we provided a rating for the runway condition using a sigmoid function on a scale from 1 to 5, where a higher rating indicates a greater need for maintenance. We will discuss the details of each step in the following subsections.

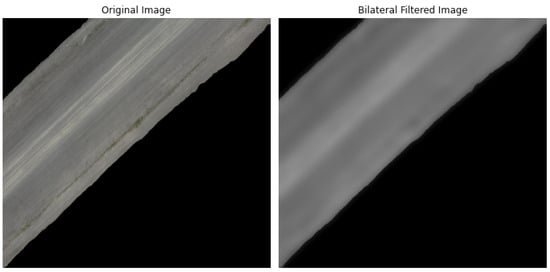

6.1. Bilateral Filter

The bilateral filtering algorithm [30] smooths images while preserving edges using a nonlinear combination of nearby image values. This method considers spatial proximity and photometric similarity and prefers near values to distant values in both domain and range. It does this by adjusting each pixel’s value based on a weighted average of its neighbors, where the weights are determined by the spatial distance and the intensity difference between the center pixel and its surrounding pixels. The key idea of the bilateral filter is that a pixel’s impact on another is determined by its spatial closeness as well as its value similarity.

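The bilateral filter equation [31], denoted by $BF[\cdot]$, is defined as:

$$BF[I]_p = \frac{1}{W_p} \sum_{q \in S} G_{\sigma_s}(\lVert p - q \rVert)\, G_{\sigma_r}(\lvert I_p - I_q \rvert)\, I_q \tag{1}$$

where $S$ is the set of pixel locations and the normalization factor $W_p$ ensures pixel weights sum to 1.0:

$$W_p = \sum_{q \in S} G_{\sigma_s}(\lVert p - q \rVert)\, G_{\sigma_r}(\lvert I_p - I_q \rvert) \tag{2}$$

The parameters $\sigma_s$ and $\sigma_r$ determine the extent of filtering applied to image $I$. Equation (1) defines a normalized weighted average, where $G_{\sigma_s}$ represents a spatial Gaussian weight that diminishes the impact of pixels based on their spatial distance, and $G_{\sigma_r}$ is a range Gaussian that reduces the influence of pixel $q$ when its intensity significantly deviates from that of $I_p$. Figure 7 shows the original runway image and its transformed version after applying the bilateral filter.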
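The normalized weighted average described above can be written out directly. The following brute-force sketch operates on a grayscale image stored as nested lists; it is meant only to illustrate the weighting, and the window `radius` that truncates the neighborhood, the function name, and the parameter values are assumptions for the example. Constant Gaussian prefactors are omitted because they cancel in the normalization.

```python
import math
from typing import List, Sequence


def bilateral_filter(image: Sequence[Sequence[float]], radius: int,
                     sigma_s: float, sigma_r: float) -> List[List[float]]:
    """Brute-force bilateral filter on a 2D grayscale image."""
    height, width = len(image), len(image[0])
    output = [[0.0] * width for _ in range(height)]
    for py in range(height):
        for px in range(width):
            center = image[py][px]
            weighted_sum = 0.0
            weight_total = 0.0  # normalization factor for this pixel
            for qy in range(max(0, py - radius), min(height, py + radius + 1)):
                for qx in range(max(0, px - radius), min(width, px + radius + 1)):
                    value = image[qy][qx]
                    # Spatial Gaussian: nearby pixels count more.
                    spatial = math.exp(-((qy - py) ** 2 + (qx - px) ** 2) / (2 * sigma_s ** 2))
                    # Range Gaussian: similar intensities count more.
                    similarity = math.exp(-((value - center) ** 2) / (2 * sigma_r ** 2))
                    weighted_sum += spatial * similarity * value
                    weight_total += spatial * similarity
            output[py][px] = weighted_sum / weight_total
    return output


# A sharp step edge survives filtering because the range weight across it is tiny.
step = [[10.0, 10.0, 10.0, 200.0, 200.0, 200.0] for _ in range(4)]
filtered = bilateral_filter(step, radius=2, sigma_s=2.0, sigma_r=10.0)
```

Taking the absolute difference between the input and the filtered output then highlights small-scale deviations while large edges, which the filter preserves, contribute little.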

Figure 7. Original and bilateral filtered image.

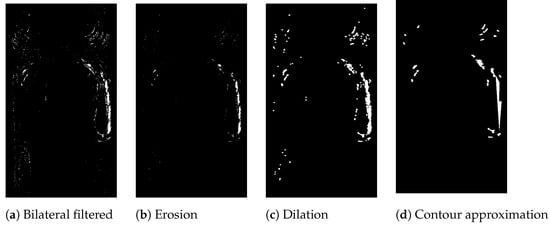

6.2. Morphological Operations

Morphological operations are techniques used to modify the shape and structure of objects within an image. Each operation applies a structuring element (kernel), which acts as a probe that interacts with the input image; the resulting output depends on the relationship between the structuring element and the local pixel configurations in the image. For our research, we utilized erosion and dilation.

Erosion is a morphological operation that shrinks or thins objects in an image. It combines the input image with a structuring element (kernel), and it sets the output pixel value to the minimum value in the neighborhood defined by the structuring element. Figure 8b demonstrates erosion’s effect on a runway’s gray image.

Figure 8. Morphological operations and contour approximation applied to a gray image of a runway.

Dilation is a morphological operation that expands or thickens objects in an image. It combines the input image with a structuring element (kernel). The output pixel value is set to the maximum value in the neighborhood defined by the structuring element. Figure 8c demonstrates the effect of dilation on a gray image of a runway.
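Both operations reduce to a minimum or maximum over the neighborhood covered by the structuring element. The sketch below shows this for a square kernel on a grayscale image stored as nested lists; the helper names, the square kernel shape, and the choice to clip the neighborhood at the image border are assumptions made for illustration.

```python
from typing import Callable, Iterable, List, Sequence


def _morph(image: Sequence[Sequence[int]], size: int,
           pick: Callable[[Iterable[int]], int]) -> List[List[int]]:
    """Apply `pick` (min or max) over a size x size neighborhood of each pixel."""
    height, width = len(image), len(image[0])
    r = size // 2
    return [
        [
            pick(
                image[y][x]
                for y in range(max(0, cy - r), min(height, cy + r + 1))
                for x in range(max(0, cx - r), min(width, cx + r + 1))
            )
            for cx in range(width)
        ]
        for cy in range(height)
    ]


def erode(image: Sequence[Sequence[int]], size: int = 3) -> List[List[int]]:
    """Erosion: each output pixel is the neighborhood minimum."""
    return _morph(image, size, min)


def dilate(image: Sequence[Sequence[int]], size: int = 3) -> List[List[int]]:
    """Dilation: each output pixel is the neighborhood maximum."""
    return _morph(image, size, max)


# A single bright speck on a dark background.
img = [[0] * 5 for _ in range(5)]
img[2][2] = 255
```

Here `erode(img)` removes the isolated speck entirely, while `dilate(img)` grows it into a 3 × 3 block, which is why combinations of the two are useful for discarding small spurious responses while keeping larger irregularities.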

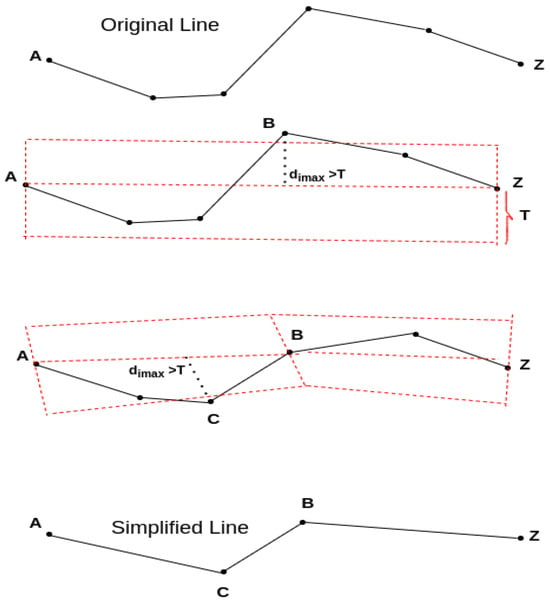

6.3. Ramer–Douglas–Peucker Algorithm

Ramer–Douglas–Peucker [32] is an algorithm that decimates a curve composed of line segments to a similar curve with fewer points. The algorithm simplifies an original line by identifying specific points, named critical points, which are then used to construct a simplified version of the line. This process begins by setting a predefined tolerance value (T) greater than zero, expressed in units of length, which determines the points to be retained. A segment is drawn from the original line’s starting point A to the ending point Z (as seen in Figure 9). The distances of all other points on the line to this segment are then measured. If no distance exceeds the tolerance T, the process ends, and the generalized line will be composed of points A and Z.

Figure 9. Visualization of the Ramer–Douglas–Peucker algorithm from Point A to Z.

Conversely, if the most distant vertex B lies farther than T from segment AZ, it is selected and marked as a critical point. Following this, the line is divided into two new segments, AB and BZ, and the process of measuring distances and selecting critical points is repeated for these new segments. This recursive procedure continues, splitting the line and evaluating points until no further divisions are possible. The result is a simplified line composed of the selected critical points, effectively reducing the points of the original line. This simplified line is generated as shown in Figure 9.

Contour approximation, which uses the Ramer–Douglas–Peucker algorithm, aims to simplify a curve by reducing its vertices. We can see a demo of contour approximation in Figure 8d using a gray image of a runway following a morphological operation.
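The recursive procedure can be sketched in a few lines. This is a generic illustration of the algorithm on a list of 2D points, not the code used in our pipeline; the function names are illustrative, and distances are measured to the segment between the two endpoints.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Distance from point p to the segment from a to b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    t = ((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy)
    t = max(0.0, min(1.0, t))  # clamp the projection onto the segment
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def rdp(points: Sequence[Point], tolerance: float) -> List[Point]:
    """Simplify a polyline, keeping only the critical points."""
    if len(points) < 3:
        return list(points)
    first, last = points[0], points[-1]
    index, farthest = 0, 0.0
    for i in range(1, len(points) - 1):
        d = _point_segment_distance(points[i], first, last)
        if d > farthest:
            index, farthest = i, d
    if farthest <= tolerance:
        return [first, last]  # no point exceeds the tolerance
    left = rdp(points[: index + 1], tolerance)
    right = rdp(points[index:], tolerance)
    return left[:-1] + right  # drop the duplicated critical point


line = [(0, 0), (1, 0.1), (2, 0), (3, 3), (4, 0), (5, 0.1), (6, 0)]
simplified = rdp(line, 0.5)
```

With a tolerance of 0.5, the small wiggles at (1, 0.1) and (5, 0.1) are dropped while the peak at (3, 3) and its neighbors are kept, so a larger tolerance yields a coarser contour.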

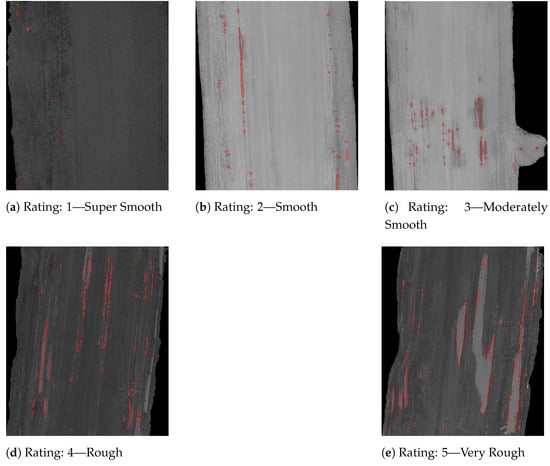

6.4. Sigmoid Function

The sigmoid function is a mathematical function that maps any real-valued input to a value between 0 and 1. The sigmoid function is denoted by the Greek letter $\sigma$ and is defined as:

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

where $x$ is the input to the function, and $e$ is Euler’s number. In evaluating the runway’s smoothness, we modified the sigmoid function so that it maps outputs to a range from 1 to 5. In the modified function, the input represents the normalized thresholded differences obtained after applying a bilateral filter to the original image, and a constant controls the steepness of the sigmoid curve.

The updated sigmoid function categorizes runway conditions into five levels based on their smoothness. The details of the rating are defined as follows:

- Super Smooth: The surface is exceptionally smooth, indicating optimal conditions.

- Smooth: Indicates a well-maintained surface with minimal irregularities.

- Moderately Smooth: The surface is generally smooth but with some noticeable variations.

- Rough: Shows signs of a significant presence of irregularities, requiring attention.

- Very Rough: This signifies a deteriorated surface.

Figure 10 showcases examples corresponding to each of these ratings, illustrating the range of conditions from Super Smooth to Very Rough.

Figure 10. Smoothness rating on runway images.

7. Methodology

The initial dataset for our model consists of images obtained from six remote airports in Northern Canada, gathered using UAVs. These airports are Port McNeill Airport (YMP) on Vancouver Island, as well as Kashechewan Airport (ZKE), Moosonee Airport (YMO), Round Lake Airport (ZRJ), Keewaywin Airport (KEW), and North Spirit Lake Airport (YNO) in Ontario. The images were captured in 4K resolution using RGB cameras at altitudes ranging from 40 to 70 m above ground level. Preprocessing of the images was performed using RoboFlow, and key features of interest were labeled accordingly. Following preprocessing, the model was trained on the Northeastern Discovery Cluster.

7.1. Annotation and Preprocessing

In order to train our algorithm for identifying specific defects within airport runway imagery, we conducted a detailed annotation process. This process aimed to label and classify various points of interest in our collected high-resolution aerial images. The following points of interest were carefully identified and annotated:

- Surface Water Pooling or Icing: We examined each image and annotated any water bodies or signs of icing, as shown in the blue area of Figure 1a. In addition to standing water on the runway, puddles at the runway’s edge pose a potential material loss hazard, so we annotated these areas as well to enable the algorithm to detect such risks.

- Vegetation: We also annotated vegetation growing along the edges of the runway, as shown in the yellow area of Figure 1b. Vegetation within a specified distance from the runway edge was annotated to help the algorithm recognize it.

- Edges of Runway: Each image was reviewed to determine the edges of the runway, as shown in the purple area of Figure 1a,b, which is essential for assessing the smoothness of the gravel runway. Where runway boundaries were unclear due to snow or ice, we used runway lights to identify the prepared runway edge.

Following annotation, we preprocessed the dataset to enhance accuracy. This preprocessing involved two main steps:

- Resize: All images were resized to 1024 × 1024 pixels to ensure the model could capture small details, such as defects on the runway, without loss.

- Augmentation: We augmented the training samples by flipping them horizontally and vertically and adjusting saturation (between −25% and +25%) and exposure (between −10% and +10%) randomly to improve model robustness.
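The augmentation step can be pictured with the following minimal sketch, which uses only the Python standard library on a tiny image stored as nested lists of RGB tuples. It is not the RoboFlow implementation: the function names, the 50% flip probability, and the modelling of exposure as a scaling of the HSV value channel are assumptions made for illustration. In a segmentation setting, the same flips would also have to be applied to the annotation masks.

```python
import colorsys
import random


def hflip(img):
    """Flip an image (list of rows of RGB tuples) left to right."""
    return [row[::-1] for row in img]


def vflip(img):
    """Flip an image top to bottom."""
    return img[::-1]


def adjust_pixel(rgb, sat_scale, exp_scale):
    """Scale saturation and brightness of one RGB pixel (0 to 255 per channel)."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    s = min(1.0, s * sat_scale)  # clamp so the result stays a valid colour
    v = min(1.0, v * exp_scale)  # assumption: exposure approximated via HSV value
    return tuple(round(c * 255) for c in colorsys.hsv_to_rgb(h, s, v))


def augment(img, rng):
    """Random flips plus saturation (+/-25%) and exposure (+/-10%) jitter."""
    if rng.random() < 0.5:  # hypothetical flip probability
        img = hflip(img)
    if rng.random() < 0.5:
        img = vflip(img)
    sat_scale = 1.0 + rng.uniform(-0.25, 0.25)
    exp_scale = 1.0 + rng.uniform(-0.10, 0.10)
    return [[adjust_pixel(px, sat_scale, exp_scale) for px in row] for row in img]
```

Passing a seeded generator, for example `augment(img, random.Random(0))`, makes the augmentation reproducible.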

7.2. Metrics

The metrics we use for POI detection algorithms include Intersection over Union (IoU), accuracy, F-score, precision, and recall. These are commonly used metrics for image segmentation tasks, collectively evaluating the algorithm’s ability to accurately identify POI within images.

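1. Intersection over Union (IoU):

$$\mathrm{IoU} = \frac{\text{Area of Overlap}}{\text{Area of Union}}$$

where the area of overlap is the intersection between the predicted and ground truth regions, and the area of union is their combined area.

2. Accuracy:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

3. F-score:

$$F = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

4. Precision:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

5. Recall:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

Here $TP$, $TN$, $FP$, and $FN$ denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.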
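For a single class, these metrics can be computed from a predicted mask and a ground truth mask as in the minimal sketch below. It assumes binary masks flattened into equal-length sequences of 0/1 values; the function names are hypothetical, and returning 0.0 when a denominator is zero is a convention chosen here rather than part of the definitions.

```python
def confusion_counts(pred, truth):
    """Count true/false positives and negatives over two binary masks."""
    tp = fp = fn = tn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1
        elif p and not t:
            fp += 1
        elif not p and t:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def segmentation_metrics(pred, truth):
    """Return IoU, accuracy, precision, recall, and F-score for one class."""
    tp, fp, fn, tn = confusion_counts(pred, truth)

    def safe_div(num, den):
        # Convention for this sketch: an empty denominator yields 0.0.
        return num / den if den else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "iou": safe_div(tp, tp + fp + fn),  # overlap / union
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "f_score": safe_div(2 * precision * recall, precision + recall),
    }
```

For example, with `pred = [1, 1, 0, 0, 1, 0]` and `truth = [1, 0, 0, 1, 1, 0]`, there are two true positives, one false positive, one false negative, and two true negatives, so the IoU is 2/4 = 0.5 and precision and recall are both 2/3.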

8. Results

We compared three image segmentation models to identify the best performer: Mask R-CNN, PointRend, and Mask2Former. We trained these models using the same hyperparameters and dataset. In the early stage of the project, before the UAV dataset was available, we trained and tested these models with LARD (Landing Approach Runway Detection), a dataset of aerial front view images of runways taken during the aircraft landing phase [33]. In total, 1500 samples were selected, resized, and split into an 8:2 train–validate ratio. With a batch size of 2 and 100 epochs, we obtained the results shown in Table 1 for segmenting runway and background.

Table 1. LARD dataset training result.

After we obtained the UAV dataset of the six remote airports, we trained the three models again for comparison. The dataset contained a total of 6832 UAV images. With a training batch size of 2 and 100 epochs, the training results are shown in Table 2.

Table 2. UAVs realistic dataset result.

After comparing the results, Mask2Former outperformed the other two regarding accuracy and IoU. A final training session was conducted for Mask2Former to ensure the best fit before deployment.

Because the dataset contained few examples of water pooling on the runway and the IoU for water was low, we augmented the dataset by manually adding water pools to runway images. Two hundred augmented water pooling samples were added for the final training. With 7315 samples, batch size 2, and 100 epochs, we obtained better overall IoU and accuracy.

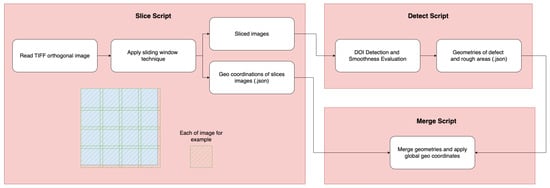

8.1. Building an Automated Pipeline and Visualizing Results

To streamline the analysis of runway images, we developed a robust automated pipeline that integrates the essential stages of slicing, detecting, and merging. Initially, the pipeline segments large orthorectified images into manageable pieces, which is crucial for detailed analysis of specific areas without the computational burden of processing the entire image simultaneously. Each sliced segment then undergoes a detailed detection process using our trained Mask2Former model. This step identifies and classifies points of interest (POIs) such as surface irregularities, water pooling, and vegetation encroachment. We also evaluated the smoothness of runways as discussed in previous sections.
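The slicing stage can be sketched as computing a grid of tile windows over the large image. The example below is a minimal illustration under stated assumptions: the 1024-pixel tile size mirrors the training input size, whereas the 128-pixel overlap, the function name, and the choice to shift the last tile back so it ends at the image border are hypothetical and not taken from the pipeline described here.

```python
def tile_windows(width, height, tile=1024, overlap=128):
    """Return (x, y, w, h) windows that cover a width x height image.

    Neighbouring tiles share `overlap` pixels so that objects cut by one
    tile border appear whole in an adjacent tile.
    """
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be non-negative and smaller than tile")
    stride = tile - overlap

    def starts(size):
        # An image smaller than one tile needs a single window.
        if size <= tile:
            return [0]
        positions = list(range(0, size - tile, stride))
        positions.append(size - tile)  # last tile ends exactly at the border
        return positions

    return [
        (x, y, min(tile, width - x), min(tile, height - y))
        for y in starts(height)
        for x in starts(width)
    ]
```

For a 2048 × 1024 image with these defaults, the function returns three windows starting at x = 0, 896, and 1024, each 1024 × 1024 pixels.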

Following these operations, the system merges the analyzed segments to reconstruct the comprehensive view of the runway. This reconstructed image includes all detected POIs as seen in Figure 11, each annotated with global georeference coordinates. The final output is visualized through an intuitive graphical interface that displays the runway, highlighting areas of concern. This visualization assists in rapid assessment and decision-making and is a critical tool for ongoing airport maintenance planning. The automated process ensures maintenance teams have accurate, up-to-date information about runway conditions, enhancing safety and operational efficiency.
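Merging and georeferencing can be viewed as two coordinate conversions: from tile-local pixels to pixels of the full image, and from those pixels to map coordinates. The sketch below assumes that the orthorectified image comes with a six-parameter affine geotransform in the ordering used by GDAL; the function names and the sample transform values are hypothetical.

```python
def tile_to_global(points, tile_x, tile_y):
    """Shift tile-local (col, row) points by the tile's top-left offset."""
    return [(col + tile_x, row + tile_y) for col, row in points]


def pixel_to_geo(col, row, transform):
    """Apply an affine geotransform to a pixel position.

    transform = (x_origin, pixel_width, row_rotation,
                 y_origin, col_rotation, pixel_height)
    pixel_height is negative for north-up images.
    """
    x0, dx, rx, y0, ry, dy = transform
    x = x0 + col * dx + row * rx
    y = y0 + col * ry + row * dy
    return x, y


# Hypothetical north-up transform: 5 cm pixels, origin at (500000, 5600000).
example_transform = (500000.0, 0.05, 0.0, 5600000.0, 0.0, -0.05)
contour = tile_to_global([(10, 20)], tile_x=896, tile_y=0)
geo_contour = [pixel_to_geo(c, r, example_transform) for c, r in contour]
```

Here a contour point detected at pixel (10, 20) of the tile whose top-left corner is at (896, 0) becomes pixel (906, 20) of the full image before being converted to map coordinates.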

Figure 11. Visualized results overlaying a satellite map. The bright green contours highlight all detected targets, including runways, vegetation, water, and rough surfaces. They are distinguished by various filling colors, e.g., runway as purple, water as blue, vegetation as green, and rough surfaces as red.

8.2. Implications

Our research holds significant potential for improving aviation safety and operational efficiency at remote airports with unpaved runways. The implications of our work extend beyond the immediate scope of Northern Canada and offer several critical benefits globally. Below, we address these implications in detail:

- Global Relevance of the Approach: Our methodology is not limited to Northern Canada. Countries with vast, sparsely populated areas such as the United States, Australia, and New Zealand also rely on gravel runways for connecting remote communities. Additionally, developing nations with limited road and rail infrastructure, like parts of Africa, Papua New Guinea, and Pacific Islands, could potentially benefit from our automated runway inspection and maintenance system [34].

- Enhancement of Aviation Safety: The implementation of our automated system could revolutionize the frequency and thoroughness of runway inspections, potentially leading to improved aviation safety. Regular and detailed monitoring ensures that any hazards such as surface irregularities, water pooling, or vegetation encroachment are promptly identified and addressed. Integrating drone-in-a-box technology (e.g., DJI Dock 2) for automated data collection can further streamline runway inspections. This system allows for frequent, scheduled flights without the need for constant human oversight, ensuring consistent monitoring and rapid response to emerging issues.

- Applicability to Other Unpaved Surfaces: Our model can be adapted for other types of unpaved runways, including grass, dirt, and coral surfaces. By fine-tuning our algorithms and training on relevant datasets, we can extend our system’s applicability to various runway materials, enhancing the versatility and utility of our approach.

- Potential Implications in Other Industries: The techniques developed in our research can extend beyond aviation to benefit a variety of other industries:

  - Infrastructure Inspection: The technology could be adapted for inspecting roads, bridges, and other infrastructure, identifying issues such as cracks, erosion, and vegetation overgrowth.

  - Agriculture: Automated monitoring of large agricultural fields for water pooling, soil erosion, and crop health, similar to how runways are inspected.

  - Environmental Monitoring: Assessing remote areas for environmental changes, such as deforestation, flooding, or land degradation.

8.3. Limitations and Future Work

The following are possible future directions and limitations of our work:

- The segmentation IoU of water and vegetation is not ideal due to a lack of relevant datasets. Even though we added 200 augmented water samples for the final training, it is still insufficient for significant improvement. The next step of our study would be to enrich our dataset to ensure a comprehensive number of water and vegetation samples. This can be achieved by utilizing the latest image generation technologies.

- The smoothness evaluation algorithm’s result is highly dependent on airport weather and lighting conditions. It is not robust enough to accurately evaluate unseen airports. We will explore the integration of deep learning algorithms to address compatibility issues in the future.

- The training process is prolonged due to the large dataset, input size, and the use of a large vision transformer model. In the next phase of our research, we will apply more training to the model, fine-tune the hyperparameters and improve the model’s architecture to achieve faster detection and evaluation results and greater availability of our solution in the cloud-based platform [35].

9. Conclusions

Our paper introduces a novel approach for automating the monitoring and maintenance of gravel runways at remote airports in Northern Canada. The potential applications of our approach extend beyond the aviation sector. It could be used to monitor the integrity of roads, bridges, and other infrastructure, identifying structural vulnerabilities such as cracks and erosion. It could also assist in monitoring agricultural fields for water pooling and soil erosion, and in assessing remote areas for environmental changes such as deforestation, flooding, or land degradation. Our approach is adaptable in detecting various surface irregularities, making it a powerful tool across diverse sectors.

In conclusion, by harnessing UAV imagery and advanced computer vision techniques, we have developed a hybrid approach that accurately detects and segments runway defects such as water pooling, vegetation, and rough surfaces. Through extensive experimentation with diverse high-resolution aerial images collected from multiple airports, we have demonstrated the effectiveness and robustness of our approach in providing comprehensive evaluation results for runway maintenance. Ultimately, our automated system offers a universal, effective, and user-friendly solution that can be broadly applied to diverse types of airports globally.

Author Contributions

Conceptualization, M.A.; Data curation, M.A. and L.C.; Formal analysis, Z.Y., S.N. and C.H.; Funding acquisition, M.A.; Investigation, Z.Y., S.N., C.H., M.A. and L.C.; Methodology, M.A.; Project administration, M.A.; Resources, M.A. and L.C.; Software, Z.Y., S.N. and C.H.; Supervision, M.A. and L.C.; Validation, Z.Y., S.N. and C.H.; Visualization, Z.Y., S.N. and C.H.; Writing—original draft, Z.Y., S.N. and C.H.; Writing—review and editing, M.A. and L.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Transport Canada and Natural Resources of Canada.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy concerns and IP restrictions (patent pending).

Acknowledgments

This work was supported by Spexi Geospatial Inc., Transport Canada, Natural Resources of Canada and the Northeastern University—Vancouver Campus. We thank all the collaborators who allowed this project to happen.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Government of Canada, Office of the Auditor General of Canada to the Parliament of Canada. Report 6-Civil Aviation Infrastructure in the North-Transport Canada; Government of Canada: Ottawa, ON, Canada, 2017.

2. Zhao, G.; Barbi, P.; Tighe, S. Performance Evaluation of Gravel Runway Operation and Maintenance in the Arctic Region of Canada; Springer: Berlin/Heidelberg, Germany, 2022; pp. 59–71.

3. Zhai, S.; Xu, Y. The automated segmentation and enhancement of cracks on airport pavements using three-dimensional imaging techniques. In Proceedings of the International Conference on Algorithm, Imaging Processing, and Machine Vision (AIPMV 2023), SPIE, Qingdao, China, 15–17 September 2023; Volume 12969, pp. 343–350.

4. Sri Hari, R.V.; Ambalam, R.; Ruban Kumar, B.; Ibrahim, M.; Ponnusamy, R. Yolo5-Based UAV Surveillance for Tiny Object Detection on Airport Runways. In Proceedings of the 2023 International Conference on Data Science, Agents & Artificial Intelligence (ICDSAAI), Chennai, India, 21–23 December 2023; pp. 1–6.

5. Aibin, M.; Aldiab, M.; Bhavsar, R.; Lodhra, J.; Reyes, M.; Rezaeian, F.; Saczuk, E.; Taer, M.; Taer, M. Survey of RPAS Autonomous Control Systems Using Artificial Intelligence. IEEE Access 2021, 9, 167580–167591.

6. Wu, J.; Chantiry, X.E.; Gimpel, T.; Aibin, M. AI-Based Classification to Facilitate Preservation of British Columbia Endangered Birds Species. In Proceedings of the 2022 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), Regina, SK, Canada, 24–26 September 2022; pp. 85–88.

7. Aibin, M.; Li, Y.; Sharma, R.; Ling, J.; Ye, J.; Lu, J.; Zhang, J.; Coria, L.; Huang, X.; Yang, Z.; et al. Advancing Forest Fire Risk Evaluation: An Integrated Framework for Visualizing Area-Specific Forest Fire Risks Using UAV Imagery, Object Detection and Color Mapping Techniques. Drones 2024, 8, 39.

8. Maunder, J.D.; Zhang, J.; Lu, J.; Chen, T.; Hindley, Z.; Saczuk, E.; Aibin, M. AI-based General Visual Inspection of Aircrafts Based on YOLOv5. In Proceedings of the 2023 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), Regina, SK, Canada, 24–27 September 2023; pp. 55–59.

9. Lee, A.; Jiang, B.; Zeng, I.; Aibin, M. Ocean Medical Waste Detection for CPU-Based Underwater Remotely Operated Vehicles (ROVs). In Proceedings of the 2022 IEEE 13th Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), New York, NY, USA, 26–29 October 2022; pp. 385–389.

10. Transport Canada. AC 300-004: Unpaved Runway Surfaces. Available online: https://tc.canada.ca/en/aviation/reference-centre/advisory-circulars/advisory-circular-ac-no-300-004 (accessed on 28 May 2024).

11. Guo, W.; Wang, N.; Fang, H. Design of airport road surface inspection system based on machine vision and deep learning. J. Phys. Conf. Ser. 2021, 1885, 052046.

12. Hoang, N.D.; Nguyen, Q.L.; Tien Bui, D. Image processing–based classification of asphalt pavement cracks using support vector machine optimized by artificial bee colony. J. Comput. Civ. Eng. 2018, 32, 04018037.

13. Li, H.; Jing, P.; Huang, R.; Gui, Z. Algorithm for Crack Segmentation of Airport Runway Pavement under Complex Background based on Encoder and Decoder. In Proceedings of the 2021 IEEE International Conference on Robotics and Biomimetics (ROBIO), Sanya, China, 27–31 December 2021; pp. 1706–1711.

14. Zhang, H.; Dong, J.; Gao, Z. Automatic segmentation of airport pavement damage by AM-Mask R-CNN algorithm. Eng. Rep. 2023, 5, e12628.

15. Mahmud, M.N.; Sabri, N.N.N.A.; Osman, M.K.; Ismail, A.P.; Mohamad, F.A.; Idris, M.; Sulaiman, S.N.; Saad, Z.; Ibrahim, A.; Rabiain, A.H. Pavement Crack Detection from UAV Images Using YOLOv4; Springer: Berlin/Heidelberg, Germany, 2023; pp. 73–85.

16. Xing, J.; Liu, Y.; Zhang, G.Z. Improved YOLOV5-Based UAV Pavement Crack Detection. IEEE Sensors J. 2023, 23, 15901–15909.

17. Gao, Y.; Cao, H.; Cai, W.; Zhou, G. Pixel-level road crack detection in UAV remote sensing images based on ARD-Unet. Measurement 2023, 219, 113252.

18. Pan, Y.; Chen, X.; Sun, Q.; Zhang, X. Monitoring Asphalt Pavement Aging and Damage Conditions from Low-Altitude UAV Imagery Based on a CNN Approach. Can. J. Remote Sens. 2021, 47, 432–449.

19. McNerney, M.T.; Bishop, G.; Saur, V.; Lu, S.; Naputi, J.; Serna, D.; Popko, D. Detailed Pavement Inspection of Airports Using Remote Sensing UAS and Machine Learning of Distress Imagery. In Proceedings of the International Conference on Transportation and Development 2022, Seattle, WA, USA, 31 May–3 June 2022; pp. 201–211.

20. Nervis, L.O.; Nuñez, W.P. Identification and discussion on distress mechanisms of unsurfaced gravel roads. Int. J. Pavement Res. Technol. 2019, 12, 88–96.

21. MacLeod, D.R.; Hidinger, W. Asset Management of Gravel Airstrips in The Yukon Canada. In Proceedings of the 7th International Conference on Managing Pavement Assets, Calgary, AB, Canada, 23–28 June 2008.

22. Jiang, L.; Xie, Y.; Ren, T. A deep neural networks approach for pixel-level runway pavement crack segmentation using drone-captured images. arXiv 2020, arXiv:2001.03257.

23. Zakeri, H.; Nejad, F.M.; Fahimifar, A. Image Based Techniques for Crack Detection, Classification and Quantification in Asphalt Pavement. Arch. Computat. Methods Eng. 2017, 24, 935–977.

24. Majidifard, H.; Adu-Gyamfi, Y.; Buttlar, W. Deep Machine Learning Approach to Develop a New Asphalt Pavement Condition Index. Constr. Build. Mater. 2020, 247, 118513.

25. Girshick, R.B.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. arXiv 2013, arXiv:1311.2524.

26. Redmon, J.; Divvala, S.K.; Girshick, R.B.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2015, arXiv:1506.02640.

27. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R.B. Mask R-CNN. arXiv 2017, arXiv:1703.06870.

28. Kirillov, A.; Wu, Y.; He, K.; Girshick, R.B. PointRend: Image Segmentation As Rendering. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2019; pp. 9796–9805.

29. Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-attention Mask Transformer for Universal Image Segmentation. arXiv 2021, arXiv:2112.01527.

30. Tomasi, C.; Manduchi, R. Bilateral filtering for gray and color images. In Proceedings of the Sixth International Conference on Computer Vision (IEEE Cat. No. 98CH36271), Bombay, India, 7 January 1998; pp. 839–846.

31. Kornprobst, P.; Tumblin, J.; Durand, F. Bilateral Filtering: Theory and Applications. Found. Trends Comput. Graph. Vis. 2009, 4, 1–74.

32. Ramer, U. An iterative procedure for the polygonal approximation of plane curves. Comput. Graph. Image Process. 1972, 1, 244–256.

33. Ducoffe, M.; Carrere, M.; Féliers, L.; Gauffriau, A.; Mussot, V.; Pagetti, C.; Sammour, T. LARD—Landing Approach Runway Detection—Dataset for Vision Based Landing. 2023. Available online: https://hal.science/hal-04056760 (accessed on 28 May 2024).

34. Mbiyana, K.; Kans, M.; Campos, J.F.; Håkansson, L. Literature Review on Gravel Road Maintenance: Current State and Directions for Future Research. Transp. Res. Rec. 2022, 2677, 506–522.

35. Kit, N.K.K.; Aibin, M. Study on High Availability and Fault Tolerance. In Proceedings of the 2023 International Conference on Computing, Networking and Communications (ICNC), Honolulu, HI, USA, 20–22 February 2023; pp. 77–82.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).