Ensemble Learning for Pea Yield Estimation Using Unmanned Aerial Vehicles, Red Green Blue, and Multispectral Imagery

Abstract

1. Introduction

2. Materials and Methods

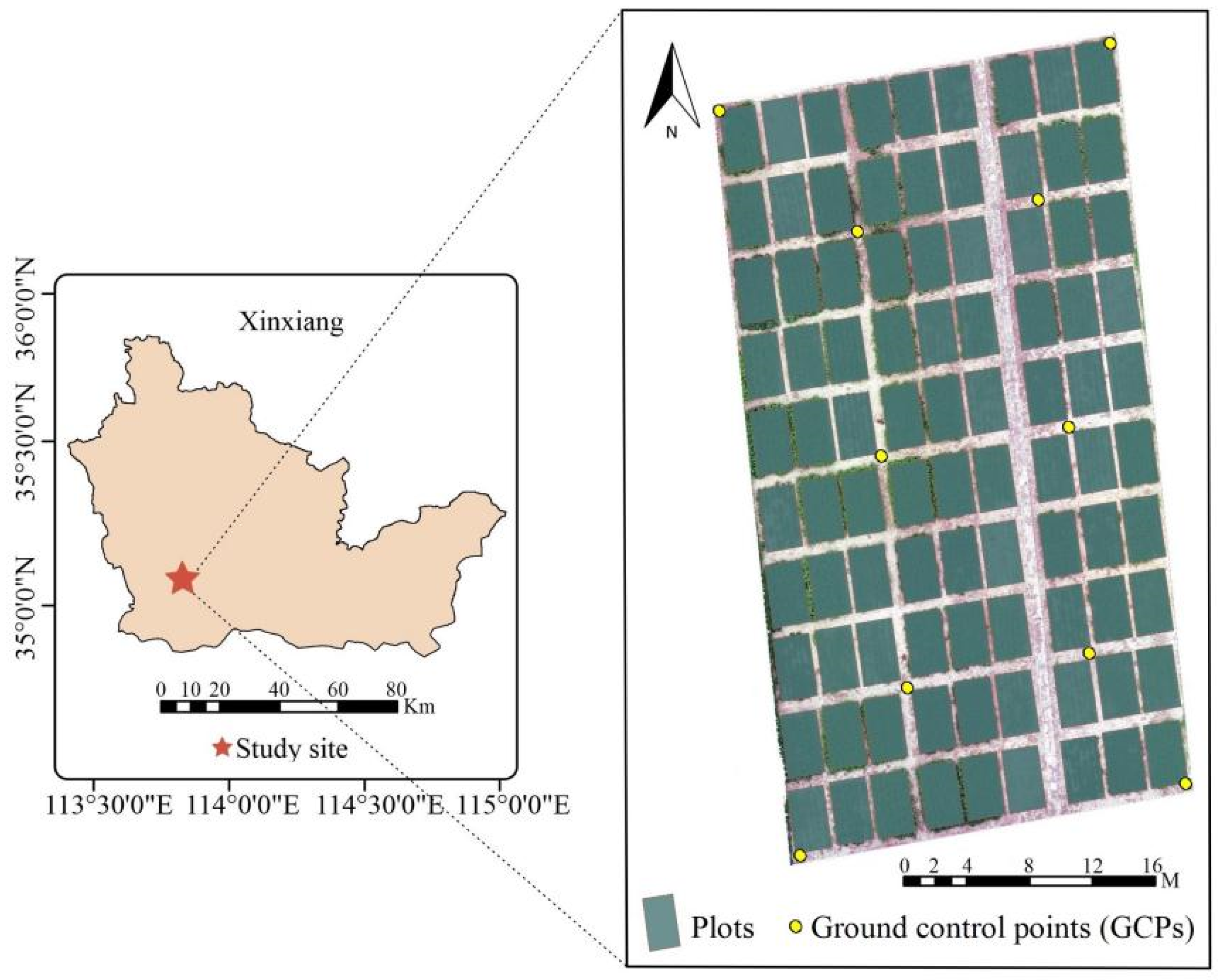

2.1. Test Design and Pea Yield Measurement

2.2. UAV-Based Images Acquisition and Processing

2.3. RGB and MS Feature Extraction

2.3.1. RGB Data Extraction

2.3.2. MS Data Extraction

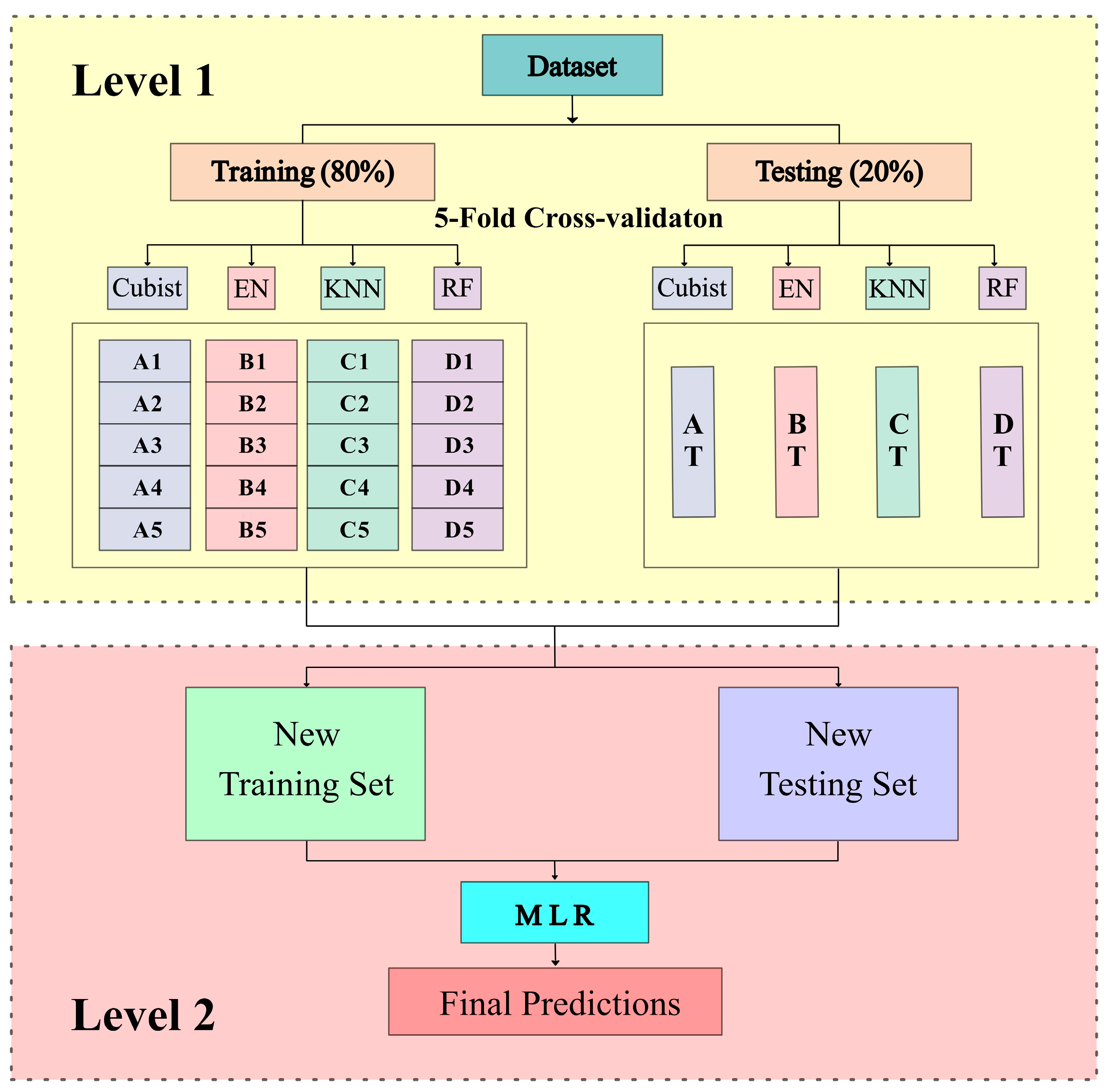

2.4. Regression Technology

2.5. Model Performance Evaluation

3. Results

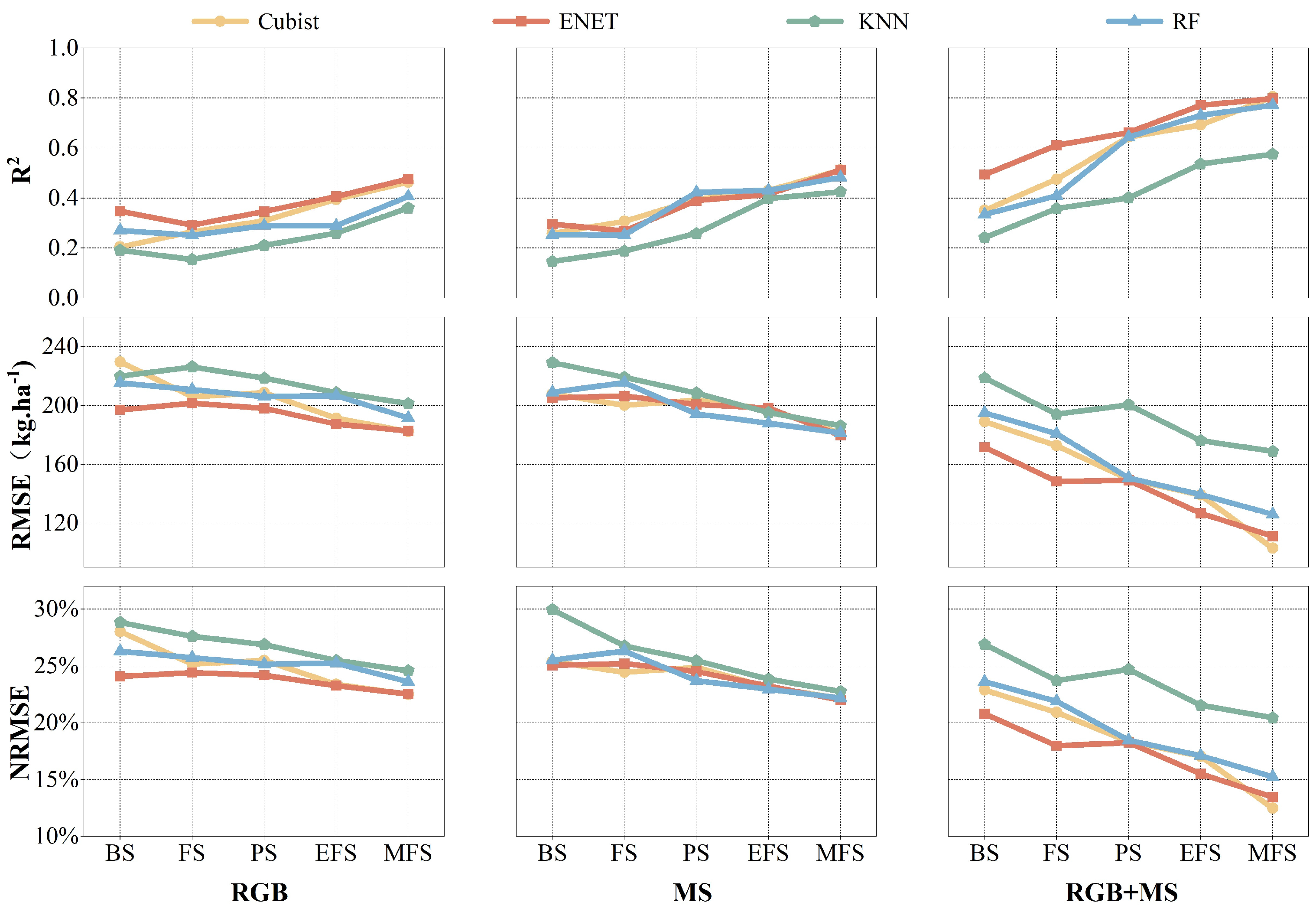

3.1. Performance of Sensor Data on Pea Yield

3.2. Effects of Different Growth Stages on Yield Estimation

3.3. Model Performance for Pea Yield Estimation

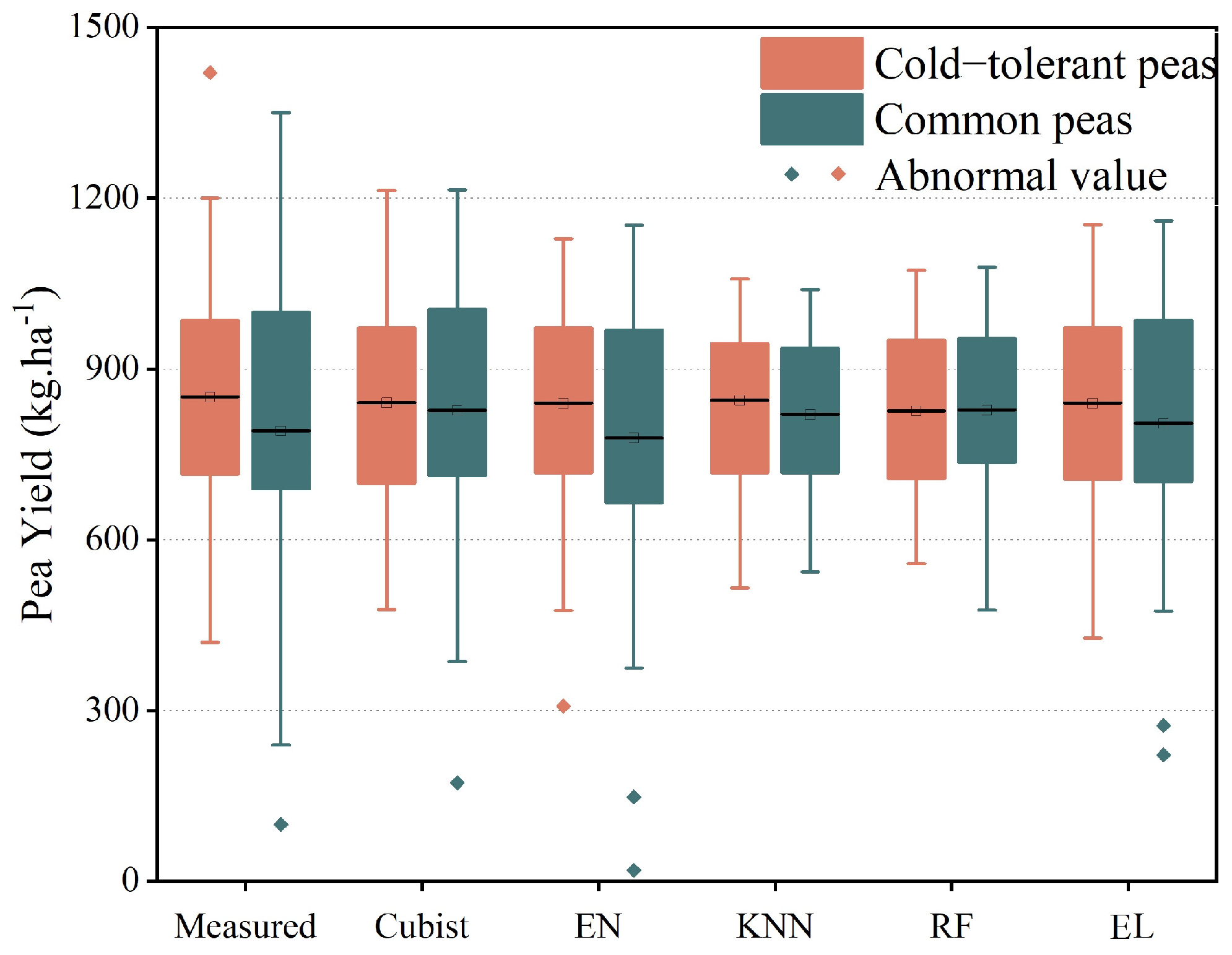

3.4. Yield Estimation for Different Pea Types

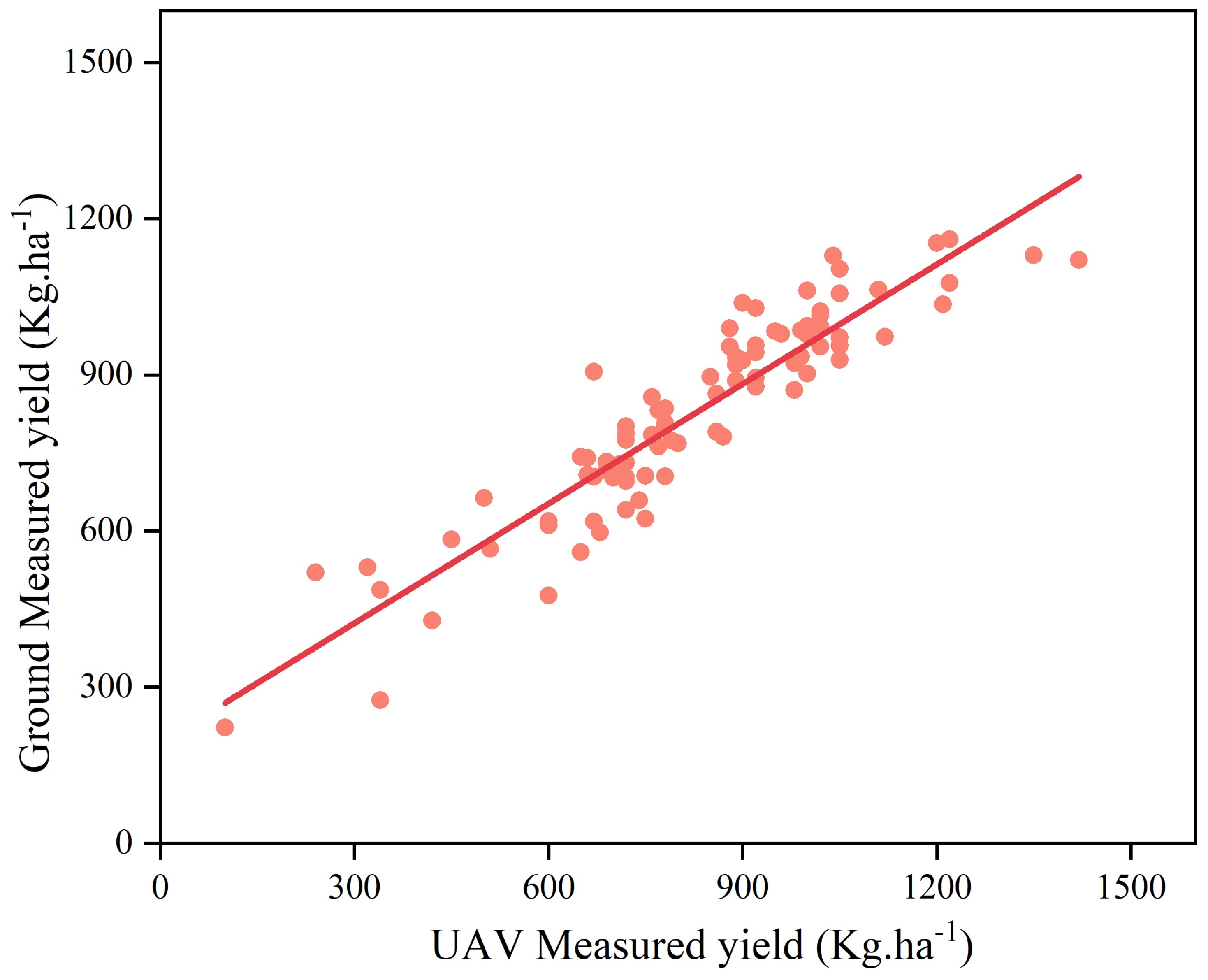

3.5. Estimation Effect Analysis

4. Discussion

4.1. The Estimation Accuracy between Different Sensors

4.2. Effects of Pea Growth Stage on Estimating Pea Yield

4.3. Performance of Models for Pea Yield Estimation

4.4. The Estimation Accuracy between Two Types of Peas

4.5. Deficiency and Prospect

5. Conclusions

- (1) The RGB data gave higher estimation accuracy than the MS data in the early growth stages, whereas the MS data were more accurate in the late growth stages. Regardless of growth stage, the fused data (RGB + MS) achieved higher accuracy than either single sensor in estimating pea yield.

- (2) The mid filling stage gave the best estimates of pea yield among the five growth stages, whereas estimates at the branching and flowering stages were poor.

- (3) The EN and Cubist algorithms performed better than the RF and KNN algorithms in estimating pea yield, and the EL algorithm outperformed all of the base learners.

- (4) The applicability of the estimation method was verified by comparing the yield estimation effect of cold-tolerant and common pea types. This study thereby provides technical support and valuable insight for future pea yield estimations.
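The sensor fusion and ensemble learning summarized in these conclusions can be illustrated with a minimal sketch. It assumes that fusion means concatenating per-plot RGB and MS features and that the EL model is a stacked ensemble; neither detail is confirmed by the text retained here. The feature matrices and yields are synthetic, the hyperparameters and the ridge meta-learner are arbitrary choices, and Cubist is omitted because scikit-learn does not provide it.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor, StackingRegressor
from sklearn.linear_model import ElasticNet, Ridge
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Synthetic stand-ins for per-plot features; NOT data from the study.
n_plots = 120
rgb_features = rng.normal(size=(n_plots, 6))  # hypothetical RGB-derived features
ms_features = rng.normal(size=(n_plots, 8))   # hypothetical MS-derived features
yield_obs = (
    800.0
    + 60.0 * rgb_features[:, 0]
    + 90.0 * ms_features[:, 1]
    + rng.normal(scale=40.0, size=n_plots)
)

# Feature-level fusion (assumed): concatenate both sensors' columns per plot.
fused = np.hstack([rgb_features, ms_features])

X_train, X_test, y_train, y_test = train_test_split(
    fused, yield_obs, test_size=0.25, random_state=0
)

# Base learners; EN and KNN are scale-sensitive, so they get a scaler.
base_learners = [
    ("en", make_pipeline(StandardScaler(), ElasticNet(alpha=0.1, l1_ratio=0.5))),
    ("knn", make_pipeline(StandardScaler(), KNeighborsRegressor(n_neighbors=5))),
    ("rf", RandomForestRegressor(n_estimators=100, random_state=0)),
]

# Stacking: out-of-fold base predictions are the inputs of a meta-learner.
ensemble = StackingRegressor(
    estimators=base_learners,
    final_estimator=Ridge(alpha=1.0),  # assumed meta-learner
    cv=5,
)
ensemble.fit(X_train, y_train)

r2_ensemble = ensemble.score(X_test, y_test)  # coefficient of determination
print(f"Stacked ensemble R2 on held-out plots: {r2_ensemble:.2f}")
```

Fitting the same base learners on `rgb_features` or `ms_features` alone and comparing the held-out scores would mirror the single-sensor versus fusion comparison in conclusion (1).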

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Time | Growth Stages | Ground/UAV Data Collection Time |
|---|---|---|
| 20 September 2019 | Sowing | / |
| 21 September 2019–14 December 2019 | Seedling stage | / |
| 15 December 2019–25 March 2020 | Branching stage | 7 March 2020 |
| 25 March 2020–10 April 2020 | Flowering stage | 3 April 2020 |
| 10 April 2020–20 April 2020 | Podding stage | 14 April 2020 |
| 20 April 2020–28 April 2020 | Early filling stage | 14 April 2020 |
| 28 April 2020–26 May 2020 | Mid filling stage | 14 April 2020 |
| 27 May 2020 | Harvest | / |

| Camera | Sensor | Size (mm) | Band | Image Resolution |
|---|---|---|---|---|
| Zenmuse X7 | RGB | 151 × 108 × 132 | R, G, B | 2400 × 1080 |
| Red-Edge MX | Multi-spectral | 87 × 59 × 45.4 | Blue | 1280 × 960 |
| | | | Green | 1280 × 960 |
| | | | Red | 1280 × 960 |
| | | | Red-edge | 1280 × 960 |
| | | | Near infrared | 1280 × 960 |

| Growth Stage | Metric | Cubist | EN | KNN | RF | EL |
|---|---|---|---|---|---|---|
| BS | R² | 0.35 | 0.49 | 0.20 | 0.33 | 0.52 |
| | RMSE | 189.06 | 171.66 | 220.50 | 195.06 | 169.44 |
| | NRMSE | 22.89% | 20.79% | 26.71% | 23.63% | 21.80% |
| FS | R² | 0.48 | 0.61 | 0.24 | 0.41 | 0.62 |
| | RMSE | 172.72 | 148.42 | 218.73 | 180.80 | 146.90 |
| | NRMSE | 20.92% | 17.97% | 26.91% | 21.89% | 17.69% |
| PS | R² | 0.54 | 0.56 | 0.40 | 0.54 | 0.65 |
| | RMSE | 159.79 | 158.99 | 200.50 | 160.69 | 145.04 |
| | NRMSE | 19.35% | 19.26% | 24.71% | 19.46% | 17.91% |
| EFS | R² | 0.59 | 0.67 | 0.44 | 0.63 | 0.69 |
| | RMSE | 149.10 | 136.70 | 186.14 | 149.45 | 127.99 |
| | NRMSE | 18.05% | 16.5% | 22.54% | 18.10% | 15.19% |
| MFS | R² | 0.81 | 0.80 | 0.58 | 0.77 | 0.85 |
| | RMSE | 103.00 | 111.05 | 168.79 | 125.94 | 101.16 |
| | NRMSE | 12.48% | 13.45% | 20.44% | 15.25% | 12.86% |
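The three metrics reported in the table above can be computed as in the following minimal sketch. It assumes that R² is the coefficient of determination and that NRMSE is RMSE divided by the mean observed yield; the definitions used by the authors are not included in the text retained here. For most base-learner entries in the table, RMSE divided by NRMSE is about 826, which is consistent with a fixed normalizer such as a mean yield, but that reading is an inference. The function name and the sample values are hypothetical.

```python
import math


def regression_metrics(observed, predicted):
    """Return R2, RMSE, and NRMSE (percent of the observed mean)."""
    n = len(observed)
    if n == 0 or n != len(predicted):
        raise ValueError("observed and predicted must be non-empty and equal in length")

    mean_obs = sum(observed) / n
    # Residual and total sums of squares.
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)

    rmse = math.sqrt(ss_res / n)
    return {
        "r2": 1.0 - ss_res / ss_tot,
        "rmse": rmse,
        "nrmse_pct": 100.0 * rmse / mean_obs,  # assumed normalization by the mean
    }


# Hypothetical plot yields, for illustration only.
observed = [700.0, 800.0, 900.0, 1000.0]
predicted = [720.0, 790.0, 880.0, 1010.0]

metrics = regression_metrics(observed, predicted)
print(metrics)
```

Because NRMSE is unit-free, it allows errors to be compared across datasets with different yield levels, whereas RMSE stays in the units of the measured yield.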

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, Z.; Ji, Y.; Ya, X.; Liu, R.; Liu, Z.; Zong, X.; Yang, T. Ensemble Learning for Pea Yield Estimation Using Unmanned Aerial Vehicles, Red Green Blue, and Multispectral Imagery. Drones 2024, 8, 227. https://doi.org/10.3390/drones8060227
