Neural Network Prediction of Keratoconus in AIPL1-Linked Leber Congenital Amaurosis: A Proof-of-Concept Pilot Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Design and Ethics

2.2. Participants

2.3. Reference Standard

2.4. Predictors

2.5. Data Preprocessing and Partitioning

2.6. Model Development

2.7. Evaluation

2.8. Reporting

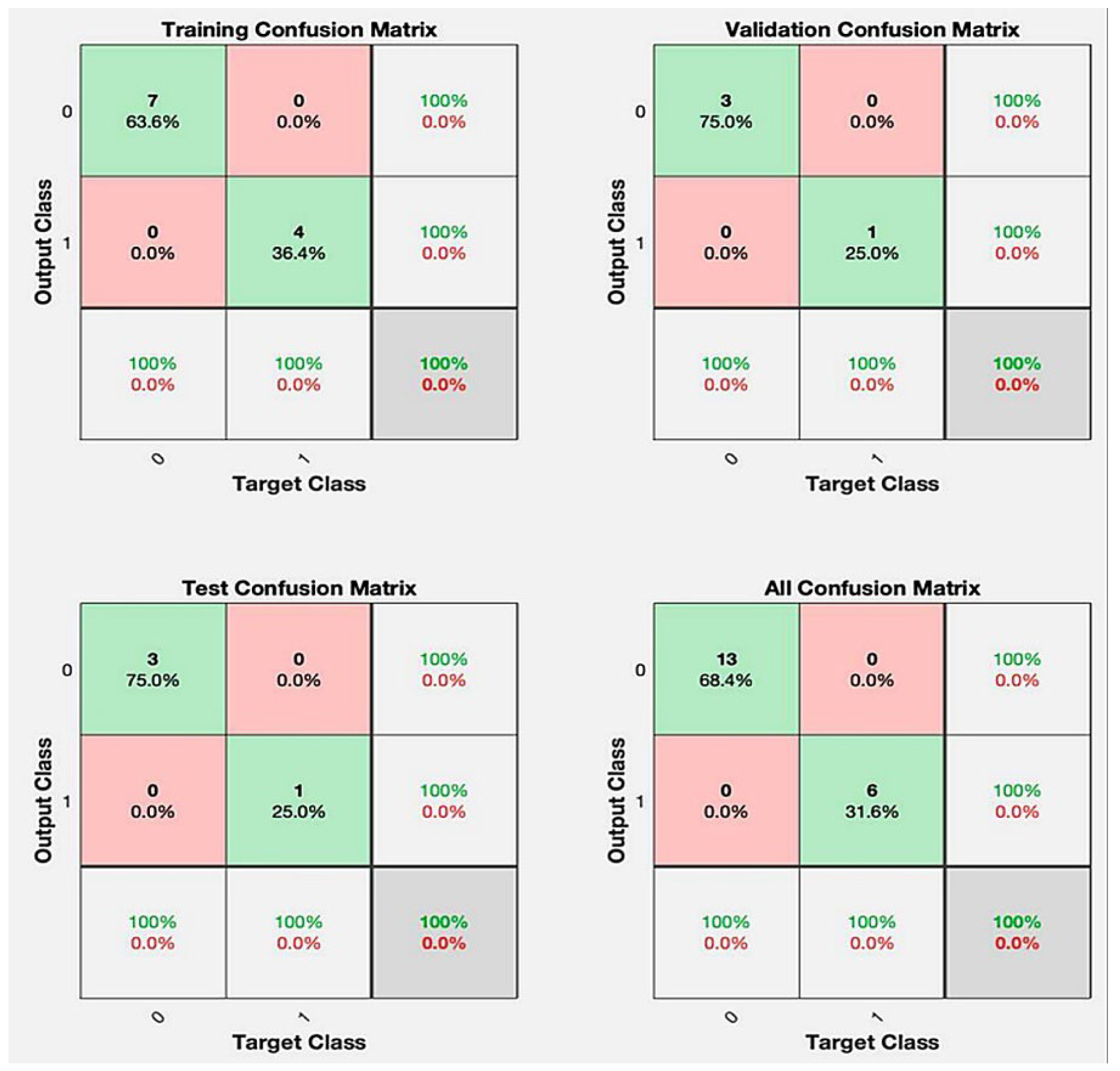

3. Results

4. Discussion

Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AIPL1 | aryl hydrocarbon receptor-interacting protein-like 1 gene |
| CXL | corneal collagen cross-linking |
| KC | keratoconus |
| LCA | Leber congenital amaurosis |
| OCT | optical coherence tomography |
| REB | Research Ethics Board |


| Predictor (Model Input) | All Patients (N = 19) | KC-Converters (N = 6) | Non-Converters (N = 13) | Absolute Difference (KC-Converters − Non-Converters) |
|---|---|---|---|---|
| Age, years | 16.0 ± 13.7 | 25.7 ± 16.9 | 11.1 ± 9.1 | +14.6 years |
| South Asian origin | 5 (26%) | 4 (67%) | 1 (8%) | +59 pp |
| Severe Visual Impairment (all patients) * | 19 (100%) | 6 (100%) | 13 (100%) | 0 pp |
| Photophobia (yes) | 5 (26%) | 2 (33%) | 3 (23%) | +10 pp |
| Nyctalopia (yes) | 16 (84%) | 5 (83%) | 11 (85%) | −1 pp |
| Oculo-digital phenomenon (yes) | 2 (11%) | 1 (17%) | 1 (8%) | +9 pp |
| Maculopathy (yes) | 19 (100%) | 6 (100%) | 13 (100%) | 0 pp |
| Optic nerve pallor (yes) | 15 (79%) | 6 (100%) | 9 (69%) | +31 pp |
| Pigmentary retinopathy (yes) | 17 (89%) | 6 (100%) | 11 (85%) | +15 pp |
| W278Stop mutation present | 11 (58%) | 3 (50%) | 8 (62%) | −12 pp |
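As a consistency check on the predictor table, the percentage columns and the absolute differences in percentage points (pp) can be recomputed from the reported counts. The sketch below is illustrative only: it uses nothing beyond the counts shown in the table, the names `COUNTS`, `pct` and `summarize` are hypothetical, and age is omitted because only summary statistics are given for it.

```python
# Recompute the percentage columns and the "Absolute Difference" column of the
# predictor table from the raw counts it reports (illustrative sketch).

N_KC = 6    # KC-converters
N_NON = 13  # non-converters

# (count among KC-converters, count among non-converters), transcribed from the table
COUNTS = {
    "South Asian origin": (4, 1),
    "Photophobia (yes)": (2, 3),
    "Nyctalopia (yes)": (5, 11),
    "Oculo-digital phenomenon (yes)": (1, 1),
    "Optic nerve pallor (yes)": (6, 9),
    "Pigmentary retinopathy (yes)": (6, 11),
    "W278Stop mutation present": (3, 8),
}


def pct(k: int, n: int) -> float:
    """Prevalence of a binary predictor in a group, in percent."""
    return 100.0 * k / n


def summarize(counts=COUNTS, n_kc=N_KC, n_non=N_NON):
    """Return rows of (predictor, all %, KC %, non-KC %, difference in pp).

    The difference is taken on the unrounded percentages and rounded only at
    the end; rounding the group percentages first would give -2 pp instead
    of -1 pp for nyctalopia.
    """
    rows = []
    for name, (k_kc, k_non) in counts.items():
        p_all = pct(k_kc + k_non, n_kc + n_non)
        p_kc = pct(k_kc, n_kc)
        p_non = pct(k_non, n_non)
        rows.append((name, round(p_all), round(p_kc), round(p_non), round(p_kc - p_non)))
    return rows


if __name__ == "__main__":
    for name, p_all, p_kc, p_non, diff in summarize():
        print(f"{name:32s} {p_all:4d}% {p_kc:4d}% {p_non:4d}% {diff:+4d} pp")
```

Every binary-predictor entry in the table is reproduced this way, which is consistent with the differences having been computed on unrounded percentages.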

| Split | Dataset with AIPL1: Accuracy (%) | Dataset with AIPL1: Specificity (%) | Dataset with AIPL1: Sensitivity (%) | Dataset with AIPL1: F1-Score | Dataset without AIPL1: Accuracy (%) | Dataset without AIPL1: Specificity (%) | Dataset without AIPL1: Sensitivity (%) | Dataset without AIPL1: F1-Score |
|---|---|---|---|---|---|---|---|---|
| Training | 95.5 (14.3) | 95.5 (16.9) | 96.3 (9.3) |  | 84.5 (21.5) | 85.0 (20.0) | 84.0 (22.0) |  |
| Validation | 85.0 (17.0) | 87.1 (26.6) | 66.7 (45.4) |  | 82.5 (18.3) | 83.0 (19.0) | 82.0 (20.0) |  |
| Test | 87.5 (22.2) | 92.1 (20.5) | 86.9 (29.4) |  | 68.8 (24.2) | 70.8 (28.6) | 62.2 (48.6) |  |
| Overall | 91.6 (12.8) | 93.5 (17.0) | 87.5 (13.1) | 0.901 | 80.8 (17.8) | 83.5 (19.0) | 75.0 (33.6) | 0.781 |
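The performance table reports accuracy, specificity and sensitivity on a percent scale and F1 as a proportion. For reference, the sketch below computes these four quantities from a 2×2 confusion matrix for a single split. It assumes KC conversion is the positive class; it does not reproduce how the values were aggregated across splits or what the parenthesised figures represent, since the table does not state this. The names `Confusion`, `metrics` and `_ratio` and the example counts are hypothetical, not study data.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Counts for one data split; KC conversion is the positive class (assumption)."""
    tp: int  # converters predicted as converters
    fn: int  # converters predicted as non-converters
    tn: int  # non-converters predicted as non-converters
    fp: int  # non-converters predicted as converters


def _ratio(num: int, den: int) -> float:
    # Return NaN instead of raising when a split contains no patients of a class.
    return num / den if den else math.nan


def metrics(c: Confusion) -> dict:
    """Accuracy, specificity, sensitivity (percent) and F1 (0-1) for one split."""
    total = c.tp + c.fn + c.tn + c.fp
    return {
        "accuracy": 100 * _ratio(c.tp + c.tn, total),
        "specificity": 100 * _ratio(c.tn, c.tn + c.fp),
        "sensitivity": 100 * _ratio(c.tp, c.tp + c.fn),
        # F1 = 2*precision*recall / (precision + recall) = 2TP / (2TP + FP + FN)
        "f1": _ratio(2 * c.tp, 2 * c.tp + c.fp + c.fn),
    }


if __name__ == "__main__":
    # Hypothetical counts for 19 patients (6 positives, 13 negatives); not study data.
    example = Confusion(tp=5, fn=1, tn=12, fp=1)
    for name, value in metrics(example).items():
        print(f"{name:12s} {value:.3f}")
```

Because sensitivity is a ratio over the converters in a split, it moves in coarse steps when positives are few: a split holding two converters can only yield 0, 50 or 100% sensitivity.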

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chow, D.R.; Remtulla, R.; Vargas, G.; Leite, G.; Koenekoop, R.K. Neural Network Prediction of Keratoconus in AIPL1-Linked Leber Congenital Amaurosis: A Proof-of-Concept Pilot Study. J. Clin. Med. 2025, 14, 6499. https://doi.org/10.3390/jcm14186499
