Abstract

Since early 2018 the “Natural Hazards” Section of Geosciences journal has aimed to publish pure, experimental, or applied research that is focused on advancing methodologies, technologies, expertise, and capabilities to detect, characterize, monitor, and model natural hazards and assess their associated risks. This stream of geoscientific research has reached a high degree of specialization and represents a multi-disciplinary research realm. To inaugurate this section, the Special Issue “Key Topics and Future Perspectives in Natural Hazards Research” was launched. A year and a half after the call for papers was first opened, the special issue is now complete, with this editorial introducing the collection of 10 selected papers covering the following hot topics of natural hazards research: (i) trends in publications and research directions at international level; (ii) the role of Big Data in natural disaster management; (iii) assessment of seismic risk through the understanding and quantification of its three components (i.e., hazard, vulnerability and exposure/impact); (iv) climatic/hydro-meteorological hazards (i.e., drought, hurricanes); and (v) scientific analysis of past incidents and disaster forensics (i.e., the Oroville Dam 2017 spillway incident). The present editorial provides a summary of each paper of the collection within the current context of scientific research on natural hazards, pointing out the salient results and key messages.

1. Introduction

In early 2018 the journal Geosciences was re-organized into six sections, including one focused on “Natural Hazards”. I accepted the journal’s invitation to serve as the Editor-in-Chief of this section and defined its profile.

The section is dedicated to the publication of pure, experimental, or applied research that aims to advance methodologies, technologies, expertise, and capabilities to detect, characterize, monitor, and model natural hazards and assess their associated risks. This stream of geoscientific research has reached a high degree of specialization and represents a multi-disciplinary research realm. In this context, the Natural Hazards section is open to geoscientific studies of natural hazards that are carried out using ground investigations, in situ instrumentation and remote sensing. The section also accepts methodological papers proposing workflows and routines for modelling and forecasting, as well as cross-cutting articles dealing with the different aspects of hazard assessment and management. The latter encompass hazard mitigation, emergency management, post-disaster recovery, the scientific communication of hazards, and capacity building.

The emphasis of the section is on hazards that are predominantly associated with natural processes and phenomena, including environmental, geological or geophysical, hydro-meteorological, atmospheric, climatological, oceanographic, and biological hazards.

Areas of interest also include: research investigating the role played by human action in (co-)triggering natural hazards and/or exacerbating their impact on the environment; natural hazards that display slow kinematics and manifest gradually over time (for example, land subsidence), or require long-term observations and measurements to be detected and characterized; and, of course, hazards with an abrupt onset or that quickly spread and affect large areas.

So far, the Natural Hazards section has been very successful, with more than 260 published papers, 13 currently open special issues and another 18 already closed, which makes it the largest section of the journal.

To inaugurate this new section, in May 2018 I launched the “Inaugural Section Special Issue: Key Topics and Future Perspectives in Natural Hazards Research”, in the hope of capturing the state of the art of natural hazards research through a collection of review and research papers that could outline where we were as a research community and what the future perspectives were. I envisaged that the following topics would be covered:

- new findings in understanding triggering and propagation mechanisms;

- revision of previous hypotheses or hazard scenarios;

- development of new conceptualizations and methodologies;

- testing of new data, sensors, and techniques for investigation, monitoring, change detection, and multi-temporal or back-analysis;

- development of new or refined models for forecasting;

- integration of hazard assessment in risk analysis;

- design of new materials and solutions for risk mitigation;

- exploration of new ways to communicate hazards and increase awareness;

- study of societal impacts of natural hazards.

2. Facts and Figures of the Special Issue

A total of 21 submissions were received for consideration for publication in the special issue from May 2018 to September 2019. After editorial checks and a peer-review process involving external and independent experts in the field, the acceptance rate was 48%. The published special issue, therefore, contains a collection of 10 research articles. The acceptance rate is in line with the current trend of the journal, and indicates that the editorial process was rigorous and selective.

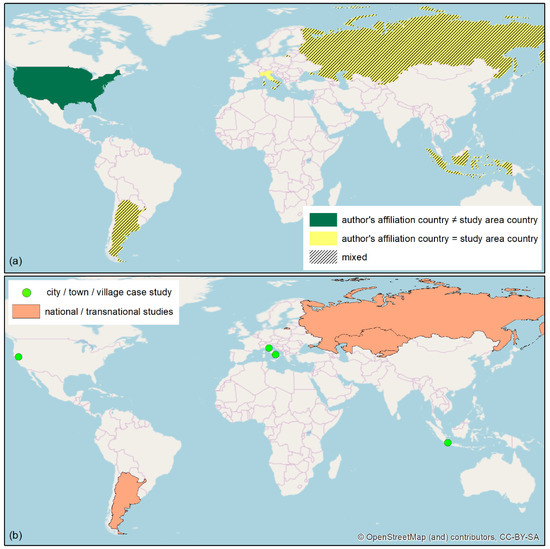

Figure 1a shows the countries where the study areas of the papers published in the special issue are located, compared with the countries of the authors’ affiliations. Almost all the papers are about study areas that are located in the same country as the affiliation of at least one of the authors. In the majority of cases, papers are the outcome of an international collaboration between different academic and research institutions. In one case only, the study area country does not coincide with the authors’ affiliation country. This reflects the typology of the paper, i.e., the scientific analysis of a recent disaster that the paper authors chose to investigate because it was an interesting case study of a given incident type that occurred under certain contextual conditions [1].

Figure 1.

(a) Spatial distribution of the countries where the study areas of the papers published in the special issue are located versus the country where the affiliation institutions of the authors are based; (b) geographic distribution of the study areas distinguished by typology (“city/town/village case study” and “national/transnational studies”).

With regard to study areas, four papers do not have a specific test site, while another four focus on selected cities, towns and villages. Two papers, instead, propose analyses of natural hazards at national and transnational levels (Figure 1b).

The article metrics one and a half years after the publication of the first paper are encouraging. Some of the papers have already attracted attention across the community. Yu et al. [2] have gained 16 citations, Emmer [3] 10 and Chieffo and Formisano [4] 6. Not surprisingly, the two papers with the highest number of citations are a review and a bibliometrics paper. They provide insights into the realm of big data in natural disaster management and the trends in research on natural hazards worldwide, respectively. The third most cited paper, instead, has been referenced in the recent literature presumably owing to the methodological approach to seismic risk assessment presented therein, which is potentially replicable in other contexts.

3. Overview of the Published Papers

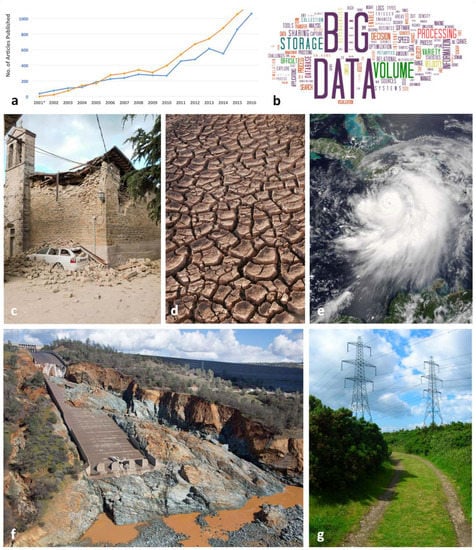

Figure 2 provides a pictorial composition summarising the main research topics that are covered by the papers published in the special issue:

- Trends in natural hazards research;

- Big Data in natural disaster management;

- Seismic risk: hazard, vulnerability and exposure/impact;

- Climatic/hydro-meteorological hazards;

- Scientific analysis of past incidents and disaster forensics.

Figure 2.

Natural hazards research topics covered by the papers published in the special issue: (a) trends in natural hazards research; (b) Big Data in natural disaster management; (c) earthquakes and seismic risk; (d) drought; (e) hurricanes; (f) analysis of disaster incidents (Oroville Dam spillway incident, California, USA, February 2017); (g) electricity utilities and infrastructure. Photo source: Wikimedia Commons.

An overview of each paper is provided in the following sections.

3.1. Trends in Natural Hazards Research

The scientometrics and bibliometrics paper by Emmer [3] is an excellent, well-structured overview of the research on different types of natural hazards worldwide, and is based on the analysis of a set of more than 580,000 items published between 1900 and 2017 and recorded in the Clarivate Analytics Web of Science database. The author describes precisely how he searched and then classified the research items according to a classification of natural hazards that he adapted from [5,6]. In this study two general categories of natural hazards are covered: (i) climatic/hydro-meteorological; and (ii) geological/geomorphic. Floods, storms, drought and hurricanes are examples falling in the first category, while earthquakes, slope movements, erosion and volcanic activity fall in the second. The analysis focuses on spatio-temporal patterns (geographies) of the research and selected scientometric characteristics. The author also compares the geographic focus of the studies with the events/fatalities/damages recorded in the two freely available global databases of natural disasters MunichRE NatCatSERVICE and SwissRE Sigma Explorer.

Among the key outcomes, it is worth mentioning the following:

- The overall number of research items has been increasing dramatically over time, with floods, earthquakes, storms and droughts being the most frequently researched natural hazards;

- At the same time, the ratio between research items focusing on (i) and those on (ii) has remained relatively stable over time, with climatic/hydro-meteorological dominating in all studied periods;

- Nevertheless, significant differences exist between individual countries, suggesting a regionalism that reflects the most common and pressing natural hazards at regional to local scales. Research on (i) dominates in the majority of countries, while research on (ii) dominates in Italy, Japan and France. An increased share of research on droughts is, instead, observed in India and Australia;

- From the global perspective, the research on individual types of natural hazards is in all cases dominated by researchers from the USA, followed by researchers from China, with the exception of research on volcanic activity, where Italy is ranked second;

- The majority of research items are written by author(s) from one country. Only one fourth are international research items. The two clusters of bi-lateral cooperation are: (1) European (leading countries England, Germany, France, Italy) and (2) circum-Pacific (leading countries USA, China, Canada, Australia, Japan);

- Both NatCatSERVICE and Sigma Explorer report increasing extents of damage caused by natural disasters yearly, apparently reflecting changing conditions, increasing population pressure and vulnerability globally, as well as possible inconsistencies in disaster databases on a global level;

- Major disasters (e.g., 2008 Wenchuan earthquake) are capable of causing an increase in research publications focused on that particular type of natural hazard.

3.2. Big Data in Natural Disaster Management

In natural hazards research and development (R&D), “Big Data” have become a key component. They are helping to change the way natural hazards are studied and natural disasters are managed. This is the position taken by Yu et al. [2] in their systematic review of the scientific literature, which analyzes the role of Big Data in natural disaster management.

The authors created a sample of peer-reviewed journal articles published from 2011 to 2018, starting from an initial collection in the research catalogue Google Scholar, and then manually skim-reading each publication to reach the final body of relevant literature. Three aspects are specifically reviewed in the paper: (1) the major sources of Big Data; (2) the associated achievements in different disaster management phases; and (3) emerging technological topics associated with leveraging this new ecosystem of Big Data to monitor and detect natural hazards, mitigate their effects, assist in relief efforts, and contribute to the recovery and reconstruction processes.

Given that no standard definition of “Big Data” in disaster management is currently available, the authors acknowledge that some documents exist at national and international levels that outline the emerging data collections and a catalogue of sources of Big Data in disaster resilience. However, it is the authors’ opinion that the concept of Big Data goes beyond the datasets themselves, regardless of their size. Big Data should be considered as the integration of diverse data sources and the capability to analyze and use the data (usually in real time) to the benefit of the population and society faced with a given disaster.

The authors find that satellite imagery, crowd-sourcing, and social media data serve as the most popular data for disaster management. Not surprisingly, satellite remote-sensing technology is primarily used for post-disaster damage assessment through change detection, and to support the response through operational assistance. At the same time, the use of aerial imagery captured via unmanned aerial vehicles (UAVs) is becoming more common, because such instruments support situational awareness by delivering data much faster, and at higher spatial resolution, than satellite imagery.

In this regard, this finding aligns with the current trend in operational services for disaster risk management. For example, the Copernicus Emergency Management Service (Copernicus EMS) has been assessing the feasibility and the associated benefits of the use of airborne sensors on-board drones or planes in its operational workflow, through specific agreements with plane and drone operators for on-demand rapid data acquisition and processing services in Europe. Significant advantages can be achieved when these data sources are used in combination with satellite Earth Observation data, for both mapping and validation tasks [7]. However, Yu et al. rightly point out that some challenges are still open (e.g., short battery life; unforeseen behaviour in different atmospheric conditions; limited scope of pilot training for users; and legislation that severely limits the use of UAVs in most countries).

Similar critical comments are made by the authors with regard to crowd-sourcing and social media, by highlighting opportunities and current limitations that not only scientists but also first responders and policy/decision-makers need to account for. Although this aspect is only briefly investigated in the paper, it is evident that more research and technological transfer are required to develop effective analytics (even of “real-time” type, in the case of social media), to extract valuable and reliable information that can be used as validated inputs in the disaster management cycle and be integrated with authoritative data, such as terrain and census.

Yu et al. have also mapped the major data sources and the application fields within the four distinct phases of the disaster management cycle, i.e., “mitigation”, “preparedness”, “responses”, and “recovery”.

“Machine learning” and “Cyber-infrastructure” emerge as the two evolutionary technologies that may facilitate disaster management in various ways. The review of the existing technologies and recent achievements proves that this is currently a vibrant research field, but with the major weakness of a technological gap between research and society. Big Data and cyber-infrastructure still pose significant challenges in terms of data volume, fast data transfer, intuitive data visualization and, more generally, efficient data management, that require financial and information technology (IT) resources, as well as expert users and translators of these data into information valuable for end users.

3.3. Seismic Risk: Hazard, Vulnerability and Exposure/Impact

Seismic risk is the most investigated natural risk in this special issue, with 5 of the 10 papers exploring hazard, vulnerability or exposure/impact, i.e., the three components of the seismic risk equation.
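For readers less familiar with the seismic risk equation, the conventional textbook form combines the three components as a product. The expression below is given only as an illustration: the symbols R, H, V and E are introduced for this sketch, and the individual papers in the collection may quantify each term differently.

```latex
R = H \times V \times E
```

Here R denotes risk, H the hazard (the probability of a given level of ground shaking at a site), V the vulnerability of the exposed elements, and E the exposure (the people and assets that could be affected).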

3.3.1. Hazard

To characterize the seismic hazard and risk at a given location, knowledge of the local geology is essential. This is even more important at sites, such as large cities and megacities, that are heavily urbanized and where rapid urbanisation may have happened with poor knowledge of the subsurface and little adherence to earthquake-proof building codes, such that an epicentral hit has the potential to cause 1 million fatalities [8]. The majority of cities are built on sedimentary basins that, on the one hand, offer flat topography for building development and fertile soils and groundwater resources for subsistence but, on the other hand, have the potential to amplify seismic wave motion and cause resonance in the case of earthquakes.

This is the geological context of the Jakarta Basin, in Indonesia, that is investigated by Ry et al. [9]. The authors tested a relatively new and simple technique to map shallow seismic structure using body-wave polarization. This technique proved to be a cost-effective alternative to the use of borehole and active source surveys, wherever three-component seismometers are operated.

To this end, Ry et al. exploited two dense, temporary broadband seismic networks covering Jakarta city and its surroundings during two distinct periods: 96 stations from October 2013 to February 2014; 143 stations between April and October 2018. This second deployment provided coverage just outside Jakarta in order to reveal the extent of the basin edge. Signals from 56 earthquakes with a good signal-to-noise ratio (SNR), varying from local to regional and teleseismic earthquakes, were recorded and evaluated. By applying the polarization technique to these earthquake signals, the apparent half-space shear-wave velocity (Vsahs) beneath each station was obtained, providing spatially dense coverage of the sedimentary deposits and the edge of the basin.
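As a rough illustration of how a polarization measurement can be turned into a velocity, the sketch below uses the classical half-space relation for an incident P wave, in which the apparent incidence angle is twice the angle of the reflected S wave. This relation is an assumption made here for illustration and is not stated in the editorial; the processing chain of Ry et al. may differ. The function name and the numerical values are hypothetical.

```python
import math


def apparent_half_space_vs(apparent_incidence_deg, ray_parameter_s_per_km):
    """Apparent half-space shear-wave velocity (km/s) from P-wave polarization.

    Assumes the classical half-space relation Vs = sin(i_app / 2) / p, where
    i_app is the apparent P-wave incidence angle measured from particle motion
    and p is the horizontal ray parameter (s/km). Illustrative only.
    """
    if ray_parameter_s_per_km <= 0:
        raise ValueError("ray parameter must be positive")
    half_angle = math.radians(apparent_incidence_deg) / 2.0
    return math.sin(half_angle) / ray_parameter_s_per_km


# Hypothetical measurement: 20 degrees apparent incidence, p = 0.06 s/km.
vs_estimate = apparent_half_space_vs(20.0, 0.06)
print(f"apparent half-space Vs: {vs_estimate:.2f} km/s")
```

Under this assumption, a lower apparent incidence angle for the same ray parameter maps to a lower apparent velocity, which is why soft sedimentary fill stands out against the basin edge.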

The results showed that spatial variations in Vsahs are compatible with previous studies, and appear to reflect the average shear-wave velocity (Vs) of the top 150 m. The authors were also able to extend this information beyond the city limits of Jakarta to what was thought to be the basin edge. The understanding of the complete geometry of the Jakarta sedimentary basin is crucial to develop a more accurate ground-motion simulation for hypothetical earthquake scenarios that can characterize the seismic risk in Jakarta.

3.3.2. Vulnerability

Giuffrida et al. [10] focus on the seismic vulnerability in small inland urban centres. This is an important topic that has sometimes received less attention than the seismic risk in cities and densely populated agglomerations. Areas that are marginal to large cities, or are located in mountainous regions or locations outside of main infrastructure and transportation networks, may be exposed to the risk of depopulation. If hit by earthquakes, these minor centres could be permanently abandoned soon after the seismic event. Therefore, in the process of restoration and redevelopment of minor historic centres, land and urban planning needs to encompass seismic vulnerability reduction policies, while preserving the integrity and cultural identity of the main buildings.

In this regard, the study by Giuffrida et al. is contextualized in the current Italian legislation framework. The Emergency Limit Condition (ELC) was introduced in 2012 as a municipal-scale analysis set up based on the Civil Protection Plans, and aims to guarantee that the emergency management system works during the post-earthquake phase. In particular, the ELC represents the limit condition for which, after the seismic event, all the functions (including residence) of the urban settlement are lost, except for the strategic functions necessary for the emergency management, their accessibility and connection with the territorial context.

The authors propose an approach to seismic vulnerability reduction consisting of three main stages: (1) knowledge of the typological, constructive, and technological features of the buildings; (2) analysis of the possible damage in case of an earthquake; and (3) planning of actions to reduce the vulnerability of buildings, with a cost modelling tool to define the trade-off between the extension and intensity of the vulnerability reduction works, given the available budget. The case study is the old town of Brisighella, in the province of Ravenna, Emilia-Romagna region, northern Italy, for which the authors provide an evaluation of the vulnerable assets, in terms of both the human and urban capitals. This evaluation allows the authors to compare the costs of the seismic retrofit with the advantages of safety and to provide further evidence to formalize the equalization model.

A small rural municipality is also the case study of the paper by Chieffo and Formisano [4]. The authors assessed the seismic vulnerability and damage of the old masonry building compounds in a sector of the historic centre of Senerchia, in the province of Avellino, Campania region, southern Italy. The authors describe how they classified the inspected building aggregates by construction typology using the CARTIS form, i.e., the method developed by the PLINIVS research centre of the University of Naples “Federico II” in collaboration with the Italian Civil Protection Department.

The work aimed to evaluate the effects of local amplification varying the topographic class and the type of soils foreseen in the Italian “Updating of Technical Standards for Construction” NTC18 issued in 2018. The influence of soil conditions was considered by implementing a macroelement model of a typical masonry aggregate of the investigated study area, with the goal to plot damage scenarios expected under different earthquake moment magnitudes and site-source distances. In practice, the authors assessed the global seismic vulnerability of the building sample using the macroseismic method according to the European macroseismic EMS-98 scale, and identified the buildings most susceptible to seismic damage. Then, they developed 12 damage scenarios by means of an appropriate seismic attenuation law and analysed them with regard to local induced hazard effects. According to these damage scenarios, the site effects lead to a damage increment variable from 2% to 50%, which was much more marked at the smallest considered distance. In addition, local seismic effects were considerable for larger magnitudes. Seismic amplification factors due to the soil condition increase the occurrence probability of attaining the largest damage thresholds.

3.3.3. Economic and Social Impacts

In a quantitative seismic risk assessment, the population is the first element at risk to be considered, and the possible fatalities caused by an earthquake of a given magnitude are the first consequence to be calculated. However, earthquakes are natural disasters that can also cause enormous economic damage if they affect the integrity and functioning of buildings, infrastructure and utilities.

The paper by Iakubovskii et al. [11] focuses on electricity utilities and is original in that the proposed mathematical model is applied at transnational level to evaluate the reliability of the interconnected electricity supply system of three countries of the Eurasian Economic Union (EAEU), namely Russia, Kazakhstan, and Kyrgyzstan, under the threat of earthquakes. The EAEU consists of Armenia, Belarus, Kazakhstan, Kyrgyzstan, and Russia, and is currently integrating its energy markets in view of the planned creation of a common electricity market by 2019. The question of the reliability of electricity supply in the wake of natural hazards has gained the attention of the EAEU states in the framework of their energy strategies. Even though such interruptions are not frequent, each power outage or blackout affects several million people and causes vast economic losses and damage. Therefore, the authors call for the development of coordinated policies and risk management strategies to deal with electricity outage risks in the EAEU.

In their work, Iakubovskii et al. implement a modified version of the simplified reliability assessment approach, based on the so-called “N-i criterion”. The authors determine which elements of the system are susceptible to failure due to an earthquake of a given magnitude. The results of the scenario analysis of earthquakes and their impacts on the reliability of the power supply system highlight that the energy security of the EAEU region is affected by the existence of vulnerable interconnections. The interconnections where disruptions of the electricity supply would have high impacts are situated between Kazakhstan and Kyrgyzstan, as well as between the isolated “West” node of Kazakhstan and Russia. Power supply interruptions on these lines can seriously affect the stability of the electricity transmission system, and lead to huge economic losses in the affected regions.
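The idea behind an N-i style check can be sketched on a toy network: remove every combination of i lines and test whether the remaining system still holds together. The example below is a simplified illustration and not the authors' model. It assumes that plain graph connectivity is an adequate proxy for continuity of supply, and the node names, line list and function names are hypothetical.

```python
from collections import deque
from itertools import combinations


def is_connected(nodes, lines):
    """Return True if every node can be reached from the first one."""
    if not nodes:
        return True
    adjacency = {node: set() for node in nodes}
    for a, b in lines:
        adjacency[a].add(b)
        adjacency[b].add(a)
    start = nodes[0]
    seen = {start}
    queue = deque([start])
    while queue:  # breadth-first search over the surviving lines
        current = queue.popleft()
        for neighbour in adjacency[current]:
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return len(seen) == len(nodes)


def critical_outages(nodes, lines, i):
    """List every set of i lines whose simultaneous loss splits the network."""
    critical = []
    for outage in combinations(lines, i):
        surviving = [line for line in lines if line not in outage]
        if not is_connected(nodes, surviving):
            critical.append(outage)
    return critical


# Hypothetical four-node system: a triangle A-B-C plus a single tie line C-D.
toy_nodes = ["A", "B", "C", "D"]
toy_lines = [("A", "B"), ("B", "C"), ("C", "A"), ("C", "D")]
print(critical_outages(toy_nodes, toy_lines, 1))
```

In this toy case the single tie line to node D is the only interconnection whose loss isolates part of the system, which mirrors the kind of weak link the scenario analysis is designed to reveal. A realistic assessment would also need line capacities, generation and demand.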

Going back to the human component of exposure and impact, Raccanello et al. [12] rightly recall that natural disasters have a potentially highly traumatic impact on the psychological functioning of the affected population, and specifically on children. This paper is extremely interesting since it investigates the psychological representation of earthquakes in children’s minds, a topic that has been given scarce attention in the literature so far. The study of how people, and in particular children, perceive and represent natural disasters not only provides new elements of knowledge from the theoretical point of view of this discipline but also, from an applied perspective, provides the foundations for improving the risk awareness of the youngest generations in order to promote adequate preparedness, in particular through emotional prevention.

The authors involved a convenience sample of 128 primary school children, from the second to the fourth grades, coming from a variety of socio-economic status levels, in north-eastern Italy. Most of the children had never experienced earthquakes directly. Of the small percentage who had experienced an earthquake at least once, none reported any damage. The participants were asked to complete a written definition task and an online recognition task. The answers were analysed through the Rasch model.
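For reference, the Rasch model in its basic dichotomous form gives the probability that person n answers item i positively as a logistic function of the difference between a person parameter and an item parameter. The editorial does not specify which variant the authors used, so the expression below is the standard textbook form and the symbols are introduced here only for illustration.

```latex
P(X_{ni} = 1) = \frac{\exp(\theta_n - b_i)}{1 + \exp(\theta_n - b_i)}
```

Here \theta_n is the ability (or trait level) of person n and b_i is the difficulty of item i. When \theta_n = b_i the probability is 0.5.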

In the children’s representation of earthquakes, natural elements, such as geological ones, were the most salient, followed by man-made and then by person-related elements. Older children revealed a more complex representation of earthquakes, and this was detected through the online recognition task. The authors conclude that Italian primary school children possess a basic knowledge of earthquakes, are able to differentiate elements pertaining to different domains, and give particular relevance to the core geological issues characterizing earthquakes. At the same time, children showed an initial awareness of the different elements to which damage can be associated (e.g., the macroscopic and external consequences for the structures which are built by the individuals). Human behaviors such as escaping, biological consequences such as being hurt, and affective reactions assume similar relevance. By contrast, age differences were detected less clearly in the written task than in the recognition task. The better differentiation in the latter may be due to the more intuitive nature of the recognition task, which requires fewer cognitive resources, as well as the fact that the use of information and communication technology (ICT) instruments may stimulate children to engage with the task.

3.4. Climatic/Hydro-Meteorological Hazards

The “Natural Hazards” Section encourages submissions about hydro-meteorological, atmospheric, climatological and oceanographic hazards. Unlike the statistics for the whole research publication realm presented in [3], in Geosciences journal these types of natural hazards have so far been less researched than the geological/geomorphic ones. Therefore, it is interesting that 2 of the 10 papers published in this inaugural special issue relate to drought and weather prediction in the context of the simulation of hurricanes.

With regard to drought, it is well known that the deficit or inadequate timing of precipitation over an extended period of time leads to water scarcity that, insidiously, propagates in time through the hydrological cycle, and causes serious damage to socioeconomic and environmental systems. Naumann et al. [13] recall that, in the last 20 years, several drought periods were reported across Argentina, with at least three devastating events in 2006, 2009 and 2011. The authors focus their analysis on a systematic quantification of the drought risk at national scale: (i) as a function of long-term hazard, exposure and vulnerability; and (ii) dynamically as a combination of changes in drought conditions and the exposed assets. Media news, official reports at national and provincial levels, and the DesInventar disaster loss database were used as data sources to identify drought impacts. By combining drought hazard indicators and exposure layers, the authors analysed spatial and temporal patterns of exposure towards a better understanding of the interaction between changes in population structure and regional climate variability. Assets exposed to droughts were identified with several records of drought impacts and declarations of farming emergencies. Indeed, dry periods had detrimental impacts on very diverse sectors including agriculture and livestock production, but also inland river transportation and hydropower production.

If suitable medium-range forecasts became available, the method by Naumann et al. could be helpful for monitoring the probability of impact occurrence from the onset of a drought, or even before, and therefore could provide scientific input to trigger pro-active measures in order to cope with and mitigate the potential impacts of droughts.

Predictability is also the keyword of the study published by Shen [14]. The author builds upon previous studies wherein he achieved realistic 30-day simulations of multiple African easterly waves (AEWs) and an averaged African easterly jet (AEJ), as well as of hurricane Helene (2006) from Day 22 to Day 30. In the present paper, Shen further analyzes such extended predictability based on recent understandings of chaos and instability within Lorenz models and the generalized Lorenz model (GLM), and concludes that a statement of the theoretical predictability of two weeks is not universal. He also presents new insight into chaotic and non-chaotic processes revealed by the GLM, and shows that there is potential for extending prediction lead times at extended-range scales. Examples of simulation of hurricanes with larger errors are also discussed, leading to the conclusion that the predictability of hurricane formation may be better near the Cape Verde Islands that are closer to the continent (e.g., for hurricane Helene) than over the Atlantic Ocean (e.g., for hurricane Florence). Shen plans future work on refining the model to better examine the validity of the mechanism in explaining the recurrence of multiple AEWs.
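The notion of limited predictability in chaotic systems can be illustrated with the classical three-variable Lorenz (1963) system, in which two trajectories that start almost identically eventually diverge. The sketch below is not Shen's generalized Lorenz model. It uses the standard textbook parameter values, a simple fourth-order Runge-Kutta integrator, and function names chosen here purely for illustration.

```python
import math


def lorenz_rhs(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the classical Lorenz (1963) equations."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step."""
    def shifted(base, slope, factor):
        return tuple(b + factor * s for b, s in zip(base, slope))

    k1 = lorenz_rhs(state)
    k2 = lorenz_rhs(shifted(state, k1, dt / 2))
    k3 = lorenz_rhs(shifted(state, k2, dt / 2))
    k4 = lorenz_rhs(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def separation_history(perturbation=1e-8, dt=0.01, steps=4000):
    """Distance between a reference run and a slightly perturbed run."""
    reference = (1.0, 1.0, 1.0)
    perturbed = (1.0 + perturbation, 1.0, 1.0)
    history = []
    for _ in range(steps + 1):
        history.append(math.dist(reference, perturbed))
        reference = rk4_step(reference, dt)
        perturbed = rk4_step(perturbed, dt)
    return history


history = separation_history()
print(f"initial separation: {history[0]:.1e}")
print(f"largest separation: {max(history):.1f}")
```

The initial difference is far below any realistic observation error, yet the two runs end up on entirely different parts of the attractor. Whether and where such error growth can be delayed is the kind of question the extended-predictability analysis addresses.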

3.5. Scientific Analysis of Past Incidents and Disaster Forensics

The paper by Koskinas et al. [1] reminds us that, when disasters happen (either natural or human-induced or as a combination of the two) and gain the attention of the general public, questions frequently arise about the causes (and responsibilities). Scientific investigations aim to provide an objective and evidence-based description and interpretation of the event, in the hope that this knowledge will strengthen society’s capabilities to prevent similar events in future or, where prevention is not possible, to improve disaster management and contribute to building resilience to hazards.

On the other hand, forensic investigation applied to the study of natural hazard disasters is a relatively new discipline. Despite what the term “forensic” could suggest, these types of investigations are not intended to seek or assign legal responsibility, but rather to understand which factors and how they contributed to the gestation and occurrence of a disaster in order to prevent and mitigate disaster risk [15].

Koskinas et al. present the outcomes of their hydroclimatic analysis of the Oroville Dam’s catchment in California where, in February 2017, a major spillway incident occurred at the namesake dam (Figure 2f). Heavy rainfall during the 2017 California floods damaged the main spillway of the dam on 7 February, prompting the evacuation of more than 180,000 people living downstream along the Feather River and the relocation of a fish hatchery.

Figure 3 compares cloud-free satellite multispectral images collected by the Copernicus Sentinel-2 constellation on 1 December 2016 and 11 March 2017. The GIF animations accessible in the Supplementary Materials of this editorial highlight the impact of the rainfall, which raised the reservoir surface level, and the extent of the damage to the main spillway, still visible one month after the event.

Figure 3.

Oroville Dam, California, USA, (a) before and (b) after the spillway incident that occurred in February 2017, in two satellite multispectral images collected on 1 December 2016 and 11 March 2017, respectively (contains Copernicus Sentinel-2 data 2016–2017). The full time-lapse is provided in the Supplementary Materials.

The incident was widely covered by the media and an independent forensic team was tasked with determining the causes. Koskinas et al. chose to back-analyse this incident because it represents an interesting case study of a dam failure that occurred under standard operating conditions, yet at an unfortunate time. A hydroclimatic analysis of the catchment is conducted, along with a review of related design and operational manuals. Based on summary characteristics of the 2017 floods, the authors outline possible causes in order to understand which factors contributed most significantly. They conclude that the event was most likely the result of a structural problem in the dam’s main spillway and detrimental geological conditions. However, the analysis of surface level data also reveals operational issues that were not present during previous larger floods. The authors suggest that a discussion should be promoted about flood control design methods, specifications, and dam inspection procedures, and how these can be improved to prevent the future occurrence of similar events.

Supplementary Materials

The following GIF animations are available online at https://www.mdpi.com/2076-3263/10/1/22/s1: time-lapse of cloud-free satellite multispectral images collected by the Copernicus Sentinel-2 constellation from 1 December 2016 to 11 March 2017, showing the evolution of the Oroville Dam 2017 spillway incident (created in Sentinel Hub EO Browser and exported under a CC BY 4.0 license).

Funding

This research received no external funding.

Acknowledgments

The Guest Editor thanks all the authors, Geosciences’ editors, and reviewers for their great contributions and commitment to this Special Issue. Special thanks go to Richard Li, Geosciences’ Assistant Editor, for his dedication to this project and his valuable collaboration in the setup, promotion and management of the Special Issue.

Conflicts of Interest

The author declares no conflict of interest.

References

1. Koskinas, A.; Tegos, A.; Tsira, P.; Dimitriadis, P.; Iliopoulou, T.; Papanicolaou, P.; Koutsoyiannis, D.; Williamson, T. Insights into the Oroville Dam 2017 spillway incident. Geosciences 2019, 9, 37.

2. Yu, M.; Yang, C.; Li, Y. Big data in natural disaster management: A review. Geosciences 2018, 8, 165.

3. Emmer, A. Geographies and scientometrics of research on natural hazards. Geosciences 2018, 8, 382.

4. Chieffo, N.; Formisano, A. Geo-hazard-based approach for the estimation of seismic vulnerability and damage scenarios of the old city of Senerchia (Avellino, Italy). Geosciences 2019, 9, 59.

5. Burton, I.; Kates, R.W. The perception of natural hazards in resource management. Nat. Resour. J. 1964, 3, 412–441.

6. Wisner, B. Handbook of Hazards and Disaster Risk Reduction; Routledge: London, UK, 2012.

7. Drones and Planes in Support of Copernicus: Examples from the Emergency Management Service—Copernicus In Situ Component. Available online: https://insitu.copernicus.eu/news/drones-and-planes-in-support-of-copernicus-examples-from-the-emergency-management-service (accessed on 31 December 2019).

8. Bilham, R. The seismic future of cities. Bull. Earthq. Eng. 2009, 7, 839–887.

9. Ry, R.V.; Cummins, P.; Widiyantoro, S. Shallow shear-wave velocity beneath Jakarta, Indonesia revealed by body-wave polarization analysis. Geosciences 2019, 9, 386.

10. Giuffrida, S.; Trovato, M.R.; Circo, C.; Ventura, V.; Giuffrè, M.; Macca, V. Seismic vulnerability and old towns. A cost-based programming model. Geosciences 2019, 9, 427.

11. Iakubovskii, D.; Komendantova, N.; Rovenskaya, E.; Krupenev, D.; Boyarkin, D. Impacts of earthquakes on energy security in the Eurasian Economic Union: Resilience of the electricity transmission networks in Russia, Kazakhstan, and Kyrgyzstan. Geosciences 2019, 9, 54.

12. Raccanello, D.; Vicentini, G.; Burro, R. Children’s psychological representation of earthquakes: Analysis of written definitions and Rasch scaling. Geosciences 2019, 9, 208.

13. Naumann, G.; Vargas, W.M.; Barbosa, P.; Blauhut, V.; Spinoni, J.; Vogt, J.V. Dynamics of socioeconomic exposure, vulnerability and impacts of recent droughts in Argentina. Geosciences 2019, 9, 39.

14. Shen, B.W. On the predictability of 30-day global mesoscale simulations of African easterly waves during summer 2006: A view with the generalized Lorenz model. Geosciences 2019, 9, 281.

15. Mendoza, M.T.; Schwarze, R. Sequential disaster forensics: A case study on direct and socio-economic impacts. Sustainability 2019, 11, 5898.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).