Deep Learning in Biomedical Informatics and Healthcare

A topical collection in Sensors (ISSN 1424-8220). This collection belongs to the section "Biomedical Sensors".

Editors

Interests: brain-computer interfaces; human-machine interaction; signal processing; machine learning algorithms; connectivity-based methods

Interests: cognitive neuroscience; behavioural neuroscience; neuropsychology; biosignal processing; brain-computer interface; human-machine interaction; human factors; road safety

Interests: neuroimaging; passive brain-computer interface; human factors; machine learning; applied neuroscience; cooperation

Interests: deep learning; case-based reasoning; data mining; fuzzy logic; other machine learning and machine intelligence approaches for analytics, especially in big data

Interests: brain networks; affective and personality neuroscience; applied neuroscience; EEG signal processing; machine learning; graph theory

Topical Collection Information

Dear Colleagues,

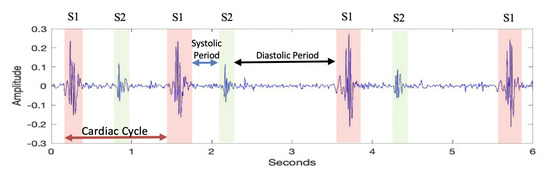

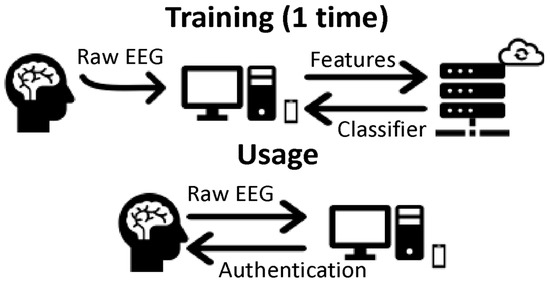

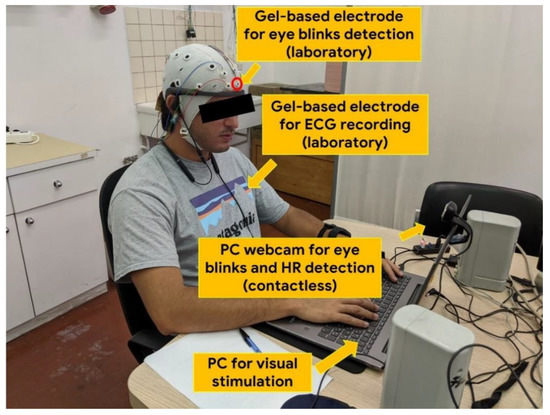

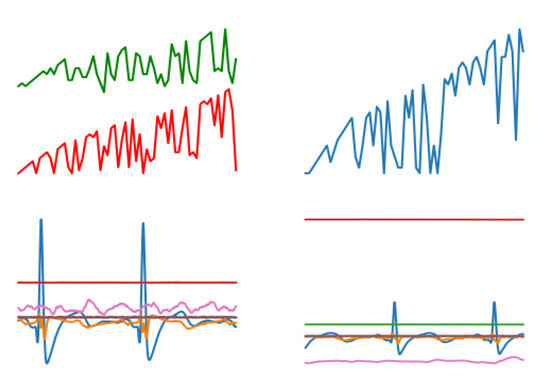

Artificial intelligence (AI) is already part of our everyday lives, and over the past few years, AI research and applications have grown rapidly. Much of this growth is due to the wide availability of GPUs, which make parallel processing ever faster, cheaper, and more powerful. Deep learning (DL) has enabled many practical applications of machine learning and, by extension, of the overall field of AI. DL has been applied to numerous research areas, such as mental state prediction and classification, image and speech recognition, computer vision, and predictive healthcare. The main advantage of DL algorithms lies in their ability to build computational models of large datasets by learning representations of the data at multiple levels of abstraction. As a result, DL models can provide insight into the complex structure of large datasets.

The effectiveness of DL algorithms in controlled settings and on curated datasets has been widely demonstrated and recognized, but realistic healthcare contexts may present issues and limitations that hinder high performance, for example, the invasiveness and cost of biomedical signal recording systems. In this regard, the aim of this Topical Collection is to gather the latest DL algorithms and applications for everyday-life contexts and various research areas in which biomedical signals, for example, the electroencephalogram (EEG), electrocardiogram (ECG), and galvanic skin response (GSR), are considered and possibly combined for: monitoring the user’s mental states while dealing with realistic tasks (i.e., passive brain-computer interfaces, pBCI); adapting Human–Machine Interactions (HMIs); and providing an objective and comprehensive assessment of the user’s wellbeing, as in remote and predictive healthcare applications.

Areas covered by this collection include, but are not limited to, the following:

- Modelling and assessment of mental states and physical/psychological impairments/disorders;

- Remote and predictive healthcare;

- Transfer learning;

- Human–Machine Interactions (HMIs);

- Adaptive automation;

- Human Performance Envelope (HPE);

- Passive Brain-Computer Interface (pBCI);

- Wearable technologies;

- Multimodality for neurophysiological assessment.

All types of manuscripts are considered, including original basic science reports, translational research, clinical studies, review articles, and methodology papers.

Dr. Gianluca Borghini

Dr. Gianluca Di Flumeri

Dr. Nicolina Sciaraffa

Assoc. Prof. Mobyen Uddin Ahmed

Ass. Prof. Manousos Klados

Guest Editors

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once registered, authors can proceed to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass the pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as they are accepted) and will be listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 250 words) can be sent to the Editorial Office for assessment.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for submission of manuscripts is available on the Instructions for Authors page. Sensors is an international peer-reviewed open access semimonthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript. The Article Processing Charge (APC) for publication in this open access journal is 2600 CHF (Swiss Francs). Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Keywords

- healthcare

- deep learning

- transfer learning

- diagnosis

- mental states

- biomedical signal fusion

- machine learning

- artificial intelligence

- adaptive automation

- passive brain–computer interface