Maximum Entropy and Its Applications

Editor

Dr. Dawn E. Holmes

Collection Editor

Department of Statistics and Applied Probability, University of California, Santa Barbara, CA 93106-3110, USA

Interests: Bayesian networks; machine learning; data mining; knowledge discovery; the foundations of Bayesianism

Topical Collection Information

Dear Colleagues,

The field of entropy-related research has been particularly fruitful in the past few decades and continues to produce important results in a range of scientific areas, including statistical physics, quantum communications, environmental systems, natural language processing, and network analysis. Contributions to this Collection are welcome from both the theoretical and applied perspectives of entropy, including papers addressing conceptual and methodological developments, as well as new applications of entropy and information. Papers on foundational issues involving the theory of maximum entropy are also welcome.

Dr. Dawn E. Holmes

Collection Editor

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once registered, authors can proceed to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass pre-check are peer-reviewed. Accepted papers will be published continuously in the journal as soon as they are accepted and will be listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 250 words) can be sent to the Editorial Office for assessment.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for the submission of manuscripts are available on the Instructions for Authors page. Entropy is an international peer-reviewed open access monthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript.

The Article Processing Charge (APC) for publication in this open access journal is 2600 CHF (Swiss Francs).

Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Keywords

- Maximum entropy

- Bayesian maximum entropy

- Relative entropy

- Entropy loss

- Information theory

- Estimating missing information

Published Papers (3 papers)

Open Access Article

Bio-Inspired Intelligent Systems: Negotiations between Minimum Manifest Task Entropy and Maximum Latent System Entropy in Changing Environments

by Stephen Fox, Tapio Heikkilä, Eric Halbach and Samuli Soutukorva

Cited by 1 | Viewed by 1997

Abstract

In theoretical physics and theoretical neuroscience, increased intelligence is associated with increased entropy, which entails potential access to an increased number of states that could facilitate adaptive behavior. Potential to access a larger number of states is a latent entropy as it refers

[...] Read more.

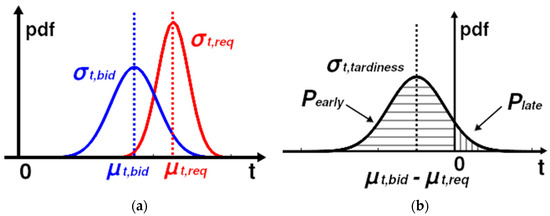

In theoretical physics and theoretical neuroscience, increased intelligence is associated with increased entropy, which entails potential access to an increased number of states that could facilitate adaptive behavior. The potential to access a larger number of states is a latent entropy, as it refers to the number of states that could possibly be accessed; at the same time, it is recognized that functioning needs to be efficient through the minimization of manifest entropy. For example, in theoretical physics, the importance of efficiency is recognized through the observation that nature is thrifty in all its actions and through the principle of least action. In this paper, system intelligence is explained as the capability to maintain internal stability while adapting to changing environments by minimizing manifest task entropy while maximizing latent system entropy. In addition, it is explained how automated negotiation relates to balancing adaptability and stability, and a mathematical negotiation model is presented that enables the balancing of latent system entropy and manifest task entropy in intelligent systems. Furthermore, this first-principles analysis of system intelligence is related to everyday challenges in production systems through multiple simulations of the negotiation model. The results indicate that manifest task entropy is minimized when maximization of latent system entropy is used as the criterion for task allocation in the simulated production scenarios.

Open Access Article

About the Definition of the Local Equilibrium Lattice Temperature in Suspended Monolayer Graphene

by Marco Coco, Giovanni Mascali and Vittorio Romano

Cited by 3 | Viewed by 2630

Abstract

The definition of temperature in non-equilibrium situations is among the most controversial questions in thermodynamics and statistical physics. In this paper, by considering two numerical experiments simulating charge and phonon transport in graphene, two different definitions of local lattice temperature are investigated: one

[...] Read more.

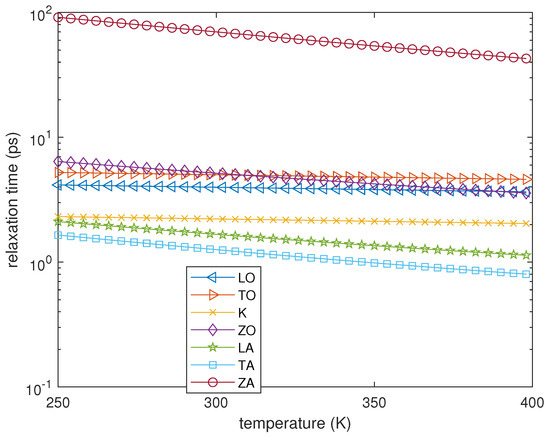

The definition of temperature in non-equilibrium situations is among the most controversial questions in thermodynamics and statistical physics. In this paper, by considering two numerical experiments simulating charge and phonon transport in graphene, two different definitions of local lattice temperature are investigated: one based on the properties of the phonon–phonon collision operator, and the other based on energy Lagrange multipliers. The results indicate that the first can be interpreted as a measure of how fast the system is trying to approach local equilibrium, while the second can be interpreted as the local equilibrium lattice temperature. We also provide the explicit expression of the macroscopic entropy density for the system of phonons, by which we theoretically explain the approach of the system toward equilibrium and characterize the nature of the equilibria in the spatially homogeneous case.

Open Access Article

A Bootstrap Framework for Aggregating within and between Feature Selection Methods

by Reem Salman, Ayman Alzaatreh, Hana Sulieman and Shaimaa Faisal

Cited by 23 | Viewed by 5163

Abstract

In the past decade, big data has become increasingly prevalent in a large number of applications. As a result, datasets suffering from noise and redundancy issues have necessitated the use of feature selection across multiple domains. However, a common concern in feature selection

[...] Read more.

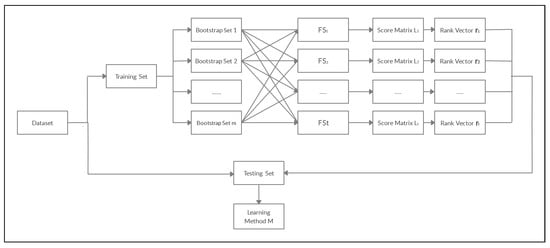

In the past decade, big data has become increasingly prevalent in a large number of applications. As a result, datasets suffering from noise and redundancy issues have necessitated the use of feature selection across multiple domains. However, a common concern in feature selection is that different approaches can give very different results when applied to similar datasets. Aggregating the results of different selection methods helps to resolve this concern and control the diversity of selected feature subsets. In this work, we implemented a general framework for the ensemble of multiple feature selection methods. Based on diversified datasets generated from the original set of observations, we aggregated the importance scores generated by multiple feature selection techniques using two methods: the Within Aggregation Method (WAM), which refers to aggregating importance scores within a single feature selection method, and the Between Aggregation Method (BAM), which refers to aggregating importance scores between multiple feature selection methods. We applied the proposed framework to 13 real datasets with diverse performances and characteristics. The experimental evaluation showed that WAM provides an effective tool for determining the best feature selection method for a given dataset. WAM has also shown greater stability than BAM in terms of identifying important features. The computational demands of the two methods appeared to be comparable. The results of this work suggest that by applying both WAM and BAM, practitioners can gain a deeper understanding of the feature selection process.
