Abstract

In recent years, the rapid integration of artificial intelligence (AI) technologies into education has sparked intense academic and public debate regarding their impact on students’ cognitive development. One of the central concerns raised by researchers and practitioners is the potential erosion of critical and independent thinking skills in an era of widespread reliance on neural network-based technologies. On the one hand, AI offers new opportunities for personalized learning, adaptive content delivery, and increased accessibility and efficiency in the educational process. On the other hand, growing concerns suggest that overreliance on AI-driven tools in intellectual tasks may reduce students’ motivation to engage in self-directed analysis, diminish cognitive effort, and lead to weakened critical thinking skills. This paper presents a comprehensive analysis of current research on this topic, including empirical data, theoretical frameworks, and practical case studies of AI implementation in academic settings. Particular attention is given to the evaluation of how AI-supported environments influence students’ cognitive development, as well as to the pedagogical strategies that can harmonize technological assistance with the cultivation of autonomous and reflective thinking. This article concludes with recommendations for integrating AI tools into educational practice not as replacements for human cognition, but as instruments that enhance critical engagement, analytical reasoning, and academic autonomy.

1. Introduction

1.1. Literature Review

In recent years, there has been a surge of interest in the application of artificial intelligence (AI) technologies in the field of education. This interest is driven not only by technological advancement but also by the need to transform pedagogical approaches in the context of digital learning (Beetham & Sharpe, 2013; Holmes et al., 2021). Numerous researchers emphasize that AI tools significantly expand the possibilities for personalized, adaptive learning and intellectual support throughout the educational process (Luckin et al., 2016; U.S. Department of Education, 2023).

A significant body of literature focuses on the cognitive impact of AI on learners. On the one hand, generative models such as ChatGPT or Bing Chat can serve as “cognitive mediators”, stimulating processes of analysis, argumentation, and reflection (Vasconcelos & dos Santos, 2023; Darwin et al., 2024). On the other hand, there are concerns that widespread and uncritical use of AI may foster algorithmic dependence and diminish cognitive engagement (Selwyn, 2020; Akgun et al., 2024).

Foundational theories of cognitive and metacognitive development—such as metacognition (Flavell, 1979; Schraw & Dennison, 1994) and sociocultural internalization (Vygotsky, 1978)—form a crucial theoretical framework for assessing the influence of AI. These frameworks assert that critical thinking develops through active cognitive engagement, self-reflection, argumentation, and hypothesis testing. In this context, AI can either support thinking when pedagogically integrated, or substitute for intellectual activity when passively used.

Several international organizations (UNESCO, 2022; OECD, 2021; World Economic Forum, 2023) underscore the need for fostering digital critical literacy—the ability to verify, evaluate, and meaningfully integrate information in digital environments. These competencies are seen as key to the future of sustainable education. A critical aspect of this challenge involves training both educators and students in the ethical use of AI (Heikkilä & Niemi, 2023; Floridi & Cowls, 2019).

Literature further highlights the importance of blended and flexible learning models, in which AI tools are not replacements for teachers but complements to pedagogical interaction (Williamson & Eynon, 2020). These models combine digital capabilities with the development of high-level cognitive functions—such as analysis, interpretation, and independent judgment. It is the pedagogical design, rather than the mere presence of AI, that determines educational effectiveness (Holmes, 2023; Braun & Clarke, 2006).

Thus, contemporary research reveals the dual nature of AI in education: it can enhance cognition, but it can also promote intellectual inertia. This duality underscores the relevance of the present study, which seeks to identify the conditions under which AI serves as a catalyst for, rather than a replacement of, critical thinking.

In the 21st century, the digitalization of education has transitioned from a prospective trend to an objective necessity. This shift is driven by both the globalization of education and the rapid development of digital technologies, including AI. AI has become an integral part of the educational ecosystem, transforming instructional practices, learner engagement, and assessment processes (Floridi & Cowls, 2019; Holmes et al., 2021; UNESCO, 2022; Holmes, 2023).

Modern AI tools, such as adaptive platforms (Squirrel AI, Century Tech), intelligent tutors (Knewton, Carnegie Learning), automated grading systems, and generative models (ChatGPT-4o, Bing Chat), are being implemented in a growing number of educational institutions (Luckin et al., 2016; Vasconcelos & dos Santos, 2023; Akgun et al., 2024). According to forecasts by the World Economic Forum (2023) and the Center for Curriculum Redesign (Holmes et al., 2021), AI is expected to evolve from a supportive tool into an active agent shaping educational content and structure by 2030.

However, these advancements bring a set of challenges, foremost among them the preservation and development of students’ critical and independent thinking. Several scholars argue that excessive algorithmization of education may lead to a decline in students’ cognitive engagement (Selwyn, 2020; Williamson & Eynon, 2020; Darwin et al., 2024). Using AI to generate written assignments, solve problems, and search for information may simplify educational tasks, but it can also reduce the cognitive effort required for comprehension and analysis (Luckin et al., 2016; OECD, 2021; Sweller, 1988).

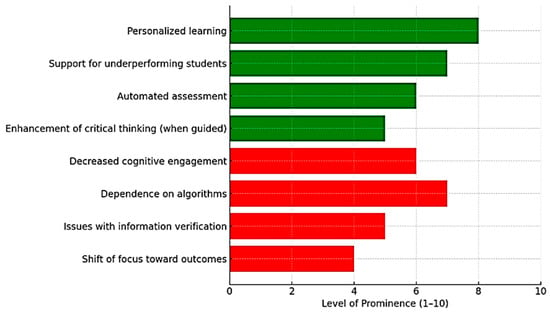

The chart shown in Figure 1 presents the principal risks and opportunities associated with AI in education, as identified through a combination of empirical data from participant interviews and surveys (N = 56) and a synthesis of recent literature (Holmes et al., 2021; Akgun et al., 2024; Selwyn, 2020; Luckin et al., 2016). The ‘Level of Prominence’ (1–10) reflects the average rating assigned by participants to each theme. Data were collected via a Likert-type survey, and the results were validated by triangulation with the interview data and literature review.

Figure 1.

Risks and Opportunities of Using AI in Education. Note: This figure is based on mixed-methods data. ‘Level of Prominence’ indicates the mean importance rating (1–10) assigned by participants. Themes were further cross-validated against the relevant literature for robustness. For details, see the Methods section.
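The ‘Level of Prominence’ described above is a per-theme mean of 1–10 ratings. The short Python sketch below shows that aggregation. The theme names, the ratings, and the helper name `prominence` are hypothetical placeholders, not the study's data or code, and rejecting out-of-range answers is an assumption about how such entries would be handled.

```python
from statistics import fmean

# Hypothetical 1-10 ratings per theme (illustrative placeholders, NOT study data).
RATINGS = {
    "Personalized learning": [8, 9, 7, 8],
    "Algorithmic dependence": [7, 6, 8, 7],
}


def prominence(ratings_by_theme: dict[str, list[int]]) -> dict[str, float]:
    """Return the mean 1-10 rating for each theme."""
    result = {}
    for theme, values in ratings_by_theme.items():
        if not values:
            raise ValueError(f"no ratings for theme {theme!r}")
        # Assumption: ratings outside the 1-10 scale are treated as data errors.
        if any(not 1 <= v <= 10 for v in values):
            raise ValueError(f"rating outside 1-10 for theme {theme!r}")
        result[theme] = fmean(values)
    return result


if __name__ == "__main__":
    # Print themes from most to least prominent, as a bar chart would order them.
    scores = prominence(RATINGS)
    for theme, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{theme}: {score:.2f}")
```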

A key risk is the development of “passive knowledge”, in which students rely primarily on ready-made answers rather than engaging in analysis, synthesis, and reflection (Flavell, 1979; Selwyn, 2020–2023; Darwin et al., 2024). This is particularly evident in writing assignments, homework, and online tests completed with AI tools. As Darwin et al. (2024) note, there is a danger of intellectual dependency, in which students lose the ability to independently formulate hypotheses, construct arguments, and interpret evidence.

This issue is exacerbated by the predominantly technical focus of AI implementation in education. Institutions often emphasize functionality at the expense of pedagogical scaffolding, ethical reasoning, and critical literacy training (Beetham & Sharpe, 2013; U.S. Department of Education, 2023; Vygotsky, 1978; Heikkilä & Niemi, 2023). This can shift attention from formative learning goals to instrumental outcomes, diminishing the value of the learning process itself (Sweller, 1988). As illustrated in Table 1, international approaches to AI integration vary significantly: some countries emphasize ethical and critical literacy (e.g., Finland), while others prioritize efficiency or innovation through large-scale implementation (e.g., the USA and China).

Table 1.

International Approaches to AI Integration in Education.

It is crucial to recognize that generative neural networks are built on large language models (LLMs), which produce text probabilistically and can therefore generate fluent but inaccurate output. Using them well requires high levels of digital critical literacy: the ability to verify, interpret, and meaningfully integrate information into one’s cognitive frameworks (Flavell, 1979; Vasconcelos & dos Santos, 2023; Holmes, 2023). Without these competencies, students are vulnerable to misinformation, manipulation, and flawed reasoning.

Nonetheless, recent studies offer a more optimistic perspective. With appropriate pedagogical scaffolding, AI can serve not merely as an automation tool but as an instrument for fostering higher-order thinking. Vasconcelos and dos Santos (2023) show that AI can function as an “object to think with”, enabling students to formulate hypotheses, evaluate alternatives, and deepen their understanding. Akgun et al. (2024) likewise report that AI-assisted tasks can promote argumentation, structural reasoning, and reflective thinking.

Therefore, it is necessary to reconsider the role of AI in education and explore its dual nature in relation to cognitive autonomy: is AI a threat to students’ critical thinking, or can it support its development, provided that it is integrated into an ethically aware and pedagogically grounded educational model?

1.2. Research Objectives and Hypotheses

The goal of this study is to investigate how the systematic use of AI tools in educational practice affects the development of students’ critical and independent thinking. Given the mixed findings of prior research, this study places particular emphasis on the pedagogical conditions under which AI may act not as a substitute for intellectual activity but as its catalyst.

Based on theoretical assumptions and empirical precedent, the following working hypotheses were formulated:

- H1: Students learning with the support of AI tools demonstrate higher levels of critical thinking compared to those learning without AI;

- H2: Instructor involvement and formative feedback when using AI positively correlate with students’ cognitive independence;

- H3: Pedagogically meaningful integration of AI (including metacognitive tasks, reflective analysis, and instructor support) results in lower levels of algorithmic dependence among students;

- H4: Students’ digital literacy and ability to critically evaluate information moderate the effectiveness of AI in supporting cognitive skill development.

Modern research highlights a number of key components necessary for the effective integration of artificial intelligence into educational practice. These include didactic purposefulness (the organic embedding of AI into the course structure), formative feedback (with an emphasis on process-oriented student support), the use of metacognitive assignments (which stimulate self-reflection), the provision of cognitive diversity (the application of AI across various learning formats), and an interdisciplinary approach (adapting AI integration to the specific characteristics of each discipline). These components are widely discussed in contemporary academic literature as factors that promote the development of critical and independent thinking (Floridi & Cowls, 2019; Holmes et al., 2021; OECD, 2021; UNESCO, 2022).

2. Materials and Methods

To examine comprehensively the impact of AI on the development of students’ critical and independent thinking, the study combined quantitative and qualitative analytical approaches. This mixed-methods design provided interpretive depth, enabling analysis of both objective indicators (such as cognitive skill levels) and subjective perceptions of AI technologies among students and instructors. It also allowed the results to be cross-checked from pedagogical, psychological, and technological perspectives.

2.1. Research Design

This study used a sequential design in which qualitative data received primary emphasis and were then verified quantitatively. It comprised the following phases:

- Phase One: A systematic literature review (n = 54 sources) was conducted, identifying key trends, risks, and potentials of AI in educational practice;

- Phase Two: A series of semi-structured interviews was conducted with representatives of the academic community, both faculty and students (n = 28);

- Phase Three: A case analysis was performed on 12 university-level implementations of AI tools, focusing on methods aimed at developing student thinking (e.g., analytical platforms, generative assistants, and reasoning visualization systems).

Special attention was given to blended learning practices where AI was integrated as a component of embedded digital solutions. In particular, this study assessed the effectiveness of digital assistants, automated assessment systems, adaptive testing modules, and neural networks supporting academic activities.

Formation of Experimental and Control Groups

Two sample groups were formed for the comparative analysis of AI’s influence on critical thinking development—an experimental group and a control group, each consisting of 28 participants (14 instructors and 14 students).

Experimental Group:

Participants in this group were part of courses where AI tools were systematically integrated into the educational process. This included the following:

- Use of generative models (ChatGPT-4o and Bing Chat) in writing assignments, data analysis, and reflective writing;

- Application of adaptive systems and digital assistants for independent work;

- Inclusion of AI within instructional modules (e.g., pedagogical scaffolding, critical response evaluation, and source analysis);

- Instructor supervision of AI interaction, accompanied by formative feedback.

Thus, AI was not only an auxiliary tool but also a pedagogically meaningful component aimed at fostering analytical and metacognitive strategies.

Control Group:

This group included instructors and students from comparable courses where AI tools were either not used or used sporadically and without regulation.

Specifically, teaching followed traditional formats (lectures, seminars, and assignments without generative AI systems), with the following characteristics:

- Student support was delivered via in-person consultations and standard feedback;

- There was no integration of AI into assessment or cognitive strategy development.

Group Matching Method:

Groups were matched on baseline characteristics: academic level, course profile, and workload. This procedure was intended to give both groups a comparable starting point, controlling for these external variables so that AI use could be treated as the key variable under investigation. Table 2 presents a detailed comparison of the conditions in the experimental and control groups, emphasizing differences in AI implementation strategies, the tools used, the type and extent of instructor supervision, assignment formats, and the intended pedagogical purposes of AI integration.

Table 2.

Comparison of Conditions in Experimental and Control Groups.

2.2. Participants

The study involved 28 instructors and 28 undergraduate and graduate students from three universities with pedagogical, technical, and humanities profiles.

Inclusion and exclusion criteria were applied equally to both the experimental and control groups to ensure comparability. The specific criteria used for group selection are summarized in Table 3. All participants, regardless of group assignment, were required to have at least one semester of systematic experience in higher education (either as instructors or as students), and to provide informed consent for participation in surveys and interviews.

Table 3.

Inclusion and Exclusion Criteria.

All participants were invited to both the survey and interview components; interviewees were purposively selected from the survey sample to ensure balanced representation.

For the experimental group, both instructors and students were selected based on their direct involvement in AI-integrated coursework or teaching during the semester under study, as described in Section “Formation of Experimental and Control Groups”.

For the control group, participants were drawn from parallel courses of similar academic level and discipline in which AI was not systematically used, but which met the same general inclusion criteria.

This approach ensured that both groups were balanced in terms of academic discipline, level, and educational experience, isolating the use of AI as the principal differentiating variable.

Inclusion Criteria:

- Systematic experience using AI in education for at least one academic semester;

- For instructors: integration of AI tools (e.g., ChatGPT, Bing Chat, Copilot, digital tutors, and adaptive platforms) into teaching, assessment, or student advising;

- For students: participation in courses where AI was part of learning activities (e.g., text generation, data analysis, problem-solving, and project work);

- Consent to participate in surveys, interviews, and anonymized data processing.

Exclusion Criteria:

- AI use experience of less than one semester;

- Passive use (e.g., incidental use of generative models not integrated into coursework);

- Inability to complete interviews or surveys for technical, linguistic, or other reasons;

- Refusal to provide evidence of AI engagement in courses (students) or integration in teaching (instructors).

Verification Procedure:

- Initial screening: participants completed a pre-survey about AI tools used, purposes, frequency, assignment types, and integration methods;

- Document verification: instructors submitted course syllabi with AI elements; students identified specific courses and instructors (verified independently);

- Qualitative filtering: interview data were analyzed to verify context; inconsistent responses led to exclusion.

Rationale for Methodological Approach:

Purposive sampling was chosen to focus on participants with practical experience in educational AI use. Although the sample was small, the strict inclusion criteria and multi-level verification were intended to strengthen internal validity and data reliability.

2.3. Data Collection Methods

- Qualitative Data: Semi-structured interviews followed a flexible protocol tailored to individual experiences. All interviews were audio-recorded (with consent) and transcribed verbatim by the research team; in the rare cases where recording was not possible, detailed written notes were taken during the interview. All interview data were then subjected to open and axial coding to construct a categorical tree, following Braun and Clarke’s (2006) thematic analysis method;

- Quantitative Data: Surveys were conducted online using validated scales: the Watson–Glaser Critical Thinking Appraisal and the Metacognitive Awareness Inventory (MAI). All 56 study participants (28 instructors and 28 students) completed the survey; a purposive subsample of 28 (14 instructors and 14 students, equally divided across the experimental and control groups) also took part in the interviews. Accordingly, “respondents” refers to survey participants and “interviewees” to those who took part in the qualitative interviews. This overlap was intentional, to facilitate mixed-methods triangulation. Respondents assessed the frequency, nature, and intentionality of their AI use and their own critical thinking skills.

2.4. Data Analysis Methods

The interview analysis was conducted in NVivo, encompassing initial coding, categorization, the creation of thematic matrices, and semantic visualization. Contextual variables such as academic discipline, teaching experience, and digital literacy were also considered.

The quantitative analysis was performed in SPSS (Version 29.0) using the following statistical procedures:

- Descriptive statistics (mean and standard deviation) summarized the central tendency and variability of key variables, such as critical thinking scores (Field, 2017);

- Independent-samples t-tests determined whether mean scores differed significantly between the experimental and control groups (Field, 2017; Cohen et al., 2018);

- One-way ANOVA compared means across more than two groups where applicable (Field, 2017);

- Correlation analysis measured the strength and direction of relationships between variables such as AI use, metacognitive activity, and critical thinking levels (Bryman, 2016);

- Factor analysis identified underlying constructs and validated the structure of the survey instruments (Tabachnick & Fidell, 2019);

- Levene’s test assessed the homogeneity of variances to confirm that parametric tests were appropriate (Field, 2017);

- The Shapiro–Wilk test checked the normality of data distributions (Field, 2017);

- Cronbach’s alpha evaluated the internal consistency and reliability of multi-item scales, such as survey questionnaires (Bryman & Bell, 2015).

These methods were selected to ensure the robustness and validity of the statistical findings (Field, 2017; Cohen et al., 2018).
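Several of the procedures listed above reduce to short formulas. The sketch below computes three of them with the Python standard library only: Levene’s W statistic in its classic mean-centred form, the pooled-variance (Student) independent-samples t statistic, and Cronbach’s alpha. The helper names are hypothetical and the code is an illustration, not a reproduction of the SPSS output. It returns test statistics but no p-values, because those require the F and t distributions (available, for example, through `scipy.stats.levene(a, b, center="mean")` and `scipy.stats.ttest_ind(a, b, equal_var=True)` in SciPy; normality checks would likewise use `scipy.stats.shapiro`).

```python
import math
from statistics import fmean, variance


def levene_w(*groups: list[float]) -> float:
    """Levene's W statistic, centred on each group's mean."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    means = [fmean(g) for g in groups]
    # Absolute deviation of every observation from its own group mean.
    z = [[abs(y - mu) for y in g] for g, mu in zip(groups, means)]
    z_means = [fmean(zi) for zi in z]
    z_grand = sum(sum(zi) for zi in z) / n_total
    between = sum(len(zi) * (m - z_grand) ** 2 for zi, m in zip(z, z_means))
    within = sum((v - m) ** 2 for zi, m in zip(z, z_means) for v in zi)
    return (n_total - k) / (k - 1) * between / within


def pooled_t(a: list[float], b: list[float]) -> tuple[float, int]:
    """Student's independent-samples t with pooled variance; returns (t, df)."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    t = (fmean(a) - fmean(b)) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df


def cronbach_alpha(rows: list[list[float]]) -> float:
    """Cronbach's alpha; each row is one respondent, each column one scale item."""
    k = len(rows[0])
    item_var_sum = sum(variance(item) for item in zip(*rows))
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_var_sum / total_var)


if __name__ == "__main__":
    # Toy inputs invented for illustration; they are not the study's data.
    group_e, group_c = [1, 2, 3, 4, 5], [3, 4, 5, 6, 7]
    print("Levene W =", levene_w(group_e, group_c))
    print("t, df =", pooled_t(group_e, group_c))
```

In this order of use, a non-significant Levene result supports the pooled-variance form of the t-test; clearly unequal variances would instead call for Welch’s correction.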

Operationalization of Composite Hypotheses

In accordance with best practices for methodological transparency and in response to reviewer feedback, each element of composite (multi-component) hypotheses—specifically those containing more than one conceptual construct (e.g., “instructor involvement and formative feedback”)—was operationalized, measured, and analyzed independently.

For Hypothesis 2 (“Instructor involvement and formative feedback when using AI positively correlate with students’ cognitive independence”), the following procedures were employed.

Instructor involvement was assessed through distinct qualitative interview prompts (e.g., descriptions of direct instructor engagement and individualized guidance in the context of AI-supported coursework) and dedicated items on the quantitative survey (e.g., “My instructor provided guidance on AI-related assignments”, rated on a Likert scale).

Formative feedback was evaluated separately using both qualitative questions about the perceived frequency and usefulness of feedback, and quantitative survey items (e.g., “I received timely formative feedback during AI-supported tasks”).

Cognitive independence was measured using the standardized Watson–Glaser Critical Thinking Appraisal and supplementary items assessing independent problem-solving, critique of AI outputs, and self-regulated learning behaviors.

Statistical analyses were conducted separately for each independent variable using correlation and regression models, and the combined effects of these components were explored through multiple regression. Table 4 presents the measurement items and operational definitions, while Table 5 summarizes the disaggregated statistical results for each component.
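The separate-versus-combined logic described above can be illustrated with standardized regression weights. For two predictors, the ordinary least squares (OLS) weights follow directly from the three pairwise correlations, which shows why the weights typically shrink when correlated predictors enter the same model. The Python sketch below assumes OLS on standardized variables; the helper names and the toy scores are hypothetical and are not the study’s measurements.

```python
import math
from statistics import fmean


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between x and y."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def two_predictor_betas(x1: list[float], x2: list[float], y: list[float]) -> tuple[float, float, float]:
    """Standardized OLS weights and R^2 for y ~ x1 + x2, from pairwise correlations."""
    r_y1, r_y2, r_12 = pearson_r(x1, y), pearson_r(x2, y), pearson_r(x1, x2)
    denom = 1 - r_12 ** 2  # zero (model undefined) if x1 and x2 are perfectly collinear
    beta1 = (r_y1 - r_y2 * r_12) / denom
    beta2 = (r_y2 - r_y1 * r_12) / denom
    r_squared = beta1 * r_y1 + beta2 * r_y2
    return beta1, beta2, r_squared


if __name__ == "__main__":
    # Hypothetical Likert-style scores, invented for illustration only.
    involvement = [2, 3, 3, 4, 5, 5]
    feedback = [1, 3, 2, 4, 4, 5]
    independence = [2, 3, 3, 4, 5, 6]
    print("separate r:", pearson_r(involvement, independence), pearson_r(feedback, independence))
    print("combined betas, R^2:", two_predictor_betas(involvement, feedback, independence))
```

With uncorrelated predictors each β equals its separate r and their R² contributions simply add; as the predictors become more correlated, each β usually falls below its separate r, which is the attenuation pattern described for the combined model.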

Table 4.

Operationalization and Measurement of Composite Hypotheses.

Table 5.

Separate and Combined Effects of Instructor Involvement and Formative Feedback on Cognitive Independence.

This approach was similarly applied to other composite hypotheses, ensuring analytical clarity and construct validity throughout this study.

Operationalization and Analysis for H3

For Hypothesis 3 (“Pedagogically meaningful integration of AI—including metacognitive tasks, reflective analysis, and instructor support—results in lower levels of algorithmic dependence among students”), each component was operationalized and measured independently.

Metacognitive tasks were examined through interviews (e.g., student examples of engaging in self-reflection assignments and reflective journaling) and survey items (e.g., “I regularly completed metacognitive/reflection tasks”).

Reflective analysis was assessed via qualitative questions on the depth of error analysis and learning reviews, and via quantitative items (e.g., “I analyzed my learning mistakes and adapted strategies accordingly”).

Instructor support was measured separately, similarly to H2, using both interviews and survey statements (e.g., “The instructor encouraged reflection on AI use”).

Algorithmic dependence was assessed using dedicated scales and custom items reflecting passive reliance on AI-generated answers, decreased autonomy, and tendencies toward intellectual inertia.

Separate and combined statistical analyses were performed using correlation and regression. Table 6 details the operationalization, while Table 7 summarizes the results.

Table 6.

Operationalization and Measurement of H3 Components.

Table 7.

Separate and Combined Effects of H3 Components on Algorithmic Dependence.

Operationalization and Analysis for H4

For Hypothesis 4 (“Students’ digital literacy and ability to critically evaluate information moderate the effectiveness of AI in supporting cognitive skill development”), the following variables were separately measured.

Digital literacy was assessed using standardized digital competence scales (e.g., self-assessment, digital skills tests, and cybersecurity questions).

Critical evaluation skills were analyzed through interviews (examples of verifying, analyzing, and critiquing information obtained via AI) and survey items (e.g., “I critically assess information from AI sources”).

Cognitive skill development was measured using pre- and post-intervention scores on the Watson–Glaser scale and other cognitive development indicators.

The analysis included correlations, moderation analysis, and hierarchical regression, with the moderating role of digital and critical competencies examined in detail. Table 8 and Table 9 provide operational definitions and statistical results.
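Moderation of the kind H4 describes is commonly tested with an interaction term inside a hierarchical regression: step 1 enters the predictor and the moderator, step 2 adds their product, and the change in R² (ΔR²) indexes the moderating effect. The sketch below implements that two-step comparison with OLS in plain Python. The helper names and variable roles (e.g., AI-use intensity as x, digital literacy as m, post-test score as y) are hypothetical assumptions for illustration, and the F-change significance test for ΔR² is omitted because it needs the F distribution.

```python
from statistics import fmean


def ols(columns: list[list[float]], y: list[float]) -> list[float]:
    """OLS coefficients [intercept, b1, b2, ...] via the normal equations.

    Assumes the design matrix has full column rank.
    """
    X = [[1.0, *row] for row in zip(*columns)]
    p = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [
        [sum(r[i] * r[j] for r in X) for j in range(p)]
        + [sum(r[i] * yi for r, yi in zip(X, y))]
        for i in range(p)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for row in range(p):
            if row != col:
                factor = A[row][col] / A[col][col]
                A[row] = [a - factor * b for a, b in zip(A[row], A[col])]
    return [A[i][p] / A[i][i] for i in range(p)]


def r_squared(columns: list[list[float]], y: list[float]) -> float:
    """Proportion of variance in y explained by an OLS fit on the given columns."""
    coef = ols(columns, y)
    preds = [coef[0] + sum(b * v for b, v in zip(coef[1:], row)) for row in zip(*columns)]
    my = fmean(y)
    ss_res = sum((yi - pi) ** 2 for yi, pi in zip(y, preds))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


def moderation(x: list[float], m: list[float], y: list[float]) -> tuple[list[float], float]:
    """Step 1 fits y ~ x + m; step 2 adds x*m.

    Returns the step-2 coefficients [intercept, b_x, b_m, b_xm] and Delta R^2.
    """
    xm = [a * b for a, b in zip(x, m)]
    r2_step1 = r_squared([x, m], y)
    r2_step2 = r_squared([x, m, xm], y)
    return ols([x, m, xm], y), r2_step2 - r2_step1


if __name__ == "__main__":
    # Hypothetical toy data, not the study's data.
    x = [0, 1, 2, 0, 1, 2]
    m = [0, 0, 0, 1, 1, 1]
    y = [1, 3, 5, 4, 10, 16]
    coefficients, delta_r2 = moderation(x, m, y)
    print("step-2 coefficients:", [round(c, 3) for c in coefficients])
    print("Delta R^2 from the interaction:", round(delta_r2, 4))
```

Many analysts mean-centre x and m before forming the product; this changes the lower-order coefficients but leaves the interaction coefficient and ΔR² unchanged.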

Table 8.

Operationalization and Measurement of H4 Components.

Table 9.

Moderating Effects of Digital Literacy and Critical Evaluation Skills on Cognitive Skill Development.

2.5. Ethical Considerations

This study complied with academic and research ethics standards. All participants gave informed consent and were assured of confidentiality. Identifiable data were excluded during processing. Ethical approval was obtained from a local ethics board (Faculty of Humanities and Social Sciences’ ethics committee, approval no. SSC-NDN-80741238). Participants had access to the aggregated results after the study, ensuring transparency.

2.6. Tables and Data Visualization

Statistical Analysis:

- Independent-samples t-test (experimental vs. control group): t(54) = 2.87, p = 0.005;

- Confidence interval for the mean difference: [2.4; 13.4];

- Levene’s test: p = 0.12 (no evidence of unequal variances).
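The reported figures can be cross-checked arithmetically. The worked equations below assume a pooled-variance Student t-test with 28 participants per group (which yields the reported df = 54), use the group means of 75.2 and 67.3 given for Figure 2a, and assume a 95% interval, which the text does not state; t₀.₉₇₅,₅₄ ≈ 2.005 is an approximate tabled value.

```latex
\begin{aligned}
df &= n_E + n_C - 2 = 28 + 28 - 2 = 54\\
\bar{X}_E - \bar{X}_C &= 75.2 - 67.3 = 7.9\\
SE &\approx \frac{13.4 - 2.4}{2\, t_{0.975,\,54}} \approx \frac{11.0}{2 \times 2.005} \approx 2.74\\
t &= \frac{\bar{X}_E - \bar{X}_C}{SE} \approx \frac{7.9}{2.74} \approx 2.88
\end{aligned}
```

The interval’s midpoint, (2.4 + 13.4)/2 = 7.9, matches the difference in means, and the implied t ≈ 2.88 agrees with the reported 2.87 up to rounding of the published inputs.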

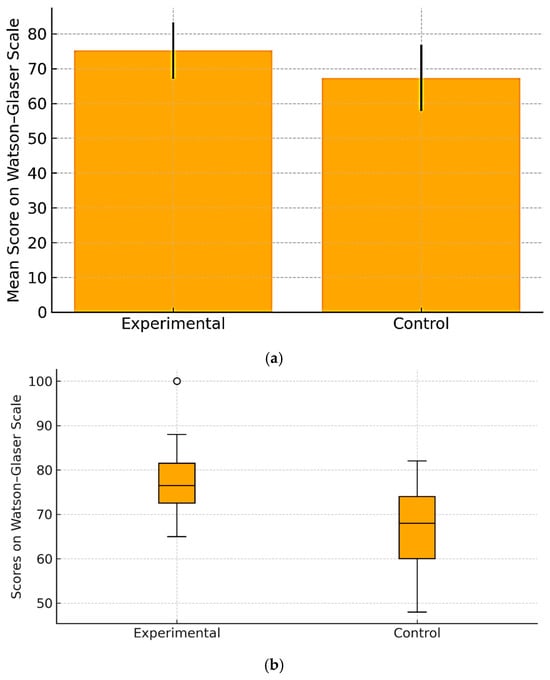

Table 10 provides a structured overview of the operationalization and measurement of composite hypotheses. It details how core constructs such as instructor involvement, formative feedback, and cognitive independence were assessed through both qualitative indicators (interviews, thematic analysis) and quantitative instruments (validated surveys and standardized scales). The table highlights the alignment between theoretical constructs and specific, measurable variables, supporting the transparency and reproducibility of the analysis. The distribution of critical thinking scores by group is visualized in Figure 2a.

Table 10.

Watson–Glaser Mean Scores.

Figure 2.

(a). Mean Watson–Glaser Scores by Group. Explanation: The bar chart shows significantly higher critical thinking scores in the experimental group (mean = 75.2) compared to the control group (mean = 67.3), with visible standard deviations. (b). Boxplot of Individual Scores. Explanation: This boxplot displays the variability of critical thinking scores. The experimental group shows a higher median and smaller interquartile range, indicating a more consistent performance.

Table 11 presents the statistical results for the separate and combined effects of instructor involvement and formative feedback on cognitive independence. The table includes correlation coefficients (r), regression weights (β), and p-values, which collectively indicate the strength and statistical significance of these relationships. Both instructor involvement and formative feedback demonstrate positive and statistically significant associations with students’ cognitive independence (p < 0.05). When both factors are included in the model, their effects remain significant, though somewhat attenuated, indicating that each factor makes a partially overlapping but distinct contribution to the outcome.

Table 11.

t-Test Results and Homogeneity Check.

Interpretation: These findings indicate that active instructor involvement and formative feedback each play a significant role in fostering cognitive independence among students in AI-supported learning environments. The persistent significance of both predictors in the combined model suggests that each offers unique value and that their combined presence may best support the development of critical and independent thinking skills. The instruments and scales used in the study are listed in Table 12 (detailed information for Pearson (2020) and Schraw and Dennison (1994) is provided in the References section). To further illustrate the distribution and variability of individual scores, Figure 2b presents a boxplot of critical thinking scores for both groups.

Table 12.

Tools and Scales Used.

2.7. Essay Source Materials

Students received curated sets of 5–6 academically sound sources, in line with evidence-based pedagogy. These included the following:

- Scientific publications: e.g., Artificial Intelligence in Education (Holmes et al., 2021), Intelligence Unleashed (Luckin et al., 2016), Should Robots Replace Teachers? (Selwyn, 2020), and UNESCO (2022);

- Analytical reports: OECD (2021), UNESCO (2022), and World Economic Forum (2023);

- Academic reviews: Critical studies on digital pedagogy and cognitive impact (Williamson & Eynon, 2020);

- Expert blogs: e.g., Selwyn’s Medium, Brookings Briefs, and Nesta Reports.

Each source was annotated and accompanied by guidance, helping students not only to read but also to critically interpret the material.

2.8. Study Limitations

Despite the rigorous design and use of mixed methods, this study has several substantial limitations that must be acknowledged.

First, the relatively small sample size (n = 56) substantially limits the generalizability and external validity of the results (Cohen et al., 2018; Field, 2017; Creswell & Creswell, 2017; Mertens, 2020; Punch, 2014). Even with purposive sampling and strict participant verification, the final sample size cannot be considered fully representative of broader educational populations (Bryman, 2016; Kelley et al., 2003). The scholarly literature emphasizes that small samples are particularly susceptible to statistical errors, random variation, and the influence of hidden variables (Maxwell, 2004; Tabachnick & Fidell, 2019).

Additionally, although the study sample included both undergraduate and graduate students, the subgroup sizes were insufficient to permit robust statistical analysis of differences by academic level. As a result, we were unable to systematically assess whether the impact of AI-driven interventions varies between undergraduate and graduate cohorts. Future studies should address this limitation by ensuring adequate subgroup sizes and employing stratified or multifactorial statistical analysis.

Second, the institutional scope of this study (only three universities) may make the findings context-specific, complicating their transfer to other educational contexts (Shadish et al., 2002; Hammersley, 2013; Bortnikova, 2016; Schwandt, 2015). Such organizational and geographic constraints increase the risk of contextual and cultural bias.

Third, the reliance on self-report, interview, and questionnaire data introduces risks of subjectivity and social desirability bias (Podsakoff et al., 2003; Meshcheryakova, 2017; Bryman & Bell, 2015; Krosnick, 1999). Such distortions may lead to overestimated intervention effects or underreported critical feedback (Braun & Clarke, 2006).

Fourth, the one-semester time frame does not permit an assessment of the long-term sustainability of the observed effects or of their impact on cognitive development (Vinogradova, 2021; Sharma, 2017; Ruspini, 2016; Menard, 2002). Longitudinal studies spanning multiple academic years and diverse age cohorts are needed to reveal the actual dynamics of intellectual autonomy development (McMillan & Schumacher, 2014).

Finally, uncontrolled variables such as individual learning styles, varying levels of digital literacy, prior experience with AI, and pedagogical strategies may have influenced the results (Luckin et al., 2016; Holmes et al., 2021).

International research underscores that small and institutionally limited samples remain a typical but acknowledged barrier to producing universally generalizable results in educational research (Bakker, 2018; Henrich et al., 2010; Blatchford & Edmonds, 2019). Thus, future research should prioritize large-scale, multi-site, and longitudinal projects employing randomization and representative sampling (Floridi & Cowls, 2019).

To increase the reliability and external validity of results, researchers are encouraged to do the following:

- Employ advanced statistical methods such as multivariate modeling, cross-validation, and structural equation modeling (SEM) (Kline, 2016; Byrne, 2016; Bentler, 2006; Hoyle, 2012);

- Standardize inclusion criteria and ensure transparent participant verification (Levitt et al., 2018);

- Combine quantitative, qualitative, and longitudinal strategies (Johnson & Onwuegbuzie, 2004; Teddlie & Tashakkori, 2009).

The limitations of sample size, institutional specificity, research duration, and methodological choices warrant caution in the interpretation and extrapolation of results. Further studies with larger and more heterogeneous samples will be needed to confirm the stability and universality of the observed trends.

3. Results

This section presents both the quantitative and qualitative results obtained through the experimental study aimed at analyzing the impact of AI tools on the development of students’ critical thinking. The data are used to empirically test the previously formulated hypotheses (H1–H4).

3.1. Quantitative Results

The analysis of the data collected using the Watson–Glaser scale revealed statistically significant differences between the experimental and control groups. Students participating in programs that systematically integrated AI demonstrated a higher level of critical thinking compared to those in courses without such technologies, as follows:

- The experimental group achieved a mean score of M = 75.2, SD = 8.1;

- The control group had a mean score of M = 67.3, SD = 9.5.

These differences are statistically significant according to Student’s t-test (t(54) = 2.87, p = 0.005), as visualized in Figure 1 and confirmed in Table 4 and Table 5.
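The group difference can also be expressed as a standardized effect size. The Python sketch below computes Cohen’s d from the reported means and standard deviations, using the pooled standard deviation of Student’s t-test. Group sizes are not reported in this section, so an even split of 28 students per group is assumed purely for illustration; with unequal groups the pooled SD, and therefore d, would differ somewhat.

```python
import math


def pooled_sd(sd1: float, n1: int, sd2: float, n2: int) -> float:
    """Pooled standard deviation assumed by Student's (equal-variance) t-test."""
    return math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def cohens_d(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int) -> float:
    """Standardized mean difference between two independent groups."""
    return (m1 - m2) / pooled_sd(sd1, n1, sd2, n2)


# Reported summary statistics; n = 28 per group is an ASSUMPTION
d = cohens_d(75.2, 8.1, 28, 67.3, 9.5, 28)
print(round(d, 2))  # 0.89
```

Under this assumption d is about 0.89, which falls in the range conventionally labeled a large effect; given the small sample, the estimate is imprecise and should be read alongside the limitations in Section 2.8.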

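Consistent with this, a one-way ANOVA, which for two groups is equivalent to Student’s t-test (F = t²), revealed a statistically significant difference between the groups (F(1, 54) = 8.24, p < 0.01). Levene’s test for homogeneity of variances yielded p = 0.12, indicating no significant violation of the equal-variance assumption and supporting the use of parametric methods.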
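For readers who want to see how these statistics are computed, the Python sketch below implements the one-way ANOVA F statistic and Levene’s test statistic, the latter computed as a one-way ANOVA on absolute deviations from each group mean. It also computes the pooled-variance t statistic to show that, with two groups, F equals t². The toy scores and function names are hypothetical and are not the study’s data.

```python
import math
import statistics


def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    values = [v for g in groups for v in g]
    grand_mean = statistics.fmean(values)
    k, n = len(groups), len(values)
    ss_between = sum(len(g) * (statistics.fmean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((v - statistics.fmean(g)) ** 2 for g in groups for v in g)
    df_between, df_within = k - 1, n - k
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within


def levene_w(*groups):
    """Levene's statistic: a one-way ANOVA on |score - group mean|."""
    deviations = [[abs(v - statistics.fmean(g)) for v in g] for g in groups]
    return one_way_anova_f(*deviations)


def pooled_t(a, b):
    """Student's t statistic for two independent groups (equal variances)."""
    na, nb = len(a), len(b)
    sp2 = ((na - 1) * statistics.variance(a) + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    return (statistics.fmean(a) - statistics.fmean(b)) / math.sqrt(sp2 * (1 / na + 1 / nb))


# Hypothetical toy scores for two groups
group_a = [1.0, 2.0, 3.0, 4.0]
group_b = [3.0, 4.0, 5.0, 6.0]
f, df1, df2 = one_way_anova_f(group_a, group_b)
t = pooled_t(group_a, group_b)
print(f"F({df1}, {df2}) = {f:.2f}; t^2 = {t ** 2:.2f}")  # F(1, 6) = 4.80; t^2 = 4.80
print(levene_w(group_a, group_b))  # equal spreads give W = 0: (0.0, 1, 6)
```

Applied to the reported values, √8.24 ≈ 2.87, so the ANOVA and the t-test express the same group difference rather than two independent confirmations.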

A correlation analysis was also conducted, identifying the following relationships between variables (n = 56):

| Variable Relationship | Correlation Coefficient (r) | p-Value |
|---|---|---|
| AI use and critical thinking | 0.46 | 0.003 |
| Metacognitive activity and critical thinking | 0.58 | <0.001 |
| Digital literacy and critical thinking | 0.41 | 0.008 |

These findings indicate moderate positive correlations between critical thinking and factors reflecting the quality and intentionality of AI use. Because these data are correlational, they do not by themselves establish causal direction; nevertheless, they support Hypothesis H1 and partially support H2–H4, emphasizing the significance of metacognitive and digital components in cognitive development.
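Because the correlations are based on only 56 participants, their precision is limited. The Python sketch below computes approximate 95% confidence intervals for the reported coefficients using the Fisher z-transformation. It assumes that the coefficients are Pearson correlations (the coefficient type is not stated in this section) and that all 56 participants contribute to each coefficient; the function name is hypothetical.

```python
import math
from statistics import NormalDist


def pearson_r_ci(r: float, n: int, confidence: float = 0.95):
    """Approximate confidence interval for Pearson's r via Fisher's z-transformation."""
    z = math.atanh(r)                  # transform r to an approximately normal scale
    se = 1 / math.sqrt(n - 3)          # standard error of z
    z_crit = NormalDist().inv_cdf(0.5 + confidence / 2)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)  # back-transform


reported = [
    ("AI use", 0.46),
    ("Metacognitive activity", 0.58),
    ("Digital literacy", 0.41),
]
for label, r in reported:
    lo, hi = pearson_r_ci(r, 56)
    print(f"{label}: r = {r:.2f}, 95% CI [{lo:.2f}, {hi:.2f}]")
```

For r = 0.46, for example, the interval runs from about 0.22 to about 0.64, showing how widely the population correlation could plausibly range at this sample size even though the interval excludes zero.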

3.2. Qualitative Results

The qualitative component of this study was based on semi-structured interviews with 28 participants (students and instructors). The thematic analysis identified four main semantic clusters; however, within these clusters, there was considerable diversity in individual perspectives, arguments, and strategies. Rather than unanimity, the interviews revealed a wide range of viewpoints, personal motivations, and contextual factors shaping the use of AI.

AI as a tool for cognitive support.

Many respondents noted that tools such as ChatGPT helped them articulate their thoughts more clearly, clarify terminology, and develop arguments. However, the ways of using AI varied: some participants leveraged it for generating ideas and obtaining alternative perspectives, while others used it mainly to structure their own reasoning or to search for information on specialized topics.

AI as a means of effort substitution.

Under conditions of high workload and fatigue, some participants admitted using AI primarily for time-saving, without subsequent reflection or verification of the generated content. At the same time, several informants acknowledged the risk of losing autonomy and consciously limited their AI use in order to strengthen their own analytical skills.

The instructor’s role in guiding AI use.

Attitudes toward the instructor’s role ranged from enthusiastic (“the instructor helps to unlock the potential of AI for learning”) to critical (“feedback is too formal” or “there is not enough time for discussion”). Some students valued reflective assignments and collaborative discussions of AI experiences, whereas others preferred individual consultations or independent analysis of typical errors.

Awareness of limitations and dependency risks.

Participants differed in their attitudes toward issues of ethics, information verification, and potential algorithmic dependence. For some, the critical appraisal of AI outputs was central; for others, privacy concerns or the lack of clear standards for ethical use of AI in their educational context were more important.

Illustrative Quotes from Students:

- “ChatGPT helps me get started when I’m stuck, but I always try to rewrite the answer in my own words and check the facts” (student, humanities);

- “When I have too much work, I sometimes let AI do the first draft, but I know it makes me lazy if I rely on it too often”;

- “Our instructor gave us tasks to compare our own solutions with AI-generated ones and discuss the differences. This made me think more deeply about the problem”;

- “Sometimes the teacher’s comments on AI use were too general, so I had to figure out myself how to improve my approach”.

Illustrative Quotes from Instructors:

- “I try to encourage my students to treat AI as a starting point, not as the final answer. It’s important that they learn to evaluate and improve what the AI provides”;

- “I worry that students will stop thinking critically if they just copy what the AI says, especially when there are no clear rules on how to use these tools”;

- “I’ve noticed that students become more reflective when we include structured discussions about the limitations of AI and ask them to critique AI-generated responses”.

Thus, the qualitative analysis not only revealed the main thematic clusters but also highlighted nuances and differences in participants’ positions and strategies regarding AI in the learning process. These findings shed light on why participants adopted specific approaches to AI use, reflecting both the positive effects of technology integration and the challenges related to critical thinking, autonomy, and pedagogical support. Together, they provide a more comprehensive understanding of the factors that enable or constrain the development of cognitive independence in digital educational environments.

These findings support Hypothesis H1 regarding AI’s potential positive influence on cognitive development, provided that AI is pedagogically integrated, and are consistent with Hypotheses H2–H4. In contrast, the absence of instructor guidance often led to AI being used merely as an automation tool, without fostering meaningful thinking.

4. Discussion and Conclusions

The findings of this study indicate a positive correlation between the systematic use of AI tools in educational practice and the development of students’ critical thinking. The experimental group demonstrated statistically significantly higher scores on the Watson–Glaser scale compared to the control group, highlighting the potential effectiveness of AI as a tool for cognitive enhancement.

However, these results require deeper analysis in the context of existing pedagogical, cognitive, and technological theories. Contemporary research emphasizes that the use of AI alone does not guarantee a positive effect. The key factor lies in the pedagogical strategy of integration: the meaningful incorporation of AI into the learning process, supported by feedback, metacognitive tasks, and instructor guidance, creates the conditions necessary for the development of higher-order cognitive functions (Beetham & Sharpe, 2013; Holmes et al., 2021; Holmes, 2023).

4.1. AI as a Cognitive Mediator

The conceptual framework of “AI as a cognitive mediator” suggests that AI does not replace the thinking process, but rather extends its capabilities (Luckin et al., 2016; Darwin et al., 2024). Depending on the context and pedagogical design, generative models such as ChatGPT, Bing Chat, or Copilot can serve multiple distinct functions that are supported by empirical research and recent literature, as follows:

- Sources of heuristic prompting: AI tools can generate questions, examples, and alternative perspectives that stimulate students’ critical reflection and problem-solving (Vasconcelos & dos Santos, 2023; Luckin et al., 2016);

- Tools for task decomposition: AI can help break down complex tasks into manageable steps, supporting learners in understanding and structuring their own reasoning (Holmes et al., 2021; Luckin et al., 2016);

- Platforms for simulating argumentation and debate: generative models can participate in role-play or dialogic exchanges, allowing students to practice argument construction, rebuttal, and analysis of opposing viewpoints (Akgun et al., 2024; Darwin et al., 2024);

- Aids for structuring reflective writing: AI can suggest outlines, provide feedback on drafts, or prompt metacognitive reflection, thereby scaffolding the process of academic writing and self-assessment (Vasconcelos & dos Santos, 2023; Holmes, 2023).

Thus, AI can serve as an “object to think with” (Akgun et al., 2024), activating mechanisms of analysis, comparison, interpretation, and argumentation. However, without sufficient digital literacy and critical thinking skills on the student’s part, the same system can be reduced to a mere tool for copying and replacing intellectual activity.

4.2. Risks of Algorithmic Dependency and Cognitive Offloading

In parallel with these positive effects, the literature highlights the risks of algorithmic dependency and cognitive inertia: situations in which students become accustomed to outsourcing intellectual effort to algorithms without engaging in conscious analysis (Selwyn, 2020; Heikkilä & Niemi, 2023). In the experiment, such risks were partially mitigated by pedagogical oversight and the inclusion of high-cognitive-load tasks. However, interview participants noted that during independent work outside the classroom, AI was often used as a shortcut to solutions, particularly under high workload and time pressure.

This underscores the need to cultivate ethical and metacognitive competencies, enabling students not only to use AI effectively but also to understand its limitations, distinguish genuine intellectual activity from superficial output, and self-regulate in a digital environment (Flavell, 1979; Selwyn, 2020–2023; Braun & Clarke, 2006).

4.3. Pedagogical Architecture for Effective AI Integration

Based on the analysis of the collected data and comparison with contemporary academic sources (see the “Literature Review” section), our study identified the following components as the most significant for the effective use of AI to foster students’ cognitive autonomy:

- Didactic purposefulness: the organic integration of AI into the course structure, rather than its use as an optional add-on (Floridi & Cowls, 2019; Holmes et al., 2021);

- Formative feedback: regular reflection and evaluation of how students use AI, with an emphasis on strategies for interacting with AI (Vasconcelos & dos Santos, 2023);

- Metacognitive assignments: the introduction of tasks aimed at self-reflection, such as reflective essays and case studies, to promote conscious thinking (OECD, 2021);

- Cognitive diversity: the use of AI in various formats—from text analysis to idea visualization (Darwin et al., 2024);

- Interdisciplinary approach: taking into account the specific features of each discipline when implementing AI (UNESCO, 2022; Sweller, 1988).

The empirical results of our study are consistent with the importance of these components: in courses where all of the listed conditions were implemented, students showed statistically significantly higher critical thinking scores than the control group (see Table 4 and Table 5, as well as Section 3.1 “Quantitative Results”).

4.4. Tensions and Future Directions

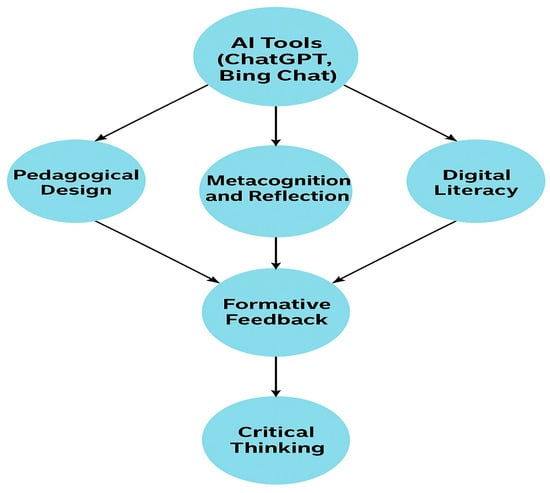

This schematic visualizes the interaction between AI tools, pedagogical design, digital and metacognitive competencies, and the resulting development of students’ critical thinking. It illustrates the role of formative feedback as the linking component between cognitive and digital elements.

Figure 3 presents a conceptual model illustrating the interplay between AI-driven educational tools, pedagogical design, digital and metacognitive competencies, and the development of students’ critical thinking skills. To strengthen its theoretical contribution, this model is explicitly grounded in several foundational theories and frameworks from cognitive psychology and educational research.

Figure 3.

Cognitive Development Model Supported by AI. Arrows indicate principal directions of influence; however, reciprocal and feedback relationships are also implied in the model structure.

First, the model draws upon Flavell’s model of metacognition (Flavell, 1979), which highlights the importance of individuals’ awareness and regulation of their own cognitive processes. In our conceptual model, formative feedback and reflective assignments serve as mechanisms for enhancing students’ metacognitive monitoring and self-regulation, thereby directly aligning with Flavell’s emphasis on metacognitive development as a driver of effective learning and critical analysis.

Second, the model is underpinned by Vygotsky’s sociocultural theory (Vygotsky, 1978), which posits that cognitive growth and higher-order thinking are mediated through social interaction and the use of cultural tools. Here, the integration of AI-based educational technologies acts as a form of ‘cognitive scaffolding,’ facilitating collaborative learning, guided reflection, and the co-construction of knowledge—core tenets of Vygotsky’s approach. The model thus situates AI not as an isolated technological factor, but as a mediator that supports socially and culturally situated learning processes.

In addition, the model is informed by contemporary frameworks on digital literacy and critical thinking (UNESCO, 2022; OECD, 2021; Holmes et al., 2021). These frameworks stress the need for learners to develop skills in evaluating digital information, exercising digital citizenship, and adapting to complex, technology-rich environments. Within the model, digital competencies—together with metacognitive abilities—function as key mediators that bridge the gap between technology integration and the cultivation of advanced critical thinking skills.

Taken together, these theoretical perspectives inform the logic of the model, clarifying how formative feedback, digital skills, and metacognitive practices interact in AI-enhanced education. The model thus offers a theoretically grounded account of the mechanisms by which AI integration can contribute to the development of students’ critical and independent thinking.

By explicitly anchoring the model in established theories and recent research, we situate our conceptual framework within the broader academic discourse on cognitive and metacognitive development, sociocultural learning, and digital pedagogy.

Despite the promising results, AI integration may reinforce educational inequality. Students with high levels of digital literacy adapt more quickly to new technologies, while less-prepared peers struggle, potentially widening the cognitive gap (Schraw & Dennison, 1994). Moreover, AI is not neutral: its models are trained on data that reflect particular cultural and ideological assumptions, so their outputs require critical examination for objectivity (U.S. Department of Education, 2023; Williamson & Eynon, 2020).

Going forward, expanding pedagogical involvement in the design of digital learning systems and embedding AI literacy in foundational curricula appear essential for the sustainable and ethically sound use of AI in education.

4.5. Final Conclusions, Practical Recommendations, and Future Research Directions

The results of this study indicate that integrating artificial intelligence (AI) technologies has considerable potential for fostering critical and independent thinking among university students, provided that implementation is pedagogically informed and well-structured (Luckin et al., 2016; Holmes et al., 2021; Floridi & Cowls, 2019). In the experiment, systematic and supervised use of AI tools was associated with higher levels of critical thinking than in courses taught without such technologies (Darwin et al., 2024; Braun & Clarke, 2006). This finding aligns with international research emphasizing that AI should be treated not as an autonomous or self-sufficient factor but as a didactically justified component that enhances analysis, reflection, argumentation, and independent judgment (Williamson & Eynon, 2020; Beetham & Sharpe, 2013). However, the study was conducted over a single academic semester, which limits its capacity to detect long-term and cumulative cognitive outcomes of AI integration: short-term interventions may not capture the gradual development of metacognitive strategies, epistemic autonomy, or resilience to algorithmic dependency.

At the same time, the limited sample size and structure (n = 56) and the institutional characteristics of the participating universities require cautious interpretation of the data. As noted in the contemporary literature, small and homogeneous samples reduce external validity and limit the extrapolation of results to broader educational, cultural, and social contexts (Cohen et al., 2018; Bakker, 2018; Bryman, 2016; Field, 2017). Such limitations are typical of studies conducted in rapidly evolving digital environments and warrant careful, critical reflection when formulating conclusions and educational policy (Tabachnick & Fidell, 2019; OECD, 2021; UNESCO, 2022).

Current educational research emphasizes that the main task is not only the adoption of AI but also the creation of flexible, inclusive, and multidisciplinary models of integration that take into account individual cognitive styles, professional contexts, and varied learning formats (Holmes, 2023; Johnson & Onwuegbuzie, 2004; Teddlie & Tashakkori, 2009). To increase the reliability and validity of findings, future work should employ advanced statistical methods, including multivariate modeling, structural equation modeling (SEM), and cross-validation, and, most importantly, longitudinal (multi-wave) and multi-institutional designs, which allow researchers to control for hidden variables and reveal the long-term cognitive effects of AI interventions (Kline, 2016; Bentler, 2006; Sharma, 2017; Menard, 2002). Key practical recommendations based on these findings are summarized in Table 13.

Table 13.

Practical Recommendations for the Integration of AI in University Education.

The implementation of these recommendations requires a comprehensive approach, including not only the adaptation of curricula but also the ongoing professional development of educators, the enhancement of infrastructure, and the establishment of ethical standards for working with AI. Special attention should be paid to creating a pedagogical environment that fosters digital critical literacy and metacognitive strategies among students, as supported by previous studies (Schraw & Dennison, 1994; Holmes et al., 2021; Selwyn, 2020).

Future Research Directions

Given the limitations of the present study—especially the one-semester time frame and the restricted sample—it is essential for future research to employ longitudinal, multi-wave, and multi-institutional designs. Large-scale implementations across diverse student populations, disciplines, and educational cultures will be necessary to enhance generalizability and to capture the cumulative, long-term cognitive, social, and emotional effects of AI integration in education (Menard, 2002; Sharma, 2017; Holmes et al., 2021).

Moreover, future studies should systematically examine whether the impact of AI-driven interventions differs by academic level (e.g., undergraduate vs. graduate) and disciplinary background. We recommend the use of stratified sampling procedures and multifactorial statistical analyses (such as ANOVA or ANCOVA) to detect and interpret potential differences between these cohorts and disciplines. Addressing these dimensions will provide a more nuanced understanding of how AI-based innovations affect various subgroups within the student population.
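A minimal Python sketch of the stratified sampling recommended above: it draws an equal number of participants at random from each academic level, so that every subgroup is large enough for separate analysis. The record structure, the key name `level`, the pool sizes, and the function name are hypothetical; equal allocation is one design choice among several, with proportional allocation being the common alternative.

```python
import random
from collections import defaultdict


def stratified_sample(records, key, per_stratum, seed=0):
    """Draw per_stratum records at random from each stratum defined by record[key]."""
    strata = defaultdict(list)
    for record in records:
        strata[record[key]].append(record)
    rng = random.Random(seed)
    sample = []
    for level, members in strata.items():
        if len(members) < per_stratum:
            raise ValueError(f"stratum {level!r} has only {len(members)} members")
        sample.extend(rng.sample(members, per_stratum))  # sampling without replacement
    return sample


# Hypothetical pool: many more undergraduates than graduate students
pool = [{"id": i, "level": "undergraduate"} for i in range(300)]
pool += [{"id": 300 + i, "level": "graduate"} for i in range(80)]
sample = stratified_sample(pool, key="level", per_stratum=30)
counts = {lvl: sum(r["level"] == lvl for r in sample) for lvl in ("undergraduate", "graduate")}
print(counts)  # {'undergraduate': 30, 'graduate': 30}
```

Equal allocation deliberately over-represents the smaller stratum relative to the population, which supports between-group comparisons (e.g., ANOVA or ANCOVA by academic level) at the cost of requiring weights for population-level estimates.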

Special importance should also be given to examining individual differences in the perception and use of AI by students of different backgrounds, exploring the impact of cultural and institutional contexts, and developing ethical and regulatory models to ensure safe and equitable AI implementation in education (UNESCO, 2022; OECD, 2021; Holmes, 2023). There are also opportunities for developing pedagogical strategies that integrate digital critical literacy and metacognition into various disciplines, thus enabling the creation of a truly inclusive, sustainable, and adaptive educational environment (Williamson & Eynon, 2020; Beetham & Sharpe, 2013).

In general, the creation of a pedagogically sound environment based on the principles of ethical and conscious use of artificial intelligence is not only the key to developing critical thinking and metacognition among students, but also one of the defining factors for the resilience and competitiveness of the education system in the 21st century (Williamson & Eynon, 2020; World Economic Forum, 2023).

Funding

This research received no external funding.

Institutional Review Board Statement

The study was approved by the Ethics Committee of the Faculty of Humanities and Social Sciences (protocol code SSC-NDN-80741238, 22 January 2024).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Akgun, M., Greenhow, C., & Lewin, C. (2024). The ethics of AI use in education: Teacher perspectives and dilemmas. Computers & Education, 203, 104923.

- Bakker, A. (2018). Design research in education: A practical guide for early career researchers. Routledge.

- Beetham, H., & Sharpe, R. (2013). Rethinking pedagogy for a digital age: Designing for 21st century learning (2nd ed.). Routledge.

- Bentler, P. M. (2006). EQS 6 structural equations program manual. Multivariate Software.

- Blatchford, P., & Edmonds, S. (2019). Class size: Learning, teaching, and psychological perspectives. Routledge.

- Bortnikova, I. Y. (2016). Problemy vneshnei validnosti empiricheskikh issledovanii [Problems of external validity in empirical research]. Vestnik Tomskogo gosudarstvennogo universiteta, 7(4), 38–59.

- Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101.

- Bryman, A. (2016). Social research methods (5th ed.). Oxford University Press.

- Bryman, A., & Bell, E. (2015). Business research methods (4th ed.). Oxford University Press.

- Byrne, B. M. (2016). Structural equation modeling with AMOS: Basic concepts, applications, and programming (3rd ed.). Routledge.

- Cohen, L., Manion, L., & Morrison, K. (2018). Research methods in education (8th ed.). Routledge.

- Creswell, J. W., & Creswell, J. D. (2017). Research design: Qualitative, quantitative, and mixed methods approaches (5th ed.). Sage.

- Darwin, Rusdin, D., Mukminatien, N., Suryati, N., Laksmi, E. D., & Marzuki. (2024). Critical thinking in the AI era: An exploration of EFL students’ perceptions, benefits, and limitations. Cogent Education, 11(1), 2290342.

- Field, A. (2017). Discovering statistics using IBM SPSS statistics (5th ed.). Sage.

- Flavell, J. H. (1979). Metacognition and cognitive monitoring: A new area of cognitive–developmental inquiry. American Psychologist, 34(10), 906–911.

- Floridi, L., & Cowls, J. (2019). A unified framework of five principles for AI in education. Philosophy & Technology, 32, 687–703.

- Hammersley, M. (2013). What is qualitative research? Bloomsbury.

- Heikkilä, T., & Niemi, H. (2023). AI literacy in the classroom: Preparing future teachers for critical use of generative AI. Education and Information Technologies, 28(5), 7895–7912.

- Henrich, J., Heine, S. J., & Norenzayan, A. (2010). The weirdest people in the world? Behavioral and Brain Sciences, 33(2–3), 61–83.

- Holmes, W. (2023). Generative AI in education: Emerging risks and recommendations. UNESCO Policy Brief.

- Holmes, W., Bialik, M., & Fadel, C. (2021). Artificial intelligence in education: Promises and implications for teaching and learning. Center for Curriculum Redesign.

- Hoyle, R. H. (2012). Handbook of structural equation modeling. Guilford Press.

- Johnson, R. B., & Onwuegbuzie, A. J. (2004). Mixed methods research: A research paradigm whose time has come. Educational Researcher, 33(7), 14–26.

- Kelley, K., Clark, B., Brown, V., & Sitzia, J. (2003). Good practice in the conduct and reporting of survey research. International Journal for Quality in Health Care, 15(3), 261–266.

- Kline, R. B. (2016). Principles and practice of structural equation modeling (4th ed.). Guilford Press.

- Krosnick, J. A. (1999). Survey research. Annual Review of Psychology, 50, 537–567.

- Levitt, H. M., Motulsky, S. L., Wertz, F. J., Morrow, S. L., & Ponterotto, J. G. (2018). Recommendations for designing and reviewing qualitative research in psychology: Promoting methodological integrity. Qualitative Psychology, 5(1), 3–22.

- Luckin, R., Holmes, W., Griffiths, M., & Forcier, L. B. (2016). Intelligence unleashed: An argument for AI in education. Pearson.

- Maxwell, S. E. (2004). The persistence of underpowered studies in psychological research: Causes, consequences, and remedies. Psychological Methods, 9(2), 147–163.

- McMillan, J. H., & Schumacher, S. (2014). Research in education: Evidence-based inquiry (7th ed.). Pearson.

- Menard, S. (2002). Longitudinal research (2nd ed.). Sage.

- Mertens, D. M. (2020). Research and evaluation in education and psychology: Integrating diversity with quantitative, qualitative, and mixed methods (6th ed.). Sage.

- Meshcheryakova, O. A. (2017). The influence of social desirability on survey results. Sociological Studies, 7(5), 56–62.

- OECD. (2021). AI and the future of skills: Volume 1: Capabilities and assessments. OECD Publishing.

- Pearson. (2020). Watson–Glaser critical thinking appraisal manual. Pearson Education. Available online: https://www.talentlens.com/recruitment/assessments/watson-glaser-critical-thinking-appraisal.html (accessed on 18 May 2025).

- Podsakoff, P. M., MacKenzie, S. B., Lee, J.-Y., & Podsakoff, N. P. (2003). Common method biases in behavioral research: A critical review of the literature and recommended remedies. Journal of Applied Psychology, 88(5), 879–903.

- Punch, K. F. (2014). Introduction to social research: Quantitative and qualitative approaches (3rd ed.). Sage.

- Ruspini, E. (2016). Longitudinal research in the social sciences. Routledge.

- Schraw, G., & Dennison, R. S. (1994). Assessing metacognitive awareness. Contemporary Educational Psychology, 19(4), 460–475.

- Schwandt, T. A. (2015). The SAGE dictionary of qualitative inquiry (4th ed.). Sage.

- Selwyn, N. (2020). Should robots replace teachers? AI and the future of education. Polity Press.

- Selwyn, N. (2020–2023). Expert blogs and commentary. Medium. Brookings Institution; Nesta Foundation.

- Shadish, W. R., Cook, T. D., & Campbell, D. T. (2002). Experimental and quasi-experimental designs for generalized causal inference. Houghton Mifflin.

- Sharma, S. (2017). Longitudinal studies: Design, analysis, and reporting. The European Journal of General Practice, 23(1), 30–36.

- Sweller, J. (1988). Cognitive load during problem solving: Effects on learning. Cognitive Science, 12(2), 257–285.

- Tabachnick, B. G., & Fidell, L. S. (2019). Using multivariate statistics (7th ed.). Pearson.

- Teddlie, C., & Tashakkori, A. (2009). Foundations of mixed methods research: Integrating quantitative and qualitative approaches in the social and behavioral sciences. Sage.

- UNESCO. (2022). AI and the futures of education: Towards a purposeful use of artificial intelligence in education. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000381137 (accessed on 18 May 2025).

- U.S. Department of Education. (2023). Artificial intelligence and the future of teaching and learning: Insights and recommendations. Available online: https://www2.ed.gov/documents/ai-report/ai-report.pdf (accessed on 18 May 2023).

- Vasconcelos, M. A. R., & dos Santos, R. P. (2023). Enhancing STEM learning with ChatGPT and Bing Chat as objects to think with: A case study. arXiv, arXiv:2303.12713.

- Vinogradova, E. E. (2021). Prodolzhitelnye issledovaniya v obrazovanii: Problemy i perspektivy [Long-term studies in education: Problems and perspectives]. Obrazovatel’nye tekhnologii i obshchestvo [Educational Technology & Society], 24(3), 21–34.

- Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes. Harvard University Press.

- Williamson, B., & Eynon, R. (2020). Historical threads, missing links, and future directions in AI in education. Learning, Media and Technology, 45, 223–235.

- World Economic Forum. (2023). The future of jobs report 2023. Available online: https://www.weforum.org/reports/the-future-of-jobs-report-2023 (accessed on 18 May 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).