Abstract

This work addresses the lack of methodologies for the seamless integration of 360° videos, 3D digitized artifacts, and virtual human agents within a virtual reality environment. The proposed methodology is showcased in the context of a tour guide application and centers on a central hub that metaphorically links users to various historical locations. Leveraging a treasure hunt metaphor and a storytelling approach, this combination of digital structures builds an exploratory learning experience. Virtual human agents support the scenario by offering personalized narratives and educational content, enriching the cultural heritage journey. Key contributions of this research include the exploration of the symbolic use of the central hub, the application of a gamified approach through the treasure hunt metaphor, and the seamless integration of various technologies to enhance user engagement. This work contributes to the understanding of context-specific cultural heritage applications and their potential impact on cultural tourism. The outputs of this research are a reusable methodology and its demonstration in a showcase application, which was assessed through a heuristic evaluation.

1. Introduction

Today, digital Cultural Heritage (CH) bridges traditional methods of presenting cultural heritage with innovative digital technologies and rich interaction paradigms. In this new era, Cultural Heritage Institutions (CHIs), including museums worldwide, are pursuing innovative methods that could enhance audience engagement, attract new audiences to the museum, and elevate the overall museum-visiting experience [1]. Augmented Reality (AR) and Virtual Reality (VR) technologies are considered important facilitators of this approach since they can enhance both the physical and the virtual aspects of the museum encounter [2]. Interactive exploration through such technologies enables online and on-site visitors to engage with culture without being in direct contact with real artifacts. This includes visiting virtual objects and sites that are not physically present [3]. Moreover, on-site, these technologies can support experiences supplementary to the main visit, such as immersive encounters and personalized guidance and information, and can add digital dimensions that enrich physical exhibits through multimedia and digital storytelling [4].

In the same context, the evolution of Virtual Humans (VHs) provides more versatility to the curators, since they can be used to assume various actual or fictional roles such as historical figures, storytellers, personal guides, etc. [5]. Their multifaceted applicability in digital experiences allows VHs to act as visitor guides while offering supplementary information in the form of multimedia, thereby providing a holistically enriched museum-visiting experience.

CH and CHIs are an integral part of the travel industry through their combination with tourism attractions. At the same time, heritage sites provide enriching experiences by immersing tourists in the cultural and historical heritage of a destination [6]. Digital cultural heritage technologies can enhance cultural tourism by offering travelers more than just a superficial view of landmarks and artifacts. Through digital encounters, a visit becomes a journey that can break the barriers of physical spaces and historical sites. By collecting and presenting bits and pieces of history, such encounters provide a deeper understanding of the cultural narrative and support the virtual exploration of historical sites, museums, and cultural landmarks, even if they are physically distant [7].

In such virtual explorations, interactivity, multimedia enhancements, storytelling, and gamification experiences have the potential to enhance immersion and memorability [8].

In this work, the challenge of enhancing the current state of the art in digital cultural heritage technologies is addressed by introducing a methodology designed to bring advances in CH preservation and digital CH combined with unique interactive digital experiences. The methodology is put into real practice in a paradigmatic case study illustrating its applicability to the CH tourism sector. The proposed methodology integrates key technological components into cultural experiences such as VR, 360° videos, 3D digitized artifacts, and VH agents. The usage of these components serves for the presentation of historical narratives and fosters active user engagement, transforming CH exploration into an immersive and gamified experience accessible to diverse audiences.

The principal aim of this work is to present a comprehensive and reusable methodology and emphasize its adaptability and potential impact on the experiential presentation of historical knowledge. From a technical perspective, the presented methodology attempts to simplify the implementation of such environments by mainstreaming parts of the implementation using third-party tools and investing in gamification, captivating narration, and interaction with VHs to enhance the visitor experience.

To support and validate the proposed methodology, the implementation of a use case was conducted. To this end, a virtual storytelling application was developed for the city of Heraklion.

2. Theoretical Background

2.1. Virtual Reality and Augmented Reality Technologies in Cultural Heritage Applications

VR and AR technologies are integral to CH applications, offering users immersive explorations of historical sites and artifacts in virtual environments [9,10,11,12].

To this end, CHIs have started with the provision of a first level of immersion through 360° photos and videos that can support the creation of virtual exhibitions and thus offer the possibility to remotely experience artworks and historical artifacts [13,14]. With the addition of stereoscopic acquisition of images and videos, enhanced immersion can be achieved even using these modest technical means. Using the same principle, 360° videos are used in education to support virtual field trips, exploring historical sites and museums, and enhancing learning through immersion [15,16]. Cultural tourism applications have also explored 360° videos to showcase historical landmarks, archaeological sites, and cities [17,18]. Apart from the sense of being there, enhancements in the experience can be achieved by some limited form of adaptability and multilingual narration support [19].

In terms of content provision, storytelling and narratives are employed to enrich the immersive nature of 360° videos to emotionally charge the visiting experience through legends, stories, and myths [20,21]. Even the limited support for interactivity in 360° videos, such as clickable points of interest, has been proven to enhance user engagement with the narrative, allowing access to additional information or multimedia content [22].

Moving a step forward in the technologies employed in digital CH, AR has the benefit of combining the digital and physical worlds into a single blended reality, thus enriching the user’s perception of their physical surroundings [23]. In CH applications, AR is employed to augment the visitor’s experience in various contexts including physical museums, open-air museums, archaeological sites, etc. [24,25,26]. AR-enabled devices, such as smartphones or AR glasses, can provide contextual information, 3D reconstructions, or interactive elements superimposed onto exhibits, creating an enhanced and informative museum visit [27,28,29]. Educational uses of AR offer students real-time interactive learning experiences [30,31,32] tied to historical artifacts or sites to support a deeper understanding [33,34]. The same holds for cultural tourism, where AR enhances on-site exploration by providing additional layers of information [35,36,37] including historical facts, multimedia content, virtual reconstructions, etc. [38,39].

AR and VR often employ some form of knowledge representation to present engaging narrations that are rooted in the social and historical context around a subject of interest. Representation media from CH preservation and other domains (e.g., e-health, e-commerce, education, automotive, etc. [40,41,42,43]) are combined with semantic web technologies [44,45,46] for the representation of historic narratives [47].

2.2. Virtual Humans, Storytelling, and Digital Narrations

VHs are 3D anthropomorphic characters that are capable of simulating human-like behavior in a unique way to support storytelling and interaction in CH contexts [48,49]. Their primary role, currently, is to engage with users in dialogues, thus creating a more interactive and personalized experience [50,51]. VHs can enhance the overall visitor experience in CH sites, museums, or virtual environments. They can guide visitors, share historical anecdotes, and add a human touch to digital exploration [52]. Due to their digital nature, they can be programmed to communicate in multiple languages, thus enhancing the accessibility to diverse audiences [53,54]. This feature facilitates a broader reach and has been tested with the simulation of various human processes [55,56].

Research objectives in this field include improving realism, interactivity, and the overall user experience and acceptance [57]. Technologies supporting these directions include advancements in AI, graphics, and behavioral modeling. From a content-wise perspective, VHs can target historical reenactment by bringing key historical figures or characters to life or acting as conversational agents, guides, and storytellers. The first approach allows users to interact with figures from the past, providing a more immersive understanding of historical events. By simulating realistic emotions [58] and expressions [59,60,61], these characters aim at the creation of personal connections with their audience. Furthermore, adapting their narratives based on user interactions and preferences can provide a more personalized storytelling experience, making it more memorable.

2.3. Three-Dimensional Reconstruction

Photogrammetry is still considered the most prominent technique for 3D reconstruction. It involves the analysis of high-resolution images taken from multiple angles, with significant overlap between subsequent takes, to create detailed and accurate 3D models [62,63]. Processing is performed using specialized software to extract three-dimensional information [64]. In CH applications, both terrestrial and aerial photogrammetry are employed [65,66,67]. Terrestrial photogrammetry involves capturing images from ground-level perspectives, while aerial photogrammetry uses drones or aircraft to obtain images from above.

For capturing precise 3D data, laser scanning, or Light Detection and Ranging (LiDAR), is widely used [68]. LiDAR sensors emit laser beams to measure distances, creating highly detailed point clouds that can be used to generate 3D models.

The structured light approach is based on the projection of a pattern of light onto an object to capture its deformation [69,70]. By analyzing the pattern’s distortion, 3D information is extracted. This method is suitable for capturing fine details on objects and artworks.

Multi-view stereo involves the reconstruction of 3D models by analyzing multiple images of a scene taken from different viewpoints [71]. Advanced algorithms match features across images to create dense point clouds, which are then used to generate the final 3D model [72].

Depth sensing technologies, such as structured light sensors or time-of-flight cameras, are integrated into 3D scanners [73,74]. These sensors capture depth information, enabling the creation of accurate 3D models, especially for smaller objects.

Three-dimensional reconstruction technologies are often integrated with VR and AR applications, allowing users to explore CH sites or artifacts in immersive virtual environments [75,76,77,78,79,80]. This integration enhances the educational and experiential aspects of CH presentations.

2.4. Contributions

In this research work, a methodology is provided for creating VR guides that integrate various aspects of the forthcoming digital reality. These include captures of actual scenes of the world using 360° videos, 3D reconstructed heritage artifacts and heritage sites, and VHs as conversational agents. The merging of these modalities, to the best of our knowledge, has not been thoroughly addressed by existing work. In more detail, the presentation of virtual humans in an actual scene has been studied as an augmented reality problem, where VHs are integrated into the scene as it is captured by the camera of a mobile device; augmenting 360° video with virtual humans, however, is a different research topic. At the same time, creating hybrid environments by combining 360° captures with digitized or artificially generated 3D environments is also a challenge. Finally, bringing all three together and ensuring their blending, interoperability, and the seamless “existence” of VHs across realities is challenging.

In this work, these are blended in a hybrid VR environment and at the same time enriched by exploring aspects of gamification, storytelling, and narratives. This combination of techniques is a separate research domain and offers an opportunity to test how these emerging disciplines can be combined, showcasing an innovative approach to CH visits. A main technical contribution is the provision of a seamless experience through these diverse media. At the same time, by combining easier-to-acquire media with 3D reconstructions, the approach optimizes the usage of resources for digitization, thus making such implementations more affordable for the cultural and creative industries.

Apart from testing this integration in this work, we present a reusable methodology and instructions on how to apply this strategy according to a use case. Thus, we contribute to fostering further research on the subject and making our research reusable in other possible commercial contexts.

The use case experience presented is strictly intended for the validation of the proposed methodology and is built around the heritage of the historical city of Heraklion. The use case incorporates conversational agents as guides to enhance user engagement and learning. The research work contributes to understanding how these virtual guides can effectively communicate historic narratives, fostering a more interactive and educational journey.

As a central pivot point in the use case, the Basilica of St. Marcos is selected and transformed into a portal that transfers visitors to different locations within the city. This contributes to the understanding of how metaphorical elements can be strategically employed to guide users through CH narratives.

Another element, the treasure hunt metaphor, adds a level of gamification to the CH experience and contributes to exploring the impact of such metaphors on user engagement. At the same time, the project’s emphasis on dynamic storytelling and interactive features, including clickable points of interest and user interactions with virtual guides, contributes to the evolving landscape of how CH narratives are presented.

From a social dimension, the project’s primary objective is to offer a captivating and informative experience for users of all ages, contributing to the democratization of historical knowledge. Understanding how such applications cater to a diverse audience contributes to the broader field of educational accessibility in CH contexts.

3. Method

The methodology proposed in this research work is the outcome of our work on advanced digitization, immersive interaction, and semantic knowledge representation. More specifically, integrating outcomes from various digitization technologies in a single virtual space is challenging due to the different qualities of the digitization outcomes. At the same time, due to these inconsistencies, the need to add symbolic elements that bind together different digitizations has proven important. To enhance the interaction with digitized locations and artifacts, virtual human guides have been selected as a means of providing human-like interaction and storytelling features in the digital space. Binding all of these together with cultural knowledge transformed into oral narratives, we deliver a holistic method for providing enhanced digital experiences, namely the developing Immersive Digital storytElling narrAtives (IDEA) methodology.

3.1. Overview

The methodology is facilitated by the utilization of 360° videos and photospheres. Panoramic views of historical sites are captured with high-resolution cameras, and subsequent stitching techniques are employed to create immersive environments, ensuring a visually rich and easily accessible representation of CH. The inclusion of 360° videos is motivated by the simplification they bring to the development process. Their ease of acquisition and straightforward integration into VR environments make them a practical choice for capturing and presenting diverse historical locations. For static scenes where users are intended by the scenario to explore information, 360° videos can be substituted by simpler photospheres acquired from the same camera and position as the video.

Complementary to the 360° videos, 3D digitized buildings and artifacts are employed to enrich the VR environment. Detailed geometry and surface features are captured using advanced 3D scanning technologies, including laser scanning and structured light techniques, resulting in the creation of 3D models.

To enhance user engagement and provide a sense of presence, user interface elements are complemented by VH conversational agents. These agents serve as storytellers, delivering historical narratives and responding to user inquiries, thereby fostering a more immersive and enjoyable CH experience. The incorporation of VH conversational agents is motivated by the desire to evoke a sense of interaction with intelligence. This choice is rooted in their capacity to deliver narratives with a human touch, providing users with a more engaging and personalized exploration of CH.

3.2. Key Storytelling and User Engagement Components

Since capturing the entire part of the city that contains the presented locations is not practical and does not add to the experience, a central hub was chosen to serve metaphorically as a gateway to these locations. This hub contains elements that support this metaphor, such as portals that serve as navigational anchors, connecting users seamlessly to specific 360° videos and 3D digitized artifacts.

Navigation and exploration in each location are considered essential. Users should have the ability to smoothly explore historical sites, interact with doors and panels, and move between different points of interest to enhance their understanding and engagement. At the same time, interactive challenges should be used to enhance user engagement, focusing on a gamified approach, such as the treasure hunt metaphor. Different locations are explored by users through interactions with virtual guides, entry into metaphorical portals, and the collection of information, adding an interactive layer to the CH experience. Users should have the ability to collect 3D objects during their exploration, and these collected objects should be available in their personal collection later on to create a sense of accomplishment and continuity.

The application must incorporate gamified elements, including multiple-choice riddles and object collection, to encourage active user participation and make the experience enjoyable and engaging.

Access to information can be supported by historical information panels offering comprehensive information, including historical narratives, images, and practical details, to enrich the educational aspect of the tour.
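The components described above (central hub, portals, multiple-choice riddles, and a personal collection) can be summarized in a small data model. The following is a minimal sketch for illustration only and is not taken from the implemented application; all class names, field names, and the placeholder values are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Riddle:
    """A multiple-choice riddle attached to a location (hypothetical structure)."""
    question: str
    options: list[str]
    correct_index: int

    def is_correct(self, choice: int) -> bool:
        return choice == self.correct_index


@dataclass
class Location:
    """A point of interest reached through a portal in the hub."""
    name: str
    media: str        # assumed labels, e.g. "360_video", "photosphere", "3d_model"
    riddle: Riddle
    collectible: str  # identifier of the 3D object awarded when the riddle is solved


@dataclass
class Hub:
    """Central hub: portals lead to locations; collected objects are kept here."""
    portals: dict[str, Location] = field(default_factory=dict)
    collection: list[str] = field(default_factory=list)

    def add_portal(self, portal_id: str, location: Location) -> None:
        self.portals[portal_id] = location

    def enter(self, portal_id: str) -> Location:
        """Return the location behind a portal."""
        return self.portals[portal_id]

    def answer(self, portal_id: str, choice: int) -> bool:
        """Check an answer and award the location's collectible at most once."""
        location = self.portals[portal_id]
        solved = location.riddle.is_correct(choice)
        if solved and location.collectible not in self.collection:
            self.collection.append(location.collectible)
        return solved


# Placeholder content only; real questions and objects come from the scenario.
hub = Hub()
hub.add_portal(
    "door_a",
    Location(
        name="site_a",
        media="360_video",
        riddle=Riddle("Placeholder question?", ["option 1", "option 2"], 1),
        collectible="artifact_a",
    ),
)
hub.answer("door_a", 1)  # solves the riddle and stores "artifact_a" in the collection
```

Keeping the collection on the hub rather than on each location mirrors the idea that collected objects remain available to the user after leaving a site.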

3.3. Proposed Implementation Steps

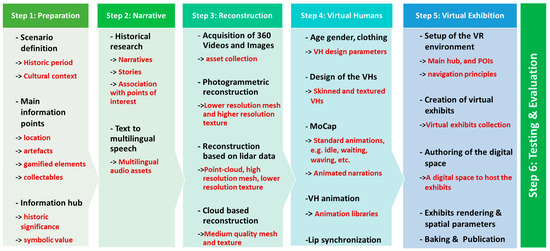

The implementation of the proposed approach can be facilitated through a step-by-step process as presented in Figure 1. The approach supports both linear execution and iterative execution since executing a step may require going back to a previous step to acquire the information and content needed.

Figure 1. Proposed implementation methodology.

Taking a closer look at the methodology, the first step concerns the definition of the interaction scenario. In this stage, it is important to clearly define the scenario or setting for the VR experience since this will determine many of the technical aspects to be dealt with in subsequent steps. Furthermore, historical aspects such as the period, cultural context, etc. are of significance. As a first action in this step, the main information points and the central information hub are identified, which establishes the historical landmarks, artifacts, or locations that will be served through the experience. Gamified elements and collectibles are considered digitization targets and should be defined in this step too. The essential outcome is the establishment of a central hub, metaphorical or physical, that connects various elements within the application.

Having identified the historical context and locations, step 2 has enough information to drive historical research that identifies stories and narratives and associates them with each information point. Collaboration with historians and experts is essential to ensure historical accuracy and engagement, and a professional translation service is essential to map the produced content to the targeted languages. For simplicity, the implementation team can utilize an automated AI-based service for transforming written stories and narratives into realistic human voice narrations in the targeted languages, provided that the service can generate high-quality audio with natural intonation and expression.

Step 3 is on the acquisition of data. First, 360° videos and images of the selected significant historical locations are acquired using a 360° video recording camera and specialized stabilization equipment. The selection of devices should ensure high resolution and comprehensive coverage for a rich VR experience. Furthermore, photogrammetry, LiDAR, or a combination of both is utilized to create detailed and accurate 3D models of objects and sites to be integrated into the VR experience. The complexity of the objects is considered and the reconstruction method is chosen accordingly. In this stage, the post-processing of the acquired data is essential for producing the final 3D models.

Step 4 concerns the implementation of VHs and the determination of their role and characteristics in the application. The number, appearance, and roles they play as guides or storytellers are decided. Then, the VHs are developed based on the identified characters, taking lip synchronization into account for smooth dialogues. For the animation of the VHs, Motion Capture (MoCap) technology can be employed to record realistic motion from actual humans, which is then remapped to create an animation set for each VH. Note that the gender of the recorded subjects matters since, along with the anthropometric characteristics, the overall body language is an essential part of realism.

Having all the required assets at hand, step 5 concerns the VR environment design, including information panels and gamified elements. In this stage, the objective is to design the VR environment to reflect the historical scenario, incorporating visual elements, textures, and lighting to enhance immersion. Information panels are implemented at key locations, providing additional historical context. Gamified elements, such as a treasure hunt metaphor, are integrated to encourage exploration and engagement. Furthermore, 360° videos, 3D digitized artifacts, and other multimedia elements are integrated seamlessly into the VR environment. A cohesive and smooth transition between different locations and informational points is ensured.

The final step is to ensure that the experience is the subject of sufficient testing at various levels, including checking for technical glitches, narrative coherence, and user experience. When feasible, collecting feedback from users, historians, and stakeholders to refine the application is essential. This can be performed iteratively based on feedback, making adjustments to enhance overall functionality, historical accuracy, and user engagement.
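The six steps and the possibility of returning to an earlier step can be sketched as a simple loop. This is an illustrative sketch rather than part of the methodology itself: the step names are shorthand for the steps described above, and the `revisits` mapping is a hypothetical input that records which earlier step a later step sends the team back to.

```python
from enum import IntEnum


class Step(IntEnum):
    SCENARIO = 1
    NARRATIVES = 2
    DATA_ACQUISITION = 3
    VIRTUAL_HUMANS = 4
    ENVIRONMENT_DESIGN = 5
    TESTING = 6


def run(revisits: dict[Step, Step]) -> list[Step]:
    """Return the order in which the steps are executed.

    `revisits` maps a step to an earlier step that must be redone once,
    e.g. because content needed by the later step is missing.
    """
    order: list[Step] = []
    pending = dict(revisits)  # each revisit is consumed once, so the loop ends
    current = Step.SCENARIO
    while True:
        order.append(current)
        target = pending.pop(current, None)
        if target is not None and target < current:
            current = target  # go back and continue forward from there
            continue
        if current == Step.TESTING:
            return order
        current = Step(current + 1)


# Linear execution: no step requires going back.
linear = run({})

# Iterative execution: environment design reveals a missing asset,
# so data acquisition and the steps after it are repeated.
iterative = run({Step.ENVIRONMENT_DESIGN: Step.DATA_ACQUISITION})
```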

4. Implementation of the Case Study

To validate the proposed methodology a case study virtual storytelling application has been developed for the city of Heraklion as outlined in the following sub-sections.

4.1. Scenario

The scenario for the application is built around the cultural legacy of the city of Heraklion as a host of five plus one cultures throughout its long history (Minoans, Romans, Arabs, Venetians, Ottomans, and modern Greeks). The remains of these cultures are scattered throughout the city waiting for the virtual visitor to explore, play, and collect. The central hub of this experience is the St. Marcos Basilica, a Venetian church in the city center. The digital doors of St. Marcos Basilica are portals that transfer the visitor to different locations in the city. Each location has a scenario to explore and, upon completion, collectibles are acquired. Collectibles become part of each user’s collection, which is on display at the central hub of the experience.

For the formulation of the scenario, the following expertise was engaged. A film director with a background in humanities studies defined the scenario structure in terms of the main hub, the selection of sites, and the transition between locations. The scenario at the Museum of Natural History and the gamification aspects were defined by the curators of the museum. Regarding the heritage sites of Heraklion, content was defined together with historians of the Municipality of Heraklion. All the aforementioned experts contributed to parts of the scenario and reviewed the final version before the technical implementation began. The process was iterative since comments on intermediate versions required the team to go back and redefine sub-scenarios for individual points of interest.

In the rest of this section, the technical aspects of building this experience on the aforementioned scenario are explained in depth.

4.2. Three-Dimensional Reconstruction

4.2.1. St. Marcos Basilica

The reconstruction of St. Marcos Basilica presented a primary challenge, given that the basilica features ten large columns causing partial occlusions in potential scanning areas. Additionally, the substantial chandeliers hanging from the roof posed a dual challenge, affecting the reconstruction of both the roof and the basilica structure. For the reconstruction, a methodology for the acquisition of scans had to be defined to deal with occlusions and complex objects. This acquisition methodology was defined and implemented as part of this research work.

Laser scanning utilized a scan topology enabling the acquisition of partially overlapping scans, subsequently registered to generate the synthetic basilica model. In total, 18 scans were acquired and registered to form a point cloud from all scans. For the acquisition, a Faro Focus scanner was used [81].
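For partially overlapping scans to be registered into a single point cloud, every scan must be linked to the others through a chain of overlapping pairs. The sketch below illustrates this planning check on a hypothetical overlap list; it is not the registration procedure used with the Faro scanner, and the function name and the example overlaps are assumptions.

```python
from collections import deque


def is_registrable(scan_count: int, overlaps: list[tuple[int, int]]) -> bool:
    """Return True if every scan is linked to scan 0 through overlapping pairs.

    Scans are numbered 0..scan_count-1; each tuple in `overlaps` names two
    scans that share enough surface to be aligned with each other.
    """
    if scan_count <= 0:
        return False
    neighbours: dict[int, set[int]] = {i: set() for i in range(scan_count)}
    for a, b in overlaps:
        neighbours[a].add(b)
        neighbours[b].add(a)

    # Breadth-first search over the overlap graph, starting from scan 0.
    seen = {0}
    queue = deque([0])
    while queue:
        current = queue.popleft()
        for nxt in neighbours[current] - seen:
            seen.add(nxt)
            queue.append(nxt)
    return len(seen) == scan_count


# Hypothetical topology: 18 scans, each overlapping the next one in sequence.
chain = [(i, i + 1) for i in range(17)]
complete = is_registrable(18, chain)

# Dropping one overlap splits the scans into two groups that cannot be merged.
broken = is_registrable(18, [pair for pair in chain if pair != (8, 9)])
```

In practice, additional overlaps beyond a single chain are desirable because they limit the accumulation of alignment error across consecutive scans.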

For the implementation of the basilica’s 3D model, the eighteen acquired LiDAR Faro scans were employed. These scans provide accurate distance measurements, offering high-quality geometric representations of real shapes and colors. Despite this, blind spots, areas obstructed during scanning with no overlap, persist, resulting in empty patches in the dense point-cloud mesh. Geometric artifacts originating from individual scans compound during automatic merging, introducing errors in both accuracy and aesthetic appearance.

Retopology was applied to the final geometric mesh, trading some geometric accuracy for a lighter, optimized model. This approach helped rectify structural errors and omissions while minimizing vertex overhead. Any loss of geometric detail was compensated for by extracted normal maps.

The 3D modeling and retexturing of the final structure were executed using the Blender application. Additional masks were employed to blend various textures into a unified synthesis, a process also utilized for normal mapping. The final texture composite aimed to convey the impression of a higher-fidelity mesh within a simplified structure.

Overall, the acquisition of data for the reconstruction of the basilica required two visits to the basilica to acquire the 18 scans. For a full scan, a time frame of 30 min is required by the laser scanner. The post-processing and registration of the partial scans is a fairly straightforward procedure that requires a day if enough computing resources are available. The subsequent retopology and masking is an artistic process whose duration cannot be estimated in general. In our case, it took approximately 2 working weeks to achieve the result.
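The scanning figures reported here translate into a simple time budget. The sketch below works through the arithmetic under two assumptions that are not stated in the text: the scans are split evenly across the visits, and only scanner time is counted (setup and repositioning between scan positions are excluded).

```python
def scanning_time(scans: int, minutes_per_scan: int, visits: int) -> tuple[float, float]:
    """Return (total scanner hours, scanner hours per visit)."""
    total_hours = scans * minutes_per_scan / 60
    return total_hours, total_hours / visits


# 18 scans at 30 min each, acquired over two visits.
total, per_visit = scanning_time(scans=18, minutes_per_scan=30, visits=2)
print(f"{total} h of scanning in total, {per_visit} h per visit")
# prints "9.0 h of scanning in total, 4.5 h per visit"
```

The same function can be used to budget other sites by changing the number of scans, which depends on the occlusions present in the space.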

4.2.2. Three-Dimensional Objects

In our 3D object reconstruction process, we adopted a versatile approach, combining photogrammetry and Trnio for optimal results [82]. Photogrammetry, a robust technique, was employed specifically for complex subjects, where we gathered extensive image datasets and leveraged the capabilities of Pix4D for processing [83]. This method proved highly effective in capturing intricate details and challenging geometries, ensuring a comprehensive and accurate reconstruction. In this case, the careful acquisition of the dataset, the acquisition setup, and the acquisition density in both axes are essential [84].

For objects with more straightforward geometries, we turned to Trnio, a dynamic solution that utilizes a blend of LiDAR and photogrammetric reconstruction. This innovative approach allows data acquisition directly from a mobile device, enhancing flexibility and accessibility. The post-processing phase takes place in the cloud, leveraging Trnio’s computational capabilities to refine and generate the final 3D reconstructions. The utilization of Trnio complements our methodology by providing a streamlined process for reconstructing simpler geometries, showcasing the adaptability and efficiency of our 3D reconstruction pipeline. The Trnio reconstruction of each of the objects was performed in less than an hour and minimal post-processing was required afterward to clean the parts of the scene that were captured but were not part of the digitization target. As such, we were greatly assisted by existing technology in this direction.

4.3. The 360° Video and Photography

For the acquisition of 360° video and photospheres, the Insta360 One RS sensor [85] was used together with a handheld gimbal for stabilization purposes. For the acquisition, a professional was employed to deal with stabilization issues, recording style, lighting conditions outdoors, etc. which are essential parameters for recording.

For the acquisition of the data, one visit was required for each site in a day with good weather conditions. The post-processing of the data involved mainly montage and editing which required less than a week since mature video processing technologies were used.

4.4. Audio Narrations

For both the Greek and English narrations, we employed an AI-based service, PlayHT [86]. The service uses PlayHT’s AI Voice Generator to produce realistic Text-to-Speech (TTS) output, transforming text into natural-sounding, human-like voice performances. Using PlayHT, we minimized narration costs and reduced the time and human resources required for the task while maintaining very good narration quality. Such a service is not suitable for all occasions, however, since more complex or emotionally charged narrations will benefit from a professional narrator rather than an AI voice generator.
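When narrations are produced for several information points in more than one language, it helps to prepare a manifest of synthesis jobs before submitting any text to a TTS service. The following is a minimal sketch under stated assumptions: the language codes (`el`, `en`), the directory layout, the `.mp3` extension, and all names are hypothetical, and the call to the TTS service itself is deliberately left out because its interface is not described here.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath


@dataclass(frozen=True)
class NarrationJob:
    """One text to be synthesized for one information point in one language."""
    point_id: str
    language: str
    text: str
    output_path: str


def build_jobs(scripts: dict[str, dict[str, str]], out_dir: str = "narrations") -> list[NarrationJob]:
    """Build one job per (information point, language) pair.

    `scripts` maps an information point id to its texts keyed by language code.
    Empty texts raise an error so that a missing translation is caught early.
    """
    jobs: list[NarrationJob] = []
    for point_id in sorted(scripts):
        for language in sorted(scripts[point_id]):
            text = scripts[point_id][language].strip()
            if not text:
                raise ValueError(f"missing {language} text for {point_id}")
            path = PurePosixPath(out_dir) / language / f"{point_id}.mp3"
            jobs.append(NarrationJob(point_id, language, text, str(path)))
    return jobs


# Placeholder texts; the real narratives come from the historical research step.
jobs = build_jobs({"hub": {"en": "Placeholder narration.", "el": "Κείμενο αφήγησης."}})
for job in jobs:
    print(job.language, job.output_path)
```

Each job can then be passed to whichever TTS service is chosen, or handed to a professional narrator for the texts that call for one.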

4.5. Implementation of the Virtual Humans

The 3D models for the VHs were created using Reallusion’s Character Creator 3 (CC3) [87], which offers easy and intuitive VH development. The resulting VHs are presented in Figure 2. CC3 works with the Unity 3D game engine [88] and with a lip-synchronization library. The avatar’s body, face, hair, and clothing components are selected within the software, and the result can be exported in various formats, including the Unity-supported .obj and .fbx. Each CC3 model was then converted into a Unity 3D-compatible asset. CC3 simplifies character creation by providing built-in features of high realism, but it does not reduce the effort and time needed to turn a 3D model of a human into a VH.

Figure 2. Implementation of the VHs for the experience.

4.6. Motion Capture and Virtual Human Animation

The VHs were animated with recordings acquired using Motion Capture (MoCap) equipment, which yields realistic motion. These animations enable the avatar to exhibit lifelike movements during user interactions or while in an idle state.

The animations were acquired as follows. First, the recorded person should be close to the VH in anthropometric characteristics; in our case, both a male and a female person were therefore employed. The human motion was recorded using a Rokoko motion capture suit. The suit was chosen after a thorough evaluation of the motion capture systems on the market: Rokoko Studio solutions [89] were selected for their high performance-to-price ratio, user-friendly interface, and versatility in recording environments. The system comprises a full-body suit with 19 inertial sensors that track and record body movement, gloves for finger tracking, and the Rokoko Studio software, which translates sensor data into an on-screen avatar that mirrors the actor’s moves in real time. The Rokoko suit features a recording mode, allowing actors to record their movements.

The recordings from the suit can be processed by automatic filters embedded in Rokoko Studio, trimmed, and exported into various mainstream formats (FBX, BVH, CSV, C3D). In our case, the .fbx format was selected to simplify importing the recorded animations into the Unity game engine. Recording the motions required one day, plus the time for post-processing and editing the acquired animation samples. Having both the MoCap suit and its accompanying software suite available greatly enhances our capability to acquire human movement and retarget it to VHs in the form of animations.

The captured movements comprised two collections: idle human motions and poses, which enhance pre-interaction realism, and narration-specific movements, which enhance the appearance of VHs while they narrate content. These animations also include human actions and gestures such as pointing, moving toward an exhibit, turning, etc.

For lip synchronization, version 2.5.2 of the Salsa Lip-Sync suite was utilized [90]. This suite is compatible with both the Unity 3D game engine and CC3 avatars, which allows Salsa to exploit the blend shapes of CC3-created avatars. Salsa Lip-Sync reduces the need for manual editing of facial features during speech and is a good all-around solution for having VHs directly reproduce wave file recordings of speech.
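To illustrate the general idea of driving a mouth blend shape from recorded speech, the following minimal Python sketch maps the loudness of successive audio windows to an open-mouth weight between 0 and 1. It is a generic amplitude-based approximation written for illustration only and does not reproduce the algorithm or the API of Salsa Lip-Sync; the function names, the window size, and the `silence` and `loud` thresholds are all assumptions.

```python
import math
from typing import List, Sequence


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square level of a window of samples in [-1, 1]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def mouth_open_weights(samples: Sequence[float], window: int,
                       silence: float = 0.02, loud: float = 0.5) -> List[float]:
    """One blend-shape weight in [0, 1] per window of audio samples.

    `silence` and `loud` are hypothetical thresholds: levels at or below
    `silence` keep the mouth closed, levels at or above `loud` open it fully.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    weights = []
    for start in range(0, len(samples), window):
        level = rms(samples[start:start + window])
        weight = (level - silence) / (loud - silence)
        weights.append(min(1.0, max(0.0, weight)))  # clamp to [0, 1]
    return weights


# Four silent samples followed by four loud ones, in windows of four.
weights = mouth_open_weights([0.0] * 4 + [0.5] * 4, window=4)
```

In an engine, each weight would be applied to the corresponding blend shape once per animation frame; smoothing between consecutive weights is omitted here for brevity.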

4.7. VR Implementation

The system was implemented within the Unity development environment, using version 2021.3.14f1. This part of the system was implemented entirely within this work, using the frameworks and packages presented in this section. To ensure compatibility with the Oculus Quest 2 headset [91], the application integrates the Oculus XR Plugin [92] and the OpenXR Plugin [93] packages. Since the application offers an interactive VR experience, it integrates two additional packages: the XR Interaction Toolkit [94] and XR Plugin Management [95]. These packages provide a framework for user interactions such as movement, rotation, and object manipulation through the Oculus headset and controllers. Within the application scene, the XR Origin is a crucial component: it contains the user’s game objects, namely the primary camera and the VR controllers. As the application runs, user movements and actions performed with the controllers and the headset trigger corresponding responses from the XR Origin.

Three fundamental objects form the core of the application scene: the previously mentioned XR Origin, St. Marcos Basilica, and a spherical entity. Each of these objects comprises several components that work together to create the VR environment.

St. Marcos Basilica is a 3D representation of the real-world Basilica of Saint Marcos located in Heraklion. The XR Origin is placed inside the basilica and serves as the user’s means of navigation through the Oculus controllers. The basilica interior features doors (the entrance and rear exits of the basilica) that enable users to teleport to significant historical locations in Heraklion. The ambiance within the basilica was crafted to evoke mystery. In addition to the doors, users encounter shelves that display the 3D objects collected during their exploration of the historical points.

The spherical entity projects 360° videos of the historical points that users can virtually visit, creating the illusion of being physically present at the real attractions. Within the sphere, users also encounter additional elements. The virtual avatars, Ariadne and Dominic, act as guides, welcoming users and explaining the available actions. Additionally, two interactive pins are present within the sphere. Activating the first pin opens an information panel that offers insights into the specific historical point. The second pin opens a puzzle panel that challenges users to solve riddles to obtain 3D objects, adding a further layer of interactivity to the experience.

These three core components are interconnected as follows. The XR Origin is initially placed within the basilica. As previously discussed, users access the historical points through doors located within the basilica. When a door is opened, the spherical entity, which houses the 360° videos, textures, audio, and visual elements, is initialized as the immersive environment, and the user is simultaneously teleported into it. To facilitate navigation, a hand panel allows users to return to the basilica. By pressing the home button on their Oculus controllers, users can exit the sphere and return to their previous location.
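The projection of a 360° video onto a sphere can be made concrete with a short sketch. Assuming the footage is stored in the common equirectangular layout (an assumption, since the storage format is not stated here), each viewing direction from the center of the sphere corresponds to one point of the video frame. The Python function below computes that point; the axis convention (y up, +z at the center of the frame) and the function name are hypothetical choices made for illustration.

```python
import math
from typing import Tuple


def direction_to_equirect_uv(x: float, y: float, z: float) -> Tuple[float, float]:
    """Map a view direction to (u, v) in [0, 1] on an equirectangular frame.

    Assumed convention: y is up, +z looks at the centre of the frame,
    u grows to the right and v grows upward.
    """
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        raise ValueError("direction must be non-zero")
    x, y, z = x / length, y / length, z / length
    longitude = math.atan2(x, z)                   # in [-pi, pi]
    latitude = math.asin(max(-1.0, min(1.0, y)))   # in [-pi/2, pi/2]
    u = longitude / (2.0 * math.pi) + 0.5
    v = latitude / math.pi + 0.5
    return u, v


forward = direction_to_equirect_uv(0.0, 0.0, 1.0)  # centre of the frame
right = direction_to_equirect_uv(1.0, 0.0, 0.0)    # a quarter turn to the right
up = direction_to_equirect_uv(0.0, 1.0, 0.0)       # top edge of the frame
```

A game engine performs the equivalent lookup per pixel when the video texture is rendered on the inside of the sphere, so the user at its center sees an undistorted view in every direction.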
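The navigation flow between the hub and the sphere can be summarized as a two-state machine. The Python sketch below is a simplified model of that flow, not the Unity implementation; the class and method names are hypothetical, and loading of the 360° media is reduced to remembering which site is active.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class Place(Enum):
    BASILICA = auto()  # the central hub
    SPHERE = auto()    # the 360° sphere of one historical point


@dataclass
class TourState:
    place: Place = Place.BASILICA
    site: Optional[str] = None  # site currently loaded into the sphere

    def open_door(self, site: str) -> None:
        """Initialise the sphere for `site` and teleport the user into it."""
        if self.place is not Place.BASILICA:
            raise RuntimeError("doors are only available inside the basilica")
        self.site = site
        self.place = Place.SPHERE

    def return_to_hub(self) -> None:
        """Leave the sphere (the return action described in the text)."""
        self.site = None
        self.place = Place.BASILICA


tour = TourState()
tour.open_door("Museum of Natural History")
inside_sphere = (tour.place, tour.site)
tour.return_to_hub()
```

Keeping the hub as the only state from which doors can be opened mirrors the design in which every transfer between historical points passes through the basilica.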

5. VR Game Walkthrough

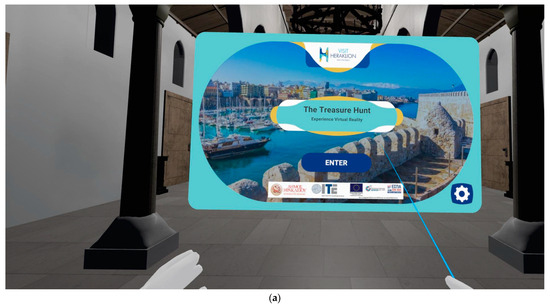

The game starts with the player positioned within the Basilica of Saint Marcos (see Figure 3a). The user can either enter the game or first configure some basic interaction parameters. On entering the game, basic instructions are presented to guide the user through the experience (see Figure 3b). The configuration parameters are the language and the dominant hand; the latter is important for adjusting the interaction in a VR environment operated with handheld controllers (see Figure 3c).

Figure 3. (a) Startup screen, (b) instructions, (c) basic configuration.
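The two configuration parameters can be represented by a small settings structure, sketched below in Python. The structure, the default values, and the rule that the hand panel is attached to the non-dominant hand are assumptions made for illustration and are not taken from the implemented application.

```python
from dataclasses import dataclass
from enum import Enum


class Language(Enum):
    GREEK = "el"
    ENGLISH = "en"


class Hand(Enum):
    LEFT = "left"
    RIGHT = "right"


@dataclass(frozen=True)
class StartupSettings:
    """Choices made on the configuration screen (hypothetical structure)."""
    language: Language = Language.ENGLISH  # assumed default
    dominant_hand: Hand = Hand.RIGHT       # assumed default

    @property
    def pointing_hand(self) -> Hand:
        # Assumption: selecting and grabbing use the dominant hand.
        return self.dominant_hand

    @property
    def panel_hand(self) -> Hand:
        # Assumption: the hand panel is attached to the other hand.
        return Hand.LEFT if self.dominant_hand is Hand.RIGHT else Hand.RIGHT


settings = StartupSettings(language=Language.GREEK, dominant_hand=Hand.LEFT)
```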

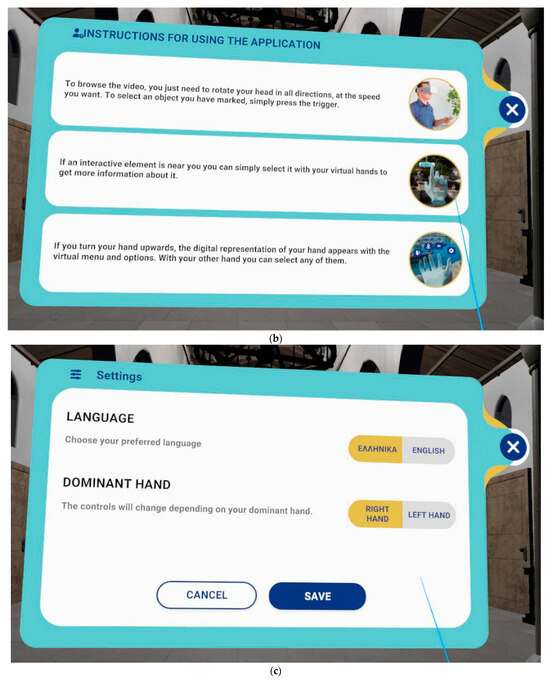

After completing the preparatory steps, users are free to explore the main hub of the game (see Figure 4a). In the basilica, the doors on both sides of the building are portals to other locations within the city of Heraklion. A portal is entered when the user manipulates the door handle (see Figure 4b). To increase the excitement of the interaction, the doors give no information about the location to which the user will be transferred.

Figure 4.

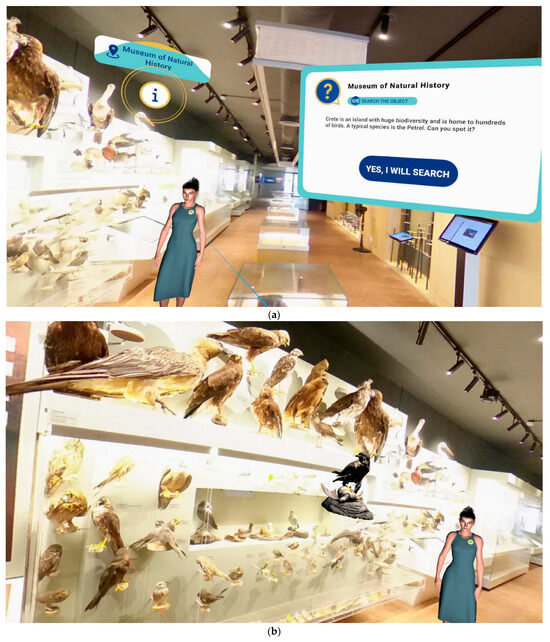

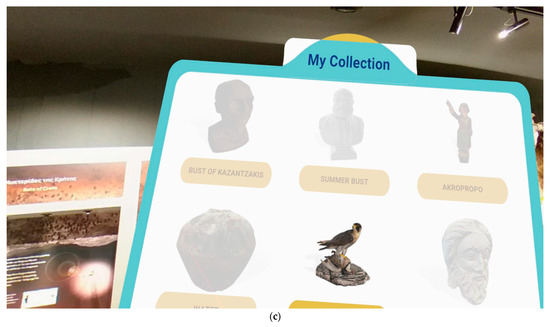

(a) The Basilica of St Marcos, (b) Interacting with door portals, (c) At the Museum of Natural History (an “i” is presented in the location where an information element is available).

An example of such a transfer is shown in the figure where the user “lands” at the Museum of Natural History of Crete (see Figure 4c).

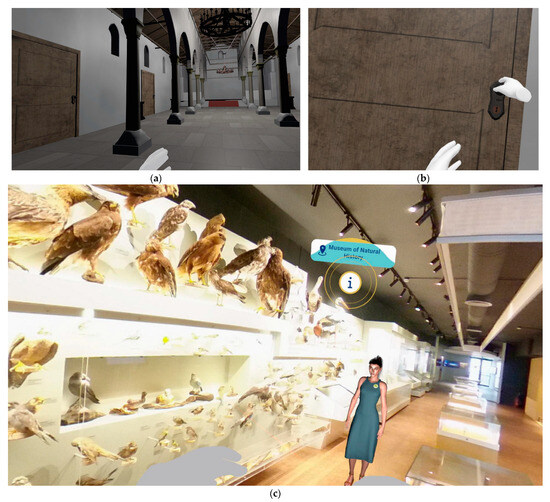

There, the VH Ariadne welcomes the user and introduces the concept of the treasure hunt game (see Figure 5a). At the museum, the objective is to follow Ariadne’s instructions and locate, among the hundreds of available species, a specific bird species that is representative of the biodiversity of the island of Crete. Apart from the hunt itself, several other information points are available in the museum, and Ariadne is more than happy to introduce each one of them (see Figure 5b). In some cases, accessing the information points may be necessary to obtain enough information for locating the treasure, since clues can be hidden in any corner, any line of text, or any word that Ariadne pronounces.

Figure 5. (a) Introduction to treasure hunt at the Museum of Natural History, (b) Locating a unique wild animal of Crete, (c) Adding the collectible to the user’s collection.

Completing the quest offers not only the satisfaction of the adventurer but also a new artifact for the user’s collection (see Figure 5c). On entering the basilica again, the user will find that the item is now on display there as a piece of the living history of the journey to knowledge.
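The reward loop of the treasure hunt (solve a riddle, obtain a collectible, see it displayed in the hub) can be modeled in a few lines. The Python sketch below is an illustrative model only: the class names are hypothetical, and the riddle answer and reward identifier are placeholders rather than content from the application.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Riddle:
    site: str
    answer: str
    reward: str  # identifier of the 3D collectible (placeholder)


@dataclass
class Collection:
    shelf: List[str] = field(default_factory=list)  # items shown in the hub

    def attempt(self, riddle: Riddle, guess: str) -> bool:
        """Return True, and add the reward once, if the guess is correct."""
        solved = guess.strip().lower() == riddle.answer.strip().lower()
        if solved and riddle.reward not in self.shelf:
            self.shelf.append(riddle.reward)
        return solved


riddle = Riddle(site="Museum of Natural History",
                answer="placeholder answer",
                reward="placeholder-collectible")
collection = Collection()
first_try = collection.attempt(riddle, "wrong guess")
second_try = collection.attempt(riddle, "  Placeholder Answer ")
repeat_try = collection.attempt(riddle, "placeholder answer")
```

Because the shelf is kept separately from the state of any single site, the collected items remain available whenever the user returns to the basilica.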

6. Lessons Learned

Building a methodology for the integration of diverse technologies under a common gamified experience was challenging. One of the issues under consideration was the merging of captured physical information with captured digital information, i.e., 360° content and reconstructed 3D content. Since seamless navigation through these diverse realities would be impractical and could affect the experience, the main hub metaphor was adopted as a solution to this challenge.

Another challenge was the merging of digital VHs into 360° videos and scenes. Location, scale, and lighting were of extreme importance: the VHs had to appear as if they were actually there, their size had to match the scale of the scene, and their cast light and shadows had to agree with the lighting of the scene. A valuable lesson regarding the acquisition of 360° video is that the camera should be positioned at the height of the viewer’s eyes. Acquisition from a greater height makes visitors feel taller than an actual person, whereas acquisition from a lower height makes them feel shorter. This restriction makes the acquisition of 360° video challenging, since the operator of the camera should not appear within the scene. Regarding VHs, their lighting should be carefully defined for each scene individually to ensure that they blend well with the overall scene. An example is shown in Figure 4c, where the soft lighting on Ariadne’s face and clothing helps blend her into the environment.

Regarding the acquisition of digital assets through reconstruction technologies, we learned that simple mobile-device-based reconstruction is well suited to artifacts that are intended for display and do not require the precision needed when digitizing, for example, archaeological or historical artifacts for preservation. This was the method used for the collectible items integrated into the experience. In contrast, scientific acquisition was selected for the Basilica of Saint Marcos. In this case, we learned that high-precision reconstruction results in datasets that are difficult to merge and render within a VR application, where rendering restrictions make simplification a prerequisite. We therefore followed a dual approach: we merged the individual laser scans into a 3D reconstruction of the monument for preservation purposes, and then performed retopology editing and artistic filtering to simplify the reconstruction for integration into the experience. This compromise between quality and size allows us to maintain high-quality digitization for preservation and use the simplified version for presentation. It required increased effort in terms of resources, however, and should be followed only when both results are required by a specific study.
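The camera-height and scale lessons can be made concrete with a little trigonometry. In a 360° recording, the floor at a given distance appears at a fixed angle below the horizon that depends only on the camera height, so a VH placed in the scene must span the angles that a person of that height would span from the camera position. The Python sketch below computes these angles; it is a simplified geometric illustration, and the function name and the numeric values are hypothetical.

```python
import math
from typing import Tuple


def vh_angular_extent_deg(camera_height_m: float, vh_height_m: float,
                          distance_m: float) -> Tuple[float, float]:
    """Angles (feet, head) of a standing VH relative to the horizon, in degrees.

    Negative values are below the horizon. The VH is assumed to stand on the
    same flat floor as the camera, at horizontal distance `distance_m`.
    """
    if distance_m <= 0.0:
        raise ValueError("distance must be positive")
    feet = math.degrees(math.atan2(-camera_height_m, distance_m))
    head = math.degrees(math.atan2(vh_height_m - camera_height_m, distance_m))
    return feet, head


# Camera at eye level of a VH of equal height: the head sits on the horizon.
eye_level = vh_angular_extent_deg(1.6, 1.6, 1.6)

# Camera mounted higher: the same VH now appears entirely below the horizon,
# which is how the scene would look to a taller viewer.
raised = vh_angular_extent_deg(2.0, 1.6, 1.6)
```

Comparing the two cases shows why a camera placed above eye level gives the impression of a taller visitor: every object in the scene, including the VH, shifts further below the horizon.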

Regarding the feasibility and applicability of the proposed approach for the cultural and creative industries, integrating a variety of equipment and software showed us that both low-cost and high-cost equipment are applicable. Furthermore, to support the reusability of our approach, we used third-party tools to streamline part of the implementation, thus reducing the amount of highly specialized resources needed.

At the same time, investing in gamification, captivating narration, and VHs allowed the comprehensive representation of both tangible and intangible dimensions, thus supporting a richer understanding of historical artifacts and sites. Furthermore, the use of storytelling and narratives enhances presentation by establishing emotional connections with users. This brings with it the challenge of crafting compelling narratives that support a deeper appreciation of the historical stories embedded in CH.

Another dimension that we experimented with was interaction. The hypothesis tested was whether incorporating interactive elements within digital environments contributes to user engagement.

The validation process was concluded through a heuristic evaluation involving a panel of five domain experts. The experts were presented with a demo version of the application and were tasked with completing predefined scenarios. They were encouraged to report any errors encountered during task execution and to provide additional observations. This process is typically followed before conducting a user-based evaluation of a prototype. The rationale of heuristic evaluation is that usability experts, as professionals in user experience evaluation, combine knowledge of both the design of interactive applications and their evaluation with users. They can therefore locate usability issues easily, so that end users are not exposed to issues that could affect the overall user experience and satisfaction. As a result, a subsequent user-based evaluation can focus on identifying deeper and more crucial usability issues, since most issues have already been resolved. In our case, the insights gained from the heuristic evaluation were collected, analyzed, and fed into a development iteration that resolved these issues before the application is presented to users.

Since a panel of five experts is not a sufficient sample to validate the overall user experience, further validation will be required in the future in the context of a user-based evaluation of the use case. This is out of the scope of this paper, whose objectives are to present the methodology and judge the technical feasibility of its implementation. Within this scope, the validation of the methodology is sufficient to justify the present work. The user-based evaluation will provide useful insights for improving the use case before making it available for actual use.

7. Conclusions

In conclusion, the integration of digital technologies has allowed this work to propose an alternative form of preservation and presentation of CH. The lessons learned from this exploration underscore the importance of a holistic approach that combines various technologies to provide comprehensive and immersive experiences. These technologies not only enhance educational engagement, breaking down geographical barriers and fostering inclusivity, but also contribute to cultural tourism and trip planning. The power of storytelling, coupled with interactive features, establishes emotional connections with users, making the exploration of CH more meaningful. Moreover, the adaptability and democratization of historical knowledge through streamlined methodologies and third-party tools showcase the potential for widespread accessibility and impact. As the digital landscape continues to evolve, these lessons serve as guiding principles for creating engaging and enriching experiences that ensure the preservation and appreciation of our diverse CH for generations to come.

Author Contributions

Conceptualization, E.Z., E.K. (Eirini Kontaki) and N.P.; methodology, E.Z., E.K. (Eirini Kontaki) and N.P.; software, E.K. (Emmanouil Kontogiorgakis) and E.Z.; validation, E.Z., E.K. (Eirini Kontaki), C.M., S.N. and N.P.; formal analysis, E.Z., E.K. (Eirini Kontaki) and C.S.; investigation, E.Z., E.K. (Eirini Kontaki), C.M., S.N. and N.P.; resources, C.S.; data curation, E.K. (Emmanouil Kontogiorgakis), E.Z., C.M., S.N. and N.P.; writing—original draft preparation, N.P.; writing—review and editing, E.K. (Emmanouil Kontogiorgakis), E.Z., E.K. (Eirini Kontaki), N.P., C.M., S.N. and C.S.; visualization, E.K. (Emmanouil Kontogiorgakis), E.Z. and E.K. (Eirini Kontaki); supervision, E.Z. and E.K. (Eirini Kontaki); project administration, E.Z. and E.K. (Eirini Kontaki); funding acquisition, E.Z., N.P. and C.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was conducted under the project Interactive Heraklion, which received funding under the Operational Programme Crete 2014–2020, PRIORITY AXIS: 1 “Strengthening the competitiveness, innovation, and entrepreneurship of Crete”, which is co-financed by the European Regional Development Fund (ERDF), “Action 2.c.he.1 Smart application for e-culture, e-tourism, and destination identity enhancement (Heraklion SBA)”, and the Horizon Europe project Craeft, which received funding from the European Union’s Horizon Europe research and innovation program under grant agreement No. 101094349.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are available upon request.

Acknowledgments

We would like to thank the Municipality of Heraklion for the support in implementing this work. Furthermore, the authors would like to thank the anonymous reviewers for their contribution to improving the quality and impact of this research work.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Black, G. The Engaging Museum: Developing Museums for Visitor Involvement; Routledge: London, UK, 2012.

- Jung, T.; tom Dieck, M.C.; Lee, H.; Chung, N. Effects of virtual reality and augmented reality on visitor experiences in the museum. In Information and Communication Technologies in Tourism 2016: Proceedings of the International Conference in Bilbao, Spain, 2–5 February 2016; Springer International Publishing: Cham, Switzerland, 2016; pp. 621–635.

- Gabellone, F.; Ferrari, I.; Giannotta, M.T.; Dell’Aglio, A. From museum to original site: A 3d environment for virtual visits to finds re-contextualized in their original setting. In Proceedings of the 2013 Digital Heritage International Congress (DigitalHeritage), Marseille, France, 28 October–1 November 2013; IEEE: Piscataway, NJ, USA, 2013; Volume 2, pp. 215–222.

- Keil, J.; Pujol, L.; Roussou, M.; Engelke, T.; Schmitt, M.; Bockholt, U.; Eleftheratou, S. A digital look at physical museum exhibits: Designing personalized stories with handheld Augmented Reality in museums. In Proceedings of the 2013 Digital Heritage International Congress (DigitalHeritage), Marseille, France, 28 October–1 November 2013; IEEE: Piscataway, NJ, USA, 2013; Volume 2, pp. 685–688.

- Carrozzino, M.; Colombo, M.; Tecchia, F.; Evangelista, C.; Bergamasco, M. Comparing different storytelling approaches for virtual guides in digital immersive museums. In Augmented Reality, Virtual Reality, and Computer Graphics: Proceedings of the 5th International Conference, AVR 2018, Otranto, Italy, 24–27 June 2018, Proceedings, Part II 5; Springer International Publishing: Cham, Switzerland, 2018; pp. 292–302.

- Buonincontri, P.; Marasco, A. Enhancing cultural heritage experiences with smart technologies: An integrated experiential framework. Eur. J. Tour. Res. 2017, 17, 83–101.

- Hausmann, A.; Weuster, L.; Nouri-Fritsche, N. Making heritage accessible: Usage and benefits of web-based applications in cultural tourism. Int. J. Cult. Digit. Tour. 2015, 2, 19–30.

- Kalay, Y.; Kvan, T.; Affleck, J. (Eds.) New Heritage: New Media and Cultural Heritage; Routledge: London, UK, 2007.

- Bekele, M.K.; Pierdicca, R.; Frontoni, E.; Malinverni, E.S.; Gain, J. A survey of augmented, virtual, and mixed reality for cultural heritage. J. Comput. Cult. Herit. (JOCCH) 2018, 11, 7.

- Marto, A.; Gonçalves, A.; Melo, M.; Bessa, M. A survey of multisensory VR and AR applications for cultural heritage. Comput. Graph. 2022, 102, 426–440.

- Gaitatzes, A.; Christopoulos, D.; Roussou, M. Reviving the past: Cultural heritage meets virtual reality. In Proceedings of the 2001 Conference on Virtual Reality, Archeology, and Cultural Heritage, Glyfada, Greece, 28–30 November 2001; pp. 103–110.

- Bouloukakis, M.; Partarakis, N.; Drossis, I.; Kalaitzakis, M.; Stephanidis, C. Virtual reality for smart city visualization and monitoring. In Mediterranean Cities and Island Communities: Smart, Sustainable, Inclusive and Resilient; Springer: Cham, Switzerland, 2019; pp. 1–18.

- Argyriou, L.; Economou, D.; Bouki, V. Design methodology for 360 immersive video applications: The case study of a cultural heritage virtual tour. Pers. Ubiquitous Comput. 2020, 24, 843–859.

- Škola, F.; Rizvić, S.; Cozza, M.; Barbieri, L.; Bruno, F.; Skarlatos, D.; Liarokapis, F. Virtual reality with 360-video storytelling in cultural heritage: Study of presence, engagement, and immersion. Sensors 2020, 20, 5851.

- Garcia, M.B.; Nadelson, L.S.; Yeh, A. “We’re going on a virtual trip!”: A switching-replications experiment of 360-degree videos as a physical field trip alternative in primary education. Int. J. Child Care Educ. Policy 2023, 17, 4.

- Snelson, C.; Hsu, Y.C. Educational 360-degree videos in virtual reality: A scoping review of the emerging research. TechTrends 2020, 64, 404–412.

- Salazar Flores, J.L. Virtual Mexico: Magical Towns in 360 Degrees An Exploratory Study of the Potential of 360-Degree Video in Promoting Cultural Tourism. Ph.D. Thesis, Newcastle University, Newcastle upon Tyne, UK, 2023.

- Tolle, H.; Wardhono, W.S.; Dewi, R.K.; Fanani, L.; Afirianto, T. The Development of Malang City Virtual Tourism for Preservation of Traditional Culture using React 360. MATICS J. Ilmu Komput. Dan Teknol. Inf. (J. Comput. Sci. Inf. Technol.) 2023, 15, 23–28.

- Partarakis, N.; Antona, M.; Stephanidis, C. Adaptable, personalizable, and multi-user museum exhibits. In Curating the Digital: Space for Art and Interaction; Springer: Cham, Switzerland, 2016; pp. 167–179.

- Dooley, K. Storytelling with virtual reality in 360-degrees: A new screen grammar. Stud. Australas. Cine. 2017, 11, 161–171.

- Pope, V.C.; Dawes, R.; Schweiger, F.; Sheikh, A. The geometry of storytelling: Theatrical use of space for 360-degree videos and virtual reality. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; pp. 4468–4478.

- Bassbouss, L.; Steglich, S.; Fritzsch, I. Interactive 360° video and storytelling tool. In Proceedings of the 2019 IEEE 23rd International Symposium on Consumer Technologies (ISCT), Ancona, Italy, 19–21 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 113–117.

- Mekni, M.; Lemieux, A. Augmented reality: Applications, challenges and future trends. Appl. Comput. Sci. 2014, 20, 205–214.

- Tscheu, F.; Buhalis, D. Augmented reality at cultural heritage sites. In Information and Communication Technologies in Tourism 2016: Proceedings of the International Conference, Bilbao, Spain, 2–5 February 2016; Springer International Publishing: Cham, Switzerland, 2016; pp. 607–619.

- tom Dieck, M.C.; Jung, T.H. Value of augmented reality at cultural heritage sites: A stakeholder approach. J. Destin. Mark. Manag. 2017, 6, 110–117.

- Angelopoulou, A.; Economou, D.; Bouki, V.; Psarrou, A.; Jin, L.; Pritchard, C.; Kolyda, F. Mobile augmented reality for cultural heritage. In Mobile Wireless Middleware, Operating Systems, and Applications: Proceedings of the 4th International ICST Conference, Mobilware 2011, London, UK, 22–24 June 2011, Revised Selected Papers 4; Springer: Berlin/Heidelberg, Germany, 2012; pp. 15–22.

- Hammady, R.; Ma, M.; Temple, N. Augmented reality and gamification in heritage museums. In Serious Games: Second Joint International Conference, JCSG 2016, Brisbane, QLD, Australia, 26–27 September 2016, Proceedings 2; Springer International Publishing: Cham, Switzerland, 2016; pp. 181–187.

- Rhodes, G.A. Future museums now—Augmented reality musings. Public Art Dialogue 2015, 5, 59–79.

- Abbas, Z.; Chao, W.; Park, C.; Soni, V.; Hong, S.H. Augmented reality-based real-time accurate artifact management system for museums. PRESENCE Virtual Augment. Real. 2018, 27, 136–150.

- Cabero-Almenara, J.; Barroso-Osuna, J.; Llorente-Cejudo, C.; Fernández Martínez, M.D.M. Educational uses of augmented reality (AR): Experiences in educational science. Sustainability 2019, 11, 4990.

- Yuen, S.C.Y.; Yaoyuneyong, G.; Johnson, E. Augmented reality: An overview and five directions for AR in education. J. Educ. Technol. Dev. Exch. (JETDE) 2011, 4, 11.

- Saltan, F.; Arslan, Ö. The use of augmented reality in formal education: A scoping review. Eurasia J. Math. Sci. Technol. Educ. 2016, 13, 503–520.

- Perra, C.; Grigoriou, E.; Liotta, A.; Song, W.; Usai, C.; Giusto, D. Augmented reality for cultural heritage education. In Proceedings of the 2019 IEEE 9th International Conference on Consumer Electronics (ICCE-Berlin), Berlin, Germany, 8–11 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 333–336.

- Gonzalez Vargas, J.C.; Fabregat, R.; Carrillo-Ramos, A.; Jové, T. Survey: Using augmented reality to improve learning motivation in cultural heritage studies. Appl. Sci. 2020, 10, 897.

- Han, D.I.D.; Weber, J.; Bastiaansen, M.; Mitas, O.; Lub, X. Virtual and augmented reality technologies to enhance the visitor experience in cultural tourism. In Augmented Reality and Virtual Reality: The Power of AR and VR for Business; Springer: Cham, Switzerland, 2019; pp. 113–128.

- Shih, N.J.; Diao, P.H.; Chen, Y. ARTS, an AR tourism system, for the integration of 3D scanning and smartphone AR in cultural heritage tourism and pedagogy. Sensors 2019, 19, 3725.

- Chung, N.; Lee, H.; Kim, J.Y.; Koo, C. The role of augmented reality for experience-influenced environments: The case of cultural heritage tourism in Korea. J. Travel Res. 2018, 57, 627–643.

- Han, D.I.; tom Dieck, M.C.; Jung, T. User experience model for augmented reality applications in urban heritage tourism. J. Herit. Tour. 2018, 13, 46–61.

- Lv, M.; Wang, L.; Yan, K. Research on cultural tourism experience design based on augmented reality. In Culture and Computing: Proceedings of the 8th International Conference, C&C 2020, Held as Part of the 22nd HCI International Conference, HCII 2020, Copenhagen, Denmark, 19–24 July 2020, Proceedings 22; Springer International Publishing: Cham, Switzerland, 2020; pp. 172–183.

- Jin, W.; Kim, D.H. Design and implementation of e-health system based on semantic sensor network using IETF YANG. Sensors 2018, 18, 629.

- Devedzic, V. Education and the semantic web. Int. J. Artif. Intell. Educ. 2004, 14, 165–191.

- Trastour, D.; Bartolini, C.; Preist, C. Semantic web support for the business-to-business e-commerce lifecycle. In Proceedings of the 11th International Conference on World Wide Web, Honolulu, HI, USA, 7–11 May 2002; pp. 89–98.

- Lilis, Y.; Zidianakis, E.; Partarakis, N.; Antona, M.; Stephanidis, C. Personalizing HMI elements in ADAS using ontology meta-models and rule based reasoning. In Universal Access in Human–Computer Interaction. Design and Development Approaches and Methods: Proceedings of the 11th International Conference, UAHCI 2017, Held as Part of HCI International 2017, Vancouver, BC, Canada, 9–14 July 2017, Proceedings, Part I 11; Springer International Publishing: Cham, Switzerland, 2017; pp. 383–401.

- Benjamins, V.R.; Contreras, J.; Blázquez, M.; Dodero, J.M.; Garcia, A.; Navas, E.; Hernández, F.; Wert, C. Cultural heritage and the semantic web. In European Semantic Web Symposium; Springer: Berlin/Heidelberg, Germany, 2004; pp. 433–444.

- Signore, O. The semantic web and cultural heritage: Ontologies and technologies help in accessing museum information. In Proceedings of the Information Technology for the Virtual Museum, Sønderborg, Denmark, 6–7 December 2006; Volume 1.

- Di Giulio, R.; Maietti, F.; Piaia, E. 3D Documentation and Semantic Aware Representation of Cultural Heritage: The INCEPTION Project. In Proceedings of the 14th Eurographics Workshop on Graphics and Cultural Heritage (GCH), Genua, Italy, 6 October 2016; pp. 195–198.

- Meghini, C.; Bartalesi, V.; Metilli, D. Representing narratives in digital libraries: The narrative ontology. Semant. Web 2021, 12, 241–264.

- Burden, D.; Savin-Baden, M. Virtual Humans: Today and Tomorrow; CRC Press: Boca Raton, FL, USA, 2019.

- Magnenat-Thalmann, N.; Thalmann, D. (Eds.) Handbook of Virtual Humans; John Wiley & Sons: Hoboken, NJ, USA, 2005.

- Sylaiou, S.; Fidas, C. Virtual Humans in Museums and Cultural Heritage Sites. Appl. Sci. 2022, 12, 9913.

- Machidon, O.M.; Duguleana, M.; Carrozzino, M. Virtual humans in cultural heritage ICT applications: A review. J. Cult. Herit. 2018, 33, 249–260. [Google Scholar] [CrossRef]

- Ringas, C.; Tasiopoulou, E.; Kaplanidi, D.; Partarakis, N.; Zabulis, X.; Zidianakis, E.; Patakos, A.; Patsiouras, N.; Karuzaki, E.; Foukarakis, M.; et al. Traditional craft training and demonstration in museums. Heritage 2022, 5, 431–459. [Google Scholar] [CrossRef]

- Sylaiou, S.; Kasapakis, V.; Dzardanova, E.; Gavalas, D. Assessment of virtual guides’ credibility in virtual museum environments. In Augmented Reality, Virtual Reality, and Computer Graphics: Proceedings of the 6th International Conference, AVR 2019, Santa Maria al Bagno, Italy, 24–27 June 2019, Proceedings, Part II 6; Springer International Publishing: Cham, Switzerland, 2019; pp. 230–238. [Google Scholar]

- Bönsch, A.; Hashem, D.; Ehret, J.; Kuhlen, T.W. Being Guided or Having Exploratory Freedom: User Preferences of a Virtual Agent’s Behavior in a Museum. In Proceedings of the 21st ACM International Conference on Intelligent Virtual Agents, Virtual, 14–17 September 2021; pp. 33–40.

- Stefanidi, E.; Partarakis, N.; Zabulis, X.; Zikas, P.; Papagiannakis, G.; Magnenat-Thalmann, N. TooltY: An approach for the combination of motion capture and 3D reconstruction to present tool usage in 3D environments. In Intelligent Scene Modeling and Human-Computer Interaction; Springer International Publishing: Cham, Switzerland, 2021; pp. 165–180.

- Stefanidi, E.; Partarakis, N.; Zabulis, X.; Papagiannakis, G. An approach for the visualization of crafts and machine usage in virtual environments. In Proceedings of the 13th International Conference on Advances in Computer-Human Interactions, Valencia, Spain, 21–25 November 2020; pp. 21–25.

- Amadou, N.; Haque, K.I.; Yumak, Z. Effect of Appearance and Animation Realism on the Perception of Emotionally Expressive Virtual Humans. In Proceedings of the 23rd ACM International Conference on Intelligent Virtual Agents, Würzburg, Germany, 19–22 September 2023; pp. 1–8.

- Konijn, E.A.; Van Vugt, H.C. Emotions in Mediated Interpersonal Communication: Toward modeling emotion in virtual humans. In Mediated Interpersonal Communication; Routledge: London, UK, 2008; pp. 114–144.

- De Melo, C.; Paiva, A. Multimodal expression in virtual humans. Comput. Animat. Virtual Worlds 2006, 17, 239–248.

- Bouchard, S.; Bernier, F.; Boivin, E.; Dumoulin, S.; Laforest, M.; Guitard, T.; Robillard, G.; Monthuy-Blanc, J.; Renaud, P. Empathy toward virtual humans depicting a known or unknown person expressing pain. Cyberpsychol. Behav. Soc. Netw. 2013, 16, 61–71.

- Garcia, A.S.; Fernandez-Sotos, P.; Vicente-Querol, M.A.; Lahera, G.; Rodriguez-Jimenez, R.; Fernandez-Caballero, A. Design of reliable virtual human facial expressions and validation by healthy people. Integr. Comput.-Aided Eng. 2020, 27, 287–299.

- Torresani, A.; Remondino, F. Videogrammetry vs photogrammetry for heritage 3D reconstruction. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 1157–1162.

- Do, P.N.B.; Nguyen, Q.C. A review of stereo-photogrammetry method for 3-D reconstruction in computer vision. In Proceedings of the 2019 19th International Symposium on Communications and Information Technologies (ISCIT), Ho Chi Minh City, Vietnam, 25–27 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 138–143.

- Linder, W. Digital Photogrammetry: Theory and Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2013.

- Jo, Y.H.; Hong, S. Three-dimensional digital documentation of cultural heritage site based on the convergence of terrestrial laser scanning and unmanned aerial vehicle photogrammetry. ISPRS Int. J. Geo-Inf. 2019, 8, 53.

- Ulvi, A. Documentation, Three-Dimensional (3D) Modelling and visualization of cultural heritage by using Unmanned Aerial Vehicle (UAV) photogrammetry and terrestrial laser scanners. Int. J. Remote Sens. 2021, 42, 1994–2021.

- Nuttens, T.; De Maeyer, P.; De Wulf, A.; Goossens, R.; Stal, C. Terrestrial laser scanning and digital photogrammetry for cultural heritage: An accuracy assessment. In Proceedings of the FIG Working Week, Marrakech, Morocco, 18–22 May 2011; pp. 1–10.

- Reutebuch, S.E.; Andersen, H.E.; McGaughey, R.J. Light detection and ranging (LIDAR): An emerging tool for multiple resource inventory. J. For. 2005, 103, 286–292.

- Rocchini, C.; Cignoni, P.; Montani, C.; Pingi, P.; Scopigno, R. A low cost 3D scanner based on structured light. In Computer Graphics Forum; Blackwell Publishers Ltd.: Oxford, UK; Boston, MA, USA, 2001; Volume 20, pp. 299–308.

- McPherron, S.P.; Gernat, T.; Hublin, J.J. Structured light scanning for high-resolution documentation of in situ archaeological finds. J. Archaeol. Sci. 2009, 36, 19–24.

- Furukawa, Y.; Hernández, C. Multi-view stereo: A tutorial. Found. Trends® Comput. Graph. Vis. 2015, 9, 1–148.

- Seitz, S.M.; Curless, B.; Diebel, J.; Scharstein, D.; Szeliski, R. A comparison and evaluation of multi-view stereo reconstruction algorithms. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; IEEE: Piscataway, NJ, USA, 2006; Volume 1, pp. 519–528.

- Giancola, S.; Valenti, M.; Sala, R. A Survey on 3D Cameras: Metrological Comparison of Time-of-Flight, Structured-Light and Active Stereoscopy Technologies; Springer Nature: Berlin/Heidelberg, Germany, 2018.

- Sarbolandi, H.; Lefloch, D.; Kolb, A. Kinect range sensing: Structured-light versus Time-of-Flight Kinect. Comput. Vis. Image Underst. 2015, 139, 1–20.

- Bruno, F.; Bruno, S.; De Sensi, G.; Luchi, M.L.; Mancuso, S.; Muzzupappa, M. From 3D reconstruction to virtual reality: A complete methodology for digital archaeological exhibition. J. Cult. Herit. 2010, 11, 42–49.

- Llull, C.; Baloian, N.; Bustos, B.; Kupczik, K.; Sipiran, I.; Baloian, A. Evaluation of 3D Reconstruction for Cultural Heritage Applications. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 1642–1651.

- Spallone, R.; Lamberti, F.; Guglielminotti Trivel, M.; Ronco, F.; Tamantini, S. 3D reconstruction and presentation of cultural heritage: AR and VR experiences at the Museo d’Arte Orientale di Torino. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 46, 697–704.

- Ferdani, D.; Fanini, B.; Piccioli, M.C.; Carboni, F.; Vigliarolo, P. 3D reconstruction and validation of historical background for immersive VR applications and games: The case study of the Forum of Augustus in Rome. J. Cult. Herit. 2020, 43, 129–143.

- Portalés, C.; Lerma, J.L.; Pérez, C. Photogrammetry and augmented reality for cultural heritage applications. Photogramm. Rec. 2009, 24, 316–331.

- Spallone, R.; Lamberti, F.; Olivieri, L.M.; Ronco, F.; Castagna, L. AR and VR for Enhancing Museums’ Heritage through 3D Reconstruction of Fragmented Statue and Architectural Context. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 46, 473–480.

- Faro Focus. Available online: https://www.faro.com/en/Products/Hardware/Focus-Laser-Scanners (accessed on 18 January 2024).

- Trnio 3D Scanner. Available online: https://www.trnio.com/ (accessed on 18 January 2024).

- Pix4D. Available online: https://www.pix4d.com/ (accessed on 18 January 2024).

- Bucchi, A.; Luengo, J.; Fuentes, R.; Arellano-Villalón, M.; Lorenzo, C. Recommendations for Improving Photo Quality in Close Range Photogrammetry, Exemplified in Hand Bones of Chimpanzees and Gorillas. Int. J. Morphol. 2020, 38, 348–355.

- Insta360. Available online: https://www.insta360.com/product/insta360-oners/1inch-360 (accessed on 25 January 2024).

- Play.ht. Available online: https://play.ht (accessed on 18 January 2024).

- Reallusion Character Creator. Available online: https://www.reallusion.com/character-creator/ (accessed on 18 January 2024).

- Unity. Available online: https://unity.com/ (accessed on 18 January 2024).

- Rokoko. Available online: https://www.rokoko.com (accessed on 18 January 2024).

- Salsa Lip-Sync Suite. Available online: https://assetstore.unity.com/packages/tools/animation/salsa-lipsync-suite-148442 (accessed on 18 January 2024).

- Oculus Quest 2. Available online: https://www.meta.com/quest/products/quest-2/?utm_source=www.google.com&utm_medium=oculusredirect (accessed on 18 January 2024).

- Oculus XR Plugin. Available online: https://developer.oculus.com/documentation/unity/unity-xr-plugin/ (accessed on 18 January 2024).

- OpenXR. Available online: https://www.khronos.org/openxr/ (accessed on 18 January 2024).

- XR Interaction Toolkit. Available online: https://docs.unity3d.com/Packages/com.unity.xr.interaction.toolkit@2.5/manual/index.html (accessed on 18 January 2024).

- XR Plugin Management. Available online: https://docs.unity3d.com/Manual/com.unity.xr.management.html (accessed on 18 January 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).