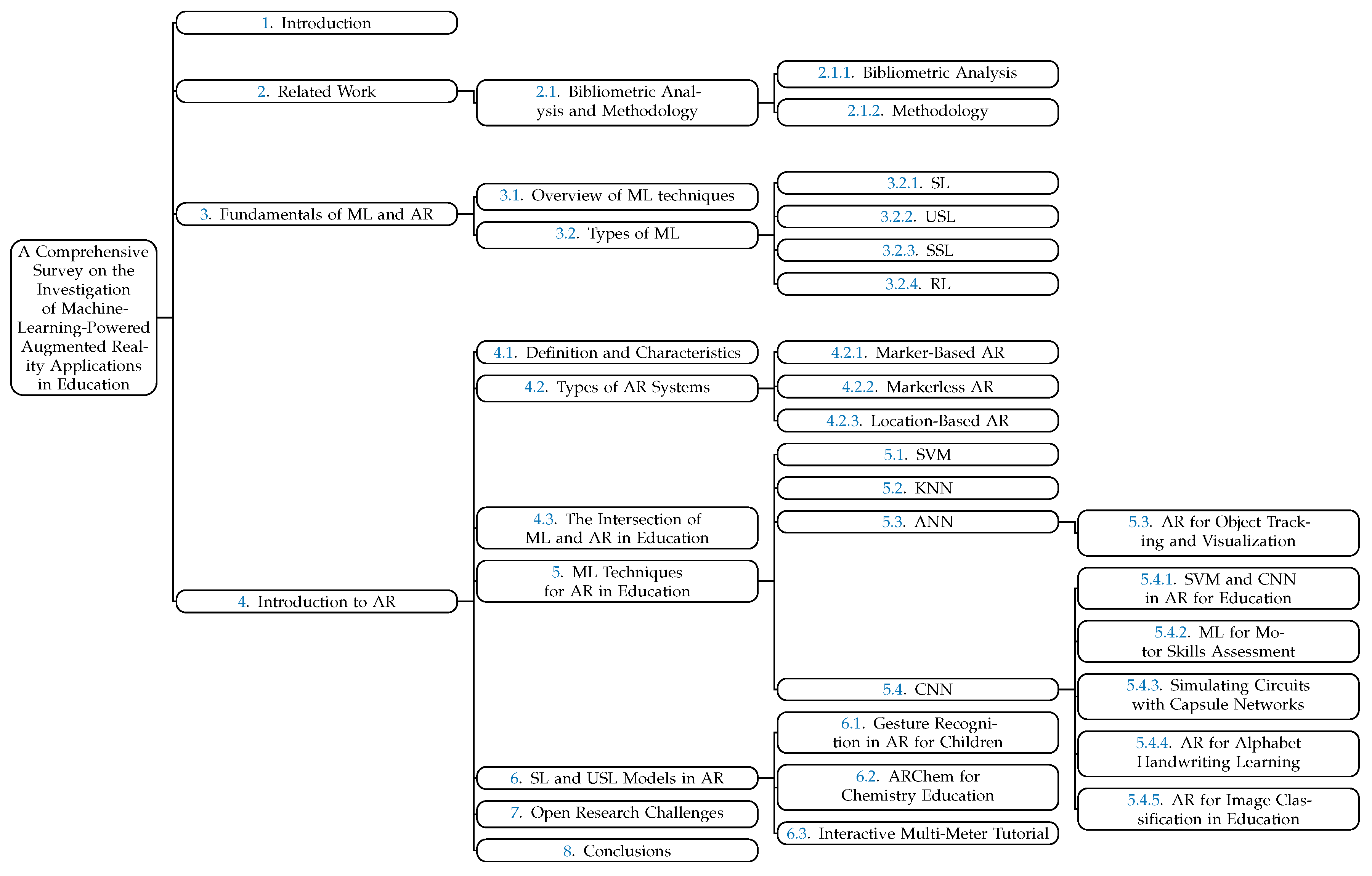

A Comprehensive Survey on the Investigation of Machine-Learning-Powered Augmented Reality Applications in Education

Abstract

1. Introduction

- ML techniques in AR applications are discussed for several areas of education.

- An analysis of related works is presented in detail.

- ML models for AR applications, such as the support vector machine (SVM), convolutional neural network (CNN), and artificial neural network (ANN), are discussed.

- A detailed analysis of ML models in the context of AR is presented.

- A set of challenges and possible solutions is presented.

- Research gaps and future directions are discussed in several fields of education involving ML-based AR frameworks.

- Emerging trends and developments in the use of ML and AR in educational settings are identified and analyzed.

- Insights are provided into areas that need more research or improvement.

- Insights are provided to help guide future research and development activities in the sector.

2. Related Work

- How advanced are augmented reality applications in education today?

- How is machine learning being integrated into educational augmented reality applications?

- Compared with conventional approaches, how effective and efficient are machine-learning-powered augmented reality applications at improving learning outcomes?

- What are the primary elements influencing student and instructor user experiences with machine-learning-powered augmented reality in education?

- What technical challenges arise when combining machine learning and augmented reality in educational settings?

- What emerging trends in the development and deployment of machine-learning-powered augmented reality applications in education are anticipated?

2.1. Bibliometric Analysis and Methodology

2.1.1. Bibliometric Analysis

2.1.2. Methodology

Algorithm 1. Article selection criteria

- Require: Search on databases
- Ensure: Articles from 2017 to 2023
- while keyword: Augmented Reality, Machine Learning, Education do
  - if the article discusses an ML-assisted AR application, or evaluates performance, or analyzes an application in education then
    - Consider for analysis
  - else if the article does not discuss ML then
    - Exclude from the analysis
  - end if
- end while
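The selection criteria in Algorithm 1 can be sketched as a small screening function. This is a minimal illustration rather than the authors' actual tooling: the `Article` record and its field names are hypothetical, the `|` separators in the algorithm are read as a logical "or", and treating articles outside the 2017 to 2023 window as excluded is an assumption. Algorithm 1 does not say what happens to an article that discusses ML but meets none of the three inclusion conditions, so the sketch reports such articles as undecided.

```python
from dataclasses import dataclass

# Year window taken from Algorithm 1 ("Article from 2017 to 2023").
YEAR_MIN, YEAR_MAX = 2017, 2023


@dataclass
class Article:
    """Hypothetical record for one search hit; field names are assumptions."""
    title: str
    year: int
    discusses_ml_assisted_ar: bool = False
    evaluates_performance: bool = False
    analyzes_education_application: bool = False
    discusses_ml: bool = True


def screen_articles(articles):
    """Sort article titles into selected / excluded / undecided buckets."""
    decisions = {"selected": [], "excluded": [], "undecided": []}
    for article in articles:
        if not (YEAR_MIN <= article.year <= YEAR_MAX):
            # Assumption: out-of-window articles are dropped up front.
            decisions["excluded"].append(article.title)
        elif (article.discusses_ml_assisted_ar
              or article.evaluates_performance
              or article.analyzes_education_application):
            # Any one of the three conditions is enough ("|" read as "or").
            decisions["selected"].append(article.title)
        elif not article.discusses_ml:
            decisions["excluded"].append(article.title)
        else:
            # Not covered by Algorithm 1; left for manual review.
            decisions["undecided"].append(article.title)
    return decisions


if __name__ == "__main__":
    hits = [
        Article("ML-assisted AR lab trainer", 2022, discusses_ml_assisted_ar=True),
        Article("AR textbook without ML", 2019, discusses_ml=False),
    ]
    print(screen_articles(hits))
```

Keeping the three buckets separate makes the gap in the written criteria visible instead of silently folding borderline articles into one side.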

3. Fundamentals of ML and AR

3.1. Overview of ML Techniques

3.2. Types of ML

3.2.1. SL

3.2.2. UL

3.2.3. SSL

3.2.4. RL

4. Introduction to AR

4.1. Definition and Characteristics

4.2. Types of AR Systems

4.2.1. Marker-Based AR

4.2.2. Markerless AR

4.2.3. Location-Based AR

4.3. The Intersection of ML and AR in Education

5. ML Techniques for AR in Education

5.1. SVM

5.2. KNN

5.3. ANN

AR for Object Tracking and Visualization

5.4. CNN

5.4.1. SVM and CNN in AR for Education

5.4.2. ML for Motor Skills Assessment

5.4.3. Simulating Circuits with Capsule Networks

5.4.4. AR for Alphabet Handwriting Learning

5.4.5. AR for Image Classification in Education

6. SL and UL Models in AR

6.1. Gesture Recognition in AR for Children

6.2. ARChem for Chemistry Education

6.3. Interactive Multi-Meter Tutorial

7. Open Research Challenges

- The accuracy and speed of object recognition have improved through the use of DL models and AR target databases [85].

- The Vuforia software (v9.8) has been instrumental in tracking AR objects and aligning them with real-world scenes [88].

- Improving the performance of ML models in AR relies heavily on the quality and quantity of training data.

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| ANN | Artificial neural network |
| AR | Augmented reality |
| CNN | Convolutional neural network |
| DL | Deep learning |
| KNN | K-nearest neighbors |
| ML | Machine learning |
| SVM | Support vector machine |
| SL | Supervised learning |
| UL | Unsupervised learning |
| RL | Reinforcement learning |
| SSL | Semi-supervised learning |
| VR | Virtual reality |
| DT | Decision tree |
| LSTM | Long short-term memory |
| SDK | Software development kit |
| SMILES | Simplified molecular input line entry system |
| SOMs | Self-organizing maps |
| GANs | Generative adversarial networks |
| DBNs | Deep belief networks |
| EEG | Electroencephalogram |
| DAN | Deep adversarial networks |
| TDA | Temporal difference algorithms |
| DRL | Deep reinforcement learning |

References

- Garzón, J.; Acevedo, J. Meta-analysis of the impact of Augmented Reality on students’ learning gains. Educ. Res. Rev. 2019, 27, 244–260.

- Wu, H.K.; Lee, S.W.Y.; Chang, H.Y.; Liang, J.C. Current status, opportunities and challenges of augmented reality in education. Comput. Educ. 2013, 62, 41–49.

- Ren, Y.; Yang, Y.; Chen, J.; Zhou, Y.; Li, J.; Xia, R.; Yang, Y.; Wang, Q.; Su, X. A scoping review of deep learning in cancer nursing combined with augmented reality: The era of intelligent nursing is coming. Asia-Pac. J. Oncol. Nurs. 2022, 9, 100135.

- Menghani, G. Efficient deep learning: A survey on making deep learning models smaller, faster, and better. ACM Comput. Surv. 2023, 55, 1–37.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Gheisari, M.; Ebrahimzadeh, F.; Rahimi, M.; Moazzamigodarzi, M.; Liu, Y.; Dutta Pramanik, P.K.; Heravi, M.A.; Mehbodniya, A.; Ghaderzadeh, M.; Feylizadeh, M.R.; et al. Deep learning: Applications, architectures, models, tools, and frameworks: A comprehensive survey. CAAI Trans. Intell. Technol. 2023, 8, 581–606.

- Jamil, S.; Jalil Piran, M.; Kwon, O.J. A comprehensive survey of transformers for computer vision. Drones 2023, 7, 287.

- Hurst, W.; Mendoza, F.R.; Tekinerdogan, B. Augmented reality in precision farming: Concepts and applications. Smart Cities 2021, 4, 1454–1468.

- Kovoor, J.G.; Gupta, A.K.; Gladman, M.A. Validity and effectiveness of augmented reality in surgical education: A systematic review. Surgery 2021, 170, 88–98.

- Lu, J.; Cuff, R.F.; Mansour, M.A. Simulation in surgical education. Am. J. Surg. 2021, 221, 509–514.

- Keller, D.S.; Grossman, R.C.; Winter, D.C. Choosing the new normal for surgical education using alternative platforms. Surgery 2020, 38, 617–622.

- Zheng, T.; Xie, W.; Xu, L.; He, X.; Zhang, Y.; You, M.; Yang, G.; Chen, Y. A machine learning-based framework to identify type 2 diabetes through electronic health records. Int. J. Med. Inform. 2017, 97, 120–127.

- Salari, N.; Hosseinian-Far, A.; Mohammadi, M.; Ghasemi, H.; Khazaie, H.; Daneshkhah, A.; Ahmadi, A. Detection of sleep apnea using Machine learning algorithms based on ECG Signals: A comprehensive systematic review. Expert Syst. Appl. 2022, 187, 115950.

- Khandelwal, P.; Srinivasan, K.; Roy, S.S. Surgical education using artificial intelligence, augmented reality and machine learning: A review. In Proceedings of the 2019 IEEE International Conference on Consumer Electronics-Taiwan (ICCE-TW), Yilan, Taiwan, 20–22 May 2019; pp. 1–2.

- Soltani, P.; Morice, A.H. Augmented reality tools for sports education and training. Comput. Educ. 2020, 155, 103923.

- Radu, I. Why should my students use AR? A comparative review of the educational impacts of augmented-reality. In Proceedings of the 2012 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Atlanta, GA, USA, 5–8 November 2012; pp. 313–314.

- Burgsteiner, H.; Kandlhofer, M.; Steinbauer, G. Irobot: Teaching the basics of artificial intelligence in high schools. In Proceedings of the AAAI conference On Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

- Chiu, T.K. A holistic approach to the design of artificial intelligence (AI) education for K-12 schools. TechTrends 2021, 65, 796–807.

- Kim, J.; Shim, J. Development of an AR-based AI education app for non-majors. IEEE Access 2022, 10, 14149–14156.

- Bistaman, I.N.M.; Idrus, S.Z.S.; Abd Rashid, S. The use of augmented reality technology for primary school education in Perlis, Malaysia. J. Phys. Conf. Ser. 2018, 1019, 012064.

- Garzón, J. An overview of twenty-five years of augmented reality in education. Multimodal Technol. Interact. 2021, 5, 37.

- Lin, H.M.; Wu, J.Y.; Liang, J.C.; Lee, Y.H.; Huang, P.C.; Kwok, O.M.; Tsai, C.C. A review of using multilevel modeling in e-learning research. Comput. Educ. 2023, 198, 104762.

- Cho, J.; Rahimpour, S.; Cutler, A.; Goodwin, C.R.; Lad, S.P.; Codd, P. Enhancing reality: A systematic review of augmented reality in neuronavigation and education. World Neurosurg. 2020, 139, 186–195.

- Fourman, M.S.; Ghaednia, H.; Lans, A.; Lloyd, S.; Sweeney, A.; Detels, K.; Dijkstra, H.; Oosterhoff, J.H.; Ramsey, D.C.; Do, S.; et al. Applications of augmented and virtual reality in spine surgery and education: A review. In Seminars in Spine Surgery; Elsevier: Amsterdam, The Netherlands, 2021; Volume 33, p. 100875.

- Theodoropoulos, A.; Lepouras, G. Augmented Reality and programming education: A systematic review. Int. J. Child-Comput. Interact. 2021, 30, 100335.

- Gouveia, P.F.; Luna, R.; Fontes, F.; Pinto, D.; Mavioso, C.; Anacleto, J.; Timóteo, R.; Santinha, J.; Marques, T.; Cardoso, F.; et al. Augmented Reality in Breast Surgery Education. Breast Care 2023, 18, 182–186.

- Urbina Coronado, P.D.; Demeneghi, J.A.A.; Ahuett-Garza, H.; Orta Castañon, P.; Martínez, M.M. Representation of machines and mechanisms in augmented reality for educative use. Int. J. Interact. Des. Manuf. (IJIDeM) 2022, 16, 643–656.

- Ahmed, N.; Lataifeh, M.; Alhamarna, A.F.; Alnahdi, M.M.; Almansori, S.T. LeARn: A Collaborative Learning Environment using Augmented Reality. In Proceedings of the 2021 IEEE 2nd International Conference on Human-Machine Systems (ICHMS), Magdeburg, Germany, 8–10 September 2021; pp. 1–4.

- Zhu, J.; Ji, S.; Yu, J.; Shao, H.; Wen, H.; Zhang, H.; Xia, Z.; Zhang, Z.; Lee, C. Machine learning-augmented wearable triboelectric human-machine interface in motion identification and virtual reality. Nano Energy 2022, 103, 107766.

- Iqbal, M.Z.; Mangina, E.; Campbell, A.G. Exploring the real-time touchless hand interaction and intelligent agents in augmented reality learning applications. In Proceedings of the 2021 7th International Conference of the Immersive Learning Research Network (iLRN), Virtual Conference, 17 May–10 June 2021; pp. 1–8.

- Iqbal, M.Z.; Mangina, E.; Campbell, A.G. Current challenges and future research directions in augmented reality for education. Multimodal Technol. Interact. 2022, 6, 75.

- Gupta, A.; Nisar, H. An Improved Framework to Assess the Evaluation of Extended Reality Healthcare Simulators using Machine Learning. In Proceedings of the 2022 IEEE/ACM Conference on Connected Health: Applications, Systems and Engineering Technologies (CHASE), Arlington, VA, USA, 17–19 November 2022; pp. 188–192.

- Martins, R.M.; Gresse Von Wangenheim, C. Findings on Teaching Machine Learning in High School: A Ten-Year Systematic Literature Review. Inform. Educ. 2022, 22, 421–440.

- Janiesch, C.; Zschech, P.; Heinrich, K. Machine learning and deep learning. Electron. Mark. 2021, 31, 685–695.

- Lou, G.; Shi, H. Face image recognition based on convolutional neural network. China Commun. 2020, 17, 117–124.

- William, P.; Gade, R.; esh Chaudhari, R.; Pawar, A.; Jawale, M. Machine Learning based Automatic Hate Speech Recognition System. In Proceedings of the 2022 International Conference on Sustainable Computing and Data Communication Systems (ICSCDS), Erode, India, 7–9 April 2022; pp. 315–318.

- Polyakov, E.; Mazhanov, M.; Rolich, A.; Voskov, L.; Kachalova, M.; Polyakov, S. Investigation and development of the intelligent voice assistant for the Internet of Things using machine learning. In Proceedings of the 2018 Moscow Workshop on Electronic and Networking Technologies (MWENT), Moscow, Russia, 14–16 March 2018; pp. 1–5.

- Tuncali, C.E.; Fainekos, G.; Ito, H.; Kapinski, J. Simulation-based adversarial test generation for autonomous vehicles with machine learning components. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 1555–1562.

- Kühl, N.; Schemmer, M.; Goutier, M.; Satzger, G. Artificial intelligence and machine learning. Electron. Mark. 2022, 32, 2235–2244.

- Shinde, P.P.; Shah, S. A review of machine learning and deep learning applications. In Proceedings of the 2018 Fourth International Conference on Computing Communication Control and Automation (ICCUBEA), Pune, India, 16–18 August 2018; pp. 1–6.

- Ray, S. A quick review of machine learning algorithms. In Proceedings of the 2019 International Conference on Machine Learning, Big Data, Cloud and Parallel Computing (COMITCon), Faridabad, India, 14–16 February 2019; pp. 35–39.

- Jamil, S.; Piran, M.J.; Rahman, M.; Kwon, O.J. Learning-driven lossy image compression: A comprehensive survey. Eng. Appl. Artif. Intell. 2023, 123, 106361.

- Sarker, I.H.; Kayes, A.; Badsha, S.; Alqahtani, H.; Watters, P.; Ng, A. Cybersecurity data science: An overview from machine learning perspective. J. Big Data 2020, 7, 1–29.

- Uddin, S.; Khan, A.; Hossain, M.E.; Moni, M.A. Comparing different supervised machine learning algorithms for disease prediction. BMC Med. Inform. Decis. Mak. 2019, 19, 1–16.

- Saravanan, R.; Sujatha, P. A state of art techniques on machine learning algorithms: A perspective of supervised learning approaches in data classification. In Proceedings of the 2018 Second International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 14–15 June 2018; pp. 945–949.

- Sarker, I.H. Machine learning: Algorithms, real-world applications and research directions. SN Comput. Sci. 2021, 2, 160.

- Dike, H.U.; Zhou, Y.; Deveerasetty, K.K.; Wu, Q. Unsupervised learning based on artificial neural network: A review. In Proceedings of the 2018 IEEE International Conference on Cyborg and Bionic Systems (CBS), Shenzhen, China, 25–27 October 2018; pp. 322–327.

- Van Engelen, J.E.; Hoos, H.H. A survey on semi-supervised learning. Mach. Learn. 2020, 109, 373–440.

- Aradi, S. Survey of deep reinforcement learning for motion planning of autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2020, 23, 740–759.

- Qiang, W.; Zhongli, Z. Reinforcement learning model, algorithms and its application. In Proceedings of the 2011 International Conference on Mechatronic Science, Electric Engineering and Computer (MEC), Jilin, China, 19–22 August 2011; pp. 1143–1146.

- Khan, T.; Johnston, K.; Ophoff, J. The impact of an augmented reality application on learning motivation of students. Adv. Hum.-Comput. Interact. 2019, 2019, 7208494.

- Sirakaya, M.; Alsancak Sirakaya, D. Trends in educational augmented reality studies: A systematic review. Malays. Online J. Educ. Technol. 2018, 6, 60–74.

- Tzima, S.; Styliaras, G.; Bassounas, A. Augmented reality applications in education: Teachers point of view. Educ. Sci. 2019, 9, 99.

- Wei, X.; Weng, D.; Liu, Y.; Wang, Y. Teaching based on augmented reality for a technical creative design course. Comput. Educ. 2015, 81, 221–234.

- Holley, D.; Hobbs, M. Augmented reality for education. In Encyclopedia of Educational Innovation; Springer: Singapore, 2019.

- Koutromanos, G.; Sofos, A.; Avraamidou, L. The use of augmented reality games in education: A review of the literature. Educ. Media Int. 2015, 52, 253–271.

- Scrivner, O.; Madewell, J.; Buckley, C.; Perez, N. Augmented reality digital technologies (ARDT) for foreign language teaching and learning. In Proceedings of the 2016 Future Technologies Conference (FTC), San Francisco, CA, USA, 6–7 December 2016; pp. 395–398.

- Radosavljevic, S.; Radosavljevic, V.; Grgurovic, B. The potential of implementing augmented reality into vocational higher education through mobile learning. Interact. Learn. Environ. 2020, 28, 404–418.

- Akçayır, M.; Akçayır, G. Advantages and challenges associated with augmented reality for education: A systematic review of the literature. Educ. Res. Rev. 2017, 20, 1–11.

- Pochtoviuk, S.; Vakaliuk, T.; Pikilnyak, A. Possibilities of application of augmented reality in different branches of education. Educ. Dimens. 2020, 54, 179–197.

- Akçayır, M.; Akçayır, G.; Pektas, H.; Ocak, M. AR in science laboratories: The effects of AR on university students’ laboratory skills and attitudes toward science laboratories. Comput. Hum. Behav. 2016, 57, 334–342.

- Lee, S.; Shetty, A.S.; Cavuoto, L.A. Modeling of Learning Processes Using Continuous-Time Markov Chain for Virtual-Reality-Based Surgical Training in Laparoscopic Surgery. IEEE Trans. Learn. Technol. 2023, 17, 462–473.

- Martin Sagayam, K.; Ho, C.C.; Henesey, L.; Bestak, R. 3D scenery learning on solar system by using marker based augmented reality. In Proceedings of the 4th International Conference of the Virtual and Augmented Reality in Education, VARE 2018, Budapest, Hungary, 17–19 September 2018; pp. 139–143.

- Brito, P.Q.; Stoyanova, J. Marker versus markerless augmented reality. Which has more impact on users? Int. J. Hum.-Comput. Interact. 2018, 34, 819–833.

- Yu, J.; Denham, A.R.; Searight, E. A systematic review of augmented reality game-based Learning in STEM education. Educ. Technol. Res. Dev. 2022, 70, 1169–1194.

- Bouaziz, R.; Alhejaili, M.; Al-Saedi, R.; Mihdhar, A.; Alsarrani, J. Using Marker Based Augmented Reality to teach autistic eating skills. In Proceedings of the 2020 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), Utrecht, The Netherlands, 14–18 December 2020; pp. 239–242.

- Liu, B.; Tanaka, J. Virtual marker technique to enhance user interactions in a marker-based AR system. Appl. Sci. 2021, 11, 4379.

- Sharma, S.; Kaikini, Y.; Bhodia, P.; Vaidya, S. Markerless augmented reality based interior designing system. In Proceedings of the 2018 International Conference on Smart City and Emerging Technology (ICSCET), Mumbai, India, 5 January 2018; pp. 1–5.

- Hui, J. Approach to the interior design using augmented reality technology. In Proceedings of the 2015 Sixth International Conference on Intelligent Systems Design and Engineering Applications (ISDEA), Guizhou, China, 18–19 August 2015; pp. 163–166.

- Georgiou, Y.; Kyza, E.A. The development and validation of the ARI questionnaire: An instrument for measuring immersion in location-based augmented reality settings. Int. J. Hum.-Comput. Stud. 2017, 98, 24–37.

- Kleftodimos, A.; Moustaka, M.; Evagelou, A. Location-Based Augmented Reality for Cultural Heritage Education: Creating Educational, Gamified Location-Based AR Applications for the Prehistoric Lake Settlement of Dispilio. Digital 2023, 3, 18–45.

- Burman, I.; Som, S. Predicting students academic performance using support vector machine. In Proceedings of the 2019 Amity International Conference on Artificial Intelligence (AICAI), Dubai, United Arab Emirates, 4–6 February 2019; pp. 756–759.

- Mohamed, A.E. Comparative study of four supervised machine learning techniques for classification. Int. J. Appl. 2017, 7, 1–15.

- Lopez-Bernal, D.; Balderas, D.; Ponce, P.; Molina, A. Education 4.0: Teaching the basics of KNN, LDA and simple perceptron algorithms for binary classification problems. Future Internet 2021, 13, 193.

- Chen, C.H.; Wu, C.L.; Lo, C.C.; Hwang, F.J. An augmented reality question answering system based on ensemble neural networks. IEEE Access 2017, 5, 17425–17435.

- K, P.; N, B.; D, M.; S, H.; M, K.; Kumar, V. Artificial Neural Networks in Healthcare for Augmented Reality. In Proceedings of the 2022 Fourth International Conference on Cognitive Computing and Information Processing (CCIP), Bengaluru, India, 23–24 December 2022; pp. 1–5.

- Dash, A.K.; Behera, S.K.; Dogra, D.P.; Roy, P.P. Designing of marker-based augmented reality learning environment for kids using convolutional neural network architecture. Displays 2018, 55, 46–54.

- Rodríguez, A.O.R.; Riaño, M.A.; Gaona-García, P.A.; Montenegro-Marín, C.E.; Sarría, Í. Image Classification Methods Applied in Immersive Environments for Fine Motor Skills Training in Early Education. Int. J. Interact. Multimed. Artif. Intell. 2019, 5, 151–158.

- Alhalabi, M.; Ghazal, M.; Haneefa, F.; Yousaf, J.; El-Baz, A. Smartphone Handwritten Circuits Solver Using Augmented Reality and Capsule Deep Networks for Engineering Education. Educ. Sci. 2021, 11, 661.

- Opu, M.N.I.; Islam, M.R.; Kabir, M.A.; Hossain, M.S.; Islam, M.M. Learn2Write: Augmented Reality and Machine Learning-Based Mobile App to Learn Writing. Computers 2022, 11, 4.

- Le, H.; Nguyen, M.; Nguyen, Q.; Nguyen, H.; Yan, W.Q. Automatic Data Generation for Deep Learning Model Training of Image Classification used for Augmented Reality on Pre-school Books. In Proceedings of the 2020 International Conference on Multimedia Analysis and Pattern Recognition (MAPR), Ha Noi, Vietnam, 8–9 October 2020; pp. 1–5.

- Hanafi, A.; Elaachak, L.; Bouhorma, M. Machine learning based augmented reality for improved learning application through object detection algorithms. Int. J. Electr. Comput. Eng. (IJECE) 2023, 13, 1724–1733.

- Sun, M.; Wu, X.; Fan, Z.; Dong, L. Augmented reality-based educational design for children. Int. J. Emerg. Technol. Learn. 2019, 14, 51.

- Menikrama, M.; Liyanagunawardhana, C.; Amarasekara, H.; Ramasinghe, M.; Weerasinghe, L.; Weerasinghe, I. ARChem: Augmented Reality Chemistry Lab. In Proceedings of the 2021 IEEE 12th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 27–30 October 2021; pp. 0276–0280.

- Estrada, J.; Paheding, S.; Yang, X.; Niyaz, Q. Deep-Learning-Incorporated Augmented Reality Application for Engineering Lab Training. Appl. Sci. 2022, 12, 5159.

- Salman, S.M.; Sitompul, T.A.; Papadopoulos, A.V.; Nolte, T. Fog Computing for Augmented Reality: Trends, Challenges and Opportunities. In Proceedings of the 2020 IEEE International Conference on Fog Computing (ICFC), Sydney, NSW, Australia, 21–24 April 2020; pp. 56–63.

- Langfinger, M.; Schneider, M.; Stricker, D.; Schotten, H.D. Addressing security challenges in industrial augmented reality systems. In Proceedings of the 2017 IEEE 15th International Conference on Industrial Informatics (INDIN), Emden, Germany, 24–26 July 2017; pp. 299–304.

- Amara, K.; Aouf, A.; Kerdjidj, O.; Kennouche, H.; Djekoune, O.; Guerroudji, M.A.; Zenati, N.; Aouam, D. Augmented Reality for COVID-19 Aid Diagnosis: Ct-Scan segmentation based Deep Learning. In Proceedings of the 2022 7th International Conference on Image and Signal Processing and their Applications (ISPA), Mostaganem, Algeria, 8–9 May 2022; pp. 1–6.

- Supruniuk, K.; Andrunyk, V.; Chyrun, L. AR Interface for Teaching Students with Special Needs. In Proceedings of the COLINS, Lviv, Ukraine, 23–24 April 2020; pp. 1295–1308.

- Sakshuwong, S.; Weir, H.; Raucci, U.; Martínez, T.J. Bringing chemical structures to life with augmented reality, machine learning, and quantum chemistry. J. Chem. Phys. 2022, 156, 204801.

| Research | Year | Scope: AR | Scope: SVM | Scope: KNN | Scope: ANN | Scope: CNN | Contributions and Limitations |
|---|---|---|---|---|---|---|---|
| [1] | 2019 |  |  |  |  |  | Studied the medium’s effect on student learning gains. ML models for AR were not the focus. |
| [3] | 2022 |  |  |  |  |  | Focused on uses of AR and DL in cancer nursing. Not all ML models were discussed. |
| [8] | 2021 |  |  |  |  |  | Discussed AR in plant education for precision farming. Only conventional methods were discussed, not ML models. |
| [21] | 2021 |  |  |  |  |  | Overview of AR; description of three generations of AR in education; challenges of AR applications. |
| [14] | 2019 |  |  |  |  |  | Explored the combination of AR, AI, and ML for surgical education. |
| [22] | 2023 |  |  |  |  |  | Highlighted the application of hierarchical linear modeling (HLM) as a multilevel modeling technique in e-learning research. |
| [23] | 2020 |  |  |  |  |  | Surveyed current technologies and limitations in AR for neurosurgical training as an educational tool. |
| [24] | 2021 |  |  |  |  |  | Reviewed current clinical applications of AR in spine surgery and education. |
| [25] | 2021 |  |  |  |  |  | Studied the impact of AR on programming education, including its challenges and benefits for student learning. |
| This survey | 2024 |  |  |  |  |  | Focuses on ML models in AR for different fields of education, with the pros and cons of each model. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Khan, H.A.; Jamil, S.; Piran, M.J.; Kwon, O.-J.; Lee, J.-W. A Comprehensive Survey on the Investigation of Machine-Learning-Powered Augmented Reality Applications in Education. Technologies 2024, 12, 72. https://doi.org/10.3390/technologies12050072
