Abstract

This study compares the effectiveness of virtual reality (VR) and videoconferencing (VC) platforms for online learning. Comparative meta-analyses of 29 articles from 2003 to 2023 (15 for VR, 14 for VC) revealed that both technologies positively affect learning outcomes, with VR demonstrating a larger effect size (ES = 0.913) compared with VC (ES = 0.284). VR proved more beneficial in regular-sized classes, especially for natural science subjects, and excelled in experiential or collaborative learning environments. VC showed a greater impact in smaller classes, with significant variations depending on the program brand and camera options; it also favored natural science. These findings provide valuable guidance for educators and learners in choosing the most suitable technology for different online learning scenarios and shed light on future research in this field.

1. Introduction

Online learning has gained increasing attention from educators in the post-pandemic era for its potential to overcome temporal and spatial constraints, support real-time teacher–student interaction, and facilitate personalized learning []. However, online learning still faces challenges, including low participation [] and rapid fatigue []. Students may also experience psychological difficulties such as social isolation and a lack of interaction and emotional support [,]. Successful online learning depends on a range of supporting technologies [,,]. Technological advances, from artificial intelligence-assisted instruction to virtual reality (VR), have greatly enriched the form and content of online learning. VR and videoconferencing (VC) platforms are two widely used technologies that support online learning.

VR for online learning typically refers to a three-dimensional virtual learning environment with immersion, interaction, and imagination created by the computer. It is generally classified into two categories based on the devices utilized. The first is called immersive VR, which uses head-mounted displays (HMDs) like Meta Quest and HTC Vive and allows for a fully immersive experience [,]. While full-immersion VR generally uses HMDs, there are notable exceptions, such as cave automatic virtual environment (CAVE) systems. These are room-sized setups in which the walls, floors, and ceilings are covered with projections that create an immersive virtual space without the need for head-mounted hardware []. The second type is non-immersive VR, which is also called desktop-based VR. The use of computers or displays with interactive devices (e.g., mouse, data gloves) can create a semi-immersive experience [,]. Platforms commonly applied in instruction such as Second Life and Virbela fall into this category. Many researchers have conducted experiments to investigate the impact of VR-enhanced online learning environments, and the results have shown that they can be more effective than traditional teaching modalities (e.g., lectures, texts, and videos) in increasing the sense of presence [,] and acquiring knowledge [,,].

VC platforms allow students in different locations to attend classes through synchronous online learning, which greatly enhances the accessibility and flexibility of online learning. Online communication has long suffered from delays and poor quality due to technical limitations; however, advances in internet technologies such as broadband, smart devices, and communication algorithms have made high-definition video communication a reality. Online learning supported by VC platforms has become a common teaching mode, and it played an irreplaceable role during the COVID-19 pandemic []. Commonly used VC platforms such as Zoom and Tencent Meeting became the preferred alternatives to face-to-face classes [,,]. Zoom has a global user base, while Tencent Meeting dominates the Chinese market, with a high usage rate and a diverse user group. Researchers have reported several advantages of online learning supported by VC platforms, including an unrestricted learning space [], convenient and effective communication [], and an enhanced sense of belonging and motivation [,].

The effectiveness of VR and VC platforms in online learning refers to measurable learning outcomes, which are commonly evaluated by standardized test instruments, observed student performance during learning activities, and samples of students’ work. However, the ways in which these technologies influence learning can be quite distinct, as each provides unique affordances and learner experiences. The benefits of VR in online learning are primarily reflected in its ability to create an immersive learning environment that enhances students’ sense of belonging and presence. Through immersive experiences, students feel as if they are in a real classroom or simulated scenario, which greatly encourages active and reflective learning. By contrast, VC platforms allow for real-time communication without the need for complex equipment or high costs: effective online learning can be achieved with just a mobile phone or laptop. VC platforms have become the preferred choice for many online courses due to their ease of use and widespread availability. A comparison of these two technologies would thus be valuable, given their differences.

Although VR and VC platforms are increasingly used in online learning, previous research often failed to assess and compare their effects comprehensively. The lack of an overall assessment of their effectiveness hinders our understanding of how these technologies impact students’ learning outcomes in terms of research context, technological features, and instructional design. Moreover, the absence of systematic comparisons between the two technologies poses challenges for educators in selecting the most suitable technology for specific teaching needs and contexts.

To address these limitations, this study conducted meta-analyses of relevant experimental studies on VR and VC platforms and systematically compared their respective advantages and limitations. The aim is to deepen understanding of the educational potential of both technologies and to provide practical implications for future technology selection. The following questions guided this study:

- RQ1: What is the overall effect of VR and VC platforms in enhancing online learning?

- RQ2: How do research context, technological features, and instructional design affect the learning effect?

2. Literature Review

2.1. Online Learning Supported by VR

VR has become prevalent in online learning due to its immersive three-dimensional environments and interactive capabilities. Shih [] defined VR as a technology that allows users to maneuver and engage within a computer-generated world, underscoring attributes such as presence and interactivity []. When users wear HMDs and enter the virtual world, they experience a sense of presence in the virtual environment []. Users can also interact with objects in the virtual environment through natural means of communication such as gestures, body movements, and language []. These features make VR applicable to various fields, including gaming, healthcare, and real estate, as well as online learning.

Continuous advances in VR have brought many benefits to online learning. First, several studies have experimentally demonstrated that VR can improve learning outcomes. Lakka et al. [] compared online VR-based physics laboratories with traditional laboratories and found that students in the VR condition showed a better understanding of complex concepts, thus improving learning outcomes. Similar findings have been reported across different disciplines [,]. Second, it has been claimed that VR can significantly enrich the online learning experience [,]. For example, Sai [] implemented a VR platform for online music learning and found that students’ academic achievement improved significantly. However, the application of VR in online learning is not without challenges. Users may encounter physical discomfort such as eye strain and motion sickness [].

However, these effects are not consistent across all contexts. Differences across disciplines and educational levels may partly account for variations in outcomes. VR has been shown to be particularly effective in natural science subjects, and learning outcomes have been found to vary across academic domains in both K–12 and higher education settings [,]. Technological features also contribute to these discrepancies: interactivity, visual fidelity, and level of immersion have been identified as critical to user engagement and knowledge retention [,]. Instructional design is another key factor. The effectiveness of VR-based environments varies with pedagogical strategy, with inquiry-based and collaborative models often producing stronger results than didactic approaches [].

In sum, VR can enhance online learning by improving learning outcomes and experiences, but it also poses problems that require proper setup and application. The impact of disciplinary domain, technological features, and instructional design on the effectiveness of VR-supported online learning also needs to be considered [].

2.2. Online Learning Supported by VC Platforms

VC platforms, which are characterized by real-time voice and video communication over the Internet, enable face-to-face interaction between teachers and students across diverse geographical locations []. Such platforms make it possible to conduct instructional sessions and disseminate knowledge as long as participants have a functional internet connection. The integration of VC technology has made online learning a practical and effective pedagogical approach. VC affordances for online learning are primarily reflected in the following aspects. First, VC platforms allow real-time interaction between teachers and students. Such immediacy encourages effective learning and active participation []. Second, VC platforms can provide both visual and acoustic stimuli. This multimodal approach helps students comprehend and retain learning material by allowing them to visualize complex ideas and knowledge points in the form of pictures, movies, charts, and other formats []. Teachers can also recreate classroom-like teaching situations through VC platforms, such as using a whiteboard, presenting objects, and leading group discussions, which improve students’ sense of engagement and immersion [].

Several studies have examined the effects of VC platforms on online learning. For instance, Banoor et al. [] found that students who participated in higher-order online learning activities improved their critical thinking and practical competencies, which led to better grades. Similarly, Alshaibani et al. [] used Moodle and Zoom as online learning tools for first-year university medical students and found that using a VC platform as an instructional tool significantly improved students’ performance. According to Lin [], student satisfaction with the course and the instructor increased significantly when the VC platform Zoom was used for online learning. Despite these advantages, researchers have also identified disadvantages of VC platforms. For example, Aguilera-Hermida [] found that using VC platforms (e.g., Zoom, Teams, Google) for online learning reduced college students’ cognitive engagement.

These mixed outcomes suggest that the effectiveness of VC-supported online learning is contingent on several moderating factors. Technological features such as camera use, screen sharing, and chat functionalities can influence student engagement and participation. For instance, the use of cameras and microphones may enhance immediacy and presence, yet may also raise privacy concerns or contribute to fatigue [,]. Pedagogical strategies also matter. VC-based learning is more effective when paired with structured, collaborative learning models such as problem-based learning or game-based planning tasks, which promote active interaction among learners [,]. Furthermore, the level of instructional support and teacher involvement plays a crucial role. VC platforms embedded within a well-supported community of inquiry framework have been shown to foster more effective collaboration and learner satisfaction [].

Overall, despite some reported negative effects, the use of VC platforms for online learning generally improves students’ learning performance, boosts their higher-order thinking and practical skills, and enhances academic achievement and satisfaction. The impact of VC platforms in supporting online learning can be affected by various factors, including the technology itself, the pedagogical approach, and teacher support.

2.3. Possible Moderator Variables

Studies on the effectiveness of VR and VC platforms in online learning have come to mixed conclusions, and the differences are likely due to a number of factors. We conducted meta-analyses of the existing literature to identify the variables that are frequently reported. Through this analysis, we identified three categories of possible moderator variables corresponding to the research questions: research context (sample size, discipline, and grade level), technological features (including equipment, camera option, and platform), and instructional design (including pedagogy and learning outcome).

2.3.1. Research Context

Sample size. Sample size is an important moderator variable. Researchers generally agree that an increase in class size reduces teacher–student interaction and, in turn, student satisfaction with online courses, which has a negative impact on student achievement [,]. Small classes, typically of 20–25 students, have been shown to produce more positive academic outcomes than larger classes [,]. Kingma and Keefe [] reported that students were most satisfied with online courses when class size was limited to 23 students. Based on this evidence, the present study adopted 23 as the threshold for defining a small class. For studies with a control group, a total sample size of 46 (twice the threshold) or fewer was considered small; for studies without a control group, a sample size of 23 or fewer was considered small, and any sample size above 23 was considered regular-sized.
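The class-size coding rule can be expressed as a small function. This is a minimal sketch for illustration: the function and constant names are hypothetical, and it assumes that `total_n` is the total number of participants reported in a study and that, with a control group, the total sample spans two classes.

```python
# Hypothetical helper illustrating the class-size coding rule (threshold of 23
# students, following Kingma and Keefe); names are illustrative only.
SMALL_CLASS_THRESHOLD = 23


def classify_sample_size(total_n: int, has_control_group: bool) -> str:
    """Return "small" or "regular" for a study's total sample size."""
    if total_n <= 0:
        raise ValueError("total_n must be a positive participant count")
    # Assumption: with a control group the total covers two classes, so the
    # cut-off doubles to 46; otherwise a single class is compared against 23.
    limit = 2 * SMALL_CLASS_THRESHOLD if has_control_group else SMALL_CLASS_THRESHOLD
    return "small" if total_n <= limit else "regular"


print(classify_sample_size(46, has_control_group=True))   # small
print(classify_sample_size(47, has_control_group=True))   # regular
print(classify_sample_size(30, has_control_group=False))  # regular
```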

Discipline. Discipline is a crucial moderator variable that has an impact on the effectiveness of technology-enhanced learning []. VR-related meta-analyses have been conducted in various disciplines, including science, mathematics, medicine, and other subjects [,]. Disciplines vary in terms of knowledge content, structure, and presentation, so when technology is applied to online learning, it may produce varying learning effects in different disciplines. VR may be more appropriate for some disciplines, while VC platforms may be more suitable for others. To investigate the reasons for the differences in the learning effects, this study considered discipline as a moderator variable and categorized it into four groups: natural science, humanities and social sciences, medical science, and engineering and technology sciences.

Grade level. Grade level can affect learning effectiveness [,]. It is important to consider the cognitive structures and abilities of students in different grades []. Younger students may find it easier to adapt to new technologies such as VR, while older students may be more disciplined and self-directed. This can affect their performance in technology-enhanced online learning environments. We therefore examined whether there were differences in learning outcomes between K–12 and college students to provide insights into choosing the appropriate online learning support technology.

2.3.2. VR Technological Features

Equipment. VR equipment may affect students’ immersion experience and learning outcomes. Devices such as HMDs, desktop systems, and VR glasses all offer unique features. HMDs create a highly immersive experience by encompassing the user’s field of view [,], which offers an engaging and realistic virtual environment that can facilitate learning and improve knowledge retention by providing a feeling of presence and a high degree of engagement [,]. Brands like HTC Vive (HTC Corporation, Taoyuan City, Taiwan) and Meta Quest (Meta Platforms, Inc., Menlo Park, CA, USA) are globally recognized for their advanced HMD technology. Desktops, meanwhile, enable users to experience virtual environments through interactive devices such as screens, keyboards, and mice. Although they may not offer the same level of immersion as HMDs, they provide a more user-friendly and cost-effective way to access virtual environments []. Because desktop VR does not fully isolate users from the real world, it allows a more gradual transition between real and virtual experiences. VR glasses, such as Google Cardboard (Google, Mountain View, CA, USA) and Samsung Gear VR (Samsung Electronics Co., Ltd., Suwon, South Korea), are mobile-based VR devices that create a virtual environment in front of the user’s eyes. They offer users a lighter and more convenient option than HMDs [,].

2.3.3. VC Technological Features

Camera option. The camera options for a VC platform can affect online learning. When the camera is turned on, teachers can assess students’ understanding and emotions based on their facial expressions to provide immediate feedback []. This can enhance students’ engagement and sense of community, which can lead to increased concentration and participation in class [,]. However, it is important to consider potential issues when deciding whether to turn on the camera []. In situations where the internet connection is weak, turning off the camera can ensure a smoother online learning experience []. Privacy is also a crucial concern, especially in sensitive environments such as dormitories or shared living spaces. Turning off the camera can provide students with a sense of security and comfort.

Platform. The platform used for the VC environment is a key moderating variable that affects learning outcomes. VC platforms differ in terms of interface design, functional features, and means of interaction. These factors may affect participants’ engagement, communication effectiveness, and continuance intention []. Some VC platforms offer high-definition video communication [], which allows remote students to see and hear their teachers’ expressions and voices more clearly. Other platforms offer rich interactive features—such as instant polling, screen sharing, and screen annotation [,]—that promote communication and collaboration among students and improve online learning efficiency and outcomes. This study therefore focused on the choice of VC platform and its potential impact on learning outcomes.

2.3.4. Instructional Design

Pedagogy. Pedagogy, which concerns how to teach and learn more effectively, is another variable that may affect the effect size. It covers the design of instructional activities for online learning, including how to enhance teacher–student interaction, increase participation, and develop student motivation. Research has shown significant differences in the effect of VR interventions across pedagogies []. Following the categorizations of Zhang et al. [] and Luo et al. [], studies were classified by pedagogy into five categories: inquiry-based learning, game-based learning, collaborative learning, direct instruction, and experiential learning.

Type of learning outcome. Learning outcomes are the changes in students’ knowledge, skills, and attitudes achieved after completing specific instructional activities. Clear learning outcomes can help students understand learning objectives and influence their choice of learning strategies and methods. Learning outcomes are also an important indicator of learning effectiveness. Previous studies have coded learning outcomes into three categories: knowledge-based, ability-based, and skills-based []. To enable more detailed analyses, we drew on the work of Zhang et al. [] and identified four essential types of learning outcomes: knowledge, behavior, skill, and affective outcomes.

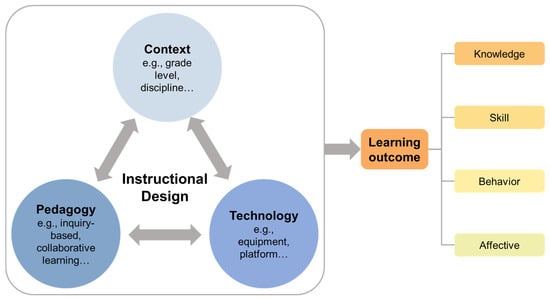

Building on the instructional design theoretical assumptions proposed by Reigeluth [], this study develops a conceptual framework that emphasizes the contextual nature of instruction (see Figure 1). Within this framework, instructional design functions as a key mechanism that mediates between pedagogical strategies and technological features, enabling context-sensitive decisions aimed at achieving effective learning outcomes [].

Figure 1. Conceptual framework for comparative meta-analyses.

3. Method

3.1. Search Strategies

We searched for journal articles on online learning supported by VR and VC platforms. To ensure comprehensive coverage, two prominent academic databases, Web of Science and Scopus, were consulted. The search terms used to identify literature on VR were (“virtual reality” OR “metaverse” OR “virtual world”) AND (“online learning” OR “online teaching” OR “online course” OR “distance learning”). For literature on VC platforms, the search terms were (“video conferencing” OR “Zoom” OR “Tencent meeting”) AND (“online learning” OR “online teaching” OR “online course” OR “distance learning”). The search was limited to peer-reviewed journal articles published in English between 1 January 2003 and 31 December 2023. In Web of Science, searches were limited to the Core Collection and the research area of Education & Educational Research, yielding 969 articles. In Scopus, 1352 articles were retrieved without restricting the search by subject area, as Scopus does not include a distinct “Education” classification. In total, 2321 articles (including duplicates) were initially identified for potential inclusion. Because few articles compare VR with VC platforms directly, we conducted two independent meta-analyses, one for each technology. This approach allowed the effect of each technology to be estimated against its own comparison conditions before the two sets of results were compared.

3.2. Inclusion and Exclusion Criteria

Articles were included in the study if they met all of the following criteria:

- The publication year was limited to the period between 2003 and 2023. The reason for choosing 2003 as the starting year for the literature search is that it marked the launch of Second Life, an innovative three-dimensional virtual world that has had a profound impact on the evolution of VR.

- Only English-language articles published in peer-reviewed academic journals were eligible for inclusion.

- Studies that used either a randomized controlled trial (RCT) or quasi-experimental design were considered.

- The subjects of the studies were students in K–12 or higher education.

- The studies compared online learning facilitated by VR with online learning under non-VR conditions; the same criterion (VC vs. non-VC conditions) was applied to articles about VC platforms.

- The dependent variables measured were related to learning effects.

The exclusion criteria were as follows:

- Both the experimental and control groups used VR or VC platforms;

- The research employed augmented reality, mixed reality, or other technological approaches besides VR;

- The studies did not provide sufficient data, such as sample size and mean values, to calculate the effect size.

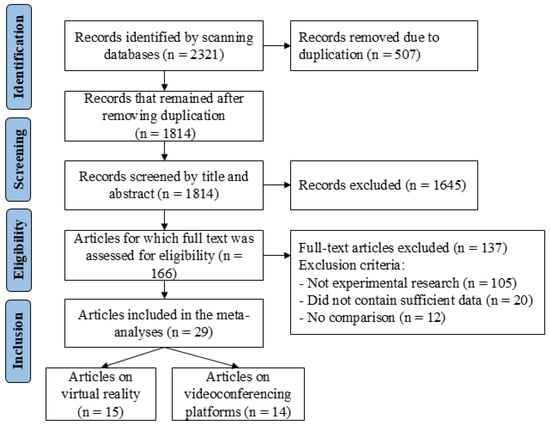

Figure 2 presents an overview of the literature search procedure following the PRISMA protocol []. A total of 507 duplicate articles were identified by combining automated detection tools with careful manual screening. Preliminary screening of titles and abstracts led to the exclusion of 1645 articles that did not meet the predefined inclusion criteria. The excluded articles fell into several categories: some focused on vocational education as their research domain; others relied on methods such as surveys, interviews, or case studies; and a subset measured only participants’ attitudes. At this stage, 166 articles were retained for further evaluation. In the second round of screening, 137 articles were excluded after full-text reading according to the criteria outlined above. Thus, 29 articles meeting all criteria were included in the meta-analyses: 15 related to VR and 14 related to VC platforms.

Figure 2. Flow chart of the selection process based on the PRISMA protocol.
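As a consistency check on the selection flow, the counts reported in Sections 3.1 and 3.2 can be chained together. The sketch below uses only numbers stated in the text. It shows that 2321 − 507 − 1645 leaves 169 records, three more than the 166 carried into full-text evaluation; the text does not say how these three were removed, and we assume (without confirmation) that Figure 2 records an additional step such as reports that could not be retrieved.

```python
# Record counts as reported in Sections 3.1 and 3.2.
WEB_OF_SCIENCE = 969
SCOPUS = 1352
DUPLICATES = 507
EXCLUDED_TITLE_ABSTRACT = 1645
FULL_TEXT_ASSESSED = 166
EXCLUDED_FULL_TEXT = 137
INCLUDED_VR = 15
INCLUDED_VC = 14

identified = WEB_OF_SCIENCE + SCOPUS                     # 2321 records in total
after_dedup = identified - DUPLICATES                    # 1814 unique records
after_screening = after_dedup - EXCLUDED_TITLE_ABSTRACT  # 169 records
included = FULL_TEXT_ASSESSED - EXCLUDED_FULL_TEXT       # 29 articles

# Records that the text does not assign to any exclusion step.
unaccounted = after_screening - FULL_TEXT_ASSESSED

assert identified == 2321
assert included == INCLUDED_VR + INCLUDED_VC == 29
print(f"identified={identified}, after_dedup={after_dedup}, "
      f"after_screening={after_screening}, included={included}, "
      f"unaccounted={unaccounted}")
```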

Given the relatively small number of eligible studies, multiple effect sizes were extracted from some articles to ensure adequate data coverage. For articles that measured different types of learning outcomes, or that had more than one experimental or control condition, the following principles were used to calculate the effect size: (a) if multiple measurements were reported for the same type of learning outcome (e.g., several post-test scores for knowledge), their average effect size was computed to represent that outcome; (b) if different types of learning outcomes were measured (e.g., tests for changes in knowledge and scales for changes in attitude), one effect size was extracted for each dependent variable; (c) when there were multiple experimental or control groups (e.g., a study comparing VR, VC platforms, and face-to-face instruction), one effect size was extracted for each comparison between VR and non-VR treatment, and between VC and non-VC treatment. As a result, the number of studies included exceeded the number of articles: there were 37 VR-related studies and 35 VC platform-related studies.
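Rules (a)–(c) amount to grouping raw effect sizes by article, comparison, and outcome type, and averaging within each group. The sketch below is illustrative only: the record layout and the example values are hypothetical, and it averages g with a simple unweighted mean because the text does not state how the variances of averaged measurements were combined.

```python
from collections import defaultdict
from statistics import mean


def aggregate_effect_sizes(records):
    """Apply rules (a)-(c) to raw effect sizes.

    `records` is an iterable of (article_id, comparison, outcome_type, g)
    tuples; this layout is hypothetical.
    """
    grouped = defaultdict(list)
    for article_id, comparison, outcome_type, g in records:
        # Rules (b) and (c): each outcome type and each treatment comparison
        # gets its own key, so they remain separate effect sizes.
        grouped[(article_id, comparison, outcome_type)].append(g)
    # Rule (a): repeated measures of the same outcome type are averaged.
    return {key: mean(values) for key, values in grouped.items()}


raw = [
    ("A", "VR vs lecture", "knowledge", 0.8),
    ("A", "VR vs lecture", "knowledge", 0.6),  # second knowledge post-test
    ("A", "VR vs lecture", "affective", 0.3),  # attitude scale
    ("A", "VR vs video", "knowledge", 0.5),    # second control condition
]
for key, g in aggregate_effect_sizes(raw).items():
    print(key, round(g, 3))
```

Here one hypothetical article contributes three effect sizes, which is how 29 articles can yield 37 VR and 35 VC studies.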

3.3. Research Quality Assessment

To ensure that well-designed studies were included in the meta-analyses, we conducted a formal quality assessment using the Medical Education Research Study Quality Instrument (MERSQI) []. This instrument evaluates methodological rigor with 10 items across six domains: study design, sampling, type of data, validity of evaluation instrument, data analysis, and outcomes. The included studies had MERSQI scores ranging from 10 to 16, with a mean of 12.6 (SD = 1.74). The complete MERSQI results are available in the Supplementary Materials. A review by Cook and Reed [] of 26 published studies using MERSQI reported a median score of 11.3 (range: 8.9–15.1). Compared with this benchmark, the studies included in our meta-analyses appear to be of relatively high methodological quality.

3.4. Coding Procedure

Based on an in-depth exploration of the significant characteristics of VR and VC platforms, key moderator variables were extracted from the 29 articles selected, which involved research context, technological features, and instructional design. As shown in Table 1, the research context codes represented the total number of participants and their educational level (K–12 or college) within specific disciplines (natural science; humanities and social sciences; medical science; or engineering and technology sciences). VR and VC platforms each had different coding criteria for technological features. VR was assessed based on the type of device used, while the VC platform section recorded whether students’ cameras were activated and which platforms were used. The instructional design codes mainly covered the pedagogy adopted and the evaluation of learning outcomes.

Table 1. Coding manual.

To ensure coding reliability, one researcher conducted the primary coding using a standardized manual. To validate consistency, a second researcher independently reviewed a random 20% of the studies. Intercoder reliability was assessed using Cohen’s kappa, yielding a value of 0.771, indicating substantial agreement.
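Cohen’s kappa compares the observed agreement between two coders with the agreement expected by chance from each coder’s category frequencies. The sketch below computes it for nominal codes; the coding labels and example data are hypothetical and are not taken from the study.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders assigning nominal codes to the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same, non-empty set of items")
    n = len(coder_a)
    # Observed agreement: share of items given the same code by both coders.
    p_observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of each coder's marginal proportions, summed
    # over all categories either coder used.
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    categories = set(counts_a) | set(counts_b)
    p_expected = sum(counts_a[c] * counts_b[c] for c in categories) / n**2
    if p_expected == 1:
        return 1.0  # both coders used one identical category throughout
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical equipment codes for four studies.
first = ["HMD", "HMD", "desktop", "desktop"]
second = ["HMD", "desktop", "desktop", "desktop"]
print(cohens_kappa(first, second))  # 0.5
```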

3.5. Data Analysis

Comprehensive Meta-Analysis (CMA) 3.0 software was used for all analyses, including effect size calculation, heterogeneity testing, moderator analysis, and publication bias analysis. Hedges’ g was chosen as the effect size measure because it is more accurate than Cohen’s d in studies with small samples. Hedges’ g is a modification of Cohen’s d [] that accounts for the bias in estimating the effect size from small samples. It corrects this bias by multiplying d by a small-sample correction factor, which yields a more robust estimate. Cohen’s d tends to overestimate the population effect in small samples, and Hedges’ g reduces this positive bias through the correction. Because meta-analyses often combine samples of different sizes, Hedges’ g is a common choice of effect size indicator.
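The correction can be illustrated for a two-group post-test comparison. This sketch follows the commonly used textbook formulas (pooled SD, correction factor J = 1 − 3/(4df − 1) with df = n_t + n_c − 2, and the usual large-sample variance of d); it is not taken from the CMA source, and the function name and example values are hypothetical.

```python
import math


def hedges_g(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Return (g, variance of g) for a treatment-vs-control comparison."""
    df = n_t + n_c - 2
    # Pooled within-group standard deviation.
    sd_pooled = math.sqrt(((n_t - 1) * sd_t**2 + (n_c - 1) * sd_c**2) / df)
    d = (mean_t - mean_c) / sd_pooled
    # Small-sample correction factor J (approximation); it multiplies d.
    j = 1 - 3 / (4 * df - 1)
    var_d = (n_t + n_c) / (n_t * n_c) + d**2 / (2 * (n_t + n_c))
    return j * d, j**2 * var_d


# Hypothetical post-test scores: 20 students per group, SD = 10 in both.
g, var_g = hedges_g(mean_t=80, sd_t=10, n_t=20, mean_c=75, sd_c=10, n_c=20)
print(round(g, 3), round(var_g, 4))
```

With 40 participants, J is about 0.98, so g is roughly 2% smaller than d = 0.5; the shrinkage grows as samples get smaller.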

The heterogeneity test indicates the extent of variability in effect sizes across studies, that is, whether statistical heterogeneity is present. This information is crucial for deciding whether to use a fixed-effects or random-effects model. Two commonly used indicators of heterogeneity are Cochran’s Q test and the I² statistic. If the p-value of the Q test is greater than 0.1, it is generally assumed that there is no significant heterogeneity between studies. The I² statistic reflects the proportion of the total variation in effect sizes that is due to heterogeneity rather than sampling error. Values of 25%, 50%, and 75% indicate low, moderate, and high heterogeneity, respectively [].
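Q is the inverse-variance-weighted sum of squared deviations from the pooled (fixed-effect) estimate, and I² = (Q − df)/Q, floored at zero. The sketch below computes both; the function names and example data are hypothetical, and binning I² into intervals around the 25%/50%/75% benchmarks (including the "below low" label) is our own assumption.

```python
def heterogeneity(effects, variances):
    """Cochran's Q, its degrees of freedom, and I^2 under inverse-variance weights."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two effect sizes with matching variances")
    weights = [1.0 / v for v in variances]
    pooled = sum(w * g for w, g in zip(weights, effects)) / sum(weights)
    q = sum(w * (g - pooled) ** 2 for w, g in zip(weights, effects))
    df = len(effects) - 1
    return q, df, i_squared(q, df)


def i_squared(q, df):
    """Share of total variation attributed to true heterogeneity, floored at 0."""
    return max(0.0, (q - df) / q) if q > 0 else 0.0


def describe_i_squared(i2):
    """Label using the 25% / 50% / 75% benchmarks cited in the text."""
    if i2 >= 0.75:
        return "high"
    if i2 >= 0.50:
        return "moderate"
    if i2 >= 0.25:
        return "low"
    return "below low"


q, df, i2 = heterogeneity([0.2, 0.8, 1.4], [0.04, 0.04, 0.04])
print(round(q, 2), df, round(i2, 3), describe_i_squared(i2))
```

Applying `i_squared` to the Q values reported in Section 4.1 (430.196 with 36 df for VR; 245.828 with 34 df for VC) gives about 0.92 and 0.86, matching the reported I² values.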

Effect sizes were interpreted using the benchmarks proposed by Cohen []: an effect size of 0.2–0.5 was considered small, 0.5–0.8 medium, and 0.8 or greater large. After obtaining all of the effect sizes, we used the random-effects model (REM) to calculate the overall effect size, as suggested by Borenstein et al. [], because we could not assume that the true effect sizes of all studies were identical, which made the fixed-effects model implausible.
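A random-effects model adds an estimate of the between-study variance τ² to each study’s sampling variance before pooling. The sketch below assumes the DerSimonian–Laird (method-of-moments) estimator of τ², a widely used default; the text does not state which estimator CMA 3.0 was configured to use. The example data are hypothetical, and the "below small" label for |g| < 0.2 is our own assumption.

```python
import math


def random_effects_dl(effects, variances):
    """Pool effect sizes with a DerSimonian-Laird random-effects model.

    Returns (pooled effect, standard error, tau^2).
    """
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * g for wi, g in zip(w, effects)) / sum_w
    q = sum(wi * (g - fixed) ** 2 for wi, g in zip(w, effects))
    df = len(effects) - 1
    # Method-of-moments estimate of the between-study variance tau^2.
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    # Random-effects weights add tau^2 to every within-study variance.
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * g for wi, g in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    return pooled, se, tau2


def cohen_label(g):
    """Magnitude label using the 0.2 / 0.5 / 0.8 benchmarks cited in the text."""
    size = abs(g)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "below small"


pooled, se, tau2 = random_effects_dl([0.2, 0.8, 1.4], [0.04, 0.04, 0.04])
print(round(pooled, 3), round(se, 3), round(tau2, 3))
for reported in (0.913, 0.284):  # overall g for VR and VC platforms
    print(reported, cohen_label(reported))
```

Because τ² is added to every study’s variance, the random-effects SE (about 0.35 here) is wider than the fixed-effect SE (about 0.12), which reflects the extra uncertainty from true between-study variation.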

4. Results and Discussion

4.1. Overall Effectiveness

The overall effectiveness of VR-supported online learning is shown in Table 2. A meta-analysis of 37 studies was conducted, and the heterogeneity analysis revealed a statistically significant Q value (Q (36) = 430.196, p < 0.001), indicating considerable heterogeneity across the effect sizes. The REM analysis indicated that VR had a large, positive effect on online learning (g = 0.913, SE = 0.148, 95% CI = [0.624, 1.203]). This finding supports the idea that VR can enhance learning outcomes []. The I² of the overall model indicated high heterogeneity (I² = 0.92), so moderator analyses were needed to explain it.

Table 2. Results for the overall analysis of VR.

The results in Table 3 indicate that online learning enhanced by VC platforms has a significant positive effect on students’ learning outcomes, with a small effect size (g = 0.284, SE = 0.071, 95% CI = [0.146, 0.423], p < 0.001). The analysis revealed substantial heterogeneity in effect sizes (Q (34) = 245.828, I² = 0.86), indicating that about 86% of the observed variation in effect sizes reflects true heterogeneity rather than sampling error. Therefore, additional moderator analyses were necessary to explore the factors contributing to this heterogeneity.

Table 3. Results for the overall analysis of VC platforms.
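The pooled estimates in Tables 2 and 3 can be cross-checked from g and SE alone, assuming a normal-theory (Wald) interval g ± 1.96·SE and a two-sided z test. Recomputed from the rounded values, the bounds agree with the reported intervals to within about 0.002, and both z statistics correspond to p < 0.001. The function name is hypothetical.

```python
import math

Z_975 = 1.959963984540054  # 97.5th percentile of the standard normal


def wald_summary(g, se):
    """95% confidence interval and two-sided p-value for a pooled effect."""
    lower, upper = g - Z_975 * se, g + Z_975 * se
    z = g / se
    # Two-sided normal tail probability: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return lower, upper, z, p


for label, g, se in [("VR", 0.913, 0.148), ("VC", 0.284, 0.071)]:
    lower, upper, z, p = wald_summary(g, se)
    print(f"{label}: 95% CI [{lower:.3f}, {upper:.3f}], z = {z:.2f}, p = {p:.1e}")
```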

4.2. Moderator Analysis for VR-Supported Online Learning

4.2.1. Research Context in VR Studies

One contextual consideration is class size. As shown in Table 4, regular-sized (51.4%) and small classes (48.6%) were evenly distributed in the sample. Although the effect size for small classes (g = 0.657) was lower than that for regular-sized classes (g = 1.181), the between-group difference was not significant (QB = 3.399, p = 0.065). This suggests that VR can effectively support online learning in both regular-sized and small classes. However, under certain conditions, a regular-sized class may be more conducive to improving learning outcomes. Future studies could explore the circumstances under which small classes are advantageous.

Table 4.

Moderator analysis results for VR-supported online learning.
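Each moderator test in this section compares subgroup estimates with a between-group statistic, QB, referred to a chi-square distribution with (number of subgroups − 1) degrees of freedom. The Python sketch below assumes that convention and uses only the standard library: `q_between` computes QB from subgroup means and standard errors, and `chi2_sf` converts any QB to a p-value. Both names are illustrative. The subgroup SEs are not given in the text, so `q_between` is shown only with hypothetical inputs, while `chi2_sf` can be checked against reported pairs (e.g., QB = 3.399 with df = 1 gives p ≈ 0.065).

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-square with integer df >= 1.

    Uses the closed-form series available for integer degrees of freedom.
    """
    if df < 1 or int(df) != df:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) plus a finite correction series
    p = math.erfc(math.sqrt(half))
    term = math.sqrt(2.0 * x / math.pi) * math.exp(-half)  # first correction term
    for i in range(1, (df - 1) // 2 + 1):
        p += term
        term *= x / (2 * i + 1)
    return p


def q_between(means, ses):
    """Between-subgroup Q: weighted squared deviations of subgroup means
    from their inverse-variance weighted grand mean."""
    w = [1.0 / se ** 2 for se in ses]
    grand = sum(wi * m for wi, m in zip(w, means)) / sum(w)
    return sum(wi * (m - grand) ** 2 for wi, m in zip(w, means))


# Reported QB values from the VR moderator analyses (df = subgroups - 1)
for label, qb, df in [("class size", 3.399, 1), ("equipment", 0.514, 1), ("outcome type", 2.888, 3)]:
    print(label, round(chi2_sf(qb, df), 3))
```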

With respect to disciplinary domain, engineering and technology sciences were the most commonly studied (59.5%), followed by humanities and social sciences (24.3%), medical science (8.1%), and natural science (8.1%). Previous studies have shown that VR has a large effect in engineering, social sciences, and basic sciences [], and the results of this study further confirm this tendency. The Q statistic indicated a significant difference in effect size across disciplines (QB = 17.934, p < 0.001). Although all four disciplines showed positive effects, natural science showed the largest effect size (g = 4.565), followed by medical science (g = 0.818), humanities and social sciences (g = 0.689), and engineering and technology sciences (g = 0.619). One reason may be that natural science deals largely with abstract physical phenomena and natural laws, which VR can simulate and construct, giving learners a more intuitive and in-depth learning experience. Notably, the effect size for VR in natural science (g = 4.565) was derived from a small number of studies (k = 3) and should therefore be interpreted with caution, as it may be overestimated.

Regarding students’ educational stage, 86.5% of the studies were conducted at the college level and only 13.5% at the K–12 level. There was no significant difference in effect size across educational stages (QB = 0.217, p = 0.642). This may be because VR, as an innovative technology, appeals to both K–12 and college students. Its use in online learning may initially generate a novelty effect [,], which could increase student interest and engagement. Online learning supported by VR thus does not appear to be moderated by educational stage; however, a moderate effect (g = 0.625) was found at the K–12 level, whereas a large effect was found at the college level (g = 0.973). One possible explanation is that college students, given their more advanced cognitive development, may be better able than K–12 students to adapt to and use VR to enhance their learning.

4.2.2. Technological Features in VR Studies

We examined the VR equipment used in the studies and found that desktops were the most frequently used (70.3%), followed by HMDs (29.7%). The effect size for HMDs (g = 1.049) was slightly higher than that for desktops (g = 0.866), but the Q statistic showed that this difference was not statistically significant (QB = 0.514, p = 0.474). Surprisingly, this finding contradicts the results of Wu et al. [] and Luo et al. []. Two explanations are plausible. First, VR equipment has improved over time, and today’s HMDs may offer a more immersive learning experience with a greater impact. Second, most participants in the included studies were college students, who may have extensive experience with desktop VR and, thus, more positive expectations for immersive VR; these expectations could also improve their learning outcomes. In addition, the small number of studies must be considered: only 11 studies explored the impact of HMDs on online learning, so chance may have contributed to the results.
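With only two subgroups (HMD vs. desktop), the QB test with df = 1 is equivalent to a z-test on the difference between the two subgroup estimates, and QB equals z². The Python sketch below makes this explicit under the assumption that the two subgroups are independent. The function name is illustrative, and because the subgroup SEs are not given in the text, the SEs in the example are hypothetical placeholders; only the two g values come from the text.

```python
import math


def compare_two_subgroups(g1, se1, g2, se2):
    """z-test for the difference between two independent subgroup estimates.

    Returns (z, two-sided p, QB); with two subgroups QB equals z squared.
    """
    diff = g1 - g2
    se_diff = math.sqrt(se1 ** 2 + se2 ** 2)
    z = diff / se_diff
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal p-value
    # The same comparison expressed as a between-subgroup Q statistic
    w1, w2 = 1.0 / se1 ** 2, 1.0 / se2 ** 2
    grand = (w1 * g1 + w2 * g2) / (w1 + w2)
    qb = w1 * (g1 - grand) ** 2 + w2 * (g2 - grand) ** 2
    return z, p, qb


# g values from the text; the SEs (0.22, 0.15) are hypothetical placeholders
z, p, qb = compare_two_subgroups(1.049, 0.22, 0.866, 0.15)
print(f"z = {z:.3f}, p = {p:.3f}, QB = {qb:.3f}")
```

The sketch shows why a visible gap in point estimates (1.049 vs. 0.866) can still be non-significant: the test scales the difference by the combined uncertainty of both subgroups, which is large when one subgroup contains few studies.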

4.2.3. Instructional Design in VR Studies

The descriptive analysis showed that experiential learning was the most frequently used pedagogical approach (32.4%), while inquiry-based learning was the least used (10.8%). Pedagogy had a significant moderating effect on VR-enhanced online learning (QB = 12.830, p < 0.05). Experiential learning was the most effective pedagogy (g = 1.704), followed by collaborative learning (g = 1.098), game-based learning (g = 0.960), direct instruction (g = 0.370), and inquiry-based learning (g = −0.239). These findings highlight the benefits of experiential learning strategies and offer insights for designing effective VR-based online learning activities. This strong performance likely reflects the unique advantages of VR, which can replicate real-world scenes such as classrooms and museums and simulate situations that are difficult to create in reality [], including complex tasks such as controlling river pollution. Experiential learning is therefore particularly effective in VR-enabled online learning.

We also investigated the moderating effect of learning outcome type. The most frequently studied type was affective outcomes (35.1%), followed by knowledge (27%), skills (27%), and behavior (10.8%). The Q statistic indicated no significant differences in effect size across learning outcome types (QB = 2.888, p = 0.409). Nevertheless, behavior yielded the largest effect (g = 2.348), perhaps because VR can simulate real scenes in which learners practice and improve their behavior patterns. This can lead to observable changes in behavioral outcomes, such as the ability to perform experiments [].

4.3. Moderator Analysis for VC Platform-Supported Online Learning

4.3.1. Research Context in VC Studies

As shown in Table 5, more studies were conducted in regular-sized classes (80%) than in small classes (20%). Class size had a significant moderating effect (QB = 9.660, p < 0.01): the effect size was larger for small classes (g = 0.982) than for regular-sized classes (g = 0.205), which suggests that smaller classes may benefit more from online learning supported by VC platforms. This finding is consistent with previous studies [,]. In smaller classes, each student can participate more, which can prevent marginalization []. In regular-sized classes, the larger number of students makes it harder for teachers to attend to each student’s learning behavior, so some students may be overlooked and ultimately disengage from the class.

Table 5.

Moderator analysis results for VC platform-supported online learning.

As for disciplinary domain, humanities and social sciences were the most commonly studied (51.4%), while engineering and technology sciences were the least studied (5.7%). The Q statistic revealed a significant difference in effect size across disciplines (QB = 63.695, p < 0.001). Natural science had the largest effect size (g = 0.732), followed by medical science (g = 0.307) and humanities and social sciences (g = 0.302), while engineering and technology sciences showed a negative effect (g = −0.583). Many natural science concepts can be taught through diagrams and simple demonstrations, which teachers can present through screen sharing on VC platforms. In addition, some natural science problems may be easier to understand through discussion and interaction, and VC environments support this need by enabling real-time communication between teachers and students.

More studies of online learning supported by VC platforms were conducted at the college level (85.7%) than at the K–12 level (14.3%). Grade level had a significant moderating effect on learning outcomes (QB = 9.288, p < 0.01), with a larger effect size for K–12 (g = 0.801) than for higher education (g = 0.208). This may be because K–12 students, with lower levels of self-discipline, tend to require more supervision, and VC platforms offer a centralized online learning environment in which young students can be supervised effectively. College students may have already adapted to this mode of online learning, leaving less room for novelty and improvement in learning outcomes than among K–12 students.

4.3.2. Technological Features in VC Studies

Regarding the camera option, cameras were turned on in 17 studies (48.6%) and off in the remaining 18 (51.4%). The camera option had a statistically significant moderating effect (QB = 4.115, p < 0.05), with a larger effect size when cameras were on (g = 0.433) than when they were off (g = 0.148). This finding is consistent with previous research [,] and further confirms the importance of camera use in online learning. When cameras are on, students receive visual supervision from the teacher, which helps them focus on the course. They can also observe other students, which can stimulate peer-driven motivation and encourage them to work harder to achieve better learning performance. When cameras are off, students often receive on-screen content passively, and their connection with teachers and classmates is weakened. This mode not only causes fatigue but may also make students feel isolated, thus affecting their learning.

Zoom was the most commonly used VC platform (45.7%), followed by Tencent Meeting (8.6%), Gather (8.6%), and video games (5.7%). Platform was also a significant moderating variable (QB = 35.887, p < 0.001), with effect sizes varying across platforms. Specifically, the use of a video game [] as a VC platform had the largest effect size (g = 1.270), followed by Gather (g = 0.927), Tencent Meeting (g = 0.663), and Zoom (g = 0.071). Surprisingly, although Zoom and Tencent Meeting hold a considerable share of the VC market, their effect sizes were lower than those for Gather and video games. One explanation could be that students experience fatigue and burnout from prolonged use of traditional VC platforms [], which may weaken their effectiveness in supporting online learning.

4.3.3. Instructional Design in VC Studies

Direct instruction was used in most studies (54.3%), followed by inquiry learning (17.1%), experiential learning (11.4%), collaborative learning (11.4%), and game-based learning (5.7%). Effect sizes did not differ significantly across pedagogies (QB = 6.510, p = 0.164), suggesting that the choice of pedagogy may not decisively affect learning outcomes in VC-supported online learning. Despite this lack of significant differences, game-based learning produced the largest effect size (g = 1.270), which may be attributable to the greater autonomy students had in the game [], increasing their engagement and satisfaction. Although based on few studies, this finding questions the assumption that direct instruction is the most appropriate pedagogy for VC environments and opens up new possibilities for innovative applications. Existing research on this aspect, though limited, indicates its potential value.

Regarding learning outcome types, knowledge was the most commonly used measure (51.4%), and only 2.9% of studies (a single study) measured behavior. Learning outcome type had no significant moderating effect (QB = 1.480, p = 0.687). However, behavior showed the largest effect size (g = 0.657). One possible reason is that learners’ behaviors, such as speaking, asking questions, and participating in discussions, are readily observable by teachers and other students on VC platforms, and this visibility may amplify behavioral gains. Because this estimate rests on a single study, it should be interpreted with caution.

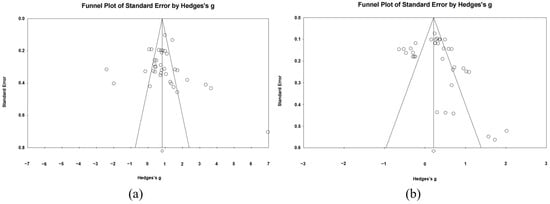

4.4. Publication Bias

Publication bias was assessed using a funnel plot and the classic fail-safe N test []. In the absence of publication bias, the points representing individual studies should be distributed symmetrically around the line marking the overall effect size, forming a funnel shape. In the plots, circles represent individual studies, and diamonds represent the pooled effect size. Figure 3a shows a symmetrical funnel plot for the VR meta-analysis, with most studies located in the upper middle part of the plot, indicating no evident publication bias. Figure 3b suggests possible publication bias in the meta-analysis of VC platforms.

Figure 3.

Publication bias funnel plots. (a) VR; (b) VC platforms.
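The funnel shape described above can be made concrete. In a funnel plot each study’s effect size is plotted against its standard error, and in the absence of bias roughly 95% of studies should fall within pseudo-confidence limits of pooled ± 1.96 × SE, which widen as SE grows. The Python sketch below computes those limits, counts studies on each side of the pooled estimate, and flags studies outside the funnel. It is a descriptive aid under stated assumptions, not a formal test of asymmetry; the function names and study data are hypothetical.

```python
def funnel_limits(pooled, se, z_crit=1.96):
    """Pseudo 95% confidence limits of the funnel at a given standard error."""
    return pooled - z_crit * se, pooled + z_crit * se


def describe_funnel(pooled, effects, ses, z_crit=1.96):
    """Count studies left/right of the pooled estimate and list those outside the funnel."""
    left = sum(1 for g in effects if g < pooled)
    right = sum(1 for g in effects if g > pooled)
    outside = []
    for i, (g, se) in enumerate(zip(effects, ses)):
        lo, hi = funnel_limits(pooled, se, z_crit)
        if g < lo or g > hi:
            outside.append(i)
    return {"left": left, "right": right, "outside": outside}


# Hypothetical studies (not this meta-analysis's data)
print(describe_funnel(0.5, [0.35, 0.65, 0.45, 1.9], [0.10, 0.10, 0.20, 0.30]))
```

An excess of small, imprecise studies on one side of the pooled estimate, with few on the other, is the visual pattern that suggests possible publication bias.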

Because visual inspection of a funnel plot is subjective, we also applied the classic fail-safe N test. The fail-safe N is generally considered adequate when it exceeds 5k + 10, where k is the number of independent studies included in the meta-analysis. The classic fail-safe N for VR was 3441, far above the threshold of 195 (i.e., 5 × 37 + 10). Similarly, the estimate for VC was 778, exceeding the threshold of 185 (i.e., 5 × 35 + 10). Publication bias therefore appears unlikely to substantially affect the validity of the meta-analyses.
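Rosenthal’s classic fail-safe N estimates how many unpublished studies averaging a null result (z = 0) would be needed to bring the combined Stouffer z below a significance threshold: N = (Σz)²/z_α² − k. The Python sketch below implements that formula and the 5k + 10 tolerance level. The per-study z-values are not reported here, so the example uses hypothetical inputs. The default z_α = 1.645 corresponds to Rosenthal’s one-tailed α = 0.05; software settings (including those used with CMA in this study) may differ, for example a two-tailed z of 1.96.

```python
def fail_safe_n(z_values, z_alpha=1.645):
    """Rosenthal's classic fail-safe N.

    Number of additional null-result studies (mean z = 0) that would pull the
    combined Stouffer z, sum(z) / sqrt(k + N), down to z_alpha.
    Default 1.645 = one-tailed alpha .05; pass 1.96 for a two-tailed criterion.
    """
    k = len(z_values)
    n = sum(z_values) ** 2 / z_alpha ** 2 - k
    return max(0.0, n)


def rosenthal_threshold(k):
    """Tolerance level 5k + 10 against which the fail-safe N is compared."""
    return 5 * k + 10


print(rosenthal_threshold(37), rosenthal_threshold(35))  # 195 185
```

Applied to the reported estimates, 3441 > 195 for VR and 778 > 185 for VC, which is the comparison the text relies on.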

5. Comparative Discussion

Regarding RQ1, both VR and VC platforms effectively promote online learning, but their impact differs, possibly because of three technical affordances: immersion, interaction, and supported pedagogies. Compared with VC platforms, which rely primarily on real-time video transmission, VR stands out for its ability to provide physical and cognitive immersion in a virtual world []. This immersive affordance generates a powerful sense of presence [], which can enhance learning experience and performance. VR also allows more natural interactions in the virtual environment and facilitates peer-to-peer interaction. VC platforms, in contrast, often restrict social interaction to fixed forms such as text chat and on-screen speech, resulting in a mostly one-to-many discourse between the teacher and students. Finally, VR has greater potential for student-centered pedagogies such as experiential learning, as reflected in the larger share of VR studies than VC studies adopting such approaches. This divergence in pedagogical support contributes to the difference in learning effectiveness between the two technologies.

Regarding RQ2, moderating effects were found for both VR and VC platforms: contextual and technological factors mainly moderated the effects of VC platforms, whereas pedagogical factors mainly moderated VR. First, contextual factors such as class size and grade level significantly moderated the effects of VC-supported online learning, whereas VR was more broadly applicable and had a more stable supporting effect. In a large class, the one-to-many model of the VC environment makes it difficult for teachers to address the needs of all students, and class management becomes considerably more difficult. Younger students, with limited attention spans and weaker self-regulated learning skills, can more easily withdraw from the teacher-centered model. VR, meanwhile, supports more diverse instructional models, which makes it effective across different contexts. With its enhanced immersion, presence, and usability [], the VR environment can support effective instruction for both large-scale lectures in regular-sized classes and group discussions in small classes []. This difference may also be interpreted through attribution theory []. VR’s student-centered structure may encourage learners to view success as the result of their own effort, while VC’s more teacher-led model, especially in large or lower-grade classrooms, may foster external attributions, potentially reducing engagement and motivation.

Second, technical factors such as the camera option significantly influence the efficacy of online learning with VC platforms, whereas the effects of VR-supported online learning remain stable regardless of the equipment chosen. The VC brand also appears to moderate online learning: compared with established VC brands such as Skype, the latest VC platforms tend to incorporate more social functions and, thus, improve online learning experiences [,]. By contrast, advances in VR equipment appear to have less impact on learning outcomes. In fact, several meta-analyses have found desktop VR programs to be more effective overall than HMD-based programs, despite the technical advantages of HMDs [,]. One possible explanation for this paradox is that the effectiveness of VR-supported online learning depends largely on its instructional design, which offsets technical differences among devices. Moreover, despite offering an enriched experience, more advanced VR devices can increase extraneous cognitive load and cybersickness. This pattern may also be explained by the novelty effect [], which temporarily enhances learner engagement when new technologies such as HMDs are introduced. As the initial excitement fades, however, motivation may decline, especially if the learning task lacks intrinsic value, which may result in similar long-term outcomes for immersive and non-immersive VR platforms.

Third, the choice of pedagogy has a greater impact on online learning in VR environments than on VC platforms. The literature suggests that VR can support diverse pedagogies, although to varying extents. This aligns with Luo et al. [], who found that VR-supported learning tends to benefit more from active and experiential pedagogies than from traditional instructional approaches. Pedagogies such as experiential, collaborative, and game-based learning are well suited to VR environments because of their high representational fidelity and learner interaction, which enable identification, embodied action, natural communication, and scripted behaviors [,]. VC environments, meanwhile, have been used primarily to deliver direct instruction. This homogeneity of pedagogical choice may explain the lack of significant moderating effects for pedagogy in VC studies, as it limits the exploration of alternative pedagogies that could enhance the learning experience. However, because few studies have examined game-based pedagogy in VC environments, these findings should be generalized with caution.

Implications for Practice and Research

Drawing on these findings, we propose several implications for online teaching practice. First, we recommend that teachers use VC platforms for online learning mainly with smaller classes and younger students, where VC showed larger effects. As class sizes grow and students mature, however, VR may be a more viable and effective alternative, budget permitting. Second, we encourage teachers to choose more recent VC platforms for online learning because of their more advanced functions, and students should be encouraged to turn on their cameras to improve effectiveness. For VR-supported online learning, we favor desktop computers over HMDs, as they yield similar learning outcomes but are more affordable and accessible. Third, in VR environments, we recommend that teachers adopt student-centered pedagogies to fully exploit the affordances of this technology. In VC environments, by contrast, a lecture-based approach may be more appropriate given the characteristics of this medium.

Based on our meta-analyses, we identify three limitations that point to directions for future research. First, many reviewed articles lacked sufficient statistical data, so we strongly recommend that future studies report relevant data rigorously and comprehensively. Second, current studies on VR or VC online learning interventions tend to focus on cognitive outcomes, such as knowledge and skill acquisition; we therefore encourage researchers to also consider non-cognitive outcomes, such as behavioral change and emotional development. Third, the analyses were conducted using CMA software, which does not support multilevel modeling or robust variance estimation. As a result, the statistical dependence among multiple effect sizes extracted from the same study may not always have been fully addressed. Future research could adopt more advanced statistical tools to better account for this issue.
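When multilevel models or robust variance estimation are unavailable, one widely described workaround for the dependence problem noted above is to combine several effect sizes from the same study into a single composite before pooling, assuming a correlation r among the outcomes. For m outcomes with variances V_i, the composite variance is (1/m)² × (ΣV_i + Σ_{i≠j} r√(V_i V_j)). The Python sketch below computes the composite mean and variance under a single assumed r. The function name and the r = 0.5 default are illustrative assumptions, and this is not the procedure used in the present analyses.

```python
import math


def composite_effect(effects, variances, r=0.5):
    """Average m dependent effect sizes from one study into a single estimate.

    Assumes the same correlation r between every pair of outcomes (r = 0.5 is
    an illustrative default, not an empirical value).
    Var(mean) = (1/m)^2 * (sum V_i + sum_{i != j} r * sqrt(V_i * V_j)).
    """
    m = len(effects)
    if m == 0 or m != len(variances):
        raise ValueError("effects and variances must be non-empty and aligned")
    mean = sum(effects) / m
    total = sum(variances)
    # Add the covariance terms for every ordered pair of distinct outcomes
    for i in range(m):
        for j in range(m):
            if i != j:
                total += r * math.sqrt(variances[i] * variances[j])
    return mean, total / m ** 2


# Hypothetical study reporting two outcomes (e.g., knowledge and skills)
print(composite_effect([0.4, 0.6], [0.04, 0.04], r=0.5))
```

With equal variances V, r = 1 leaves the composite variance at V (the outcomes add no independent information), while r = 0 reduces it to V/m. Because the true r is rarely reported, checking results across several plausible values of r is often advised.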

6. Conclusions

This study conducted comparative meta-analyses of empirical research on the efficacy of VR and VC platforms for online learning. First, the results showed that both VR and VC platforms can effectively enhance learning outcomes, with VR having a more pronounced impact than VC platforms. Second, we discussed in detail the moderating effects of three categories of variables: research context, technological features, and instructional design. These findings offer practical guidance for selecting technologies for online education.

This study has some limitations. First, the list of moderator variables may not have been exhaustive; for example, the interaction level of VR, which could influence its effectiveness in supporting online learning, was not explored in detail. Second, the number of empirical studies included in the meta-analyses is relatively limited, which may have influenced the results. Future research should therefore explore additional moderating factors and address the problem of insufficient data to enable a more accurate and comprehensive evaluation of the efficacy of VR and VC platforms in supporting online learning.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/app15116293/s1, Table S1: Research quality assessment by MESQRI.

Author Contributions

Conceptualization, H.L.; methodology, H.L.; formal analysis, Y.Z.; investigation, Y.Z.; writing—original draft preparation, Y.Z., S.P. and X.H.; writing—review and editing, H.L.; visualization, Y.Z.; supervision, H.L.; funding acquisition, H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Natural Science Foundation of China, grant number 62177021.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in the Mendeley Data repository at https://doi.org/10.17632/z9v43xb35g.1.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| VR | Virtual reality |
| VC | Videoconferencing |
| ES | Effect size |
| HMDs | Head-mounted displays |
| CAVE | Cave automatic virtual environment |
| RCT | Randomized controlled trial |
| CMA | Comprehensive Meta-Analysis |
| REM | Random-effects model |

References

- Garlinska, M.; Osial, M.; Proniewska, K.; Pregowska, A. The influence of emerging technologies on distance education. Electronics 2023, 12, 1550.

- Lee, Y.; Choi, J. A review of online course dropout research: Implications for practice and future research. Educ. Technol. Res. Dev. 2011, 59, 593–618.

- Bakhov, I.; Opolska, N.; Bogus, M.; Anishchenko, V.; Biryukova, Y. Emergency distance education in the conditions of COVID-19 pandemic: Experience of Ukrainian Universities. Educ. Sci. 2021, 11, 364.

- Elmer, T.; Mepham, K.; Stadtfeld, C. Students under lockdown: Comparisons of students’ social networks and mental health before and during the COVID-19 crisis in Switzerland. PLoS ONE 2020, 15, e0236337.

- Lamanauskas, V.; Makarskaitė-Petkevičienė, R. Distance lectures in university studies: Advantages, disadvantages, improvement. Contemp. Educ. Technol. 2021, 13, ep309.

- Dondera, R.; Jia, C.; Popescu, V.; Nita-Rotaru, C.; Dark, M.; York, C.S. Virtual classroom extension for effective distance education. IEEE Comput. Graph. Appl. 2008, 28, 64–74.

- Lassoued, Z.; Alhendawi, M.; Bashitialshaaer, R. An exploratory study of the obstacles for achieving quality in distance learning during the COVID-19 pandemic. Educ. Sci. 2020, 10, 232.

- Wallengren Lynch, M.; Lena, D.; Cuadra, C. Information communication technology during COVID-19. Soc. Work Educ. 2023, 42, 1–13.

- Radianti, J.; Majchrzak, T.A.; Fromm, J.; Wohlgenannt, I. A systematic review of immersive virtual reality applications for higher education: Design elements, lessons learned, and research agenda. Comput. Educ. 2020, 147, 103778.

- Wu, B.; Yu, X.; Gu, X. Effectiveness of immersive virtual reality using head-mounted displays on learning performance: A meta-analysis. Br. J. Educ. Technol. 2020, 51, 1991–2005.

- Cruz-Neira, C.; Sandin, D.J.; DeFanti, T.A.; Kenyon, R.V.; Hart, J.C. The CAVE: Audio visual experience automatic virtual environment. Commun. ACM 1992, 35, 64–72.

- Maas, M.J.; Hughes, J.M. Virtual, augmented and mixed reality in K–12 education: A review of the literature. Technol. Pedagog. Educ. 2020, 29, 231–249.

- Merchant, Z.; Goetz, E.T.; Cifuentes, L.; Keeney-Kennicutt, W.; Davis, T.J. Effectiveness of virtual reality-based instruction on students’ learning outcomes in K-12 and higher education: A meta-analysis. Comput. Educ. 2014, 70, 29–40.

- Chessa, M.; Solari, F. The sense of being there during online classes: Analysis of usability and presence in web-conferencing systems and virtual reality social platforms. Behav. Inf. Technol. 2021, 40, 1237–1249.

- Sun, Y.; Albeaino, G.; Gheisari, M.; Eiris, R. Online site visits using virtual collaborative spaces: A plan-reading activity on a digital building site. Adv. Eng. Inform. 2022, 53, 101667.

- Chan, P.; Van Gerven, T.; Dubois, J.-L.; Bernaerts, K. Virtual chemical laboratories: A systematic literature review of research, technologies and instructional design. Comput. Educ. Open 2021, 2, 100053.

- Checa, D.; Miguel-Alonso, I.; Bustillo, A. Immersive virtual-reality computer-assembly serious game to enhance autonomous learning. Virtual Real. 2023, 27, 3301–3318.

- Zhang, H.; Zhang, Y.; Xu, T.; Zhou, Y. Effects of VR instructional approaches and textual cues on performance, cognitive load, and learning experience. Educ. Technol. Res. Dev. 2024, 72, 585–607.

- Suduc, A.-M.; Bizoi, M.; Filip, F.G. Status, challenges and trends in videoconferencing platforms. Int. J. Comput. Commun. Control 2023, 18, 5465.

- Alameri, J.; Masadeh, R.; Hamadallah, E.; Ismail, H.B.; Fakhouri, H.N. Students’ perceptions of e-learning platforms (Moodle, Microsoft Teams and Zoom platforms) in the University of Jordan Education and its relation to self-study and academic achievement during COVID-19 pandemic. Adv. Res. Stud. J. 2020, 11, 21–33.

- Quadir, B.; Zhou, M. Students perceptions, system characteristics and online learning during the COVID-19 epidemic school disruption. Int. J. Distance Educ. Technol. 2021, 19, 15–33.

- Serhan, D. Transitioning from face-to-face to remote learning: Students’ attitudes and perceptions of using Zoom during COVID-19 pandemic. Int. J. Technol. Educ. Sci. 2020, 4, 335–342.

- Suduc, A.-M.; Bizoi, M.; Filip, F.G. Exploring multimedia web conferencing. Inform. Econo. 2009, 13, 5–17.

- Gedera, D.S.P. Students’ experiences of learning in a virtual classroom. Int. J. Educ. Dev. Using Inf. Commun. Technol. 2014, 10, 93–101.

- Han, H. Do nonverbal emotional cues matter? Effects of video casting in synchronous virtual classrooms. Am. J. Distance Educ. 2013, 27, 253–264.

- Rojabi, A.R.; Setiawan, S.; Munir, A.; Purwati, O.; Widyastuti. The camera-on or camera-off, is it a dilemma? Sparking engagement, motivation, and autonomy through microsoft teams videoconferencing. Int. J. Emerging Technol. Learn. 2022, 17, 174–189.

- Shih, Y.-C. Communication strategies in a multimodal virtual communication context. System 2014, 42, 34–47.

- Jarvis, L. Narrative as virtual reality 2: Revisiting immersion and interactivity in literature and electronic media. Int. J. Perform. Arts Digit. Media 2019, 15, 239–241.

- Speidel, R.; Felder, E.; Schneider, A.; Öchsner, W. Virtual reality against Zoom fatigue? A field study on the teaching and learning experience in interactive video and VR conferencing. GMS J. Med. Educ. 2023, 40, Doc19.

- Ślósarz, L.; Jurczyk-Romanowska, E.; Rosińczuk, J.; Kazimierska-Zając, M. Virtual reality as a teaching resource which reinforces emotions in the teaching process. Sage Open 2022, 12, 21582440221118083.

- Lakka, I.; Zafeiropoulos, V.; Leisos, A. Online virtual reality-based vs. Face-to-face physics laboratory: A case study in distance learning science curriculum. Educ. Sci. 2023, 13, 1083.

- Khodabandeh, F. Exploring the applicability of virtual reality-enhanced education on extrovert and introvert EFL learners’ paragraph writing. Int. J. Educ. Technol. High. Educ. 2022, 19, 27.

- Madathil, K.C.; Frady, K.; Hartley, R.; Bertrand, J.; Alfred, M.; Gramopadhye, A. An empirical study investigating the effectiveness of integrating virtual reality-based case studies into an online asynchronous learning environment. Comput. Educ. J. 2017, 8, 1–10.

- Sai, Y. Online music learning based on digital multimedia for virtual reality. Interact. Learn. Environ. 2024, 32, 1751–1762.

- Vlahovic, S.; Suznjevic, M.; Pavlin-Bernardic, N.; Skorin-Kapov, L. The Effect of VR Gaming on Discomfort, Cybersickness, and Reaction Time. In Proceedings of the 2021 13th International Conference on Quality of Multimedia Experience (QoMEX), Montreal, QC, Canada, 14–17 June 2021; pp. 163–168.

- Chang, S.-C.; Hsu, T.-C.; Chen, Y.-N.; Jong, M.S. The effects of spherical video-based virtual reality implementation on students’ natural science learning effectiveness. Interact. Learn. Environ. 2020, 28, 915–929.

- Luo, H.; Li, G.; Feng, Q.; Yang, Y.; Zuo, M. Virtual reality in K-12 and higher education: A systematic review of the literature from 2000 to 2019. J. Comput. Assist. Learn. 2021, 37, 887–901.

- Illi, C.; Elhassouny, A. Edu-Metaverse: A Comprehensive Review of Virtual Learning Environments. IEEE Access 2025, 13, 30186–30211.

- Li, Z.; Zhou, M.; Lam, K.K.L. Dance in Zoom: Using video conferencing tools to develop students’ 4C skills and self-efficacy during COVID-19. Think. Skills Creat. 2022, 46, 101102.

- Tao, H.; Feng, B. Zoom Data Analysis in an Introductory Course in Mechanical Engineering. In Proceedings of the 7th International Conference on Higher Education Advances (HEAd’21), Online, 22–23 June 2021.

- Banoor, R.Y.; Rennie, F.; Santally, M.I. The relationship between quality of student contribution in learning activities and their overall performances in an online course. Eur. J. Open Distance E-Learn. 2018, 21, 16–30.

- Alshaibani, T.; Almarabheh, A.; Jaradat, A.; Deifalla, A. Comparing online and face-to-face performance in scientific courses: A retrospective comparative gender study of year-1 students. Adv. Med. Educ. Pract. 2023, 14, 1119–1127.

- Lin, T.-C. Student learning performance and satisfaction with traditional face-to-face classroom versus online learning: Evidence from teaching Statistics for Business. E-Learn. Digit. Media 2022, 19, 340–360.

- Aguilera-Hermida, A.P. College students’ use and acceptance of emergency online learning due to COVID-19. Int. J. Educ. Res. Open 2020, 1, 100011.

- Dixon, M.; Syred, K. Factors Influencing Student Engagement in Online Synchronous Teaching Sessions: Student Perceptions of Using Microphones, Video, Screen-Share, and Chat. In Proceedings of the Learning and Collaboration Technologies. Designing the Learner and Teacher Experience (HCII 2022), Virtual, 26 June–1 July 2022; Springer: Cham, Switzerland, 2022; pp. 209–227.

- Petchamé, J.; Iriondo, I.; Azanza, G. “Seeing and being seen” or just “seeing” in a smart classroom context when videoconferencing: A user experience-based qualitative research on the use of cameras. Int. J. Environ. Res. Public Health 2022, 19, 9615.

- Indergaard, T.; Stjern, B. Counselling of problem based learning (PBL) groups through videoconferencing. In Connecting Health and Humans; Studies in Health Technology and Informatics; IOS Press: Amsterdam, The Netherlands, 2009; pp. 603–607.

- Sousa, M. Modding Modern Board Games for E-Learning: A Collaborative Planning Exercise about Deindustrialization. In Proceedings of the 2021 4th International Conference of the Portuguese Society for Engineering Education (CISPEE), Lisbon, Portugal, 21–23 June 2021; pp. 1–8.

- Qin, R.; Yu, Z. Extending the UTAUT model of Tencent Meeting for online courses by including community of inquiry and collaborative learning constructs. Int. J. Hum. Comput. Interact. 2024, 40, 5279–5297.

- Gibbs, N.P.; Sloat, E.F.; Amrein-Beardsley, A.; Cha, D.-J.; Mulerwa, O.; Beck, M.F. Establishing class size policies for optimal course outcomes in online undergraduate education programs. Stud. High. Educ. 2023, 48, 1841–1855.

- Russell, V.; Curtis, W. Comparing a large- and small-scale online language course: An examination of teacher and learner perceptions. Internet High. Educ. 2013, 16, 1–13.

- Blatchford, P.; Moriarty, V.; Edmonds, S.; Martin, C. Relationships between Class Size and Teaching: A Multimethod Analysis of English Infant Schools. Am. Educ. Res. J. 2002, 39, 101–132.

- OECD. Education at a Glance 2021: OECD Indicators; OECD Publishing: Paris, France, 2021.

- Kingma, B.; Keefe, S. An analysis of the virtual classroom: Does size matter? Do residencies make a difference? Should you hire that instructional designer? J. Educ. Libr. Inf. Sci. 2006, 47, 127–143.

- Choi-Lundberg, D.L.; Butler-Henderson, K.; Harman, K.; Crawford, J. A systematic review of digital innovations in technology-enhanced learning designs in higher education. Australas. J. Educ. Technol. 2023, 39, 133–162.

- Cao, S.; Juan, C.; ZuoChen, Z.; Liu, L. The effectiveness of VR environment on primary and secondary school students’ learning performance in science courses. Interact. Learn. Environ. 2024, 32, 7321–7337.

- Ran, H.; Kim, N.J.; Secada, W.G. A meta-analysis on the effects of technology’s functions and roles on students’ mathematics achievement in K-12 classrooms. J. Comput. Assist. Learn. 2022, 38, 258–284.

- Slater, M. Immersion and the illusion of presence in virtual reality. Br. J. Psychol. 2018, 109, 431–433.

- Chittaro, L.; Buttussi, F. Assessing knowledge retention of an immersive serious game vs. a traditional education method in aviation safety. IEEE Trans. Vis. Comput. Graph. 2015, 21, 529–538.

- Valentine, A.; Van Der Veen, T.; Kenworthy, P.; Hassan, G.M.; Guzzomi, A.L.; Khan, R.N.; Male, S.A. Using head mounted display virtual reality simulations in large engineering classes: Operating vs observing. Australas. J. Educ. Technol. 2021, 37, 119–136.

- Lee, E.A.-L.; Wong, K.W.; Fung, C.C. How does desktop virtual reality enhance learning outcomes? A structural equation modeling approach. Comput. Educ. 2010, 55, 1424–1442.

- Moro, C.; Štromberga, Z.; Stirling, A. Virtualisation devices for student learning: Comparison between desktop-based (Oculus Rift) and mobile-based (Gear VR) virtual reality in medical and health science education. Australas. J. Educ. Technol. 2017, 33.

- Yu, M.; Zhou, R.; Wang, H.; Zhao, W. An evaluation for VR glasses system user experience: The influence factors of interactive operation and motion sickness. Appl. Ergon. 2019, 74, 206–213.

- Händel, M.; Bedenlier, S.; Kopp, B.; Gläser-Zikuda, M.; Kammerl, R.; Ziegler, A. The webcam and student engagement in synchronous online learning: Visually or verbally? Educ. Inf. Technol. 2022, 27, 10405–10428.

- Maimaiti, G.; Chengyuan, J.; Hew, K.F. Student disengagement in web-based videoconferencing supported online learning: An activity theory perspective. Interact. Learn. Environ. 2023, 31, 4883–4902.

- Schwenck, C.M.; Pryor, J.D. Student perspectives on camera usage to engage and connect in foundational education classes: It’s time to turn your cameras on. Int. J. Educ. Res. Open 2021, 2, 100079.

- Williams, C.; Pica-Smith, C. Camera use in the online classroom: Students’ and educators’ perspectives. Eur. J. Teach. Educ. 2022, 4, 28–51.

- Marquart, M.; Russell, R. Dear Professors: Don’t Let Student Webcams Trick You. EDUCAUSE Review, 10 September 2020. Available online: https://er.educause.edu/blogs/2020/9/dear-professors-dont-let-student-webcams-trick-you (accessed on 6 May 2025).

- Chen, G.; Chen, P.; Huang, W.; Zhai, J. Continuance intention mechanism of middle school student users on online learning platform based on qualitative comparative analysis method. Math. Probl. Eng. 2022, 2022, 3215337.

- Alom, K.; Hasan, M.K.; Khan, S.A.; Reaz, M.T.; Saleh, M.A. The COVID-19 and online learning process in Bangladesh. Heliyon 2023, 9, e13912.

- Martin, F.; Parker, M.A. Use of synchronous virtual classrooms: Why, who, and how? J. Online Learn. Teach. 2014, 10, 192–210.

- Zhang, F.; Zhang, Y.; Li, G.; Luo, H. Using virtual reality interventions to promote social and emotional learning for children and adolescents: A systematic review and meta-analysis. Children 2024, 11, 41.

- Zhang, J.; Li, G.; Huang, Q.; Feng, Q.; Luo, H. Augmented reality in K–12 education: A systematic review and meta-analysis of the literature from 2000 to 2020. Sustainability 2022, 14, 9725.

- Reigeluth, C.M. (Ed.) Instructional-Design Theories and Models: A New Paradigm of Instructional Theory, Volume II; Routledge: New York, NY, USA, 1999; ISBN 978-1-4106-0378-4.

- Wang, F.; Hannafin, M.J. Design-Based Research and Technology-Enhanced Learning Environments. Educ. Technol. Res. Dev. 2005, 53, 5–23.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Reed, D.A.; Cook, D.A.; Beckman, T.J.; Levine, R.B.; Kern, D.E.; Wright, S.M. Association between Funding and Quality of Published Medical Education Research. JAMA 2007, 298, 1002–1009.

- Cook, D.A.; Reed, D.A. Appraising the Quality of Medical Education Research Methods: The Medical Education Research Study Quality Instrument and the Newcastle–Ottawa Scale-Education. Acad. Med. 2015, 90, 1067.

- Hedges, L.V.; Olkin, I. Estimation of a single effect size: Parametric and nonparametric methods (Chapter 5). In Statistical Methods for Meta-Analysis; Academic Press: San Diego, CA, USA, 1985; pp. 75–106.

- Higgins, J.P.T.; Thompson, S.G.; Deeks, J.J.; Altman, D.G. Measuring inconsistency in meta-analyses. BMJ 2003, 327, 557–560.

- Cohen, J. A power primer. Psychol. Bull. 1992, 112, 155–159.

- Borenstein, M.; Hedges, L.V.; Higgins, J.P.T.; Rothstein, H.R. A basic introduction to fixed-effect and random-effects models for meta-analysis. Res. Synth. Methods 2010, 1, 97–111.

- Ghanbarzadeh, R.; Ghapanchi, A.H. Investigating various application areas of three-dimensional virtual worlds for higher education. Br. J. Educ. Technol. 2018, 49, 370–384.

- Sun, L.; Zhen, G.; Hu, L. Educational games promote the development of students’ computational thinking: A meta-analytic review. Interact. Learn. Environ. 2023, 31, 3476–3490.

- Bagley, E.A.; Shaffer, D.W. Stop talking and type: Comparing virtual and face-to-face mentoring in an epistemic game. J. Comput. Assist. Learn. 2015, 31, 606–622.

- Riedl, R. On the stress potential of videoconferencing: Definition and root causes of Zoom fatigue. Electron. Mark. 2022, 32, 153–177.

- Rosenthal, R. The file drawer problem and tolerance for null results. Psychol. Bull. 1979, 86, 638–641.

- Fowler, C. Virtual reality and learning: Where is the pedagogy? Br. J. Educ. Technol. 2015, 46, 412–422.

- Shin, D.-H. The role of affordance in the experience of virtual reality learning: Technological and affective affordances in virtual reality. Telemat. Inform. 2017, 34, 1826–1836.

- Zhang, Y.; Luo, H.; Liu, Y.; Cheng, W. Is Metaverse Better than Video Conferencing in Promoting Social Presence and Learning Engagement? In Proceedings of the 2023 International Conference on Intelligent Education and Intelligent Research (IEIR), Wuhan, China, 5–7 November 2023; pp. 1–5.

- Weiner, B. An Attributional Theory of Achievement Motivation and Emotion. Psychol. Rev. 1985, 92, 548–573.

- Hu, Y.-H.; Yu, H.-Y.; Tzeng, J.-W.; Zhong, K.-C. Using an avatar-based digital collaboration platform to foster ethical education for university students. Comput. Educ. 2023, 196, 104728.

- Clark, R.E. Reconsidering Research on Learning from Media. Rev. Educ. Res. 1983, 53, 445–459.

- Dalgarno, B.; Lee, M.J.W. What are the learning affordances of 3-D virtual environments? Br. J. Educ. Technol. 2010, 41, 10–32.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).