Research on Linear Active Disturbance Rejection Control of Electrically Excited Motor for Vehicle Based on ADP Parameter Optimization

Abstract

1. Introduction

2. LADRC of EESM

2.1. Model Description of Electrically Excited Motor

2.2. Problem Statements

2.3. Proof of Stability

3. Adaptive Dynamic Programming

3.1. Problem Statements

3.2. ADP-Based LADRC Optimization Procedure

3.3. Algorithm Properties

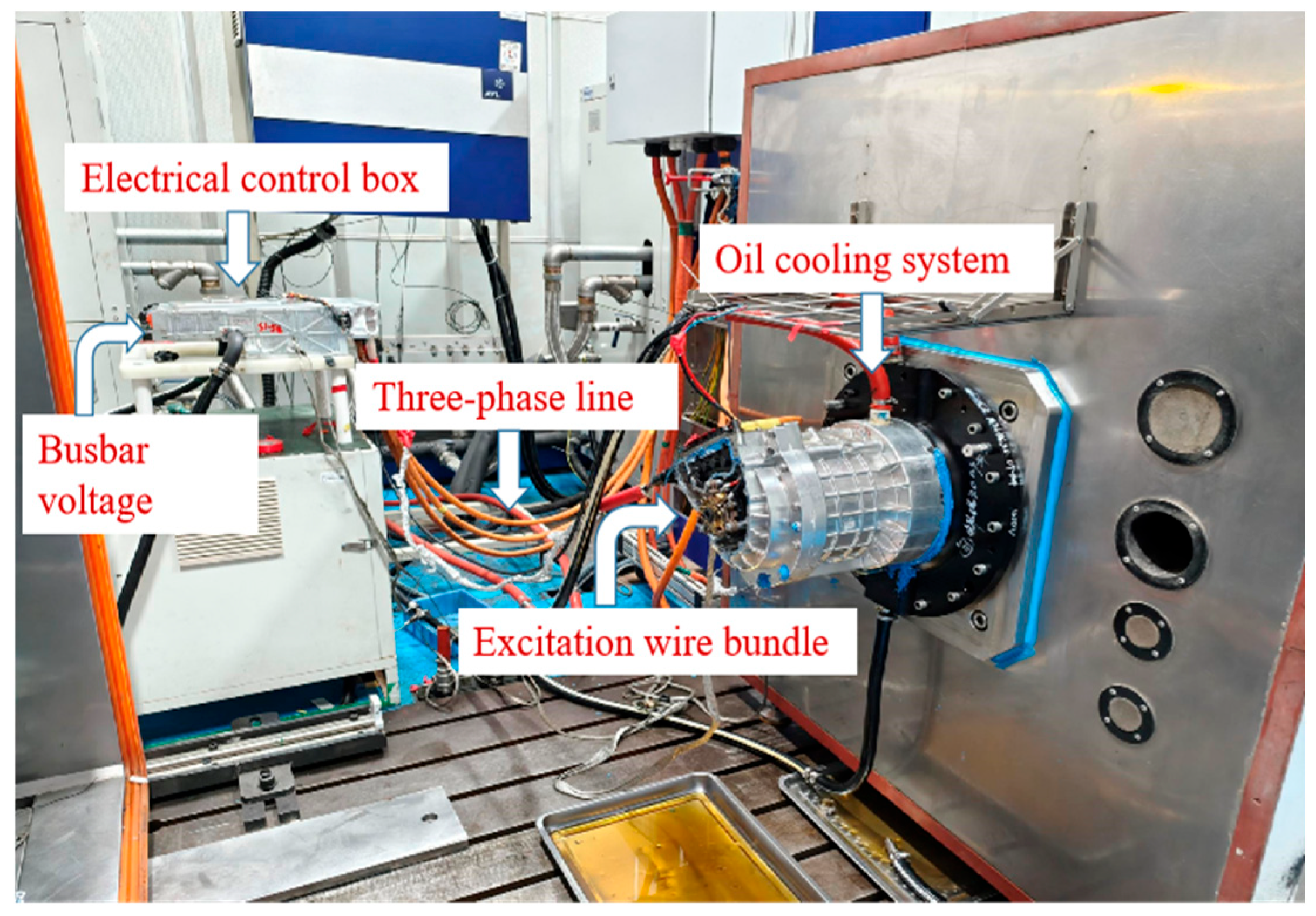

4. Experimental Results

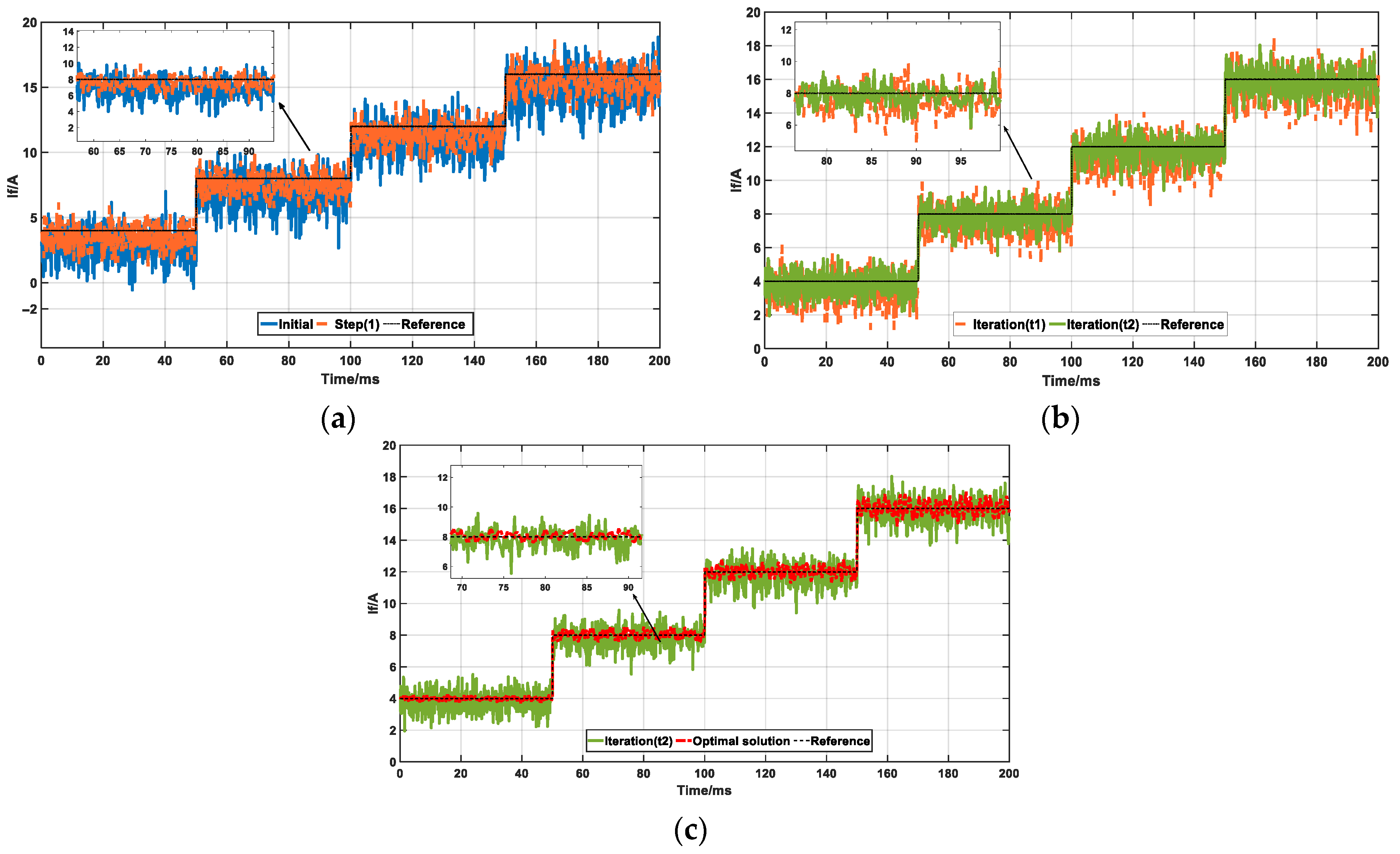

4.1. Initial Process

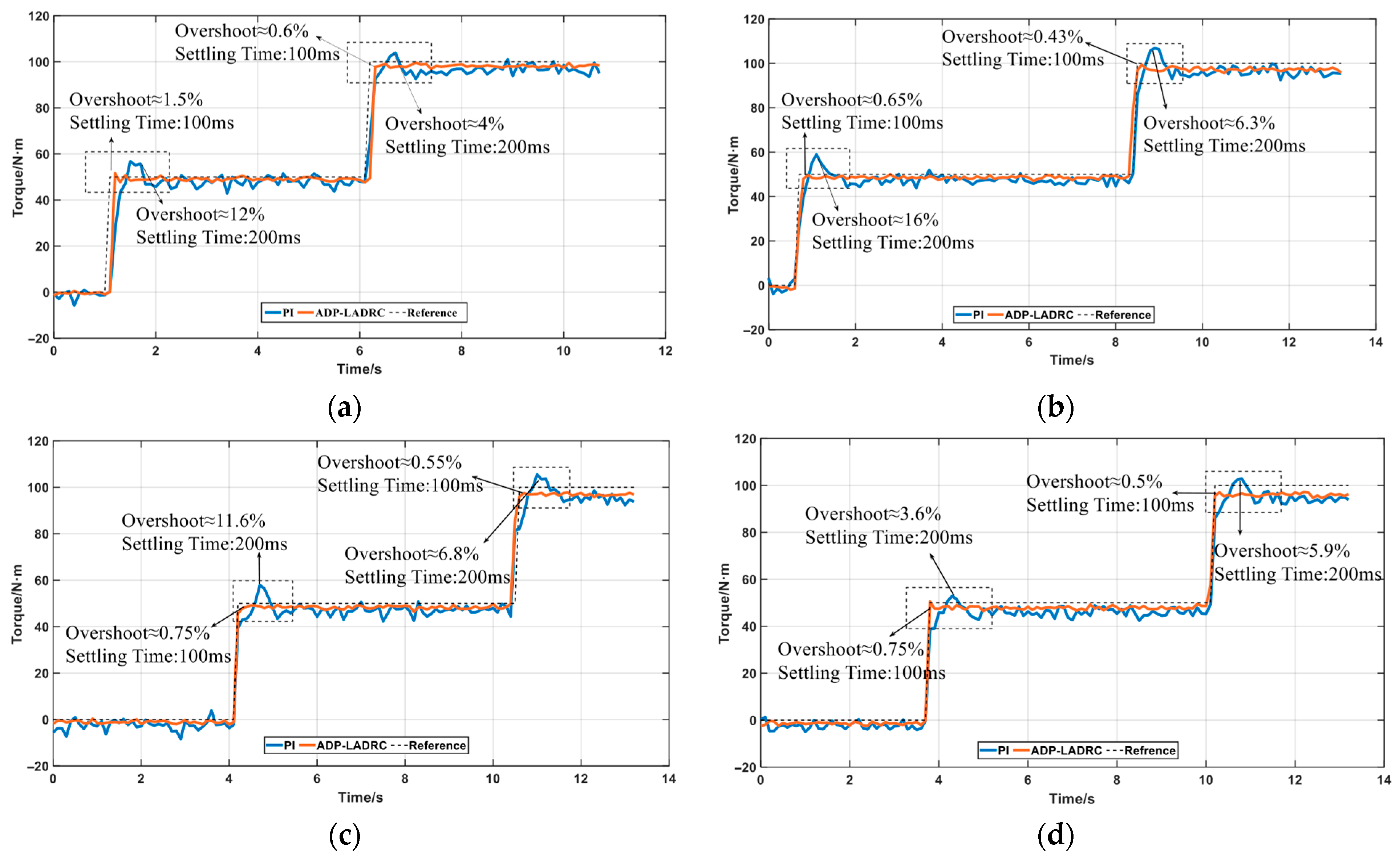

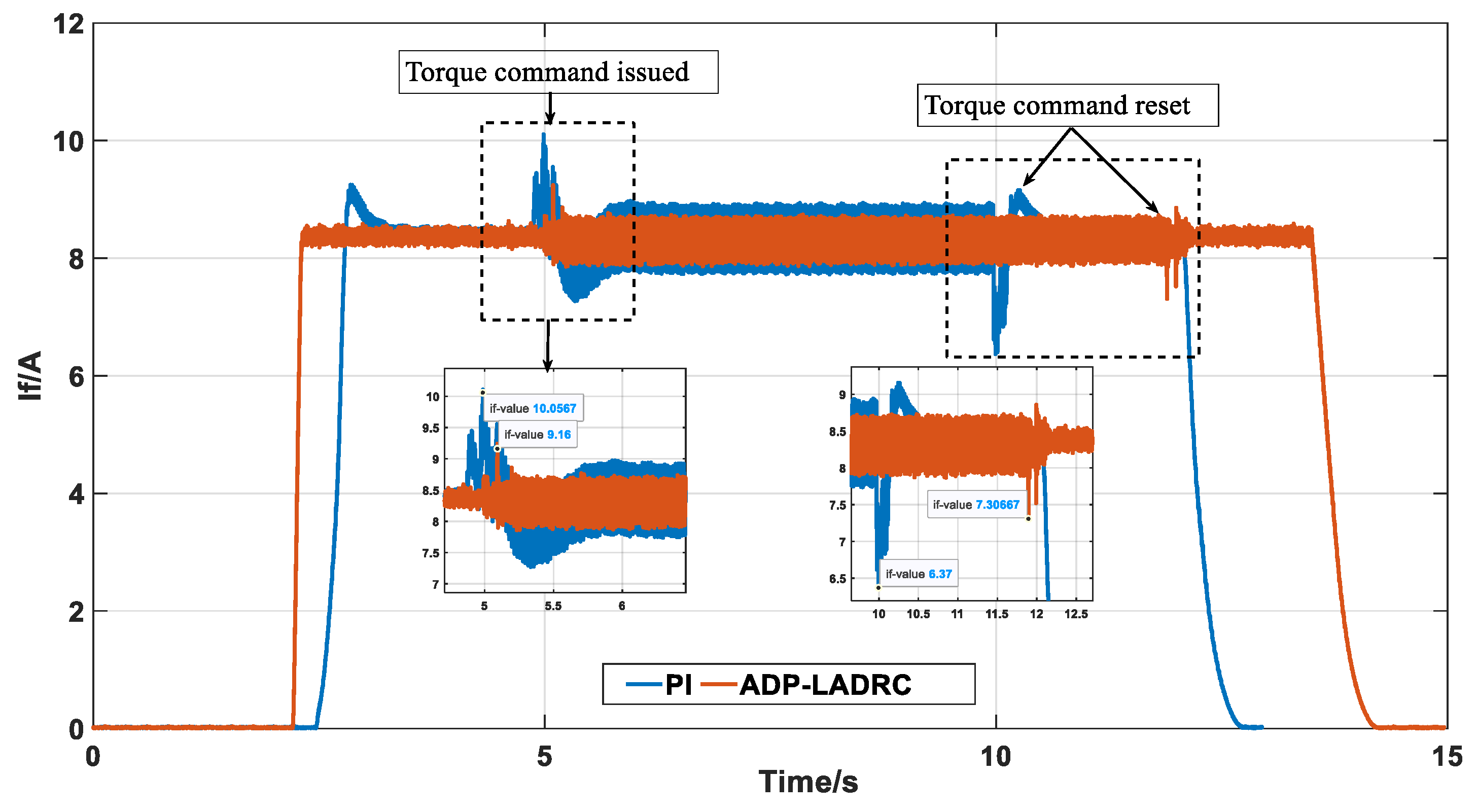

4.2. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Instantaneous Power Definition


| Parameter | Range | Accuracy |
|---|---|---|
| Torque | 0~500 N·m | 0.4% FS |
| Speed | 0~15,000 rpm | ±1 rpm |
| Busbar voltage | 15~1000 V | 0.5% FS |
| Phase current | 0~1500 A | 0.5% FS |
| Temperature | −40~150 °C | ±1 °C |

| Parameter | Value | Unit |
|---|---|---|
| Pole pairs | 4 | / |
| Rotor inductance | 2.5 | μH |
| Rated voltage | 400 | V |
| Rated power | 150 | kW |
| Rated current | 300 | A |
| Rated speed | 5000 | rpm |
| Peak torque | 200 | N·m |

| Process | Objective |
|---|---|
| 1. Motor position calibration | Obtain the initial electrical angle of the rotor of the electrically excited motor |
| 2. Coefficient calibration of phase current Hall sensor | Minimize the current sampling error |
| 3. Bus voltage sampling coefficient calibration | Minimize the sampling error of bus voltage |
| 4. Excitation current sampling coefficient calibration | Obtain the A/D conversion coefficient for the excitation current sampling |
| 5. Fault protection | Verify the effectiveness of the overcurrent and overvoltage fault protection functions |
| 6. Motor electrical angle delay time compensation | Obtain the angle delay time |
| and linkage Look-up table calibration | Obtain the offline table for current control loop |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ling, H.; Zhang, J.; Pan, H. Research on Linear Active Disturbance Rejection Control of Electrically Excited Motor for Vehicle Based on ADP Parameter Optimization. Actuators 2025, 14, 440. https://doi.org/10.3390/act14090440