Abstract

The objective was to propose a human–robot bidirectional trust-triggered cyber–physical–human (CPH) system framework for human–robot collaborative assembly in flexible manufacturing and to investigate the impact of modulating communications in the CPH system on system performance and human–robot interactions (HRIs). As the research method, we developed a one human–one robot hybrid cell in which a human and a robot collaborated to assemble different manufacturing components in a flexible manufacturing setup. We configured the human–robot collaborative system as three interconnected components of a CPH system: (i) the cyber system, (ii) the physical system, and (iii) the human system. We divided the functions of the CPH system into three interconnected modules: (i) communication, (ii) computing (computation), and (iii) control. We derived a model to compute the human’s and the robot’s bidirectional trust in each other in real time. We implemented the trust-triggered CPH framework on the human–robot collaborative assembly setup and, in three separate experiments, modulated the communication methods among the cyber, physical, and human components of the CPH system in different ways. The results show that modulating the trust-triggered communication methods affects the effectiveness of the CPH system differently in terms of human–robot interactions and task performance (efficiency and quality). The results also show that communication methods combining a larger number of appropriate communication modes (cues) produce better HRIs and task performance. Based on a comparative study, we concluded that the results support the efficacy and superiority of configuring the HRC system as a modular CPH system over using conventional HRC systems in terms of HRI and task performance. 
Configuring human–robot collaborative systems in the form of a CPH system can transform the design, development, analysis, and control of the systems and enhance their scope, ease, and effectiveness for various applications, such as industrial manufacturing, construction, transport and logistics, forestry, etc.

1. Introduction

1.1. CPH Systems in Assembly in Manufacturing

The literature shows increasing research interest in human–robot collaborations (HRCs) for assembly and disassembly in flexible manufacturing [1,2,3,4]. The literature also suggests that human–robot collaborative assembly can be performed within a cyber–physical–human (CPH) system framework, which offers several benefits; for example, the CPH framework can help maintain boundaries among the physical, cyber, and human subsystems of the HRC system and thus help modularize the overall HRC system for assembly in manufacturing [2,5,6,7]. Modularization through the CPH framework can make the system easier and more effective to design, develop, model, control, and maintain [8,9]. More specifically, modularization can clarify the computation used in the system and make it easier to determine optimal computational models, strategies, and methods [9]. In addition, modularization can make the control of the physical system more specific, appropriate, and accurate [9,10]. Modularization can also make cross-connections and purposeful communications among the cyber, physical, and human systems clearer and easier to implement [8].

1.2. CPH Systems Versus CPSs and CPSSs with Respect to Assembly in Manufacturing

The CPH framework is an advancement of the basic CPS (cyber–physical system) framework [9,11,12,13,14,15]. However, the CPS does not necessarily specify the role and responsibility of the human element in the system [14]. The CPH framework is also an advancement of the CPSS (cyber–physical–social system) [10], another variant of the CPS [9,11,12,13,14,15]. The CPSS connects the cyber and physical systems with the societal system, reflecting the social implications of the entire system [10]. However, this connection is coarse: it does not explicitly connect a human component of the system with the cyber and physical systems, even though the human may be part of the societal system [10]. The CPH framework appears better suited to implementing HRC in assembly in manufacturing because it considers the specific roles, benefits, responsibilities, contributions, and interactions of the human component with the cyber and physical systems, which can sharpen and substantially advance computation, control, communication, and interactions within the HRC system [5,6,7,8]. The CPH framework specifies how the human component can influence the computation, communication, and control of the HRC system in assembly operations in manufacturing. It also specifies the human-related factors that can influence HRC performance. The hallmarks of a successful CPH system include the following: (i) the cyber, physical, and human components and their boundaries are clearly identified and maintained; (ii) the scope and agenda of the three functional units, namely computation, communication, and control, are clearly defined; and (iii) each functional unit can be implemented and adjusted modularly, and the impact of such adjustments on system performance and usability can be clearly evaluated and investigated [5,6,7,8]. 
Conversely, failure to maintain these features may create obstacles that prevent CPH system frameworks from becoming practical and useful.

However, despite the considerable promise of the CPH framework for HRCs in assembly in manufacturing, the literature does not show substantial advancements or contributions of the framework in this area [8]. More specifically, sustained efforts toward making CPH system frameworks practical, easily implementable, and effective for advancing HRC assembly and disassembly operations have not been reported in the literature. As a preliminary effort, we began configuring the HRC assembly system as a CPH system [16]. However, the proposed CPH framework needs to be evaluated comprehensively to justify its effectiveness and practicality. In addition, the scope and boundaries of each element and functional module of the CPH system were not clearly defined, and the influence of adjusting one element or module on the others (i.e., the interrelationships and interdependencies among the modules) was not adequately investigated [16].

1.3. Human–Robot Bidirectional Trust as a Basis of the CPH System

Human trust is an important cue that can reveal a human’s mental state during the HRC process [17]. We believe that human trust can significantly affect the performance of the CPH framework because a human may refuse to work with the cyber and physical systems if the human cannot trust them. Similarly, the robot is an integral part of a CPH system for HRCs in assembly in manufacturing, and it would be beneficial to express the robot’s mental states through its trust in its human counterpart [18]. Human trust in robots is an active research topic [17]. However, robot trust in humans, especially in a CPH framework for HRCs in manufacturing, has not yet been studied adequately [18]. It therefore seems reasonable to incorporate a human–robot bidirectional trust model into the CPH framework for HRCs in assembly in manufacturing; such a model may serve as a basis for modulating the computation, communication, and control of the CPH framework, making each of these functions clearer and more transparent [19,20].

However, the design, development, and control of an HRC system within a CPH framework triggered by human–robot bidirectional trust have not yet been proposed in the literature, apart from some preliminary work [16]. That preliminary work focused mostly on modeling human–robot bidirectional trust and measuring it in real time. The control of the CPH framework triggered by bidirectional trust, as well as the evaluation of its performance under different computing, communication, control, and human-perception conditions, has not been adequately investigated [16]. The bidirectional trust approaches proposed in [19] and [20] also need to be verified and validated by implementing them in HRC assembly systems configured in the CPH framework.

1.4. Communications in the CPH System

Computation, communication, and control modules are equally important for a CPH system [8,16]. The literature shows various efforts toward improving the control of HRC systems, though these investigations were not modular in nature [9,21]. Modular investigations into advancing computing and communications among different CPH system components, however, have not been adequately reported in the literature. Communications among the CPH system components are crucial for the effective functioning of the CPH system [22,23], and the effectiveness of computing and control also depends on successful communications [16]. Studies on communications in human–robot systems are growing [22,23,24,25,26,27,28,29,30]. Efforts to improve communications among system components have also been reported for other intelligent systems, such as integrating spoken instructions into flight trajectory prediction to optimize automation in air traffic control [31] and swarm systems [32]. However, investigations of communication between agents in state-of-the-art HRC systems focus merely on component-level communication strategies and methods [22,23,24,25,26,27,28,29,30]. System-level, synergistic investigations of communications among the cyber, physical, and human components for different functional modules in the CPH framework, aimed at understanding their impact on the effectiveness of the other functional units and on the human–robot interactions and task performance of the CPH system, have not been reported in the literature, particularly for CPH systems employed in collaborative assembly in manufacturing, except for our recent preliminary investigations [33]. Nonetheless, there remains scope and need to expand the evaluation protocols and results for modulating communications presented in [33]. 
Furthermore, new communication methods remain to be explored for advancing and optimizing the role of communications in the effectiveness of the CPH system.

1.5. Research Questions

Based on the above background and motivation, we identified research questions that could clarify the efficacy of configuring an HRC system as a CPH system for collaborative assembly in manufacturing and of evaluating the communication module separately. The research questions (RQs) are as follows:

- RQ 1: How can we effectively configure an HRC system as a CPH system for human–robot collaborative assembly in manufacturing?

- RQ 2: How can the modularity of the CPH configuration of an HRC system be used to investigate the impact of adjusting each module on overall HRC performance and human–robot interactions? More specifically, how can we modularly adjust communications in the CPH system, and how do such adjustments affect system performance and HRI?

1.6. Research Objectives

To address the gaps in the literature explained above and answer the research questions, in this paper we present a model of an HRC assembly task in manufacturing in the form of a CPH framework triggered by human–robot bidirectional trust. The proposed CPH framework was implemented on an actual HRC setup for assembly in manufacturing. We experimentally evaluated how communication complexities, strategies, and methods among the cyber, physical, and human components of the CPH framework affect the overall performance and interactional effectiveness of the CPH system. The findings may transform the design, development, analysis, and control of human–robot collaborative systems and enhance the scope, ease, and effectiveness of such systems for various applications, such as manufacturing, construction, transport and logistics, forestry, etc.

1.7. Organization of the Paper

The rest of the paper is organized as follows: Section 2 discusses the theoretical aspects of the CPH framework; Section 3 introduces the physical human–robot collaborative system that we developed in the form of a CPH framework for the experiments; Section 4 presents an innovative human–robot bidirectional trust computational model; Section 5 presents the human–robot bidirectional trust-triggered CPH framework; Section 6 presents the experiment design that defines and modulates different communication approaches and strategies among the cyber, physical, and human systems, as well as the evaluation scheme and the human subjects used to evaluate the CPH framework experimentally; Section 7 presents the experimental results and analyses, including the test of the hypothesis related to the trust-triggered CPH framework; Section 8 presents a detailed discussion of the results and limitations of the proposed methods; and Section 9 presents the conclusions and future research directions. The acknowledgements, ethical standards, and references follow.

2. Theories and Concepts of the CPH Framework

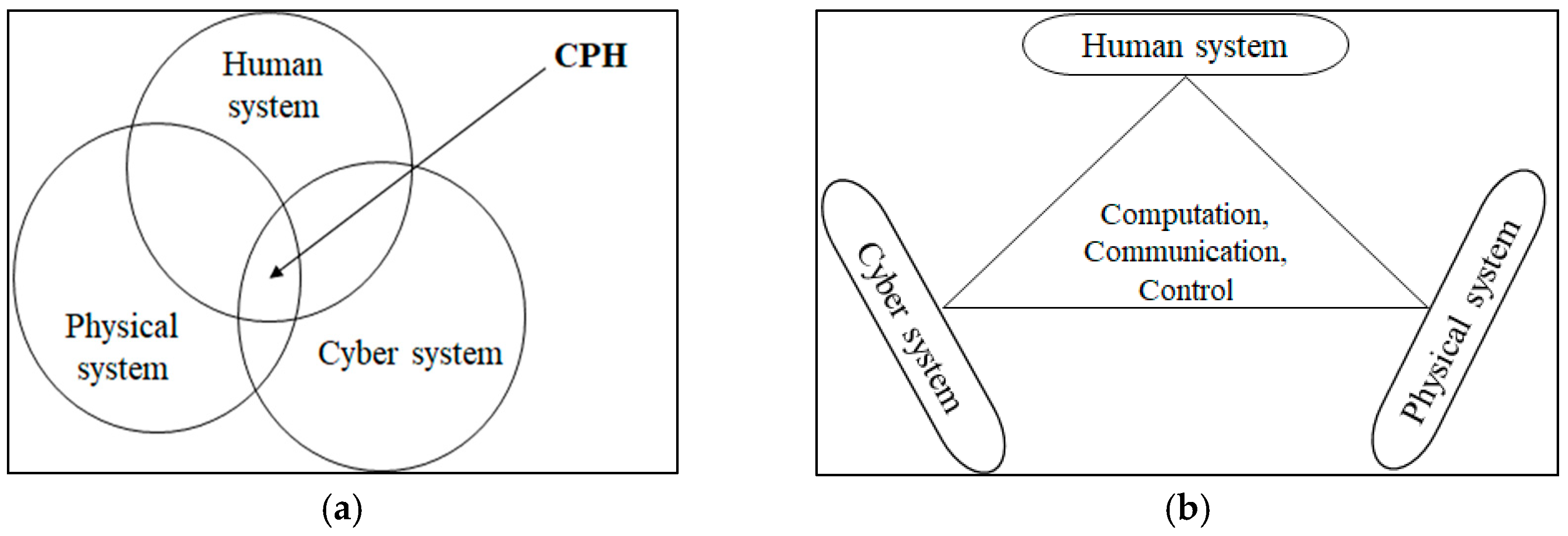

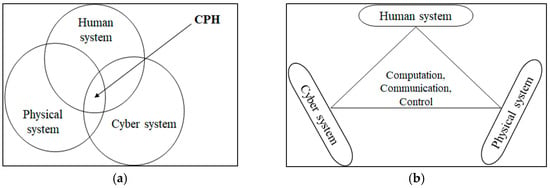

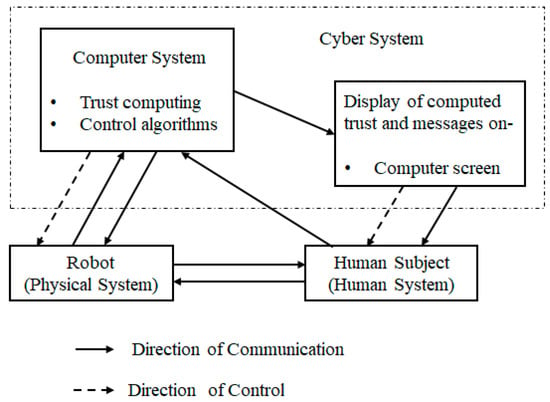

As Figure 1a shows, a CPH framework lies at the intersection of three system components: the cyber system, the physical system, and the human system [8]. For the proposed CPH framework for HRC in assembly in manufacturing, the physical system may consist of the physical robot, including its hardware components, sensors, and physical accessories, as well as the assembly components and assembled products [8]. The cyber system may consist of the computer, the software packages installed on it, control algorithms, programming code, sensor data, and any other data stored in the computer [8,9]. The human system may consist of the human co-workers (subjects) collaborating with the robot on the assembly task, the physical environment in which the human works, and other humans with whom the human interacts during the task, such as the task supervisor, inspector, or work aide [8]. The roles and contributions of these components need not be the same; they vary with the objectives of the collaborative tasks. The autonomy level of each component may also vary with the nature and objectives of the tasks [8,9,10].

Figure 1.

The theoretical framework of a CPH system: (a) the configuration and different components of the CPH framework (cyber, physical, and human systems); (b) different functional modules of the CPH framework (computation, communication, and control) performed within and between different CPH framework components.

As Figure 1b shows, the CPH framework needs to perform computation, communication, and control within the cyber, physical, and human systems to function properly [8,16]. Communications occur along three directions: (i) between the cyber system and the physical system, (ii) between the cyber system and the human system, and (iii) between the physical system and the human system [8,9,16]. Not all of these communications are required in a CPH framework [8]; however, a full-spectrum CPH framework should maintain communications in all three directions [8]. The communications may take the form of electronic signal flows, visual cues, auditory or verbal cues, haptic cues, nonverbal cues, etc. [16]. The control function refers to the cyber system’s application of control algorithms to govern the behaviors of the physical system [16]. The cyber system may also influence the behaviors of the human system through messages and sounds conveyed to the human via visual and auditory mechanisms [16]. The human system may also control the behaviors of the physical system, and vice versa, in different ways [16]. Computation is usually carried out in the cyber system and includes the processing required by the algorithms, the data sets captured by sensors, and the various computational models [8]. A CPH system needs to maintain the bilateral dependencies and relationships among the physical, cyber, and human systems in terms of computation, communication, and control [8,16].
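The component and communication structure described above can be captured in a small data model. The following Python sketch is illustrative only: the enum names and the helpers `is_full_spectrum` and `missing_directions` are hypothetical and are not taken from the framework’s implementation. It encodes the three components, the three functional modules, and the three bilateral communication directions, and checks whether a given set of active links forms a full-spectrum CPH framework.

```python
from enum import Enum
from itertools import combinations


class Component(Enum):
    CYBER = "cyber"
    PHYSICAL = "physical"
    HUMAN = "human"


class Module(Enum):
    COMPUTATION = "computation"
    COMMUNICATION = "communication"
    CONTROL = "control"


# The three bilateral communication directions: cyber-physical,
# cyber-human, and physical-human (unordered pairs, since links are two-way).
DIRECTIONS = [frozenset(pair) for pair in combinations(Component, 2)]


def _as_directions(active_links):
    """Normalize (Component, Component) tuples to unordered pairs."""
    return {frozenset(link) for link in active_links}


def is_full_spectrum(active_links):
    """True if communication is active in all three directions."""
    active = _as_directions(active_links)
    return all(d in active for d in DIRECTIONS)


def missing_directions(active_links):
    """Directions with no active link, reported as sorted name pairs."""
    active = _as_directions(active_links)
    return [tuple(sorted(c.value for c in d)) for d in DIRECTIONS if d not in active]
```

For example, a setup with only cyber–physical and cyber–human links is a valid but partial CPH configuration; `missing_directions` reports the absent physical–human link.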

3. Development of the Experimental Human–Robot Collaborative System

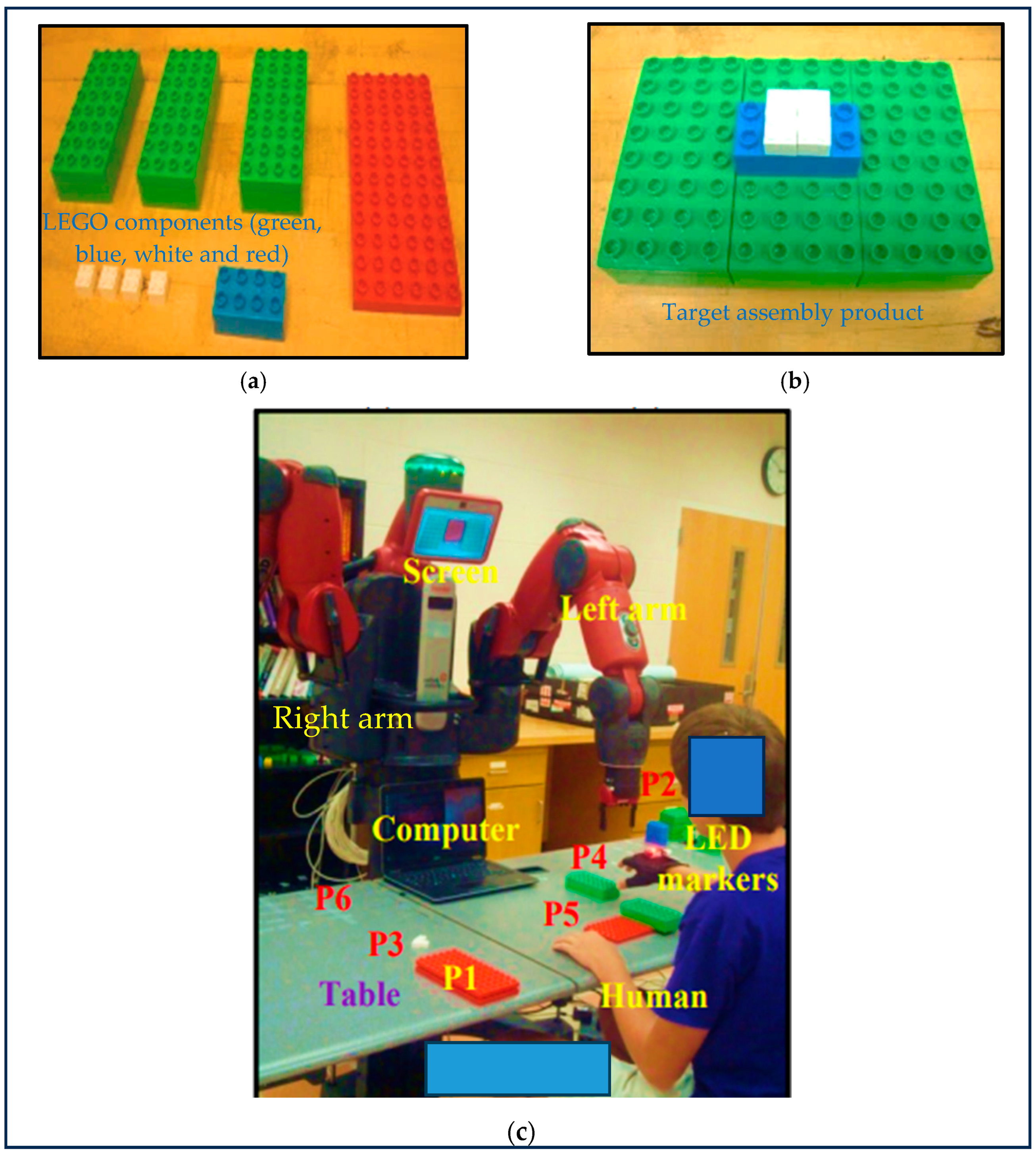

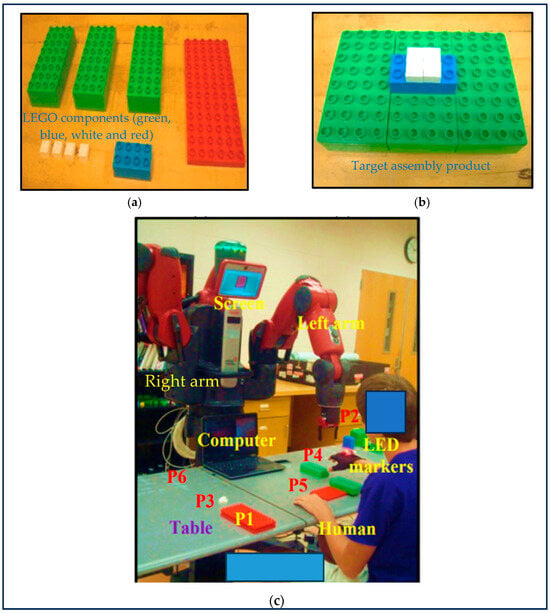

We developed a human–robot hybrid cell [34,35] in which a human co-worker (a human subject) and a Baxter robot collaborated to assemble different LEGO blocks, as Figure 2 shows [16]. The human and the robot assembled the LEGO blocks into a final product in a predetermined sequence under a pre-optimized task allocation scheme [21]. The task sequence was determined from a work measurement study so that the total number of tasks needed to complete the product was minimized [21]. The assembly task was divided into subtasks, and each subtask was assigned to either the human or the robot following an optimization scheme [35]. Table 1 shows the subtask allocation between the human (H) and the robot (R) for the collaborative assembly task.

Figure 2.

(a) LEGO components (parts), (b) the target product to be produced by the human and the robot in collaboration by assembling the LEGO components (the red component is not visible because it is covered by the green components), and (c) the human–robot hybrid cell for performing the assembly of the product. The human wore an LED glove to capture hand movements during the assembly process, which were needed for real-time trust computation [16,21].

Table 1.

Subtasks allocated to the human and robot in sequence for the assembly of the final product [16,21].

The procedure by which the human and the robot collaborated to produce a finished product is described below with reference to Figure 2c [16,21]. Initially, the red components were placed at location P1 on the table, the blue and green components at P2, and the remaining (white) components at P3. In the hybrid cell, the human could not reach P2, but the robot’s left arm could. To produce one finished product, one red component (from P1), three green components (from P2), one blue component (from P2), and four white components (from P3) had to be transferred to P4 and assembled at P5, after which the assembled product was moved to P6, following the specified sequence and subtask allocation according to the instructions displayed on the robot’s head screen or on the computer screen [21]. Human trust in the robot and robot trust in the human were computed in real time and displayed on the robot’s head screen or on the computer monitor [16].
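The part counts and the reachability constraint above can be checked mechanically. The sketch below encodes the per-product bill of materials and the starting locations stated in the text; the helper names are hypothetical, and it assumes, for illustration only, that the human can reach P1 and P3 (the text states only that the human cannot reach P2). The actual subtask allocation is given in Table 1.

```python
from collections import Counter

# Parts needed for one finished product and their starting locations (from the text).
REQUIRED = Counter({"red": 1, "green": 3, "blue": 1, "white": 4})
START_LOCATION = {"red": "P1", "green": "P2", "blue": "P2", "white": "P3"}

# Assumption for illustration: the human can reach every pick location except P2.
HUMAN_REACHABLE = {"P1", "P3"}


def parts_only_robot_can_pick(human_reachable):
    """Colors whose starting location lies outside the human's reach."""
    return sorted(c for c, loc in START_LOCATION.items() if loc not in human_reachable)


def is_complete(kit):
    """True if a kit of parts (list or Counter of colors) matches one product."""
    return Counter(kit) == REQUIRED
```

Under this assumption, every blue and green part must be fetched by the robot’s left arm, and each product consumes nine parts in total.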

The collaborative assembly task shown in Figure 2 may not resemble the tasks observed on industrial shop floors [36]. However, this simple task was used as a proof-of-concept assembly task for the proposed CPH framework, with the expectation that the results would generalize to real-world human–robot collaborative assembly tasks in manufacturing.

4. Computing Human–Robot Bidirectional Trust and the Visual Interface of Trust

Modeling trust, and bidirectional trust in particular, is challenging. A plethora of trust models have been proposed in the literature [37,38,39,40,41,42,43,44,45,46,47,48]. Some models are deterministic, while others are probabilistic or stochastic [38]. Almost all models parameterize human performance or capabilities in different ways, and a few parameterize both human performance or capabilities and human weaknesses or inabilities [38,49]. We believe that human trust in the robot should correlate with both the robot’s performance or capabilities and its limitations or inabilities [16,33,38,49]. In contrast, it remains unclear whether robot trust in the human should be modeled in the same way as human trust in the robot, a question whose answer could pave the way to modeling dynamic human–robot bidirectional trust [19,20]. Most importantly, state-of-the-art trust models have yet to be used as a basis for control, computation, or communication in CPH systems because their real-time implementation has not yet been properly verified and validated.

We adopted a deterministic human–robot bidirectional trust model that parameterizes performance or capabilities as well as weaknesses or inabilities (faults) [16,33,38,49]. We denote the computed human trust in the robot as TH2R and the robot trust in the human as TR2H for the HRC task shown in Figure 2c. Following Lee and Moray [49], a time-series model can be derived to compute TH2R and TR2H using Equations (1) and (2), respectively. The model inputs are the performance of the robot and the faults made by the robot (for TH2R) and the performance of the human and the faults made by the human (for TR2H) during the HRC task. The model contains coefficients with real constant values, which can be affected by the nature of the HRC task, human behaviors, and robot characteristics [50]. Here, k denotes the time step (in seconds), and the computed trust values are updated at every k.

The trust model formed by Equations (1) and (2) together is termed “bidirectional trust”, meaning that the human and the robot each hold trust in the other, which can be influenced by the other’s performance and faults during the collaborative assembly task [19,20,21]. Each computed trust value (TH2R or TR2H) ranges between 0 and 1 because we exclude mistrust, distrust, and overtrust to keep the trust computation simple [21]. For the same reason, we ignore human and robot memories, system noise, and perturbations [16]. Moreover, the TH2R and TR2H values may not reflect the natural trust of the human and of the robot, respectively; rather, they are computed values of the artificial trust that the human and the robot develop while working together on the HRC task [16,21].
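Because Equations (1) and (2) are not reproduced here, the following is only a minimal sketch of a time-series trust update in the spirit of Lee and Moray’s model, assuming a first-order autoregressive form with current and previous performance and fault terms, clipped to [0, 1] as stated above. The class name, coefficient names, and numeric values are hypothetical; the paper’s coefficients were determined experimentally.

```python
from dataclasses import dataclass


@dataclass
class TrustModel:
    """Hypothetical first-order time-series trust update.

    T(k) = a*T(k-1) + b1*P(k) + b2*P(k-1) + c1*F(k) + c2*F(k-1),
    clipped to [0, 1] because mistrust, distrust, and overtrust are excluded.
    P is the observed agent's performance and F its faults at step k;
    the fault coefficients are normally negative so that faults lower trust.
    """
    a: float
    b1: float
    b2: float
    c1: float
    c2: float

    def step(self, t_prev, p_now, p_prev, f_now, f_prev):
        t = (self.a * t_prev + self.b1 * p_now + self.b2 * p_prev
             + self.c1 * f_now + self.c2 * f_prev)
        return min(1.0, max(0.0, t))


def trust_series(model, t0, performance, faults):
    """Run the update over aligned per-step performance and fault sequences."""
    trust = [t0]
    for k in range(1, len(performance)):
        trust.append(model.step(trust[-1], performance[k], performance[k - 1],
                                faults[k], faults[k - 1]))
    return trust


# Hypothetical coefficients and inputs for TH2R (robot performance and faults).
h2r_model = TrustModel(a=0.5, b1=0.3, b2=0.1, c1=-0.2, c2=-0.1)
robot_perf = [1.0, 1.0, 1.0]
robot_faults = [0, 0, 1]  # one robot fault at k = 2
th2r = trust_series(h2r_model, 0.5, robot_perf, robot_faults)  # approx. [0.5, 0.65, 0.525]
```

Feeding a second instance (with its own coefficients) the human’s performance and faults instead would yield TR2H; the two series together form the bidirectional pair.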

The performance and fault terms can be further modeled to facilitate the computation of bidirectional trust in Equations (1) and (2). The human’s and the robot’s motions (speeds) during the collaboration were taken as their performance (efficiency), and mistakes or errors made by the human and the robot during the assembly task were taken as their faults. For example, failure of the robot’s end-effector to grip a component, or placement of a component with an incorrect orientation (e.g., tilted) during manipulation, counts as a robot fault. Similarly, a human fault occurs when the human assembles a wrong component or attaches a correct component at an incorrect location (slot). We determined the values of the model coefficients experimentally and measured the performance and faults of the human and the robot in real time using human hand motion capture and computer vision methods, and then computed human–robot bidirectional trust using Equations (1) and (2) for the proposed assembly task [16,21].
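As a sketch of how the performance and fault inputs could be derived from raw measurements, the following assumes that performance is a mean speed computed from sampled 3D hand (or end-effector) positions and normalized against an assumed maximum speed, and that faults are counts of labeled events per time step. The fault labels, the normalization, and the function names are hypothetical; the text does not specify how the measurements were scaled.

```python
import math

# Hypothetical fault labels for the examples given in the text.
ROBOT_FAULTS = {"grip_failure", "tilted_placement"}
HUMAN_FAULTS = {"wrong_component", "wrong_slot"}


def mean_speed(positions, dt):
    """Mean speed (m/s) along a path of 3D positions sampled every dt seconds."""
    if len(positions) < 2:
        return 0.0
    path = sum(math.dist(p, q) for p, q in zip(positions, positions[1:]))
    return path / (dt * (len(positions) - 1))


def normalized_performance(speed, max_speed):
    """Scale a speed to [0, 1] relative to an assumed maximum speed."""
    return min(1.0, max(0.0, speed / max_speed))


def fault_count(events, fault_labels):
    """Count the events in one time step that are labeled as faults."""
    return sum(1 for event in events if event in fault_labels)
```

Normalizing speed to [0, 1] keeps the performance input on the same scale as the bounded trust values, which is one simple design choice among several.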

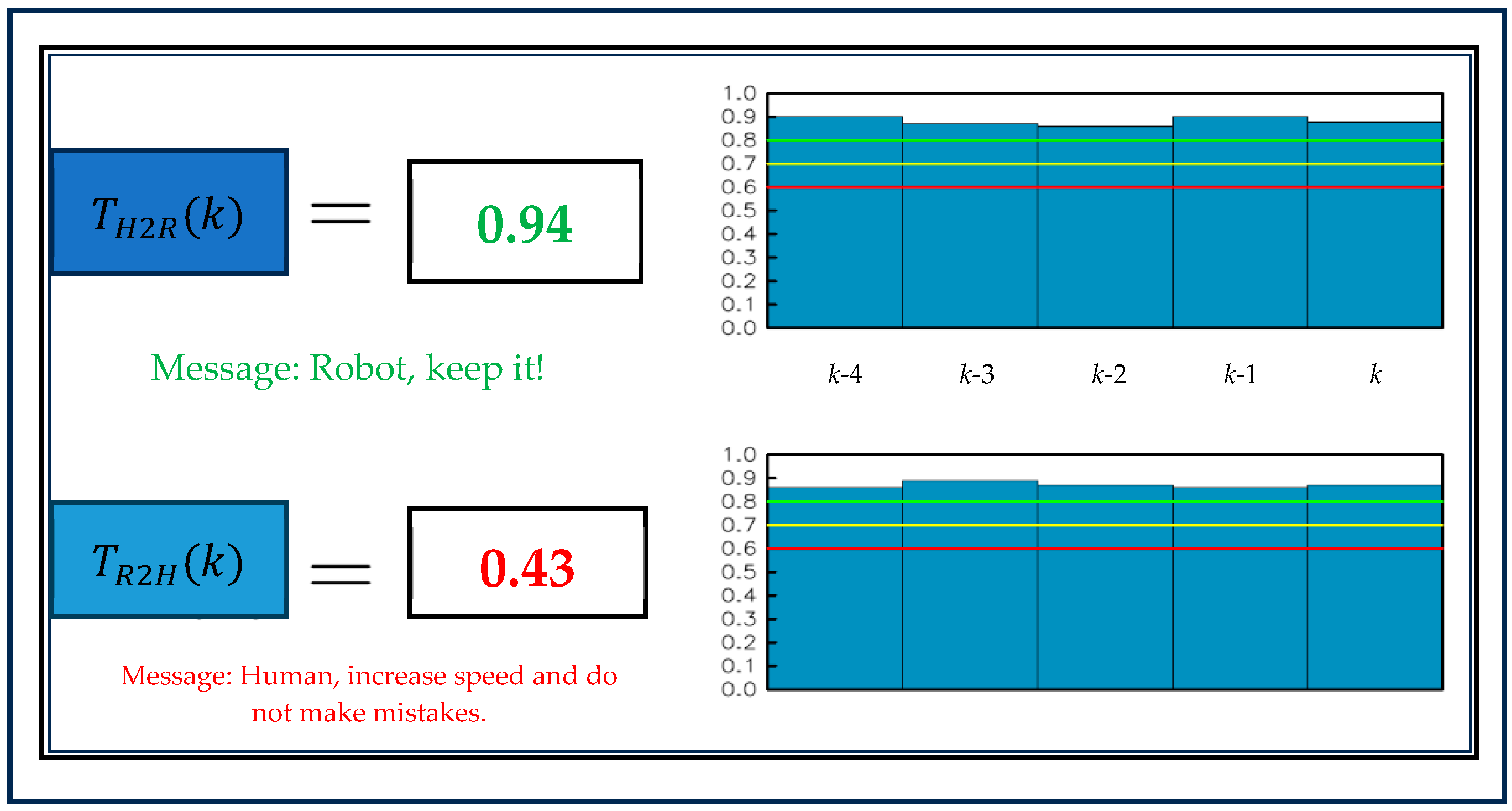

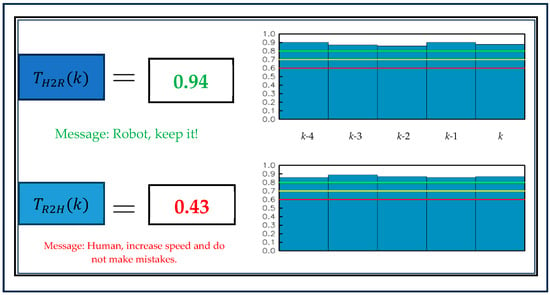

The computed bidirectional trust values (TH2R and TR2H) were communicated to the human by displaying them on the screen attached to the robot’s head or on the computer screen used to control the robot, which was placed in front of the human [16]. Figure 3 shows the layout of the trust display screen. The computed trust values were updated at every time step k, and the screen displayed the values for the five most recent consecutive time steps, showing the trend of the computed trust. Colored lines (red, yellow, green) corresponding to different ranges of computed trust served as baselines (thresholds) for generating warning messages, through which the computer (cyber) system informed the human of the trust status and helped the human take the actions necessary to maintain the robot’s trust in the human at a desired level [16].
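The display logic above (a rolling window of recent values plus threshold bands that select a warning) can be sketched as follows. The numeric band boundaries and the message wording are hypothetical, since the text names the red, yellow, and green lines but not their values; the class and function names are also illustrative.

```python
from collections import deque

# Hypothetical band boundaries: the text names red, yellow, and green
# threshold lines but does not give their numeric values.
RED_BELOW = 0.4
YELLOW_BELOW = 0.7

# Hypothetical wording for one warning message per band.
WARNINGS = {
    "red": "Robot trust in you is LOW: reduce assembly faults.",
    "yellow": "Robot trust in you is MODERATE: keep pace and check each slot.",
    "green": "Robot trust in you is HIGH.",
}


def trust_band(value):
    """Classify a trust value into the red, yellow, or green band."""
    if value < RED_BELOW:
        return "red"
    if value < YELLOW_BELOW:
        return "yellow"
    return "green"


class TrustDisplay:
    """Keeps the most recent trust values (five by default) for the trend plot."""

    def __init__(self, window=5):
        self.history = deque(maxlen=window)

    def update(self, r2h):
        """Record a new robot-to-human trust value; return (trend, warning)."""
        self.history.append(r2h)
        return list(self.history), WARNINGS[trust_band(r2h)]
```

A `deque` with `maxlen` discards the oldest value automatically, which matches the five-step trend window without any manual bookkeeping.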

Figure 3.

The typical layout of the (computer) screen for communicating computed bidirectional trust values to the human at different time steps displaying them on the screen. Trust values were updated at every time step, k [16,21].

5. The Trust-Triggered CPH Framework

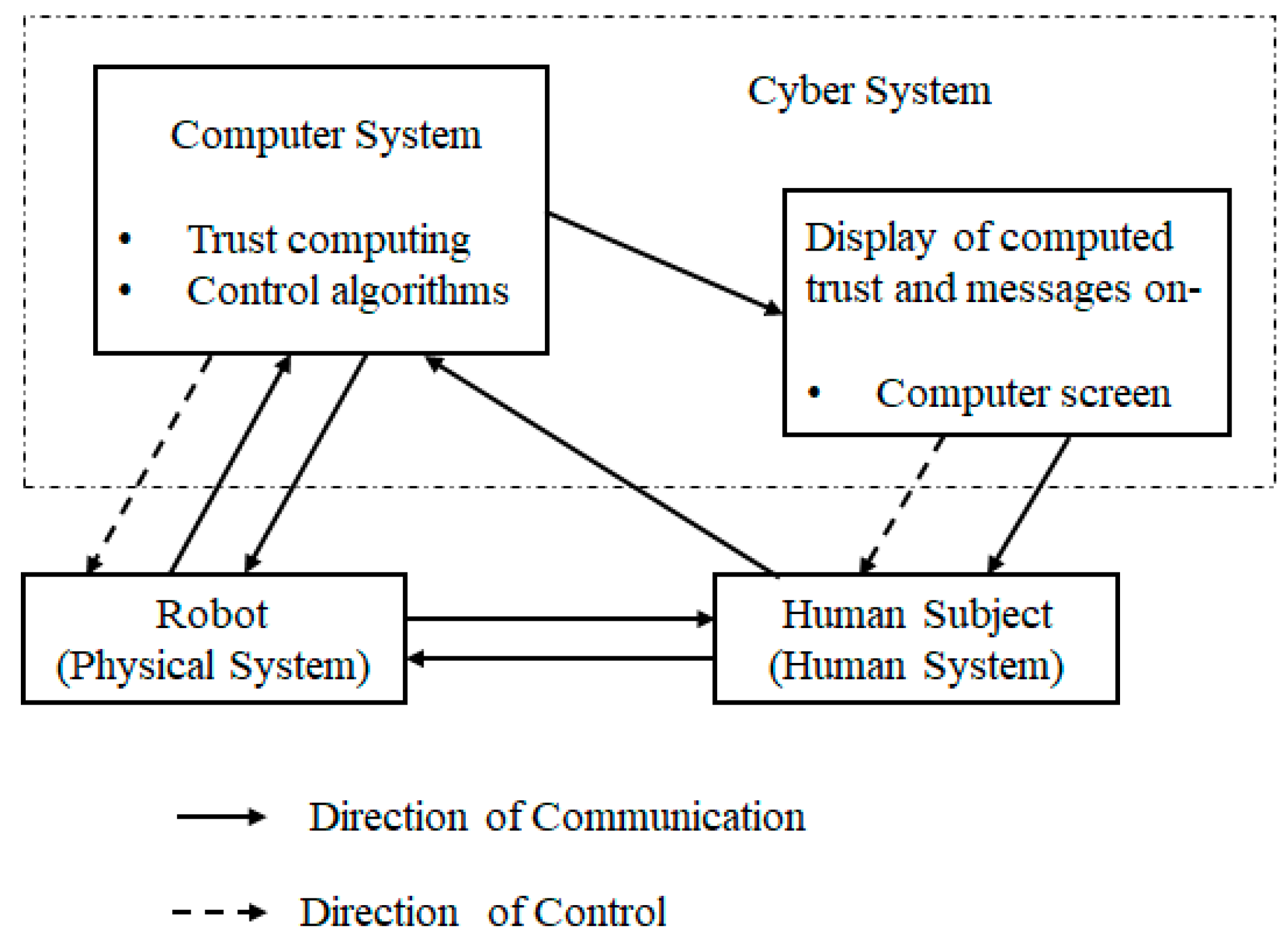

When two agents collaborate on an HRC task, the first agent’s behaviors as experienced by the second agent can influence the second agent’s trust in the first agent, and vice versa [17,18,19]. Taking this into account, and inspired by the theoretical aspects of the CPH framework shown in Figure 1, we propose the trust-triggered CPH framework for HRC in assembly in manufacturing shown in Figure 4. As Figure 4 shows, the human subject of the human system visually observed (visual communication) the robot’s behaviors (its motions and any faults or mistakes made during its motions) in the HRC task, which developed and changed the human’s trust in the robot [17,18,19]. The robot’s behavioral information was captured by the robot’s sensor system (physical system) and communicated to the computer system (cyber system), where the human’s trust in the robot was computed in real time using the computational model presented earlier. Similarly, the robot observed human behaviors (human motions and faults) via sensors (e.g., vision and motion sensors). These data were communicated to the computer system, where the robot’s trust in the human was computed using the proposed model [21]. Both trust values were updated at every k, and the cyber system displayed them on the computer and robot head screens, as illustrated in Figure 3 [16,21].

Figure 4.

The proposed trust-triggered CPH framework for HRCs in assembly in manufacturing [16,21].

Based on the computed human trust in the robot, the cyber system controlled the robot’s behaviors by applying a motion control algorithm [16]: the robot’s speed was increased to improve its performance, and the end-effector’s opening and closing movements were made more accurate by applying a PID position control algorithm to reduce the robot’s faults, both aimed at increasing the human’s trust in the robot [17,18,19]. The updated human trust in the robot was displayed on the screens, as was the robot’s trust in the human. If the robot’s trust in the human fell below prespecified thresholds, the cyber system also produced sound cues and displayed messages on the screens to make the human aware of it. The status of the robot’s trust in the human could influence the human’s mind and actions, so the human might change his/her behavior (increase task performance (speed) and reduce faults) to regain the robot’s trust [18]. In this way, the cyber system influenced the human’s behavior psychologically. Such changes in human behavior were also captured by the robot’s sensors and communicated to the cyber system, which computed the updated robot trust in the human and displayed it again on the screens [16].
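The two control actions above can be sketched as a trust-triggered speed rule plus a textbook discrete PID loop for the gripper position. This is a minimal sketch under stated assumptions: the threshold, speed scales, PID gains, and the toy plant (finger position integrates the control command) are all hypothetical, and the sketch does not reproduce the motion control algorithm of [16].

```python
class PID:
    """Textbook discrete PID controller (gains used below are hypothetical)."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measured):
        error = setpoint - measured
        self.integral += error * self.dt
        # No derivative kick on the first call: there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def speed_scale(h2r, threshold=0.6, normal=0.5, boosted=0.8, max_scale=1.0):
    """Hypothetical trust trigger: raise the robot's speed scale when TH2R is low."""
    scale = boosted if h2r < threshold else normal
    return min(scale, max_scale)  # never exceed the allowed maximum


def simulate_gripper(target, steps=400, dt=0.1):
    """Toy plant: the finger position integrates the PID command each step."""
    pid = PID(kp=2.0, ki=0.5, kd=0.01, dt=dt)
    position = 0.0
    for _ in range(steps):
        position += pid.update(target, position) * dt
    return position
```

With these gains the simulated position settles on the target; in a real robot, the speed scale would also be bounded by the safety logic used in Experiment 2.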

Thus, bilateral dependencies and relationships existed among the physical, cyber, and human systems in terms of computation, communication, and control, triggered by the computed bidirectional trust in the HRC assembly system; together, these constitute the trust-triggered CPH framework shown in Figure 4 [8].

6. Experiments

6.1. Subjects

We conducted a power analysis (continuous endpoint, two independent samples, alpha = 0.05, beta = 0.1, i.e., power = 0.90), which yielded a sample size of 30. Accordingly, we recruited 30 human subjects (psychology, human factors, and engineering students and researchers), of whom 27 were male and 3 were female. The mean self-reported age was 24.36 years (STD …). As part of the ethical considerations, we obtained written consent from each subject for his/her participation in the experiments. This study was approved by the IRB, and we followed the ethical guidelines regarding human subjects (e.g., privacy, treating them with respect) as per the IRB certification. The subjects received brief training and instructions on the experimental procedures.
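For reference, the standard normal-approximation formula for the per-group sample size of a two-sided comparison of two independent means is n = 2(z₁₋α/₂ + z₁₋β)² / d², where d is the standardized effect size (Cohen’s d). The text does not report the effect size used, so the sketch below only illustrates how such a calculation works; the function name is hypothetical.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.90):
    """Per-group n for a two-sided, two-independent-sample test of means.

    Uses the normal approximation n = 2 * (z_{1-alpha/2} + z_{power})**2 / d**2,
    where d (effect_size) is Cohen's d and power = 1 - beta.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_beta) / effect_size) ** 2)
```

With alpha = 0.05 and power = 0.90, the constant 2(z₀.₉₇₅ + z₀.₉₀)² is about 21.0, so the required n grows with 1/d²: an effect size of 1.0 needs 22 subjects per group, and 0.5 needs 85.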

6.2. Experiment Protocols

We identified bilateral communications in three directions in the trust-triggered CPH framework for HRCs in assembly in manufacturing shown in Figure 4. We therefore designed three experiments, each modulating the bilateral communications in one of these directions [8,16]. Each experiment had at least two experimental protocols (conditions), as follows:

Experiment 1 (modulating communications between the human system and the cyber system): The cyber system computed the human’s trust in the robot and the robot’s trust in the human and displayed the computed trust values on the screen placed in front of the human. The cyber system also generated messages based on the computed trust values and displayed them on the screen to make the human aware of the bidirectional trust status. In this way, the cyber system communicated with the human system. In turn, the human was influenced by the trust-related messages displayed on the screen and could respond by increasing his/her performance (task speed) and reducing faults in the assembly tasks; these changes were measured by the sensor system and communicated to the cyber system to update the trust computation and the messages generated from it [16,21].

We adopted the following three communication protocols in this experiment, which differed in how the cyber system communicated with the human subjects (human system) [22,23]:

Protocol 1: Only the trust values (TH2R and TR2H) were communicated to the human subjects by displaying them on the screen in real time. This protocol served as the reference condition (the performance baseline for the CPH framework at the lowest communication complexity).

Protocol 2: Trust values were displayed on the screen in real time. In addition, the values were announced aloud by the sound system of the CPH framework to make the human subjects aware of the trust status, especially the robot’s trust in the human.

Protocol 3: Trust values were displayed on the screen in real time and announced aloud. In addition, three different warning messages were displayed on the screen and announced aloud to make the human subjects aware of the trust status, especially the robot’s trust in the human (see Figure 3) [22,23].
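The three protocols add communication modes cumulatively, so each protocol’s channels are a superset of the previous one’s. The following sketch encodes that nesting; the channel names and the `render` helper are hypothetical, and a real interface would route the "speaker" entries to a text-to-speech system rather than return them as strings.

```python
# Channels used by each Experiment 1 protocol, as described in the text:
# on-screen trust values, spoken trust values, and warnings (shown and spoken).
PROTOCOL_CHANNELS = {
    1: {"display_values"},
    2: {"display_values", "speak_values"},
    3: {"display_values", "speak_values", "display_warning", "speak_warning"},
}


def render(protocol, h2r, r2h, warning):
    """Return the (output device, content) pairs a hypothetical interface emits."""
    channels = PROTOCOL_CHANNELS[protocol]
    values = f"Human-to-robot trust {h2r:.2f}, robot-to-human trust {r2h:.2f}"
    out = []
    if "display_values" in channels:
        out.append(("screen", values))
    if "speak_values" in channels:
        out.append(("speaker", values))
    if warning and "display_warning" in channels:
        out.append(("screen", warning))
    if warning and "speak_warning" in channels:
        out.append(("speaker", warning))
    return out
```

Representing each protocol as a set of channels makes the cumulative design explicit: Protocol 1 is a proper subset of Protocol 2, which is a proper subset of Protocol 3.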

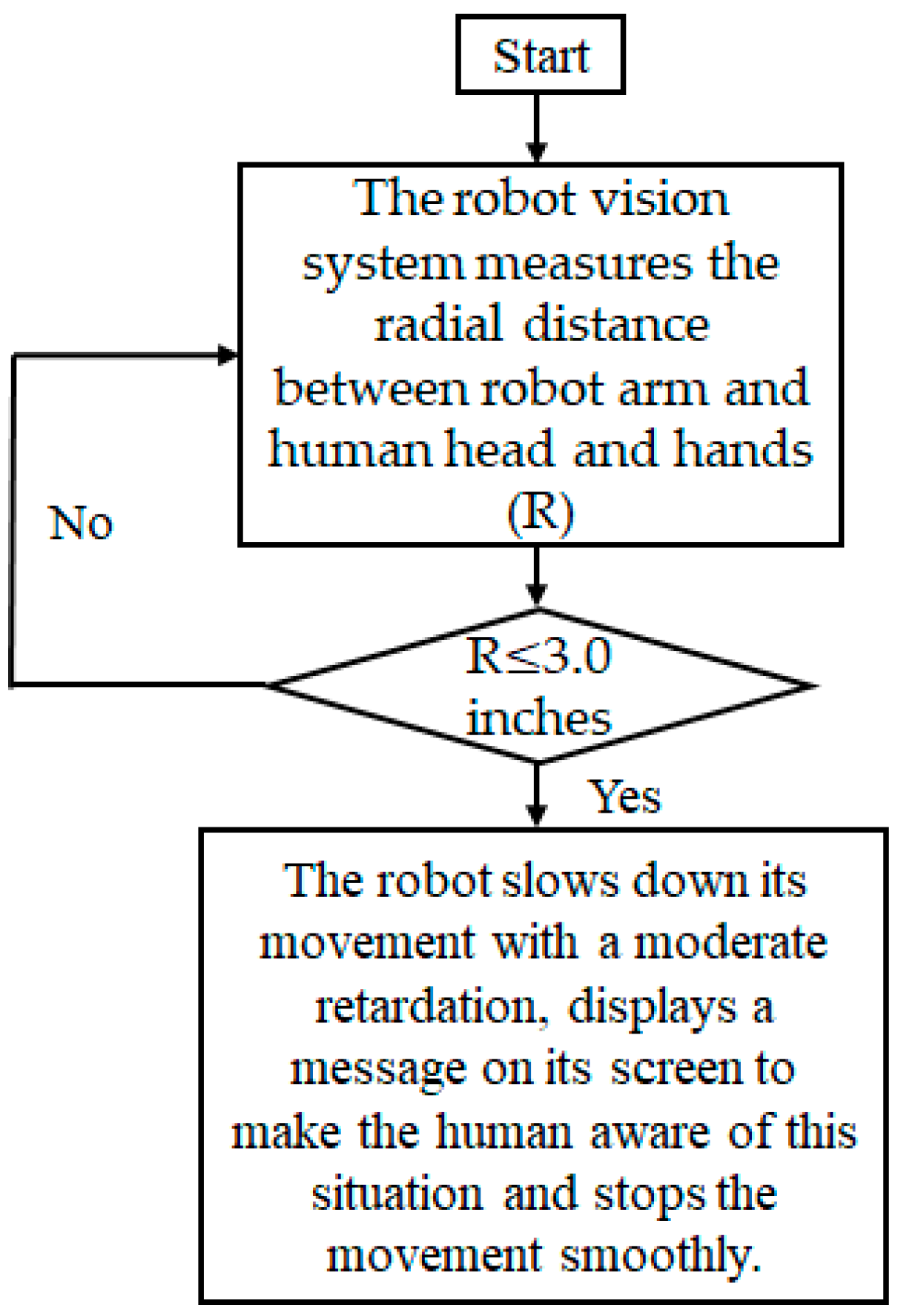

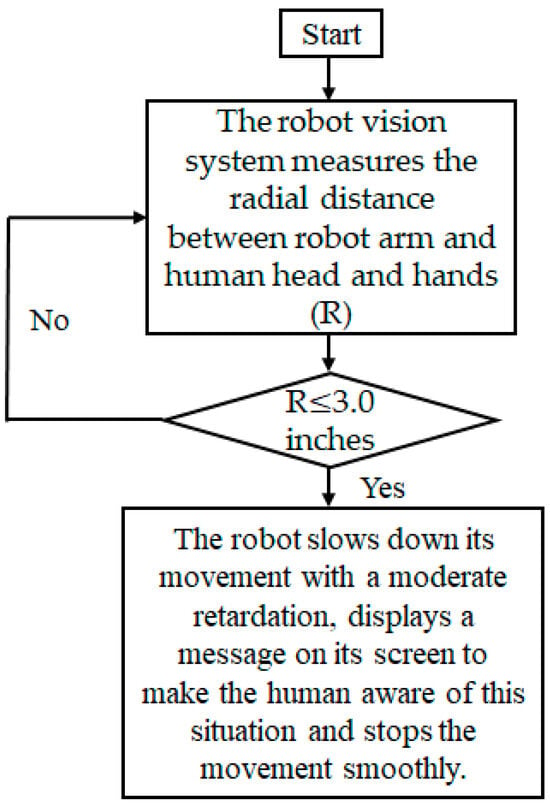

Experiment 2 (modulating communications between the human system and the physical (robot) system): The human observed the manipulation tasks performed by the robot and rated his/her trust in the robot via the keyboard; thus, the human communicated visually with the robot. Similarly, the robot used its vision system to observe the human collaborating with it [16]. Based on a safety algorithm (Figure 5), the robot slowed down or stopped its motion if the human’s head, hands, or other body parts came very close to the robot’s arm [21,51]. The robot also displayed such events as messages on the monitor (screen) attached to its head, thereby communicating potential safety hazards to the human co-worker [21]. We adopted the following two experimental protocols for this situation:

Figure 5.

The safety algorithm that the robot used to communicate potential safety hazards to the human and control its motion to provide safety to the human.

Protocol 1: The robot displayed potential safety concerns (hazardous events) to the human (safety communication).

Protocol 2: The robot did not display potential safety concerns (hazardous events) to the human (no safety communication).
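The safety behavior described for Experiment 2 (slow down or stop when a body part comes very close to the robot’s arm, and optionally show the event on the robot’s screen) can be sketched as a distance-based rule. This is an illustration, not the algorithm in Figure 5: it assumes the vision system supplies the minimum distance between any tracked body part and the arm, and the stop and slow distances and the linear speed ramp are hypothetical choices. The `communicate_hazards` flag stands in for the difference between Protocol 1 and Protocol 2.

```python
from typing import Optional, Tuple

# Hypothetical distance thresholds in metres (the source gives no values)
STOP_DISTANCE_M = 0.15
SLOW_DISTANCE_M = 0.40


def safety_check(min_distance_m: float,
                 communicate_hazards: bool = True) -> Tuple[float, Optional[str]]:
    """Map the closest human body part distance to a speed scale and a message.

    Returns (speed_scale, message): speed_scale in [0, 1] multiplies the
    nominal robot speed; message is None when nothing is shown on the
    robot's screen (no hazard, or hazards are not communicated).
    """
    if min_distance_m <= STOP_DISTANCE_M:
        scale, message = 0.0, "STOP: you are too close to the robot arm"
    elif min_distance_m <= SLOW_DISTANCE_M:
        # Linear ramp from 0 at the stop distance to 1 at the slow distance
        scale = (min_distance_m - STOP_DISTANCE_M) / (SLOW_DISTANCE_M - STOP_DISTANCE_M)
        message = "SLOW: you are approaching the robot arm"
    else:
        scale, message = 1.0, None
    return scale, (message if communicate_hazards else None)


if __name__ == "__main__":
    for d in (0.8, 0.3, 0.1):
        print(d, safety_check(d), safety_check(d, communicate_hazards=False))
```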

Experiment 3 (modulating communications between the physical (robot) system and the cyber system): The cyber system implemented control algorithms and sent control signals to the robot to control its motions and behaviors. In the other direction, the physical system used its sensor system to measure human performance (using the IMU) and human faults (using the vision system of the robot) and sent the measured data to the cyber system for trust computation and for adjusting the control signals based on the computed trust values [21]. The signals were exchanged between the physical system and the cyber system via ROS (Robot Operating System) [16,52]. We modulated the QoS (Quality of Service) settings of the ROS communications by selecting one of the following two QoS profiles [52,53]:

Best Effort: This profile was used to achieve faster (high-speed) communication. However, the communication was less reliable, i.e., there was no guarantee that every message (piece of information) would be delivered, because lost messages were not resent [52].

Reliable: With this profile, messages (information) were very likely to be delivered properly. However, this came at the cost of communication speed, i.e., the higher reliability of message delivery could slow down communication [53].

We adopted the following hypothesis regarding the impact of communication speed and reliability on human–robot collaboration performance and human–robot interactions [54]:

Hypothesis: The trade-off between communication speed and reliability might impact robot behaviors, human–robot interactions, and human–robot collaborative performance of the assembly task.

To test the hypothesis, we adopted the following two experimental protocols, which modulated the communication between the physical (robot) system and the cyber system:

Protocol 1: The communication between the physical system and the cyber system was fast but less reliable (fast communication).

Protocol 2: The communication between the physical system and the cyber system was slow but more reliable (slow communication).
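The speed/reliability trade-off behind the two protocols can be illustrated with a toy channel model. This is a simulation sketch, not a model of the actual ROS middleware: the loss probability, base latency, and retransmission timeout are hypothetical parameters, and `simulate_channel` is an invented name. Best effort drops lost messages; reliable delivery resends them, so every message arrives but some arrive later.

```python
import random
from statistics import mean
from typing import Tuple


def simulate_channel(n_msgs: int, loss_prob: float, reliable: bool,
                     base_latency_ms: float = 2.0, retry_timeout_ms: float = 10.0,
                     seed: int = 0) -> Tuple[int, float]:
    """Toy model of one publisher-to-subscriber link (all parameters hypothetical).

    Best effort: a lost message is never delivered (no retransmission).
    Reliable: a lost message is resent after retry_timeout_ms until it arrives.
    Returns (number of delivered messages, mean latency of delivered messages in ms).
    """
    if not 0.0 <= loss_prob < 1.0:
        raise ValueError("loss_prob must be in [0, 1)")
    rng = random.Random(seed)
    latencies = []
    for _ in range(n_msgs):
        latency = base_latency_ms
        lost = rng.random() < loss_prob
        if lost and not reliable:
            continue  # best effort: the message is simply dropped
        while lost:   # reliable: wait for the timeout, then retransmit
            latency += retry_timeout_ms
            lost = rng.random() < loss_prob
        latencies.append(latency)
    return len(latencies), (mean(latencies) if latencies else float("nan"))


if __name__ == "__main__":
    for reliable in (False, True):
        delivered, latency = simulate_channel(1000, 0.1, reliable)
        label = "Reliable" if reliable else "Best Effort"
        print(f"{label}: {delivered}/1000 delivered, mean latency {latency:.1f} ms")
```

If the system used ROS 2, the two profiles would correspond to an `rclpy.qos.QoSProfile` created with `reliability=ReliabilityPolicy.BEST_EFFORT` or `reliability=ReliabilityPolicy.RELIABLE` (plus a history depth); the source does not state the exact settings.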

6.3. Evaluation Scheme

We used both subjective and objective criteria to assess the performance of the CPH system under the above experimental protocols. The objective criteria were the assembly efficiency (measured as the mean assembly completion time in seconds, where a task counted as completed only if it met the task requirements, i.e., the required quality) and the assembly success rate in percent (%), which also indicated task quality. We used two types of subjective evaluations: pHRI (physical human–robot interaction) [55] and cHRI (cognitive human–robot interaction) [56]. We expressed pHRIs in two ways: human–robot engagement [57] and human–robot team fluency [58]. We applied the work sampling method to measure engagement, which was expressed as the percentage of time the human was physically engaged with the robot in a collaborative assembly task cycle or trial [21]. We measured the idle times of the human co-worker and of the robot separately using two stopwatches and expressed team fluency as the mean percentage of time the human and the robot were not idle [58].
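The two pHRI measures reduce to simple percentages. The sketch below assumes that work sampling yields a list of yes/no observations of whether the human was engaged with the robot, and that the stopwatch readings give each agent’s total idle time within one task cycle; the function names are hypothetical.

```python
from typing import Sequence


def engagement_percent(samples: Sequence[bool]) -> float:
    """Work sampling: % of random observations in which the human was engaged with the robot."""
    if not samples:
        raise ValueError("at least one observation is required")
    return 100.0 * sum(1 for engaged in samples if engaged) / len(samples)


def team_fluency_percent(cycle_time_s: float, human_idle_s: float,
                         robot_idle_s: float) -> float:
    """Mean % of the task cycle in which the human and the robot were not idle."""
    if cycle_time_s <= 0:
        raise ValueError("cycle time must be positive")
    for idle in (human_idle_s, robot_idle_s):
        if not 0 <= idle <= cycle_time_s:
            raise ValueError("idle times must lie between 0 and the cycle time")
    human_active = 100.0 * (cycle_time_s - human_idle_s) / cycle_time_s
    robot_active = 100.0 * (cycle_time_s - robot_idle_s) / cycle_time_s
    return (human_active + robot_active) / 2.0


if __name__ == "__main__":
    # Hypothetical observations and stopwatch readings for one trial
    print(engagement_percent([True, True, False, True, True]))
    print(team_fluency_percent(120.0, 18.0, 30.0))
```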

We expressed cHRIs in three ways: cognitive workload, situation awareness, and human trust. We used the NASA TLX to assess the cognitive workload of the human co-worker [59] and a 7-point Likert scale (1 for the least and 7 for the most favorable response) to assess the human subject’s trust in the robot and in the CPH framework for collaborating with the robot [60]. We also used the 7-point Likert scale to assess the human subjects’ situation awareness while collaborating with the robot under the CPH system [16].
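One common way to obtain an overall NASA TLX score is the unweighted (“raw TLX”) variant, in which the overall workload is the mean of the six subscale ratings; this is consistent with averaging the workload elements, but whether the weighted variant was used is not stated, so treat this as an assumption. The Likert helper assumes responses coded 1 to 7. Both function names are hypothetical.

```python
from typing import Dict, Sequence

# The six NASA TLX subscales, each rated on a 0-100 scale
TLX_SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings: Dict[str, float]) -> float:
    """Unweighted (raw) TLX: the mean of the six subscale ratings."""
    missing = [s for s in TLX_SUBSCALES if s not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    return sum(ratings[s] for s in TLX_SUBSCALES) / len(TLX_SUBSCALES)


def likert_mean(responses: Sequence[int], points: int = 7) -> float:
    """Mean of Likert responses coded 1 (least favorable) to `points` (most favorable)."""
    if not responses or any(not 1 <= r <= points for r in responses):
        raise ValueError(f"responses must be integers from 1 to {points}")
    return sum(responses) / len(responses)


if __name__ == "__main__":
    # Hypothetical ratings from one subject after one protocol
    print(raw_tlx({"mental": 55, "physical": 25, "temporal": 45,
                   "performance": 30, "effort": 50, "frustration": 15}))
    print(likert_mean([6, 5, 7, 6]))
```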

We used the above evaluation criteria because we considered that they adequately capture the KPIs (key performance indicators) of the HRC system [21]. In addition, we used the 7-point Likert scale to assess the human co-worker’s perception of a potential long-term work relationship with the robot when collaborating through the CPH framework, in terms of naturalness [61], ease of work, symbiosis [62], and empathy [63], as these criteria appear to indicate and foster a long-term work relationship between the human and the robot in the HRC task [64]. The human is likely to be interested in a long-term work relationship with the robot if he/she feels natural and at ease while working with it [61]. Symbiosis is the human’s perception of bilateral dependencies between the human and the robot; a higher level of symbiosis can enhance the prospects of a long-term work relationship, and vice versa [62]. Similarly, the human’s empathy for the robot when the robot experiences difficulties while working with the human may foster a long-term relationship between them, and vice versa [63].

6.4. Experimental Procedures

Based on practice trials with the subjects and the experimental system shown in Figure 2c, we first estimated the parameter values (the constant coefficients) needed to compute trust in real time with the trust models derived in Equations (1) and (2). To do so, the agent (robot and human) performance and faults observed in the practice trials were used to estimate the constant coefficients of the computational trust models following the autoregressive moving average vector (ARMAV) method [21]. The estimated values of the constant coefficients are presented in Table 2.

Table 2.

Values of the constant coefficients necessary to compute trust in real time.
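Equations (1) and (2) are not reproduced in this section, so the following is only a sketch of how constant coefficients might be estimated from practice-trial logs. It assumes a hypothetical first-order form T(k) = a·T(k−1) + b·P(k) − c·F(k), with P and F normalized performance and faults, and it uses ordinary least squares (`numpy.linalg.lstsq`) as a simpler stand-in for the ARMAV procedure cited above. The recorded trust sequence could, for example, come from the keyboard trust ratings collected in the trials.

```python
import numpy as np


def simulate_trust(a, b, c, perf, faults, t0=0.5):
    """Generate a trust sequence from the assumed recursion T(k) = a*T(k-1) + b*P(k) - c*F(k)."""
    trust = [t0]
    for k in range(1, len(perf)):
        trust.append(a * trust[-1] + b * perf[k] - c * faults[k])
    return np.array(trust)


def fit_trust_coefficients(trust, perf, faults):
    """Ordinary least-squares estimate of (a, b, c) in the assumed recursion."""
    trust = np.asarray(trust, dtype=float)
    perf = np.asarray(perf, dtype=float)
    faults = np.asarray(faults, dtype=float)
    # One row per time step k = 1..n-1: [T(k-1), P(k), -F(k)]
    X = np.column_stack([trust[:-1], perf[1:], -faults[1:]])
    y = trust[1:]
    coeffs, *_ = np.linalg.lstsq(X, y, rcond=None)
    return tuple(float(v) for v in coeffs)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    perf = rng.uniform(0.0, 1.0, 50)    # normalized performance per time step
    faults = rng.uniform(0.0, 1.0, 50)  # normalized faults per time step
    noisy = simulate_trust(0.6, 0.3, 0.2, perf, faults) + rng.normal(0.0, 0.01, 50)
    print(fit_trust_coefficients(noisy, perf, faults))  # compare with (0.6, 0.3, 0.2)
```

A full ARMAV fit would also model correlated residuals (the moving average part) and the coupling between the two trust directions; the least-squares version only shows the regression structure.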

Then, for the formal experiments, in each direction of bilateral communication, the experimental conditions (protocols) were the independent variables and the evaluation criteria were the dependent variables. We evaluated the CPH system under each protocol separately. Under each protocol, each human subject performed three consecutive trials (assembling the three products illustrated in Figure 2 one after another). We evaluated the assembly efficiency, success rate, and pHRIs following the evaluation scheme [21,55]. To evaluate the cHRIs, the subject completed the NASA TLX to assess cognitive workload and a separate Likert scale questionnaire to assess his/her trust in the robot and situation awareness of the HRC system [59,60].

All the subjects evaluated the CPH system following the above procedures separately for each experimental protocol in each communication direction. At the end of the experiments, each subject also completed the Likert scale questionnaires assessing his/her perception of a potential long-term work relationship with the robot under the CPH system [64]. We briefly trained each subject on how to conduct the experiment under each protocol, which helped reduce the learning effects that the subject might otherwise gain during the experiments [21]. To further reduce learning effects, the order of the experimental protocols and of the subjects was randomized.
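The randomization of subjects and protocols could be implemented as below. This is a hypothetical sketch: it shuffles the subject order once and draws an independent random protocol order for each subject. A balanced Latin square would be an alternative if strict counterbalancing of order effects were required; the source does not say which scheme was used.

```python
import random
from typing import Dict, List, Optional, Sequence, Tuple


def randomized_schedule(subjects: Sequence[str], protocols: Sequence[str],
                        seed: Optional[int] = None) -> Tuple[List[str], Dict[str, List[str]]]:
    """Randomize the order of subjects and, for each subject, the order of protocols."""
    rng = random.Random(seed)
    subject_order = list(subjects)
    rng.shuffle(subject_order)
    schedule = {}
    for subject in subject_order:
        order = list(protocols)
        rng.shuffle(order)  # independent random protocol order per subject
        schedule[subject] = order
    return subject_order, schedule


if __name__ == "__main__":
    order, schedule = randomized_schedule(["S1", "S2", "S3", "S4"],
                                          ["Protocol 1", "Protocol 2", "Protocol 3"], seed=42)
    for subject in order:
        print(subject, schedule[subject])
```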

7. Experimental Results and Analyses

7.1. Results of Experiment 1 (Modulating Communications Between the Human System and the Cyber System)

Table 3 shows the mean assembly completion times and mean assembly success rates for the different experimental conditions (protocols), where the values in parentheses are standard deviations. Compared with the reference condition (Protocol 1, in which only the trust values were displayed), the mean assembly completion time decreased (efficiency increased) and the assembly success rate increased when the computed trust values were both displayed and announced aloud by the sound system of the CPH system (Protocol 2) [21]. Efficiency and success rate increased further when warning messages were added to the displayed and announced trust values (Protocol 3). A possible explanation is that the additional auditory and visual cues made the humans’ performance and quality status more transparent to them, which may have motivated them to work more efficiently and carefully toward successful task completion [16,21].

Table 3.

Mean assembly completion times and mean assembly success rates for different experimental protocols.

We conducted analyses of variance (ANOVAs) for the mean assembly completion times and the assembly success rates separately. The ANOVAs showed that the variations in the results between subjects were statistically nonsignificant ( for each case), which suggests that the evaluation results can be taken as general findings even though a limited number of subjects participated in the experiments. In contrast, the variations in the results between experimental protocols were statistically significant ( for each case), which shows the impact of the experimental protocols (communication methods of varying complexity) on task performance in the CPH framework [65,66,67,68].
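The ANOVA design is not specified beyond testing “between subjects” and “between protocols”. One plausible reading is a two-way additive ANOVA without replication, with subjects as blocks and protocols as treatments, applied to each subject’s mean score per protocol. The sketch below computes the F statistics for that design; `block_anova` is a hypothetical name and the data are invented for illustration.

```python
import numpy as np


def block_anova(y):
    """Two-way additive ANOVA without replication.

    Rows are subjects (blocks) and columns are protocols (treatments);
    each cell holds one subject's mean score under one protocol.
    """
    y = np.asarray(y, dtype=float)
    s, p = y.shape
    grand = y.mean()
    ss_subjects = p * ((y.mean(axis=1) - grand) ** 2).sum()
    ss_protocols = s * ((y.mean(axis=0) - grand) ** 2).sum()
    ss_total = ((y - grand) ** 2).sum()
    ss_error = ss_total - ss_subjects - ss_protocols
    df_subjects, df_protocols, df_error = s - 1, p - 1, (s - 1) * (p - 1)
    ms_error = ss_error / df_error
    if ms_error <= 0:
        raise ValueError("zero residual variance: F is undefined")
    return {
        "F_subjects": float((ss_subjects / df_subjects) / ms_error),
        "F_protocols": float((ss_protocols / df_protocols) / ms_error),
        "df": (df_subjects, df_protocols, df_error),
    }


if __name__ == "__main__":
    # Hypothetical mean completion times (s): 4 subjects x 3 protocols
    times = [[100, 90, 80], [102, 91, 79], [99, 92, 81], [101, 89, 80]]
    print(block_anova(times))
```

If SciPy is available, `scipy.stats.f.sf(F, df_num, df_den)` converts each F statistic into a p-value, using the subject or protocol degrees of freedom as `df_num` and the error degrees of freedom as `df_den`.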

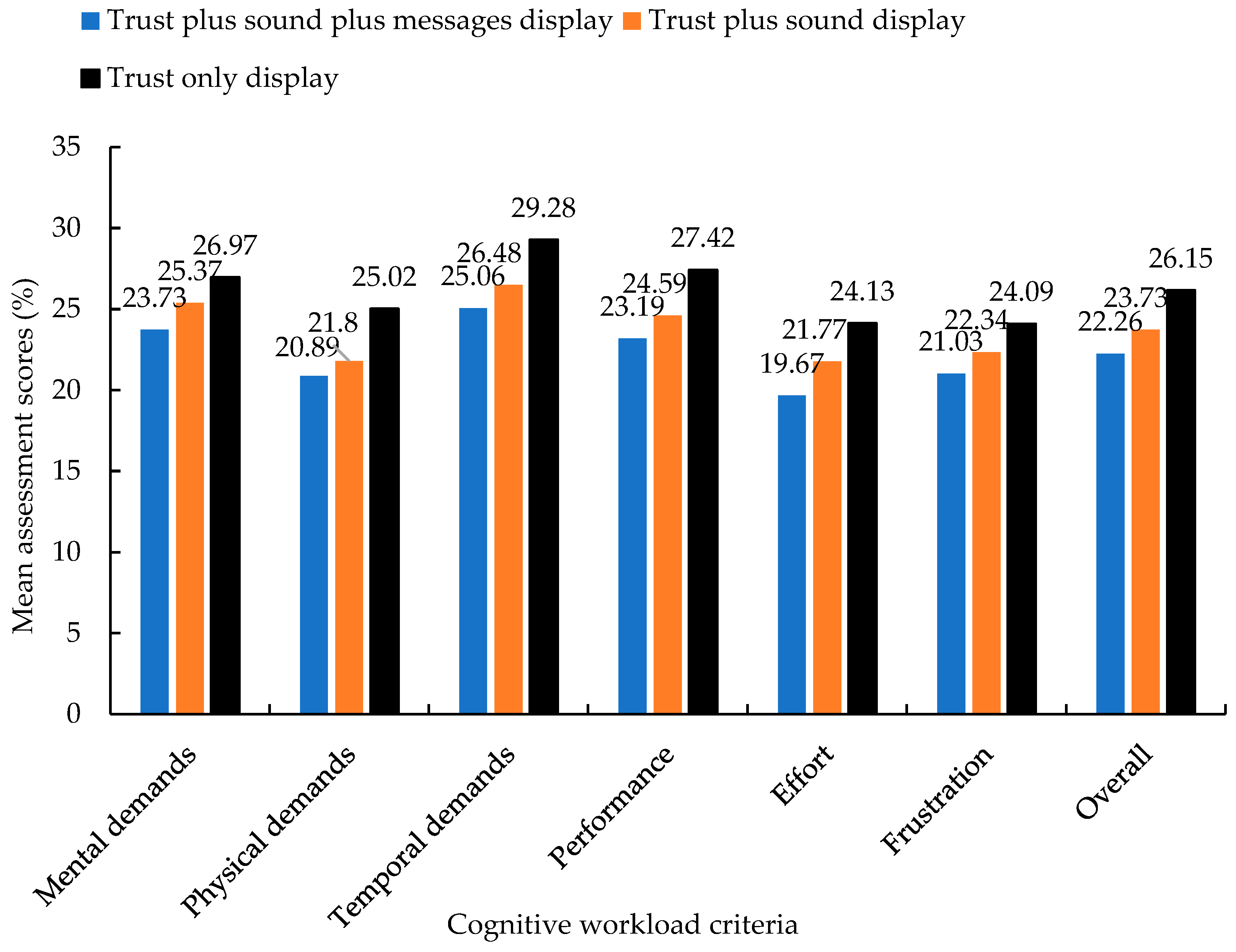

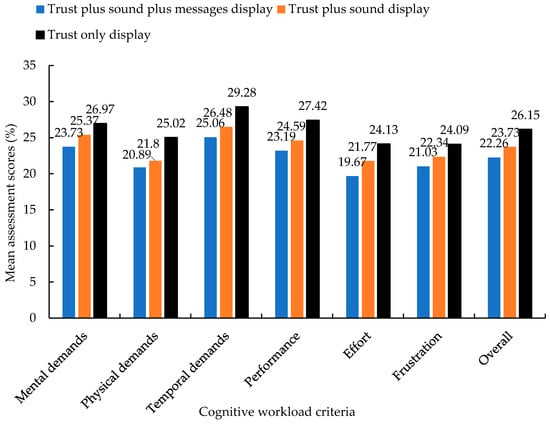

Similar trends were obtained for the pHRI evaluation results in Table 4 and the cHRI evaluation results in Table 5, where the values in parentheses are standard deviations. Table 5 shows the mean overall (total) cognitive workloads for the different experimental protocols, and Figure 6 details the mean values of the individual elements (criteria) of cognitive workload across the experimental protocols (communication strategies). A possible reason for the similar trends is that the additional auditory and visual cues helped the humans become more engaged in the collaboration with the robot through the CPH framework [22,57]. The greater transparency provided by these cues made the collaboration more fluent [58]; as a result, the pHRIs improved. The additional cues created a transparent and fluent environment that helped the humans perform the collaborative task with lower cognitive demands, which reduced their cognitive workloads [59]. The greater transparency also made the humans more aware of the work environment, which enhanced situation awareness [21]. The overall improvement in task performance and interaction enhanced the human’s trust in his/her collaborator (the robot) and in the collaboration environment (the CPH system) [50].

Table 4.

Physical human–robot interaction evaluation results for different experimental protocols.

Table 5.

Cognitive human–robot interaction evaluation results for different experimental protocols.

Figure 6.

Mean assessment scores of different elements of cognitive workload among different experimental protocols (different communication strategies).

7.2. Results of Experiment 2 (Modulating Communications Between the Human System and the Physical System)

Table 6 shows the mean assembly completion times and mean assembly success rates for the two experimental conditions (safety communication versus no safety communication) in which the communications between the human system and the physical system were modulated [51]. The values in parentheses are standard deviations. The mean assembly completion time was lower (efficiency was higher) when the safety algorithm was not in effect and the robot (physical system) did not communicate safety hazards to the human co-worker [68]. This might be because, in the no-safety-communication condition, the robotic system did not need to check for safety hazards or inform the human of safety-related warnings, and the human tried to keep pace with the robot during the assembly task. As a result, the assembly completion time decreased and the (time) efficiency of the assembly task increased. However, the assembly success rate was lower in the no-safety-communication condition than when safety communication was in effect. This might be because the human was afraid of working with the robot when no safety information was conveyed. The human kept pace with the robot’s movements, which decreased the task completion time, but the fear of being hit by the robot while performing the assembly task may have reduced the assembly success rate. The opposite occurred when safety communication was in effect, which significantly increased the assembly success rate [68].

Table 6.

Mean assembly completion times and mean assembly success rates for different experimental protocols.

Similar trends were obtained for the pHRI evaluation results in Table 7 and the cHRI evaluation results in Table 8, where the values in parentheses are standard deviations. In Table 8, the mean overall (total) cognitive workload for each of the two experimental protocols (communication strategies) was determined by averaging the values of the individual elements (criteria) of cognitive workload, following the same method as in Figure 6. A possible reason for the similar trends is that the additional visual cues provided by safety communication helped the humans become more engaged in their collaboration with the robot through the CPH framework [22,57]. The greater transparency provided by these visual safety cues made the collaboration more fluent [58]; as a result, the pHRIs improved. The safety cues created a transparent, safe, and fluent environment that helped the humans perform the collaborative task with lower cognitive demands, which reduced their cognitive workloads [59]. The greater transparency also made the humans more aware of the work environment, which enhanced situation awareness [21]. The overall improvement in task performance and interaction enhanced the human’s trust in his/her collaborator (the robot) and in the collaboration environment (the CPH system) under the safety communication condition [50].

Table 7.

Physical human–robot interaction evaluation results for different experimental protocols.

Table 8.

Cognitive human–robot interaction evaluation results for different experimental protocols.

We conducted separate analyses of variance (ANOVAs) for the mean assembly completion times, assembly success rates, engagement, time fluency, cognitive workload, trust, and situation awareness. The ANOVAs showed that the variations in the results between subjects were statistically nonsignificant ( for each case), which suggests that the evaluation results can be taken as general findings even though a limited number of subjects took part in the experiments. In contrast, the variations in the results between the experimental protocols (safety communication versus no safety communication) were statistically significant ( for each case), which shows the impact of the experimental protocols (safety communication scenarios) on task performance in the CPH framework [65,66,67,68].

7.3. Results of Experiment 3 (Modulating Communications Between the Physical System and the Cyber System)

Table 9 shows the mean assembly completion times and mean assembly success rates for the two experimental conditions (slow communications versus fast communications) related to modulating communications between the cyber system and the physical system [52,53]. In the table, the digits in the parentheses indicate the standard deviations. The results show that the mean assembly completion time is higher (efficiency is lower) and the assembly success rate is higher for the slow communication condition compared to the fast communication condition [54]. This might happen because the slow communication slows down the trust computation in the cyber system, which impacts the trust-based control of robot behaviors or motions. The human observed the slow behaviors of the robot, and as a result, slowed down his/her own movements for the collaborative task keeping pace with the robot. All these phenomena might increase the assembly completion time for the slow communication condition [54]. However, slow communication helped the human perform the assembly task slowly but more attentively. The slow motions of the robot also enabled the robot to pick, move, and place the parts more accurately. All these phenomena might enhance the assembly success rate [54].

Table 9.

Mean assembly completion times and mean assembly success rates for different experimental protocols.

Table 10 shows better pHRIs (human–robot engagement and human–robot team fluency), and Table 11 shows better cHRIs (the human’s cognitive workload, trust in the robot, and situation awareness while performing the assembly task) for the slow communication condition than for the fast communication condition. The values in parentheses are standard deviations. In Table 11, the mean overall (total) cognitive workload for each of the two experimental protocols (communication strategies) was determined by averaging the values of the individual elements (criteria) of cognitive workload, following the same method as in Figure 6. A possible reason for the better results under slow communication is that it gave the humans an opportunity to engage with the robot more actively and intensely. Higher engagement reduced the time gaps between the human and the robot and enhanced their team fluency. A comparatively more stable, well-engaged, and fluent work environment under slow communication helped the humans perform the collaborative task with less uncertainty, time pressure, and anxiety, which reduced their cognitive workloads [59]. Slow communication also gave the humans a better opportunity to become aware of the work environment, which enhanced situation awareness [21]. The overall improvement in task performance and interaction enhanced the human’s trust in his/her collaborator (the robot) and in the collaboration environment (the CPH system) under the slow communication condition [50].

Table 10.

Physical human–robot interaction evaluation results for different experimental protocols.

Table 11.

Cognitive human–robot interaction evaluation results for different experimental protocols.

We conducted separate analyses of variance (ANOVAs) for the mean assembly completion times, assembly success rates, engagement, time fluency, cognitive workload, trust, and situation awareness presented in Table 9, Table 10 and Table 11. The ANOVAs showed that the variations in all of these measures between subjects were statistically nonsignificant ( for each case), which suggests that the evaluation results can be taken as general findings even though we employed a limited number of subjects. In contrast, the variations in all of these measures between the experimental protocols (slow communication versus fast communication) were statistically significant ( for each case), which shows the impact of the experimental protocols (communication speeds) on task performance and interaction in the CPH framework [65,66,67,68].

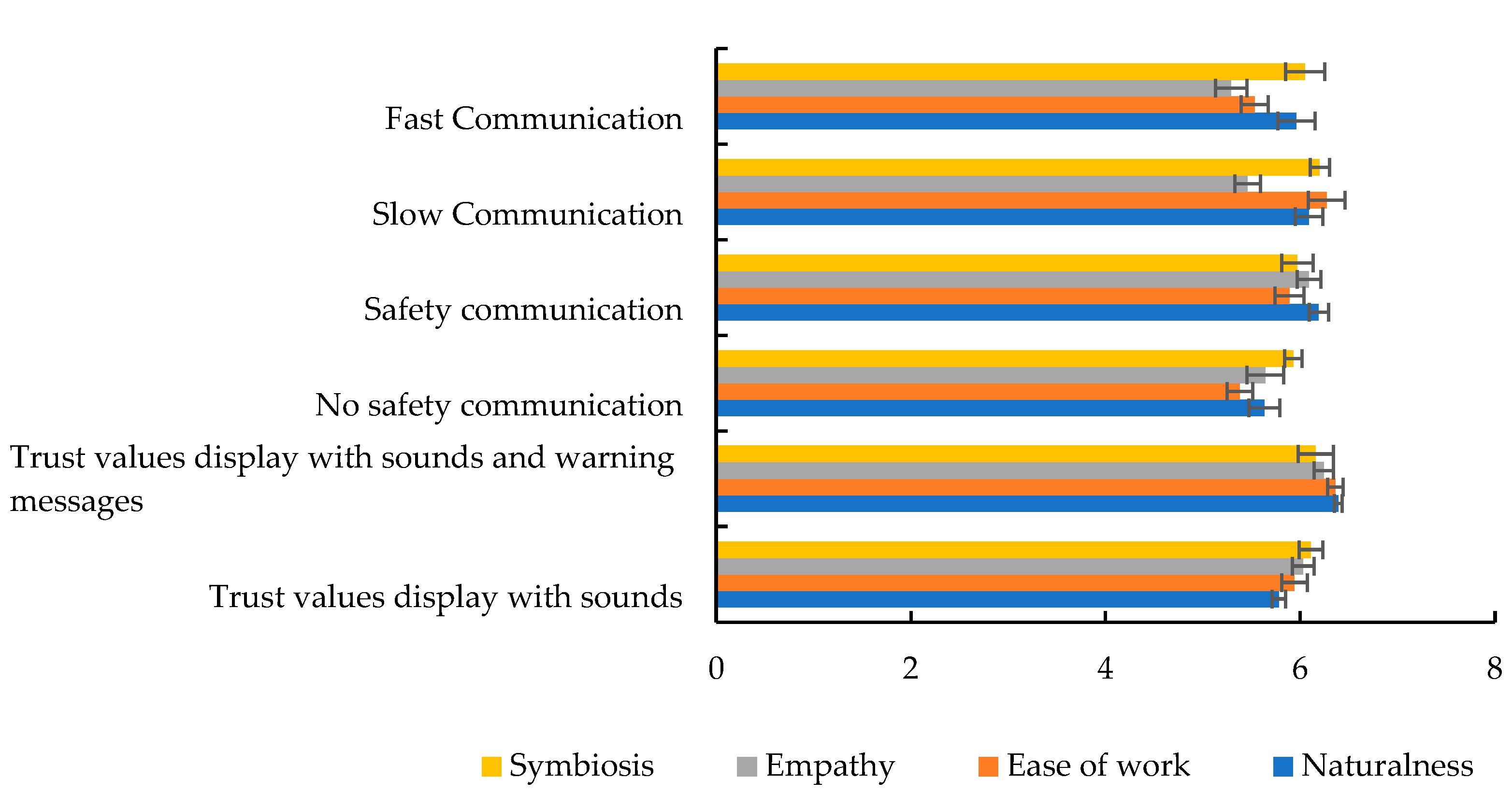

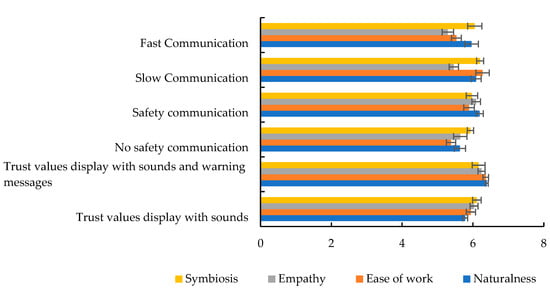

The mean assessment scores in Figure 7 compare the human subjects’ perceptions of a potential long-term work relationship with the robot when collaborating through the CPH framework, after working with the robot on the assembly task under the different communication protocols examined in Experiments 1, 2, and 3 [64]. The ANOVAs showed that the variations in the perceived long-term work relationship scores between human subjects were statistically nonsignificant (), which suggests that the assessment results can be taken as general findings even though we employed a limited number of human subjects. In contrast, the variations in these scores between communication protocols (e.g., slow communication, fast communication, and so forth) were statistically significant ( for each case), which shows the impact of the communication protocols on the assessment results.

Figure 7.

Perceived potential long-term work relationships (assessment scores) between human subjects and the robot for different communication protocols for the HRC task under the CPH framework.

The results suggest that there is a prospect for a long-term work relationship between the human and the robot under the CPH framework, because the perceived levels of naturalness [61], symbiosis [62], ease of work, and empathy [63] are high. The results also show that this prospect increases when the CPH system is implemented with more transparent communication (e.g., communicating safety hazards and displaying trust values and warning messages) and with slower but more reliable communication between the cyber and physical systems [21,22,64]. The results thus indicate that better and more purposeful communication among the components of an HRC system configured as a CPH system not only improves task performance and human–robot interactions, but also creates a prospect for a long-term work relationship between the human and the robot, which is essential for the long-term success of HRC tasks [22,23,64].

8. Discussion

8.1. Interpretation of Trust and Its Significance

Equations (1) and (2) were derived to compute human trust in robots and robot trust in humans in real time, respectively. As the equations show, the computed trust values are simply weighted sums of the performance and mistakes (faults) of the robot and of the human. This is because the performance and faults of an actor are strongly correlated with a collaborator’s or co-worker’s trust in that actor [49,50]. Usually, good performance builds an interacting co-worker’s trust in the performer, whereas the performer’s mistakes reduce it. Actual trust is the weighted resultant of trust and lack of trust in real time [49,50]. Nevertheless, the computed trust value does not represent actual trust; rather, it reveals an artificial trust, which may reflect the mental state of the human and of the robot regarding their counterparts.
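Because Equations (1) and (2) are not reproduced here, the following sketch only illustrates the idea of real-time trust as a weighted combination of performance and faults. It assumes a hypothetical recursion T(k) = a·T(k−1) + b·P(k) − c·F(k) with P and F normalized to [0, 1] and the result clipped to [0, 1]; the class name and coefficient values are illustrative. Two instances represent the two directions: the human’s trust in the robot is driven by the robot’s performance and faults, and vice versa.

```python
from dataclasses import dataclass


@dataclass
class TrustModel:
    """Hypothetical recursion T(k) = a*T(k-1) + b*P(k) - c*F(k), clipped to [0, 1]."""
    a: float            # weight on the previous trust value
    b: float            # weight on the observed performance P(k) in [0, 1]
    c: float            # weight on the observed faults F(k) in [0, 1]
    trust: float = 0.5  # initial trust T(0)

    def update(self, performance: float, faults: float) -> float:
        t = self.a * self.trust + self.b * performance - self.c * faults
        self.trust = min(1.0, max(0.0, t))  # keep trust on a 0-1 scale
        return self.trust


if __name__ == "__main__":
    # Each direction is driven by the other agent's behavior
    human_in_robot = TrustModel(a=0.5, b=0.5, c=0.5)  # uses robot performance/faults
    robot_in_human = TrustModel(a=0.5, b=0.5, c=0.5)  # uses human performance/faults
    for robot_obs, human_obs in [((0.9, 0.0), (0.7, 0.1)), ((0.8, 0.2), (0.6, 0.3))]:
        print(human_in_robot.update(*robot_obs), robot_in_human.update(*human_obs))
```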

These computed artificial trust values were used here as a basis of communication among the elements of the CPH system. The proposed trust computation models are deterministic models of human–robot bidirectional trust. However, they could be made more realistic by converting them into probabilistic or stochastic models. One approach to such modeling is to use time-varying or probabilistic values of the constant coefficients in the models [21]. The individual trust models in Equations (1) and (2) were used together, and the output of one model could influence that of the other. The combined effect of the two trust models was therefore considered here as the “bidirectional trust” between the human and the robot [19].
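The suggestion to use time-varying or probabilistic coefficients can be illustrated with a Monte Carlo sketch. It again assumes the hypothetical recursion T(k) = a·T(k−1) + b·P(k) − c·F(k), clipped to [0, 1], and draws a, b, and c from normal distributions at every time step; the means and spread `sd` are invented parameters. The output is a distribution of trust rather than a single value, summarized here by its mean and standard deviation.

```python
import random
from statistics import mean, stdev
from typing import Sequence, Tuple


def simulate_stochastic_trust(perf: Sequence[float], faults: Sequence[float],
                              a_mu: float, b_mu: float, c_mu: float, sd: float,
                              n_runs: int = 1000, t0: float = 0.5,
                              seed: int = 0) -> Tuple[float, float]:
    """Monte Carlo trust with coefficients drawn from N(mu, sd) at every time step.

    Uses the hypothetical recursion T(k) = a*T(k-1) + b*P(k) - c*F(k),
    clipped to [0, 1]; returns the mean and standard deviation of the final trust.
    """
    if n_runs < 2:
        raise ValueError("n_runs must be at least 2 to estimate a spread")
    rng = random.Random(seed)
    finals = []
    for _ in range(n_runs):
        t = t0
        for p, f in zip(perf, faults):
            a = rng.gauss(a_mu, sd)  # time-varying, random coefficients
            b = rng.gauss(b_mu, sd)
            c = rng.gauss(c_mu, sd)
            t = min(1.0, max(0.0, a * t + b * p - c * f))
        finals.append(t)
    return mean(finals), stdev(finals)


if __name__ == "__main__":
    m, s = simulate_stochastic_trust([0.6] * 10, [0.2] * 10, 0.5, 0.5, 0.5, sd=0.05)
    print(f"final trust: {m:.3f} +/- {s:.3f}")
```

Setting `sd=0` recovers the deterministic model, which makes the stochastic version easy to compare against the original.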

8.2. Comprehensive Evaluations

In the evaluation scheme, we used both objective assessment criteria (e.g., assembly efficiency, success rate, team fluency) and subjective assessment criteria (e.g., trust, cognitive workload, engagement, situation awareness, naturalness, ease of work, empathy, symbiosis) to evaluate the proposed CPH framework [65,66,67,68]. We believe that combining subjective and objective criteria made the evaluation scheme comprehensive and allowed us to cross-check the results [21,65,66,67,68]. We further believe that the proposed criteria, which incorporate the KPIs, are sufficient for a comprehensive evaluation. However, more evaluation criteria could be added to the evaluation scheme, and the evaluations could be made more objective using innovative sensor applications [65,66,67,68].

8.3. Research Questions and Hypothesis

Section 5 answers research question 1 (RQ 1) by describing how we configured an HRC system in the form of a CPH system via human–robot bidirectional trust for human–robot collaborative assembly in manufacturing [16,21]. The experimental protocols in Section 6 and the corresponding results in Section 7, especially the ANOVA results, show that the CPH configuration of an HRC system affects HRC performance and human–robot interactions modularly, which answers research question 2 (RQ 2) [16]. The experimental results, especially those of Experiment 3 in Section 7, support the hypothesis we adopted, namely that the trade-off between communication speed and reliability impacts the robot’s behavior, human–robot interactions, and human–robot collaborative performance of the assembly task [54]. Therefore, the experiments addressed all the research questions and the hypothesis.

8.4. The Modularity Trait

We adjusted only one module (communication strategies) between different elements of the HRC system. It was also possible to adjust other modules, such as computation (computing) and control, separately. The simultaneous adjustment of all three modules was also possible. Interrelationships and interdependencies among the modules might also exist, which might influence the CPH system’s performance. The CPH configuration allowed us to investigate how the adjustment of one module could influence the performance of other modules. Ideally, the adjustment of one module of a CPH system should be independent of that of other modules. These features and advantages of the CPH system made it different from conventional element-level approaches or non-modular (integrated) approaches of adjustments of communication strategies in HRC systems [22,23,24,25,26,27,28,29,30]. In [22,23,24,25,26,27,28,29,30], investigations on communications were at the element level, which did not consider the possibility of adjusting the computation, communication, and control of the HRC systems separately. We considered the adjustment of communication between all possible elements of the system (cyber, physical, and human elements) separately. However, the conventional element-level approaches or non-modular (integrated) approaches usually do not address the adjustment of communications between all elements separately [22,23,24,25,26,27,28,29,30]. These differences made the CPH framework superior to the non-modular (integrated) approaches of HRC system design and evaluation.
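The modularity argument can be pictured as composing a CPH system from three interchangeable function modules, so that one module is replaced while the other two are reused unchanged. The sketch below is purely illustrative: the class `CPHModules`, the weights in `compute`, and the speed mapping in `control` are hypothetical and do not reproduce the implemented framework.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class CPHModules:
    """Hypothetical split of a CPH system into its three function modules."""
    compute: Callable[[Dict[str, float]], float]  # e.g., trust computation
    communicate: Callable[[float], str]           # e.g., how trust is conveyed
    control: Callable[[float], float]             # e.g., trust-based robot speed scale


def run_step(m: CPHModules, sensed: Dict[str, float]) -> Tuple[float, str, float]:
    trust = m.compute(sensed)
    return trust, m.communicate(trust), m.control(trust)


baseline = CPHModules(
    compute=lambda s: 0.6 * s["performance"] - 0.4 * s["faults"],  # illustrative weights
    communicate=lambda t: f"trust={t:.2f}",
    control=lambda t: 0.2 + 0.8 * t,  # robot speed scale grows with trust
)

# Adjust only the communication module; computation and control are reused unchanged
with_warning = replace(
    baseline,
    communicate=lambda t: f"trust={t:.2f}" + (" LOW" if t < 0.5 else ""),
)

if __name__ == "__main__":
    sensed = {"performance": 0.5, "faults": 0.5}
    print(run_step(baseline, sensed))
    print(run_step(with_warning, sensed))
```

Because the modules only share the trust value, swapping the communication function leaves the computed trust and the control output identical, which is the kind of independent adjustment the CPH configuration aims for.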

8.5. Significance of the Results

The main contribution of the research presented here is that the results demonstrate the efficacy of implementing an HRC system in the form of a CPH system [16,33]. Communication is a functional module of the CPH system, and the results show that this module can be adjusted among the cyber, physical, and human components separately, and that the impacts of such adjustments on human–robot collaborative task performance and human–robot interactional effectiveness can be analyzed and calibrated separately [8,16]. The results thus show that variations in the communication methods and complexity of the CPH framework can be analyzed separately as a single module, which can help design, develop, implement, analyze, and evaluate a CPH system for collaborative assembly tasks modularly, easily, and effectively [8,16]. Bidirectional trust served as the basis of communication and made the communication clear and easy [21]. The results thus demonstrate the efficacy and promise of designing collaborative assembly tasks in the form of a CPH system triggered by trust as a communication cue [8,9,10,69,70].

8.6. Limitations of the Results

The proposed modular CPH system was implemented and evaluated successfully. Nevertheless, the proposed CPH method may have limitations: it can sometimes be difficult to (i) identify and maintain the cyber, physical, and human components and their boundaries; (ii) clearly define the scope and agenda of the three functional units (computation, communication, and control); and (iii) implement, adjust, investigate, and evaluate each functional unit modularly. Mitigating such limitations requires analyzing the system carefully and comparing it effectively with a standard CPH system [8,16].

8.7. Comparison of the Results

We found that the CPH system approach presented here enabled us to define and analyze each module and function of the HRC system more clearly and conveniently. This was not possible with past HRC systems designed following conventional methods, without identifying and defining each component module and function separately [65,66,67,68]. The results obtained here with the CPH system approach are better than those obtained in the past when HRC systems were not configured as CPH systems [65]. A comparative study revealed that, with the CPH system approach, human–robot collaborative performance in terms of task efficiency and success rate (a measure of task quality) increased on average by 11.33% and 10.48%, respectively, compared with the results of our past research, in which the HRC systems were not designed as CPH systems [65,66,67,68]. Table 12 compares the human–robot interactional effectiveness of the proposed HRC system in the form of a CPH system with that of state-of-the-art HRC systems not designed as CPH systems [65,66,67,68].

Table 12.

Comparison of the proposed HRC system in the form of a CPH system with similar state-of-the-art HRC systems [65,66,67,68].

The ability to examine the design, implementation, and evaluation of the communication module more closely in the modular CPH approach might explain its superior results compared with the conventional approaches investigated in the past [65,66,67,68]. However, the effectiveness and superiority of the CPH systems approach may largely depend on how accurately the modules and functions of the CPH system within the HRC system are defined and adjusted [8,16].

8.8. Scaling up the System

The system presented in Figure 2 may not directly correspond to industrial systems employed for human–robot collaborative manufacturing. However, it is a proof-of-concept system that serves the purpose of the research presented here, i.e., to investigate the feasibility and effectiveness of implementing a modular CPH system for human–robot collaborative assembly in manufacturing. In principle, therefore, the findings should carry over to other HRC systems used in industry. Real industrial assembly may involve complex quality standards, tight cycle times, cost pressures, and multi-part coordination. However, all these factors can be incorporated on top of the sample collaborative task presented in this article. If the tasks are complex, the human–robot collaboration and subtask allocation may need to be designed more carefully to address the task requirements, and more skill and care may be needed to design the collaborative task and subtask allocation and to identify the scope and boundaries of the cyber, physical, and human system components. Nevertheless, the CPH system principles shown here should be applicable to complex problems.

A more realistic HRC system can also be developed in the form of a CPH system. For example, an HRC system for assembling the center console of automobiles can be developed as a CPH system [21]. The HRC system (hybrid cell) can be connected to the central automation system of the factory floor through suitable conveyor belts: one conveyor belt can deliver input components to the hybrid cell, while another dispatches the finished products to the next processing unit [9,17,36]. The HRC system can also be part of the central or integrated PLC system of the manufacturing plant [9,71]. Other advanced facilities, such as the integrated synergistic industry automation system [71], process operation diagrams [72], computer vision systems [73], and digital twins [74], can further augment the abilities and scope of the proposed CPH system. The resulting industrial CPH systems need to be tested with actual industry workers in industrial environments. To make the presented framework useful in industrial settings, worker acceptance, training requirements, job displacement concerns, and long-term adaptation effects, which critically influence the success of industrial HRC systems, should also be considered.

8.9. Impacts of System Latency

Continuous trust computation and dynamic communication adjustments based on the computed trust may impose a computational burden in industrial settings. System latency (e.g., delays in trust computation and in communicating trust-based messages and decisions) may affect interaction fluency and system performance [75], because latency may loosen the coupling between the human component and the cyber and physical components of the CPH system. In some cases, however, a degree of latency may help humans keep pace with the physical system. Therefore, an optimum level of system latency should be maintained in the CPH system by investigating performance variations under different latency conditions and explicitly justifying the real-time assumptions. To do so, the value of k (time step) in the trust models can be varied and its impact on interaction fluency and system performance analyzed [21].
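A latency study of this kind can be prototyped offline before running it with subjects. The sketch below is illustrative only. It assumes a first-order trust update T(k) = a·T(k−1) + b·P(k) driven by a performance signal P(k); this form, the coefficients a and b, the parameters `period` and `delay`, and the synthetic data are all hypothetical and are not the trust model used in this study. It reads "varying k" as changing how often trust is recomputed (`period`) and models communication latency as a delivery lag of `delay` steps, then measures how far the trust value actually used drifts from the current one.

```python
import random


def simulate_trust(perf, a=0.8, b=0.2, t0=0.5):
    """Assumed first-order model T(k) = a*T(k-1) + b*P(k).

    With a + b = 1, P(k) in [0, 1] and t0 in [0, 1], T(k) stays in [0, 1].
    """
    trust, t = [], t0
    for p in perf:
        t = a * t + b * p
        trust.append(t)
    return trust


def observed_trust(trust, period=1, delay=0):
    """Trust value available to the partner at each step k when trust is
    recomputed only every `period` steps and delivered `delay` steps late."""
    seen = []
    for k in range(len(trust)):
        j = max(k - delay, 0)  # latest step whose result has arrived
        j -= j % period        # most recent step at which trust was recomputed
        seen.append(trust[j])
    return seen


def mean_abs_error(trust, seen):
    """Average gap between the current trust and the value actually used."""
    return sum(abs(s - t) for s, t in zip(seen, trust)) / len(trust)


rng = random.Random(0)
perf = [rng.uniform(0.4, 1.0) for _ in range(200)]  # synthetic performance
trust = simulate_trust(perf)
for period, delay in [(1, 0), (1, 2), (5, 0), (5, 2)]:
    err = mean_abs_error(trust, observed_trust(trust, period, delay))
    print(f"period={period} delay={delay} error={err:.4f}")
```

In a real study, the error measure would be replaced by the interaction-fluency and task-performance metrics of interest, and the synthetic signal by logged performance data.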

8.10. Communication Modalities

In this paper, we adjusted the communication module only. The objectives of the experiments were to check how easily each module could be identified and how clearly the impacts of changes made in that module could be understood. Human–robot collaborative tasks can use many communication modalities, such as gesture recognition, natural language processing, gaze tracking, haptic feedback, and adaptive communication systems that respond to user expertise levels and preferences. However, these modalities are usually not all applied to a single human–robot collaborative task; the choice of modalities depends on task requirements and other constraints. Our main objective in this article was not to innovate in communication modalities for human–robot collaborative systems, but to show how a human–robot system can be designed and managed modularly and how such modularization can work effectively. The presented framework does not restrict innovations in communication modalities. We tested communication methods here to show that the CPH system makes it easy and convenient to adjust communication modalities. The modalities we used (e.g., visual displays, audio announcements, and basic warning messages) appear to be useful in industrial settings, and the presented framework should be able to accommodate other suitable modalities on top of them.
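The claim that the CPH structure makes communication modalities easy to adjust can be illustrated with a pluggable communication module. The sketch below is a hypothetical design, not the system's actual software: the class `CommunicationModule`, the trust `threshold` of 0.6, and the rule that a message is sent only when computed trust falls below that threshold are all assumptions. Each modality is a named channel, so adding or swapping one (for example, a haptic or gaze-based channel) changes only the communication module, not the computing or control modules.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical channel type: takes a message and returns what was emitted.
Channel = Callable[[str], str]


@dataclass
class CommunicationModule:
    """Hypothetical communication module of a CPH system.

    Modalities are registered by name, so one can be added, removed, or
    swapped without touching the computing or control modules.
    """
    threshold: float = 0.6  # assumed trust level that triggers a message
    channels: Dict[str, Channel] = field(default_factory=dict)

    def register(self, name: str, channel: Channel) -> None:
        self.channels[name] = channel

    def notify(self, trust: float, message: str, active: List[str]) -> List[str]:
        """Send `message` over every active, registered modality when the
        computed trust falls below the threshold; otherwise stay silent."""
        if trust >= self.threshold:
            return []
        return [self.channels[n](message) for n in active if n in self.channels]


comm = CommunicationModule()
comm.register("display", lambda m: f"[screen] {m}")
comm.register("audio", lambda m: f"[speaker] {m}")
comm.register("warning", lambda m: f"[WARNING] {m}")

# One protocol might use a single cue, another a combination of cues.
print(comm.notify(0.45, "Robot trust in human is low", ["display"]))
print(comm.notify(0.45, "Robot trust in human is low", ["display", "audio", "warning"]))
```

Under this design, an experimental protocol reduces to a choice of the `active` list, which is one way the number and combination of communication cues could be varied between experiments.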

8.11. Impacts of Gender

The gender of human subjects might affect human–robot collaborative task performance and, consequently, trust computation and trust-based decisions. However, we compared performance and human–robot interactions across communication protocols, and the same subjects (both male and female) participated in all experimental protocols in random order. Gender is therefore unlikely to have biased the comparative results presented in Table 3, Table 4, Table 5, Table 6, Table 7, Table 8, Table 9, Table 10 and Table 11. A separate hypothesis could be tested to examine gender effects more clearly, and the trust computation could be adjusted accordingly.
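The argument above rests on a within-subject design: because every subject runs every protocol, each protocol sees exactly the same gender mix. The sketch below illustrates this with hypothetical subject IDs, gender labels, and protocol names (`P1` to `P3`); it is not the study's roster or its actual assignment procedure. Each subject's protocol order is shuffled independently, and the gender composition is then tallied per protocol.

```python
import random
from collections import Counter


def assign_orders(subjects, protocols, seed=0):
    """Within-subject design: every subject runs every protocol,
    in an independently shuffled order."""
    rng = random.Random(seed)
    orders = {}
    for s in subjects:
        order = list(protocols)
        rng.shuffle(order)
        orders[s] = order
    return orders


def gender_mix(orders, gender):
    """Count the genders of the subjects who ran each protocol."""
    mix = {}
    for s, order in orders.items():
        for p in order:
            mix.setdefault(p, Counter())[gender[s]] += 1
    return mix


# Hypothetical subjects and labels, not the study's roster.
gender = {"S1": "F", "S2": "M", "S3": "F", "S4": "M", "S5": "M"}
orders = assign_orders(gender, ["P1", "P2", "P3"])
mix = gender_mix(orders, gender)
print(mix)
```

Identical composition across protocols removes gender as a confound in between-protocol comparisons, although it does not by itself rule out an interaction between gender and protocol, which is what a separate hypothesis test would address.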

9. Conclusions and Future Work

We proposed a human–robot bidirectional trust-triggered cyber–physical–human (CPH) system framework for human–robot collaborative assembly in flexible manufacturing and evaluated the impact of modulating communications in the CPH system on system performance and human–robot interactions. For this purpose, we developed a one robot–one human hybrid cell in the form of a CPH system to perform human–robot collaborative assembly in flexible manufacturing. We computed human trust in the robot and robot trust in the human to constitute a human–robot bidirectional trust model, and used the trust values to monitor, analyze, and communicate the performance of the CPH system. We modulated the communication complexities and communication methods among the cyber, physical, and human systems and investigated their impacts on the performance of the CPH system in the collaborative assembly task, in terms of assembly performance and human–robot interactional effectiveness. The results show that it is possible and convenient to define and modulate, separately, the communication methods and complexities triggered by bidirectional trust. They also show that such modulations significantly impact the effectiveness of the CPH system in terms of human–robot interaction (HRI) and task performance (efficiency and quality). The results prove the efficacy and superiority of configuring HRC systems as modular CPH systems over using conventional HRC systems in terms of HRI and task performance. They thus show that an HRC system can be effectively designed in the form of a CPH system, which can enhance the scope, modularity, flexibility, and ease of design, implementation, evaluation, and maintenance of the HRC system. These results are important for advancing HRC systems in various applications, such as manufacturing, construction, healthcare, transport, and logistics.

In the future, we will adjust the other functional modules (control and computing) of the CPH system separately using innovative methods and investigate their interrelationships and interdependencies, as well as their impacts on HRC system performance and human–robot interactions. Specifically, we will design and evaluate advanced control strategies (e.g., trust-triggered model predictive control and inverse reinforcement learning control) for the CPH system to modulate its performance and its interactions with users. We will also investigate the impact of system latency on system performance and interactions, and validate the results obtained from the proof-of-concept CPH system presented here in industrial settings. In the validation study, user diversity will be increased by recruiting human subjects of diverse backgrounds, ages, genders, and experience levels.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was conducted under ethical guidelines. The author followed IRB ethical principles while working with the human subjects in this research, and informed consent was obtained from the subjects. The study was conducted in accordance with the Declaration of Helsinki and was approved by the Institutional Review Board of Clemson University (approval code 2014-241).

Data Availability Statement

Data are available on request. The corresponding author may be contacted by email regarding data availability.

Acknowledgments

This research was carried out partly when the author was at Clemson University, SC, USA. The author is thankful to Clemson University for the support he received to conduct the research presented here. The author is also thankful to the subjects (Clemson University students and researchers) who participated in the experiments.

Conflicts of Interest

The author declares no conflict of interest.

References

- Mondellini, M.; Nicora, M.L.; Prajod, P.; André, E.; Vertechy, R.; Antonietti, A.; Malosio, M. Exploring the dynamics between cobot’s production rhythm, locus of control and emotional state in a collaborative assembly scenario. In Proceedings of the IEEE 4th International Conference on Human-Machine Systems, Toronto, ON, Canada, 15–17 May 2024; pp. 1–6.

- Das, A.R.; Koskinopoulou, M. Towards sustainable manufacturing: A review on innovations in robotic assembly and disassembly. IEEE Access 2025, 13, 100149–100166.

- Qin, S.; Xiang, C.; Wang, J.; Liu, S.; Guo, X.; Qi, L. Multiple product hybrid disassembly line balancing problem with human-robot collaboration. IEEE Trans. Autom. Sci. Eng. 2025, 22, 712–723.

- Jung, D.; Gu, C.; Park, J.; Cheong, J. Touch gesture recognition-based physical human–robot interaction for collaborative tasks. IEEE Trans. Cogn. Dev. Syst. 2025, 17, 421–435.

- Li, M.; Ling, S.; Qu, T.; Lu, S.; Li, M.; Guo, D. Real-time data-driven hybrid synchronization for integrated planning, scheduling, and execution toward industry 5.0 human-centric manufacturing. IEEE Trans. Syst. Man Cybern. Syst. 2025, 55, 5670–5681.

- Zhao, S.; Li, Z.; Xia, H.; Cui, R. Skin-inspired triple tactile sensors integrated on robotic fingers for bimanual manipulation in human-cyber-physical systems. IEEE Trans. Autom. Sci. Eng. 2025, 22, 656–666.

- Almasarwah, N.; Abdelall, E.; Saraireh, M.; Ramadan, S. Human-cyber-physical disassembly workstation 5.0 for sustainable manufacturing. In Proceedings of the 2022 International Conference on Emerging Trends in Computing and Engineering Applications (ETCEA), Karak, Jordan, 23–25 November 2022; pp. 1–5.

- Lou, S.; Hu, Z.; Zhang, Y.; Feng, Y.; Zhou, M.; Lv, C. Human-cyber-physical system for industry 5.0: A review from a human-centric perspective. In Proceedings of the IEEE Transactions on Automation Science and Engineering, Bari, Italy, 28 August–1 September 2024.

- Huang, K.-C.; Ku, H.-C.; Chuang, T.-H.; Huang, W.-N. Improved fault diagnosis method for PLC-based manufacturing processes with validation through a cyber-physical system. In Proceedings of the 2024 IEEE 4th International Conference on Electronic Communications, Internet of Things and Big Data (ICEIB), Taipei, Taiwan, 19–21 April 2024; pp. 705–710.

- Qian, F.; Tang, Y.; Yu, X. The future of process industry: A cyber–physical–social system perspective. IEEE Trans. Cybern. 2024, 54, 3878–3889.

- Li, Z.; Sun, H.; Gong, J.; Chen, Z.; Meng, X.; Yu, X. Enhancing SMT quality and efficiency with self-adaptive collaborative optimization. IEEE Trans. Cybern. 2025, 55, 1409–1420.

- Martins, G.S.M.; Martins, T.; Soares, T.R.; Zafalão, I.H.L.; Fernandes, A.C.; Granziani, Á.P. Optimization machining processes in multi-user cyber-physical cells: A comparative. In Proceedings of the 2024 3rd International Conference on Automation, Robotics and Computer Engineering (ICARCE), Wuhan, China, 17–18 December 2024; pp. 50–54.

- Paredes-Astudillo, Y.A.; Moreno, D.; Vargas, A.-M.; Angel, M.-A.; Perez, S.; Jimenez, J.-F. Human fatigue aware cyber-physical production system. In Proceedings of the 2020 IEEE International Conference on Human-Machine Systems (ICHMS), Rome, Italy, 7–9 September 2020; pp. 1–6.

- Fantini, P.; Tavola, G.; Taisch, M.; Barbosa, J.; Leitao, P.; Liu, Y. Exploring the integration of the human as a flexibility factor in CPS enabled manufacturing environments: Methodology and results. In Proceedings of the 42nd Annual Conference of the IEEE Industrial Electronics Society (IECON 2016), Florence, Italy, 23–26 October 2016; pp. 5711–5716.

- Krueger, V.; Chazoule, A.; Crosby, M.; Lasnier, A.; Pedersen, M.R.; Rovida, F. A vertical and cyber–physical integration of cognitive robots in manufacturing. Proc. IEEE 2016, 104, 1114–1127.

- Rahman, S.M.M. Cognitive cyber-physical system (C-CPS) for human-robot collaborative manufacturing. In Proceedings of the 2019 IEEE Annual Conference on System of Systems Engineering, Anchorage, AK, USA, 19–22 May 2019; pp. 125–130.

- Campagna, G.; Lagomarsino, M.; Lorenzini, M.; Chrysostomou, D.; Rehm, M.; Ajoudani, A. Promoting trust in industrial human-robot collaboration through preference-based optimization. IEEE Robot. Autom. Lett. 2024, 9, 9255–9262.

- Yu, C.; Serhan, B.; Cangelosi, A. ToP-ToM: Trust-aware robot policy with theory of mind. In Proceedings of the IEEE International Conference on Robotics and Automation, Yokohama, Japan, 13–17 May 2024; pp. 7888–7894.

- Li, Y.; Cui, R.; Yan, W.; Zhang, S.; Yang, C. Reconciling conflicting intents: Bidirectional trust-based variable autonomy for mobile robots. IEEE Robot. Autom. Lett. 2024, 9, 5615–5622.

- Azevedo-Sa, H.; Yang, X.J.; Robert, L.P.; Tilbury, D.M. A unified bi-directional model for natural and artificial trust in human–robot collaboration. IEEE Robot. Autom. Lett. 2021, 6, 5913–5920.

- Rahman, S.M.M.; Wang, Y. Mutual trust-based subtask allocation for human-robot collaboration in flexible lightweight assembly in manufacturing. Mechatronics 2018, 54, 94–109.

- Salehzadeh, R.; Gong, J.; Jalili, N. Purposeful communication in human–robot collaboration: A review of modern approaches in manufacturing. IEEE Access 2022, 10, 129344–129361.

- Raković, M.; Duarte, N.F.; Marques, J.; Billard, A.; Santos-Victor, J. The gaze dialogue model: Nonverbal communication in HHI and HRI. IEEE Trans. Cybern. 2024, 54, 2026–2039.