The Impact of Physical Props and Physics-Associated Visual Feedback on VR Archery Performance

Highlights

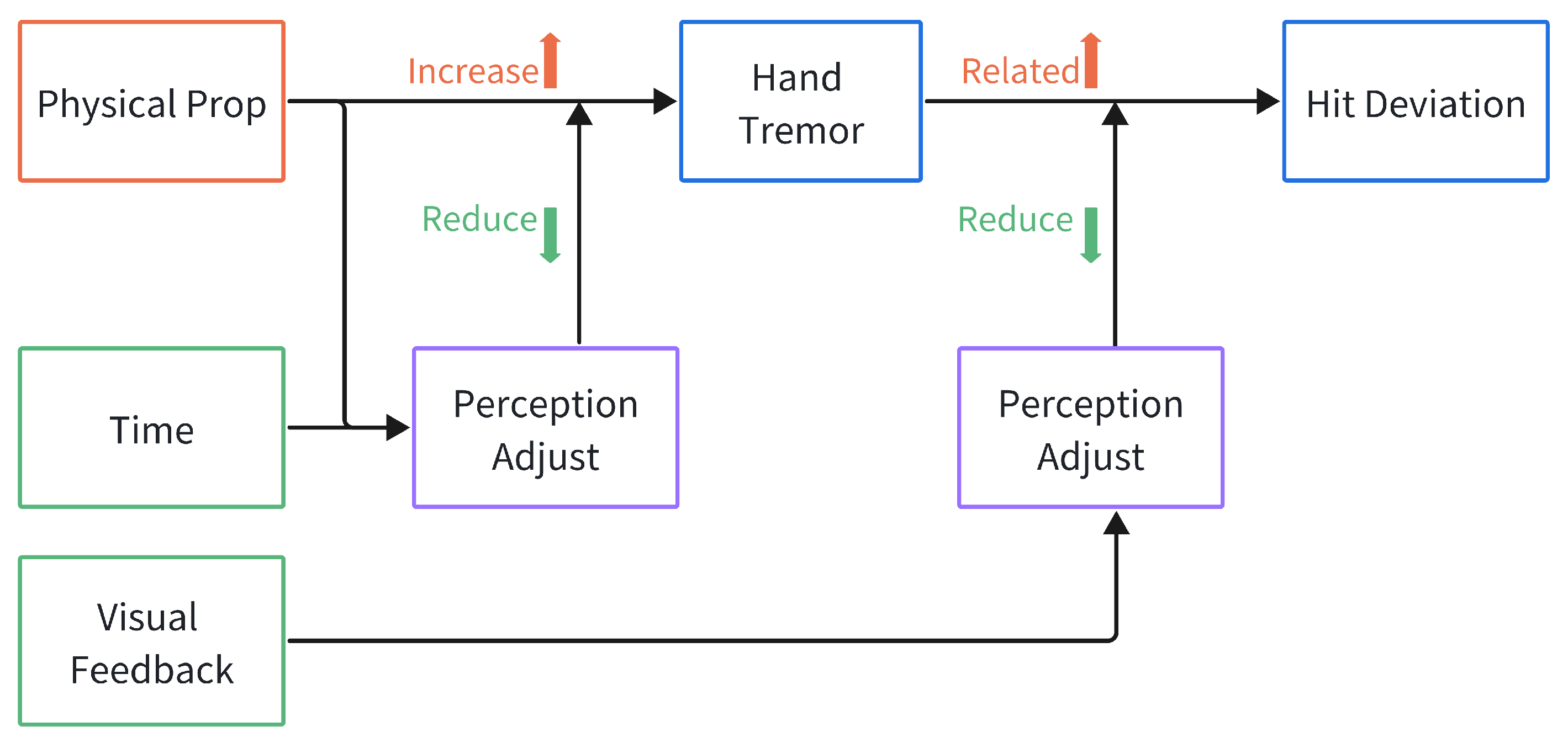

- Physical props significantly enhance presence and enjoyment but increase hand tremor, impairing task performance in high-skill VR archery.

- Physics-associated visual feedback moderates the negative impact of hand tremor on performance and synergistically enhances flow experience when combined with physical props.

- VR designers should implement multimodal integration where physical props are paired with congruent visual feedback to optimize both engagement and performance.

- The identified moderated pathway provides a framework for balancing experiential benefits against performance demands in high-skill VR applications.

Abstract

1. Introduction

1.1. Background

- Develop and validate a virtual reality archery framework with integrated sensors that can objectively quantify motion performance through data acquisition.

- Investigate the underlying mechanisms through which physical props affect both performance and user experience in high-skill VR tasks.

- Explore how physics-associated visual feedback interacts with the physical prop interfaces, and whether it can mitigate potential performance decrements while enhancing the subjective experience.

- We establish a sensor-driven experimental framework that objectively quantifies motor performance in VR archery tasks, offering a potential pathway for methodological refinement in XR interaction research.

- Through objective quantitative metrics, this study provides a nuanced, mechanism-based explanation for the performance-experience trade-off associated with physical props in high-skill VR tasks.

- This study elucidates how physics-associated visual feedback moderates the impact of motor instability on performance and experience, offering principled insights for designing effective and engaging multimodal VR interactions.

1.2. Related Work

1.2.1. Application Research of Physical Props in VR

1.2.2. The Impact of Simulated Haptic and Visual Feedback in VR

2. Material and Methods

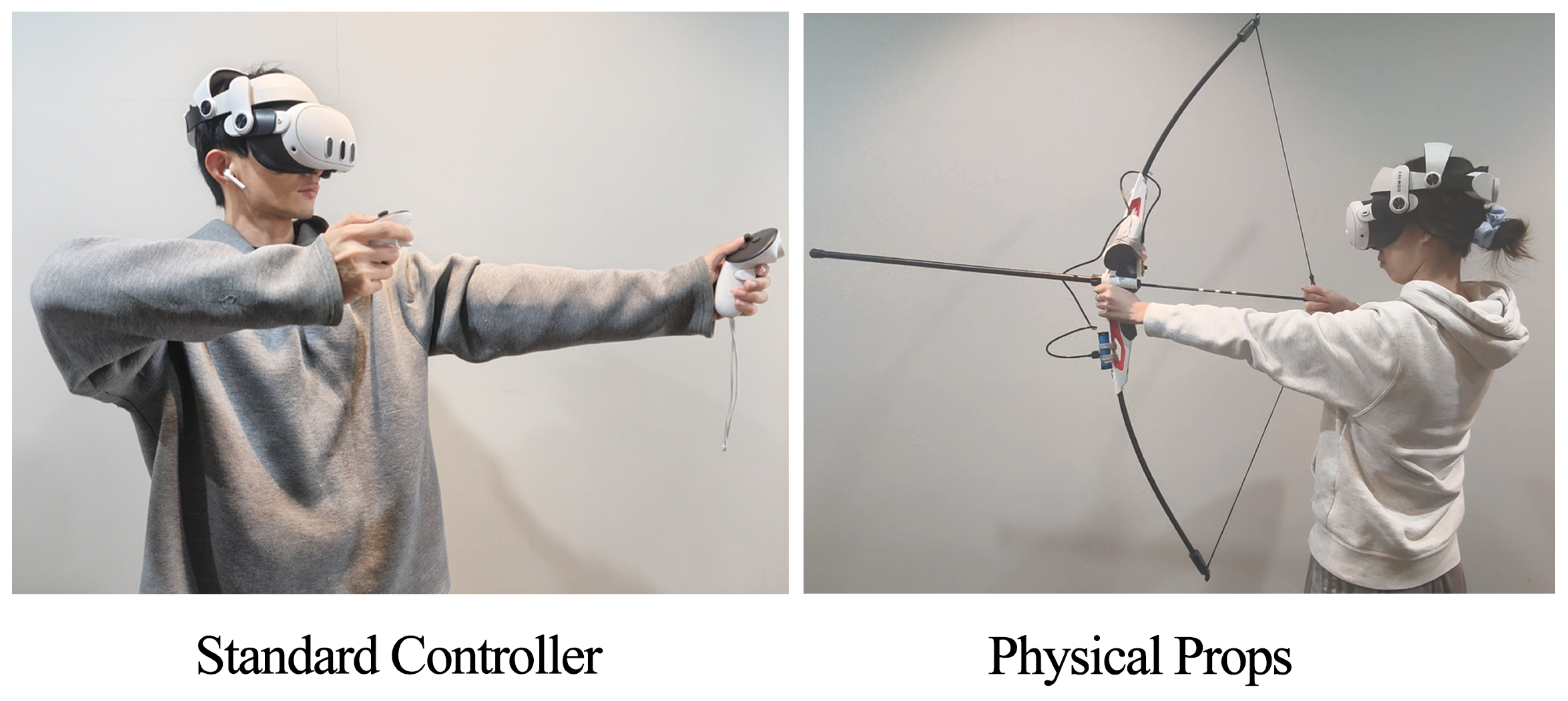

2.1. Design of the Physical Prop Interfaces

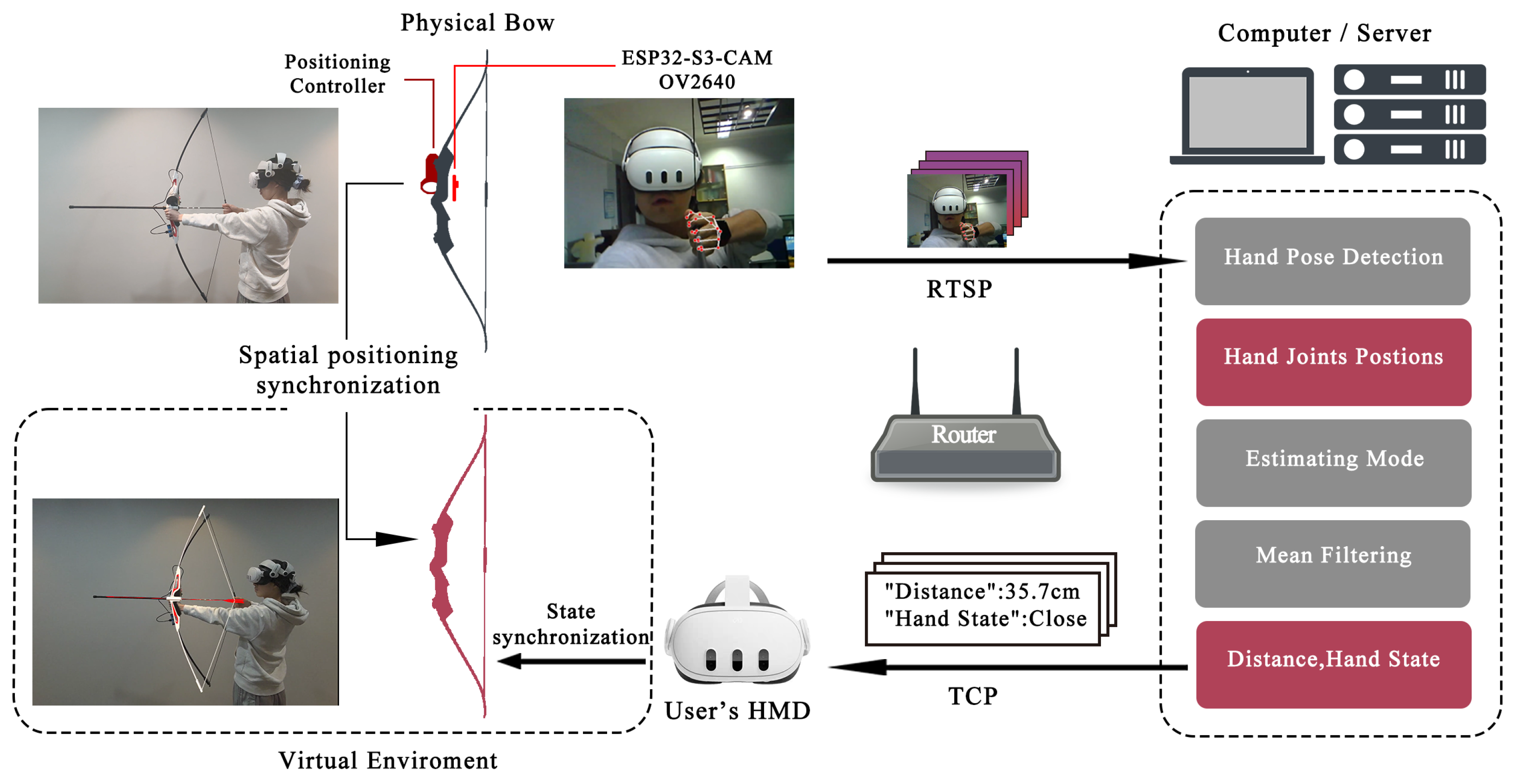

2.1.1. The Hardware Architecture

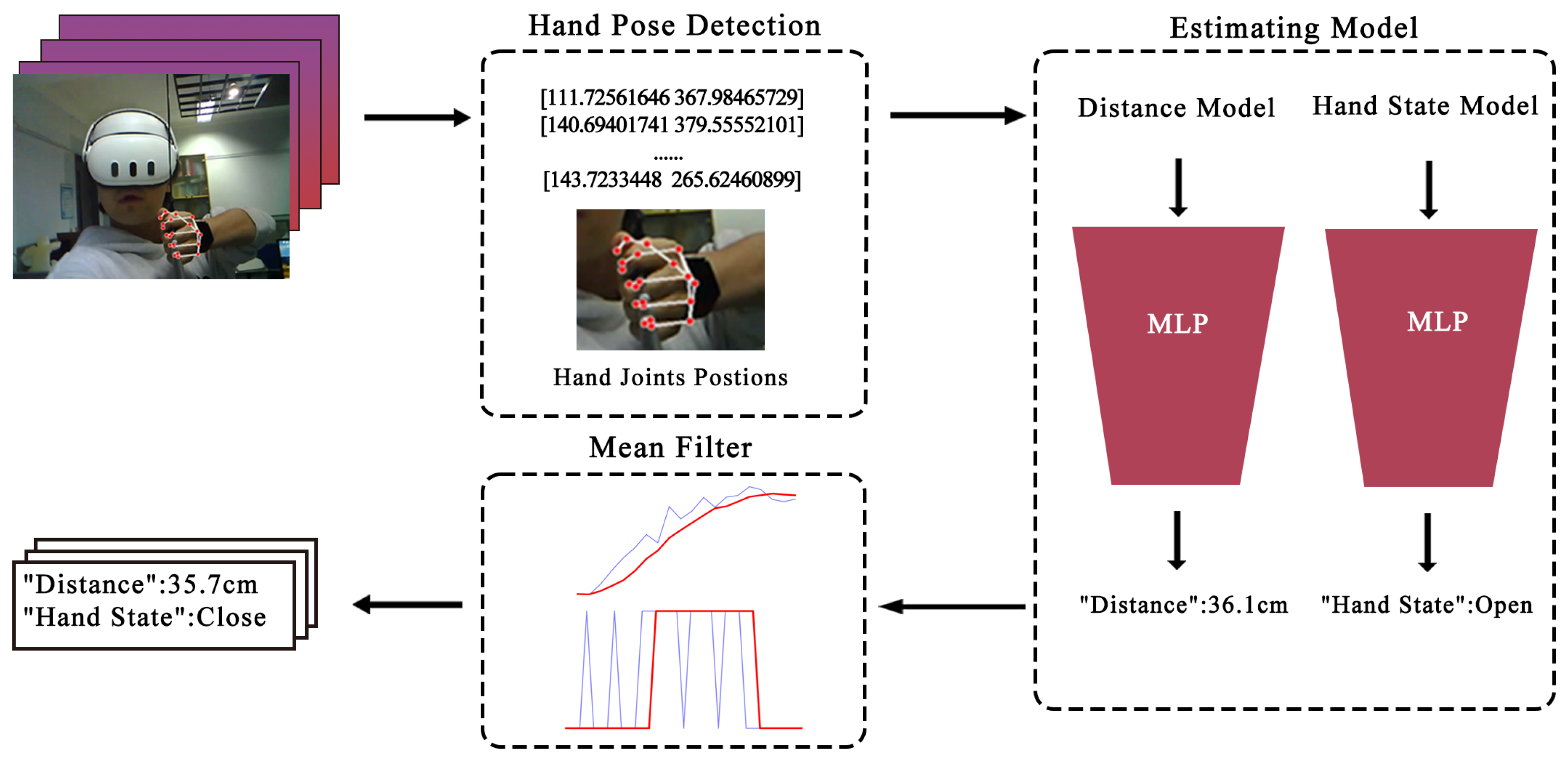

2.1.2. Gesture Recognition

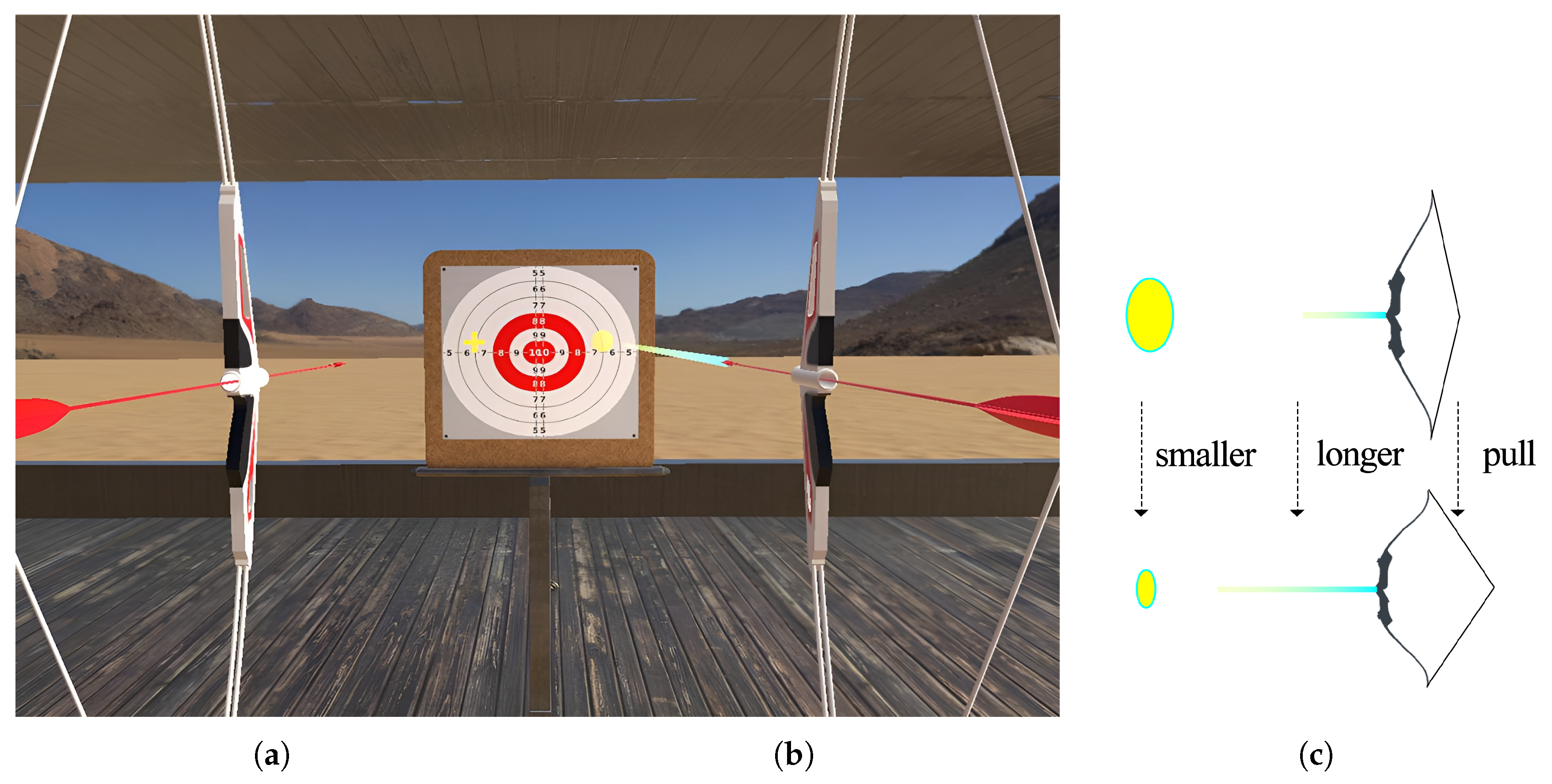

2.2. Physics-Associated Visual Feedback

2.3. Experiment

2.3.1. Study Design

2.3.2. Participants

2.3.3. Procedures

2.3.4. Measures

Objective Measures

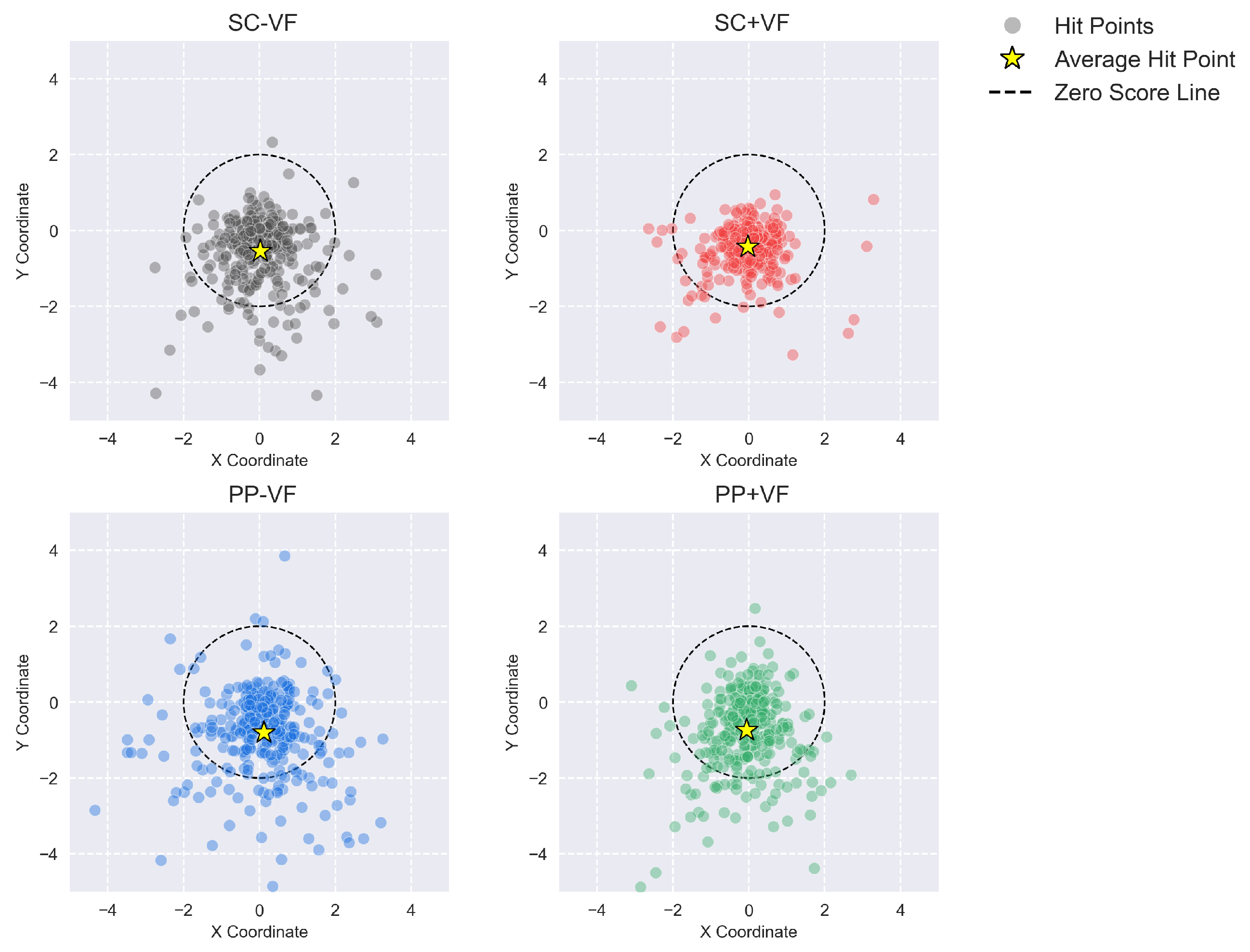

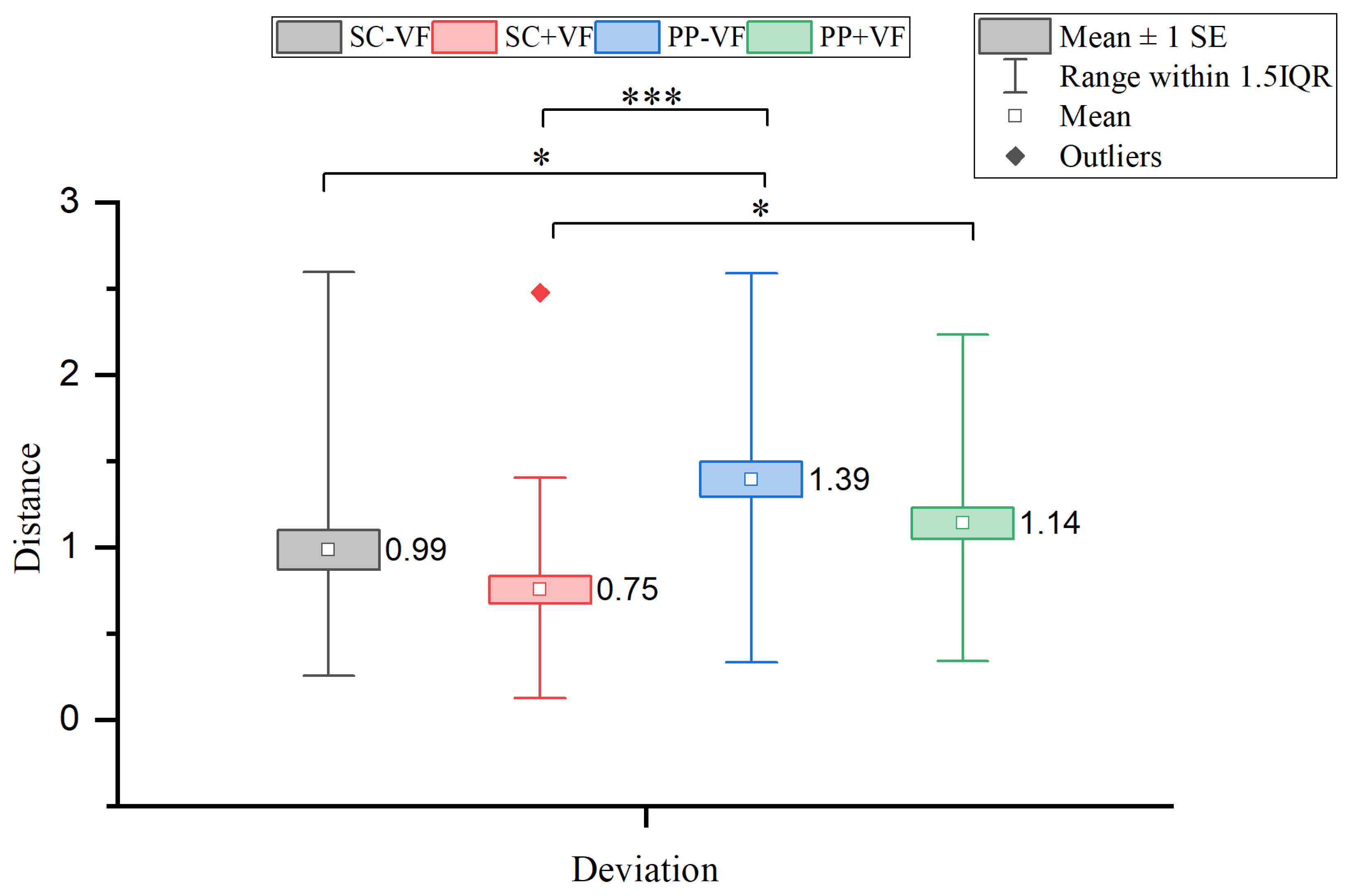

- Hit deviation, which reflects the precision with which users completed the task. For each shot, the Euclidean distance between the arrow’s impact coordinates on the target plane and the bullseye is recorded. This distance is then normalized so that the radial distance from the bullseye (10-ring) to the 5-ring line equals one unit.

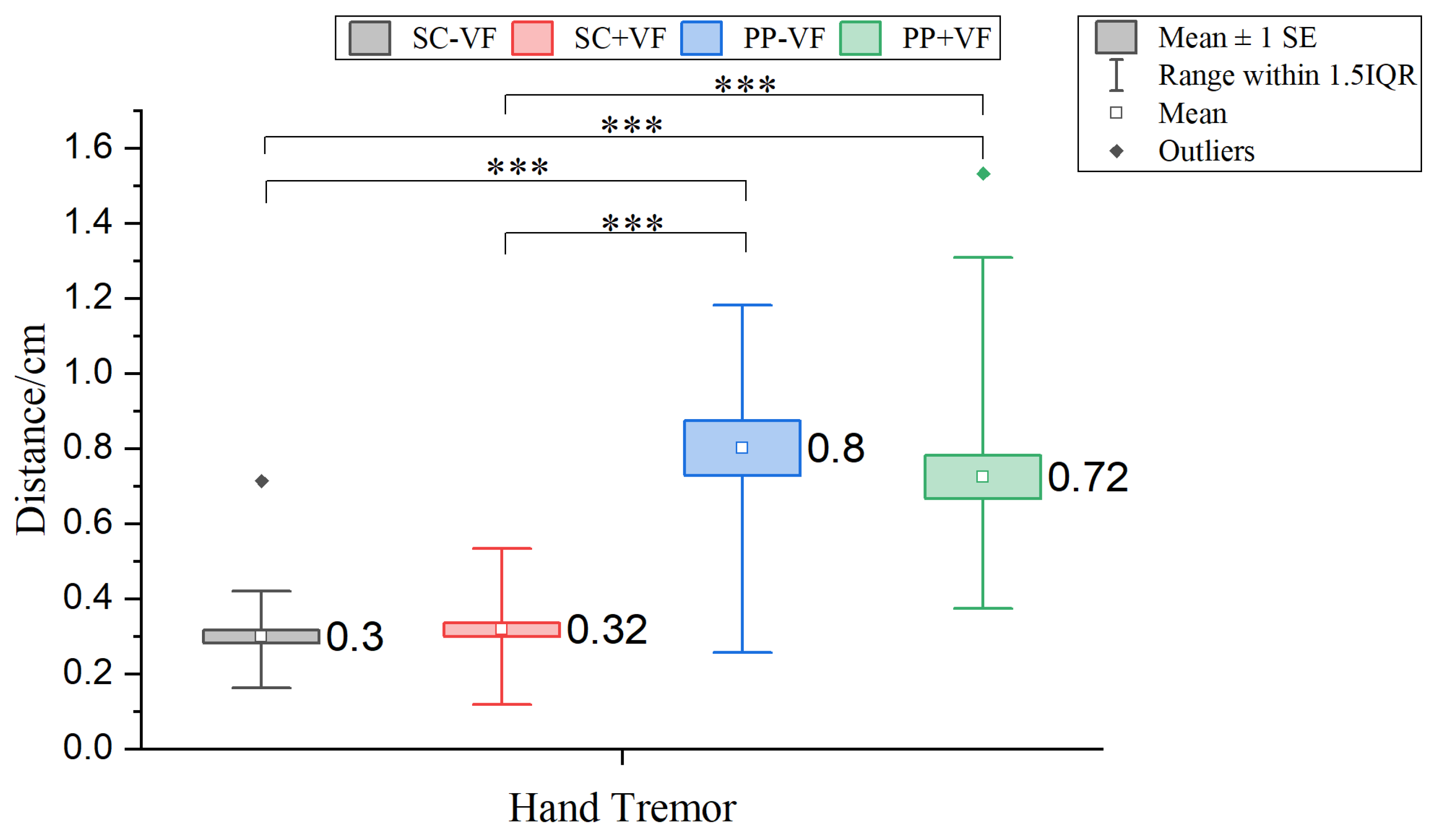

- Hand tremor, an objective factor potentially affecting precision, was operationalized as the mean positional deviation of the bow. The bow’s position was sampled continuously at 60 Hz during the second before firing. For each frame, the Euclidean distance from the bow’s position to the mean position across all frames was computed. The arithmetic mean of these distances (in centimeters) served as the tremor index, with higher values denoting poorer arm stability.

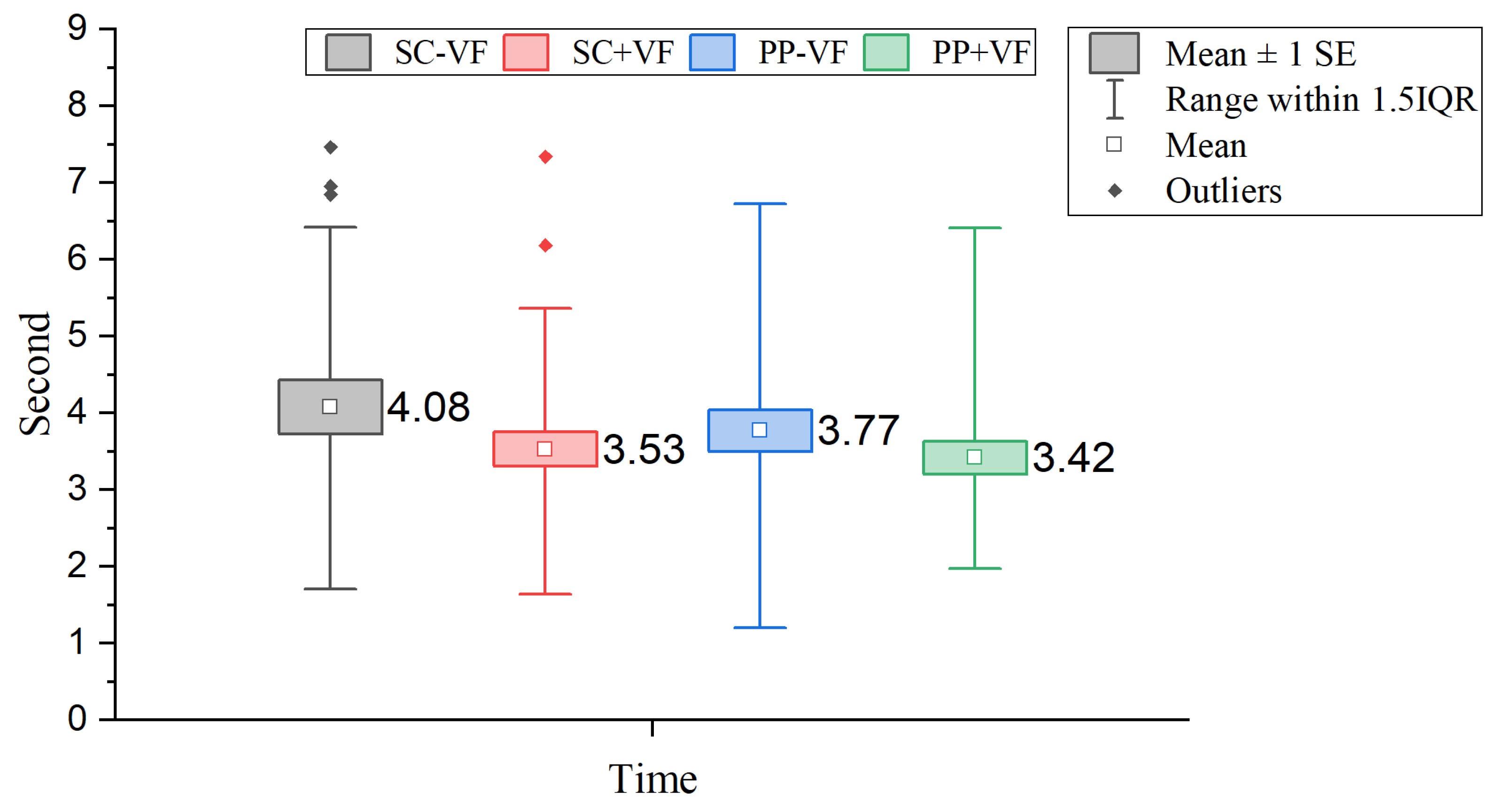

- Task completion time, which indicates efficiency. For each shot, the system records the precise duration from the initiation of the aiming action to the moment the arrow is released.
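The three objective measures described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function names, the `(timestamp, position)` sample layout, and the `ring5_radius` parameter are assumptions introduced here, and positions are assumed to already be expressed in centimeters.

```python
import math
from statistics import fmean


def hit_deviation(impact, bullseye, ring5_radius):
    """Euclidean distance from impact to bullseye, in units of the 5-ring radius.

    `ring5_radius` (hypothetical name) is the distance from the bullseye to the
    5-ring line, measured in the same units as the coordinates.
    """
    if ring5_radius <= 0:
        raise ValueError("ring5_radius must be positive")
    return math.dist(impact, bullseye) / ring5_radius


def window_before_release(samples, release_t, window_s=1.0):
    """Keep the positions sampled during the last `window_s` seconds before release.

    `samples` is an assumed layout: an iterable of (timestamp_s, position) pairs.
    At 60 Hz, a one-second window holds roughly 60 positions.
    """
    return [pos for t, pos in samples if release_t - window_s <= t <= release_t]


def hand_tremor(positions):
    """Mean distance of each sampled bow position from the mean position."""
    if not positions:
        raise ValueError("need at least one sampled position")
    centroid = [fmean(axis) for axis in zip(*positions)]
    return fmean(math.dist(p, centroid) for p in positions)


def completion_time(aim_start_t, release_t):
    """Seconds elapsed between the start of aiming and the arrow release."""
    return release_t - aim_start_t


# Illustrative values only (not data from the study).
print(hit_deviation((3.0, 4.0), (0.0, 0.0), 10.0))
print(hand_tremor([(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, -1.0, 0.0)]))
print(completion_time(1.25, 4.75))
```

Taking distances from the mean position, rather than from the first frame, means a constant aiming offset does not count as tremor; only scatter around the aiming point does.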

Subjective Measures

- Presence: We selected 4 items from the I-group Presence Questionnaire [39] to assess the immersive experience of the players.

- Physical Activity Enjoyment: We selected 4 items from the Short Version of the Physical Activity Enjoyment Scale [40] to evaluate the enjoyment of the players, focusing on positive experiences during the activity.

- Flow experience: We selected 4 items from a validated scale [41] to measure the flow experience of the players, covering key dimensions such as concentration and sense of control.

- Competence: To assess players’ sense of competence—a psychological need reflecting their perceived effectiveness and mastery in the game environment—we selected 4 items from the Competence subscale of the Game Experience Questionnaire [38].

- Task Load: We measured the mental and physical demands of the task using the 6 items of the NASA-TLX questionnaire [42], and the overall workload score was computed using the unweighted Raw TLX procedure.

- System Usability: We evaluated the usability of the game system using the 10 items of the System Usability Scale [43].

- Future Use Intention: We measured players’ intention to continue using the system in the future through a single-item scale, reflecting the system’s attractiveness and potential value.
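As a small illustration of the unweighted Raw TLX procedure mentioned for Task Load, the overall score is simply the mean of the six subscale ratings, with no pairwise weighting step. The sketch below assumes the six standard NASA-TLX subscales and a dictionary input; the key names and the example ratings are hypothetical.

```python
from statistics import fmean

# The six NASA-TLX subscales; the dictionary keys are names chosen for this sketch.
TLX_SUBSCALES = (
    "mental_demand",
    "physical_demand",
    "temporal_demand",
    "performance",
    "effort",
    "frustration",
)


def raw_tlx(ratings):
    """Unweighted (Raw) TLX: arithmetic mean of the six subscale ratings."""
    missing = [name for name in TLX_SUBSCALES if name not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    return fmean(ratings[name] for name in TLX_SUBSCALES)


# Illustrative ratings only (not data from the study).
example = {
    "mental_demand": 4,
    "physical_demand": 3,
    "temporal_demand": 2,
    "performance": 5,
    "effort": 4,
    "frustration": 3,
}
print(raw_tlx(example))
```

The result stays on whatever response scale the items used, so scores are only comparable across studies after rescaling to a common range.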

3. Results

3.1. Task Performance

3.1.1. Hit Deviation Analysis

3.1.2. Task Completion Time Analysis

3.1.3. Hand Tremor Analysis

3.1.4. Linear Mixed-Effects Analysis of Hand Tremor

3.1.5. Linear Mixed-Effects Analysis of Hit Deviation

3.2. Questionnaire Results

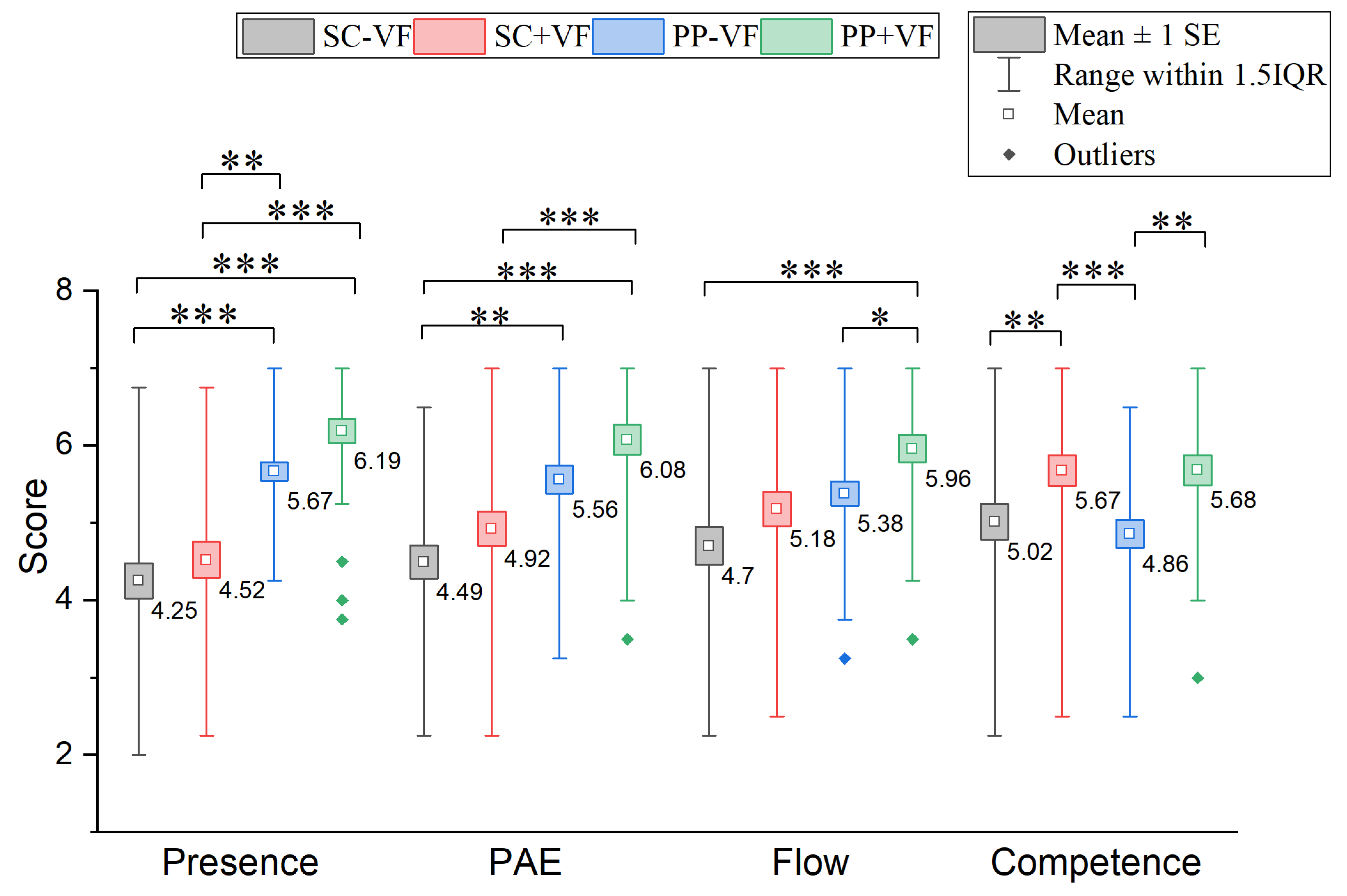

3.2.1. Presence

3.2.2. PAE

3.2.3. Flow Experience

3.2.4. Competence

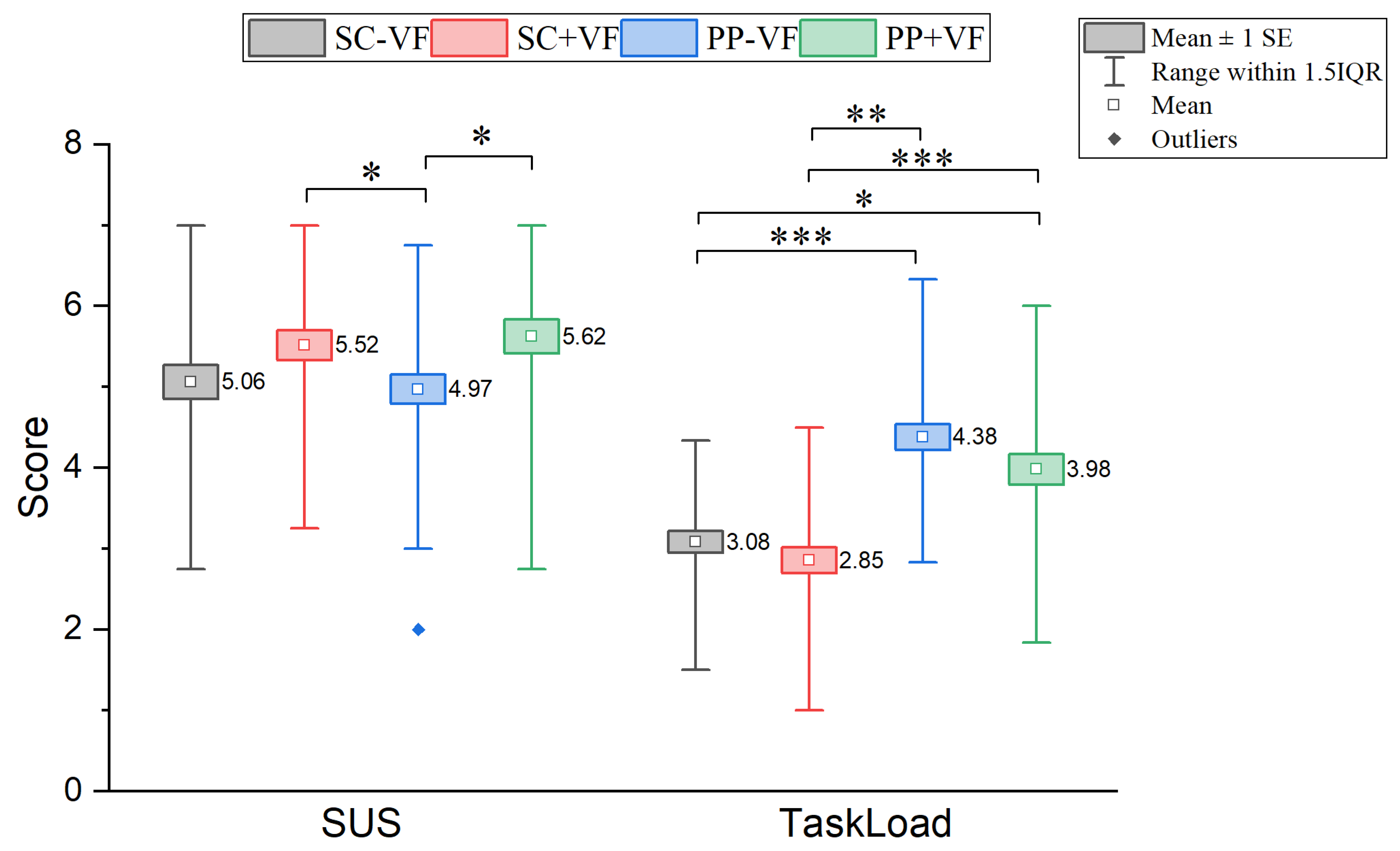

3.2.5. SUS and Task Load

4. Discussion

4.1. Main Findings

4.2. Reconciling Findings from Different Analytical Approaches

4.3. Design Inspiration

4.4. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Measurement | Model | Cronbach’s Alpha | KMO | Bartlett’s Test: Chi-Square | Bartlett’s Test: df | Bartlett’s Test: Sig. |
|---|---|---|---|---|---|---|
| Presence | SC − VF | 0.909 | 0.822 | 89.463 | 6 | 0.000 |
| Presence | SC + VF | 0.911 | 0.821 | 86.018 | 6 | 0.000 |
| Presence | PP − VF | 0.700 | 0.734 | 23.150 | 6 | 0.001 |
| Presence | PP + VF | 0.883 | 0.758 | 70.769 | 6 | 0.000 |
| PAE | SC − VF | 0.828 | 0.768 | 59.059 | 6 | 0.000 |
| PAE | SC + VF | 0.876 | 0.816 | 68.780 | 6 | 0.000 |
| PAE | PP − VF | 0.842 | 0.797 | 51.496 | 6 | 0.000 |
| PAE | PP + VF | 0.855 | 0.795 | 87.658 | 6 | 0.000 |
| Flow Experience | SC − VF | 0.896 | 0.747 | 82.538 | 6 | 0.000 |
| Flow Experience | SC + VF | 0.884 | 0.713 | 88.956 | 6 | 0.000 |
| Flow Experience | PP − VF | 0.697 | 0.703 | 27.851 | 6 | 0.000 |
| Flow Experience | PP + VF | 0.781 | 0.721 | 59.258 | 6 | 0.000 |
| Competence | SC − VF | 0.904 | 0.831 | 80.198 | 6 | 0.000 |
| Competence | SC + VF | 0.892 | 0.804 | 83.697 | 6 | 0.000 |
| Competence | PP − VF | 0.873 | 0.792 | 65.136 | 6 | 0.000 |
| Competence | PP + VF | 0.907 | 0.830 | 88.088 | 6 | 0.000 |
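For readers who want to reproduce the reliability column, Cronbach’s alpha for a k-item scale is k/(k − 1) × (1 − Σ item variances / variance of the summed score). The sketch below applies that standard formula; the function name, the column-wise input layout, and the example scores are assumptions for illustration, not the authors' analysis script. The df of 6 in every row is consistent with four items per scale, since Bartlett’s test has p(p − 1)/2 degrees of freedom for p items.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    `items` is a list of k columns, one per questionnaire item, each holding
    one score per respondent (same respondent order in every column).
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if n < 2 or any(len(col) != n for col in items):
        raise ValueError("every item needs the same number (>= 2) of respondents")
    totals = [sum(scores) for scores in zip(*items)]  # summed score per respondent
    item_variance_sum = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Illustrative scores only (two items, four respondents).
print(cronbach_alpha([[1, 2, 3, 4], [2, 1, 4, 3]]))
```

Sample variances (n − 1 denominator) are used for both the items and the total; using population variances throughout gives the same ratio and therefore the same alpha.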

References

1. Bowman, D.A.; McMahan, R.P.; Ragan, E.D. Questioning naturalism in 3D user interfaces. Commun. ACM 2012, 55, 78–88.

2. Allen, J.A.; Hays, R.T.; Buffardi, L.C. Maintenance Training Simulator Fidelity and Individual Differences in Transfer of Training. Hum. Factors J. Hum. Factors Ergon. Soc. 1986, 28, 497–509.

3. Wee, C.; Yap, K.M.; Lim, W.N. Haptic Interfaces for Virtual Reality: Challenges and Research Directions. IEEE Access 2021, 9, 112145–112162.

4. Islam, M.S.; Lim, S. Vibrotactile feedback in virtual motor learning: A systematic review. Appl. Ergon. 2022, 101, 103694.

5. Cheng, L.P.; Chang, L.; Marwecki, S.; Baudisch, P. iTurk: Turning Passive Haptics into Active Haptics by Making Users Reconfigure Props in Virtual Reality. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; CHI ’18. pp. 1–10.

6. LaViola, J.J. A discussion of cybersickness in virtual environments. ACM SIGCHI Bull. 2000, 32, 47–56.

7. Sanchez-Vives, M.V.; Slater, M. From presence to consciousness through virtual reality. Nat. Rev. Neurosci. 2005, 6, 332–339.

8. Jang, H.; Kim, J.; Lee, J. Effects of Congruent Multisensory Feedback on the Perception and Performance of Virtual Reality Hand-Retargeted Interaction. IEEE Access 2024, 12, 119789–119802.

9. Dinh, H.; Walker, N.; Hodges, L.; Song, C.; Kobayashi, A. Evaluating the importance of multi-sensory input on memory and the sense of presence in virtual environments. In Proceedings of the IEEE Virtual Reality (Cat. No. 99CB36316), Houston, TX, USA, 13–17 March 1999; pp. 222–228.

10. Fröhlich, J.; Wachsmuth, I. The Visual, the Auditory and the Haptic – A User Study on Combining Modalities in Virtual Worlds. In Virtual Augmented and Mixed Reality. Designing and Developing Augmented and Virtual Environments; Shumaker, R., Ed.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 159–168.

11. Slater, M.; Wilbur, S. A Framework for Immersive Virtual Environments (FIVE): Speculations on the Role of Presence in Virtual Environments. Presence Teleoperators Virtual Environ. 1997, 6, 603–616.

12. Biocca, F.; Kim, J.; Choi, Y. Visual Touch in Virtual Environments: An Exploratory Study of Presence, Multimodal Interfaces, and Cross-Modal Sensory Illusions. Presence Teleoperators Virtual Environ. 2001, 10, 247–265.

13. Salkini, M.W.; Doarn, C.R.; Kiehl, N.; Broderick, T.J.; Donovan, J.F.; Gaitonde, K. The Role of Haptic Feedback in Laparoscopic Training Using the LapMentor II. J. Endourol. 2010, 24, 99–102.

14. Wierinck, E.; Puttemans, V.; Swinnen, S.; van Steenberghe, D. Effect of augmented visual feedback from a virtual reality simulation system on manual dexterity training. Eur. J. Dent. Educ. 2005, 9, 10–16.

15. De Barros, P.G.; Lindeman, R.W. Performance effects of multi-sensory displays in virtual teleoperation environments. In Proceedings of the 1st Symposium on Spatial User Interaction, Los Angeles, CA, USA, 20–21 July 2013; pp. 41–48.

16. Yasumoto, M.; Ohta, T. The Electric Bow Interface. In Virtual, Augmented and Mixed Reality. Systems and Applications; Shumaker, R., Ed.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 436–442.

17. Silviu, B.; Mihai, D.; Raffaello, B.; Florin, G.; Cristian, P.; Marcello, C. An Interactive Haptic System for Experiencing Traditional Archery. Acta Polytech. Hung. 2018, 15, 185–208.

18. Purnomo, F.A.; Purnawati, M.; Pratisto, E.H.; Hidayat, T.N. Archery Training Simulation based on Virtual Reality. In Proceedings of the 2022 1st International Conference on Smart Technology, Applied Informatics, and Engineering (APICS), Surakarta, Indonesia, 23–24 August 2022; pp. 195–198.

19. Yamamoto, Y.; Yamagishi, Y. VR-based Learning Support System for Kyudo (Japanese Archery). In Proceedings of the E-Learn: World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education. Association for the Advancement of Computing in Education (AACE), Singapore, 7 October 2024; pp. 467–471.

20. Gründling, J.P.; Zeiler, D.; Weyers, B. Answering with Bow and Arrow: Questionnaires and VR Blend Without Distorting the Outcome. In Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Christchurch, New Zealand, 12–16 March 2022; pp. 683–692.

21. Palacios-Alonso, D.; López-Arribas, A.; Meléndez-Morales, G.; Núñez-Vidal, E.; Gómez-Rodellar, A.; Ferrández-Vicente, J.M.; Gómez-Vilda, P. A Pilot and Feasibility Study of Virtual Reality as Gamified Monitoring Tool for Neurorehabilitation. In Artificial Intelligence in Neuroscience: Affective Analysis and Health Applications; Ferrández Vicente, J.M., Álvarez Sánchez, J.R., de la Paz López, F., Adeli, H., Eds.; Springer: Cham, Switzerland, 2022; pp. 239–248.

22. Park, K.H.; Kang, S.Y.; Kim, Y.H. Implementation of Archery Game Application Using VR HMD in Mobile Cloud Environments. Adv. Sci. Lett. 2017, 23, 9804–9807.

23. Thiele, S.; Meyer, L.; Geiger, C.; Drochtert, D.; Wöldecke, B. Virtual archery with tangible interaction. In Proceedings of the 2013 IEEE Symposium on 3D User Interfaces (3DUI), Orlando, FL, USA, 16–17 March 2013; pp. 67–70.

24. Yasumoto, M. Bow Device for Accurate Reproduction of Archery in xR Environment. In Virtual, Augmented and Mixed Reality; Chen, J.Y.C., Fragomeni, G., Eds.; Springer: Cham, Switzerland, 2023; pp. 203–214.

25. Ishida, T.; Shimizu, T. Developing a Virtual Reality Kyudo Training System Using the Cross-Modal Effect. In Advances on P2P, Parallel, Grid, Cloud and Internet Computing; Barolli, L., Ed.; Springer: Cham, Switzerland, 2023; pp. 327–335.

26. White, M.; Gain, J.; Vimont, U.; Lochner, D. The Case for Haptic Props: Shape, Weight and Vibro-tactile Feedback. In Proceedings of the 12th ACM SIGGRAPH Conference on Motion, Interaction and Games, Newcastle upon Tyne, UK, 28–30 October 2019; MIG ’19. pp. 1–10.

27. Strandholt, P.L.; Dogaru, O.A.; Nilsson, N.C.; Nordahl, R.; Serafin, S. Knock on Wood: Combining Redirected Touching and Physical Props for Tool-Based Interaction in Virtual Reality. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; CHI ’20. pp. 1–13.

28. Auda, J.; Gruenefeld, U.; Schneegass, S. Enabling Reusable Haptic Props for Virtual Reality by Hand Displacement. In Proceedings of the Mensch und Computer 2021, Ingolstadt, Germany, 5–8 September 2021; MuC ’21. pp. 412–417.

29. Mori, K.; Ando, M.; Otsu, K.; Izumi, T. Effect of Repulsive Positions on Haptic Feedback on Using a String-Based Device Virtual Objects Without a Real Tool. In Virtual, Augmented and Mixed Reality; Chen, J.Y.C., Fragomeni, G., Eds.; Springer: Cham, Switzerland, 2023; Volume 14027, pp. 266–277.

30. Todorov, E.; Shadmehr, R.; Bizzi, E. Augmented Feedback Presented in a Virtual Environment Accelerates Learning of a Difficult Motor Task. J. Mot. Behav. 1997, 29, 147–158.

31. Williams, B.; Garton, A.E.; Headleand, C.J. Exploring Visuo-haptic Feedback Congruency in Virtual Reality. In Proceedings of the 2020 International Conference on Cyberworlds (CW), Caen, France, 29 September–1 October 2020; pp. 102–109.

32. Gibbs, J.K.; Gillies, M.; Pan, X. A comparison of the effects of haptic and visual feedback on presence in virtual reality. Int. J. Hum.-Comput. Stud. 2022, 157, 102717.

33. Gao, Z.; Wang, H.; Feng, G.; Guo, F.; Lv, H.; Li, B. RealPot: An immersive virtual pottery system with handheld haptic devices. Multimed. Tools Appl. 2019, 78, 26569–26596.

34. Van Damme, S.; Legrand, N.; Heyse, J.; De Backere, F.; De Turck, F.; Vega, M.T. Effects of Haptic Feedback on User Perception and Performance in Interactive Projected Augmented Reality. In Proceedings of the 1st Workshop on Interactive eXtended Reality, Lisboa, Portugal, 14 October 2022; IXR ’22. pp. 11–18.

35. Tanacar, N.T.; Mughrabi, M.H.; Batmaz, A.U.; Leonardis, D.; Sarac, M. The Impact of Haptic Feedback During Sudden, Rapid Virtual Interactions. In Proceedings of the 2023 IEEE World Haptics Conference (WHC), Delft, The Netherlands, 10–13 July 2023; pp. 64–70.

36. Radhakrishnan, U.; Kuang, L.; Koumaditis, K.; Chinello, F.; Pacchierotti, C. Haptic Feedback, Performance and Arousal: A Comparison Study in an Immersive VR Motor Skill Training Task. IEEE Trans. Haptics 2024, 17, 249–262.

37. Ai, M.; Li, K.; Liu, S.; Lin, D.K. Balanced incomplete Latin square designs. J. Stat. Plan. Inference 2013, 143, 1575–1582.

38. IJsselsteijn, W.; de Kort, Y.; Poels, K. The Game Experience Questionnaire; Technische Universiteit Eindhoven: Eindhoven, The Netherlands, 2013.

39. Berkman, M.; Catak, G. I-group Presence Questionnaire: Psychometrically Revised English Version. Mugla J. Sci. Technol. 2021, 7, 1–10.

40. Chen, C.; Weyland, S.; Fritsch, J.; Woll, A.; Niessner, C.; Burchartz, A.; Schmidt, S.C.E.; Jekauc, D. A Short Version of the Physical Activity Enjoyment Scale: Development and Psychometric Properties. Int. J. Environ. Res. Public Health 2021, 18, 11035.

41. Jackson, S.A.; Martin, A.J.; Eklund, R.C. Long and Short Measures of Flow: The Construct Validity of the FSS-2, DFS-2, and New Brief Counterparts. J. Sport Exerc. Psychol. 2008, 5, 561–587.

42. Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. In Human Mental Workload; Advances in Psychology; Hancock, P.A., Meshkati, N., Eds.; Elsevier: Amsterdam, The Netherlands, 1988; Volume 52, pp. 139–183.

43. Brooke, J. SUS - A quick and dirty usability scale. In Usability Evaluation in Industry; Taylor and Francis: London, UK, 1996; pp. 189–194.

44. Bangor, A.; Kortum, P.T.; Miller, J.T. An Empirical Evaluation of the System Usability Scale. Int. J. Hum.–Comput. Interact. 2008, 34, 574–594.

45. Hertzum, M. Reference values and subscale patterns for the task load index (TLX): A meta-analytic review. Ergonomics 2021, 64, 869–878.

46. Grier, R.A. How High is High? A Meta-Analysis of NASA-TLX Global Workload Scores. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2016, 59, 1727–1731.

| Category | Variable | Measurement Scale |
|---|---|---|
| Objective | Hit Deviation | Normalized distance |
| Objective | Hand Tremor | Centimeters |
| Objective | Task Completion Time | Seconds |
| Subjective | Presence | Likert-7 |
| Subjective | Physical Activity Enjoyment (PAE) | Likert-7 |
| Subjective | Flow Experience | Likert-7 |
| Subjective | Competence | Likert-7 |
| Subjective | Task Load | Likert-7 |
| Subjective | System Usability | Likert-7 |
| Subjective | Future Use Intention | Likert-7 |

| Parameter | Estimate | Significance |
|---|---|---|
| Intercept | 2.132 | *** ¹ |
| [VF = 0] ² | 0.055 | |
| [PP = 0] | −1.216 | *** |
| Time | −0.112 | ** |
| [PP = 0] × Time | 0.142 | * |
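The fixed-effect estimates in the table above can be read as a prediction equation. The worked example below assumes that this table belongs to the linear mixed-effects analysis of hand tremor (Section 3.1.4), that the bracketed terms are indicator variables equal to 1 when the condition holds, so that the reference levels are PP = 1 and VF = 1, and that random effects are omitted. The coding and units of Time are not stated in the table, so the slopes are per unit of Time as coded by the authors.

```latex
\begin{aligned}
\widehat{\text{Tremor}} &= 2.132 + 0.055\,[\mathrm{VF}=0] - 1.216\,[\mathrm{PP}=0]
  - 0.112\,\text{Time} + 0.142\,[\mathrm{PP}=0]\times\text{Time} \\[4pt]
\text{PP}=1,\ \text{VF}=1:\quad \widehat{\text{Tremor}} &= 2.132 - 0.112\,\text{Time} \\
\text{PP}=0,\ \text{VF}=1:\quad \widehat{\text{Tremor}} &= (2.132 - 1.216) + (-0.112 + 0.142)\,\text{Time}
  = 0.916 + 0.030\,\text{Time}
\end{aligned}
```

Under these assumptions, the physical prop condition starts about 1.2 cm higher in tremor, and its tremor decreases with Time, whereas the slope without the prop is close to zero.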

| Parameter | Estimate | Significance |
|---|---|---|
| Intercept | 0.457 | |
| [VF = 0] | −0.115 | |
| [PP = 0] | −0.337 | |
| Time | 0.163 | |
| Hand Tremor | 0.552 | |
| [VF = 0] × Hand Tremor | 1.517 | p = 0.009 ** ¹ |
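The moderation by visual feedback can be made concrete by deriving the slope of hit deviation on hand tremor in each feedback condition. The derivation below assumes that this table reports the linear mixed-effects model of hit deviation (Section 3.1.5), that [VF = 0] and [PP = 0] are indicator variables with VF = 1 and PP = 1 as reference levels, and that random effects are left out.

```latex
\begin{aligned}
\widehat{\text{HitDev}} &= 0.457 - 0.115\,[\mathrm{VF}=0] - 0.337\,[\mathrm{PP}=0]
  + 0.163\,\text{Time} + 0.552\,\text{Tremor} + 1.517\,[\mathrm{VF}=0]\times\text{Tremor} \\[4pt]
\left.\frac{\partial\,\widehat{\text{HitDev}}}{\partial\,\text{Tremor}}\right|_{\mathrm{VF}=1} &= 0.552 \\
\left.\frac{\partial\,\widehat{\text{HitDev}}}{\partial\,\text{Tremor}}\right|_{\mathrm{VF}=0} &= 0.552 + 1.517 = 2.069
\end{aligned}
```

Read this way, each additional centimeter of tremor is associated with roughly 2.07 normalized units of extra hit deviation without visual feedback, against about 0.55 with it. Only the interaction term is marked significant in the table, so the 0.552 slope on its own should be interpreted with caution.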

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, Z.; Xu, H.; Tu, M.; Tian, F. The Impact of Physical Props and Physics-Associated Visual Feedback on VR Archery Performance. Sensors 2025, 25, 6991. https://doi.org/10.3390/s25226991