Abstract

In an integrated space–air–ground emergency communication network, users must rapidly identify the optimal network node while network states are uncertain and fluctuate stochastically. This study models the problem as a Multi-Armed Bandit (MAB) and proposes an optimization algorithm based on dynamic variance sampling (DVS). The algorithm assumes that the prior distribution of each node’s network state is normal and constructs the distribution’s expected value and variance from the sample data, thereby balancing exploitation of the data against exploration of the unknown. A theoretical analysis shows that the Bayesian regret of the algorithm grows sublinearly. Simulations show that the algorithm outperforms the traditional ε-greedy, Upper Confidence Bound (UCB), and Thompson sampling algorithms, achieving higher cumulative rewards, lower total regret, faster convergence, and higher system throughput.

1. Introduction

The Space-Air-Ground Integrated Network (SAGIN) integrates satellite systems, aerial networks, and terrestrial communication infrastructures [1], thereby enabling global, uninterrupted coverage. It holds considerable potential for application in scenarios such as disaster response, intelligent transportation systems, and the evolution towards 6G communication networks [2]. The integration of cutting-edge technologies, including artificial intelligence, machine learning, and Software-Defined Networking (SDN), further augments the performance and adaptability of the SAGIN [3]. The pivotal technological hurdles encompass dynamic node management, interconnection of heterogeneous networks, resource allocation optimization, and the intelligent management of networks [4].

In emergency communication, users are constantly in motion, and terrestrial base stations alone may not meet their communication demands, so support from space and aerial networks is required [5]. To ensure reliable data transmission, users must connect swiftly to the network node that offers the highest rate within signal range [6]. However, because the locations and states of network nodes change dynamically, users must make this choice with the guidance of an algorithm and without prior knowledge of network conditions. How users in emergency communication scenarios can quickly access the most suitable network node has therefore become a pressing issue.

2. Related Work

Online learning methods are effective for learning and prediction in dynamic settings [7]. The Multi-Armed Bandit (MAB) problem, often called the slot machine problem [8], is a classic online learning problem. The MAB framework is widely applied because it supports access optimization even when little environmental information is available. In particular, ref. [9] studies selection among multiple channels for a single user when channel states vary in an independent and identically distributed manner, and proposes a decision-making framework based on the Restless Multi-Armed Bandit (RMAB) model. Ref. [10] builds on the RMAB model and introduces a greedy channel selection algorithm to improve users' spectrum access efficiency. Ref. [11] pioneers the use of index algorithms for the classic MAB problem, while ref. [12] refines the confidence parameter of the Upper Confidence Bound (UCB) algorithm to improve its performance.

Existing studies on network selection typically have two main deficiencies. First, they overlook the influence of immediate gains on future gains. Second, the cumulative regret of current algorithms grows linearly, which lowers learning efficiency and slows convergence. Such outcomes conflict with the practical goal of achieving high efficiency with simple methods.

To address these concerns, this paper introduces an enhanced index algorithm for network selection. The algorithm accounts for the interplay between immediate and future gains and combines the strengths of the UCB and Thompson sampling algorithms to balance exploration and exploitation. Simulation results indicate that, compared with existing methods, the proposed dynamic variance sampling algorithm improves learning efficiency, reduces cumulative regret, and increases system throughput.

3. System Model

3.1. Network Architecture

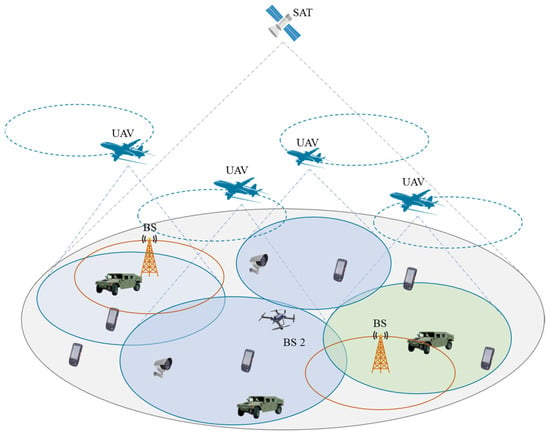

The network architecture is illustrated in Figure 1. The study area is modeled as a circular zone served by satellites, unmanned aerial vehicles (UAVs), and base stations. Satellite signals cover the entire area, UAVs fly along predefined circular trajectories, and each base station has limited coverage. Users carry multi-mode antennas that can access multiple networks, but a user can connect to only one network node at any given instant. User communication is time-slotted: the communication period is divided into T discrete time slots separated by short intervals. This paper focuses on how an individual user decides, in each time slot, which node of the space–air–ground integrated emergency communication network to access.

Figure 1. Network architecture.

3.2. Channel Model

The system includes three types of links: space-to-ground, aerial-to-ground, and base station-to-ground links. According to the literature [13], the channel state information (CSI) from the satellite to the user is as follows:

where denotes the free space loss, which can be calculated by the formula , where denotes the signal wavelength, denotes the horizontal distance between the center of the satellite beam and the user, denotes the vertical height of the satellite relative to the ground, denotes the channel gain caused by the rain attenuation, which obeys the lognormal distribution, is the form of , is a phase vector uniformly distributed within the range , and indicates the satellite beam gain, which is defined as follows:

where represents the maximum gain of the satellite antenna , is the elevation angle between the center of the beam and the user, is the angle of the satellite beam, and and are the first-order and third-order Bessel functions of the first kind, respectively.

According to the literature [13], the channel state information (CSI) from the aerial platform to the user is as follows:

where denotes the path loss, where denotes the channel power gain for a reference distance of 1 m, is the horizontal distance from the UAV to the target user, and is the height of the UAV. The small-scale fading follows the Rice (Rician) channel model, where is the Rician coefficient. is the line-of-sight Rician fading component and is the non-line-of-sight Rayleigh fading component.
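One common way to write the aerial-to-ground model described above is sketched below. The symbols $h_u$, $L_u$, $\beta_0$, $d_u$, $H_u$, $K$, $g_{\mathrm{LoS}}$, and $g_{\mathrm{NLoS}}$ are assumed names chosen for illustration, and a free-space path-loss exponent of 2 is assumed; the exact form used in [13] may differ.

```latex
h_u = \sqrt{L_u}\, g_u, \qquad
L_u = \frac{\beta_0}{d_u^{2} + H_u^{2}}, \qquad
g_u = \sqrt{\frac{K}{K+1}}\; g_{\mathrm{LoS}} + \sqrt{\frac{1}{K+1}}\; g_{\mathrm{NLoS}}
```

Under this form, a larger Rician coefficient $K$ shifts weight toward the line-of-sight component, and the path loss depends only on the three-dimensional distance between the UAV and the user.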

According to the literature [13], the channel state information (CSI) from the base station to the user is as follows:

where represents the large-scale fading, where denotes the channel power gain at a reference distance of 1 m, denotes the distance between the base station and the user, and g0 represents the small-scale fading, which follows a distribution.

3.3. Communication Model

Based on the CSI expressions above, the communication rates that a user obtains when connecting to a satellite, a UAV, and a base station, respectively (without considering inter-channel interference), can be expressed as

In Equation (5), denotes the transmit power of the satellite, denotes the CSI from the satellite to the user, and denotes the noise power of the satellite signal received by the user. The symbols in Equations (6) and (7) are defined analogously.
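As a minimal sketch of how such a rate could be computed, the function below assumes a Shannon-type expression in terms of transmit power, CSI, and noise power. The function name, the parameter names, and the `bandwidth_hz` factor are hypothetical and are not taken from the paper.

```python
import math


def achievable_rate(tx_power_w, channel_gain, noise_power_w, bandwidth_hz=1.0):
    """Shannon-type rate B * log2(1 + P * |h|^2 / sigma^2), ignoring interference.

    Assumed form: the channel gain may be real or complex, and the bandwidth
    factor defaults to 1 so the result is in bit/s/Hz.
    """
    if noise_power_w <= 0:
        raise ValueError("noise power must be positive")
    snr = tx_power_w * abs(channel_gain) ** 2 / noise_power_w
    return bandwidth_hz * math.log2(1.0 + snr)
```

The same function can be reused for the satellite, UAV, and base station links by passing the corresponding power, CSI, and noise values.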

3.4. Benefit Model

The benefit of the proposed algorithm in this paper after the user selects a network node to access is represented as in Equation (8):

where denotes the communication rate (i.e., ,, or ) that the user obtains from the network nodes, and denotes the upper bound of the communication rate that the user is able to obtain from all the network nodes, e.g., when the maximal rate is 100, , which ensures that the user’s gain interval is .

Meanwhile, to compare the proposed algorithm experimentally with the traditional ε-greedy, UCB, and Thompson sampling algorithms, the gain can be transformed into a Bernoulli random variable following [14]: the current gain obeys the Bernoulli distribution with parameter (i.e., ) with . Reference [14] shows that the two forms of gain are algorithmically equivalent.
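The two gain forms can be sketched as follows, assuming the gain is the rate divided by the rate upper bound and the Bernoulli variable takes the value 1 with probability equal to that normalized gain. The function names are hypothetical.

```python
import random


def normalized_gain(rate, max_rate):
    """Scale a communication rate into [0, 1] using the upper bound of the rate."""
    if max_rate <= 0:
        raise ValueError("max_rate must be positive")
    return min(max(rate / max_rate, 0.0), 1.0)


def bernoulli_gain(gain, rng=random):
    """Return 1 with probability `gain` and 0 otherwise (one Bernoulli trial)."""
    return 1 if rng.random() < gain else 0
```

For example, with a maximal rate of 100, a rate of 50 maps to a gain of 0.5, and the Bernoulli form then returns 1 in roughly half of the trials.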

3.5. Objective Function

The goal of the MAB-based algorithm is to determine the user’s strategy for selecting a network node in each time slot so as to maximize the expected total gain over T time slots, i.e.,

where denotes the network node selected by the user according to the algorithm at moment , and denotes the gain after selecting network node , corresponding to and defined in the gain model. In order to compare the effects of different algorithms more intuitively, this paper uses the minimization of the expected total regret as the objective function corresponding to the maximization of the expected total gain, where the so-called regret is the expected rate lost at each moment due to the failure to select the best network node. Define as the expected gain of network node , so that denotes the expected gain of the optimal network node, denotes the suboptimal network gap, and also define as the number of times that the network node has been selected prior to the moment of . Then the total regret of the user in T time slots is denoted as
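The regret definition can be illustrated with a short sketch. It computes the regret of one realized selection sequence in two equivalent ways: slot by slot, and as the sum over nodes of the suboptimality gap times the number of selections. The function names are hypothetical, and the expected gains are assumed known here purely for evaluation.

```python
def total_regret(expected_gains, selections):
    """Sum of (best expected gain - expected gain of the chosen node) over all slots."""
    best = max(expected_gains)
    return sum(best - expected_gains[i] for i in selections)


def regret_from_counts(expected_gains, counts):
    """Equivalent form: sum over nodes of (suboptimality gap * times selected)."""
    best = max(expected_gains)
    return sum((best - mu) * k for mu, k in zip(expected_gains, counts))
```

The optimal node has a gap of zero and therefore contributes nothing to either sum, which is why the later analysis only needs to bound the number of times each suboptimal node is selected.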

4. Network Selection Mechanism Based on the MAB Model

4.1. Dynamic Variance Sampling Algorithm

The problem of user network node selection in an unknown environment is modeled as a Multi-Armed Bandit (MAB) problem. As a dynamic stochastic control framework, the MAB model has strong learning capabilities and is mainly used for selection and resource allocation under limited resources, including channel allocation, opportunistic network access, and routing selection. With the MAB model, users can make optimal decisions in uncertain environments and thereby improve overall system performance. Reference [15] proposed an index algorithm based on the Upper Confidence Bound (UCB); although it reduces complexity compared with traditional algorithms, its overall gain still falls short of the ideal and its convergence is slow. Reference [16] studied the theoretical performance of the Thompson sampling algorithm; although its regret bound is lower than that of the UCB index algorithm, a gap to the ideal remains.

The index value of the UCB algorithm consists of two parts: the sample average reward of the current network and the confidence factor, which is represented as follows:

where denotes the number of times a network node has been selected at moment , denotes the mean benefit of network node before moment , which reflects how well the algorithm utilizes the data, and the confidence factor is a quantity inversely related to the number of times it has been selected, which reflects how well the data has been explored.
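A minimal sketch of such an index is given below, assuming the widely used UCB1 form in which the confidence factor is the square root of 2 ln t divided by the selection count. The constant 2 and the function name are assumptions; the confidence factor used in this paper may be scaled differently.

```python
import math


def ucb_index(mean_gain, times_selected, t):
    """UCB1-style index: sample mean plus a confidence term that shrinks with use."""
    if times_selected == 0:
        return math.inf  # force every node to be tried at least once
    return mean_gain + math.sqrt(2.0 * math.log(t) / times_selected)
```

A node that has been selected rarely keeps a large confidence term and is therefore revisited, while a frequently selected node is ranked almost entirely by its sample mean.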

The Thompson sampling algorithm is a stochastic algorithm based on Bayesian ideas, which selects arms at each time step based on their posterior probability of being the best arm by assuming a Beta prior distribution for the reward distribution parameter of each arm. The index values are calculated as follows:

where denotes the distribution sampling, and denote the number of times that the network node has been selected with a gain of 1 and the number of times that the network node has been selected with a gain of 0 before the moment , respectively.
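The sampling step can be sketched as follows, assuming a uniform Beta(1, 1) prior so that the posterior after S gains of 1 and F gains of 0 is Beta(S + 1, F + 1). The function names are hypothetical.

```python
import random


def thompson_index(successes, failures, rng=random):
    """Draw one sample from the Beta(successes + 1, failures + 1) posterior."""
    return rng.betavariate(successes + 1, failures + 1)


def select_node(successes, failures, rng=random):
    """Pick the node whose posterior sample is largest."""
    samples = [thompson_index(s, f, rng) for s, f in zip(successes, failures)]
    return max(range(len(samples)), key=samples.__getitem__)
```

Because each index is a random draw, a node with few observations has a wide posterior and is still selected occasionally, which provides exploration without an explicit confidence term.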

The advantage of the UCB algorithm is its confidence factor, which depends on the number of times a node has been selected and strengthens exploration; its disadvantages are low exploration efficiency and slow convergence. The advantages of the Thompson sampling algorithm are its Bayesian sampling idea, a prior assumption that better matches practical scenarios, and faster convergence; however, a large gap to the ideal value remains. Moreover, the CSI model indicates that changes in the state of network nodes are close to normally distributed, so the normal distribution is taken as the prior distribution of network state changes.

Based on the characteristics of these two algorithms, this paper combines their advantages by introducing both Bayesian sampling and the confidence factor into one algorithm. The return of each network node is assumed to obey a normal distribution: the sample mean is used as the expectation (reflecting the utilization of the data), and the number of times a node has been selected is introduced into the sample variance (reflecting the exploration of the data). The index value is calculated as follows:

where denotes the sampling of a normal distribution, denotes the mean benefit of network node before time , and denotes the number of times network node is selected at time . The update rule is as follows:
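One form consistent with this description, in the spirit of Thompson sampling with Gaussian priors, is sketched below. The symbols $\theta_i$, $\hat{\mu}_i$, $k_i$, and $r_t$, the variance $1/(k_i(t)+1)$, and the running-mean update are assumptions made for illustration and may differ in detail from the rule used in this paper.

```latex
\begin{aligned}
\theta_i(t) &\sim \mathcal{N}\!\left(\hat{\mu}_i(t),\ \frac{1}{k_i(t)+1}\right),
\qquad i(t) = \arg\max_{i}\ \theta_i(t), \\
k_{i(t)}(t+1) &= k_{i(t)}(t) + 1,
\qquad
\hat{\mu}_{i(t)}(t+1) = \frac{\hat{\mu}_{i(t)}(t)\, k_{i(t)}(t) + r_t}{k_{i(t)}(t) + 1}
\end{aligned}
```

Under this assumed form, the variance of a node that has never been selected is 1 and shrinks toward 0 as the node is selected more often, so exploration fades automatically for well-sampled nodes.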

4.2. Theoretical Analysis and Proof

Definition 1.

denotes the number of times network node has been selected prior to moment t. and denote the number of times network node has been selected prior to moment with a gain of 1 and a gain of 0, respectively. denotes the value of the index of the network node that has been selected at moment .

Definition 2.

Assume that network node 1 is the optimal network and that denotes the expected return of network node , so that we have for . Define , as two real numbers that satisfy ; such and clearly exist. Define , where denotes the Kullback–Leibler (KL) divergence between Bernoulli distributions with parameters and .
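For reference, the KL divergence between two Bernoulli distributions can be computed as in the sketch below (natural logarithm, so the result is in nats). The function name is hypothetical.

```python
import math


def bernoulli_kl(p, q):
    """KL divergence between Bernoulli(p) and Bernoulli(q), in nats."""
    if not (0.0 <= p <= 1.0 and 0.0 < q < 1.0):
        raise ValueError("need 0 <= p <= 1 and 0 < q < 1")
    total = 0.0
    if p > 0.0:
        total += p * math.log(p / q)
    if p < 1.0:
        total += (1.0 - p) * math.log((1.0 - p) / (1.0 - q))
    return total
```

Pinsker's inequality, which the proof invokes later, lower-bounds this quantity by 2(p - q)^2; for example, `bernoulli_kl(0.2, 0.8)` is about 0.83, which exceeds 2 × 0.6² = 0.72.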

Definition 3.

denotes the sample values sampled by the algorithm from the posterior distribution of network node at moment and .

Definition 4.

denotes the mean value of the returns of network node at moment , defined as , for , defining as the event , and as the event .

It can be seen that both and are approximations of the true expected return of network node . The former is an empirical estimate, the latter is a sample drawn from the posterior distribution, and and are upper bounds on the true expected return of network node . Intuitively, the events and mean that these two estimates are not overestimated too much; specifically, they do not exceed the thresholds and .

Definition 5.

denotes the sequence of historical policy information prior to moment , defined as , where denotes the index value of the network node that is selected at moment , and is its corresponding gain. Defining and satisfying , it can be seen that the distributions of , , and in the above definitions, and whether or not the events and occur, are all determined by .

Definition 6.

Defining , it can be seen that is a random variable determined by .

Lemma 1.

According to Lemma 1 of reference [17], when , we have the following for any and :

Lemma 2.

Let denote the moment when network node 1 is selected for the th time, which can be obtained according to Lemma 2.13 of reference [18]:

where .

Fact 1 (Chernoff–Hoeffding Bound).

Let be random variables on the interval and , so that , and there is the following for any :
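In a standard form of this bound, with $X_1,\dots,X_n$, $S_n$, $\mu$, and $a$ as assumed symbol names, the statement reads:

```latex
X_1,\dots,X_n \in [0,1], \quad
\mathbb{E}\left[X_t \mid X_1,\dots,X_{t-1}\right] = \mu, \quad
S_n = \sum_{t=1}^{n} X_t
\;\Longrightarrow\;
\Pr\left(S_n \ge n\mu + a\right) \le e^{-2a^{2}/n}, \quad
\Pr\left(S_n \le n\mu - a\right) \le e^{-2a^{2}/n}
\quad \text{for all } a \ge 0 .
```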

Fact 2.

Let denote a random variable obeying a normal distribution with mean and variance for with

Theorem 1.

The upper bound on the regret of the dynamic variance sampling algorithm is given by

where is the total time duration and is the number of network nodes.

Proof.

According to the definition of regret in Equation (10), we can see that

Firstly, the regret is decomposed according to the events defined in Definition 4, but here instead of decomposing the regret directly, the expectation of the number of times a suboptimal network node is selected is decomposed. Because according to the definition of regret, the expectation of the number of times a network node is selected is multiplied by the suboptimal network gap and summed up to get the final regret, and the optimal network node does not contribute to the regret, it is only necessary to decompose the number of times a suboptimal network node is selected. For , there is

where denotes the indicator function, and the decomposition of the above equation using the event and its complement is obtained:

Continuing to decompose the above equation by the event yields

Next, derive the upper bounds for each of the above three terms, starting with the upper bound for Equation (1). Combined with the relationship stated in Definition 5, this is obtained from the properties of conditional probability and Lemma 1:

The second equality sign above utilizes the property that is invariant given . Combined with , it can be seen that the value of only changes with the distribution of , i.e., only after network node 1 is selected. Defining to denote the moment when network node 1 is selected for the jth time, the values of are equal at all moments. Therefore,

Let , and by Lemma 2, when , there are

Substituting Equation (25) into Equation (24) gives the upper bound of Equation (1):

Next, the upper bound of Equation (2) is derived, and Equation (2) is decomposed into two parts according to the magnitude relationship between and :

First, analyzing the first term of Equation (27), we can get

Next, analyzing the latter term of Equation (27), if the value of is large and the event occurs, then the probability of the event occurring will be very small, and in conjunction with the definition of the event in Definition 4, there is

From the definition, we can see that , and according to the normal distribution property, given , there are

Therefore, Equation (29) can be further scaled as

From the probability density function of normal distribution and its distribution characteristics, when , there are

Taking and , and substituting in Equation (32):

Therefore,

Substituting Equations (28) and (34) into Equation (27) gives the upper bound of Equation (2) as

Finally, to derive the upper bound of Equation (3), define to denote the moment of the th selection of network node . Let . According to the definition of , we can see that denotes the event , and then we have

When , there is

According to the Chernoff–Hoeffding Bound (Fact 1):

Substitute Equation (38) into Equation (36):

Taking , we have by Pinsker’s inequality:

Substituting Equations (26), (35), and (41) into Equation (22), respectively, yields

Therefore, the expectation of the regret upper bound for network node is

The value of the above equation decreases with when , and the upper bound of the expected regret is when for all network nodes is

When , the upper bound on total regret is

□

The proof is complete. The theorem shows that under the proposed algorithm the system regret grows sublinearly, so the average regret per time slot tends to zero; this improves on algorithms whose regret grows linearly and can increase system throughput. The notation and terminology involved in the proof are recapitulated in Table 1.

Table 1. Review of the main symbols.

4.3. Algorithm Description and Procedure

Based on the above analysis, the MAB-based algorithm always accesses the network node with the largest index value in the current time slot, so that the algorithm proceeds in the direction of minimizing total regret. With the proposed algorithm, users can find the node with the best network state in a short time even when the network state is unknown. The steps of the algorithm are as follows (Algorithm 1):

| Algorithm 1. Dynamic variance sampling algorithm flow. |
| --- |
| for do |
| for each node , sample independently from the distribution |
| select node: |
| observe reward: |
| update selected times: |
| update mean benefit: |
| end for |
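The loop in Algorithm 1 can be sketched as follows. This is a minimal illustration under stated assumptions rather than the authors' implementation: the sampling variance is taken as 1/(k + 1) for a node selected k times, the mean benefit is a plain running average, and the function name and the `pull` callback (which returns a gain in [0, 1] for the chosen node) are hypothetical.

```python
import math
import random


def dynamic_variance_sampling(pull, n_nodes, horizon, rng=random):
    """Run a Gaussian-sampling bandit loop; `pull(i)` returns a gain in [0, 1]."""
    counts = [0] * n_nodes    # times each node has been selected
    means = [0.0] * n_nodes   # running mean gain of each node
    history = []
    for _ in range(horizon):
        # Sample one index per node; the spread shrinks as a node is used more.
        samples = [rng.gauss(means[i], math.sqrt(1.0 / (counts[i] + 1)))
                   for i in range(n_nodes)]
        chosen = max(range(n_nodes), key=samples.__getitem__)
        gain = pull(chosen)
        # Update the selection count, then the running mean of the chosen node.
        counts[chosen] += 1
        means[chosen] += (gain - means[chosen]) / counts[chosen]
        history.append((chosen, gain))
    return counts, means, history
```

Calling the function with a callback that draws Bernoulli gains from fixed, hypothetical expected gains gives a quick sanity check: after enough time slots, most selections should go to the node with the largest expected gain.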

5. Performance and Evaluation

This paper considers how a user chooses the optimal network node to access in an unknown network environment. A dynamic variance sampling algorithm is adopted to explore and learn the unknown environment and to choose the node based on predictions from historical experience. This section builds a simulation scenario, analyzes the algorithm's performance, and compares it with the traditional ε-greedy, UCB, and Thompson sampling algorithms.

5.1. Simulation Settings

According to the relevant formulas in the channel model, communication model, and benefit model, the meanings of the relevant symbols and the simulation parameter settings are shown in Table 2.

Table 2. Meanings of symbols and simulation parameter settings.

The parameter settings related to the simulation scenario are shown in Table 3.

Table 3. Scene parameter settings.

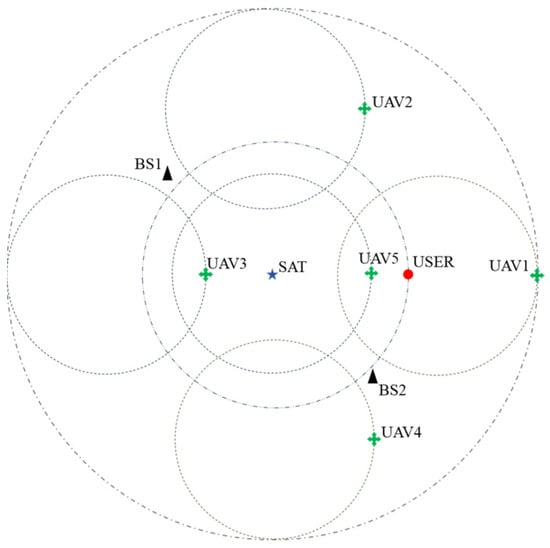

The scene schematic is shown in Figure 2. The reference coordinate system is a polar coordinate system whose origin is the center of the scene. The satellite is located at the origin (0, 0). The flight centers of the UAVs are set to , and their flight altitudes are set to , respectively; each UAV starts on the positive x-axis of a coordinate system centered at its own flight center. The positions of the base stations are set to , respectively. The user’s motion center is (0, 0), and the user’s initial position is (200, 0). The simulation runs for 1000 time slots, and to average out the randomness of the network environment, each group of experiments is repeated 100 times.
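Averaging over repeated experiments can be sketched as below. The helper name and the `run_once(seed)` callback, which is assumed to return one per-slot curve (for example, cumulative regret) for a single repetition, are hypothetical.

```python
def average_curves(run_once, n_runs):
    """Average equal-length per-slot curves over independent repetitions."""
    totals = None
    for seed in range(n_runs):
        curve = run_once(seed)
        if totals is None:
            totals = [0.0] * len(curve)
        if len(curve) != len(totals):
            raise ValueError("all runs must produce curves of equal length")
        for t, value in enumerate(curve):
            totals[t] += value
    if totals is None:
        raise ValueError("n_runs must be at least 1")
    return [total / n_runs for total in totals]
```

With the settings above, `n_runs` would be 100 and each curve would contain 1000 entries, one per time slot.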

Figure 2. Schematic diagram of the simulation scene.

5.2. Results and Analysis

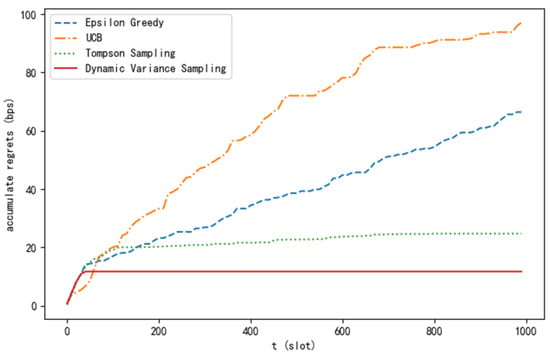

The experiment compares the proposed dynamic variance sampling algorithm with current mainstream algorithms (ε-greedy [18], UCB, and Thompson sampling) from several perspectives to verify its advantages. As Figure 3 shows, the cumulative regret of the proposed algorithm grows approximately logarithmically with the number of time slots and flattens out, which is consistent with Theorem 1.

Figure 3. Cumulative regret curve of different algorithms.

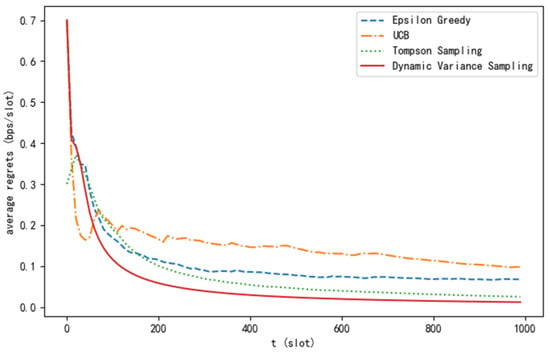

Figure 4 shows that, as the number of time slots increases, all four algorithms gradually reduce the average regret towards a stable value. However, the proposed dynamic variance sampling algorithm converges faster than the other three algorithms, and its average regret comes closer to 0.

Figure 4. Average regret curve of different algorithms.

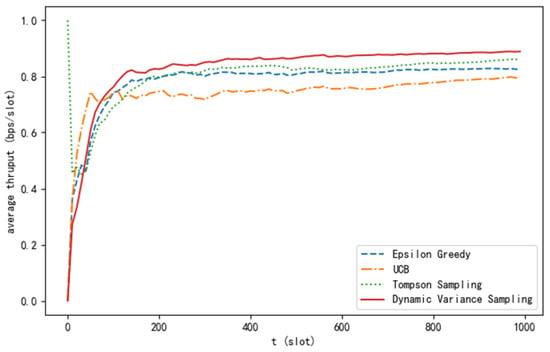

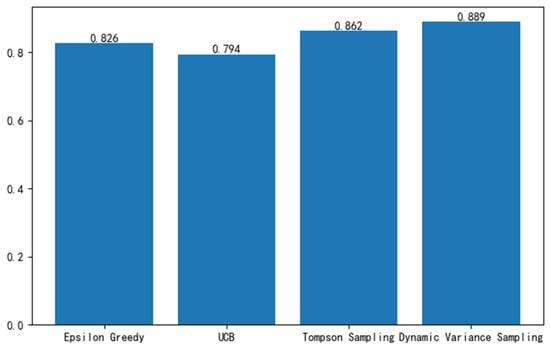

Figure 5 presents the comparison results of the average throughput for the four algorithms. It can be seen that the average throughput of the algorithm proposed in this paper is significantly higher than that of the other algorithms. When the number of time slots is sufficiently large, the average throughput approaches the ideal value.

Figure 5. Average throughput curve of different algorithms.

Figure 6 shows the mean throughput of the four algorithms after 1000 iterations. In the simulated environment described above, the mean throughput of the proposed algorithm is 7.63%, 11.96%, and 3.13% higher than that of the ε-greedy, Upper Confidence Bound (UCB), and Thompson sampling algorithms, respectively.

Figure 6. Average throughput after 1000 iterations.

6. Conclusions

Based on the MAB model, this study examines how users dynamically select and access network nodes in an integrated space–air–ground emergency communication network when node states are uncertain. Comparative simulations were conducted across several online learning algorithms. The ε-greedy algorithm, rooted in a greedy heuristic, prioritizes immediate gains; the Upper Confidence Bound (UCB) algorithm focuses on static assessments and does not consider the interplay between sequential strategies, which reduces its adaptability; the Thompson sampling algorithm, by contrast, yields more consistent outcomes. The simulation results show that the proposed algorithm balances exploration and exploitation of network node states. When network node state information is unknown, the proposed algorithm markedly reduces learning regret, speeds up convergence, and increases system throughput. However, this work concentrates on the access mechanism for an individual user; access mechanisms for multiple users in an integrated space–air–ground emergency communication network remain a subject for further investigation.

Author Contributions

Conceptualization, methodology, Z.X.; software, validation, formal analysis, writing—original draft preparation, Q.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Meng, Y.; Qi, P.; Lei, Q.; Zhang, Z.; Ren, J.; Zhou, X. Electromagnetic Spectrum Allocation Method for Multi-Service Irregular Frequency-Using Devices in the Space-Air-Ground Integrated Network. Sensors 2022, 22, 9227.

- Liu, J.; Shi, Y.; Fadlullah, Z.M.; Kato, N. Space-Air-Ground Integrated Network: A Survey. IEEE Commun. Surv. Tutor. 2018, 20, 2714–2741.

- Geng, Y.; Cao, X.; Cui, H.; Xiao, Z. Network Element Placement for Space-Air-Ground Integrated Network: A Tutorial. Chin. J. Electron. 2022, 31, 1013–1024.

- Yin, Z.; Cheng, N.; Luan, T.H.; Wang, P. Physical Layer Security in Cybertwin-Enabled Integrated Satellite-Terrestrial Vehicle Networks. IEEE Trans. Veh. Technol. 2022, 71, 4561–4572.

- Anjum, M.J.; Anees, T.; Tariq, F.; Shaheen, M.; Amjad, S.; Iftikhar, F.; Ahmad, F. Space-Air-Ground Integrated Network for Disaster Management: Systematic Literature Review. Appl. Comput. Intell. Soft Comput. 2023, 2023, 6037882.

- Cui, H.; Zhang, J.; Geng, Y.; Xiao, Z.; Sun, T.; Zhang, N.; Liu, J.; Wu, Q.; Cao, X. Space-Air-Ground Integrated Network (SAGIN) for 6G: Requirements, Architecture and Challenges. China Commun. 2022, 19, 90–108.

- Sridharan, K.; Yoo, S.W.W. Online Learning with Unknown Constraints. arXiv 2024, arXiv:2403.04033.

- Xu, Y.; Anpalagan, A.; Wu, Q.; Shen, L.; Gao, Z.; Wang, J. Decision-Theoretic Distributed Channel Selection for Opportunistic Spectrum Access: Strategies, Challenges and Solutions. IEEE Commun. Surv. Tutor. 2013, 15, 1689–1713.

- Dong, S.; Lee, J. Greedy confidence bound techniques for restless multi-armed bandit based Cognitive Radio. In Proceedings of the 2013 47th Annual Conference on Information Sciences and Systems (CISS), Baltimore, MD, USA, 20–22 March 2013.

- Smallwood, R.D.; Sondik, E.J. The Optimal Control of Partially Observable Markov Processes Over a Finite Horizon. Oper. Res. 1973, 21, 1071–1088.

- Agrawal, R. Sample mean based index policies by O(log n) regret for the multi-armed bandit problem. Adv. Appl. Probab. 1995, 27, 1054–1078.

- Jiang, Z.; Hongcui, C.; Jiahao, X. Channel Selection Based on Multi-armed Bandit. Telecommun. Eng. 2015, 55, 1094–1100.

- Wang, Z.; Yin, Z.; Wang, X.; Cheng, N.; Zhang, Y.; Luan, T.H. Label-Free Deep Learning Driven Secure Access Selection in Space-Air-Ground Integrated Networks. In Proceedings of the GLOBECOM 2023–2023 IEEE Global Communications Conference, Kuala Lumpur, Malaysia, 4–8 December 2023.

- Agrawal, S.; Goyal, N. Analysis of Thompson Sampling for the multi-armed bandit problem. Statistics 2011, 23, 39.1–39.26.

- Auer, P.; Cesa-Bianchi, N.; Fischer, P. Finite-time Analysis of the Multiarmed Bandit Problem. Mach. Learn. 2002, 47, 235–256.

- Agrawal, S.; Goyal, N. Thompson Sampling for Contextual Bandits with Linear Payoffs. In Proceedings of the 30th International Conference on Machine Learning (ICML-13), Atlanta, GA, USA, 17–19 June 2013; pp. 513–521.

- Agrawal, S.; Goyal, N. Further Optimal Regret Bounds for Thompson Sampling. arXiv 2012, arXiv:1209.3353.

- Agrawal, S.; Goyal, N. Near-optimal regret bounds for Thompson sampling. J. ACM 2017, 64, 30.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).