- Article

Denoising Stock Price Time Series with Singular Spectrum Analysis for Enhanced Deep Learning Forecasting

- Carol Anne Hargreaves

- Zixian Fan

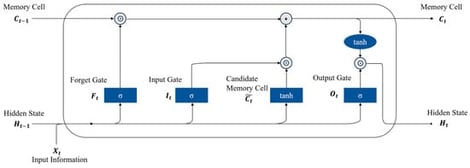

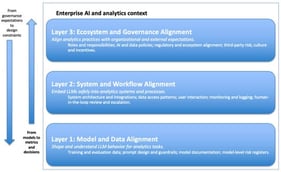

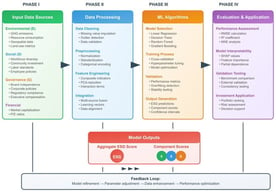

Aim: Stock price prediction remains highly challenging because financial time series are complex and nonlinear. Deep learning (DL) can capture such nonlinear patterns, but its effectiveness is often limited by the low signal-to-noise ratio of market data. This study aims to improve predictive performance and trading outcomes by combining Singular Spectrum Analysis (SSA) with deep learning models for stock price forecasting and strategy development on the Australian Securities Exchange (ASX) 50 index.

Method: The proposed framework first applies SSA to decompose each raw stock price series into interpretable components, isolating meaningful trends and filtering out noise. The denoised series are then used to train a suite of deep learning architectures, including Convolutional Neural Networks (CNN), Long Short-Term Memory (LSTM) networks, and hybrid CNN-LSTM models. The models are evaluated on their forecasting accuracy and on the profitability of the trading strategies derived from their predictions.

Results: Experimental results showed that the SSA-DL framework significantly improved prediction accuracy and trading performance compared with baseline DL models trained on raw data. The best-performing model, SSA-CNN-LSTM, achieved a Sharpe ratio of 1.88 and a return on investment (ROI) of 67%, indicating strong risk-adjusted returns and effective exploitation of the underlying market conditions.

Conclusions: Integrating Singular Spectrum Analysis with deep learning offers a powerful approach to stock price prediction in noisy financial environments. By denoising the input data before model training, the SSA-DL framework improved signal clarity and forecast reliability and enabled the construction of profitable trading strategies. These findings suggest strong potential for SSA-based preprocessing in financial time series modeling.
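The SSA denoising step in the Method can be illustrated with a minimal sketch of basic SSA. The steps are: embed the series in a trajectory (Hankel) matrix, take its SVD, keep the leading components, and map the result back to a series by diagonal averaging. The function name `ssa_denoise` and its parameters `window` (embedding length) and `rank` (number of leading components kept) are hypothetical. The abstract does not state the window length or the grouping rule the authors used, so treat both as assumptions to tune.

```python
import numpy as np


def ssa_denoise(series, window, rank):
    """Hypothetical helper: rebuild `series` from its `rank` leading SSA components.

    Basic SSA: embedding -> SVD -> grouping -> diagonal averaging.
    """
    x = np.asarray(series, dtype=float)
    n = x.size
    if not 1 < window < n:
        raise ValueError("window must satisfy 1 < window < len(series)")
    k = n - window + 1
    if not 1 <= rank <= min(window, k):
        raise ValueError("rank must be between 1 and min(window, n - window + 1)")

    # 1. Embedding: column j of the trajectory matrix is x[j:j + window],
    #    so traj[i, j] == x[i + j].
    traj = np.column_stack([x[j:j + window] for j in range(k)])

    # 2. Singular value decomposition of the trajectory matrix.
    u, s, vt = np.linalg.svd(traj, full_matrices=False)

    # 3. Grouping (assumed rule): keep only the `rank` largest components,
    #    treating the remaining small components as noise.
    approx = (u[:, :rank] * s[:rank]) @ vt[:rank, :]

    # 4. Diagonal averaging: each series index t = i + j is the mean of
    #    all entries approx[i, j] lying on that anti-diagonal.
    recon = np.zeros(n)
    counts = np.zeros(n)
    for j in range(k):
        recon[j:j + window] += approx[:, j]
        counts[j:j + window] += 1
    return recon / counts
```

Keeping every component (`rank = min(window, n - window + 1)`) reproduces the input exactly. Smaller ranks trade detail for smoothness. Diagonal averaging mixes values from both sides of each time step, so applying SSA to a full price history before a train/test split lets future prices influence past denoised values. Applying the decomposition on a rolling or expanding window avoids that look-ahead.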

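The Sharpe ratio and ROI reported in the Results can be computed from a strategy's daily returns. The sketch below uses a hypothetical long/flat rule: hold the stock from day t to t+1 only when the forecast for t+1 exceeds the price at t. The abstract does not describe the authors' actual trading rule. The sketch also assumes 252 trading days per year, a zero risk-free rate by default, no transaction costs, and ROI defined as compounded cumulative return. All function names are illustrative.

```python
import numpy as np


def strategy_returns(prices, predicted_next):
    """Daily returns of a hypothetical long/flat rule.

    predicted_next[t] is the model's forecast of prices[t + 1]; the last
    forecast has no realized next price and is ignored.
    """
    p = np.asarray(prices, dtype=float)
    f = np.asarray(predicted_next, dtype=float)
    asset_ret = p[1:] / p[:-1] - 1.0             # realized return from t to t+1
    position = (f[:-1] > p[:-1]).astype(float)   # 1 = long, 0 = flat (assumed rule)
    return position * asset_ret


def sharpe_ratio(daily_returns, periods_per_year=252, risk_free=0.0):
    """Annualized Sharpe ratio; risk_free is an annual rate (assumed 0 by default)."""
    r = np.asarray(daily_returns, dtype=float) - risk_free / periods_per_year
    return np.sqrt(periods_per_year) * r.mean() / r.std(ddof=1)


def roi(daily_returns):
    """Compounded return over the whole period (0.67 would correspond to 67%)."""
    return np.prod(1.0 + np.asarray(daily_returns, dtype=float)) - 1.0
```

Ignoring transaction costs tends to flatter strategies that trade often, so a cost term subtracted on each position change is a natural extension.
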
27 January 2026