1. Introduction

This study investigates how integrating Singular Spectrum Analysis (SSA) with deep learning models can enhance stock price forecasting accuracy and trading performance in noisy financial markets, with a specific focus on the Australian Securities Exchange (ASX) 50 index. The Australian equity market offers a valuable yet underexplored environment for testing advanced forecasting models. Unlike major global markets such as those of the U.S. or China, the Australian market exhibits distinctive structural characteristics: moderate liquidity, high concentration in the resource and financial sectors, and pronounced sensitivity to global commodity price movements. These characteristics introduce complex, non-linear dependencies that pose significant challenges for traditional time-series models. By applying the SSA–DL framework to this context, the study extends existing research beyond well-studied markets and examines the model’s robustness and adaptability across diverse economic settings. This focus not only enriches the global literature on financial forecasting but also provides insights applicable to other markets with similar structural profiles.

To date, machine learning algorithms have been extensively applied in stock price prediction studies across global financial markets. Various models, such as Artificial Neural Networks (ANN) and Convolutional Neural Networks (CNN), have demonstrated strong effectiveness in improving predictive accuracy [1]. However, stock market forecasting remains inherently challenging due to the noisy, chaotic, non-stationary, and highly volatile nature of financial time series data [1,2]. To mitigate these challenges, several signal processing and modeling techniques, such as WaveNet and Singular Spectrum Analysis (SSA), have been developed to enhance forecasting reliability [3,4].

While deep learning algorithms integrated with Singular Spectrum Analysis (SSA) have been applied to financial time series in various international markets, there remains a notable gap in the application of these techniques to Australian stock market data. This underrepresentation may stem from competing research interests in other regions, the relatively smaller size of the Australian market, and broader global research priorities. To address this gap, the present study focuses on stocks listed on the Australian Securities Exchange (ASX), specifically the ASX50 Index, which comprises the 50 largest and most liquid stocks in the market. In this study, SSA is employed as a preprocessing step to decompose and denoise stock price series, thereby enhancing the quality of inputs for the subsequent deep learning models.

This study makes two key contributions to the existing literature. First, it advances the application of cutting-edge analytics by integrating Singular Spectrum Analysis (SSA) with deep learning architectures (specifically, Convolutional Neural Networks (CNN), Long Short-Term Memory (LSTM), and a hybrid CNN-LSTM model) for stock price forecasting. To the best of our knowledge, this integrated approach has not been previously explored in the context of the Australian stock market. Second, while many studies on stock analytics focus primarily on predictive performance, this research extends the scope by developing profitable and reliable trading strategies derived from the model outputs, thereby bridging the gap between predictive modeling and practical financial decision-making.

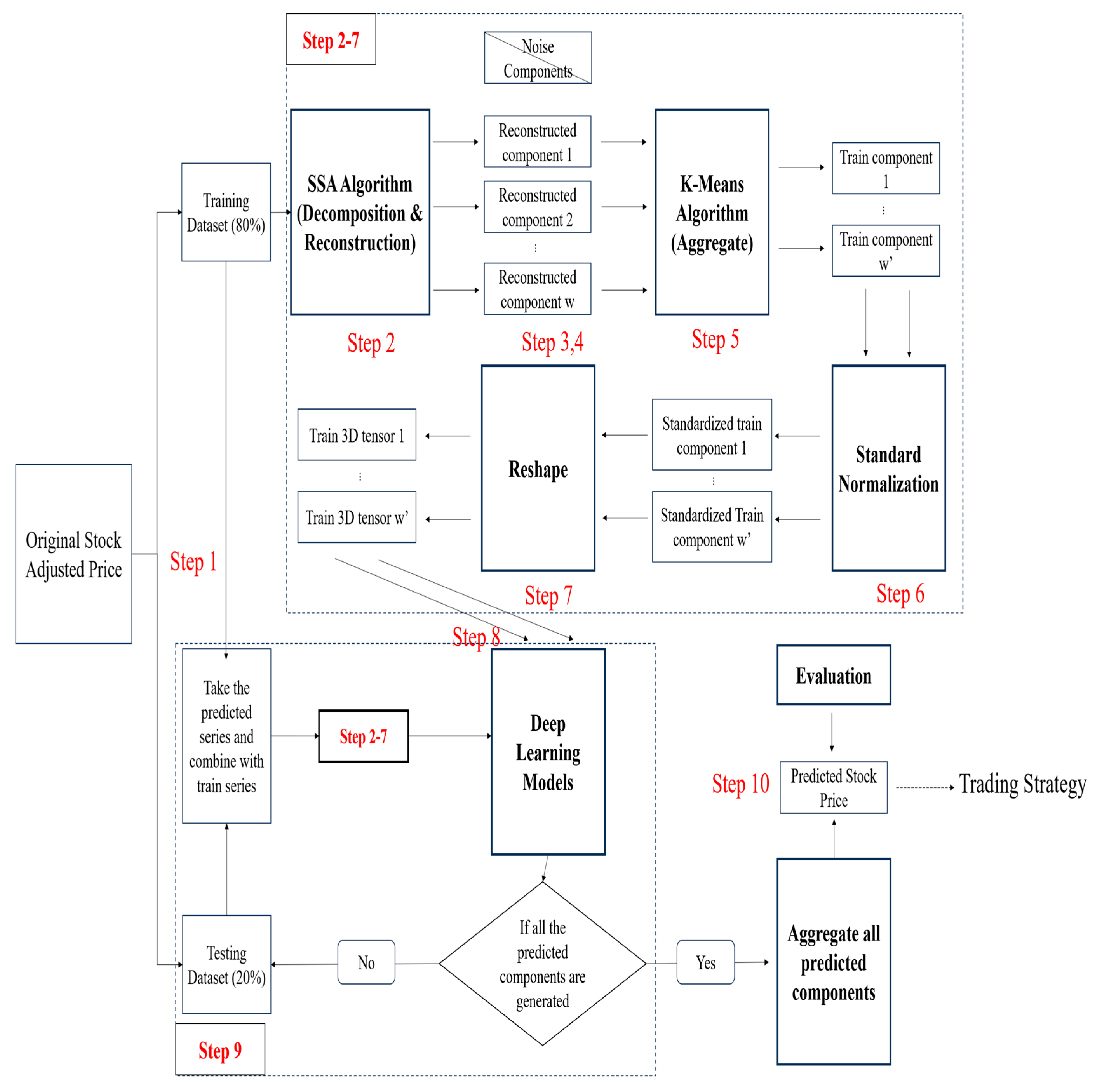

The proposed methodology follows a systematic and structured process. First, the Singular Spectrum Analysis (SSA) algorithm is applied separately to the training and test datasets to generate denoised time series. Second, these denoised training datasets are used to train multiple deep learning models, which are subsequently employed to forecast stock prices during the test period. Third, the predicted stock prices are aggregated and compared with the actual prices to evaluate each model’s forecasting performance. Finally, a trading strategy is developed based on the predicted prices to assess the practical utility and profitability of the proposed SSA-deep learning (SSA-DL) framework.

2. Literature Review

The Efficient Market Hypothesis (EMH), as proposed by Fama, posits that stock prices fully reflect all available market information [5]. According to this theory, when investors attempt to earn excess returns through extensive analysis of historical stock data, the market rapidly incorporates such information, thereby adjusting prices to eliminate any potential profit opportunities. Despite this, many stock investors continue to rely on technical analysis, which seeks to identify empirical patterns and market behaviours based on publicly available price data.

Furthermore, numerous studies have questioned the assumption of market efficiency, presenting evidence that financial markets are not entirely efficient. Recent research, such as that of [2], provides compelling evidence that certain technical trading strategies can still yield significant and consistent returns, particularly in the stock markets of China and South Korea.

Previous research has demonstrated that financial indicators such as the Moving Average (MA) [6], Moving Average Convergence–Divergence (MACD), and the Relative Strength Index (RSI) [7] are statistically significant in predicting stock prices and developing profitable trading strategies. These advantages have been observed across both bull and bear market conditions. Furthermore, various time series models have been employed for stock price forecasting, with notable approaches such as the Autoregressive Moving Average (ARMA) model incorporating past indicators and cyclical factors to enhance predictive accuracy.

Given that many stock price series exhibit non-stationary behavior, often mitigated through differencing, the Autoregressive Integrated Moving Average (ARIMA) model has been widely employed in previous studies. For instance, ref. [8] applied the ARIMA model to forecast stock prices across various sectors of the National Stock Exchange (NSE), reporting high predictive accuracy and robustness as validated through paired t-tests. Another commonly used approach, the Generalized Autoregressive Conditional Heteroskedasticity (GARCH) model, effectively captures stock market volatility dynamics, offering valuable forecasts that serve as key tools for risk management in stock trading strategies [9].

Since the late 20th century, quantitative investing has grown rapidly in popularity, fueled by advances in computing power, analytical methodologies, and the increasing demand from large institutional investors [10]. Today, numerous hedge funds and asset management firms leverage machine learning algorithms for portfolio analysis and management. Given the limitations of traditional time series models in addressing the nonlinear and non-stationary characteristics of financial data, many studies have demonstrated the superior effectiveness of machine learning techniques in predicting stock prices and formulating optimal trading strategies across different markets. Among these methods, supervised learning is the most widely used approach in stock market prediction [11].

Given the nonlinear trends in stock prices and their complex relationships with various influencing factors, several machine learning models employing nonlinear algorithms have been applied to stock price forecasting. These include Support Vector Machines (SVM), Support Vector Regressors (SVR), Random Forests (RF), and Artificial Neural Networks (ANN). For instance, Yu et al. [12] utilized Principal Component Analysis (PCA) to classify stocks based on multiple fundamental indicators and subsequently applied the SVM model for stock selection, achieving superior performance compared to the A-share index on the Shanghai Stock Exchange. Similarly, Kazem et al. [13] proposed an SVR model integrated with a chaotic firefly algorithm and backtested it on three U.S. stock datasets, finding that it outperformed traditional models in terms of Mean Squared Error (MSE) and Mean Absolute Percentage Error (MAPE). Additionally, Polamuri et al. [14] employed RF and Extra Tree Regressor models to forecast stock prices on the S&P 500 index, confirming their superiority over conventional linear models based on Mean Absolute Error (MAE) and MSE metrics.

Vijh et al. [15] constructed technical indicators from stock data and applied Random Forest (RF) and Artificial Neural Network (ANN) models to predict the closing prices of five major U.S. companies. Their results indicated that both RF and ANN achieved strong predictive performance, with ANN generally outperforming RF in terms of Root Mean Square Error (RMSE), Mean Absolute Percentage Error (MAPE), and Mean Bias Error (MBE) metrics [15]. Similarly, Göçken et al. [16] integrated ANN with Genetic Algorithms and Harmony Search to optimize technical indicators and mitigate overfitting and underfitting issues, thereby enhancing stock price prediction accuracy. It is noteworthy that the architecture of the ANN model plays a crucial role in determining predictive performance, as factors such as the number of hidden layers, the number of nodes per layer, the inclusion of dropout layers during training, and other hyperparameter configurations can significantly influence the final outcomes [16].

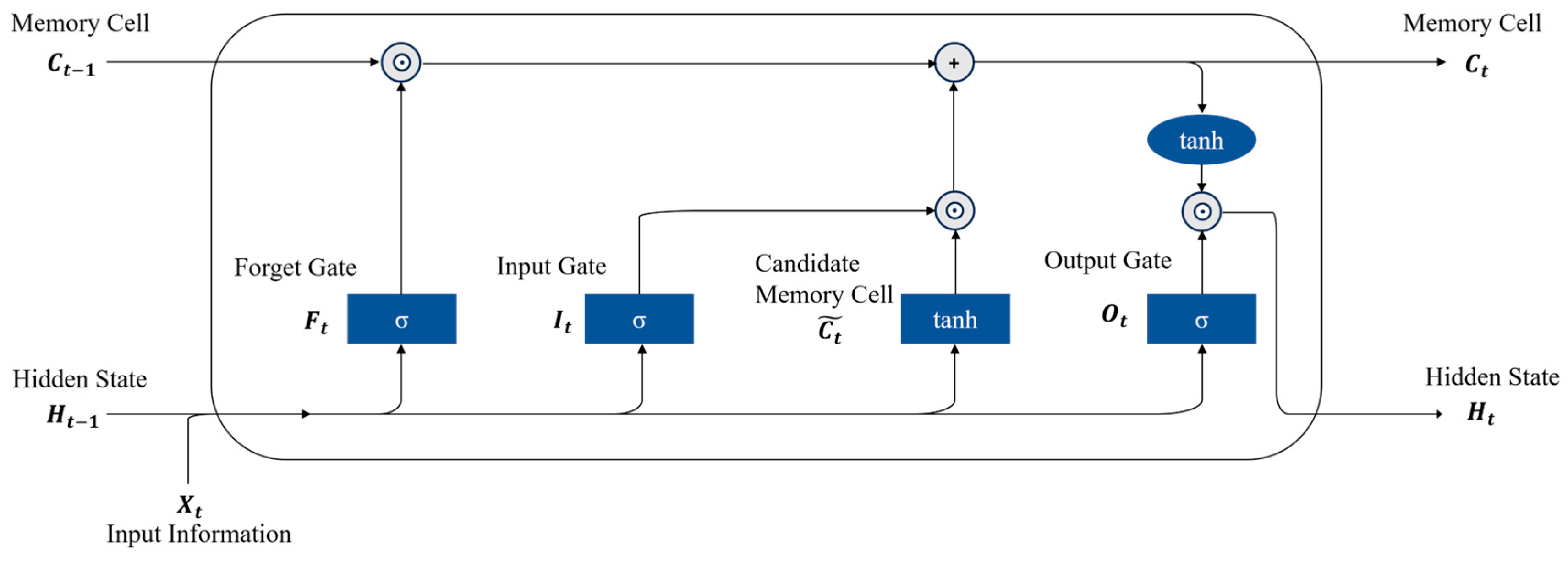

In recent years, deep learning models with multiple hidden layers, such as Deep Neural Networks (DNN), Convolutional Neural Networks (CNN), and Long Short-Term Memory (LSTM) networks, have proven increasingly effective for stock market forecasting due to their superior ability to extract meaningful features from large datasets [11]. Zhong and Enke [17] employed various DNN models with differing numbers of hidden layers to predict the daily return direction of the SPDR S&P 500 ETF, utilizing Principal Component Analysis (PCA) for feature engineering with 60 financial and economic indicators. While CNNs are well known for their strong performance in image classification tasks and LSTM networks excel in sequence-to-sequence (Seq2Seq) learning by mitigating the vanishing and exploding gradient problems, both architectures and their hybrid variants have demonstrated superior performance in stock market forecasting due to their ability to learn complex relationships between large sets of input and output variables. Hoseinzade and Haratizadeh [18] further enhanced predictive accuracy by developing fine-tuned 2D-CNNpred and 3D-CNNpred models with kernels designed to mimic image-processing feature extraction, achieving more accurate predictions for six stock index movements compared to a shallow ANN model and a baseline CNN-core model.

Durairaj and Mohan developed two novel chaotic hybrid models, Chaos + CNN and Chaos + CNN + PR, which first reconstructed noisy time series affected by chaotic behaviour and then fed both the original time series and the fitted noise series into CNN models. These hybrid models generally produced more accurate predictions for foreign exchange, commodity, and stock market indices compared to traditional models such as ARIMA, CART, and Random Forest (RF) [19]. However, in certain cases, the hybrid models did not outperform the standalone CNN model, suggesting that the CNN alone could capture intrinsic patterns within the noisy time series. To address the issue of overfitting in stock market forecasting, Beak and Yim proposed a specialized LSTM architecture that combined an overfitting-prevention LSTM module with a prediction LSTM module. This model yielded improved forecasts for the S&P 500 and KOSPI 200 indices [20]. Similarly, Fazeli and Houghten employed an LSTM model enhanced with manually constructed technical indicators to predict the stock trends of major companies such as Apple, Microsoft, Google, and Intel, demonstrating the model’s capability to generate effective buy/sell signals based on historical data [21].

The hybrid CNN-LSTM model has also gained considerable attention in recent research. Livieris et al. utilized a CNN to extract meaningful features and an LSTM to learn the internal representation of time-series data, concluding that the CNN-LSTM model achieved improved predictions for gold market prices [22]. Similarly, Lu et al. incorporated information from the preceding 10 days using a CNN model as input for an LSTM to predict stock prices of the Shanghai Composite Index from 1 July 1991 to 31 August 2020. Their results, compared with models such as MLP, CNN, RNN, and LSTM, demonstrated that the CNN-LSTM combination produced lower RMSE and higher $R^2$ values [23]. Song and Choi implemented both CNN-LSTM and GRU-CNN architectures (featuring different configurations of recurrent and convolutional neural networks) for one-step and multi-step predictions of closing prices for the DAX, DOW, and S&P 500 indices [24]. Likewise, Beak applied a CNN-LSTM model using the most recent 20 days of technical data, combined it with Genetic Algorithms (GA) for hyperparameter optimization, and found that this approach achieved higher prediction accuracy for the KOSPI index compared to standalone CNN, LSTM, and CNN-LSTM models [25].

Australia’s equity market is a vital component of global investment management. Research on the Australian market not only uncovers diverse investment opportunities but also enhances market efficiency and transparency. However, most major quantitative studies have primarily focused on regions such as Asia, Europe, North America, and South America, with relatively little attention given to Australia [11]. This gap underscores the need for further exploration of the Australian stock market.

Kwong (2001) conducted a time-series study of selected Australian stocks using neural networks to uncover patterns between stock movements and influencing factors [26]. Indika Priyadarshani investigated the asymmetry of volatility effects in the Australian stock market compared to other global markets. By modeling covolatility shocks across markets using a multivariate generalized autoregressive conditional heteroskedasticity (MGARCH) approach, Priyadarshani demonstrated that the US stock market exerts a dominant influence on the Australian stock market [27]. Hargreaves and Hao applied various machine learning techniques to develop trading strategies based on fundamental factors and concluded that machine-learning-driven equity research can generate superior returns in the Australian market [28]. Hussain et al. employed adaptive neuro-fuzzy inference systems (ANFIS), which integrate the strengths of artificial neural networks (ANNs) and fuzzy systems (FSs), to forecast the performance of Australian stocks listed on the ASX. Their results showed that ANFIS outperformed models such as LSTM and GRU in terms of RMSE, MAE, and MAPE [29].

Due to the random fluctuations or irregularities inherent in both the market and individual stocks, filtering noise becomes a crucial challenge. Singular Spectrum Analysis (SSA) is a non-parametric method that, without many statistical constraints, decomposes a time series into multiple signals, effectively filtering out noise to reconstruct a cleaner time series. This method has a wide range of applications [30]. Wang and Li developed an SSA-NN model that smoothed commodity price series with a threshold of 0.02%, subsequently inputting the results into multiple artificial feed-forward neural networks for prediction purposes [31]. Xiao et al. used SSA to decompose the Shanghai Composite Index into long-term trends, significant event effects, and short-term noise, then applied Support Vector Machines (SVM) to make more accurate predictions than several baseline models [4]. Syukur and Marjuni improved the performance of SSA for forecasting SMS2.SG stock prices over the next 30 days by applying Hadamard transformation to determine the optimal window length for SSA [32].

Fathi et al. employed SSA to decompose price series into various features, which were then used to train non-linear autoregressive neural networks (NARNN) to forecast the performance of 24 stocks in the Egyptian market [33]. While some studies have combined SSA with deep neural networks for forecasting, there has been limited application of this approach to stock markets. For example, Galajit et al. applied SSA to remove noisy components from skewed electrical load series data, using Long Short-Term Memory (LSTM) networks for more accurate electrical load forecasting [34]. Similarly, Wei and Bai integrated SSA with a Convolutional Neural Network (CNN) and a Bidirectional Gated Recurrent Unit (BiGRU) model to forecast non-linear, non-stationary building energy consumption, achieving precise and robust multi-step predictions compared to the individual models [35].

Previous studies have demonstrated the effectiveness of combining Singular Spectrum Analysis (SSA) with deep learning for load and energy forecasting. However, its application in financial markets remains limited. This study advances existing work by extending the SSA–DL framework to sector-level equity forecasting and comparing CNN, LSTM, and CNN–LSTM architectures, each optimized for sector-specific temporal patterns. This approach provides a novel contribution by evaluating the robustness and adaptability of SSA–DL models in complex financial environments.

Existing studies on stock price forecasting have explored a range of traditional and modern techniques, from statistical models such as ARIMA and GARCH to machine learning and deep learning architectures including Support Vector Machines (SVM), Convolutional Neural Networks (CNN), and Long Short-Term Memory (LSTM) networks. While these methods have achieved notable success in various markets, several limitations persist.

First, most deep learning models struggle with the noisy and non-stationary nature of financial time series, often resulting in overfitting and unstable forecasts. Data decomposition methods, such as Empirical Mode Decomposition (EMD) and Wavelet Transforms, have been introduced to address this issue; however, their parameter sensitivity and mode-mixing problems limit their effectiveness. In contrast, Singular Spectrum Analysis (SSA) has shown superior performance in denoising and extracting meaningful patterns from complex signals, yet its integration with deep learning models for stock market forecasting remains scarce [31,32,33,34,35].

Second, the Australian stock market (ASX), despite being one of the most advanced and resource-rich markets globally, has received relatively little attention in data-driven forecasting research compared to the U.S., European, and Asian markets [11,26,27,28,29]. Consequently, there is a pressing need to develop robust forecasting frameworks tailored to the ASX context that can provide both accurate predictions and actionable trading insights.

Finally, existing research tends to focus predominantly on forecasting accuracy metrics, such as MSE or RMSE, without translating predictive results into real-world trading performance. This disconnect between model accuracy and financial utility limits the practical application of predictive models in investment decision-making.

These gaps highlight the opportunity to design a hybrid forecasting and trading framework that integrates advanced signal decomposition, deep learning, and practical trading evaluation, especially in the underexplored ASX market.

3. Contributions

To address these gaps, this study proposes a hybrid Singular Spectrum Analysis–Deep Learning (SSA–DL) framework that integrates Singular Spectrum Analysis (SSA) with Convolutional Neural Networks (CNN), Long Short-Term Memory (LSTM), and a hybrid SSA–CNN–LSTM model to forecast stock prices of companies listed on the ASX50 index. The performance of these models is further evaluated through a backtested trading strategy, linking predictive modeling with investment applicability.

First, stocks from the ASX50 are grouped into three subgroups based on their industrial sectors, and the closing price series of all stocks are decomposed into multiple signals using SSA, with window lengths and criteria optimized for each subgroup. Second, the filtered and denoised signals are used as inputs for deep neural networks to produce rolling forecasts of each signal. These individual forecasts are aggregated into overall stock price predictions, which are evaluated using standard forecasting metrics such as Mean Squared Error (MSE) and Mean Absolute Error (MAE). Finally, a trading strategy based on sector-level stock price forecasts is designed and backtested to assess real-world profitability and robustness.

The key contributions of this research are as follows:

This study introduces a hybrid SSA–DL framework that combines SSA’s noise-reduction capabilities with the pattern recognition power of deep learning architectures (CNN, LSTM, and CNN–LSTM). While SSA has been previously applied to non-financial domains such as energy and commodity forecasting [31,32,33,34,35], its integration with deep learning for stock market prediction, particularly within the Australian context, remains largely unexplored. This approach demonstrates enhanced forecasting accuracy compared to traditional deep learning models trained on raw, noisy data.

2. Empirical advancement in modeling the Australian stock market:

The study provides one of the first comprehensive deep learning–based analyses of the Australian Securities Exchange (ASX), addressing the lack of research attention to this market [11,26,27,28,29]. By focusing on the ASX50 index, this research offers empirical insights into market dynamics and presents a benchmark for future studies investigating Australian equity behavior using advanced data-driven methods.

3. Bridging predictive modeling and actionable trading strategies:

Unlike most prior research, which primarily emphasizes predictive accuracy, this study evaluates model performance in terms of real-world trading outcomes. A portfolio-level trading strategy is developed based on the model’s stock price forecasts, and its performance is assessed using profitability metrics such as return on investment (ROI) and Sharpe Ratio. This integration of forecasting and trading evaluation enhances the practical relevance of the proposed models for investors and portfolio managers.

6. Results

6.1. Parameters

In the implementation of the Singular Spectrum Analysis method, two key parameters were considered:

The window length (L) used during the embedding stage, which determines the size of the trajectory matrix constructed from the time series data.

The contribution threshold for determining which components are retained for reconstruction. This threshold guides the grouping of components based on their relative significance.

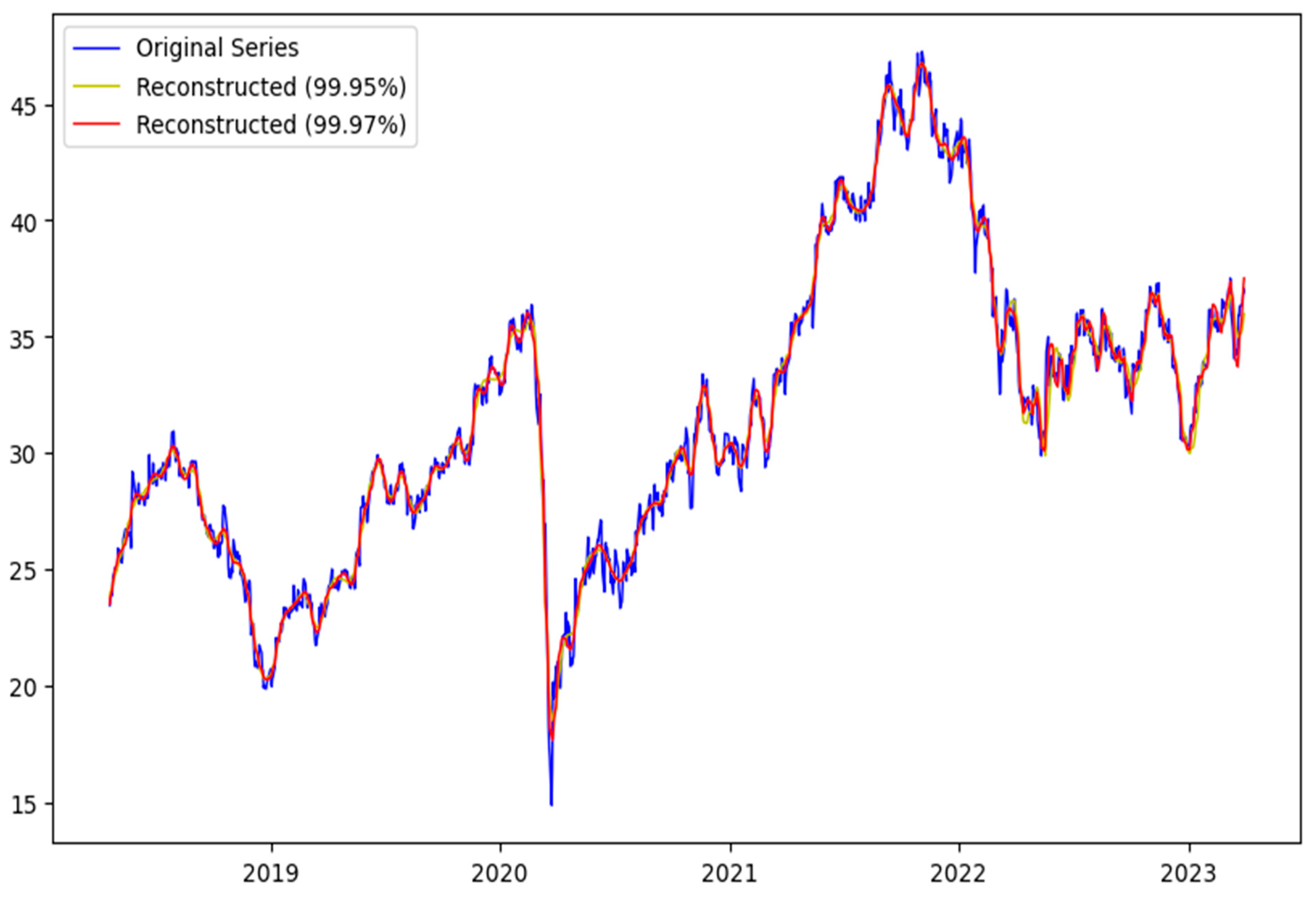

The selection of the key parameters in the Singular Spectrum Analysis (SSA) was guided by both theoretical and empirical considerations to ensure a balance between signal fidelity and noise suppression. The window length ($L$) was chosen to be sufficiently large to capture the dominant temporal patterns in the data, while remaining below half of the series length, consistent with established SSA practices. The contribution thresholds of 99.95% and 99.97% were applied to retain the principal components that together explained nearly all the signal variance, effectively filtering out residual noise without over-smoothing the reconstructed series. Preliminary sensitivity analyses confirmed that small variations in $L$ and the threshold values did not materially affect the results, indicating that the decomposition and reconstruction were robust to parameter choice.

In our research, we set the contribution criteria to 99.95% and 99.97%, respectively, meaning that only the components contributing to this cumulative percentage of the signal variance were preserved. The retained components were then grouped using the K-means clustering algorithm into two distinct categories: trend and periodicity [31]. The remaining components, accounting for the last 0.05% (or 0.03%) of the contribution, were classified as noise and deemed non-informative for prediction purposes.
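The embedding, decomposition, thresholding, and reconstruction steps described above can be sketched as follows. This is a minimal illustration of basic SSA under stated assumptions, not the authors' implementation: the function name `ssa_denoise` is hypothetical, each component's contribution is taken as its share of the squared singular values, and the K-means grouping of the retained components into trend and periodicity is not reproduced.

```python
import numpy as np

def ssa_denoise(series, window, threshold=0.9995):
    """Basic SSA: embed, decompose, keep leading components, reconstruct.

    `threshold` is the cumulative contribution to retain (0.9995 = 99.95%).
    """
    x = np.asarray(series, dtype=float)
    n = x.size
    k = n - window + 1
    # Embedding: trajectory (Hankel) matrix of shape (window, k),
    # where entry (row, col) equals x[row + col].
    traj = np.column_stack([x[i:i + window] for i in range(k)])
    # Decomposition via singular value decomposition.
    u, s, vt = np.linalg.svd(traj, full_matrices=False)
    # Contribution of each component (assumed: share of squared singular values).
    contrib = s**2 / np.sum(s**2)
    # Smallest number of leading components whose cumulative share
    # reaches the threshold; everything after that is treated as noise.
    r = int(np.searchsorted(np.cumsum(contrib), threshold) + 1)
    r = min(r, s.size)
    approx = (u[:, :r] * s[:r]) @ vt[:r]
    # Diagonal averaging: average all matrix entries that map to the
    # same time index to recover a one-dimensional series.
    recon = np.zeros(n)
    counts = np.zeros(n)
    for i in range(window):
        recon[i:i + k] += approx[i]
        counts[i:i + k] += 1
    return recon / counts, contrib[:r]
```

Calling `ssa_denoise(prices, window=63, threshold=0.9997)` would return the denoised series together with the contributions of the retained components; raising the threshold keeps more components and therefore tracks the original series more closely.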

The window length must be tuned for the stocks in each group. Ref. [37] suggested that the window length should be large enough but no larger than $N/2$, where $N$ is the total length of the series. The same issue arises in our research: a very small window length fails to capture the periodicity effect, causing the contribution of the trend to exceed the criterion. Hassani et al. also describe a common practice for choosing the window length in the SSA method [37]. Hence, in our research, the window length $L$ is optimized for each group by testing the candidate values displayed in Table 2.

For the selected deep learning algorithms, several parameters required careful tuning to optimize model performance:

Time Step for 3D Tensor Formation: This defines the number of lag values used from the time series to form each input sample.

Neural Network Architecture and Hyperparameters: These include optimizer settings, hidden layer configurations, dropout rates, and activation functions.

In our study, a time step of 5 was chosen to construct each 3D tensor, corresponding to one trading week. For the neural network optimization, we employed the Adam optimizer with a learning rate of 0.001, as implemented in the Keras library. Adam is widely recognized for its adaptive learning capabilities and computational efficiency [43].
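To make the 3D tensor formation concrete, the sketch below builds input samples from a single series with a time step of 5. It is an illustration under assumptions: the helper name `make_windows` is hypothetical, each sample is paired with the next value as a one-step-ahead target, and a single feature per time step is assumed.

```python
import numpy as np

def make_windows(series, time_step=5):
    """Turn a 1D series into (samples, time_step, 1) inputs and next-step targets."""
    x = np.asarray(series, dtype=float)
    n_samples = x.size - time_step
    # Each row holds `time_step` consecutive lagged values.
    inputs = np.stack([x[i:i + time_step] for i in range(n_samples)])
    # Assumed target: the value immediately following each window.
    targets = x[time_step:]
    # Add a trailing feature axis so the shape is (samples, time_step, features=1).
    return inputs[..., np.newaxis], targets
```

For a series of 10 values this yields 5 samples of shape `(5, 1)`; the first sample contains values 0 to 4 of the series and its target is value 5.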

Other hyperparameters such as the number of hidden units per layer, dropout rates, and activation functions were fine-tuned separately for each sector group to best fit the characteristics of the grouped stocks.

6.2. Forecasting Results

This study first applies the Singular Spectrum Analysis (SSA) algorithm to decompose each stock’s original price series and reconstruct its Trend and Periodicity components. Based on predefined contribution criteria, SSA identifies and excludes components deemed noise, which are therefore not considered in the K-means clustering stage. The contribution thresholds tested in this study are 99.95% and 99.97%, representing the cumulative variance retained from the original signal.

An illustrative example is provided using the stock ALL.AX, with the reconstructed series summarized in Table 3. From the results, it can be observed that the trend components remain consistent across both criteria. However, the periodicity component reconstructed under the 99.97% threshold is smaller compared to that under the 99.95% threshold. This suggests that the trend captures most of the price series’ informational contribution, and the variation in the periodic component is primarily influenced by the stricter criterion.

Importantly, the sequence reconstructed under the 99.97% threshold more closely tracks the original stock price series, as illustrated in Figure 3, where the line labeled “Reconstructed (99.97%)” aligns more accurately with the actual stock prices. This improved alignment is further supported by superior performance in downstream validation. Therefore, subsequent tuning and modeling in this study are conducted primarily under the 99.97% criterion.

The selection of the Window Length (L) in SSA plays a crucial role in effective feature detection and time series reconstruction. An improperly chosen L may hinder the SSA’s ability to accurately capture signal structures. In this study, a range of window lengths from 63 to 504 is explored, corresponding to meaningful temporal intervals such as quarterly (63 trading days), yearly (252 trading days), and two-year (504 trading days) periods.

For each value of L, the original stock price series is decomposed and reconstructed, followed by the construction of various deep learning (DL) models as described previously. These models are then fine-tuned individually within each group using optimized hyperparameters to perform stock price forecasting. The reconstructed trend and periodicity components are aggregated to produce the final predicted stock price series.

To evaluate the accuracy of the predictions, the Mean Squared Error (MSE) and Mean Absolute Error (MAE) are used as performance metrics, comparing the predicted prices to the actual stock prices. The evaluation results for different window lengths and model configurations are summarized in Table 4, Table 5 and Table 6, respectively.
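For reference, the two metrics take their standard forms, written here with assumed notation: $y_t$ is the actual price on day $t$, $\hat{y}_t$ is the predicted price, and $n$ is the number of days in the test period.

```latex
\mathrm{MSE} = \frac{1}{n} \sum_{t=1}^{n} \left( y_t - \hat{y}_t \right)^2,
\qquad
\mathrm{MAE} = \frac{1}{n} \sum_{t=1}^{n} \left| y_t - \hat{y}_t \right|
```

Because MSE squares each error, it penalizes occasional large misses more heavily than MAE, which is one reason the two metrics can rank models differently.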

When the sequence is reconstructed with an SSA window length of 63, the SSA-CNN provides the predictions closest to the real stock price in most cases, and almost all of the best MSEs and MAEs are obtained from this model. The ordinary CNN also gives relatively accurate results; note that the ordinary CNN uses the original stock price directly for prediction, independent of the output sequence of the SSA algorithm. SSA-LSTM and SSA-CNN-LSTM rarely provide more accurate results than SSA-CNN and CNN, except for the WTC.AX stock, for which the best prediction is obtained by SSA-CNN-LSTM. However, the differences in prediction accuracy between the models are all very small, so it is also necessary to check the empirical analysis provided by the trading strategy.

When analysing the prediction results with larger window lengths of 252 and 504, it becomes apparent that the prediction accuracy of the SSA-CNN model declines significantly. In contrast, the accuracy of SSA-LSTM and SSA-CNN-LSTM remains relatively stable, with only slight decreases observed. Notably, for the WTC.AX stock, the predictive performance even improves as the window length increases. This suggests that as the window length grows, the relative contribution of trend components diminishes, while the influence of periodicity and noise components increases, due to the higher number of decomposed components.

Furthermore, it is observed that the best prediction results for stocks in the Industrial and Infrastructure sectors tend to be achieved by the SSA-CNN-LSTM model rather than the others. This can be attributed to the model’s strength in capturing long-term fluctuations within periodic sequences, whereas SSA-CNN is better suited for non-smooth, trend-dominated sequences. Consequently, the performance of SSA-CNN deteriorates significantly in these cases, occasionally performing worse than the other two models.

Interestingly, CNN-based models (including non-SSA CNN) also demonstrate strong performance. This can be explained by the fact that with larger window lengths, if the same fixed contribution criteria are used to discard noise, there is a greater risk of omitting important information from the original stock price series. As a result, the reconstructed sequences used for training may lack critical signals, leading to less effective predictions.

However, it is important to recognize that the original stock price series inherently contains noise, which may not be informative or actionable for real-world trading strategies. Although CNNs trained on raw data might achieve higher accuracy in terms of price prediction, they might also capture spurious patterns, making them less suitable for practical trading applications. Investors are typically more interested in robust predictions based on the underlying structure of stock movements, rather than predictions influenced by noise.

For this reason, the present study emphasizes the empirical value of SSA-based Deep Learning (SSA-DL) models, aiming to construct more reliable and interpretable trading strategies grounded in the intrinsic properties of stock behaviour.

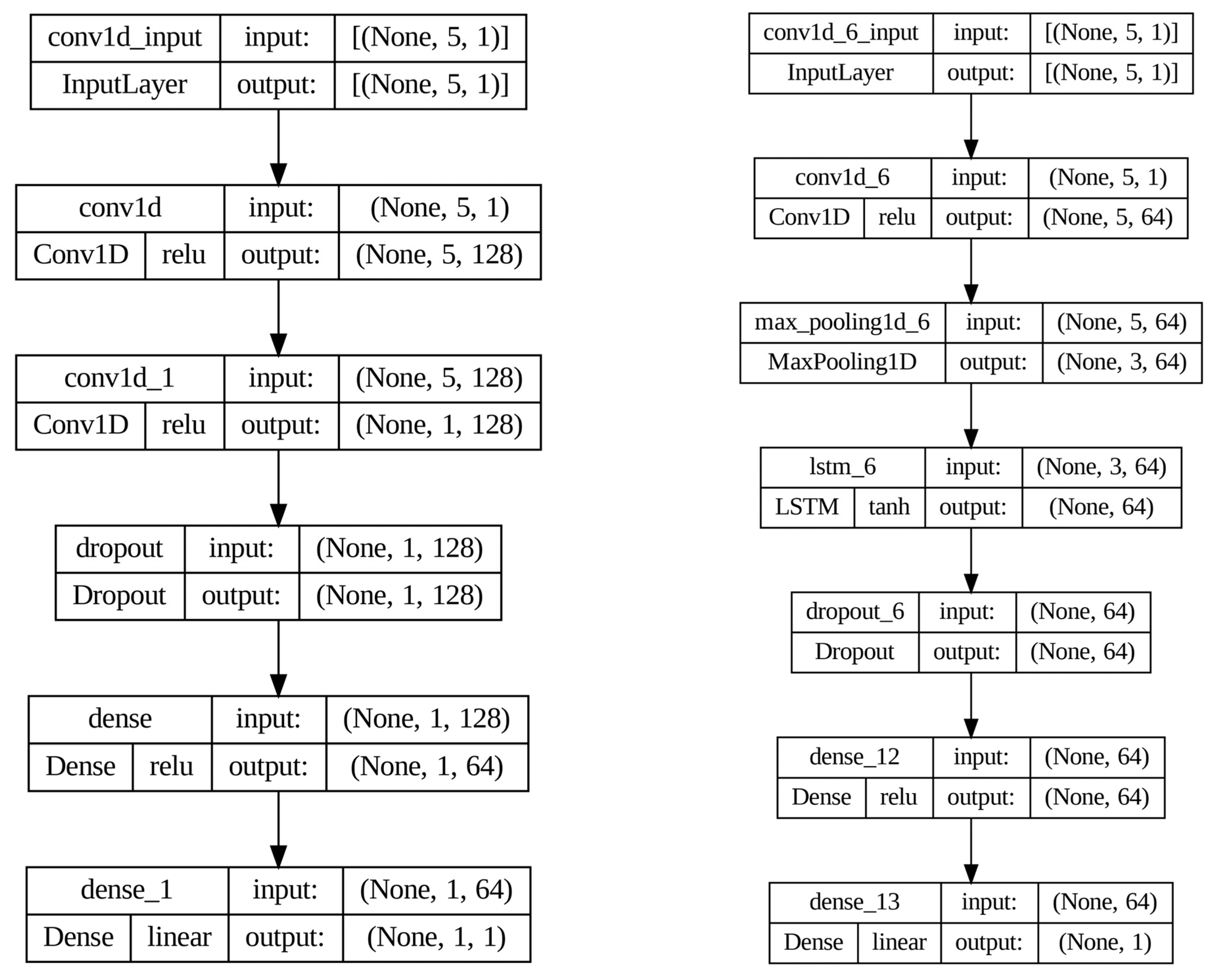

The architecture of the best-performing SSA-CNN model, identified when the window length is set to 63, is illustrated in Figure 4 (left). The model begins with two convolutional layers, each comprising 128 filters with a kernel size of 3 and employing the ReLU activation function. Notably, no pooling layer is applied after the convolutional stages, allowing the model to retain the full granularity of the extracted features. These layers are followed by a Dropout layer with a dropout rate of 0.3 to prevent overfitting. Finally, a fully connected (dense) layer with 64 neurons and ReLU activation is employed to generate the output prediction.

In contrast, the SSA-CNN-LSTM model structure, shown in Figure 4 (right), integrates both convolutional and recurrent layers to leverage spatial and temporal features. The model starts with a convolutional layer consisting of 64 filters and a ReLU activation function, which extracts local spatial patterns from the input sequence. This is followed by a Max Pooling layer that downsamples the feature maps and highlights the most salient features. The resulting feature maps are then passed into an LSTM layer with 64 units and a tanh activation function, designed to capture the temporal dependencies within the sequence. The final prediction is generated through a dense layer with 64 neurons and ReLU activation.
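To make the two layer stacks concrete, the sketch below traces output shapes and trainable-parameter counts through the layers described above. Several details are not stated in the text and are assumptions made only for illustration: an input of 30 time steps with one feature, stride 1 with no padding, a flatten step before the dense layer in SSA-CNN, a kernel size of 3 and pool size of 2 in SSA-CNN-LSTM, and a single linear output unit after the final dense layer.

```python
def conv1d(length, in_ch, filters, kernel):
    """Stride-1, unpadded 1-D convolution: (out_length, out_channels, params)."""
    return length - kernel + 1, filters, (kernel * in_ch + 1) * filters


def dense(in_dim, units):
    """Fully connected layer: (out_dim, params), weights plus biases."""
    return units, (in_dim + 1) * units


def lstm(in_dim, units):
    """LSTM layer: four gates, each with input weights, recurrent weights, bias."""
    return units, 4 * (units * (in_dim + units) + units)


def ssa_cnn(steps, features=1):
    total = 0
    length, ch, p = conv1d(steps, features, 128, 3)
    total += p
    length, ch, p = conv1d(length, ch, 128, 3)
    total += p
    # Dropout(0.3) adds no parameters and leaves the shape unchanged
    width, p = dense(length * ch, 64)  # assumed flatten before the dense layer
    total += p
    _, p = dense(width, 1)  # assumed single output unit
    total += p
    return (length, ch), total


def ssa_cnn_lstm(steps, features=1, kernel=3, pool=2):
    total = 0
    length, ch, p = conv1d(steps, features, 64, kernel)  # kernel size assumed
    total += p
    length //= pool  # max pooling has no parameters; pool size assumed
    width, p = lstm(ch, 64)
    total += p
    width, p = dense(width, 64)
    total += p
    _, p = dense(width, 1)  # assumed single output unit
    total += p
    return (length, ch), total


cnn_shape, cnn_params = ssa_cnn(30)
hybrid_shape, hybrid_params = ssa_cnn_lstm(30)
```

Under these assumptions, most SSA-CNN parameters sit in the dense layer that follows the unpooled convolutional output, whereas the pooling and LSTM stages keep the hybrid model considerably smaller.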

6.3. Trading Strategy Performance

In this study, beyond aiming for more accurate stock price predictions using SSA-DL models, we also emphasize the importance of obtaining noise-filtered forecasts that are practically applicable for real-world trading and profitability. This aligns with the insights from Dessian, who conducted a comprehensive review of over 190 research articles and highlighted that many commonly used evaluation metrics such as MSE and RMSE may be inadequate when the ultimate objective is profit maximization in real financial markets [44].

To assess the practical utility of our models, we constructed daily frequency trading strategies based on the predicted stock prices generated by various models. These strategies were applied to a selection of 47 stocks from the ASX50 index, enabling us to test the extent to which model-driven predictions could inform profitable investment decisions. The performance of each strategy was evaluated using a suite of financial metrics, including Win Rate, Return on Investment (ROI), and the Sharpe Ratio (assuming a risk-free rate of 2%).
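The three metrics named above can be sketched as follows. The definitions are assumptions rather than the study's exact formulas: Win Rate is taken as the share of profitable trading days, ROI as total profit over a constant trading capital, and the Sharpe Ratio is annualised over 252 trading days using the sample standard deviation. The function name and the PnL figures are hypothetical.

```python
import math
import statistics


def trading_metrics(daily_pnl, capital, risk_free=0.02, periods=252):
    """Summarise a daily strategy; every definition here is an assumption."""
    returns = [pnl / capital for pnl in daily_pnl]
    # Win Rate: share of trading days that closed with a profit
    win_rate = sum(pnl > 0 for pnl in daily_pnl) / len(daily_pnl)
    # ROI: total profit relative to the constant trading capital
    roi = sum(daily_pnl) / capital
    # Sharpe Ratio: mean daily excess return over its sample standard
    # deviation, annualised by the square root of the number of periods
    excess = [r - risk_free / periods for r in returns]
    sharpe = statistics.mean(excess) / statistics.stdev(excess) * math.sqrt(periods)
    return {"win_rate": win_rate, "roi": roi, "sharpe": sharpe}


# Made-up daily profit-and-loss figures, for illustration only
example = trading_metrics([1200.0, -800.0, 1500.0, -300.0, 900.0], capital=100_000.0)
```

A strategy can score well on Win Rate yet poorly on the Sharpe Ratio if its losing days are large, which is why the metrics are reported together.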

The empirical results and comparative analysis across models are presented in the following sections.

As shown in Table 7, the SSA-CNN-LSTM model with a window length of 252 achieves the highest Sharpe Ratio, reaching a value of 1.878. Notably, this model also generates over $3.3 million in cumulative return, based on a daily trading capital of $2 million, significantly outperforming the other models. The SSA-LSTM model (also with a window length of 252) demonstrates similarly strong performance, recording the highest Win Rate and total Dollar Gain among all tested strategies.

Interestingly, while SSA-CNN (window length = 63) and the standard CNN model exhibit relatively higher predictive accuracy in terms of MSE and MAE, their trading strategy performance is notably weaker. In fact, the CNN model results in a substantial financial loss. A closer look at Win Rate and Dollar Loss further underscores that SSA-LSTM and SSA-CNN-LSTM outperform both CNN and SSA-CNN models in risk-adjusted returns. Although SSA-CNN performs comparably to the other two SSA-DL models in terms of total Dollar Gain, its high Dollar Loss reduces its final ROI and Sharpe Ratio.

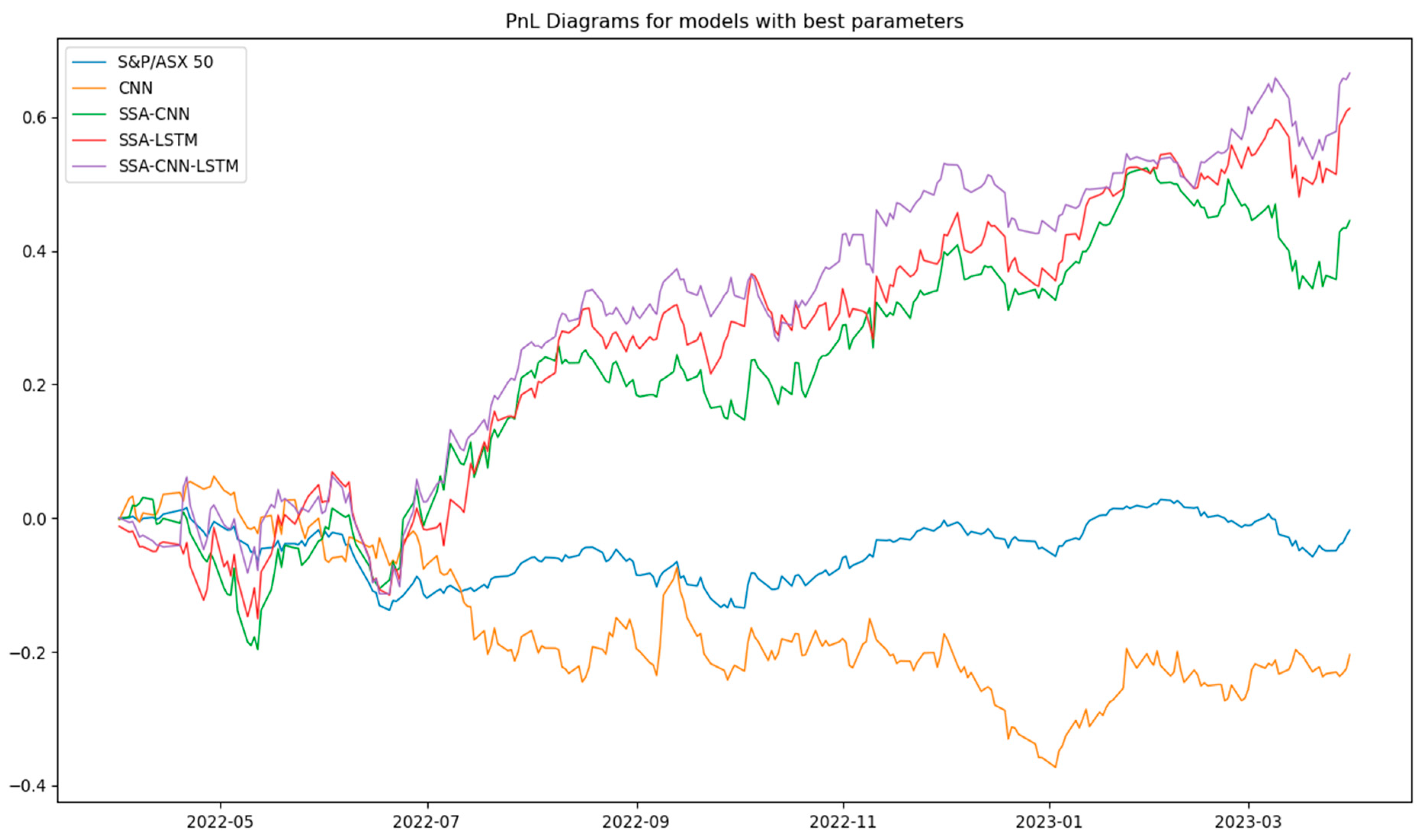

The fact that the two best-performing trading strategies are based on models with a window length of 252 supports the empirical validity of the guideline L = N/4 for selecting the SSA window length. To illustrate these findings visually, Figure 5 presents the PnL (Profit and Loss) curves for CNN and the best-performing models from each SSA-DL variant, compared with the S&P/ASX50 index [45].

The results reveal that the strategies built using SSA-DL models significantly outperform the market baseline. During the one-year evaluation period, the S&P/ASX50 index declined from 7254 to 7049, representing a −2.83% return. Despite the overall market downturn, the SSA-CNN-LSTM model achieved an ROI of 66.58%, while the SSA-LSTM and SSA-CNN models delivered ROIs of 61.28% and 44.51%, respectively (see Figure 5). Yan and Ling similarly integrated their forecasting results with quantitative investing principles and constructed a new strategy that achieved better returns on twelve selected American financial stocks [46]. Together, these results support the practical value of incorporating SSA-DL models into the design of trading strategies, which generated substantial alpha even in bearish market conditions.
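As a check on the benchmark figure quoted above, the index return follows directly from the two index levels, and the gap to the best strategy is the simple difference between the two returns (a plain arithmetic comparison, not a risk-adjusted one):

$$
R_{\text{index}} = \frac{7049 - 7254}{7254} \approx -2.83\%, \qquad \text{ROI}_{\text{SSA-CNN-LSTM}} - R_{\text{index}} \approx 66.58\% - (-2.83\%) = 69.41 \text{ percentage points}.
$$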

To further investigate the trading behavior of the proposed models, we randomly selected one month, covering all trading days from 3 January to 31 January, for a detailed performance review. The results, summarized in Table 8, reveal that during this month, the SSA-CNN model with a window length of 504 achieved the highest cumulative Dollar Gain. Notable differences in stock selection can also be observed among the three models. Interestingly, SSA-LSTM (252) and SSA-CNN-LSTM (252) exhibit greater overlap in daily stock picks, suggesting a similarity in their portfolio construction approach, in contrast to SSA-CNN (504), which tends to diverge significantly in its choices.

Additionally, the analysis shows that on several consecutive trading days, the models, particularly SSA-LSTM and SSA-CNN-LSTM, generate portfolios with unchanged or minimally rotated stock selections. This consistency implies that frequent rebalancing is not always necessary, and avoiding unnecessary portfolio turnover could reduce transaction costs, thereby enhancing real-world returns beyond what is reflected in the back-testing results.
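The overlap between two models' daily picks and the day-to-day rotation within one model can be quantified with simple set measures. The sketch below uses the Jaccard index for overlap and the share of newly added stocks for turnover. Both measures are illustrative choices rather than the study's method, and the tickers are hypothetical placeholders.

```python
def pick_overlap(picks_a, picks_b):
    """Jaccard index of two stock selections: shared picks over all picks."""
    a, b = set(picks_a), set(picks_b)
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def daily_turnover(previous, current):
    """Share of today's picks that were not held on the previous day."""
    prev, curr = set(previous), set(current)
    return len(curr - prev) / len(curr) if curr else 0.0


# Hypothetical selections on two consecutive days
day1 = ["AAA.AX", "BBB.AX", "CCC.AX"]
day2 = ["BBB.AX", "CCC.AX", "DDD.AX"]

overlap = pick_overlap(day1, day2)      # two shared picks out of four distinct
rotation = daily_turnover(day1, day2)   # one new pick out of three
unchanged = daily_turnover(day1, day1)  # identical portfolio, no rotation
```

A turnover of zero on consecutive days marks the cases in which rebalancing, and its transaction cost, could be skipped.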

A particularly noteworthy observation is that the SSA-LSTM model selects PLS.AX (an Industrial sector stock) 90 times over the course of the year, resulting in substantial representation of the Industrial and Infrastructure sectors within its portfolio. This consistent preference may indicate the model’s sensitivity to long-term cyclical patterns or strong predictive signals inherent in the stock’s behavior.

To examine sectoral tendencies, Table 9 reports the annual frequency of stock selections made by each model. Relative to the SSA-DL models, the standard CNN model exhibits a pronounced bias towards the Industrial and Infrastructure sectors, while underrepresenting stocks from the Consumer Services, Financials, Healthcare, Technology, and Utilities sectors. In contrast, the SSA-DL models achieve a more balanced sectoral distribution, thereby providing improved diversification.
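A sector-frequency tabulation of this kind can be sketched as a simple count over daily selections. The ticker-to-sector mapping and the picks below are hypothetical placeholders, not data from the study.

```python
from collections import Counter


def sector_counts(daily_picks, sector_of):
    """Count how often each sector appears across all daily selections."""
    counts = Counter()
    for picks in daily_picks:
        counts.update(sector_of[ticker] for ticker in picks)
    return counts


def sector_shares(counts):
    """Convert raw selection counts into shares of all selections."""
    total = sum(counts.values())
    return {sector: n / total for sector, n in counts.items()}


# Hypothetical mapping and two days of picks
sector_of = {"AAA.AX": "Industrial", "BBB.AX": "Financials", "CCC.AX": "Healthcare"}
picks = [["AAA.AX", "BBB.AX"], ["AAA.AX", "CCC.AX"]]

counts = sector_counts(picks, sector_of)
shares = sector_shares(counts)
```

Comparing the resulting shares across models gives a direct view of how concentrated or diversified each model's selections are.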

8. Conclusions

This study proposed a novel framework that combines Singular Spectrum Analysis (SSA) with deep learning algorithms to predict stock prices of companies listed on the ASX50 index. The primary objective was to reduce noise in stock price time series, extract meaningful trend and periodicity components, and enhance the accuracy of stock price forecasts. By improving prediction reliability, the proposed approach offers practical guidance for stock selection and portfolio construction in dynamic financial markets. Future work should extend the evaluation of the CNN, LSTM, CNN-LSTM and SSA-CNN-LSTM models beyond the current dataset to a wide range of financial markets with diverse structural characteristics, including major equity indices such as the S&P 500 (United States), Nikkei 225 (Japan), and ASX200 (Australia), as well as the foreign exchange market (e.g., EUR/USD, USD/JPY), commodity markets (e.g., gold, crude oil), and cryptocurrency markets (e.g., Bitcoin, Ethereum). These markets differ substantially in volatility dynamics, liquidity levels, trading mechanisms, and information efficiency, and would therefore provide a robust platform for validating the generality and adaptability of the proposed approach.

To evaluate the models’ performance, we employed Mean Squared Error (MSE) and Mean Absolute Error (MAE) as forecasting accuracy metrics and further validated the models through back-tested trading strategies. Results demonstrated that SSA effectively filtered noise and isolated underlying market patterns, enabling deep learning models, specifically CNN, LSTM, and hybrid CNN–LSTM architectures, to generate more stable and accurate predictions. Among these, the SSA–CNN–LSTM model with a window length of 252 achieved the best overall performance, yielding a 66.58% return on investment (ROI) and a Sharpe Ratio of 1.88.

While low-variance SSA components are treated as noise for the current daily/multi-day forecasting horizon, future studies could investigate their potential predictive value for ultra-short-term or intraday strategies, capturing high-frequency market microstructure effects.

These findings have several practical implications. For investors and portfolio managers, the SSA–DL framework provides a data-driven method to improve timing and selection of trades, particularly by filtering out short-term market noise that can distort traditional technical signals. For quantitative analysts and financial engineers, the integration of SSA with deep learning offers a promising pathway for developing robust forecasting engines capable of adapting to volatile, non-stationary financial environments. For trading strategy developers, the study demonstrates how preprocessing techniques can enhance the performance of deep learning-based algorithmic trading systems, leading to superior risk-adjusted returns compared to benchmark models and market indices.

The research also underscores the importance of model configuration, as key hyperparameters such as SSA window length and contribution criteria significantly influenced predictive performance.