4.1. Algorithm Description

This section introduces QiMARL (Algorithm 1), a method for energy optimization that combines quantum variational optimization with reinforcement learning principles. The algorithm runs iteratively over several epochs with the objective of optimizing energy distribution using quantum computing methods.

The procedure begins with the initialization of a quantum circuit of n qubits and the selection of hyperparameters. A synthetic dataset modeled on real-time demand–supply distributions is generated, with each instance recording the power supply and power demand at time step t. A set of entanglement patterns is also defined to specify the available quantum connectivity structures. The model keeps a reward history and a convergence-time history to monitor learning.
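As an illustration of the dataset step, the following minimal sketch generates supply and demand pairs indexed by time step. The sinusoidal demand profile, the noise levels, and the function name `make_synthetic_dataset` are assumptions made for this example only; the generating model actually used is the one given by Equations (1) and (2).

```python
import math
import random

def make_synthetic_dataset(num_steps, seed=0):
    """Return a list of (supply, demand) pairs, one per time step t."""
    rng = random.Random(seed)
    data = []
    for t in range(num_steps):
        # Assumed daily cycle: demand peaks once every 24 time steps.
        demand = 50.0 + 20.0 * math.sin(2 * math.pi * t / 24) + rng.gauss(0, 2.0)
        # Assumed supply: roughly constant with independent noise.
        supply = 55.0 + rng.gauss(0, 5.0)
        # Clip at zero so that power values stay physically meaningful.
        data.append((max(supply, 0.0), max(demand, 0.0)))
    return data

dataset = make_synthetic_dataset(48)
```

Fixing the seed keeps the dataset reproducible across epochs, which makes reward curves comparable between runs.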

Each epoch begins by adapting the learning rate with the decay function given in Equation (18), which is governed by a decay factor, to keep training stable. The algorithm integrates QAE and QMC for enhanced performance. The key formulations added to the algorithm are as follows:

Instead of using standard optimization techniques for VQE, we now use QAE to find optimal angles (Equations (7) and (8)).

QAE provides a quadratic speedup, reducing the number of function evaluations required to reach optimal parameters.

The convergence time is reduced due to fewer required sampling steps.
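The learning-rate adaptation performed at the start of each epoch can be sketched as below. An exponential decay is assumed here purely for illustration; the exact form is the one defined by Equation (18), and the names `decayed_learning_rate`, `initial_lr`, and `decay_factor` are hypothetical.

```python
def decayed_learning_rate(initial_lr, decay_factor, epoch):
    """Assumed exponential decay: lr = initial_lr * decay_factor ** epoch."""
    return initial_lr * decay_factor ** epoch

# Learning rates for the first five epochs with a decay factor of 0.95.
schedule = [decayed_learning_rate(0.1, 0.95, e) for e in range(5)]
```

With a decay factor below one, each epoch takes a slightly smaller step than the previous one, which is the stabilizing behavior described above.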

| Algorithm 1 Quantum-inspired multi-agent reinforcement learning for energy optimization |
| --- |
| 1: Initialize: Define a quantum circuit with n qubits and define hyperparameters. |
| 2: Generate synthetic energy dataset (Equations (1) and (2)). |
| 3: Define entanglement patterns (Equation (4)). |
| 4: Initialize reward history and convergence times. |
| 5: for each epoch do |
| 6: Update the learning rate (Equation (18)). |
| 7: Optimize VQE angles with QAE (Equations (7) and (8)). |
| 8: Select entanglement pattern using smoothed reward (Equation (5)). |
| 9: Initialize total reward and previous deficit. |
| 10: Parallel Quantum Batch Processing: |
| 11: for each batch in parallel do |
| 12: Prepare quantum circuit with Hadamard gates (Equation (3)). |
| 13: Apply entanglement gates based on the selected pattern (Equation (6)). |
| 14: Encode classical data into quantum states (Equation (9)). |
| 15: Apply rotation gates (Equation (10)). |
| 16: Measure quantum state and compute energy allocation via Quantum Monte Carlo (QMC) (Equation (11)). |
| 17: Compute local reward using QAE-enhanced estimation (Equation (12)). |
| 18: Update total reward (Equation (13)). |
| 19: Update previous deficit. |
| 20: end for |
| 21: Compute convergence time for each agent (Equation (14)). |
| 22: Compute global reward using QAE-based aggregation (Equation (15)). |
| 23: Update reward history. |
| 24: Update convergence times. |
| 25: Parallel Quantum Policy Updates: |
| 26: for each quantum agent i in parallel do |
| 27: Update policy parameters using quantum gradients (Equations (20)–(22)). |
| 28: end for |
| 29: end for |
| Return optimal policy and rewards. |

Here, Z_i represents the Pauli-Z measurements on the employed qubits, and the parameters w_i and v_ij are learned during training.

Based on a smoothed reward function, an entanglement pattern is dynamically selected to influence quantum state interactions (Equation (5)). During training, for each data sample, a quantum circuit is prepared by applying Hadamard gates (Equation (3)). Entanglement gates are then applied according to the selected pattern (Equation (6)).
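The circuit preparation described above can be mimicked classically for small n. The sketch below is a plain statevector simulation, not the authors' implementation: it applies a Hadamard gate to every qubit and then a list of CNOT gates. Representing an entanglement pattern as a list of `(control, target)` pairs is an assumption of this example, as are all function names.

```python
import math

def apply_hadamard(state, qubit):
    """Apply a Hadamard gate to one qubit of a real-valued statevector."""
    s = 1.0 / math.sqrt(2.0)
    new = state[:]
    mask = 1 << qubit
    for i in range(len(state)):
        if (i & mask) == 0:
            a, b = state[i], state[i | mask]
            new[i] = s * (a + b)
            new[i | mask] = s * (a - b)
    return new

def apply_cnot(state, control, target):
    """Flip the target bit of every basis state whose control bit is 1."""
    new = state[:]
    c, t = 1 << control, 1 << target
    for i in range(len(state)):
        if (i & c) and not (i & t):
            new[i], new[i | t] = state[i | t], state[i]
    return new

def prepare_circuit(n, pattern):
    """Start in |0...0>, apply H to all qubits, then the pattern's CNOTs."""
    state = [0.0] * (2 ** n)
    state[0] = 1.0
    for q in range(n):
        state = apply_hadamard(state, q)
    for control, target in pattern:
        state = apply_cnot(state, control, target)
    return state

# Hypothetical "linear chain" pattern on three qubits.
state = prepare_circuit(3, [(0, 1), (1, 2)])
```

On a uniform superposition a CNOT only permutes equal amplitudes, so the effect of the chosen pattern becomes visible once the data-dependent rotation gates have been applied.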

Classical energy data are encoded into quantum states using parameterized rotation gates, as given in Equation (9). After encoding, parameterized rotation gates are applied (Equation (10)). Quantum Monte Carlo is used instead of classical Monte Carlo to compute expected values of the quantum states (Equation (11)).
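To make the measurement step concrete, the sketch below estimates an energy allocation from sampled measurement outcomes. It uses ordinary classical sampling as a stand-in for QMC, and it assumes that the allocation is a weighted sum of single-qubit expectations (weights w_i) and pairwise correlations (weights v_ij). That form is inferred from the notation only and may differ from Equation (11); all function names are hypothetical.

```python
import random

def sample_bitstrings(probs, shots, seed=0):
    """Draw basis-state indices according to the measurement probabilities."""
    rng = random.Random(seed)
    return rng.choices(range(len(probs)), weights=probs, k=shots)

def z_value(index, qubit):
    """Pauli-Z eigenvalue: +1 if the qubit's bit is 0, -1 if it is 1."""
    return 1 - 2 * ((index >> qubit) & 1)

def estimate_allocation(samples, w, v):
    """Average of sum_i w_i Z_i + sum_{i<j} v_ij Z_i Z_j over the samples."""
    n = len(w)
    total = 0.0
    for idx in samples:
        z = [z_value(idx, q) for q in range(n)]
        total += sum(w[i] * z[i] for i in range(n))
        total += sum(v[i][j] * z[i] * z[j]
                     for i in range(n) for j in range(i + 1, n))
    return total / len(samples)

# Two qubits measured in state |00>: every Z eigenvalue is +1.
samples = sample_bitstrings([1.0, 0.0, 0.0, 0.0], shots=100)
allocation = estimate_allocation(samples, [0.5, 0.25], [[0.0, 0.1], [0.0, 0.0]])
```

With classical sampling the statistical error shrinks as the inverse square root of the number of shots, which is the cost that the quantum estimation step is meant to reduce.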

A local reward function evaluates the success of the allocation by punishing major mismatches between demand and supply while rewarding accurate predictions, as given by Equation (12). After processing all training samples, the total reward for the epoch is updated, and execution times for each agent are recorded as represented in Equation (14). The global reward is now computed using QAE for better aggregation (Equation (15)).
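A reward of the kind described above could look like the following sketch. The tolerance threshold, the penalty weight, and the piecewise form are assumptions chosen to show the "reward accurate allocations, punish large mismatches" behavior; the actual definition is Equation (12).

```python
def local_reward(allocation, demand, tolerance=0.1, penalty=1.0):
    """Hypothetical reward: positive near a match, negative for large gaps."""
    mismatch = abs(demand - allocation)
    scale = max(demand, 1e-9)  # guard against division by zero
    if mismatch <= tolerance * demand:
        # Accurate allocation: reward close to +1.
        return 1.0 - mismatch / scale
    # Major mismatch: penalty proportional to the relative error.
    return -penalty * mismatch / scale
```

For example, allocating 95 units against a demand of 100 falls within the 10% tolerance and yields a reward of about 0.95, whereas allocating 50 units yields a reward of -0.5.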

The quantum agents update their policy parameters in parallel, leveraging quantum gradients (Equations (20)–(22)).

Parallel updates improve computational efficiency, reducing per-epoch execution time.

Quantum gradients enhance the precision of parameter updates, leading to faster convergence.
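One common way to obtain quantum gradients is the parameter-shift rule, sketched below for a single rotation angle. Whether Equations (20)–(22) use exactly this rule is an assumption; the example takes a one-qubit circuit in which RY(theta) is applied to |0> and Z is measured, so the expectation value is cos(theta).

```python
import math

def expectation_z(theta):
    """<Z> after RY(theta) on |0>, which equals cos(theta)."""
    return math.cos(theta)

def parameter_shift_gradient(f, theta, shift=math.pi / 2):
    """Gradient from two shifted circuit evaluations (parameter-shift rule)."""
    return (f(theta + shift) - f(theta - shift)) / 2.0

def update_parameter(theta, learning_rate, f=expectation_z):
    """One gradient-ascent step, treating the expectation as the reward."""
    return theta + learning_rate * parameter_shift_gradient(f, theta)

grad = parameter_shift_gradient(expectation_z, 0.3)  # equals -sin(0.3)
```

Because each agent's update needs only its own two shifted evaluations, the updates are independent of one another and can run in parallel.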

Lastly, the best policy and cumulative rewards are returned as output, offering a high-performance quantum-inspired reinforcement learning platform for energy optimization. The technique exploits quantum entanglement, variational quantum optimization, and learning for adaptive improvements in decision-making in multi-agent energy management situations.

Table 1 lists the parameter notation for the employed QiMARL algorithm.

4.3. Time Complexity Analysis

The provided algorithm has several nested loops and quantum operations. We examine each of the major parts to find the overall time complexity.

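Outer Loop (Epochs): The outer loop executes once per epoch, so its cost grows linearly with the number of epochs.

Inner Loop (Training Data): For every epoch, the algorithm loops over the training dataset of size N, contributing $O(N)$ iterations per epoch.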

Quantum Circuit Preparation: For every training data point, the algorithm prepares a quantum circuit on n qubits:

Hadamard gates: $O(n)$;

Entanglement gates (CNOTs): $O(n^2)$;

Rotation gates: $O(n)$.

Hence, the preparation of quantum circuits takes $O(n^2)$ per iteration.
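The quadratic growth of the preparation cost can be checked by counting gates directly. The sketch below assumes one Hadamard and one rotation per qubit and, as a worst case, one CNOT for every pair of qubits (all-to-all entanglement); sparser patterns would use fewer CNOTs.

```python
def gate_counts(n):
    """Return (hadamards, cnots, rotations) for an n-qubit circuit."""
    hadamards = n
    cnots = n * (n - 1) // 2  # assumed worst case: every qubit pair
    rotations = n
    return hadamards, cnots, rotations

def preparation_cost(n):
    """Total gate count, dominated by the quadratic CNOT term."""
    return sum(gate_counts(n))

costs = {n: preparation_cost(n) for n in (4, 8, 16)}
```

Doubling n from 8 to 16 raises the count from 44 to 152 gates, consistent with the CNOT term dominating as n grows.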

Quantum Measurement and Computation: The algorithm measures the quantum state and calculates energy allocation:

Expectation values and weighted sum calculation take

per iteration.

Local Reward Computation: The computation of the reward function includes absolute differences and conditional checks, which require $O(1)$ operations per step.

Global Reward and Convergence Time Updates: Updating global rewards and execution times involves summing over all M agents, which costs $O(M)$ per epoch.

Total Time Complexity: Adding up all the parts, we have:

The algorithm scales quadratically with the number of qubits (n) and linearly with the dataset size (N) and the number of epochs. The number of agents M also contributes, but when its term is small compared with the others, it does not dominate the complexity.

Thus, the worst-case time complexity is:

QAE for VQE Angles: QAE speeds up estimation to a given accuracy. For a target estimation error $\epsilon$, it lowers the complexity from regular sampling's $O(1/\epsilon^2)$ to $O(1/\epsilon)$, resulting in quicker convergence.
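The size of this quadratic speedup is easy to tabulate. The sketch below compares the order-of-magnitude number of evaluations needed for a target error under classical sampling and under amplitude estimation. Constant factors are omitted, so the numbers indicate scaling only, and the function names are illustrative.

```python
import math

def classical_samples(epsilon):
    """Classical Monte Carlo: on the order of 1 / epsilon**2 samples."""
    return math.ceil(1.0 / epsilon ** 2)

def qae_queries(epsilon):
    """Amplitude estimation: on the order of 1 / epsilon oracle queries."""
    return math.ceil(1.0 / epsilon)

# Target error of 1/128 (a power of two keeps the arithmetic exact).
eps = 1.0 / 128
comparison = (classical_samples(eps), qae_queries(eps))
```

At this target error, the classical estimate needs on the order of 16,384 samples, while amplitude estimation needs on the order of 128 queries.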

Parallel Quantum Batch Processing: Rather than processing data sequentially, batches of training data are processed in parallel quantum circuits. Parallelism lowers the per-epoch processing time by roughly a factor of B, where B is the number of parallel batches.
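The batching structure can be sketched with a thread pool standing in for parallel quantum circuits. Here `process_batch` is a placeholder that simply sums a mismatch-based score; in the actual algorithm it would cover circuit preparation, measurement, and reward computation. The sketch assumes a non-empty dataset of `(supply, demand)` pairs.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_batches(data, num_batches):
    """Split data into at most num_batches contiguous chunks."""
    size = -(-len(data) // num_batches)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]

def process_batch(batch):
    """Placeholder for per-batch circuit execution and reward computation."""
    return sum(-abs(supply - demand) for supply, demand in batch)

def run_epoch(data, num_batches):
    """Process all batches concurrently and combine their rewards."""
    batches = split_into_batches(data, num_batches)
    with ThreadPoolExecutor(max_workers=num_batches) as pool:
        return sum(pool.map(process_batch, batches))

data = [(10, 8), (5, 5), (7, 9), (4, 1)]
total_reward = run_epoch(data, num_batches=2)
```

The combined reward does not depend on how the data are split, so increasing the number of batches changes only the wall-clock time, not the result.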

QMC for Energy Allocation: QMC is employed in place of traditional expectation value calculations to estimate energy allocations, achieving a quadratic speedup in the computation of expectation values.

QAE-Based Global Reward Computation: Rather than summing rewards directly, a QAE-based aggregation process is proposed, where QAE lowers the number of samples needed to accurately estimate rewards, enhancing computational efficiency.

Parallel Quantum Policy Updates: Policy updates for all the quantum agents now take place in parallel rather than sequentially. Per-agent update complexity is still the same, but the total update time is decreased by a factor that is a function of the number of available quantum processors.

The Final Time Complexity:

After the changes, with the addition of parallel processing and QMC:

If B (the number of parallel batches) is sufficiently large, the effective per-epoch cost drops substantially in realistic implementations.

The major gains therefore come from quantum parallelism and quantum-enabled estimation techniques, which reduce the per-epoch computational cost and improve scalability.

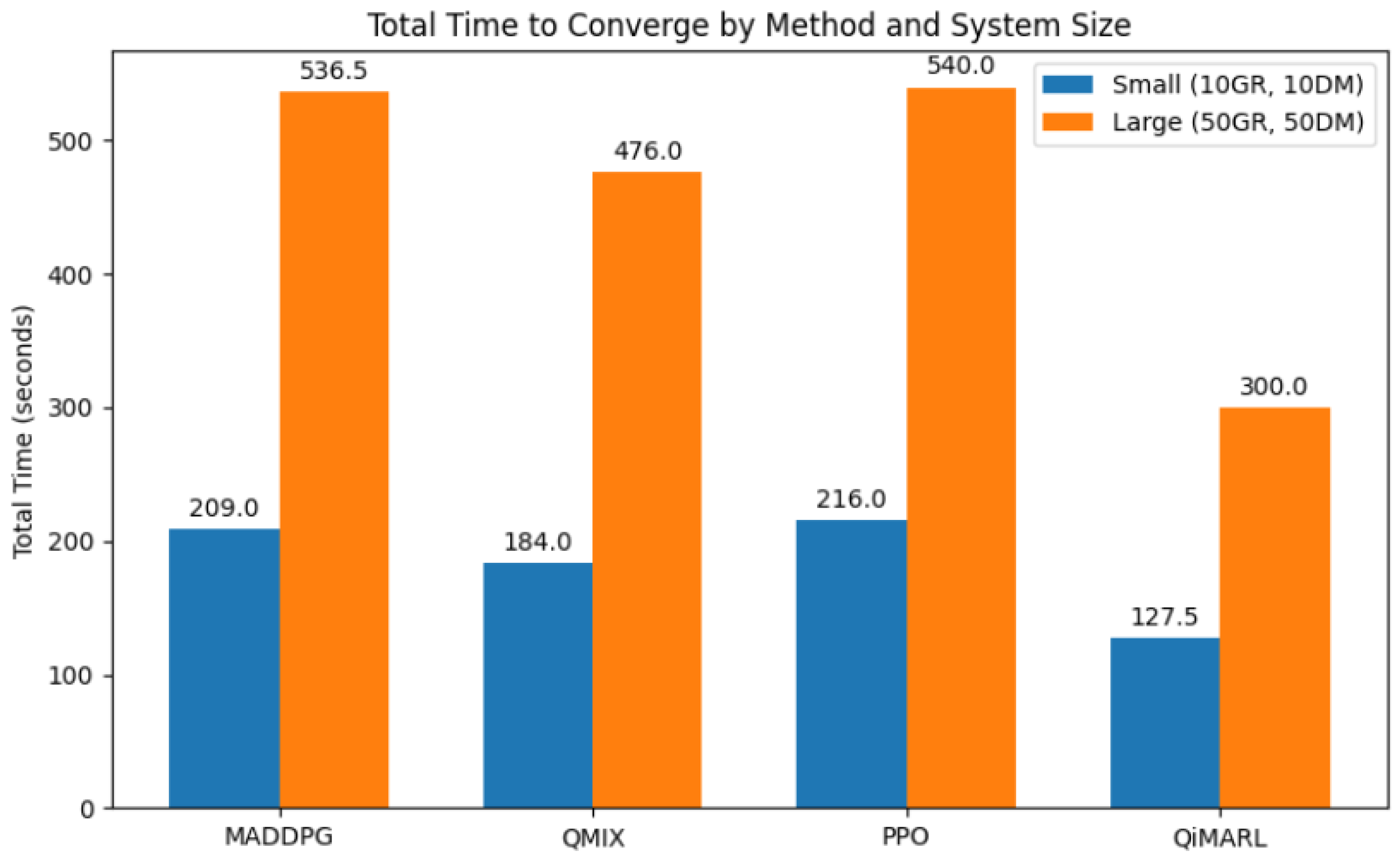

4.4. Scalability Analysis in Larger Systems

As the numbers of power generation stations and demand stations grow, the computational complexity of classical and quantum methods increases at different rates. This section discusses the scalability benefit of the proposed quantum-inspired multi-agent reinforcement learning (QiMARL) method.

4.4.1. Growth of the Quantum System

In QiMARL, the number of qubits required is given by

As the system scales, the quantum circuit size increases quadratically with respect to the number of stations.

The quantum circuit preparation involves the following:

Hadamard and rotation gates: $O(n)$ in the number of qubits n.

Entanglement operations: $O(n^2)$.

Expectation value computations: due to parallelized computation.

Thus, the overall computational complexity of QiMARL is given by

Despite its complexity, QiMARL uses quantum parallelism via QAE, allowing it to handle tenfold bigger state–action spaces than conventional techniques.

4.4.2. Growth of Classical Approaches

For classical multi-armed bandit (MAB) methods, the complexity depends on the number of agents, M, which typically grows with the number of stations.

In larger systems, traditional approaches have high computational overhead:

Greedy MAB suffers from poor power allocation because of insufficient exploration.

UCB MAB slows down because of its logarithmic scaling factor.

Thompson Sampling becomes infeasible because of its quadratic scaling.

For classical Multi-Agent Reinforcement Learning (MARL) methods, the computational complexity depends on the number of agents M, the state space size, and the action space size. In cooperative multi-agent energy allocation problems, the number of agents typically grows with the number of stations.

Scalability implications:

MADDPG becomes infeasible for large systems because of exponential scaling.

QMIX is more scalable than MADDPG but still struggles with a very large number of agents because of its full per-agent Q-function evaluation.

PPO scales better than MADDPG in theory but is slower in practice because of repeated policy optimization steps.

4.4.3. Justification for QiMARL’s Advantage in Large Systems

As the numbers of generation and demand stations increase, classical methods, although perhaps more efficient at small scales, do not scale well because their complexity is linear or quadratic in the number of power stations. QiMARL, on the other hand, uses quantum parallelism to search and optimize large-scale power networks effectively. It is therefore a better option for energy optimization in high-dimensional quantum settings.