Abstract

Accurate imputation of missing data in air quality monitoring is essential for reliable environmental assessment and modeling. This study compares two imputation methods, Random Forest (RF) and Bidirectional Recurrent Imputation for Time Series (BRITS), using data from the Mexico City air quality monitoring network (2014–2023). The analysis focuses on stations with less than 30% missing data and includes both pollutant (CO, NO, NO2, NOx, SO2, O3, PM10, PM2.5, and PMCO) and meteorological (relative humidity, temperature, wind direction, and wind speed) variables. Each station's data were split into 80% for training and 20% for testing, and 20% of the observed test values were artificially masked. Performance was assessed from two perspectives: local accuracy (MAE and RMSE) on the masked subsets and distributional similarity on the complete datasets (Two One-Sided Tests and Wasserstein distance). RF achieved lower errors on the masked subsets, whereas BRITS better preserved the overall distribution. Both methods struggled with highly variable features. On complete time series, BRITS produced more realistic imputations, while RF often generated extreme outliers. These findings demonstrate the advantages of deep learning for handling complex temporal dependencies and highlight the need for robust strategies for stations with extensive gaps. Improving imputation accuracy is crucial for better forecasting, trend analysis, and public health decision-making.

1. Introduction

Air pollution refers to environmental contamination by any chemical, physical, or biological agent that modifies the natural characteristics of the atmosphere. It is primarily caused by motor vehicles, industrial activity, forest fires, and household combustion devices. The major air pollutants include particulate matter (PM2.5 and PM10), carbon monoxide (CO), ozone (O3), nitrogen dioxide (NO2), and sulfur dioxide (SO2). Air pollution causes respiratory and cardiovascular diseases, among others, and is an important source of morbidity and mortality, shortening the average person's lifespan by 1 year and 8 months (World Health Organization (WHO), 2024 []). According to the State of Global Air Report 2024 [], air pollution accounted for more than 1 in 8 deaths globally in 2021 (8.1 million deaths), making it the second-leading risk factor for early death, surpassed only by high blood pressure. That year, more than 700,000 deaths in children under five were associated with air pollution, representing 15% of all global deaths in this age group. Furthermore, air pollution is a major risk factor for death from heart disease, diabetes, lung cancer, and other noncommunicable diseases (State of Global Air (SoGA) []). The World Health Organization estimated that 99% of the global population breathes air exceeding its guideline limits, with the highest exposures in low- and middle-income countries. Since many air pollutants are also major contributors to greenhouse gas emissions, reducing air pollution offers dual benefits, improving public health and supporting climate change mitigation efforts (WHO Air pollution []).

The Mexico City metropolitan area, with more than 22 million inhabitants, faces complex air pollution problems caused primarily by heavy vehicular traffic, industrial emissions, extensive urbanization, and the burning of fossil fuels (Kim et al., 2021 []; He et al., 2023 []).

This situation is aggravated by topographical factors that restrict atmospheric dispersion: Mexico City sits more than 2000 m above sea level and is surrounded by mountains on three sides. The high altitude also means naturally lower oxygen levels, which contribute to incomplete fuel combustion and, together with the poor dispersion, to the accumulation of both primary and secondary pollutants, including fine particulate matter (PM2.5), ozone (O3), and nitrogen dioxide (NO2). Although government initiatives (ProAire []) have led to improvements over the past decades, key pollutants continue to exceed guidelines at many monitoring stations across the city (He et al., 2023 []).

Effective air quality management relies on accurate environmental monitoring. Mexico City's atmospheric monitoring system [,] operates a network of monitoring stations that collect hourly data on pollutant concentrations (CO: carbon monoxide; NO: nitric oxide; NO2: nitrogen dioxide; NOx: nitrogen oxides, NO + NO2; SO2: sulfur dioxide; O3: ozone; PM10; PM2.5; and PMCO: coarse particulate fraction, PM10 − PM2.5) and meteorological parameters (RH: relative humidity; TMP: temperature; WDR: wind direction; and WSP: wind speed) to monitor air quality in real time.

However, missing data is a recurring issue, caused by sensor malfunctions, maintenance, power outages, and network transmission failures (Zhang & Zhou, 2024 []; Wang et al., 2023 []). These issues compromise the completeness and reliability of the dataset, obstructing subsequent analyses such as trend evaluation, forecasting, and epidemiological modeling (Hua et al., 2024 []). Imputing missing values in air quality time series is therefore essential to ensure data completeness and analytical robustness (Alkabbani et al., 2022 []).

While traditional imputation methods are limited in capturing the complex spatio-temporal dependencies inherent in environmental data, recent advances in machine and deep learning have opened promising avenues (see Table 1). Multiple strategies have been developed to handle missing data in atmospheric monitoring systems. Traditional methods, such as mean/median substitution or linear interpolation, are simple but often fail in complex, multivariate contexts with non-linear dependencies or temporal structure (Wang et al., 2023 []). More advanced statistical approaches, such as Multivariate Imputation by Chained Equations (MICE) and regression-based methods, offer improvements but are sensitive to assumptions about the data distribution (Hua et al., 2024 []).

Machine learning techniques, including k-nearest neighbors (KNN), support vector regression (SVR), and decision trees, have shown enhanced accuracy, especially when capturing local or spatial patterns (Zhang & Zhou, 2024 []; Wang et al., 2023 []; Hua et al., 2024 []; Camastra et al., 2022 []). Hybrid and ensemble models have also emerged to better exploit spatio-temporal correlations (He et al., 2023 []; Wang et al., 2023 []).

In recent years, deep learning has become central to missing data imputation in air quality research. RNN-based models such as Gated Recurrent Unit-Decay (GRU-D; Che et al., 2018 []) and Bidirectional Recurrent Imputation for Time Series (BRITS; Cao et al., 2018 []) leverage temporal dependencies and trainable masking strategies, significantly improving performance on time series with irregular missingness. Generative models have also been applied for their ability to reconstruct missing values more realistically. Among them, Generative Adversarial Imputation Nets (GAIN; Yoon et al., 2018 []) introduces adversarial training into the imputation task, improving robustness across diverse datasets. A recent review by Shahbazian and Greco (2023) [] surveys several imputation methods based on Generative Adversarial Networks (GANs), covering applications in healthcare, finance, meteorology, and air quality, and highlights the strengths of these models in preserving statistical distributions and generating more realistic imputations than traditional techniques. Other novel architectures, such as the Graph Recurrent Imputation Network (GRIN; Cini et al., 2021 []), a graph neural network imputation method, and Self-Attention-Based Imputation for Time Series (SAITS; Du et al., 2023 []), a transformer-based model, further expand the tools for handling missing data in structured environmental datasets. Spatially aware methods such as GRIN have shown promising results, particularly in settings with strong spatial interconnectivity between stations. GRIN exploits spatial correlations between monitoring stations by combining temporal modeling with graph convolutional layers, typically relying on spatial adjacency matrices based on geographical or statistical proximity.

A brief literature review of recent studies on air quality data imputation and prediction is presented below. Table 1 summarizes the key contributions in the field, with a focus on datasets, modeling techniques, study targets, and main findings.

Colorado Cifuentes & Flores Tlacuahua (2021) [] proposed a deep learning approach for short-term air pollution forecasting in Monterrey, Mexico, using hourly data (2012–2017) of eight pollutants and seven meteorological variables from 13 monitoring stations. Seasonal decomposition was applied to impute missing values, and a feedforward deep neural network (DNN) was trained to predict O3, PM2.5, and PM10 concentrations up to 24 h in advance. A key limitation identified was the reduced accuracy of the model in predicting extreme pollution events.

Kim et al. (2021) [] developed an interpretable deep learning model based on the Neural Basis Expansion Analysis for interpretable Time Series (N-BEATS) architecture to impute minute-level PM2.5 and PM10 concentrations using data from 24 stations in Guro-gu and 42 stations in Dangjin-si, South Korea. Real missingness rates of 7.91% and 16.1% were reported, with an additional 20% of data artificially removed for testing. N-BEATS outperformed baseline techniques (mean, spatial average, and MICE) and enabled interpretability through trend, seasonality, and residual components. However, it showed limitations in capturing long-term seasonal trends due to the use of fixed-period Fourier components.

Alahamade & Lake (2021) [] evaluated six imputation strategies using hourly pollutant data (PM10, PM2.5, O3, and NO2) from the UK's Automatic Urban and Rural Network (AURN) for the period 2015–2017. Their approach involved three groups of models: (1) clustering-based (CA: cluster average, CA+ENV: average within the same station type, CA+REG: average within the same region) using multivariate time series (MVTS) to group stations by temporal similarity; (2) spatial proximity-based (1NN: nearest station; 2NN: two nearest stations); and (3) ensemble: the median of the outputs of all five methods. The ensemble model performed best for O3, PM10, and PM2.5, while CA+ENV outperformed the others for NO2, owing to the high spatial variability of this pollutant. Performance depended on pollutant and station type, and extreme values were slightly under- or overestimated.

He et al. (2023) [] developed a predictive modeling framework for daily NO2 concentrations in Mexico City by integrating ground-based observations (RAMA: air quality monitoring network in Mexico City), satellite observations (OMI: Ozone Monitoring Instrument; TROPOMI: TROPOspheric Monitoring Instrument), and meteorological data (CAMS: Copernicus Atmosphere Monitoring Service). Missing values were imputed using the MissForest algorithm. Random Forest and XGBoost outperformed the Generalized Additive Model (GAM), demonstrating the value of hybrid data sources for spatio-temporal NO2 estimation.

Wang et al. (2023) [] developed BRITS-ALSTM, a hybrid deep learning model that integrates BRITS with an attention-based long short-term memory (LSTM) decoder. The model was applied to hourly air quality data (2019–2022) from 16 monitoring stations in the alpine regions of China, covering six pollutants (PM2.5, PM10, O3, NO2, SO2, and CO). Both real missingness (5–22%) and artificial gaps (30%) were considered. BRITS-ALSTM consistently outperformed classical and deep learning baselines, showing strong performance across pollutants and various missing data patterns.

Zhang and Zhou (2024) [] proposed TMLSTM-AE, a hybrid deep learning model that combines LSTM, autoencoder architecture, and transfer learning to impute missing values in the PM2.5 time series from Xi’an, China, characterized by single, block, and long-interval missing patterns. Artificial missingness ranging from 10% to 50% was introduced to evaluate performance. TMLSTM-AE was compared with both classical and deep learning approaches. TMLSTM-AE outperformed all baselines, especially in scenarios with long and consecutive missing gaps, demonstrating its capacity to capture spatio-temporal dependencies.

Hua et al. (2024) [] evaluated several traditional imputation methods (mean, median, k-nearest neighbors imputation (KNNI), and MICE) and deep learning models (SAITS, BRITS, MRNN, and a transformer) using six real-world air quality datasets from Germany, China, Taiwan, and Vietnam. The study introduced artificial missingness ranging from 10% to 80%. Results showed that SAITS and BRITS achieved the lowest imputation errors, while KNNI performed well on large datasets with high missing rates (up to 80%). In contrast, MICE was more effective on smaller datasets but incurred higher computational costs. The transformer-based model underperformed in most scenarios. The authors concluded that no single method is universally optimal; performance depends on dataset size, missingness rate, and application context.

Table 1.

Summary of recent studies on air quality data imputation and prediction.

| Reference | Datasets | Input Variables and Missing Rate | Techniques | Target | Key Findings |
|---|---|---|---|---|---|
| Kim et al. (2021) [] | Minute-level air quality data (2020–2021) from South Korea: Guro-gu (24 stations) and Dangjin-si (42 stations). | PM2.5 and PM10 concentrations. Missing Rate (Real/Artificial): 7.91–16.1%/20%. | A novel N-BEATS deep learning model with interpretable blocks (trend, seasonality, and residual) was compared with baseline methods (mean, spatial average, and MICE). | Imputation of missing PM2.5 and PM10 values. | The N-BEATS model outperforms traditional methods and allows interpretability via component decomposition but struggles with long-term seasonal patterns due to fixed-period Fourier terms. |
| Alahamade and Lake (2021) [] | Hourly pollutant data (PM10, PM2.5, O3, and NO2) of the Automatic Urban and Rural Network (AURN) from the UK (2015–2017), covering 167 stations of different types (urban, suburban, rural, roadside, and industrial). | Concentrations of PM10, PM2.5, O3, and NO2. Missing Rate (Real/Artificial): Not quantified (some pollutants entirely missing at certain stations)/Not applied. | Evaluated models: (1) Clustering based on multivariate time series similarity (CA, CA+ENV, and CA+REG); (2) Spatial methods using the 1 or 2 nearest stations (1NN and 2NN); (3) Ensemble method: median of all five approaches. | Imputation of missing PM10, PM2.5, O3, and NO2 using temporal and spatial similarity. | The ensemble method performed best for O3, PM10, and PM2.5, CA+ENV for NO2. Performance depended on the pollutant and station type. MVTS clustering allowed full imputation; extremes were slightly under- or overestimated. |
| Wang et al. (2023) [] | Hourly air quality data (2019–2022) from 16 stations in Qinghai Province and Haidong City, China. | Multivariate time series of six pollutants (PM2.5, PM10, O3, NO2, SO2, and CO). Missing Rate (Real/Artificial): 5–22%/30%. | BRITS-ALSTM (BRITS encoder + LSTM with attention) was compared to Mean, KNN, MICE, MissForest, M-RNN, BRITS, and BRITS-LSTM. | Imputation of missing pollutant (PM2.5, PM10, O3, NO2, SO2, and CO) data with high and irregular missing rates. | BRITS-ALSTM outperformed baselines for all pollutants and missing patterns. |
| He et al. (2023) [] | Air quality and meteorological data from Mexico City (2005–2019; 42 stations), combined with satellite data from OMI and TROPOMI and reanalysis data from CAMS. | Daily NO2 concentrations, wind speed/direction, temperature, cloud coverage, and satellite-based NO2 columns. Missing Rate (Real/Artificial): Not quantified/Not simulated. | Comparative modeling using RF, XGBoost, and GAM. Missing values were imputed using Random Forest. | Predict daily NO2 surface concentrations in Mexico City. | XGBoost and RF outperformed GAM. The model integrated hybrid sources (ground, satellite, and meteorological) to improve NO2 prediction. |
| Colorado Cifuentes and Flores Tlacuahua (2020) [] | Hourly air quality and meteorological data (2012–2017) from 13 monitoring stations in the Monterrey Metropolitan area, Mexico. | Pollutants and meteorological variables over a 24-h window. Missing Rate (Real/Artificial): <25%/Not simulated. | A deep neural network (DNN). Missing values were imputed using interpolation for non-seasonal time series. | 24-h ahead prediction of O3, PM2.5, and PM10. | The DNN model achieved good predictive accuracy for all target pollutants, and the imputation process preserved model performance. |
| Hua et al. (2024) [] | Six real-world hourly datasets from Germany, China, Taiwan, and Vietnam. The datasets include ~15,000 to 1.2 million samples. | Pollutant and meteorological variables. Missing Rate (Real/Artificial): 0–42%/10–80%. | Mean, median, KNN, MICE, SAITS, BRITS, MRNN, and transformer. | Evaluate the impact of different imputation strategies on the performance of air quality forecasting models aimed at predicting 24-h concentrations of AQI, PM2.5, PM10, CO, SO2, and O3. | SAITS achieved the highest accuracy, followed by BRITS. KNN performed well on large datasets with high missing rates. MICE was effective on smaller datasets but was slower. The transformer model performed worse than the top methods. |
| Zhang and Zhou (2024) [] | PM2.5 time series (2018–2020; 225 stations) from Xi'an, China, with single, block, and long-interval missing patterns. | PM2.5 data from multiple stations, with spatio-temporal dependencies. Missing Rate (Real/Artificial): <1%/[10%, 20%, 30%, and 50%]. | TMLSTM-AE was compared to KNN, SVD, ST-MVL, LSTM, and DAE. | Impute complex missing patterns in single-feature PM2.5 time series using spatio-temporal dependencies. | TMLSTM-AE outperforms traditional and baseline methods, especially on long and block missing data. |

1NN: one nearest neighbor; 2NN: two nearest neighbors; BRITS: bidirectional recurrent imputation for time series; BRITS-ALSTM: BRITS encoder + LSTM decoder with attention mechanism; BRITS-LSTM: BRITS encoder + LSTM decoder (Seq2Seq); CA: cluster average; CA+ENV: cluster average within the same station type (environmental category); CA+REG: cluster average within the same region; CAMS: global atmospheric reanalysis data; DAE: deep autoencoder; DNN: deep neural network; GAM: generalized additive model; KNN: k-nearest neighbors; LSTM: long short-term memory; MICE: multivariate imputation by chained equations; MRNN: multi-directional recurrent neural network; MVTS: multivariate time series; N-BEATS: neural basis expansion analysis for interpretable time series forecasting; OMI: satellite sensor for atmospheric pollutants; RAMA: air quality monitoring network in Mexico City; RF: random forest; SAITS: self-attention-based imputation for time series; ST-MVL: spatio-temporal multi-view learning; SVD: singular value decomposition; TMLSTM-AE: deep model combining LSTM, autoencoder, and transfer learning; TROPOMI: satellite instrument for tropospheric pollution; XGBoost: extreme gradient boosting.

As summarized in Table 1, recent studies have increasingly adopted deep learning techniques, such as RNNs, attention-based models, and hybrid architectures, for imputing air quality data, particularly under complex and irregular missingness patterns.

BRITS has demonstrated high performance in reconstructing missing values in multivariate and temporally structured data, using bidirectional recurrent neural networks and a learnable masking mechanism. While some studies in Mexico have explored statistical and machine learning approaches for air quality prediction, the application of deep learning-based imputation remains limited. Conversely, tree-based ensemble methods such as Random Forest have been widely used for imputation in environmental datasets due to their flexibility and ability to handle nonlinear relationships, although they do not explicitly model temporal structure.

The objective of this study is to compare the performance of BRITS, a state-of-the-art deep learning model, with Random Forest, a robust machine learning baseline, for imputing missing hourly observations in air quality time series from Mexico City, considering multiple pollutants (CO, NO, NO2, NOx, SO2, O3, PM10, PM2.5, and PMCO) and meteorological variables (RH, TMP, WDR, and WSP). This study aims to identify the strengths and limitations of each approach and to contribute to the development of more accurate and reliable strategies for environmental data imputation.

2. Materials and Methods

2.1. Database

2.1.1. Study Area

Mexico City, the capital of Mexico and the core of its largest metropolitan area, lies more than 2000 m above sea level and is surrounded by mountains on three sides. In 2020, the city had a population of approximately 9.2 million, while the Valley of Mexico Metropolitan Area, which comprises Mexico City and its adjacent suburban areas, had 21.8 million inhabitants, with projections estimating 22.75 million by 2025 []. The city covers an area of 1494.3 km² and faces persistent air quality challenges due to its topography, urban density, and emission sources.

2.1.2. Data Collection and Integration

This study uses hourly data from the Mexico City Atmospheric Monitoring Network (Red Automática de Monitoreo Atmosférico, RAMA []), comprising air pollutants (PM2.5, PM10, PMCO, NO, NO2, NOx, SO2, CO, and O3) and meteorological variables (temperature, relative humidity, wind speed, and wind direction). Data were collected from 1 January 2014 to 31 December 2023, covering both the dry and rainy seasons.

Annual files for each variable were loaded, concatenated, and transformed into a long format with standardized columns. Date and hour were combined into a single datetime column. All variables were then merged into a unified multivariate time series, aligned by monitoring station and timestamp. Missing values originally coded as -99 were replaced with NaN.
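The integration steps above can be sketched with pandas as follows. This is a minimal illustration, not the authors' script: the column names `FECHA` and `HORA`, the wide one-column-per-station layout, and the toy values are assumptions standing in for the actual RAMA annual files.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-ins for two annual files (one per variable).
# Assumed layout: FECHA (date), HORA (hour 1-24), one column per station.
co_2014 = pd.DataFrame({
    "FECHA": ["2014-01-01", "2014-01-01"],
    "HORA": [1, 24],
    "MER": [0.9, -99],
    "UAX": [0.7, 0.6],
})
o3_2014 = pd.DataFrame({
    "FECHA": ["2014-01-01", "2014-01-01"],
    "HORA": [1, 24],
    "MER": [12.0, 15.0],
    "UAX": [-99, 9.0],
})

def to_long(frame: pd.DataFrame, variable: str) -> pd.DataFrame:
    """Melt one wide annual file into (datetime, station, variable, value) rows."""
    long = frame.melt(id_vars=["FECHA", "HORA"], var_name="station", value_name="value")
    # Combine date and hour into one datetime; hour 24 rolls over to 00:00 of the next day.
    long["datetime"] = pd.to_datetime(long["FECHA"]) + pd.to_timedelta(long["HORA"], unit="h")
    long["variable"] = variable
    return long[["datetime", "station", "variable", "value"]]

# Concatenate all variables (and, in practice, all years) into one long table.
long_all = pd.concat([to_long(co_2014, "CO"), to_long(o3_2014, "O3")], ignore_index=True)

# The sentinel -99 marks a missing reading; replace it with NaN.
long_all["value"] = long_all["value"].replace(-99, np.nan)

# Pivot into a multivariate series aligned by station and timestamp (NaNs are kept).
merged = long_all.pivot(index=["station", "datetime"], columns="variable", values="value")
```

Adding the hour as a timedelta, rather than parsing it as a clock time, keeps the sketch valid if a file labels midnight as hour 24 (an assumption about the raw format).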

2.1.3. Missing Data Patterns Analysis and Station Filtering

A comprehensive analysis of the percentage of missing data per variable and monitoring station revealed several significant patterns. While some stations exhibit relatively low missingness across all variables, many show critical data quality issues. Several stations, including LAA, COY, and TPN, have 100% missing data for most variables, making them unsuitable for time series modeling.

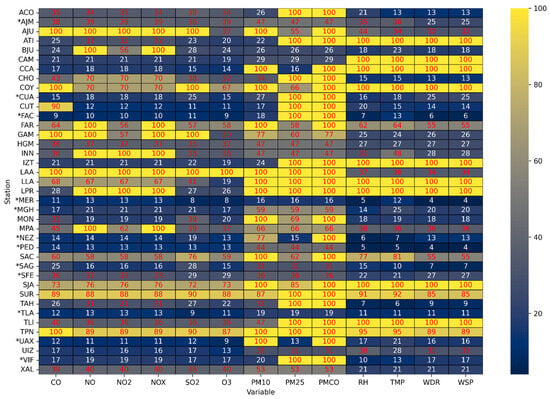

Additionally, PMCO, PM2.5, and PM10 consistently have 100% missing values across many stations. In contrast, pollutants such as CO, NO2, and O3 generally exhibit lower percentages of missing data across a wider range of stations, indicating relatively higher data availability. Figure 1 shows a heatmap of the missing data percentages for all monitoring stations included in the dataset. Variables with more than 30% missing data are shown in red, and those with 100% missing data are highlighted with a yellow background.

Figure 1.

Heatmap of missing data (%) by variable and monitoring station. Variables with more than 30% missing data are highlighted in red; stations marked with an asterisk (*) were selected for this study.

Because of the high proportion of missing values at many stations, a missingness threshold was applied to determine which stations and variables were included in the imputation procedure. Ten stations were selected, and at each of them only variables with less than 30% missing data were retained; variables exceeding this threshold were excluded to avoid unrealistic imputations caused by long sensor failures. This criterion affected stations such as MGH (59% missing in all PM variables), PED (44% in all PM variables), CUA (90% in CO and 100% in PM2.5 and PMCO), and NEZ (77% in PM10 and 100% in PMCO), where only the remaining variables were imputed.
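A minimal pandas sketch of the missingness table behind a heatmap such as Figure 1, and of the per-station 30% variable filter. The data are hypothetical toy values; the station codes are reused only as labels.

```python
import numpy as np
import pandas as pd

# Toy table (hypothetical values): one row per station and hour.
data = pd.DataFrame({
    "station": ["MER"] * 4 + ["NEZ"] * 4,
    "CO":   [0.8, 0.9, np.nan, 1.0, 0.5, 0.6, 0.7, 0.4],
    "PM10": [40.0, 42.0, 45.0, 41.0, np.nan, np.nan, np.nan, 50.0],
})

THRESHOLD = 30.0  # percent, as in the text

# Percentage of missing values per station (rows) and variable (columns).
missing_pct = data.drop(columns="station").isna().groupby(data["station"]).mean() * 100

# For each station, keep only the variables strictly below the threshold.
kept = {
    station: row[row < THRESHOLD].index.tolist()
    for station, row in missing_pct.iterrows()
}
```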

Additionally, two stations (SFE with 21–36% and AJM with 25–47% missingness) were incorporated as a methodological exploration to evaluate model robustness under higher levels of missing data.

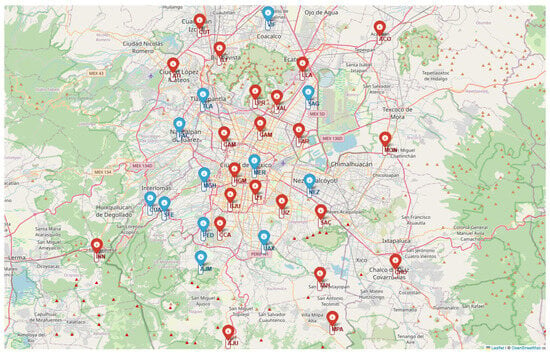

Figure 2 shows the RAMA monitoring network, with the selected stations highlighted in blue. Some variables were excluded from the selected stations when they exceeded the missing data threshold, for example PM10 at NEZ station and PMCO, PM2.5, and PM10 at UAX, FAC, and NEX stations, which had 100% missing values.

Figure 2.

Map of the Mexico City Atmospheric Monitoring Network. The stations selected for imputation are marked in blue.

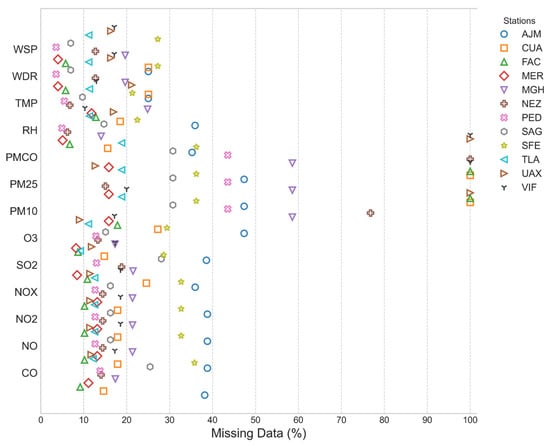

2.1.4. Missing Data Patterns in Selected Monitoring Stations

Figure 3 shows the total percentage of missing values per variable for each of the 12 selected monitoring stations (CUA, FAC, MER, MGH, NEZ, PED, SAG, TLA, UAX, VIF, SFE, and AJM). Missingness varies across stations and variables: although most variables have less than 30% missing data, the particulate matter variables (PM10, PM2.5, and PMCO) show very high or complete absence of data at some stations.

Figure 3.

Percentage of missing values for each variable across the selected stations.

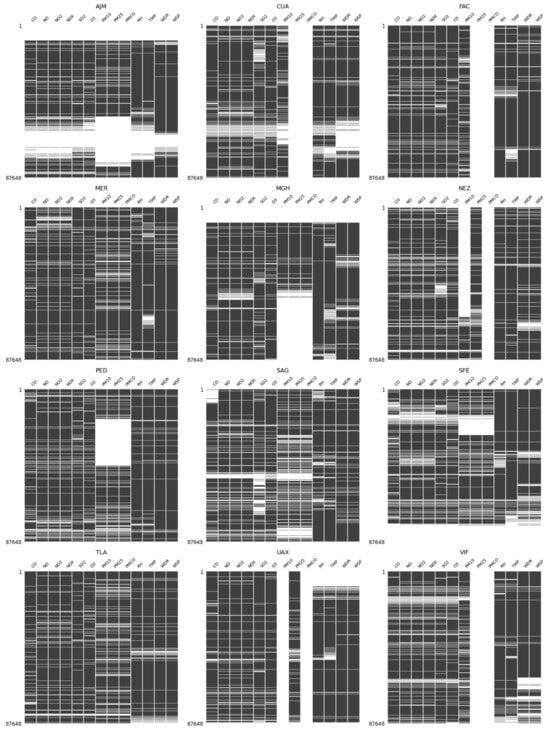

To better understand the temporal structure of missingness, nullity matrices were generated for each station. As illustrated in Figure 4, these visualizations reveal prolonged gaps in data across multiple variables, suggesting that the missing values are not randomly distributed but may be associated with systematic sensor failures.
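Prolonged gaps like those visible in the nullity matrices can be quantified by measuring runs of consecutive missing values. The sketch below shows one way to do this with pandas; the function `missing_runs` and the toy O3 series are hypothetical illustrations, not part of the study's pipeline.

```python
import numpy as np
import pandas as pd

def missing_runs(series: pd.Series) -> pd.DataFrame:
    """Return start, end, and length of each run of consecutive missing values."""
    is_na = series.isna()
    # A new run id starts every time the state switches between missing and observed.
    run_id = (is_na != is_na.shift(fill_value=False)).cumsum()
    runs = (
        series.index.to_series()[is_na]
        .groupby(run_id[is_na])
        .agg(["first", "last", "size"])
        .rename(columns={"first": "start", "last": "end", "size": "length"})
    )
    return runs.reset_index(drop=True)

# Hypothetical hourly O3 series with one 1-h gap and one 5-h gap.
idx = pd.date_range("2020-01-01", periods=12, freq=pd.Timedelta(hours=1))
o3 = pd.Series([10, np.nan, 12, 13, np.nan, np.nan, np.nan, np.nan, np.nan, 20, 21, 22],
               index=idx, dtype=float)
runs = missing_runs(o3)
```

Long runs concentrated in a few variables, as opposed to many isolated one-hour gaps, are what point to systematic sensor failures rather than random dropouts.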

Figure 4.

Temporal structure of missing data for the selected monitoring stations.

2.2. Model Training and Evaluation Pipeline

- Missing data identification: binary masks were created to identify real missing values in the dataset.

- Data splitting: each station’s dataset was divided into 80% for training and 20% for testing.

- Hyperparameter optimization: hyperparameter tuning was performed in sequential steps due to computational constraints.

- Artificial missingness for evaluation: to enable controlled performance assessment, 20% of the observed values in the test set were randomly removed under a Missing Completely At Random (MCAR) assumption. This masking allowed direct comparison between imputed and ground-truth values.

- Normalization: for the BRITS method, all variables were scaled to the [0, 1] range with MinMaxScaler, applied separately to the training and test datasets.

- Model Training and Evaluation: after hyperparameter optimization, models were retrained using the full training set and evaluated on the masked test set. Imputation performance was assessed using several evaluation metrics, including MAE, RMSE, Wasserstein distance, and TOST equivalence tests.
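A minimal NumPy sketch of the identification, splitting, and masking steps. It assumes a chronological 80/20 split and a station matrix with one row per hour and one column per variable; the text specifies neither the split order nor the random seed, so both are assumptions here, and the scaling step is omitted.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical station matrix: T hourly steps x D variables, with real gaps.
T, D = 100, 3
X = rng.normal(size=(T, D))
X[rng.random((T, D)) < 0.1] = np.nan

# 1) Binary mask of real observations (True = observed, False = missing).
observed_mask = ~np.isnan(X)

# 2) Split into 80% training and 20% test (chronological split assumed).
split = int(0.8 * T)
X_train, X_test = X[:split], X[split:]

# 3) Hide 20% of the *observed* test entries completely at random (MCAR).
obs_idx = np.argwhere(~np.isnan(X_test))
n_hide = int(round(0.2 * len(obs_idx)))
hide = obs_idx[rng.choice(len(obs_idx), size=n_hide, replace=False)]
artificial_mask = np.zeros(X_test.shape, dtype=bool)
artificial_mask[hide[:, 0], hide[:, 1]] = True

X_test_input = X_test.copy()
X_test_input[artificial_mask] = np.nan   # what the imputer sees
ground_truth = X_test[artificial_mask]   # held-out values used for MAE/RMSE
```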

2.3. Model Training

To assess imputation quality across different methodological paradigms, two models were implemented: a classical machine learning method (Random Forest) and a deep learning model (BRITS). These models provide contrasting approaches to reconstructing missing environmental data, ranging from ensemble-based predictions to recurrent architectures that can model temporal dependencies.

2.3.1. Random Forest (RF) Hyperparameter Optimization

The Random Forest Regressor offers a robust and interpretable solution for multivariate imputation. We used IterativeImputer from scikit-learn with a Random Forest estimator to iteratively predict missing values using the available features at each timestamp. This approach can model complex nonlinear dependencies and often achieves high accuracy.
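A minimal sketch of this setup with scikit-learn, on synthetic data. The hyperparameter values are placeholders, not the tuned values reported in Table 3. Each variable is regressed only on the other variables at the same timestamp, which is why this approach does not exploit temporal structure.

```python
import numpy as np
from sklearn.experimental import enable_iterative_imputer  # noqa: F401 (enables IterativeImputer)
from sklearn.ensemble import RandomForestRegressor
from sklearn.impute import IterativeImputer

rng = np.random.default_rng(0)

# Hypothetical hourly matrix with correlated columns (loosely NO, NO2, NOx).
n = 300
no = rng.gamma(2.0, 10.0, size=n)
no2 = 0.5 * no + rng.normal(0, 2, size=n)
nox = no + no2
X = np.column_stack([no, no2, nox])
X_missing = X.copy()
X_missing[rng.random(X.shape) < 0.2] = np.nan

# Round-robin imputation: each column is predicted from the other columns
# in the same row; no lagged or future values are used.
imputer = IterativeImputer(
    estimator=RandomForestRegressor(n_estimators=50, max_depth=10, random_state=0),
    max_iter=10,
    random_state=0,
)
X_imputed = imputer.fit_transform(X_missing)
```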

RF hyperparameter optimization was performed using a random search strategy, evaluating 35 randomly sampled configurations from the predefined grid of hyperparameters. The following hyperparameters were considered:

- n_estimators: number of trees in the ensemble.

- max_depth: maximum depth of individual trees.

- max_iter: number of iterations in the iterative imputation process (specific to IterativeImputer).

- min_samples_split: minimum number of samples required to split an internal node.

- min_samples_leaf: minimum number of samples required to be at a leaf node.

The default values and evaluated ranges for these hyperparameters are presented in Table 2, section (a).
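The random search can be sketched with scikit-learn's `ParameterSampler`. The grid values below are hypothetical, chosen only to span the ranges reported in Section 3.1; the actual grid is the one in Table 2(a). The helper `random_search` and the toy objective are illustrative: in practice the objective would fit the imputer with each configuration and return, for example, the MAE on artificially masked entries.

```python
import numpy as np
from sklearn.model_selection import ParameterSampler

# Hypothetical grid; max_iter goes to IterativeImputer, the rest to RandomForestRegressor.
PARAM_GRID = {
    "n_estimators": [10, 20, 50, 80],
    "max_depth": [None, 10, 20],
    "max_iter": [5, 10, 15],
    "min_samples_split": [2, 5, 10],
    "min_samples_leaf": [1, 2, 4],
}

def random_search(param_grid, objective, n_iter=35, seed=0):
    """Evaluate n_iter randomly sampled configurations and return the best one.

    `objective` maps a configuration dict to an error (lower is better).
    """
    configs = list(ParameterSampler(param_grid, n_iter=n_iter, random_state=seed))
    scores = [objective(cfg) for cfg in configs]
    best = int(np.argmin(scores))
    return configs[best], scores[best]

# Usage with a toy objective (hypothetical): favour more trees and smaller leaves.
best_config, best_score = random_search(
    PARAM_GRID, lambda cfg: cfg["min_samples_leaf"] - cfg["n_estimators"]
)
```

Because every grid entry is a list, `ParameterSampler` samples without replacement, so the 35 configurations are distinct.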

Table 2.

Random Forest and BRITS hyperparameter optimization details used in this study.

2.3.2. BRITS Hyperparameter Optimization

BRITS is a deep learning model designed specifically for data imputation in multivariate time series, without relying on linear dynamics or strong prior assumptions. It is based on a bidirectional recurrent architecture that processes time series data in both forward and backward directions using recurrent neural networks (RNNs), allowing the model to learn temporal dependencies from both past and future contexts (Cao et al. []).

Unlike traditional methods such as MICE, where missing values are pre-filled and treated as known inputs, BRITS treats missing values as latent variables embedded within the model’s computation graph. These are dynamically updated through backpropagation during training, enabling more accurate and context-aware imputations. As reported by Hua et al. [], BRITS has shown strong performance on air quality datasets by effectively capturing nonlinear and correlated missing patterns.
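To make the idea of embedding gaps in the recurrent computation more concrete, the NumPy sketch below computes two quantities defined by Cao et al. (2018): the time gap δ since each variable was last observed, and the temporal decay γ = exp(−max(0, W_γ δ + b_γ)) that shrinks the hidden state after long gaps. It is not a full BRITS implementation: the decay weights here are fixed elementwise values (a simplification; BRITS learns them), and the recurrent cells, history regression, and bidirectional consistency loss are omitted.

```python
import numpy as np

def time_gaps(mask: np.ndarray, timestamps: np.ndarray) -> np.ndarray:
    """Time since each variable was last observed (delta in Cao et al., 2018).

    mask: (T, D) array, 1 = observed, 0 = missing.
    timestamps: (T,) increasing observation times s_t.
    """
    T, D = mask.shape
    delta = np.zeros((T, D))
    for t in range(1, T):
        step = timestamps[t] - timestamps[t - 1]
        # If the variable was missing at t-1, keep accumulating the gap.
        delta[t] = step + (1 - mask[t - 1]) * delta[t - 1]
    return delta

def temporal_decay(delta, w, b):
    """gamma_t = exp(-max(0, w * delta_t + b)), with elementwise (not learned) weights."""
    return np.exp(-np.maximum(0.0, w * delta + b))

# One variable over 6 hours, missing at steps 1-3.
m = np.array([[1], [0], [0], [0], [1], [1]])
s = np.arange(6, dtype=float)
delta_fwd = time_gaps(m, s)
# Backward direction: the same computation on the time-reversed sequence.
delta_bwd = time_gaps(m[::-1], s[-1] - s[::-1])[::-1]
gamma = temporal_decay(delta_fwd, w=np.array([0.5]), b=np.array([0.0]))
```

Running the same recursion forward and backward is what gives BRITS two estimates for each missing entry, which the full model then reconciles.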

For BRITS, hyperparameters were tuned using random search over 35 sampled configurations from the predefined grid, considering:

- RNN units: number of hidden units in the RNN layers. Larger values increase model capacity to learn temporal patterns.

- Subsequence length: input window size used during training.

- Learning rate: rate at which the optimizer updates the model parameters.

- Batch size: number of samples per training batch.

- Use_regularization: whether to apply dropout/L2 regularization to prevent overfitting.

- Dropout_rate: proportion of neurons randomly deactivated during training.

The default values and evaluated ranges for these hyperparameters are presented in Table 2, section (b).
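During training, each station's series is cut into subsequences whose length is the tuned subsequence-length hyperparameter. A minimal sketch, assuming non-overlapping windows and that trailing steps shorter than one window are dropped; the text does not state how windows were formed, and `make_windows` is a hypothetical helper.

```python
import numpy as np

def make_windows(X: np.ndarray, seq_len: int) -> np.ndarray:
    """Cut a (T, D) series into non-overlapping (seq_len, D) windows.

    Trailing steps that do not fill a whole window are dropped here;
    padding them instead would be an equally valid choice.
    """
    n_windows = X.shape[0] // seq_len
    return X[: n_windows * seq_len].reshape(n_windows, seq_len, X.shape[1])

# Hypothetical: 10 days of hourly data with 4 variables and a 24-h subsequence length.
X = np.arange(240 * 4, dtype=float).reshape(240, 4)
windows = make_windows(X, seq_len=24)
masks = ~np.isnan(windows)  # per-window observation masks passed alongside the values
```

Batches are then formed from these windows, so the batch size counts subsequences rather than individual hours.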

After hyperparameter tuning, the final model for each station was trained using 80% of the data and the corresponding optimal hyperparameter values. Model performance was then evaluated on the remaining 20% test set. To assess imputation accuracy, a mask was applied to randomly hide 20% of the observed values within the test set, simulating missing data. The model’s imputations were compared against these masked ground-truth values to compute the evaluation metrics.

2.4. Model Evaluation

To evaluate the imputation performance, four metrics were used: Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), Two One-Sided Tests (TOST), and Wasserstein distance.

- Mean Absolute Error (MAE) quantifies the average absolute difference between imputed values ŷt and observed values :where is the number of data points.

- 2.

- Root Mean Squared Error (RMSE) measures the square root of the average squared differences between imputed and observed values:

- 3.

- Wasserstein distance, also known as Earth Mover’s Distance (EMD), measures the dissimilarity between two probability distributions by calculating the minimum effort required to transform one distribution into another []. In the context of air quality time series, it is useful for comparing distributions of imputed and observed values. Given two cumulative distribution functions, and , the first-order Wasserstein distance is defined as:where the variable represents all possible values that a specific air quality variable (e.g., PM2.5 concentration) can take over time. The integral computes the area between the two cumulative curves, summarizing the total difference in distribution between the imputed and observed values. A smaller Wasserstein distance means that the imputed values follow the same pattern as the real ones, showing that the imputation method maintains the original data characteristics well.


- The Two One-Sided Test (TOST []) is a statistical procedure used to assess the equivalence between the means of imputed and observed values. Unlike conventional statistical tests that seek to detect significant differences, TOST is specifically designed to demonstrate equivalence, that is, to confirm that the difference is small enough to be considered negligible within a predefined tolerance margin. Let $\mu_o$ and $\mu_i$ be the means of the observed and imputed values, respectively. According to Santamaría-Bonfil et al. [], TOST is implemented as two one-sided t-tests:

$$H_{01}: \mu_o - \mu_i \le -\Delta \quad \text{vs.} \quad H_{11}: \mu_o - \mu_i > -\Delta$$

$$H_{02}: \mu_o - \mu_i \ge \Delta \quad \text{vs.} \quad H_{12}: \mu_o - \mu_i < \Delta$$

where $\Delta$ is a predefined equivalence margin. Equivalence is declared only if both null hypotheses are rejected, confirming that the mean difference lies entirely within the interval $(-\Delta, \Delta)$. This criterion ensures that the mean of the imputed values does not differ from the observed mean by more than an acceptable tolerance. In this study, we used a significance level of α = 0.05 and equivalence margins based on ±10% of the observed mean.
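The four metrics above can be sketched in plain Python as follows. This is an illustrative sketch, not the study's implementation, and the function names are hypothetical. The Wasserstein distance is computed directly as the area between the two empirical CDFs. The TOST helper assumes a Welch-type standard error and a normal approximation to the t distribution, which is adequate for the large samples of multi-year hourly series but is not exact. The equivalence margin is passed in explicitly as a fraction of the observed mean.

```python
import math
from bisect import bisect_right
from statistics import NormalDist, mean, variance


def mae(y_true, y_pred):
    """Mean Absolute Error over paired observed/imputed values."""
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root Mean Squared Error over paired observed/imputed values."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true))


def wasserstein1(u, v):
    """First-order Wasserstein distance between two samples, computed as the
    area between their empirical CDFs (the integral of |F_o(x) - F_i(x)|)."""
    u, v = sorted(u), sorted(v)
    grid = sorted(set(u) | set(v))
    total = 0.0
    for left, right in zip(grid, grid[1:]):
        # Both empirical CDFs are constant on [left, right)
        f_u = bisect_right(u, left) / len(u)
        f_v = bisect_right(v, left) / len(v)
        total += abs(f_u - f_v) * (right - left)
    return total


def tost_means(observed, imputed, margin_frac, alpha=0.05):
    """Two One-Sided Tests for equivalence of the observed and imputed means.

    Assumptions: Welch-type standard error and a normal approximation to the
    t distribution (reasonable only for large samples). The margin is
    delta = margin_frac * |mean(observed)|.
    Returns (mean difference, delta, TOST p-value, equivalent?).
    """
    mu_o, mu_i = mean(observed), mean(imputed)
    delta = margin_frac * abs(mu_o)
    se = math.sqrt(variance(observed) / len(observed)
                   + variance(imputed) / len(imputed))
    diff = mu_o - mu_i
    z = NormalDist()
    # H01: diff <= -delta; small p when diff is well above -delta
    p_lower = 1.0 - z.cdf((diff + delta) / se)
    # H02: diff >= +delta; small p when diff is well below +delta
    p_upper = z.cdf((diff - delta) / se)
    # TOST p-value is the larger of the two; both nulls must be rejected
    p_value = max(p_lower, p_upper)
    return diff, delta, p_value, p_value < alpha
```

For long series, SciPy's `scipy.stats.wasserstein_distance(u_values, v_values)` computes the same first-order distance, and an exact t-based TOST could be obtained by replacing `NormalDist` with the t distribution (e.g., `scipy.stats.t`) using Welch-Satterthwaite degrees of freedom.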

3. Results

3.1. Results of Hyperparameter Optimization

Hyperparameter tuning was performed as described in Section 2.3, “Model Training”. For Random Forest, the optimization revealed notable variability across stations (Table 3). The number of estimators (n_estimators) ranged from 10 to 80, while the maximum tree depth (max_depth) was commonly set to 10 or 20, though some cases used no depth limit. The number of imputation iterations (max_iter) varied from 5 to 15. The minimum samples required to split a node (min_samples_split) and to remain at a leaf node (min_samples_leaf) showed heterogeneous configurations, indicating sensitivity to local data structure. Overall, smaller leaf sizes (1 to 4) and moderate split thresholds (2 to 10) were commonly preferred.

Table 3.

Random Forest hyperparameter optimization details for the 12 imputed station datasets used in this study.

The optimized hyperparameters suggest that stations with more complex missingness patterns (e.g., SFE) required deeper trees (max_depth = 20) and more estimators (50), while others (e.g., CUA and VIF) performed better with shallower models (max_depth = 10) and fewer estimators (10–20). Variations in min_samples_split and min_samples_leaf further suggest differences in noise levels and local dynamics across stations.

For BRITS, optimal hyperparameter settings were more consistent across stations (Table 4). A large hidden dimension was generally preferred, with 512 RNN units for most stations. Subsequence length values ranged from 32 to 168, and the learning rate was predominantly fixed at 0.005. The batch size varied between 16 and 64, reflecting a trade-off between stability and efficiency. Regularization was rarely applied, and dropout values ranged from 0.1 to 0.3.

Table 4.

BRITS hyperparameter optimization details for the 10 imputed station datasets used in this study.

Overall, BRITS exhibited consistent optimal settings across stations, favoring large hidden layers and moderate subsequence lengths, whereas Random Forest required highly station-specific settings, reflecting its adaptability to local variability but also its sensitivity to irregular missingness patterns.

3.2. Results of Imputation Models

After hyperparameter optimization, the final models were retrained on the full 80% training set using the selected optimal hyperparameter configurations, and evaluation was performed on the 20% test set. To simulate missingness and allow a controlled performance evaluation, 20% of the observed values in the test set were randomly masked. Four metrics were used: MAE and RMSE, which compare the imputations with these hidden ground-truth values on the masked subsets, and the Wasserstein distance and TOST (an equivalence test on means), which compare the complete distributions of the original and imputed datasets.

3.2.1. Performance Evaluation on Masked Subset

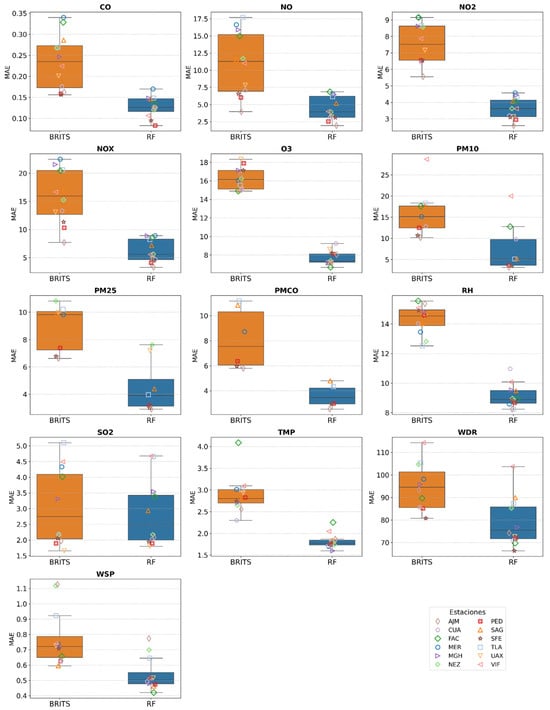

MAE and RMSE were computed on the 20% of observed values randomly masked from the validation set. Figure 5 and Figure 6 show the distributions of MAE and RMSE, respectively, for all variables across all stations, comparing the RF and BRITS models, while Figure 7 shows the corresponding Wasserstein distances computed on the complete distributions.

Figure 5.

Boxplots of Mean Absolute Error (MAE) for Random Forest (RF) and BRITS across all variables and stations. These values were computed by randomly masking 20% of the observed values in the validation set. Each subplot corresponds to one variable and preserves its original measurement scale.

Figure 6.

Boxplots of Root Mean Squared Error (RMSE) for RF and BRITS across all variables and stations, computed on masked validation sets. Each subplot corresponds to a different variable, shown in its original measurement scale.

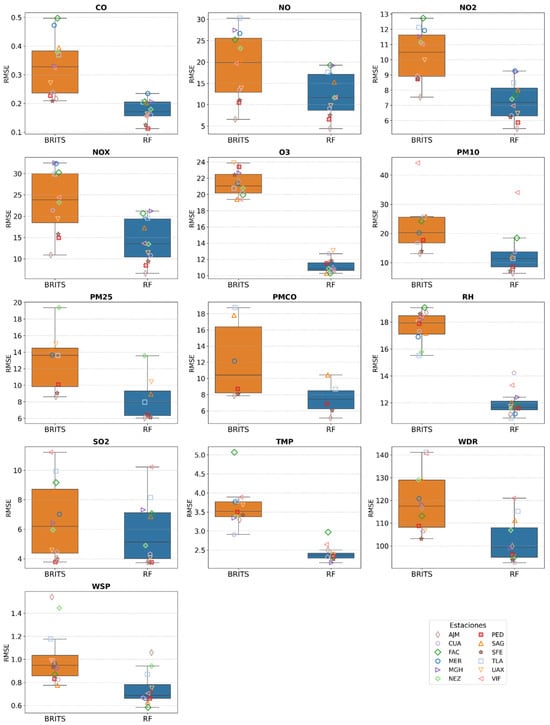

Figure 7.

Boxplots of Wasserstein distance for RF and BRITS across all variables and stations. Values were calculated by comparing the complete distribution of each variable in the original database with that in the imputed database. Each subplot corresponds to a specific variable.

MAE Results

Figure 5 presents MAE results for RF and BRITS across all stations for each variable. Each subplot corresponds to a single variable and is shown on its original scale, because MAE is expressed in the units of each variable and should be compared only within the same variable.

Overall, RF consistently achieved lower MAE values than BRITS across most variables and stations. The largest differences were observed in reactive gases such as NO, NO2, and NOx, where RF errors were often less than half those of BRITS. For example, in station MER, the MAE for NOx was 8.89 with RF versus 22.49 with BRITS, while in TLA, PM10 showed 5.13 for RF compared to 18.32 for BRITS (Table A1).

In contrast, for some meteorological variables (e.g., TMP and WSP), both methods performed similarly, with BRITS approaching RF performance in several stations. These results suggest that RF is more robust for pollutants with complex and nonlinear dynamics, while BRITS provides competitive results in smoother meteorological time series.

RMSE Results

Figure 6 shows a similar trend for RMSE: RF yielded smaller imputation errors and lower dispersion across stations than BRITS. RMSE differences were particularly notable for pollutants with high variability, such as NO, NOx, and PM10. For example, in station MER, RF achieved an RMSE of 19.28 for NOx, while BRITS reached 32.36; in TLA, PM10 showed 11.44 versus 25.60, respectively (Table A1). These lower RMSE values for RF indicate that the model is more robust in capturing variability and reducing large errors associated with extreme observations, whereas BRITS’ conservative imputations around the mean make it more susceptible to large deviations when extreme values occur.

3.2.2. Distributional Similarity Assessment

Wasserstein distance and TOST were applied to the complete distribution of each variable, comparing the original dataset with the imputed dataset. These analyses revealed a different trend compared to the masked subset evaluation.

Results of Wasserstein Distance

BRITS generally achieved lower Wasserstein distance values than RF for most variables, indicating better preservation of the statistical distribution of the original data (Figure 7). For example, in station MER, NOx had 0.79 for RF compared to 0.38 for BRITS; in station TLA, PM2.5 showed 0.95 for RF versus 0.49 for BRITS. Similarly, in station SAG, NO2 reached 1.36 with RF versus 0.62 with BRITS, and in CUA, NO2 was 1.22 for RF against 0.59 for BRITS. BRITS also exhibited lower variability across stations, reflecting more consistent alignment with the true distributions. In contrast, RF, while achieving the best local accuracy (MAE and RMSE), produced higher Wasserstein distance values in many cases, underscoring a trade-off between minimizing pointwise errors and preserving the complete statistical distribution.

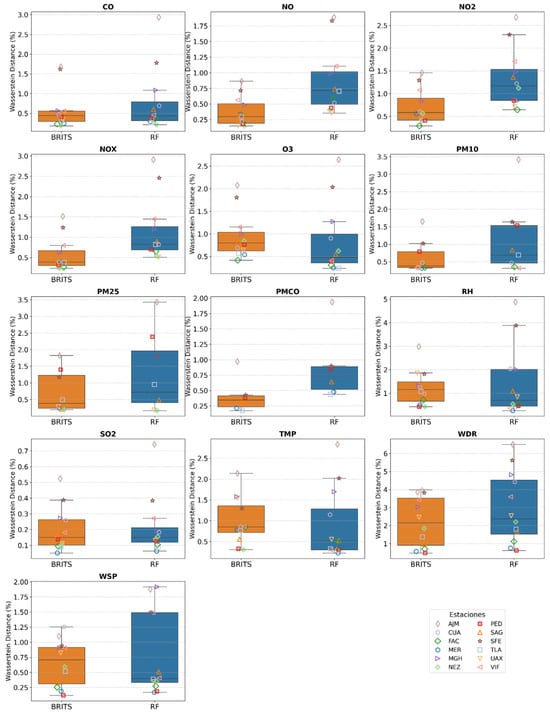

Results of TOST

Figure 8 presents a comparison between original means, imputed means, and TOST equivalence limits for each variable and station. In all cases, the TOST equivalence criterion was satisfied, indicating that the imputed means were statistically equivalent to the original means within the predefined tolerance. Each subplot corresponds to a specific variable, while each row within a subplot represents one station. Black open circles indicate the observed mean for the complete dataset, while blue and red open circles represent the means obtained from the RF and BRITS imputation models, respectively. Horizontal dashed lines indicate the TOST equivalence bounds, defined as ±5% of the original mean for each variable. These bounds represent the range within which the imputed mean is considered statistically equivalent to the original mean. This visualization allows a direct assessment of how close the imputed means are to the observed mean across variables and stations.

Figure 8.

Comparison of original and imputed means for RF and BRITS with TOST equivalence intervals across stations. TOST was applied to the complete distribution of each variable, comparing the original dataset with the imputed dataset. (a) CO; (b) NO; (c) NO2; (d) NOx; (e) SO2; (f) O3; (g) PM10; (h) PM2.5; (i) PMCO; (j) RH; (k) TMP; (l) WDR; (m) WSP.

The comparison of differences between observed and imputed means across stations reveals several patterns in the performance of RF and BRITS. Overall, most differences remained small (within ±1 unit), indicating that both models reproduced the means of most variables accurately. However, BRITS showed a slight tendency to underestimate the mean for several pollutants, particularly NO, NOx, PM10, and PM2.5, as reflected by the positive Om − Im differences in Table A1. In contrast, RF was generally stable, although it exhibited higher variability in some cases, with both under- and overestimation, especially in variables with greater heterogeneity, such as wind direction (WDR).

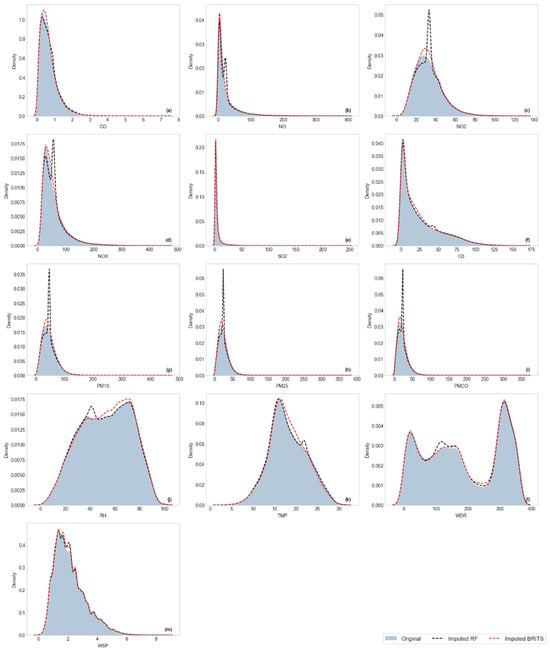

Visualization of Kernel Density Estimation (KDE)

KDE is a technique used to estimate the probability distribution of a dataset in a smooth and continuous manner. It is widely applied in exploratory analysis to examine the shape of the distribution (e.g., symmetric, skewed, or multimodal). In visualization, a KDE plot is a curve that represents the estimated density derived from the data.
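To make the estimator concrete, a minimal Gaussian KDE is sketched below. The normal-reference bandwidth h = 1.06·σ·n^(−1/5) is an assumption, since the bandwidth used for Figure 9 is not stated. SciPy's `scipy.stats.gaussian_kde`, whose default bandwidth is Scott's rule, provides a comparable production-ready estimator. The sample values are toy data, not station measurements.

```python
import math


def gaussian_kde(samples, bandwidth=None):
    """Return a function x -> estimated density, using a Gaussian kernel.

    If bandwidth is None, the normal-reference rule of thumb
    h = 1.06 * sd * n ** (-1/5) is used (an illustrative assumption).
    """
    n = len(samples)
    if bandwidth is None:
        mu = sum(samples) / n
        sd = math.sqrt(sum((s - mu) ** 2 for s in samples) / (n - 1))
        bandwidth = 1.06 * sd * n ** (-1 / 5)
    h = bandwidth
    norm = 1.0 / (n * h * math.sqrt(2 * math.pi))

    def density(x):
        # Sum of Gaussian bumps centred on each sample, scaled to integrate to 1
        return norm * sum(math.exp(-0.5 * ((x - s) / h) ** 2) for s in samples)

    return density


# Compare an original and an imputed sample on a common grid (toy data)
original = [12.0, 15.0, 15.5, 18.0, 22.0, 30.0, 41.0]
imputed = [13.0, 15.0, 16.0, 17.5, 21.0, 24.0, 28.0]
f_orig, f_imp = gaussian_kde(original), gaussian_kde(imputed)
grid = [x * 0.5 for x in range(0, 121)]
curves = [(x, f_orig(x), f_imp(x)) for x in grid]
```

Plotting `curves` as two lines over a shared axis gives the kind of original-versus-imputed comparison shown in the KDE figures; a narrower imputed curve signals the loss of spread discussed below.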

Figure 9 shows KDE-based estimated distributions for the MER station, comparing original data (shaded area) with imputations from RF (black dashed line) and BRITS (red dashed line). Overall, both techniques adequately captured the global shape of the original distributions. However, notable differences are observed for variables with high skewness or multimodality. For pollutants such as CO, NO, NO2, and NOx (Figure 9a–d), the imputed distributions closely followed the right-skewed pattern of these variables, although RF tended to generate sharper peaks around the central tendency, whereas BRITS provided smoother densities that more closely resembled the original distribution. For SO2 and O3 (Figure 9e,f), both methods exhibited acceptable alignment. For particulate matter (PM10, PM2.5, and PMCO; Figure 9g–i), notable differences emerged: RF concentrated density around central values, reducing the spread of the distribution, while BRITS better reproduced the original distribution. For meteorological variables such as RH and TMP (Figure 9j,k), BRITS more accurately captured the bimodality of RH and the variability of TMP, whereas RF tended to over-concentrate density in central regions. Finally, for WDR and WSP (Figure 9l,m), both methods followed the general pattern, although marked deviations occurred in WDR due to its multimodal distribution, where RF exhibited oscillations absent in the original data. Overall, Figure 9 highlights that BRITS preserved distributional characteristics more effectively in variables with high variability, while RF, despite producing reasonable central estimates, tended to distort the tails and reduce the spread of highly skewed variables.

Figure 9.

Kernel Density Estimation (KDE) of original and imputed distributions for MER station variables. (a) CO; (b) NO; (c) NO2; (d) NOx; (e) SO2; (f) O3; (g) PM10; (h) PM2.5; (i) PMCO; (j) RH; (k) TMP; (l) WDR; (m) WSP.

Figures S1–S11 present KDE-based estimated distributions for the CUA, FAC, MGH, NEZ, PED, SAG, TLA, UAX, VIF, SFE, and AJM stations, comparing the original dataset with imputations from the RF and BRITS models. The KDE analysis showed that BRITS generally preserved distributional variability more effectively than RF, which tended to concentrate density near central values; both models struggled with WDR due to its multimodal nature.

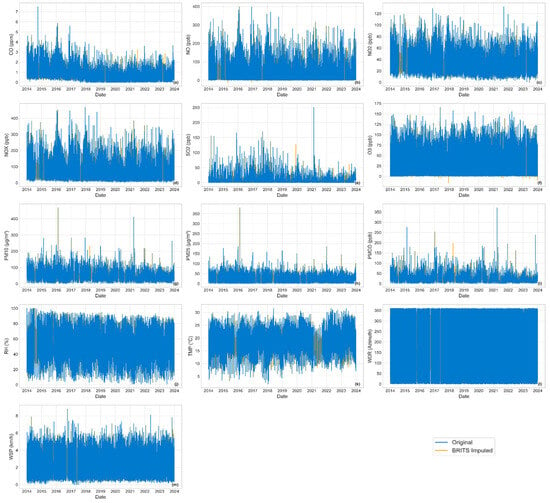

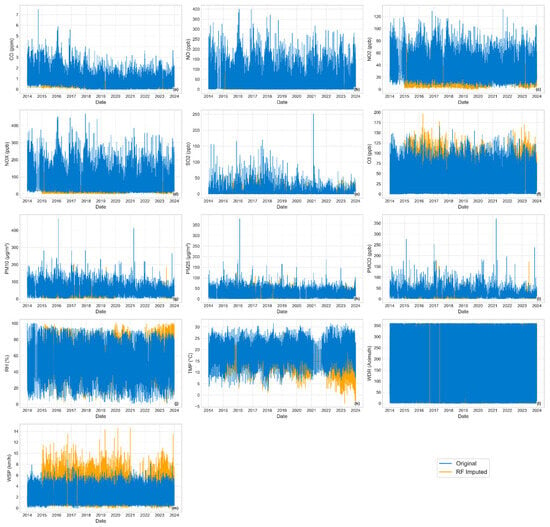

Time Series Reconstruction from 2014 to 2023: MER Station

Figure 10 and Figure 11 show the complete time series from 2014 to 2023 for the MER station, which exhibited relatively low missing rates, ranging from 4% (WDR and WSP) to 16% (PM10, PM2.5, and PMCO). Figure 10 shows the imputations obtained with BRITS, where imputed values (yellow) closely follow the pattern of observed values (blue), even across large gaps; for example, the imputations for TMP in 2021 and for NO, NO2, and NOx during late 2014 align well with the original trends. In contrast, RF imputations (Figure 11) were less consistent, particularly for particulate matter variables (PM10, PM2.5, and PMCO), where the model often generated unrealistic extremes at peak points.

Figure 10.

Complete time series from 2014 to 2023 for the MER station, showing observed values (blue) and imputed values using BRITS (yellow). (a) CO; (b) NO; (c) NO2; (d) NOx; (e) SO2; (f) O3; (g) PM10; (h) PM2.5; (i) PMCO; (j) RH; (k) TMP; (l) WDR; (m) WSP.

Figure 11.

Complete time series from 2014 to 2023 for the MER station, showing observed values (blue) and imputed values using Random Forest (yellow). (a) CO; (b) NO; (c) NO2; (d) NOx; (e) SO2; (f) O3; (g) PM10; (h) PM2.5; (i) PMCO; (j) RH; (k) TMP; (l) WDR; (m) WSP.

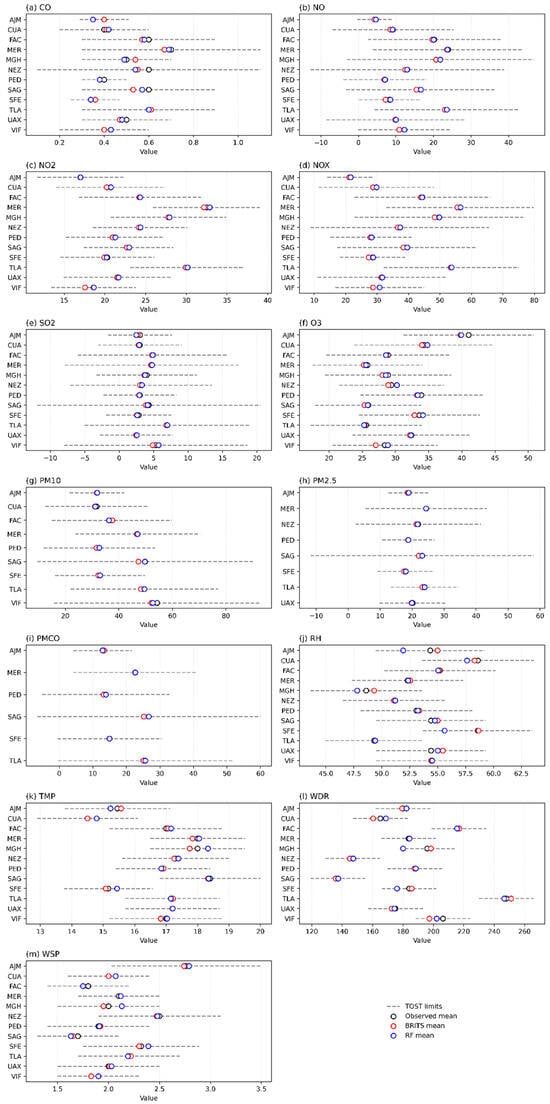

3.3. Comparative Evaluation of Temporal Autocorrelation and Extreme Event Preservation Between RF and BRITS

To assess whether the imputation methods preserved the temporal structure of the data, we evaluated the similarity between the autocorrelation functions (ACF) of the observed and imputed series, computed on the masked validation dataset with lags up to 168. For hourly data, this corresponds to one week of temporal dependence, which is sufficient to capture the daily and weekly patterns relevant to air quality dynamics. Figure 12 presents a comparative analysis of ACF errors for RF and BRITS. Overall, RF preserved temporal autocorrelation better across most stations and variables. However, a number of cases favored BRITS, specifically RH, SO2, TMP, and WDR at several stations, as well as PMCO at MER and PED and WSP at AJM. Among all variables, RH exhibited the largest ACF errors, highlighting the difficulty of reconstructing meteorological variables with higher short-term variability.
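The ACF comparison can be sketched as follows. The exact ACF error formula is not given here, so the `acf_error` helper below is an assumption: it takes the mean absolute difference between the two ACFs over lags 1 to 168. It also assumes that `observed` is the ground-truth validation series and `imputed` is the same series with the masked entries replaced by model output. The pure-Python loop costs O(n × lags), so a vectorized NumPy version would be preferable for decade-long hourly series.

```python
def acf(x, max_lag):
    """Sample autocorrelation r_k for k = 0..max_lag (standard biased estimator)."""
    n = len(x)
    mu = sum(x) / n
    dev = [v - mu for v in x]
    c0 = sum(d * d for d in dev)  # lag-0 sum of squares
    return [
        sum(dev[t] * dev[t + k] for t in range(n - k)) / c0
        for k in range(max_lag + 1)
    ]


def acf_error(observed, imputed, max_lag=168):
    """Hypothetical ACF error: mean |r_obs(k) - r_imp(k)| over lags 1..max_lag.

    168 lags correspond to one week of hourly data, as in the text.
    """
    r_obs = acf(observed, max_lag)
    r_imp = acf(imputed, max_lag)
    return sum(abs(a - b) for a, b in zip(r_obs[1:], r_imp[1:])) / max_lag
```

A value of zero means the imputed series reproduces the observed autocorrelation exactly at every lag considered; larger values indicate distorted daily or weekly structure.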

Figure 12.

Comparative analysis of temporal autocorrelation preservation between RF and BRITS on the masked validation dataset (lag = 168): (a) distribution of ACF errors across variables, (b) heatmap of ACF errors for RF, and (c) heatmap of ACF errors for BRITS, with stars marking cases where BRITS outperforms RF.

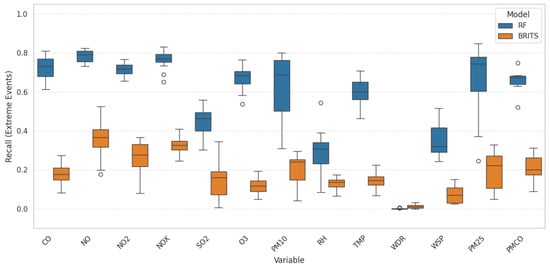

To further assess whether the imputation methods realistically handle extreme events, we evaluated their recall in detecting values above the 90th percentile of the observed distribution. The boxplot comparison (Figure 13) reveals that Random Forest consistently achieves higher recall across most variables and stations, indicating a stronger ability to preserve extreme fluctuations. In contrast, BRITS exhibits comparatively lower recall, though in a few cases it shows competitive performance. These results highlight that while both models can reconstruct general temporal dynamics, Random Forest better captures the occurrence of extreme events in the masked validation dataset.
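Recall for extreme events can be sketched as below. The precise definition is an assumption: an event is a masked position whose ground-truth value exceeds the 90th percentile of a reference sample of observed values, and it counts as detected when the imputed value at that position also exceeds the same threshold. The function name `extreme_recall` is hypothetical, and the percentile uses linear interpolation via `statistics.quantiles(..., method="inclusive")`.

```python
from statistics import quantiles


def extreme_recall(y_true, y_imp, reference, pct=90):
    """Hypothetical recall of extreme values at masked positions.

    y_true, y_imp: ground-truth and imputed values at the masked entries.
    reference: observed values used to set the pct-th percentile threshold.
    Returns (recall, threshold); recall is NaN when no extreme event occurs.
    """
    # quantiles(n=100) returns the 1st..99th percentiles; index pct-1 is the pct-th
    threshold = quantiles(reference, n=100, method="inclusive")[pct - 1]
    events = [i for i, y in enumerate(y_true) if y > threshold]
    if not events:
        return float("nan"), threshold
    hits = sum(1 for i in events if y_imp[i] > threshold)
    return hits / len(events), threshold


reference = [float(v) for v in range(1, 101)]  # toy observed distribution
recall, thr = extreme_recall([95.0, 99.0, 50.0, 92.0], [96.0, 80.0, 60.0, 91.0], reference)
# thr is 90.1; events at indices 0, 1, 3; hits at 0 and 3, so recall = 2/3
```

Under this definition, an imputer that shrinks values toward the mean misses events whose imputed values fall below the threshold, which lowers its recall.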

Figure 13.

Boxplot comparison of recall for detecting extreme events (threshold: 90th percentile of observed values) in the masked validation dataset.

4. Discussion

Our findings demonstrate that BRITS generally outperforms RF in preserving distributional similarity and minimizing Wasserstein distance, particularly for variables with high variability, while both methods achieved TOST equivalence. These results are consistent with recent studies by Wang et al. (2023) [] and Hua et al. (2024) [], which highlighted the robustness of BRITS and other deep learning models under irregular missingness in multivariate time series. RF performed adequately for low-variability variables (e.g., CO and SO2), confirming its usefulness in certain contexts as noted by He et al. (2023) []. However, both methods showed limitations when reconstructing meteorological variables with higher variability. Neither RF nor BRITS successfully reconstructed long periods of missing data. For example, there were extreme multi-year gaps in PMCO, PM10, and PM2.5 data at the PED station (2016 to 2019). During this period, BRITS consistently underestimated the actual values, while RF tended to overestimate and produced outliers.

An important aspect to consider is the contrasting hyperparameter behavior of both models, which reflects their underlying adaptability. BRITS exhibited relatively consistent optimal settings across stations, favoring large hidden layers (512 units), moderate subsequence lengths (32–64), and a fixed learning rate (0.005). This indicates its reliance on generalized temporal patterns. In contrast, RF required highly station-specific configurations, such as deeper trees (max_depth = 20) and more estimators (50) for stations with high variability (e.g., PED and SAG), while simpler structures sufficed for others. This heterogeneity suggests that RF adapts its complexity to local dynamics, whereas BRITS applies a more uniform strategy, which may explain its superior stability for short- to medium-sized gaps but inferior performance under extreme missingness.

Additional preliminary tests were conducted using two alternative deep learning models, SAITS and GAIN. However, these models produced unsatisfactory results: they exhibited very limited variability, with a strong tendency to reproduce mean values rather than capture the original distribution or local dynamics. This smoothing effect makes such models unsuitable for time series in which maintaining natural variability is critical, such as in environmental monitoring and subsequent forecasting.

The inclusion of stations with higher proportions of missing data, such as SFE (21–36%) and AJM (25–47%), allowed us to directly test the robustness of the methodology under more challenging conditions. While MAE and RMSE did not show a systematic increase with higher missingness, the Wasserstein distance provided a clearer signal, consistently identifying SFE and AJM as the stations with the greatest distributional divergences. Despite this, the comparative behavior of RF and BRITS remained consistent: RF tended to reduce local errors, whereas BRITS more effectively preserved global distributional features. These results highlight that the methodology remains robust even when applied to stations with up to ~50% missing data, reinforcing its potential for real-world monitoring networks where large gaps are common.

ACF-based analysis on the masked validation dataset indicates that RF generally preserved temporal autocorrelation better than BRITS for most variables. Nonetheless, BRITS outperformed RF in several cases, notably for SO2, TMP, WDR, PMCO (in MER and PED), and WSP (in AJM). RH showed the largest ACF errors overall, reflecting the challenge of reconstructing highly variable meteorological data.

Our results highlight that there is no single “best” imputation method across all evaluation metrics. Random Forest, which consistently achieved lower MAE and RMSE on masked datasets, appears more suitable for compliance monitoring or operational contexts where pointwise accuracy is critical (e.g., meeting regulatory thresholds or triggering alerts). In contrast, BRITS, with comparatively lower Wasserstein distances, is better suited for exploratory research, long-term trend analysis, or forecasting, where preserving the underlying data structure, variability, and temporal dynamics is more relevant. These distinctions underscore that the choice of method should be guided by the specific objectives of the study, thereby enhancing the practical applicability of our findings.

5. Limitations and Future Perspectives

A key limitation of this study is that stations or variables with extreme missingness (>30% and up to 100%) were excluded from the analysis to ensure reliable imputations and delimit the scope of this work. However, as an exploratory test of model robustness, two stations with higher missingness (SFE with 21–36% and AJM with 25–47%) were included. Future research on this topic should focus on developing more robust imputation frameworks capable of handling stations with extreme missingness. This could involve hybrid deep learning and machine learning methods that integrate spatial-temporal dependencies to preserve variability and dynamic patterns in long missing sequences.

Recent advances in graph-based imputation models offer promising perspectives for future improvements. Models such as the Graph Recurrent Imputation Network (GRIN; Cini et al., 2022 []) and other graph convolutional frameworks have demonstrated strong performance in imputing environmental time series by leveraging spatial correlations between monitoring stations (Chen et al., 2022 []; Li et al., 2023 []; Dimitri et al., 2024 []). These approaches integrate spatial and temporal dependencies by combining recurrent or attention-based architectures with graph convolutional layers, often learning the station connectivity dynamically. Such methods are particularly well-suited for dense and structured monitoring networks, such as the Mexico City air quality system, where spatial proximity and functional similarity across stations can provide complementary context for more accurate imputation. While our present study focused on purely temporal models to assess individual station performance, future work could incorporate spatially informed methods to enhance robustness, especially for stations with persistent sensor failures or sparse observational records. Ultimately, incorporating spatial-temporal methods could pave the way for practical deployment in real-time operational monitoring networks.

6. Conclusions

This study systematically evaluated the performance of RF and BRITS for imputing missing values in multivariate air quality time series from Mexico City monitoring stations. The evaluation considered two perspectives: local performance on masked subsets using the MAE and RMSE metrics, and distributional similarity on complete datasets through TOST and the Wasserstein distance. Results indicate that RF consistently achieved lower MAE and RMSE values than BRITS on masked subsets. In contrast, BRITS generally preserved the statistical distribution of the original data better than RF for most variables. However, both models showed limitations for variables with high variability, such as wind direction (WDR) and particulate matter (PM10 and PM2.5), where both error magnitudes and equivalence intervals were substantially wider.

These findings highlight that the choice of imputation method should be guided by the intended application: RF when pointwise accuracy is critical (e.g., compliance monitoring) and BRITS when preserving distributional and temporal dynamics is essential (e.g., forecasting and trend analysis).

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/ai6090208/s1, Figure S1: Kernel Density Estimation (KDE) plots for CUA station; Figure S2: KDE plots for FAC station; Figure S3: KDE plots for MGH station; Figure S4: KDE plots for NEZ station; Figure S5: KDE plots for PED station; Figure S6: KDE plots for SAG station; Figure S7: KDE plots for TLA station; Figure S8: KDE plots for UAX station; Figure S9: KDE plots for VIF station; Figure S10: KDE plots for SFE station; Figure S11: KDE plots for AJM station.

Author Contributions

Conceptualization, L.D.-G., J.C.P.-S. and N.L.; methodology, L.D.-G. and I.T.-U.; software, I.T.-U.; validation, I.T.-U.; investigation, L.D.-G., I.T.-U., J.C.P.-S. and N.L.; data curation, I.T.-U.; writing—original draft, L.D.-G.; writing—review and editing, I.T.-U., J.C.P.-S. and N.L.; visualization, I.T.-U. and L.D.-G.; supervision, L.D.-G., J.C.P.-S. and N.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. The APC was also not funded by any institution or grant.

Data Availability Statement

The datasets and Python 3.9 notebooks used in this work are available at the web repository: https://github.com/IngridLangley01/AirQuality-Imputation (accessed on 27 August 2025).

Acknowledgments

The first author acknowledges the sabbatical scholarship granted by Secretaría de Ciencia, Humanidades, Tecnología e Innovación (SECIHTI) and the institutional support provided by Instituto Nacional de Astrofísica, Óptica y Electrónica (INAOE) during the development of this work.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Appendix A

This appendix provides more details on the results of the study. Table A1 presents performance metrics results for masked subsets and the complete datasets.

Table A1.

Performance metrics for masked subsets and complete datasets. The first section reports MAE and RMSE metrics for Random Forest (RF) and BRITS imputation models on masked subsets. The second section shows original means, imputed means, TOST equivalence limits (±5% of the original mean), and Wasserstein distance relative percentages for the complete datasets.


| # | Station | Variable | MAE RF | MAE BRITS | RMSE RF | RMSE BRITS | Original Mean (Om) | Imputed Mean (Im) RF | Imputed Mean (Im) BRITS | Mean Difference (Om − Im) RF | Mean Difference (Om − Im) BRITS | TOST Interval ± | Wasserstein Distance (%) RF | Wasserstein Distance (%) BRITS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | MER | CO | 0.17 | 0.34 | 0.23 | 0.47 | 0.70 | 0.69 | 0.67 | −0.02 | 0.01 | 0.40 | 0.29 | 0.25 |
| 2 | | NO | 6.57 | 16.63 | 16.92 | 26.69 | 23.90 | 23.70 | 23.59 | 0.16 | 0.27 | 20.00 | 0.73 | 0.33 |
| 3 | | NO2 | 4.57 | 9.14 | 9.26 | 11.92 | 32.50 | 32.88 | 32.16 | −0.36 | 0.36 | 6.60 | 0.86 | 0.55 |
| 4 | | NOx | 8.89 | 22.49 | 19.28 | 32.36 | 56.40 | 56.55 | 55.53 | −0.20 | 0.82 | 23.40 | 0.79 | 0.38 |
| 5 | | O3 | 7.23 | 16.05 | 11.43 | 21.35 | 25.80 | 25.56 | 25.25 | 0.27 | 0.59 | 8.30 | 0.25 | 0.54 |
| 6 | | PM10 | 4.76 | 15.13 | 9.90 | 20.22 | 47.00 | 46.99 | 46.71 | 0.01 | 0.28 | 23.30 | 0.68 | 0.31 |
| 7 | | PM2.5 | 3.86 | 9.81 | 7.95 | 13.64 | 24.40 | 24.35 | 24.38 | 0.09 | 0.06 | 19.00 | 0.48 | 0.24 |
| 8 | | PMCO | 3.93 | 8.72 | 7.94 | 12.15 | 22.60 | 22.65 | 22.61 | −0.09 | −0.05 | 18.50 | 0.48 | 0.21 |
| 9 | | RH | 8.59 | 13.46 | 11.18 | 16.90 | 52.30 | 52.36 | 52.54 | −0.05 | −0.23 | 5.00 | 0.26 | 0.50 |
| 10 | | SO2 | 2.84 | 4.33 | 5.36 | 7.02 | 4.70 | 4.77 | 4.61 | −0.04 | 0.12 | 12.60 | 0.06 | 0.05 |
| 11 | | TMP | 1.70 | 3.01 | 2.28 | 3.76 | 18.00 | 18.05 | 17.84 | −0.05 | 0.16 | 1.50 | 0.23 | 0.77 |
| 12 | | WDR | 83.03 | 98.15 | 105.60 | 120.78 | 183.70 | 184.29 | 184.37 | −0.64 | −0.72 | 18.00 | 0.75 | 0.56 |
| 13 | | WSP | 0.48 | 0.74 | 0.66 | 0.96 | 2.10 | 2.12 | 2.12 | 0.00 | 0.00 | 0.40 | 0.17 | 0.19 |
| 14 | TLA | CO | 0.15 | 0.27 | 0.20 | 0.37 | 0.60 | 0.60 | 0.61 | 0.01 | 0.01 | 0.30 | 0.26 | 0.31 |
| 15 | | NO | 6.15 | 17.69 | 17.64 | 30.27 | 23.50 | 23.55 | 23.02 | −0.07 | 0.46 | 19.20 | 0.70 | 0.29 |
| 16 | | NO2 | 4.34 | 8.64 | 8.49 | 12.13 | 30.10 | 30.16 | 29.93 | −0.06 | 0.17 | 7.00 | 0.85 | 0.41 |
| 17 | | NOx | 8.23 | 20.62 | 19.58 | 29.91 | 53.60 | 53.69 | 53.40 | −0.12 | 0.16 | 21.50 | 0.82 | 0.38 |
| 18 | | O3 | 7.17 | 15.55 | 10.63 | 20.76 | 25.60 | 25.28 | 25.36 | 0.30 | 0.21 | 8.40 | 0.24 | 0.57 |
| 19 | | PM10 | 5.13 | 18.32 | 11.44 | 25.60 | 49.50 | 49.59 | 48.25 | −0.12 | 1.22 | 27.50 | 0.69 | 0.32 |
| 20 | | PM2.5 | 3.97 | 10.22 | 7.96 | 13.60 | 23.90 | 23.93 | 23.22 | −0.05 | 0.66 | 10.60 | 0.95 | 0.49 |
| 21 | | PMCO | 4.32 | 11.18 | 8.67 | 18.76 | 25.60 | 25.66 | 25.05 | −0.07 | 0.55 | 26.00 | 0.44 | 0.17 |
| 22 | | RH | 8.25 | 12.52 | 10.88 | 15.52 | 49.30 | 49.40 | 49.39 | −0.09 | −0.08 | 4.40 | 0.41 | 1.08 |
| 23 | | SO2 | 4.66 | 5.10 | 8.15 | 9.93 | 7.00 | 7.00 | 6.83 | −0.05 | 0.13 | 12.00 | 0.09 | 0.09 |
| 24 | | TMP | 1.79 | 3.01 | 2.39 | 3.79 | 17.20 | 17.15 | 17.22 | 0.07 | −0.01 | 1.50 | 0.33 | 0.77 |
| 25 | | WDR | 87.39 | 105.27 | 115.21 | 141.17 | 248.00 | 246.58 | 251.15 | 1.38 | −3.20 | 18.00 | 1.79 | 1.37 |
| 26 | | WSP | 0.65 | 0.92 | 0.87 | 1.18 | 2.20 | 2.19 | 2.22 | 0.04 | 0.00 | 0.50 | 0.39 | 0.52 |
| 27 | SAG | CO | 0.15 | 0.29 | 0.20 | 0.39 | 0.60 | 0.57 | 0.53 | −0.02 | 0.02 | 0.30 | 0.60 | 0.53 |
| 28 | | NO | 5.19 | 11.52 | 15.29 | 20.16 | 16.50 | 16.62 | 15.43 | −0.07 | 1.12 | 19.80 | 0.74 | 0.30 |
| 29 | | NO2 | 4.06 | 7.09 | 8.02 | 9.26 | 22.90 | 22.98 | 22.68 | −0.05 | 0.25 | 5.50 | 1.36 | 0.62 |
| 30 | | NOx | 7.19 | 16.95 | 17.25 | 25.65 | 39.50 | 39.60 | 38.36 | −0.12 | 1.12 | 22.20 | 0.90 | 0.39 |
| 31 | | O3 | 7.23 | 15.01 | 10.29 | 19.40 | 25.90 | 25.85 | 25.34 | 0.02 | 0.53 | 8.00 | 0.55 | 0.83 |
| 32 | | PM10 | 5.24 | 17.65 | 12.14 | 25.80 | 49.80 | 49.72 | 47.32 | 0.08 | 2.48 | 40.30 | 0.83 | 0.37 |
| 33 | | PM2.5 | 4.40 | 9.83 | 8.94 | 14.31 | 23.10 | 23.16 | 22.07 | −0.04 | 1.05 | 34.90 | 0.49 | 0.21 |
| 34 | | PMCO | 4.79 | 10.83 | 10.43 | 17.81 | 26.70 | 26.68 | 25.19 | 0.00 | 1.49 | 33.30 | 0.64 | 0.31 |
| 35 | | RH | 9.49 | 14.03 | 12.03 | 17.17 | 54.40 | 54.75 | 55.00 | −0.38 | −0.63 | 4.90 | 1.09 | 1.37 |
| 36 | | SO2 | 2.94 | 3.47 | 6.86 | 8.56 | 4.30 | 4.13 | 3.87 | 0.13 | 0.39 | 16.20 | 0.15 | 0.16 |
| 37 | | TMP | 1.84 | 2.78 | 2.40 | 3.53 | 18.40 | 18.35 | 18.33 | 0.03 | 0.05 | 1.60 | 0.53 | 0.56 |
| 38 | | WDR | 89.93 | 100.27 | 111.19 | 128.92 | 137.10 | 137.39 | 135.76 | −0.32 | 1.31 | 18.00 | 1.66 | 0.98 |
| 39 | | WSP | 0.46 | 0.60 | 0.63 | 0.78 | 1.70 | 1.63 | 1.65 | 0.03 | 0.00 | 0.40 | 0.51 | 0.33 |
| 40 | UAX | CO | 0.12 | 0.20 | 0.16 | 0.27 | 0.50 | 0.48 | 0.47 | 0.00 | 0.00 | 0.20 | 0.32 | 0.34 |
| 41 | | NO | 3.19 | 7.82 | 9.76 | 13.85 | 10.00 | 9.99 | 9.79 | 0.02 | 0.22 | 18.60 | 0.35 | 0.15 |
| 42 | | NO2 | 3.14 | 7.16 | 6.47 | 9.98 | 21.50 | 21.67 | 21.51 | −0.13 | 0.04 | 6.60 | 0.71 | 0.38 |
| 43 | | NOx | 4.79 | 13.11 | 11.42 | 19.37 | 31.50 | 31.67 | 31.30 | −0.13 | 0.24 | 20.40 | 0.51 | 0.24 |
| 44 | | O3 | 8.63 | 18.33 | 13.07 | 23.89 | 32.40 | 32.34 | 32.17 | 0.08 | 0.25 | 8.90 | 0.37 | 0.64 |
| 45 | | PM2.5 | 7.19 | 9.99 | 10.41 | 15.00 | 20.20 | 19.93 | 19.83 | 0.23 | 0.34 | 10.40 | 0.18 | 0.28 |
| 46 | | RH | 8.79 | 14.53 | 11.73 | 18.00 | 54.40 | 55.02 | 55.46 | −0.64 | −1.08 | 4.90 | 0.85 | 1.86 |
| 47 | | SO2 | 1.80 | 1.66 | 4.08 | 4.57 | 2.50 | 2.56 | 2.45 | −0.04 | 0.07 | 5.30 | 0.13 | 0.11 |
| 48 | | TMP | 1.81 | 2.94 | 2.40 | 3.68 | 17.20 | 17.21 | 17.20 | 0.02 | 0.03 | 1.50 | 0.55 | 1.55 |
| 49 | | WDR | 72.65 | 84.76 | 95.10 | 106.61 | 175.00 | 174.36 | 172.58 | 0.59 | 2.37 | 18.00 | 2.54 | 2.46 |
| 50 | | WSP | 0.52 | 0.73 | 0.75 | 0.99 | 2.00 | 2.03 | 2.01 | 0.01 | 0.03 | 0.50 | 0.35 | 0.82 |
| 51 | FAC | CO | 0.13 | 0.33 | 0.21 | 0.50 | 0.60 | 0.58 | 0.57 | 0.00 | 0.01 | 0.30 | 0.37 | 0.23 |
| 52 | | NO | 6.85 | 14.99 | 19.25 | 25.19 | 20.20 | 20.06 | 19.65 | 0.11 | 0.52 | 17.70 | 0.59 | 0.19 |
| 53 | | NO2 | 4.05 | 9.14 | 7.68 | 12.73 | 24.30 | 24.33 | 24.21 | −0.06 | 0.06 | 7.50 | 0.64 | 0.29 |
| 54 | | NOx | 8.59 | 20.45 | 20.65 | 30.23 | 44.40 | 44.36 | 43.95 | 0.07 | 0.48 | 21.50 | 0.65 | 0.26 |
| 55 | | O3 | 6.68 | 14.90 | 10.31 | 19.95 | 28.90 | 28.56 | 28.64 | 0.31 | 0.23 | 9.30 | 0.31 | 0.42 |
| 56 | | PM10 | 12.78 | 17.60 | 18.44 | 24.21 | 37.40 | 36.30 | 37.59 | 1.09 | −0.20 | 22.40 | 0.35 | 0.34 |
| 57 | | RH | 8.92 | 15.56 | 11.81 | 19.07 | 55.20 | 55.04 | 55.16 | 0.16 | 0.04 | 5.00 | 0.51 | 0.71 |
| 58 | | SO2 | 3.39 | 4.02 | 7.05 | 9.16 | 4.90 | 4.87 | 4.76 | 0.05 | 0.15 | 10.90 | 0.10 | 0.09 |
| 59 | | TMP | 2.25 | 4.09 | 2.97 | 5.07 | 17.00 | 17.16 | 17.06 | −0.13 | −0.02 | 1.80 | 0.50 | 0.85 |
| 60 | | WDR | 69.72 | 89.64 | 94.79 | 113.11 | 216.90 | 215.55 | 216.94 | 1.31 | −0.08 | 18.00 | 1.11 | 0.70 |
| 61 | | WSP | 0.42 | 0.66 | 0.58 | 0.87 | 1.80 | 1.75 | 1.75 | 0.00 | 0.00 | 0.40 | 0.27 | 0.25 |
| 62 | NEZ | CO | 0.12 | 0.27 | 0.18 | 0.38 | 0.60 | 0.54 | 0.55 | 0.01 | 0.01 | 0.50 | 0.21 | 0.18 |
| 63 | | NO | 3.90 | 11.71 | 11.65 | 23.20 | 13.00 | 12.98 | 12.44 | −0.01 | 0.53 | 25.80 | 0.40 | 0.15 |
| 64 | | NO2 | 3.62 | 8.56 | 7.41 | 11.14 | 24.30 | 24.37 | 24.16 | −0.08 | 0.13 | 5.80 | 1.12 | 0.56 |
| 65 | | NOx | 5.63 | 15.26 | 13.46 | 23.23 | 37.20 | 37.34 | 36.58 | −0.09 | 0.66 | 28.40 | 0.53 | 0.23 |
| 66 | | O3 | 7.39 | 16.24 | 10.85 | 20.76 | 29.40 | 30.23 | 28.92 | −0.84 | 0.47 | 7.90 | 0.62 | 0.85 |
| 67 | | PM2.5 | 7.62 | 10.82 | 13.56 | 19.38 | 21.90 | 21.72 | 21.34 | 0.17 | 0.55 | 19.60 | 0.17 | 0.19 |
| 68 | | RH | 8.96 | 12.82 | 11.60 | 15.78 | 51.10 | 51.20 | 51.08 | −0.12 | 0.00 | 4.60 | 0.54 | 0.43 |

| 69 | SO2 | 2.17 | 2.16 | 4.90 | 5.96 | 3.20 | 3.31 | 3.02 | −0.15 | 0.14 | 10.30 | 0.15 | 0.10 | |

| 70 | TMP | 1.74 | 2.65 | 2.29 | 3.38 | 17.30 | 17.39 | 17.26 | −0.07 | 0.05 | 1.70 | 0.30 | 0.31 | |

| 71 | WDR | 85.24 | 104.66 | 106.92 | 129.10 | 146.90 | 147.32 | 145.05 | −0.46 | 1.81 | 18.00 | 2.21 | 1.84 | |

| 72 | WSP | 0.70 | 1.12 | 0.94 | 1.45 | 2.50 | 2.48 | 2.47 | 0.02 | 0.03 | 0.60 | 0.35 | 0.59 | |

| 73 | PED | CO | 0.08 | 0.16 | 0.11 | 0.23 | 0.40 | 0.38 | 0.38 | 0.01 | 0.00 | 0.10 | 0.41 | 0.41 |

| 74 | NO | 2.54 | 6.03 | 6.57 | 10.49 | 7.10 | 7.07 | 6.89 | 0.01 | 0.19 | 11.10 | 0.44 | 0.18 | |

| 75 | NO2 | 2.95 | 6.55 | 5.87 | 8.73 | 21.20 | 21.28 | 20.94 | −0.10 | 0.25 | 6.00 | 0.85 | 0.41 | |

| 76 | NOx | 4.10 | 10.31 | 8.45 | 14.92 | 28.20 | 28.29 | 27.88 | −0.06 | 0.34 | 13.00 | 0.70 | 0.31 | |

| 77 | O3 | 8.09 | 17.90 | 11.51 | 23.41 | 33.90 | 33.35 | 33.25 | 0.53 | 0.63 | 9.20 | 0.39 | 0.76 | |

| 78 | PM10 | 3.63 | 12.48 | 8.54 | 17.74 | 32.70 | 32.75 | 31.62 | −0.08 | 1.06 | 20.80 | 1.54 | 0.79 | |

| 79 | PM2.5 | 3.17 | 7.39 | 6.41 | 10.09 | 18.80 | 18.81 | 18.71 | −0.03 | 0.07 | 8.20 | 2.39 | 1.40 | |

| 80 | PMCO | 2.98 | 6.37 | 6.88 | 8.71 | 13.90 | 13.91 | 13.18 | −0.01 | 0.72 | 19.00 | 0.85 | 0.38 | |

| 81 | RH | 8.67 | 14.57 | 11.60 | 17.88 | 53.10 | 53.23 | 53.36 | −0.08 | −0.21 | 5.00 | 0.47 | 0.43 | |

| 82 | SO2 | 1.89 | 1.89 | 3.75 | 3.78 | 3.00 | 2.94 | 2.89 | 0.06 | 0.11 | 5.30 | 0.12 | 0.14 | |

| 83 | TMP | 1.75 | 2.83 | 2.34 | 3.50 | 16.90 | 16.85 | 16.92 | 0.05 | −0.01 | 1.50 | 0.30 | 0.33 | |

| 84 | WDR | 71.86 | 85.18 | 96.45 | 108.78 | 187.70 | 188.45 | 187.66 | −0.70 | 0.08 | 18.00 | 0.62 | 0.48 | |

| 85 | WSP | 0.47 | 0.63 | 0.66 | 0.83 | 1.90 | 1.91 | 1.92 | 0.01 | 0.00 | 0.50 | 0.18 | 0.12 | |

| 86 | VIF | CO | 0.11 | 0.22 | 0.16 | 0.32 | 0.40 | 0.43 | 0.40 | 0.00 | 0.02 | 0.20 | 0.46 | 0.56 |

| 87 | NO | 3.99 | 11.04 | 11.73 | 19.64 | 12.20 | 12.26 | 10.87 | −0.01 | 1.38 | 12.30 | 1.10 | 0.56 | |

| 88 | NO2 | 3.61 | 7.86 | 6.98 | 11.01 | 18.60 | 18.64 | 17.55 | −0.06 | 1.03 | 5.20 | 1.71 | 1.08 | |

| 89 | NOx | 5.57 | 16.68 | 13.63 | 24.44 | 30.80 | 30.78 | 28.71 | 0.04 | 2.11 | 14.10 | 1.45 | 0.80 | |

| 90 | O3 | 7.25 | 15.13 | 10.64 | 19.40 | 28.40 | 28.86 | 27.04 | −0.45 | 1.37 | 7.90 | 0.41 | 1.15 | |

| 91 | PM10 | 19.98 | 28.70 | 34.05 | 44.21 | 54.30 | 52.71 | 52.19 | 1.59 | 2.10 | 38.60 | 0.31 | 0.33 | |

| 92 | RH | 10.08 | 15.08 | 13.31 | 18.43 | 54.50 | 54.51 | 54.41 | 0.00 | 0.09 | 5.00 | 0.37 | 0.98 | |

| 93 | SO2 | 4.67 | 4.49 | 10.22 | 11.22 | 5.30 | 5.73 | 4.86 | −0.39 | 0.47 | 13.30 | 0.27 | 0.18 | |

| 94 | TMP | 2.05 | 3.09 | 2.65 | 3.89 | 17.00 | 17.03 | 16.83 | −0.06 | 0.14 | 1.80 | 0.30 | 0.87 | |

| 95 | WDR | 103.68 | 114.29 | 120.98 | 140.72 | 206.30 | 202.17 | 197.34 | 4.16 | 9.00 | 18.00 | 3.60 | 3.43 | |

| 96 | WSP | 0.52 | 0.74 | 0.71 | 0.99 | 1.90 | 1.90 | 1.83 | −0.01 | 0.05 | 0.40 | 0.41 | 0.91 | |

| 97 | MGH | CO | 0.15 | 0.25 | 0.21 | 0.33 | 0.50 | 0.49 | 0.54 | 0.04 | −0.01 | 0.20 | 1.09 | 0.57 |

| 98 | NO | 6.44 | 15.90 | 19.19 | 27.46 | 21.80 | 21.80 | 20.68 | −0.01 | 1.11 | 24.90 | 0.99 | 0.49 | |

| 99 | NO2 | 4.43 | 8.61 | 9.19 | 11.52 | 27.80 | 27.93 | 27.78 | −0.11 | 0.04 | 7.10 | 1.48 | 0.84 | |

| 100 | NOx | 8.88 | 21.55 | 21.21 | 32.51 | 49.60 | 49.79 | 48.30 | −0.22 | 1.28 | 26.80 | 1.20 | 0.63 | |

| 101 | O3 | 7.13 | 17.13 | 10.78 | 22.64 | 28.90 | 28.55 | 28.05 | 0.34 | 0.85 | 9.50 | 1.27 | 1.00 | |

| 102 | RH | 9.54 | 14.86 | 12.42 | 18.20 | 48.60 | 47.79 | 49.31 | 0.82 | −0.71 | 5.00 | 2.00 | 1.32 | |

| 103 | SO2 | 3.54 | 3.31 | 7.32 | 6.43 | 4.00 | 3.74 | 3.65 | 0.23 | 0.33 | 7.30 | 0.19 | 0.27 | |

| 104 | TMP | 1.60 | 2.71 | 2.17 | 3.34 | 18.00 | 18.33 | 17.75 | −0.37 | 0.21 | 1.50 | 1.70 | 1.58 | |

| 105 | WDR | 76.70 | 95.76 | 99.03 | 118.26 | 195.90 | 180.07 | 198.38 | 15.81 | −2.49 | 18.00 | 4.82 | 3.03 | |

| 106 | WSP | 0.48 | 0.72 | 0.67 | 0.92 | 2.00 | 2.13 | 1.95 | −0.16 | 0.03 | 0.50 | 1.92 | 0.91 | |

| 107 | CUA | CO | 0.13 | 0.18 | 0.16 | 0.24 | 0.40 | 0.42 | 0.41 | 0.01 | 0.01 | 0.20 | 0.69 | 0.47 |

| 108 | NO | 3.13 | 7.05 | 9.06 | 13.52 | 9.20 | 9.10 | 8.51 | 0.06 | 0.64 | 16.10 | 0.52 | 0.22 | |

| 109 | NO2 | 3.20 | 6.43 | 6.33 | 8.95 | 20.70 | 20.74 | 20.21 | −0.06 | 0.47 | 6.70 | 1.22 | 0.60 | |

| 110 | NOx | 4.71 | 13.33 | 10.79 | 21.38 | 29.80 | 29.85 | 28.71 | −0.02 | 1.11 | 18.40 | 0.82 | 0.39 | |

| 111 | O3 | 9.24 | 15.03 | 12.70 | 20.23 | 34.30 | 34.83 | 33.99 | −0.54 | 0.29 | 10.50 | 0.90 | 0.69 | |

| 112 | PM10 | 9.77 | 12.81 | 13.70 | 16.77 | 31.70 | 31.18 | 31.03 | 0.51 | 0.66 | 19.20 | 0.46 | 0.47 | |

| 113 | RH | 10.97 | 14.04 | 14.22 | 17.31 | 58.60 | 57.63 | 58.30 | 1.02 | 0.35 | 5.00 | 2.04 | 1.25 | |

| 114 | SO2 | 2.02 | 2.19 | 4.32 | 4.48 | 3.00 | 2.90 | 2.82 | 0.13 | 0.20 | 6.10 | 0.18 | 0.26 | |

| 115 | TMP | 1.71 | 2.30 | 2.32 | 2.91 | 14.50 | 14.79 | 14.50 | −0.25 | 0.05 | 1.60 | 1.14 | 0.85 | |

| 116 | WDR | 71.01 | 85.65 | 92.62 | 106.44 | 165.10 | 168.76 | 160.36 | −3.64 | 4.76 | 18.00 | 4.42 | 3.98 | |

| 117 | WSP | 0.49 | 0.63 | 0.67 | 0.82 | 2.00 | 2.07 | 2.00 | −0.03 | 0.04 | 0.40 | 1.49 | 1.25 | |

| 118 | SFE | CO | 0.09 | 0.16 | 0.12 | 0.21 | 0.36 | 0.34 | 0.36 | 0.02 | −0.01 | 0.11 | 1.78 | 1.62 |

| 119 | NO | 3.11 | 6.58 | 7.52 | 11.02 | 8.23 | 8.50 | 7.21 | −0.27 | 1.01 | 8.10 | 1.83 | 0.72 | |

| 120 | NO2 | 3.09 | 6.54 | 6.23 | 8.76 | 20.30 | 20.21 | 19.91 | 0.09 | 0.39 | 5.80 | 2.30 | 1.29 | |

| 121 | NOx | 4.45 | 11.34 | 9.37 | 15.80 | 28.52 | 28.74 | 27.31 | −0.22 | 1.21 | 10.35 | 2.46 | 1.24 | |

| 122 | SO2 | 1.95 | 2.05 | 3.74 | 3.97 | 2.83 | 2.62 | 2.56 | 0.21 | 0.27 | 4.70 | 0.38 | 0.39 | |

| 123 | O3 | 8.21 | 17.14 | 11.83 | 22.42 | 33.60 | 34.14 | 32.87 | −0.54 | 0.73 | 9.10 | 2.04 | 1.81 | |

| 124 | PM10 | 3.44 | 10.70 | 7.12 | 13.93 | 32.98 | 32.99 | 32.32 | −0.01 | 0.66 | 16.80 | 1.64 | 1.02 | |

| 125 | PM2.5 | 3.00 | 6.77 | 6.12 | 9.06 | 18.00 | 18.03 | 17.46 | −0.03 | 0.54 | 8.90 | 1.81 | 1.17 | |

| 126 | PMCO | 2.93 | 5.95 | 6.05 | 8.06 | 14.98 | 14.97 | 15.02 | 0.02 | −0.04 | 15.55 | 0.90 | 0.43 | |

| 127 | RH | 8.86 | 14.91 | 11.58 | 18.60 | 58.58 | 55.62 | 58.66 | 2.96 | −0.07 | 4.90 | 3.88 | 1.82 | |

| 128 | TMP | 1.75 | 2.74 | 2.27 | 3.42 | 15.16 | 15.44 | 15.08 | −0.29 | 0.07 | 1.41 | 2.02 | 1.30 | |

| 129 | WDR | 66.33 | 80.77 | 93.80 | 103.12 | 184.07 | 176.04 | 185.70 | 8.04 | −1.63 | 18.00 | 5.62 | 3.82 | |

| 130 | WSP | 0.52 | 0.71 | 0.72 | 0.93 | 2.32 | 2.39 | 2.30 | −0.07 | 0.02 | 0.57 | 1.49 | 0.94 | |

| 131 | AJM | CO | 0.13 | 0.16 | 0.16 | 0.22 | 0.40 | 0.35 | 0.40 | 0.05 | 0.00 | 0.11 | 2.94 | 1.68 |

| 132 | NO | 1.94 | 3.96 | 4.41 | 6.57 | 4.43 | 4.65 | 4.23 | −0.23 | 0.20 | 4.80 | 1.89 | 0.86 | |

| 133 | NO2 | 2.59 | 5.55 | 5.48 | 7.54 | 16.98 | 17.01 | 17.01 | −0.03 | −0.03 | 5.30 | 2.68 | 1.46 | |

| 134 | NOx | 3.28 | 7.71 | 6.62 | 10.90 | 21.42 | 21.62 | 21.16 | −0.20 | 0.26 | 7.25 | 2.91 | 1.51 | |

| 135 | SO2 | 2.08 | 1.97 | 3.78 | 4.04 | 3.02 | 2.47 | 2.69 | 0.55 | 0.33 | 4.65 | 0.74 | 0.52 | |

| 136 | O3 | 8.04 | 16.69 | 10.94 | 21.63 | 41.02 | 39.91 | 39.78 | 1.12 | 1.24 | 9.80 | 2.64 | 2.08 | |

| 137 | PM10 | 3.15 | 10.17 | 6.36 | 13.09 | 31.83 | 31.92 | 31.76 | −0.08 | 0.08 | 10.20 | 3.41 | 1.65 | |

| 138 | PM2.5 | 2.92 | 6.62 | 6.05 | 8.62 | 18.92 | 18.88 | 18.56 | 0.04 | 0.36 | 6.30 | 3.43 | 1.82 | |

| 139 | PMCO | 2.53 | 5.79 | 5.12 | 7.87 | 12.92 | 12.91 | 13.37 | 0.01 | −0.46 | 8.70 | 1.94 | 0.97 | |

| 140 | RH | 8.25 | 15.37 | 11.14 | 18.71 | 54.33 | 51.91 | 54.99 | 2.42 | −0.66 | 4.90 | 4.88 | 2.98 | |

| 141 | TMP | 1.87 | 2.56 | 2.50 | 3.30 | 15.45 | 15.23 | 15.57 | 0.23 | −0.12 | 1.67 | 2.83 | 2.14 | |

| 142 | WDR | 74.25 | 93.31 | 99.86 | 116.66 | 179.97 | 182.28 | 179.44 | −2.31 | 0.53 | 18.00 | 6.50 | 3.86 | |

| 143 | WSP | 0.77 | 1.13 | 1.06 | 1.54 | 2.76 | 2.79 | 2.74 | −0.03 | 0.03 | 0.73 | 1.88 | 1.10 | |
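
The Abstract describes two evaluation perspectives: local accuracy (MAE and RMSE) on the artificially masked subsets, and distributional similarity on the complete series (Two One-Sided Tests and the Wasserstein distance). The sketch below illustrates how metrics of this kind can be computed for a single station and variable. It is not the authors' code. The Welch-type standard error, the large-sample normal approximation used in the TOST, the 0.05 significance level, the synthetic series, and the linear-interpolation stand-in imputer (used here in place of RF or BRITS) are all assumptions made for illustration.

```python
import math

import numpy as np


def mae(y_true, y_pred):
    """Mean absolute error over the artificially masked positions."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))


def rmse(y_true, y_pred):
    """Root mean squared error over the artificially masked positions."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def wasserstein_1d(u, v):
    """First-order Wasserstein distance between two 1-D empirical samples.

    Integrates |F_u(x) - F_v(x)| over x, where F_u and F_v are the
    empirical CDFs evaluated between consecutive pooled sample values.
    """
    u = np.sort(np.asarray(u, float))
    v = np.sort(np.asarray(v, float))
    grid = np.sort(np.concatenate([u, v]))
    widths = np.diff(grid)
    cdf_u = np.searchsorted(u, grid[:-1], side="right") / u.size
    cdf_v = np.searchsorted(v, grid[:-1], side="right") / v.size
    return float(np.sum(np.abs(cdf_u - cdf_v) * widths))


def _normal_sf(z):
    """Upper-tail probability P(Z > z) of the standard normal distribution."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def tost_equivalence(x, y, margin, alpha=0.05):
    """Two one-sided tests for equivalence of means within +/- margin.

    Assumption: Welch-type standard error with a large-sample normal
    approximation (reasonable for long hourly series). Returns the larger of
    the two one-sided p-values and whether equivalence is declared at alpha.
    """
    x, y = np.asarray(x, float), np.asarray(y, float)
    diff = x.mean() - y.mean()
    se = math.sqrt(x.var(ddof=1) / x.size + y.var(ddof=1) / y.size)
    p_lower = _normal_sf((diff + margin) / se)  # H0: diff <= -margin
    p_upper = _normal_sf((margin - diff) / se)  # H0: diff >= +margin
    p_value = max(p_lower, p_upper)
    return p_value, p_value < alpha


if __name__ == "__main__":
    # Synthetic diurnal series (illustrative only, not study data).
    rng = np.random.default_rng(42)
    hours = np.arange(2000)
    truth = 20 + 5 * np.sin(2 * np.pi * hours / 24) + rng.normal(0, 1, hours.size)

    # 20% artificial missingness, as described in the Abstract.
    mask = rng.random(truth.size) < 0.20

    # Hypothetical stand-in imputer (linear interpolation), NOT RF or BRITS.
    imputed = truth.copy()
    imputed[mask] = np.interp(hours[mask], hours[~mask], truth[~mask])

    print("MAE :", mae(truth[mask], imputed[mask]))
    print("RMSE:", rmse(truth[mask], imputed[mask]))
    print("W1  :", wasserstein_1d(truth, imputed))
    print("TOST:", tost_equivalence(truth, imputed, margin=1.0))
```

MAE and RMSE are evaluated only where values were artificially removed, whereas the Wasserstein distance and TOST compare whole series, mirroring the two perspectives named in the Abstract. The Wasserstein function follows the standard empirical-CDF formulation, so it should agree with library implementations such as SciPy's for 1-D samples.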

References

- World Health Organization (WHO). Ambient (Outdoor) Air Pollution. Available online: https://www.who.int/news-room/fact-sheets/detail/ambient-(outdoor)-air-quality-and-health (accessed on 19 July 2025).

- State of Global Air (SoGA). State of Global Air Report 2024. Available online: https://www.stateofglobalair.org/hap (accessed on 19 July 2025).

- World Health Organization (WHO). Air Pollution. Available online: https://www.who.int/health-topics/air-pollution#tab=tab_1 (accessed on 19 July 2025).

- Kim, T.; Kim, J.; Yang, W.; Lee, H.; Choo, J. Missing Value Imputation of Time-Series Air-Quality Data via Deep Neural Networks. Int. J. Environ. Res. Public Health 2021, 18, 12213.

- He, M.Z.; Yitshak-Sade, M.; Just, A.C.; Gutiérrez-Avila, I.; Dorman, M.; de Hoogh, K.; Kloog, I. Predicting Fine-Scale Daily NO2 over Mexico City Using an Ensemble Modeling Approach. Atmos. Pollut. Res. 2023, 14, 101763.

- Gobierno de México. Programa de Gestión para Mejorar la Calidad del Aire (PROAIRE). Available online: https://www.gob.mx/semarnat/acciones-y-programas/programas-de-gestion-para-mejorar-la-calidad-del-aire (accessed on 19 July 2025).

- Gobierno de la Ciudad de México. Mexico City Atmospheric Monitoring System (SIMAT). Available online: http://www.aire.cdmx.gob.mx/default.php (accessed on 19 July 2025).

- Zhang, X.; Zhou, P. A Transferred Spatio-Temporal Deep Model Based on Multi-LSTM Auto-Encoder for Air Pollution Time Series Missing Value Imputation. Future Gener. Comput. Syst. 2024, 156, 325–338.

- Wang, Y.; Liu, K.; He, Y.; Fu, Q.; Luo, W.; Li, W.; Xiao, S. Research on Missing Value Imputation to Improve the Validity of Air Quality Data Evaluation on the Qinghai-Tibetan Plateau. Atmosphere 2023, 14, 1821.

- Hua, V.; Nguyen, T.; Dao, M.S.; Nguyen, H.D.; Nguyen, B.T. The Impact of Data Imputation on Air Quality Prediction Problem. PLoS ONE 2024, 19, e0306303.

- Alkabbani, H.; Ramadan, A.; Zhu, Q.; Elkamel, A. An Improved Air Quality Index Machine Learning-Based Forecasting with Multivariate Data Imputation Approach. Atmosphere 2022, 13, 1144.

- Camastra, F.; Capone, V.; Ciaramella, A.; Riccio, A.; Staiano, A. Prediction of Environmental Missing Data Time Series by Support Vector Machine Regression and Correlation Dimension Estimation. Environ. Model. Softw. 2022, 150, 105343.

- Che, Z.; Purushotham, S.; Cho, K.; Sontag, D.; Liu, Y. Recurrent Neural Networks for Multivariate Time Series with Missing Values. Sci. Rep. 2018, 8, 6085.

- Cao, W.; Wang, D.; Li, J.; Zhou, H.; Li, L.; Li, Y. BRITS: Bidirectional Recurrent Imputation for Time Series. Adv. Neural Inf. Process. Syst. 2018, 31, 6775–6785.

- Yoon, J.; Jordon, J.; van der Schaar, M. GAIN: Missing Data Imputation Using Generative Adversarial Nets. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 5689–5698.

- Shahbazian, R.; Greco, S. Generative Adversarial Networks Assist Missing Data Imputation: A Comprehensive Survey and Evaluation. IEEE Access 2023, 11, 88908–88928.

- Cini, A.; Marisca, I.; Alippi, C. Filling the Gaps: Multivariate Time Series Imputation by Graph Neural Networks. arXiv 2021, arXiv:2108.00298.

- Du, W.; Côté, D.; Liu, Y. SAITS: Self-Attention-Based Imputation for Time Series. Expert Syst. Appl. 2023, 219, 119619.

- Colorado Cifuentes, G.U.; Flores Tlacuahuac, A. A Short-Term Deep Learning Model for Urban Pollution Forecasting with Incomplete Data. Can. J. Chem. Eng. 2021, 99, S417–S431.

- Alahamade, M.; Lake, A. Handling Missing Data in Air Quality Time Series: Evaluation of Statistical and Machine Learning Approaches. Atmosphere 2021, 12, 1130.

- World Population Review. Mexico City Population. Available online: https://worldpopulationreview.com/cities/mexico/mexico-city (accessed on 19 July 2025).