YOLOv7scb: A Small-Target Object Detection Method for Fire Smoke Inspection

Abstract

1. Introduction

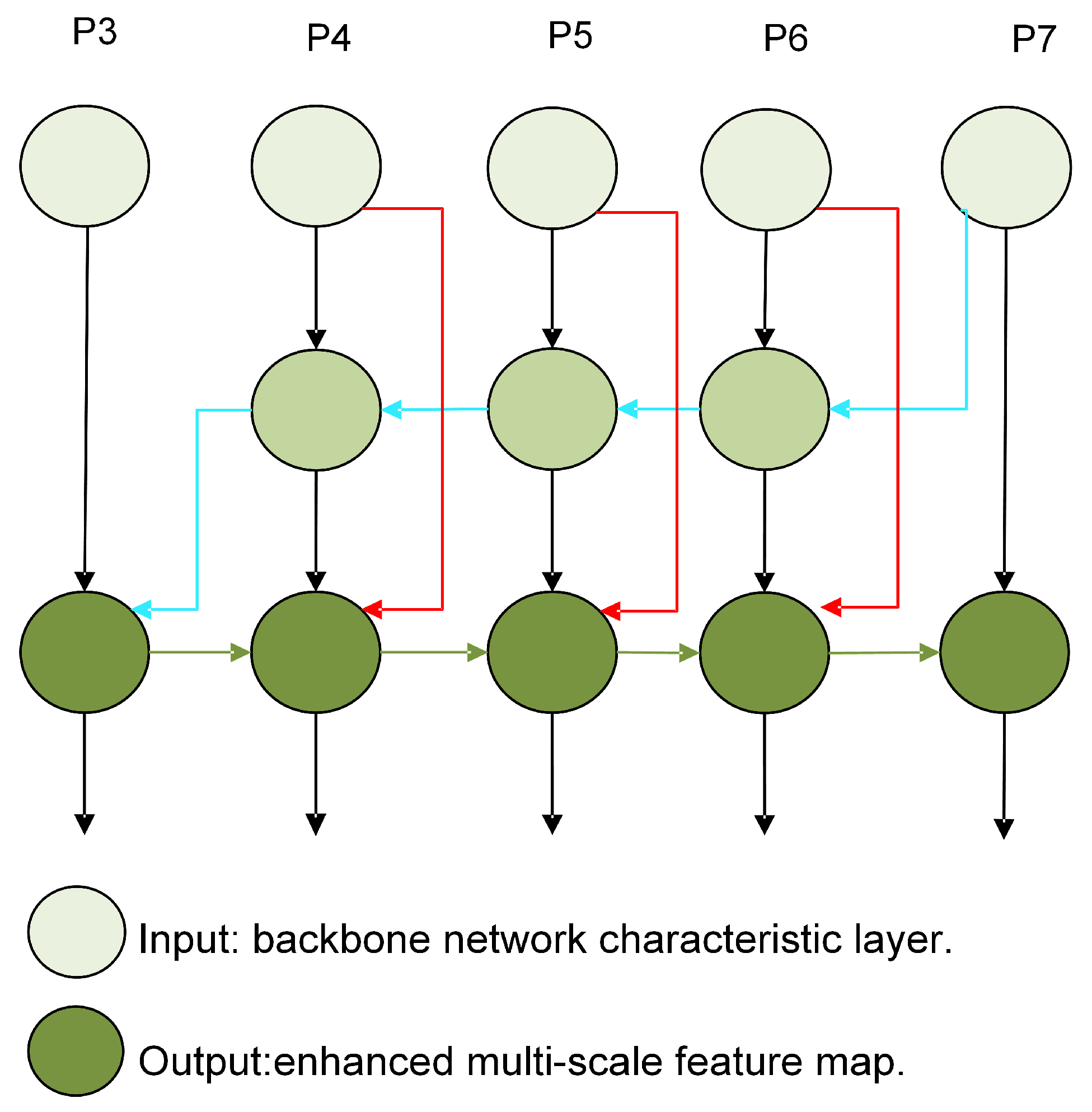

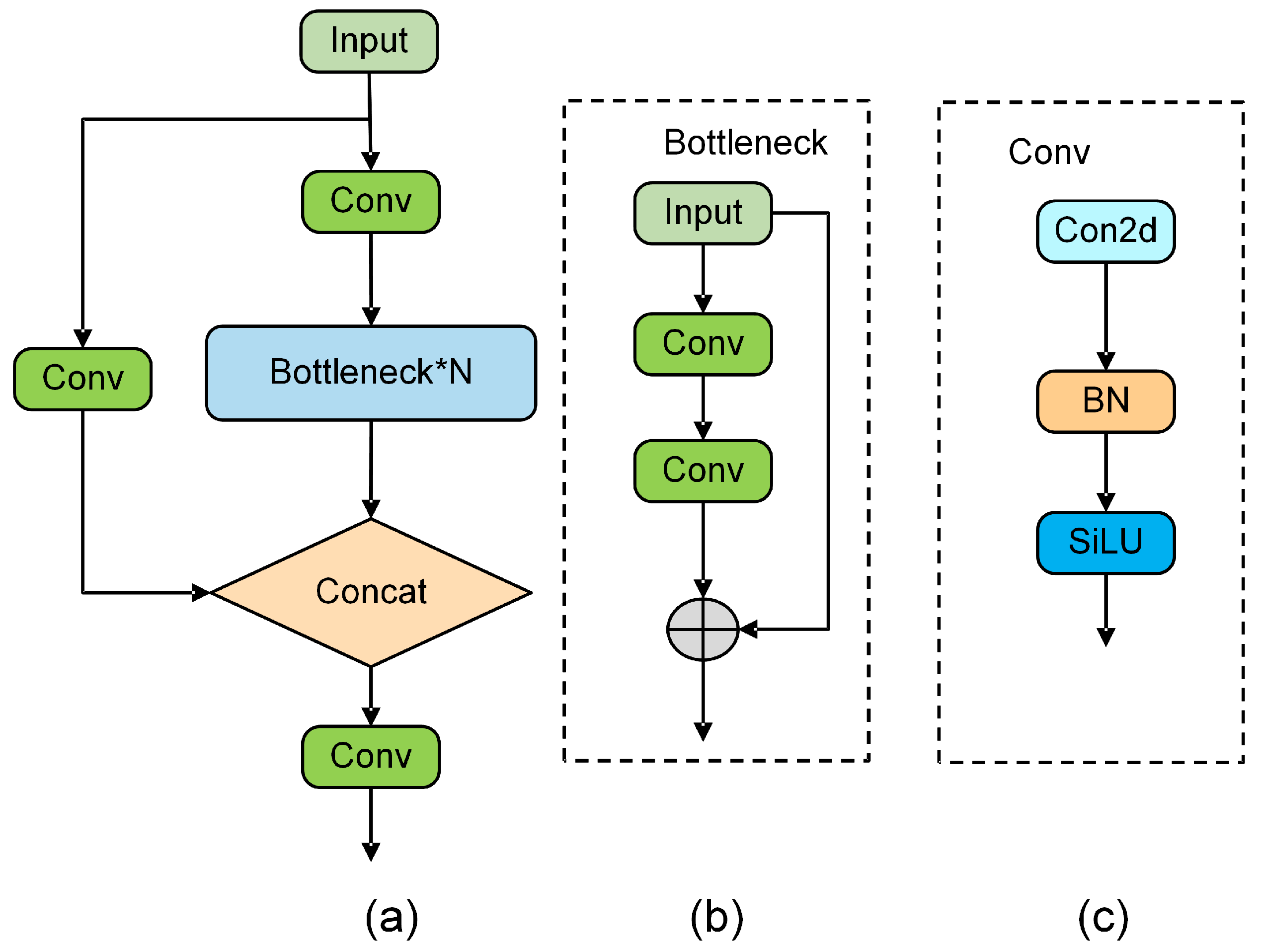

- We refine the YOLOv7 architecture by replacing the PANet structure with the BiFPN structure and substituting the ELAN-W module with the C3 module, resulting in improved detection of small-target fires.

- We introduce the SPD-Conv module to reduce information loss and enhance feature-extraction precision, thereby improving the detection of small-target fires and smoke in images.

- To tackle the imbalance between positive and negative samples of fire and smoke, we introduce a focal-loss weight factor into the original bounding box loss function.

2. Related Work

2.1. Fire Detection

2.1.1. Conventional Device-Based Fire Detection Methods

2.1.2. Computer Vision-Based Fire Detection Methods

2.2. YOLOv7

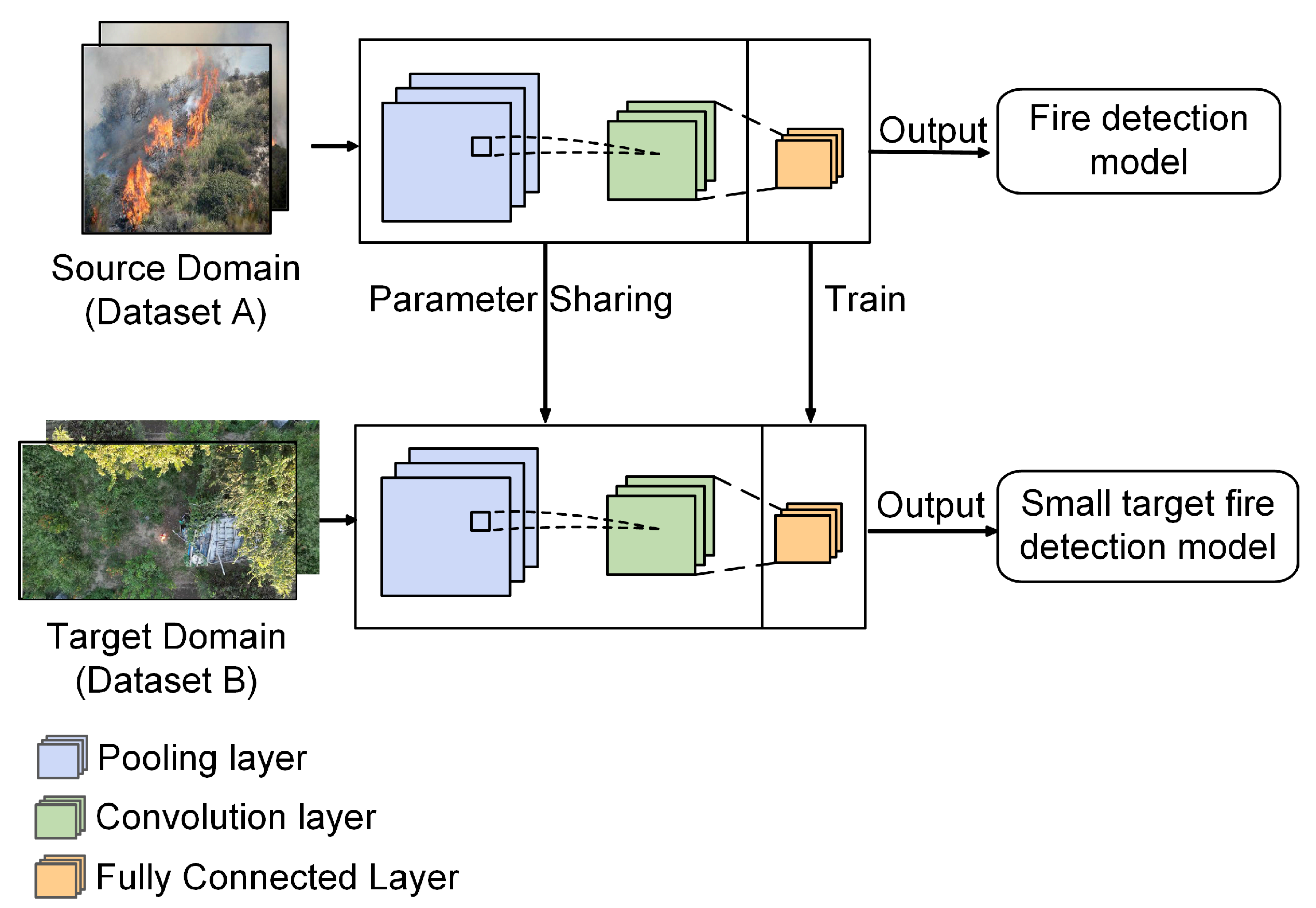

2.3. Transfer Learning

3. Materials and Methods

3.1. Fire Image Collection

3.2. Fire Detection Model

3.2.1. BiFPN Replacement of PANet
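
As a rough illustration of the weighted fusion that distinguishes BiFPN from PANet, the sketch below implements the fast normalized fusion rule of the original BiFPN design in plain Python: each input is scaled by a non-negative learnable weight, and the weighted sum is divided by the sum of the weights plus a small constant. It assumes the input features have already been resized to a common shape and flattened to lists. The function name `fast_normalized_fusion`, the sample values, and `eps = 1e-4` are illustrative, and whether the proposed model uses exactly this fusion variant is an assumption.

```python
from typing import List, Sequence


def fast_normalized_fusion(
    features: Sequence[Sequence[float]],
    weights: Sequence[float],
    eps: float = 1e-4,
) -> List[float]:
    """Fuse same-length feature vectors with non-negative, normalized weights."""
    if not features or len(features) != len(weights):
        raise ValueError("need one weight per input feature")
    length = len(features[0])
    if any(len(f) != length for f in features):
        raise ValueError("all features must share the same length")

    # ReLU keeps every weight non-negative, so the normalized weights lie in [0, 1].
    clipped = [max(0.0, w) for w in weights]
    total = sum(clipped) + eps  # eps avoids division by zero

    return [
        sum(w * f[i] for w, f in zip(clipped, features)) / total
        for i in range(length)
    ]


# Hypothetical example: fuse a top-down feature with a lateral feature.
top_down = [1.0, 2.0]
lateral = [3.0, 4.0]
fused = fast_normalized_fusion([top_down, lateral], weights=[1.0, 1.0])
print(fused)
```

With equal weights the result is close to the element-wise mean of the inputs. In a trained network the weights are learned, so more informative scales contribute more to the fused feature.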

3.2.2. Substitution of ELAN-W with C3

3.2.3. Incorporating SPD-Conv
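
The space-to-depth step at the core of SPD-Conv [46] can be sketched in a few lines. The example below is a minimal, framework-free illustration on a single-channel feature map stored as nested lists: it splits the map into `scale * scale` sub-maps by strided slicing, so the spatial resolution drops while every value is preserved. In SPD-Conv these sub-maps are concatenated along the channel axis and passed to a non-strided convolution, which is omitted here. The function name `space_to_depth`, the ordering of the sub-maps, and the 4 × 4 input are illustrative assumptions.

```python
from typing import List

FeatureMap = List[List[float]]


def space_to_depth(fmap: FeatureMap, scale: int = 2) -> List[FeatureMap]:
    """Split an H x W map into scale*scale sub-maps of size (H/scale) x (W/scale)."""
    height, width = len(fmap), len(fmap[0])
    if height % scale or width % scale:
        raise ValueError("height and width must be divisible by scale")

    # Sub-map (dy, dx) keeps the pixels at rows dy, dy+scale, ... and
    # columns dx, dx+scale, ...; no pixel is discarded.
    return [
        [row[dx::scale] for row in fmap[dy::scale]]
        for dy in range(scale)
        for dx in range(scale)
    ]


# Hypothetical 4 x 4 feature map with values 0..15.
fmap = [[float(4 * r + c) for c in range(4)] for r in range(4)]
for sub_map in space_to_depth(fmap):
    print(sub_map)
```

Unlike a stride-2 convolution or pooling layer, this downsampling drops no activations, which is the property that matters for targets only a few pixels wide.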

3.2.4. Improvement of the Loss Function
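
One way to attach a focal weight factor to the CIoU bounding box loss is sketched below. It assumes the weighting takes the form used for Focal-EIoU in [48], that is, the regression loss multiplied by IoU raised to a power `gamma`; whether the proposed model uses exactly this form is an assumption, and `gamma = 0.5` is only an illustrative value. Boxes are `(x1, y1, x2, y2)` tuples, and all function names are hypothetical.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def ciou_loss(pred: Box, gt: Box, eps: float = 1e-9) -> float:
    """CIoU loss: 1 - IoU + centre-distance penalty + aspect-ratio penalty."""
    overlap = iou(pred, gt)

    # Squared distance between box centres.
    rho2 = ((pred[0] + pred[2]) / 2 - (gt[0] + gt[2]) / 2) ** 2 + (
        (pred[1] + pred[3]) / 2 - (gt[1] + gt[3]) / 2
    ) ** 2

    # Squared diagonal of the smallest box enclosing both boxes.
    enc_w = max(pred[2], gt[2]) - min(pred[0], gt[0])
    enc_h = max(pred[3], gt[3]) - min(pred[1], gt[1])
    c2 = enc_w ** 2 + enc_h ** 2 + eps

    # Aspect-ratio consistency term v and its trade-off weight alpha.
    pw, ph = pred[2] - pred[0], pred[3] - pred[1]
    gw, gh = gt[2] - gt[0], gt[3] - gt[1]
    v = (4 / math.pi ** 2) * (math.atan(gw / (gh + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / ((1 - overlap) + v + eps)

    return 1 - overlap + rho2 / c2 + alpha * v


def focal_ciou_loss(pred: Box, gt: Box, gamma: float = 0.5) -> float:
    """CIoU loss re-weighted by IoU**gamma (assumed Focal-EIoU-style factor)."""
    return iou(pred, gt) ** gamma * ciou_loss(pred, gt)


# Hypothetical boxes with partial overlap.
pred_box, gt_box = (0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0)
print(ciou_loss(pred_box, gt_box), focal_ciou_loss(pred_box, gt_box))
```

Under this form, boxes with little overlap contribute less to the gradient than well-aligned ones, so the many low-quality candidate boxes do not dominate the regression loss.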

3.3. Transfer Learning in YOLOv7

3.4. Performance Comparisons

- YOLOv3: The third version of the YOLO algorithm for object detection employs multiscale prediction and feature pyramid networks to strike a balance between speed and precision [49].

- YOLOv5: Developed by Ultralytics, YOLOv5 comprises lightweight target detection algorithms characterized by modular design, automated model optimization, and rapid training techniques, emphasizing simplicity and flexibility [50].

- YOLOv7: The seventh version of the YOLO series boasts a deeper network structure and enhanced performance optimization, resulting in improved precision and speed in target detection [51].

- YOLOv8: This version incorporates advanced techniques such as model compression and attention mechanisms to further improve both precision and efficiency [52].

- YOLOv10: Enhanced feature extraction, optimized network architecture, and improved training techniques in YOLOv10 result in higher precision and faster inference speeds for real-time object detection [53].

- YOLOv11: This version demonstrates superior performance in computer vision tasks such as object detection, instance segmentation, pose estimation, and oriented object detection. It surpasses its predecessors in mean Average Precision (mAP) and computational efficiency while maintaining a balance between parameter count and accuracy [54].

4. Experiments and Results

4.1. Parameter Settings

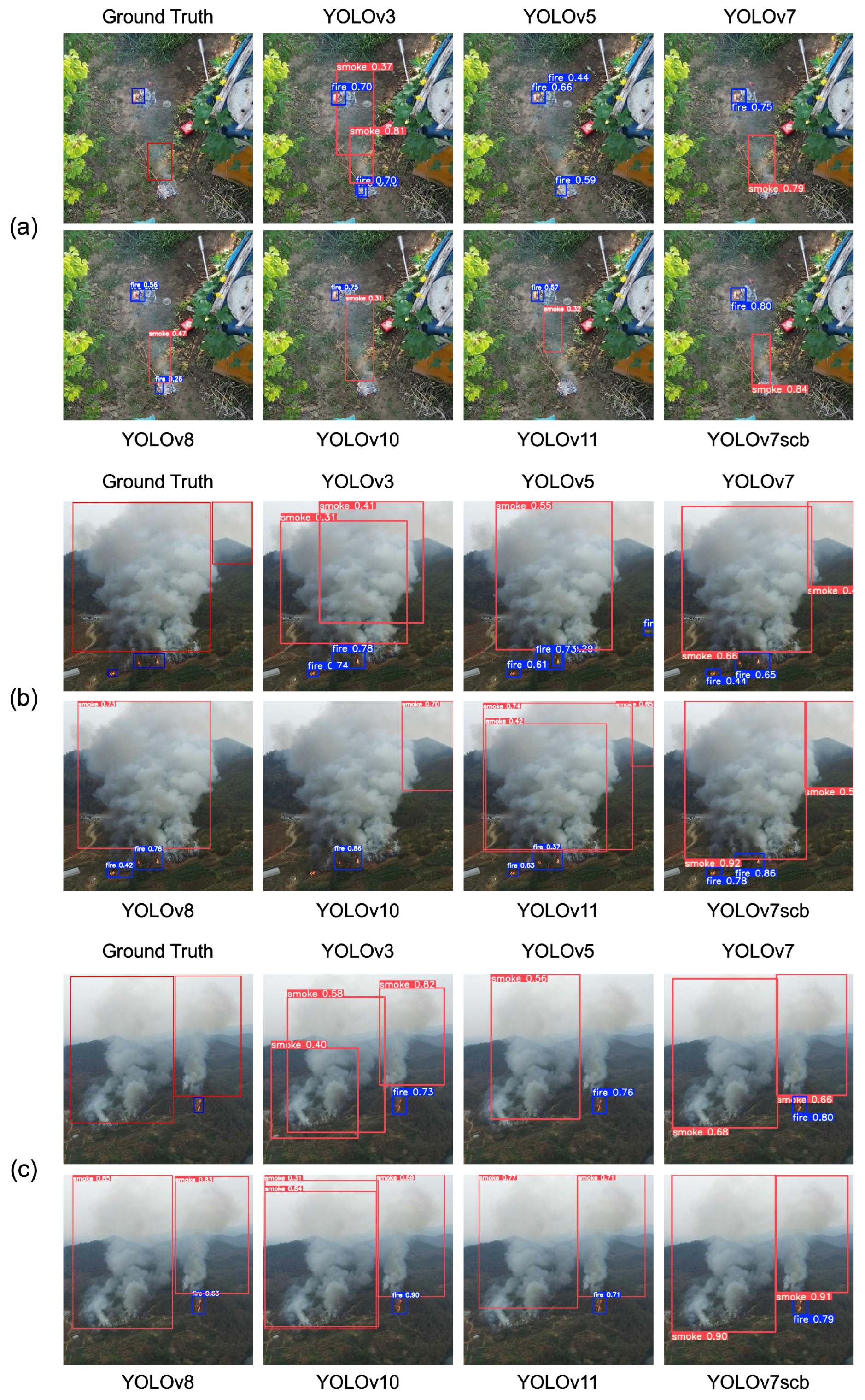

4.2. Performance Evaluation Against Different YOLO Models

4.3. Performance Evaluation Against Different Methods

4.4. Ablation Experiments

4.4.1. Comprehensive Performance Analysis on Combinatorial Modules

4.4.2. Comprehensive Performance Analysis With vs. Without Transfer Learning

4.4.3. Comprehensive Performance Analysis with Focal-CIoU

4.5. Practical Application Detection

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- China Central Television. National Fire and Rescue Administration: In 2023, More Than 2.13 Million Reports and Disposals of Various Types of Police Incidents Were Received and Handled; China Central Television: Beijing, China, 2024. Available online: https://www.119.gov.cn/qmxfxw/mtbd/spbd/2024/41284.shtml (accessed on 5 January 2024).

- Zhang, J.; Li, W.B.; Han, N.; Kan, J.M. Forest fire detection system based on a ZigBee wireless sensor network. Front. For. China 2008, 3, 369–374.

- Aslan, Y.E.; Korpeoglu, I.; Ulusoy, Ö. A framework for use of wireless sensor networks in forest fire detection and monitoring. Comput. Environ. Urban Syst. 2012, 36, 614–625.

- Wenning, B.L.; Pesch, D.; Timm-Giel, A.; Görg, C. Environmental monitoring aware routing: Making environmental sensor networks more robust. Telecommun. Syst. 2010, 43, 3–11.

- Zhu, Y.; Xie, L.; Yuan, T. Monitoring system for forest fire based on wireless sensor network. In Proceedings of the 10th World Congress on Intelligent Control and Automation, Beijing, China, 6–8 July 2012; pp. 4245–4248.

- Goyal, S.; Shagill, M.; Kaur, A.; Vohra, H.; Singh, A. A YOLO based technique for early forest fire detection. Int. J. Innov. Technol. Explor. Eng. 2020, 9, 1357–1362.

- Lu, Y.; Li, Y.; Song, F.; Zhang, Y.; Li, X.; Zheng, C.; Wang, Y. Development of a mid-infrared early fire detection system based on dual optical path nonresonant photoacoustic cell. Microw. Opt. Technol. Lett. 2024, 66, e33758.

- Truong, C.T.; Nguyen, T.H.; Vu, V.Q.; Do, V.H.; Nguyen, D.T. Enhancing fire detection technology: A UV-based system utilizing Fourier spectrum analysis for reliable and accurate fire detection. Appl. Sci. 2023, 13, 7845.

- Yu, J.; Jiang, X.; Zeng, Z.C.; Yung, Y.L. Fire monitoring and detection using brightness-temperature difference and water vapor emission from the atmospheric infrared sounder. J. Quant. Spectrosc. Radiat. Transf. 2024, 317, 108930.

- Zhang, S.; Gao, D.M.; Lin, H.F.; Sun, Q. Wildfire detection using sound spectrum analysis based on the internet of things. Sensors 2019, 19, 5093.

- Quinn, C.A.; Burns, P.; Jantz, P.; Salas, L.; Goetz, S.J.; Clark, M.L. Soundscape mapping: Understanding regional spatial and temporal patterns of soundscapes incorporating remotely-sensed predictors and wildfire disturbance. Environ. Res. 2024, 3, 025002.

- Damasevicius, R.; Qurthobi, A.; Maskeliunas, R. A Hybrid Machine Learning Model for Forest Wildfire Detection using Sounds. In Proceedings of the 2024 19th Conference on Computer Science and Intelligence Systems, Belgrade, Serbia, 8–11 September 2024; pp. 99–106.

- Li, X.; Liu, Y.; Zheng, L.; Zhang, W. A Lightweight Convolutional Spiking Neural Network for Fires Detection Based on Acoustics. Electronics 2024, 13, 2948.

- Thepade, S.D.; Dewan, J.H.; Pritam, D.; Chaturvedi, R. Fire detection system using color and flickering behaviour of fire with Kekre’s LUV color space. In Proceedings of the 2018 Fourth International Conference on Computing Communication Control and Automation, Pune, India, 16–18 August 2018; pp. 1–6.

- Chowdhury, N.; Mushfiq, D.R.; Chowdhury, A.E.; Chaturvedi, R. Computer vision and smoke sensor based fire detection system. In Proceedings of the 2019 1st International Conference on Advances in Science, Engineering and Robotics Technology (ICASERT), Dhaka, Bangladesh, 3–5 May 2019; pp. 1–5.

- Mei, J.J.; Zhang, W. Early Fire Detection Calculations Based on ViBe and Machine Learning. Acta Opt. Sin. 2018, 38, 0710001.

- Lu, C.; Lu, M.Q.; Lu, X.B.; Cai, M.; Feng, X.Q. Forest fire smoke recognition based on multiple feature fusion. IOP Conf. Ser. Mater. Sci. Eng. 2018, 435, 012006.

- Zhao, W.; Yu, F.F.; Fan, X.J.; Zhang, N.N. Fire Image Recognition Simulation of Unmanned Aerial Vehicle Forest Fire Protection System. Comput. Simul. 2018, 35, 459–464.

- Wang, Y.; Dang, L.; Ren, J. Forest fire image recognition based on convolutional neural network. J. Algorithms Comput. Technol. 2019, 13.

- Celik, T.; Demirel, H. Fire detection in video sequences using a generic color model. Fire Saf. J. 2009, 44, 147–158.

- Abdusalomov, A.; Baratov, N.; Kutlimuratov, A.; Whangbo, T.K. An improvement of the fire detection and classification method using YOLOv3 for surveillance systems. Sensors 2021, 21, 6519.

- Cheng, X.B.; Qiu, G.H.; Jiang, Y.; Zhu, Z.M. An improved small object detection method based on YoloV3. Pattern Anal. Appl. 2021, 24, 1347–1355.

- Qin, Y.Y.; Cao, J.T.; Ji, X.F. Fire detection method based on depthwise separable convolution and yolov3. Pattern Anal. Appl. 2021, 18, 300–310.

- Wu, H.; Hu, Y.; Wang, W.; Mei, X. Ship fire detection based on an improved YOLO algorithm with a lightweight convolutional neural network model. Sensors 2022, 22, 7420.

- Talaat, F.M.; ZainEldin, H. An improved fire detection approach based on YOLO-v8 for smart cities. Neural Comput. Appl. 2023, 35, 20939–20954.

- Akhmedov, F.; Nasimov, R.; Abdusalomov, A. Dehazing Algorithm Integration with YOLO-v10 for Ship Fire Detection. Fire 2024, 7, 332.

- Seydi, S.T.; Saeidi, V.; Kalantar, B.; Ueda, N.; Halin, A.A. Fire-Net: A Deep Learning Framework for Active Forest Fire Detection. Sustainability 2022, 2022, 8044390.

- Ren, S.Q.; He, K.M.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Zhou, X.Y.; Wang, D.Q.; Krähenbühl, P. Objects as Points. arXiv 2021, arXiv:1904.07850.

- Song, H.; Qu, X.J.; Yang, X.; Wan, F.J. Flame and smoke detection based on the improved YOLOv5. Comput. Eng. 2023, 49, 1000–3428.

- Titu, M.F.S.; Pavel, M.A.; Michael, G.K.O.; Babar, H.; Aman, U.; Khan, R. Real-Time Fire Detection: Integrating Lightweight Deep Learning Models on Drones with Edge Computing. Drones 2024, 8, 483.

- Sun, B.S.; Bi, K.Y.; Wang, Q.Y. YOLOv7-FIRE: A tiny-fire identification and detection method applied on UAV. AIMS Math. 2024, 9, 10775–10801.

- Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L. Aerial Imagery Pile burn detection using Deep Learning: The FLAME dataset. arXiv 2020, arXiv:2012.14036.

- Bryce, H.; Leo, O.; Fatemeh, A.; Abolfazl, R.; Eric, R.; Adam, W.; Peter, F.; Janice, C. FLAME 2: Fire detection and modeLing: Aerial Multi-spectral imagE dataset. IEEE Access 2022, 10, 121301–121317.

- Ding, X.H.; Zhang, X.Y.; Ma, N.N.; Han, J.G.; Ding, G.G.; Sun, J. RepVGG: Making VGG-style convNets great again. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13728–13737.

- Dewi, C.; Chen, A.P.S.; Christanto, H.J. Deep learning for highly accurate hand recognition based on YOLOv7 model. Big Data Cogn. Comput. 2023, 7, 53.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Hu, Y.Y.; Wu, X.J.; Zheng, G.D.; Liu, X.F. Object detection of UAV for anti-UAV based on improved YOLOv3. In Proceedings of the 2019 Chinese Control Conference, Guangzhou, China, 27–30 July 2019; pp. 8386–8390.

- Song, L.; Yasenjiang, M.S. Detection of X-ray prohibited items based on improved YOLOv7. In Proceedings of the 2023 8th International Conference on Intelligent Computing and Signal Processing, Xian, China, 21–23 April 2023; pp. 1874–1877.

- Fan, Y.X.; Tohti, G.; Geni, M.; Zhang, G.H.; Yang, J.Y. A marigold corolla detection model based on the improved YOLOv7 lightweight. Signal Image Video Process. 2024, 18, 4703–4712.

- Guo, G.; Yuan, W. Short-term traffic speed forecasting based on graph attention temporal convolutional networks. Neurocomputing 2020, 410, 387–393.

- Zhuang, F.Z.; Qi, Z.Y.; Duan, K.Y.; Xi, D.B.; Zhu, Y.C.; Zhu, H.S.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2021, 109, 43–76.

- Azi, M.; Yanikoglu, B.; Aptoula, E. Plant identification using deep neural networks via optimization of transfer learning parameters. Neurocomputing 2021, 235, 228–235.

- Jiang, X.D. Feature extraction for image recognition and computer vision. In Proceedings of the 2009 2nd IEEE International Conference on Computer Science and Information Technology, Beijing, China, 8–11 August 2009; pp. 1–15.

- Agostino, A. Veryfiresmokedetection Dataset. Roboflow Universe. Available online: https://universe.roboflow.com/agostino-abbatecola-52ty4/veryfiresmokedetection (accessed on 30 November 2023).

- Sunkara, R.; Luo, T. No more strided convolutions or pooling: A new CNN building block for low-resolution images and small objects. arXiv 2022, arXiv:2208.03641.

- Chen, J.; Mai, H.S.; Luo, L.B.; Chen, X.Q.; Wu, K. Effective feature fusion network in BIFPN for small object detection. In Proceedings of the 2021 IEEE International Conference on Image Processing, Anchorage, AK, USA, 19–22 September 2021; pp. 699–703.

- Zhang, Y.F.; Ren, W.Q.; Zhang, Z.; Jia, Z.; Wang, L.; Tan, T. Focal and efficient IOU loss for accurate bounding box regression. Neurocomputing 2022, 506, 146–157.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Lui, M.S.; Utaminingrum, F. A comparative study of YOLOv5 models on American Sign Language dataset. In Proceedings of the 7th International Conference on Sustainable Information Engineering and Technology, Malang, Indonesia, 22–23 November 2022; pp. 3–7.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the International Conference on Advances in Data Engineering and Intelligent Computing Systems, Chennai, India, 18–19 April 2024; pp. 1–6.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. Yolov10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458.

- Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

| Classification | Number of Images | Proportion |
|---|---|---|
| Wilderness fires | 3961 | 48% |
| Residential habitat fires | 3205 | 39% |
| Factory fires | 500 | 6% |
| Roadway fires | 549 | 7% |

| Dataset | Training Set | Validation Set | Testing Set |
|---|---|---|---|
| Dataset A | 5172 | 1478 | 739 |
| Dataset B | 578 | 165 | 83 |
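
The counts in the two dataset tables above can be cross-checked with a few lines of arithmetic. The sketch below copies the numbers from the tables; the variable and function names are illustrative. The split ratio is not stated alongside the tables, so the roughly 7:2:1 division it reports is an observation from the counts rather than a documented setting. The same applies to the match between the scene-classification total and the combined size of Dataset A and Dataset B.

```python
# Image counts copied from the dataset tables in this article.
scene_counts = {
    "Wilderness fires": 3961,
    "Residential habitat fires": 3205,
    "Factory fires": 500,
    "Roadway fires": 549,
}
splits = {
    "Dataset A": {"train": 5172, "val": 1478, "test": 739},
    "Dataset B": {"train": 578, "val": 165, "test": 83},
}


def split_ratios(counts):
    """Return each subset's share of its dataset, rounded to two decimals."""
    total = sum(counts.values())
    return {name: round(n / total, 2) for name, n in counts.items()}


total_by_scene = sum(scene_counts.values())
total_by_split = sum(sum(c.values()) for c in splits.values())

for name, counts in splits.items():
    print(name, sum(counts.values()), split_ratios(counts))
print("scene total:", total_by_scene, "split total:", total_by_split)
```

Both datasets come out at about 70% training, 20% validation, and 10% testing, and the two totals agree at 8215 images.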

| Parameter | Fire and Smoke Model (Dataset A) | Small Fire and Smoke Target Model (Dataset B) |
|---|---|---|
| Epochs | 300 | 250 |
| Batch size | 16 | 16 |
| Image size (pixels) | 640 × 640 | 640 × 640 |
| Initial learning rate | 0.01 | 0.01 |

| Dataset | Model | Precision Fire (%) | Precision Smoke (%) | Precision All (%) | Recall Fire (%) | Recall Smoke (%) | Recall All (%) | mAP@.5 Fire (%) | mAP@.5 Smoke (%) | mAP@.5 All (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Dataset A | YOLOv3 | 79.1 | 81.8 | 80.4 | 70.1 | 68.2 | 69.2 | 75.7 | 75.8 | 75.8 |
| Dataset A | YOLOv5 | 87.8 | 86.2 | 87.5 | 84.6 | 83.5 | 84.1 | 87.3 | 85.5 | 86.4 |
| Dataset A | YOLOv7 | 87.4 | 86.4 | 86.9 | 83.1 | 81.3 | 82.2 | 87.4 | 85.2 | 86.3 |
| Dataset A | YOLOv8 | 85.5 | 85.5 | 85.5 | 69.5 | 70.5 | 70.0 | 78.8 | 81.8 | 80.3 |
| Dataset A | YOLOv10 | 89.2 | 87.0 | 88.1 | 81.5 | 81.1 | 81.3 | 88.0 | 87.2 | 87.6 |
| Dataset A | YOLOv11 | 89.3 | 89.7 | 89.5 | 82.9 | 85.5 | 84.1 | 87.3 | 89.6 | 88.8 |
| Dataset A | YOLOv7std | 91.6 | 91.1 | 91.4 | 84.6 | 83.5 | 84.2 | 88.1 | 85.5 | 85.9 |
| Dataset B | YOLOv3 | 90.6 | 83.8 | 87.2 | 92.3 | 83.3 | 87.8 | 92.3 | 83.0 | 87.6 |
| Dataset B | YOLOv5 | 94.0 | 83.5 | 88.8 | 94.7 | 78.9 | 86.8 | 96.8 | 83.1 | 89.9 |
| Dataset B | YOLOv7 | 93.3 | 85.7 | 89.5 | 90.4 | 73.3 | 81.8 | 93.7 | 79.3 | 86.5 |
| Dataset B | YOLOv8 | 93.2 | 86.1 | 89.7 | 87.2 | 80.0 | 83.6 | 92.9 | 85.1 | 89.0 |
| Dataset B | YOLOv10 | 88.4 | 76.8 | 82.6 | 89.0 | 80.9 | 85.0 | 83.1 | 91.8 | 87.4 |
| Dataset B | YOLOv11 | 96.5 | 82.8 | 89.6 | 87.2 | 80.0 | 83.6 | 96.5 | 84.5 | 90.2 |
| Dataset B | YOLOv7std | 98.8 | 90.6 | 94.7 | 96.8 | 82.2 | 89.5 | 98.4 | 82.1 | 90.5 |

| Dataset | Model | Precision Fire (%) | Precision Smoke (%) | Precision All (%) | Recall Fire (%) | Recall Smoke (%) | Recall All (%) | mAP@.5 Fire (%) | mAP@.5 Smoke (%) | mAP@.5 All (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Dataset A | [30] | 90.3 | 88.8 | 89.6 | 84.0 | 82.3 | 83.2 | 88.2 | 86.1 | 87.2 |
| Dataset A | [28] | 64.1 | 62.7 | 63.4 | 61.7 | 68.1 | 64.9 | 76.4 | 75.8 | 77.6 |
| Dataset A | [29] | 63.6 | 74.3 | 68.9 | 61.3 | 77.0 | 69.2 | 63.6 | 74.3 | 68.9 |
| Dataset A | YOLOv7std | 91.6 | 91.1 | 91.4 | 84.6 | 83.5 | 84.2 | 88.1 | 85.5 | 85.9 |
| Dataset B | [30] | 90.8 | 82.8 | 86.8 | 88.3 | 80.0 | 84.1 | 92.2 | 82.7 | 87.5 |
| Dataset B | [28] | 50.9 | 55.7 | 53.3 | 55.2 | 55.1 | 55.2 | 87.4 | 81.0 | 84.2 |
| Dataset B | [29] | 68.3 | 76.9 | 72.6 | 58.6 | 70.0 | 64.3 | 68.3 | 77.0 | 72.5 |
| Dataset B | YOLOv7std | 98.8 | 90.6 | 94.7 | 96.8 | 82.2 | 89.5 | 98.4 | 82.1 | 90.5 |

| Dataset | Model | Precision Fire (%) | Precision Smoke (%) | Precision All (%) | Recall Fire (%) | Recall Smoke (%) | Recall All (%) | mAP@.5 Fire (%) | mAP@.5 Smoke (%) | mAP@.5 All (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Dataset A | YOLOv7 | 87.4 | 86.4 | 86.9 | 83.1 | 81.3 | 82.2 | 87.4 | 85.2 | 86.3 |
| Dataset A | YOLOv7+C3 | 87.1 | 85.6 | 86.8 | 82.7 | 80.9 | 81.8 | 87.5 | 85.3 | 86.4 |
| Dataset A | YOLOv7+BiFPN | 87.7 | 86.4 | 87.5 | 82.4 | 80.7 | 81.5 | 87.6 | 84.0 | 85.8 |
| Dataset A | YOLOv7+SPD-Conv | 87.4 | 86.5 | 87.0 | 81.6 | 81.6 | 81.6 | 86.9 | 85.4 | 86.4 |
| Dataset A | YOLOv7+BiFPN+C3 | 87.7 | 88.2 | 88.0 | 69.5 | 70.5 | 80.0 | 78.8 | 81.8 | 86.2 |
| Dataset A | YOLOv7+SPD-Conv+C3 | 87.9 | 87.4 | 87.6 | 81.4 | 81.5 | 81.5 | 87.3 | 85.5 | 86.4 |
| Dataset A | YOLOv7+BiFPN+SPD-Conv | 88.5 | 88.7 | 88.6 | 81.4 | 81.5 | 81.5 | 87.2 | 85.5 | 86.3 |
| Dataset A | YOLOv7std | 91.6 | 91.1 | 91.4 | 84.6 | 83.5 | 84.2 | 88.1 | 85.5 | 85.9 |
| Dataset B | YOLOv7 | 93.3 | 85.7 | 89.5 | 90.4 | 73.3 | 81.8 | 93.7 | 79.3 | 86.5 |
| Dataset B | YOLOv7+C3 | 95.0 | 86.9 | 90.9 | 90.4 | 81.0 | 85.7 | 95.2 | 83.6 | 89.0 |
| Dataset B | YOLOv7+BiFPN | 89.6 | 74.7 | 82.2 | 91.4 | 78.8 | 85.1 | 93.5 | 77.3 | 85.4 |
| Dataset B | YOLOv7+SPD-Conv | 91.2 | 86.0 | 88.6 | 88.3 | 81.7 | 85.0 | 93.4 | 82.5 | 88.0 |
| Dataset B | YOLOv7+BiFPN+C3 | 90.1 | 86.6 | 88.3 | 87.2 | 80.0 | 85.3 | 92.9 | 85.1 | 87.0 |
| Dataset B | YOLOv7+SPD-Conv+C3 | 91.0 | 86.2 | 88.6 | 86.2 | 80.0 | 83.1 | 89.8 | 83.8 | 86.8 |
| Dataset B | YOLOv7+BiFPN+SPD-Conv | 90.7 | 77.6 | 84.2 | 93.5 | 81.1 | 87.3 | 97.0 | 80.0 | 88.5 |
| Dataset B | YOLOv7std | 98.8 | 90.6 | 94.7 | 96.8 | 82.2 | 89.5 | 98.4 | 82.1 | 90.5 |

| Dataset | Training Strategy | Precision Fire (%) | Precision Smoke (%) | Precision All (%) | Recall Fire (%) | Recall Smoke (%) | Recall All (%) | mAP@.5 Fire (%) | mAP@.5 Smoke (%) | mAP@.5 All (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Dataset B | Transfer Learning | 98.8 | 90.6 | 94.7 | 96.8 | 82.2 | 89.5 | 98.4 | 82.1 | 90.5 |
| Dataset B | Non-Transfer Learning | 98.7 | 80.2 | 89.5 | 85.1 | 77.8 | 81.4 | 96.3 | 75.6 | 85.9 |

| Dataset | Loss Function | Precision Fire (%) | Precision Smoke (%) | Precision All (%) | Recall Fire (%) | Recall Smoke (%) | Recall All (%) | mAP@.5 Fire (%) | mAP@.5 Smoke (%) | mAP@.5 All (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Dataset A | CIoU | 89.7 | 90.2 | 89.9 | 80.3 | 78.7 | 79.5 | 86.9 | 85.0 | 86.0 |
| Dataset A | Focal-CIoU | 91.6 | 91.1 | 91.4 | 84.6 | 83.5 | 84.2 | 88.1 | 85.5 | 85.9 |
| Dataset B | CIoU | 94.5 | 85.7 | 90.1 | 92.6 | 79.7 | 86.1 | 98.4 | 81.0 | 89.7 |
| Dataset B | Focal-CIoU | 98.8 | 90.6 | 94.7 | 96.8 | 82.2 | 89.5 | 98.4 | 82.1 | 90.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shao, D.; Liu, Y.; Liu, G.; Wang, N.; Chen, P.; Yu, J.; Liang, G. YOLOv7scb: A Small-Target Object Detection Method for Fire Smoke Inspection. Fire 2025, 8, 62. https://doi.org/10.3390/fire8020062