Highlights

What are the main findings?

- A proximal policy optimization (PPO)-based semantic broadcast model that supports collective perception with significantly improved communication efficiency.

- A multi-agent proximal policy optimization (MAPPO)-based decentralized adaptive formation (DecAF) framework, incorporating the semantic broadcast communication (SemanticBC) mechanism.

What is the implication of the main finding?

- The proposed solution significantly improves global perception and formation efficiency compared with methods that rely on local observations.

- The proposed framework improves scalability and robustness in large UAV fleets by enabling efficient global communication, adaptive formation, and obstacle avoidance with minimal communication overhead.

Abstract

Multiple unmanned aerial vehicle (UAV) systems have attracted considerable research interest due to their broad applications, such as formation control. However, decentralized UAV formation faces two challenges: limited local observations, which may lead to consistency conflicts, and excessive communication. To address these issues, this paper proposes SemanticBC-DecAF, a decentralized adaptive formation (DecAF) framework under a leader–follower architecture, incorporating a semantic broadcast communication (SemanticBC) mechanism. The framework consists of three modules: (1) a proximal policy optimization (PPO)-based semantic broadcast module, where the leader UAV transmits semantically encoded global obstacle images to followers to enhance their perception; (2) a YOLOv5-based detection and position estimation module, enabling followers to infer obstacle locations from recovered images; and (3) a multi-agent proximal policy optimization (MAPPO)-based formation module, which fuses global and local observations to achieve adaptive formation and obstacle avoidance. Experiments in the multi-agent simulation environment MPE show that the proposed framework significantly improves global perception and formation efficiency compared with methods that rely on local observations.

1. Introduction

With the advancement of AI technologies and the enhancement of single-machine processing capabilities, increasingly complex modeling of unmanned aerial vehicle (UAV) swarm formation systems has motivated the adoption of multi-agent reinforcement learning (MARL) methods for formation control [1,2]. By equipping UAVs with high-resolution cameras and integrating resource optimization algorithms, more intelligent local decision-making can be achieved. Meanwhile, UAVs equipped with high-resolution image sensors possess strong target detection and recognition capabilities, enabling them to efficiently accomplish representative tasks such as urban inspection and disaster identification [3,4]. Most decentralized MARL-based formation strategies are developed under the centralized training and decentralized execution (CTDE) paradigm [5], where real-time interaction data or historical trajectories between agents and the environment are used as control inputs. During the training phase, the parameters of deep neural networks are continuously optimized to derive control strategies that satisfy task requirements. In the testing and deployment phases, these strategies are applied to UAV swarms for executing tasks such as target tracking, autonomous obstacle avoidance, and cooperative operations [6].

However, as the number of UAVs and the overall system scale increase, the convergence and stability of such approaches face significant challenges. Moreover, during decentralized execution, agents often rely on local observations for decision-making, lacking effective awareness of the global system state. Independent agents may also generate inconsistent perceptions of obstacles due to variations in observation perspectives, which can result in conflicts in decision consistency. Within partially observable Markov decision processes (POMDPs) [5,7], such inconsistencies in local perception are further exacerbated, making it difficult for large-scale UAV swarms to efficiently accomplish collaborative tasks [8], such as autonomous obstacle avoidance.

In this regard, several studies [9,10] have proposed introducing communication modules into multi-agent systems (MAS), whereby intelligent policies are employed to regulate the content (what), timing (when), and receivers (who) of inter-agent information exchange. Such mechanisms accelerate model training and substantially enhance the efficiency and robustness of cooperative task execution. However, communication in these approaches often relies on high-dimensional latent vectors generated by neural networks, which are inherently difficult to interpret. Moreover, despite consuming considerable computational and communication resources, these methods still struggle to guarantee coordination efficiency in task execution. In UAV swarm formation tasks, agents must continuously exchange critical state information such as position, velocity, and flight status with their neighbors. In scenarios involving obstacles, agents are further required to rapidly and accurately perceive the environment and share such information to ensure effective obstacle avoidance and cooperative control. Nevertheless, frequent information exchange inevitably increases the communication load [8,11], imposing stricter requirements on bandwidth and real-time responsiveness. At the same time, UAVs are physically constrained by limited size and onboard energy, making it imperative to minimize redundant communication. Therefore, the design of a communication mechanism that is both efficient and capable of expressing critical perceptual information is essential to enhancing the overall performance of UAV swarms. Moreover, real-world deployment also requires attention to protocol standardization, sensor interoperability, and robustness under constraints [12], as well as recent advances in formation control for heterogeneous multi-agent systems based on consensus approaches [13].

To this end, this paper introduces a reinforcement learning (RL)-based semantic broadcast communication (SemanticBC) framework into UAV swarm scenarios to address adaptive formation tasks in environments with randomly distributed static obstacles. With this framework, the UAV swarm not only avoids obstacles autonomously during flight but also adapts its formation to the swarm size after reaching the target area. Specifically, the proposed framework is built upon a leader–follower architecture, in which high-altitude leader UAVs capture real-time high-resolution aerial images of the area where the low-altitude followers operate. This architecture enhances the accuracy and robustness of image reconstruction at the receivers. After preprocessing and semantic encoding of the captured images, task-relevant semantic features are extracted and broadcast to the followers via a semantic broadcast communication mechanism based on proximal policy optimization (PPO) [14], enabling task-oriented semantic transmission tailored to the receivers’ requirements. Using the pre-trained semantic broadcast model, each follower that receives the semantically encoded image employs a semantic decoder to reconstruct the semantic content and then invokes a pre-trained object detection and localization module to extract the positions of all obstacles within its operational region. The obtained obstacle information serves as an auxiliary environmental observation which, combined with the UAVs’ own local observations, is fed into the decentralized formation policy to realize adaptive autonomous obstacle avoidance and formation control. In the proposed scheme, semantic broadcast communication exploits the knowledge base shared by the transmitter and receivers, transmitting only the key semantic features of the images that are directly relevant to the task (e.g., obstacle positions) while eliminating redundant background content.
This design improves communication efficiency without compromising task performance. Furthermore, semantic broadcast communication significantly enhances the swarm’s awareness of the global distribution of obstacles, thereby mitigating the consistency conflicts that may arise from partial and heterogeneous local observations. As a result, the swarm exhibits improved cooperative capability and task responsiveness in dynamic environments.

In brief, the contributions of this paper can be summarized as follows:

- To address the consistency conflicts caused by limited local observations in decentralized UAV swarm formation and the loss of communication efficiency due to redundant transmissions, this paper proposes SemanticBC-DecAF, a decentralized adaptive formation (DecAF) scheme based on a leader–follower architecture and incorporating SemanticBC. By leveraging the task-oriented nature of semantic communication, the proposed scheme enhances the swarm’s capability for autonomous obstacle avoidance and adaptive formation in complex environments.

- To enhance the efficiency of information transmission, the proposed semantic broadcast communication framework encodes obstacle-containing images at the transmitter (leader) and broadcasts them to the receivers (followers), ensuring the effective delivery of critical information while significantly reducing communication overhead. In this framework, the shared encoder is optimized with the proximal policy optimization (PPO) algorithm, in alternation with local updates of the multiple decoders.

- To alleviate the consistency conflicts in obstacle perception that may arise from discrepancies in local observations among swarm agents, a pre-trained YOLOv5-based object detection and localization module is deployed on the receiver UAVs. Using the images reconstructed by the semantic decoder as input, this module enables precise extraction of global obstacle position information. Subsequently, the predicted global obstacle positions are combined with each UAV’s local observations and jointly fed into the formation control module, which is built upon multi-agent proximal policy optimization (MAPPO), thereby guiding the UAVs to accomplish autonomous obstacle avoidance and adaptive formation tasks.

- Finally, the proposed scheme is implemented and evaluated in the multi-agent simulation environment MPE [15]. Experimental results demonstrate that, compared with conventional communication methods and with semantic broadcast communication based on joint source–channel coding (JSCC), SemanticBC-DecAF achieves higher semantic image reconstruction accuracy and better adaptability to varying channel conditions. Moreover, relative to the traditional DecAF scheme that relies on local observations for obstacle avoidance and formation, integrating semantic broadcast communication not only significantly improves the decision-making efficiency of autonomous obstacle avoidance but also speeds up the completion of adaptive formation to some extent.

The remainder of this paper is organized as follows: Section 2 provides a detailed description of the task and system model. Section 3 presents the implementation details of the proposed SemanticBC-DecAF scheme, including the PPO-driven semantic broadcast communication module, the obstacle recognition and localization module, and the adaptive formation module for UAV swarms incorporating semantic image information. Section 4 presents the experimental results and numerical analyses. Finally, Section 5 concludes this paper.

2. Task Description and System Model

2.1. Task Description

As illustrated in Figure 1, this paper considers a decentralized UAV swarm tasked with adaptive formation, consisting of one high-altitude UAV (the leader) and a group of low-altitude UAVs (the followers). A number of obstacles are randomly distributed within the operational area of the swarm. The swarm must use both local observations and semantic broadcast communication to acquire obstacle information, autonomously avoid obstacles while moving toward the target point, and perform formation coverage at the target to accomplish specific tasks. Using its onboard camera, the leader captures a high-resolution aerial image of the region in which the low-altitude followers operate. The image is then semantically encoded to remove redundant background content and extract obstacle boundary information, and the encoded symbols are broadcast to the low-altitude followers. Each follower restores the image through its local decoder model and uses an object detection and localization module to predict the obstacle positions. Based on these predictions together with their local observations, the followers execute the formation control task.

Figure 1.

Illustration of a UAV swarm collaboration scenario with semantic broadcast communication.

Furthermore, for simplicity, we assume that the UAV swarm operates on the same horizontal plane. As shown in Figure 2, at time step t, some UAVs may temporarily detach from the swarm due to communication loss or mobility during flight [8]. Therefore, the formation pattern must be adjusted dynamically according to the current swarm size to ensure stable coordination and reliable task execution.

Figure 2.

Formation patterns with different numbers of follower UAVs.

2.2. System Model

The overall system model is divided into three components: the semantic broadcast communication module, the object detection and localization module, and the adaptive formation module. As illustrated in Figure 3, the system can be modeled as one shared transmitter (the leader) and multiple receivers (the followers). After the leader UAV acquires an aerial image of the region where the follower UAVs operate, a preprocessing module converts the image into a sparse semantic image. This module includes OpenCV-based image processing operations and a mask extraction process. Filtering out the background via the mask and retaining only the obstacle regions yields a sparse target image with a white background, which provides an effective input for semantic encoding. The preprocessed image is then fed into the semantic encoder, which comprises a source encoder and a channel encoder. The specific network architecture and training details are presented in Section 3. Finally, to enable transmission of the encoded image over the physical channel, a quantization module maps the encoded representation to quantized values, which are then fed into the channel; here, B denotes the number of quantization bits.

Figure 3.

System model.
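The masking and quantization steps can be illustrated with a minimal sketch. It assumes that pixel intensities and encoder outputs are normalized to [0, 1] and that a uniform B-bit quantizer is used; the paper does not state its quantization rule, so `apply_obstacle_mask`, `quantize`, and `dequantize` are hypothetical helpers.

```python
# Minimal sketch (assumptions: intensities and encoder outputs lie in [0, 1];
# the uniform quantizer and helper names are illustrative, not the paper's).


def apply_obstacle_mask(image, mask):
    """Keep obstacle pixels (mask == 1) and paint the background white (1.0)."""
    return [px if m else 1.0 for px, m in zip(image, mask)]


def quantize(values, bits):
    """Map each value in [0, 1] to one of 2**bits integer levels."""
    levels = 2 ** bits - 1
    return [round(min(max(v, 0.0), 1.0) * levels) for v in values]


def dequantize(codes, bits):
    """Map integer levels back to [0, 1] (the receiver-side inverse)."""
    levels = 2 ** bits - 1
    return [c / levels for c in codes]


image = [0.2, 0.9, 0.15, 0.8]   # flattened toy "aerial image"
mask = [1, 0, 1, 0]             # 1 = obstacle pixel, 0 = background
sparse = apply_obstacle_mask(image, mask)
codes = quantize(sparse, bits=4)
recovered = dequantize(codes, bits=4)
```

With B = 4, each value becomes one of 16 levels, so the de-quantized value differs from the original by at most half a quantization step (1/30).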

At the receiver side, the message received by follower UAV $n$ is the quantized signal after it passes through the channel, which is typically modeled as either an additive white Gaussian noise (AWGN) channel or a Rayleigh fading channel, with a noise vector following a Gaussian distribution.
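The two channel models can be simulated with the sketch below. It assumes real-valued transmitted symbols, a noise variance derived from the average transmit power and a target SNR in dB, and a single block-fading gain for the Rayleigh case; the function name `transmit` is hypothetical, and receiver-side equalization (dividing by a known gain) is not shown.

```python
import math
import random


def transmit(symbols, snr_db, fading="awgn", rng=None):
    """Pass real-valued symbols through an AWGN or flat Rayleigh fading channel.

    The noise variance is set from the average transmit power and the target
    SNR in dB. For "rayleigh", a single block-fading gain |h| is drawn, where h
    is a unit-power complex Gaussian, so |h| is Rayleigh distributed.
    Returns the received symbols and the gain applied.
    """
    if rng is None:
        rng = random.Random()
    power = sum(s * s for s in symbols) / len(symbols)
    noise_std = math.sqrt(power / 10 ** (snr_db / 10))
    if fading == "rayleigh":
        h = math.hypot(rng.gauss(0.0, math.sqrt(0.5)), rng.gauss(0.0, math.sqrt(0.5)))
    elif fading == "awgn":
        h = 1.0
    else:
        raise ValueError(f"unknown channel model: {fading}")
    received = [h * s + rng.gauss(0.0, noise_std) for s in symbols]
    return received, h


rng = random.Random(0)
rx, gain = transmit([1.0] * 20000, snr_db=0.0, rng=rng)
noise_var = sum((r - 1.0) ** 2 for r in rx) / len(rx)  # close to 1.0 at 0 dB
```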

Similarly, at receiver $n$, a de-quantization layer and a decoding layer perform the inverse process to produce the decoded image signal.

The purpose of semantic broadcast communication is to enable the followers to estimate the global positions of obstacles. To improve the accuracy of this position estimation, the decoder at each receiver aims to maximize the semantic accuracy of image reconstruction. In this paper, semantic accuracy is measured by the peak signal-to-noise ratio (PSNR) or the structural similarity index measure (SSIM) [16].
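For reference, PSNR can be computed as below; this is the standard definition, written here for flattened images with intensities in [0, 1] (the `max_value` default is an assumption about normalization). SSIM involves local means, variances, and covariances and is omitted for brevity.

```python
import math


def psnr(reference, reconstructed, max_value=1.0):
    """Peak signal-to-noise ratio (dB) between two equally sized pixel lists."""
    if len(reference) != len(reconstructed):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(reference, reconstructed)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


print(psnr([1.0, 1.0, 1.0, 1.0], [0.9, 0.9, 0.9, 0.9]))  # about 20 dB
```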

After the reconstructed semantic image is obtained, follower UAV $n$ feeds it into a YOLOv5-based object detection and localization module to identify obstacles within the swarm’s operational area and predict their actual position coordinates through position mapping. Since the goal of position estimation is to determine the actual obstacle locations accurately, this module can be fine-tuned separately on collected datasets (the detailed procedure is given in Section 3.2).

Furthermore, since this paper does not focus primarily on the design of UAV swarm formation strategies, we adopt the consensus inference-based hierarchical reinforcement learning (CI-HRL) method [8] as the baseline formation controller and integrate semantic broadcast communication into it to enhance the perception and decision-making performance of multi-agent cooperative tasks. Specifically, the formation module is modeled as a decentralized partially observable Markov decision process (Dec-POMDP). At time step $t$, the position and velocity of UAV $n$ are denoted by $\mathbf{p}_n^t$ and $\mathbf{v}_n^t$, respectively. The neighbor set of UAV $n$ is defined by the Euclidean distance condition $\mathcal{N}_n^t = \{ m \neq n : \lVert \mathbf{p}_m^t - \mathbf{p}_n^t \rVert \le d_{\text{obs}} \}$, where $d_{\text{obs}}$ represents the maximum observation range. The relative position and velocity of a neighbor $m \in \mathcal{N}_n^t$ with respect to UAV $n$ are $\mathbf{p}_m^t - \mathbf{p}_n^t$ and $\mathbf{v}_m^t - \mathbf{v}_n^t$, respectively. The key components of the Dec-POMDP formulation, namely the state, action, reward function, and objective function, are described as follows:

- State: At time step $t$, the local state of agent $n$ consists of its local observation and the estimated global obstacle positions. The local observation includes the states of its neighbors (their relative positions and velocities) and the relative position of agent $n$ with respect to the target. The global obstacle position information is estimated by the object detection and localization module.

- Action: We assume that the UAV swarm flies at a fixed speed and altitude on the same horizontal plane. Based on its local state, the formation and obstacle-avoidance policy outputs the acceleration of agent $n$ as its action.

- Reward: The lower-level formation control reward consists mainly of a formation reward, a target navigation reward, and an obstacle avoidance reward, whose details are given in [8]. The overall reward function combines these three terms.
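The neighbor set and the local state described above can be assembled as in the following sketch. It assumes 2D positions and velocities, an observation range passed as a hypothetical parameter `d_obs`, and estimated obstacle positions appended as global coordinates; the feature ordering is illustrative rather than the layout used by CI-HRL. Note that the number of neighbors, and hence the state length, varies over time.

```python
import math


def neighbor_set(positions, n, d_obs):
    """Indices m != n whose Euclidean distance to UAV n is at most d_obs."""
    px, py = positions[n]
    return [
        m for m, (x, y) in enumerate(positions)
        if m != n and math.hypot(x - px, y - py) <= d_obs
    ]


def local_state(positions, velocities, n, target, obstacles_hat, d_obs):
    """Flatten neighbor, target, and estimated-obstacle features for UAV n."""
    px, py = positions[n]
    vx, vy = velocities[n]
    feats = []
    for m in neighbor_set(positions, n, d_obs):
        mx, my = positions[m]
        mvx, mvy = velocities[m]
        feats += [mx - px, my - py, mvx - vx, mvy - vy]  # relative pos/vel
    feats += [target[0] - px, target[1] - py]            # relative target
    for ox, oy in obstacles_hat:                          # global estimates
        feats += [ox, oy]
    return feats


positions = [(0.0, 0.0), (1.0, 0.0), (5.0, 0.0)]
velocities = [(0.1, 0.0), (0.1, 0.0), (0.0, 0.0)]
state = local_state(positions, velocities, 0, (2.0, 2.0), [(0.5, 0.5)], d_obs=2.0)
# -> [1.0, 0.0, 0.0, 0.0, 2.0, 2.0, 0.5, 0.5]
```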

Accordingly, the objective of the decentralized formation module is to maximize the expected cumulative discounted reward. The optimization objectives of the proposed SemanticBC-DecAF scheme can thus be delineated along two dimensions: (i) optimizing the semantic similarity metric in Equation (4) to improve the quality of images transmitted by the semantic broadcast communication module; and (ii) employing the object detection and position estimation module to infer obstacle locations accurately from the decoded images, thereby reinforcing the agents’ environmental perception and improving formation efficiency in dynamic environments. The following sections describe the design principles and optimization methods of the three core modules.

3. PPO-Based Semantic Broadcast Communication and Decentralized Adaptive Formation

3.1. PPO-Based Semantic Broadcast Communication

This section primarily investigates how the semantic broadcast communication mechanism can be leveraged to improve the semantic accuracy of image reconstruction at follower UAVs, thereby providing obstacle location information for the subsequent object detection and position estimation module. As for the PPO-based semantic broadcast model, the optimization of the semantic encoder and decoders follows an alternating learning paradigm. Specifically, the shared semantic encoder is optimized via PPO to achieve task-oriented objectives, while the decoders at the receivers are locally optimized using conventional bit-level objective functions.

3.1.1. Optimization of the Semantic Decoder Network

The semantic decoder is designed to reconstruct images that encapsulate obstacle location information. It follows a progressive recovery paradigm, in which low-dimensional semantic representations are gradually transformed into the high-resolution image space to yield the reconstructed output. The input to the semantic decoder is the semantic feature vector perturbed by the communication channel. This vector is first processed by a linear de-quantization module, which maps it back into an intermediate feature representation with spatial structure as the basis for subsequent spatial restoration. The network then applies a hierarchical stack of four deconvolutional blocks (ConvTranspose2d), each followed by normalization and activation layers (BN + ReLU), to progressively upsample the spatial resolution and recover semantic details in the reconstructed image.
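How four deconvolutional blocks grow the spatial resolution can be checked with the output-size formula used by PyTorch's `ConvTranspose2d`. The kernel size 4, stride 2, padding 1, and 4×4 starting map below are hypothetical choices (a common configuration that doubles height and width per block); BN and ReLU do not change the spatial size.

```python
def conv_transpose2d_out(size, kernel_size, stride=1, padding=0,
                         output_padding=0, dilation=1):
    """Output height/width of a ConvTranspose2d layer (PyTorch's formula)."""
    return ((size - 1) * stride - 2 * padding
            + dilation * (kernel_size - 1) + output_padding + 1)


def decoder_resolutions(start, blocks):
    """Spatial size after each (kernel, stride, padding) deconvolution block."""
    sizes = [start]
    for kernel, stride, padding in blocks:
        sizes.append(conv_transpose2d_out(sizes[-1], kernel, stride, padding))
    return sizes


# Hypothetical configuration: 4x4 feature map, four blocks that each double H/W.
print(decoder_resolutions(4, [(4, 2, 1)] * 4))  # [4, 8, 16, 32, 64]
```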

With respect to the loss function, this work aims to strengthen the model’s ability to reconstruct salient regions within images while improving spatial representation accuracy for sparse structures. Instead of directly employing the mean squared error (MSE), as conventional approaches do, a composite loss function comprising a weighted MSE loss and a binary cross-entropy (BCE) loss [17] is developed to jointly improve pixel-level fidelity and structure-level semantic accuracy. The weighted MSE component assigns greater penalties to obstacle regions within the semantic map, directing the model’s attention toward reconstructing areas of high semantic density. It averages the pixel-wise weighted squared reconstruction error over a batch of sampled images, where the weight of each pixel is determined by a threshold for pixel identification: pixels identified as obstacle pixels receive the obstacle weight, and the remaining pixels receive the background weight.
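A minimal sketch of the weighted MSE loss follows. It assumes that obstacles appear as dark pixels on the white background (so a pixel counts as an obstacle pixel when its target value is below the threshold) and that the loss is averaged over all pixels in the batch; the default threshold and the 10:1 weight ratio are hypothetical values.

```python
def pixel_weights(target, threshold, w_obstacle, w_background):
    """Per-pixel weights; assumes obstacles are dark pixels on a white background."""
    return [w_obstacle if t < threshold else w_background for t in target]


def weighted_mse(batch_pred, batch_target, threshold=0.5,
                 w_obstacle=10.0, w_background=1.0):
    """Weighted squared error averaged over all pixels in the batch."""
    total, count = 0.0, 0
    for pred, target in zip(batch_pred, batch_target):
        weights = pixel_weights(target, threshold, w_obstacle, w_background)
        total += sum(w * (p - t) ** 2 for w, p, t in zip(weights, pred, target))
        count += len(target)
    return total / count


# One 2-pixel image: an obstacle pixel (0.0) and a background pixel (1.0).
print(weighted_mse([[0.5, 0.5]], [[0.0, 1.0]]))  # 1.375
```

The same absolute error costs ten times more on the obstacle pixel than on the background pixel, which is what steers the decoder toward the sparse obstacle regions.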

On the other hand, the mask-based BCE loss employs a thresholding strategy to binarize the predicted and target maps, with the aim of explicitly supervising the alignment of semantic point locations and thereby enhancing the perception of sparse semantic structures.

Finally, the overall loss function of the semantic decoder combines the weighted MSE loss and the mask-based BCE loss through a weighting coefficient.
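The mask-based BCE term and the composite decoder loss can be sketched as follows. Two assumptions are made here and should be read as such: the target mask marks dark pixels (below the threshold) as obstacles, and the prediction is kept soft, using 1 minus the predicted intensity as the obstacle probability, so that the loss stays differentiable; the text above binarizes the prediction as well. Applying the weighting coefficient `lam` to the BCE term is also an assumption.

```python
import math


def mask_bce(pred, target, threshold=0.5, eps=1e-7):
    """BCE between a soft obstacle map and a thresholded (binary) target mask.

    Assumption: obstacles are dark on a white background, so the target mask is
    target < threshold and the predicted obstacle probability is 1 - pred.
    """
    loss = 0.0
    for p, t in zip(pred, target):
        y = 1.0 if t < threshold else 0.0
        q = min(max(1.0 - p, eps), 1.0 - eps)  # clamp to avoid log(0)
        loss -= y * math.log(q) + (1.0 - y) * math.log(1.0 - q)
    return loss / len(pred)


def decoder_loss(l_wmse, l_bce, lam=0.5):
    """Composite decoder loss: weighted MSE plus lam times the mask BCE."""
    return l_wmse + lam * l_bce


good = mask_bce([0.05, 0.95], [0.0, 1.0])   # close to the target mask
bad = mask_bce([0.95, 0.05], [0.0, 1.0])    # obstacle and background swapped
```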

It should be noted that the semantic encoder and decoders adopt an alternating learning mechanism: within each training epoch, the decoders first perform local updates, followed by a single parameter update of the encoder. This process is repeated until the model converges.

3.1.2. Optimization of the Semantic Encoder Network

To better align with the task requirements of the receivers and enhance the semantic accuracy of image reconstruction, the semantic encoder aims not only to compress feature representations but also to match the task performance of multiple downstream receivers. The transmitter can be regarded as an agent whose optimization process is formalized as a Markov decision process (MDP) consisting of a state space, an action space, a state transition function, a reward function, and a discount factor. Specifically, the latent variable obtained by encoding the image vector with a convolutional neural network is regarded as the state. The action is sampled from a Gaussian sampling policy conditioned on this state, whose mean and variance are produced by a learnable function.

When the encoder takes an action according to the sampling policy, the sampled output becomes the new latent variable, so the state transition function between the two states is deterministic. Similarly, the discount factor is fixed to simplify computation. The sampled latent variable then passes through the quantization layer to produce the final output, which is transmitted through the channel. Regarding the reward design, since a receiver can only evaluate a given sample after decoding the image, the reward from the receivers’ task is treated as a sparse reward. Accordingly, the reward of the encoder is defined in terms of the semantic metric values that measure the task performance of each decoder.
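The Gaussian sampling policy of the encoder can be sketched as below: an action is drawn as the mean plus the standard deviation times standard normal noise, and its log-probability is kept because PPO needs it later as the old-policy term. The 3-dimensional latent and the `mean`/`log_std` values are hypothetical; in the actual model they would be produced by the learnable encoder head.

```python
import math
import random


def sample_action(mean, log_std, rng):
    """Draw a ~ N(mean, diag(exp(log_std))**2) via a = mean + std * noise."""
    return [m + math.exp(s) * rng.gauss(0.0, 1.0) for m, s in zip(mean, log_std)]


def gaussian_log_prob(action, mean, log_std):
    """Log-density of a diagonal Gaussian, summed over latent dimensions."""
    total = 0.0
    for a, m, s in zip(action, mean, log_std):
        var = math.exp(2.0 * s)
        total += -((a - m) ** 2) / (2.0 * var) - s - 0.5 * math.log(2.0 * math.pi)
    return total


# Hypothetical 3-dimensional latent state; mean/log_std would come from the encoder head.
mean, log_std = [0.0, 0.5, -0.5], [-1.0, -1.0, -1.0]
action = sample_action(mean, log_std, random.Random(0))
logp = gaussian_log_prob(action, mean, log_std)  # stored as log pi_old for PPO
```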

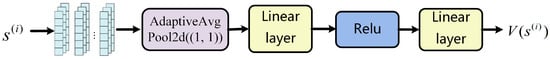

Based on this structural design of the semantic encoder, its optimization adopts an actor–critic architecture. The actor performs action sampling using the Gaussian policy in Equation (10), while the critic takes the high-dimensional semantic representation as input to evaluate the current state value. Figure 4 illustrates the value network of the PPO-driven semantic encoder. The input vector is first compressed via adaptive average pooling into a compact semantic representation, which is then processed by a linear layer followed by a ReLU activation function to produce the state value estimate. This estimate is subsequently used for advantage computation to guide policy updates.

Figure 4.

The architecture of the value network.
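The value network in Figure 4 can be mimicked with a small sketch: adaptive average pooling to a 1×1 output per channel, one linear unit, and a ReLU, in the order described above. The 1×1 pooling size, the single output unit, and the weights in the example are assumptions for illustration.

```python
def global_avg_pool(feature_map):
    """Adaptive average pooling to 1x1: one mean per channel of a C x H x W map."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]


def value_head(feature_map, weights, bias):
    """Pool, apply one linear unit, then ReLU, giving a scalar state value."""
    pooled = global_avg_pool(feature_map)
    z = sum(w * x for w, x in zip(weights, pooled)) + bias
    return max(0.0, z)


fmap = [[[1.0, 3.0], [5.0, 7.0]],   # channel 0, mean 4.0
        [[0.0, 0.0], [0.0, 0.0]]]   # channel 1, mean 0.0
print(value_head(fmap, weights=[0.5, 1.0], bias=1.0))  # 3.0
```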

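In the PPO optimization process, the actor’s update depends on both the current policy $\pi_\theta$ and the old policy $\pi_{\theta_{\text{old}}}$, and the change between them is measured by the probability ratio $r(\theta) = \pi_\theta(a \mid s) / \pi_{\theta_{\text{old}}}(a \mid s)$. With the advantage $\hat{A}$ computed from the critic’s state-value estimate, the actor’s loss function takes the clipped PPO form:

$$L^{\text{actor}}(\theta) = -\mathbb{E}\left[\min\left(r(\theta)\hat{A},\ \operatorname{clip}\big(r(\theta), 1-\epsilon, 1+\epsilon\big)\hat{A}\right)\right]$$

where $\epsilon$ is a hyperparameter and the clip operation constrains the ratio between the new and old policies within the interval $(1-\epsilon, 1+\epsilon)$, thereby preventing excessively large policy updates.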
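The clipped surrogate can be computed per sample as in the following sketch, which takes new and old log-probabilities and advantages and returns the negated mean objective so that it can be minimized. The default `clip_eps = 0.2` is a commonly used value, not one reported here.

```python
import math


def ppo_actor_loss(logp_new, logp_old, advantages, clip_eps=0.2):
    """Clipped PPO surrogate loss (negated so that it can be minimized)."""
    total = 0.0
    for new, old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(new - old)                          # r(theta)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        total += min(ratio * adv, clipped * adv)             # pessimistic bound
    return -total / len(advantages)


# A ratio of 1.5 with positive advantage is clipped to 1.2 (clip_eps = 0.2).
print(ppo_actor_loss([math.log(1.5)], [0.0], [1.0]))
```

Taking the minimum of the clipped and unclipped terms means the objective never rewards pushing the ratio beyond the interval, which is what limits the step size.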

For the value network, after the actor is updated, the value function is estimated by an independent value network and optimized with the MSE loss

$$L^{\text{critic}}(\phi) = \mathbb{E}\left[\big(V_\phi(s) - R\big)^2\right]$$

where the target value $R$ reduces to the corresponding immediate reward because the current task involves only single-step decision making.
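Because each episode has a single step, the return equals the immediate reward, so the advantage is simply the reward minus the value estimate. The sketch below shows this together with an encoder reward built from the decoders’ semantic metrics; averaging the metrics over receivers is a hypothetical choice, since the exact aggregation is not restated here.

```python
def encoder_reward(decoder_metrics):
    """Hypothetical aggregation: mean semantic metric (e.g., PSNR) over receivers."""
    return sum(decoder_metrics) / len(decoder_metrics)


def advantages_and_value_loss(rewards, values):
    """Single-step episodes: the return is the immediate reward, so A = R - V(s)."""
    advantages = [r - v for r, v in zip(rewards, values)]
    value_loss = sum(a * a for a in advantages) / len(advantages)  # MSE
    return advantages, value_loss


# Two encoded samples, each scored by three receivers (PSNR in dB).
rewards = [encoder_reward([20.0, 22.0, 24.0]), encoder_reward([30.0, 30.0, 30.0])]
adv, loss = advantages_and_value_loss(rewards, values=[21.0, 30.0])
# adv == [1.0, 0.0], loss == 0.5
```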

In addition, to mitigate the training instability that may arise from optimizing the encoder solely through the PPO policy loss, an auxiliary supervision term from the decoder side is introduced and scaled by a weighting coefficient. This auxiliary loss gives the encoder a more explicit and stable update direction, compensating for deviations in the update trajectory that the policy gradient objective may cause. The overall loss function of the encoder therefore combines the PPO loss with this weighted auxiliary term.

Building upon the local updates of the decoders and the PPO-driven optimization of the encoder, the encoder and decoders are trained under an alternating learning paradigm in an iterative manner. Specifically, within each update cycle, the encoder parameters remain fixed, while each receiver-side decoder independently performs local updates based on the local loss function in Equation (9). Subsequently, the transmitter-side encoder conducts a single global update according to the objective function in Equation (14).
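The alternating schedule can be written as a small training loop. The update steps are passed in as hypothetical callables (one per receiver-side decoder plus one for the shared encoder), and `local_iters`, the number of local decoder updates per cycle, is an assumed parameter; the returned log only records the update order.

```python
def alternating_training(encoder_step, decoder_steps, epochs, local_iters):
    """Alternate receiver-side local updates with one shared-encoder update.

    encoder_step: callable performing one encoder update (PPO + auxiliary loss).
    decoder_steps: one callable per receiver performing one local decoder update.
    Returns a log of the update order for inspection.
    """
    log = []
    for _epoch in range(epochs):
        # Encoder frozen: every decoder performs its local updates.
        for k, decoder_step in enumerate(decoder_steps):
            for _ in range(local_iters):
                decoder_step()
                log.append(("decoder", k))
        # Then a single global encoder update.
        encoder_step()
        log.append(("encoder", None))
    return log


def encoder_objective(ppo_loss, aux_loss, lam):
    """Overall encoder loss: PPO loss plus the lam-weighted auxiliary term."""
    return ppo_loss + lam * aux_loss


order = alternating_training(lambda: None, [lambda: None, lambda: None],
                             epochs=2, local_iters=3)
```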

3.2. Object Detection and Position Estimation

Based on the semantic broadcast communication module trained in Section 3.1, each follower agent can semantically reconstruct the received images. To enable accurate perception of obstacles in the environment, an object detection and position estimation module based on YOLOv5 is deployed at each receiver. At each time step $t$, this module takes as input the reconstructed image generated by the semantic decoder, extracts the two-dimensional bounding boxes of obstacles in the image, and transforms the detection results into estimated physical positions in the global coordinate system through scale restoration and projection mapping. To balance detection accuracy and deployment efficiency, the lightweight single-stage object detector YOLOv5 is adopted, as it is well suited to resource-constrained scenarios such as UAV formation. As described in [18], the YOLOv5 architecture consists of three main components: the feature extraction network (Backbone), the feature fusion module (Neck), and the prediction output module (Head). For training, a dedicated dataset is constructed from a large number of reconstructed images generated by the semantic broadcast communication module, and the YOLOv5 model is fine-tuned on it to enhance obstacle detection performance in the specific environment. The training loss [19], defined over the YOLOv5 model parameters, combines three terms.

The classification loss evaluates the accuracy of category predictions. The objectness loss, computed with BCE, measures the confidence that an object exists within a predicted bounding box. The bounding box regression loss quantifies the geometric overlap between the predicted and ground-truth bounding boxes and is calculated using the complete intersection over union (CIoU) metric.
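The bounding box regression term can be illustrated with a standalone CIoU loss, 1 − CIoU, where CIoU subtracts a normalized center-distance penalty and an aspect-ratio penalty from the IoU. This is a generic sketch of the published CIoU definition for boxes given as (cx, cy, w, h), not YOLOv5’s own implementation; the small `eps` guards against division by zero.

```python
import math


def ciou_loss(box_pred, box_true, eps=1e-9):
    """1 - CIoU for axis-aligned boxes given as (cx, cy, w, h)."""
    px, py, pw, ph = box_pred
    tx, ty, tw, th = box_true
    # Corner coordinates of both boxes.
    p_x1, p_y1, p_x2, p_y2 = px - pw / 2, py - ph / 2, px + pw / 2, py + ph / 2
    t_x1, t_y1, t_x2, t_y2 = tx - tw / 2, ty - th / 2, tx + tw / 2, ty + th / 2
    # Intersection over union.
    inter_w = max(0.0, min(p_x2, t_x2) - max(p_x1, t_x1))
    inter_h = max(0.0, min(p_y2, t_y2) - max(p_y1, t_y1))
    inter = inter_w * inter_h
    iou = inter / (pw * ph + tw * th - inter + eps)
    # Squared center distance over squared diagonal of the smallest enclosing box.
    c_w = max(p_x2, t_x2) - min(p_x1, t_x1)
    c_h = max(p_y2, t_y2) - min(p_y1, t_y1)
    rho2 = (px - tx) ** 2 + (py - ty) ** 2
    c2 = c_w ** 2 + c_h ** 2 + eps
    # Aspect-ratio consistency term and its trade-off weight.
    v = (4 / math.pi ** 2) * (math.atan(tw / th) - math.atan(pw / ph)) ** 2
    alpha = v / (1 - iou + v + eps)
    return 1 - (iou - rho2 / c2 - alpha * v)


same = ciou_loss((0.0, 0.0, 2.0, 2.0), (0.0, 0.0, 2.0, 2.0))      # about 0
apart = ciou_loss((0.0, 0.0, 1.0, 1.0), (3.0, 0.0, 1.0, 1.0))     # 1 + 9/17
```

Unlike plain IoU, the distance term still yields a useful gradient when the boxes do not overlap, as in the second example.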

After fine-tuning of the YOLOv5 model is complete, the inference stage begins. At each time step $t$, the reconstructed image generated by the semantic broadcast communication decoder is forwarded to the YOLOv5 network. The backbone encodes hierarchical spatial representations of the input image, capturing both fine-grained structural details and high-level semantic cues of obstacles. The feature aggregation module then integrates multi-scale semantic representations, which significantly enhances the model’s robustness in perceiving low-contrast obstacles and its discriminative capability in cluttered background scenarios. Finally, the prediction head produces, in parallel across multiple scales, two-dimensional bounding box coordinates for all detected targets. To project these bounding boxes into the physical coordinate system of the simulation environment, a coordinate transformation combining center-point normalization with linear projection is applied: the center coordinates of each bounding box are first normalized and then projected onto the world coordinate frame of the simulation. In this manner, the estimated obstacle positions for agent $n$ at time step $t$ are obtained.
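The center-point normalization and linear projection can be sketched as follows. The sketch assumes the aerial image covers the square world region [−1, 1]² with the image y axis pointing down and the world y axis pointing up; both the extent and the axis convention are assumptions about the simulation setup, and `bbox_center_to_world` is a hypothetical helper.

```python
def bbox_center_to_world(bbox, img_w, img_h, world_min=-1.0, world_max=1.0):
    """Map a pixel box (x1, y1, x2, y2) to a world-frame point (x, y).

    Assumes the image covers the square [world_min, world_max]^2 with
    the image x axis pointing right and the image y axis pointing down.
    """
    x1, y1, x2, y2 = bbox
    u = (x1 + x2) / 2 / img_w          # normalized center, 0 = left edge
    v = (y1 + y2) / 2 / img_h          # normalized center, 0 = top edge
    span = world_max - world_min
    x = world_min + u * span           # linear projection
    y = world_max - v * span           # flip: world y grows upward
    return x, y


print(bbox_center_to_world((60, 60, 68, 68), img_w=128, img_h=128))  # (0.0, 0.0)
```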

After completing the object detection and position mapping, the receiving agent takes the estimated global obstacle positions together with its locally perceived state as joint inputs to the formation control module, thereby providing the basis for subsequent decision-making in autonomous obstacle avoidance and formation tasks.

3.3. MAPPO-Based Adaptive Formation Strategy

The formation strategy employed in this study is built upon the lower-level policy of the consensus inference-based hierarchical reinforcement learning algorithm (CI-HRL) [8]. This policy, which combines alternating training with policy distillation, is adopted as the control strategy of the formation module, with one change: the original obstacle perception method, which relies on locally observed distances, is replaced by the global obstacle information obtained through the semantic broadcast communication mechanism, thereby improving the UAVs’ perception of the operational environment. The lower-level policy derived from CI-HRL handles obstacle avoidance and coordinated movement toward the target region to accomplish the formation task. Under the constraint of limited observations, each UAV determines its control actions using its neighbors’ states, the estimated global obstacle state, and its target observation. The objective of the lower-level formation control is therefore to maximize the expected cumulative discounted reward, where the reward follows the definition given in Equation (5).

The decentralized control algorithm based on alternating training and policy distillation is primarily implemented upon the MAPPO (multi-agent proximal policy optimization) framework [20]. Although the conventional MAPPO algorithm demonstrates strong performance in fully observable multi-agent communication scenarios, it may encounter decision conflicts when simultaneously addressing navigation and obstacle avoidance tasks in partially observable environments with obstacles. To mitigate this issue, alternating training is employed to separately learn the parameters associated with these two tasks. However, the policies obtained through alternating training are typically restricted to fixed formation patterns and exhibit limited adaptability to variations in the number of UAVs within the swarm. To overcome this limitation and enable adaptive formation control under different swarm sizes, the input vectors are aligned by zero-padding, followed by the adoption of a policy distillation-based hybrid policy that generalizes across diverse formation requirements.
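Zero-padding makes the policy input length independent of how many neighbors a UAV currently observes. In the sketch below, `max_neighbors` (the largest neighbor count the padded input must hold) and `feat_dim` (features per neighbor) are hypothetical parameters; raising an error when the limit is exceeded is a design choice of the sketch.

```python
def pad_neighbor_features(neighbor_feats, max_neighbors, feat_dim):
    """Flatten per-neighbor feature lists and zero-pad to a fixed length."""
    if len(neighbor_feats) > max_neighbors:
        raise ValueError("more neighbors than the padded input can hold")
    flat = [x for feats in neighbor_feats for x in feats]
    flat += [0.0] * ((max_neighbors - len(neighbor_feats)) * feat_dim)
    return flat


# A UAV that currently sees 2 neighbors, padded for a swarm allowing up to 4.
print(pad_neighbor_features([[0.1, 0.2], [0.3, 0.4]], max_neighbors=4, feat_dim=2))
# [0.1, 0.2, 0.3, 0.4, 0.0, 0.0, 0.0, 0.0]
```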

As illustrated in Figure 5, for UAV swarms of different sizes, a teacher model is first trained for each corresponding formation pattern. A hybrid policy capable of adapting to varying formation scales is then learned from these teacher models through policy distillation. During the training of each teacher model, the formation module policy is parameterized by a multilayer perceptron (MLP) consisting of a formation and navigation layer, an obstacle-avoidance layer, and an output layer, each with its own parameters. At each time step, the output layer computes the mean and variance of a Gaussian action distribution, and the action output by the formation module is sampled from this distribution.
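A minimal NumPy sketch of a policy with this three-part structure is given below. How the two branches are combined is not specified here, so the sketch assumes, as a hypothetical design, that their outputs are concatenated before the output layer, which predicts a mean and a log standard deviation per action dimension; `FormationPolicy`, the layer widths, and the input sizes are illustrative.

```python
import numpy as np


def init_mlp(sizes, rng):
    """Create (weight, bias) pairs for a fully connected network with the given layer sizes."""
    return [(rng.normal(0.0, 0.1, (m, n)), np.zeros(n)) for m, n in zip(sizes[:-1], sizes[1:])]


def mlp_forward(params, x):
    """Apply tanh hidden layers followed by a linear final layer."""
    for i, (W, b) in enumerate(params):
        x = x @ W + b
        if i < len(params) - 1:
            x = np.tanh(x)
    return x


class FormationPolicy:
    """Hypothetical three-part policy: navigation branch, obstacle branch, Gaussian output head."""

    def __init__(self, nav_dim, obstacle_dim, act_dim, hidden=64, seed=0):
        rng = np.random.default_rng(seed)
        self.nav = init_mlp([nav_dim, hidden, hidden, hidden], rng)          # formation and navigation layer
        self.avoid = init_mlp([obstacle_dim, hidden, hidden, hidden], rng)   # obstacle-avoidance layer
        self.out = init_mlp([2 * hidden, hidden, hidden, 2 * act_dim], rng)  # output layer: mean, log std
        self.act_dim = act_dim
        self.rng = rng

    def act(self, nav_obs, obstacle_obs, avoidance_on=True):
        h_nav = mlp_forward(self.nav, nav_obs)
        h_avoid = mlp_forward(self.avoid, obstacle_obs)
        if not avoidance_on:
            h_avoid = np.zeros_like(h_avoid)       # stage (a): obstacle branch output forced to zero
        head = mlp_forward(self.out, np.concatenate([h_nav, h_avoid]))
        mean, log_std = head[: self.act_dim], head[self.act_dim:]
        std = np.exp(np.clip(log_std, -5.0, 2.0))  # variance is std ** 2
        action = self.rng.normal(mean, std)        # a_t ~ N(mean, std^2)
        return action, mean, std


policy = FormationPolicy(nav_dim=30, obstacle_dim=12, act_dim=2)
action, mean, std = policy.act(np.zeros(30), np.zeros(12))
```

Predicting the log standard deviation rather than the variance directly keeps the variance positive without extra constraints, a common choice in PPO-style continuous-control policies.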

Figure 5.

Alternating training and policy distillation-based formation strategy [8].

For the training of teacher models, in order to mitigate potential conflicts arising from parameter updates of different functional modules, an alternating training strategy is employed to update the parameters of each module, as illustrated in Figure 5. The specific procedure is as follows:

- (a) Training of the formation and navigation layer: In an obstacle-free environment, the output of the obstacle-avoidance layer is set to zero, and only the stage-I policy parameters are trained. This enables the UAVs to perform navigation and formation control based on the neighboring information and the target information. The corresponding value network parameters are updated in this stage as well.

- (b) Training of the obstacle-avoidance layer: In an environment with randomly placed static obstacles, the stage-II policy is trained to give the agents a stable obstacle-avoidance capability. The corresponding value network parameters are likewise updated.

- (c) Joint fine-tuning: The policy parameters obtained in stage I are combined with the obstacle-avoidance parameters from stage II to form the initial policy. This policy is then jointly fine-tuned in environments containing obstacles, yielding the complete policy, and the corresponding value network parameters are updated accordingly.
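The three stages can be summarized as a schedule of which policy modules are trainable and which environment is used. The module names (`nav`, `avoid`, `out`) are hypothetical, and keeping the output layer frozen in stage II is an assumption; in every stage the value network would be updated alongside the trainable policy modules.

```python
from dataclasses import dataclass

MODULES = ("nav", "avoid", "out")  # hypothetical names: navigation, obstacle-avoidance and output layers


@dataclass(frozen=True)
class Stage:
    name: str
    trainable: tuple          # policy modules updated in this stage (the critic is always updated)
    with_obstacles: bool      # whether the training environment contains static obstacles
    avoidance_enabled: bool   # False: obstacle-avoidance output is forced to zero


STAGES = (
    Stage("I: formation and navigation", ("nav", "out"), with_obstacles=False, avoidance_enabled=False),
    Stage("II: obstacle avoidance", ("avoid",), with_obstacles=True, avoidance_enabled=True),
    Stage("III: joint fine-tuning", MODULES, with_obstacles=True, avoidance_enabled=True),
)


def frozen_modules(stage):
    """Modules whose policy parameters stay fixed during the given stage."""
    return tuple(m for m in MODULES if m not in stage.trainable)


for stage in STAGES:
    print(f"{stage.name}: train={stage.trainable}, frozen={frozen_modules(stage)}, "
          f"obstacles={stage.with_obstacles}")
```

Freezing one branch while training the other is what keeps the navigation and obstacle-avoidance gradients from interfering, before stage III lets both adapt together.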

To enable the UAV swarm to adapt to varying formation patterns , a set of teacher policies is learned through the alternating training process described above. The teacher models continuously interact with the environment to collect experience buffer data . The hybrid policy is then trained via supervised learning on trajectories sampled from the experience buffer by minimizing the mean squared error (MSE). The corresponding loss function is defined as follows:

where denotes the parameters of the trained hybrid policy , and denotes the experience buffer. Under this policy distillation framework, the resulting hybrid policy adapts well to formation tasks, enabling the UAV swarm to avoid obstacles and navigate toward the target region to form the designated pattern for each of the considered swarm sizes.
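As a rough illustration of the distillation step, the sketch below pools (observation, teacher action) pairs from several teachers into one buffer and fits a student by gradient descent on the mean squared error between student and teacher actions. The linear teachers and the linear student are stand-ins chosen only to keep the example self-contained; the paper's teachers are MAPPO policies and its student is an MLP. All sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
FEATS_PER_UAV, MAX_UAVS, ACT_DIM = 4, 6, 2
OBS_DIM = FEATS_PER_UAV * MAX_UAVS

# Placeholder teachers: a fixed linear map per swarm size stands in for each trained MAPPO teacher.
teachers = {n: rng.normal(size=(OBS_DIM, ACT_DIM)) for n in (3, 4, 5)}


def collect_buffer(teachers, samples_per_teacher=512):
    """Query every teacher on zero-padded observations and pool (obs, action) pairs into one buffer."""
    obs_list, act_list = [], []
    for n, W in teachers.items():
        obs = rng.normal(size=(samples_per_teacher, OBS_DIM))
        obs[:, FEATS_PER_UAV * n:] = 0.0       # slots beyond the n UAVs are zero-padded
        obs_list.append(obs)
        act_list.append(obs @ W)               # teacher action (deterministic mean)
    return np.concatenate(obs_list), np.concatenate(act_list)


def distill(obs, teacher_actions, lr=0.1, epochs=300):
    """Fit a linear student by gradient descent on the MSE to the teacher actions."""
    W = np.zeros((obs.shape[1], teacher_actions.shape[1]))
    losses = []
    for _ in range(epochs):
        err = obs @ W - teacher_actions
        losses.append(float(np.mean(err ** 2)))
        W -= lr * 2.0 * obs.T @ err / err.size  # gradient of the mean squared error
    return W, losses


obs, acts = collect_buffer(teachers)
student, losses = distill(obs, acts)
print(f"MSE: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

Pooling all teachers into one buffer is the key design choice: the student sees every swarm size during training, so a single set of parameters must serve all of them.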

4. Simulation Results

4.1. Simulation Settings

The proposed SemanticBC-DecAF framework is validated in the multi-agent simulation environment MPE [15]. The key parameters of the formation module are summarized in Table 1. This module consists of the formation and navigation layer, the obstacle-avoidance layer, and the output layer, each implemented as a three-layer MLP. In the experimental setup, the initial positions of the UAV swarm are randomly initialized within [−2, 2] meters along both the x and y axes, while the static obstacles are randomly distributed within the range of meters, with the total number of obstacles fixed at six. The target point is randomly selected from the set . The formation module is trained for a total of rounds. In each round, the agents interact with the environment for episodes, and each episode consists of time steps.
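The scenario initialization described above can be sketched as follows. Only the UAV range of [−2, 2] m on each axis and the count of six obstacles come from the text; since the obstacle range and the target set are not given here, `obstacle_range` and `target_candidates` are placeholder values.

```python
import numpy as np


def reset_scenario(num_uavs, rng, uav_range=(-2.0, 2.0), obstacle_range=(-1.5, 1.5),
                   num_obstacles=6, target_candidates=((1.5, 1.5), (-1.5, 1.5), (1.5, -1.5))):
    """Sample initial UAV positions, static obstacle positions and a target point on the x-y plane.

    obstacle_range and target_candidates are placeholders, not the paper's settings.
    """
    uav_pos = rng.uniform(*uav_range, size=(num_uavs, 2))             # x and y in [-2, 2] m
    obstacle_pos = rng.uniform(*obstacle_range, size=(num_obstacles, 2))
    target = np.asarray(target_candidates[rng.integers(len(target_candidates))], dtype=float)
    return uav_pos, obstacle_pos, target


uav_pos, obstacle_pos, target = reset_scenario(num_uavs=5, rng=np.random.default_rng(0))
```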

Table 1.

The default parameters of the formation module.

In the semantic broadcast communication module, the leader UAV functions as the shared transmitter, semantically encoding the acquired images and broadcasting them to the follower UAVs. During data acquisition, the system interacts continuously with the environment through the formation control module, generating a dataset of images with a resolution of , which serves as the training dataset for the semantic broadcast communication module. The dataset is partitioned into training and testing sets with a ratio of . During training, the batch size is fixed at , and the module is trained for epochs. Within each epoch, batches are processed sequentially: the semantic encoder is updated once, whereas the semantic decoders undergo local updates. The principal parameter configurations of this module are reported in Table 2.
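The training schedule can be sketched as a loop with pluggable update callbacks. This sketch reads the description as one encoder (PPO) update per batch followed by a fixed number of local updates for every follower's decoder; that reading, the 0.8 split ratio, and all names (`update_encoder`, `update_decoder`, `decoder_local_steps`) are assumptions for illustration.

```python
import numpy as np


def split_dataset(num_images, train_ratio, rng):
    """Shuffle image indices and split them into training and testing subsets."""
    idx = rng.permutation(num_images)
    n_train = int(round(train_ratio * num_images))
    return idx[:n_train], idx[n_train:]


def train_semantic_bc(train_idx, batch_size, epochs, num_decoders, decoder_local_steps,
                      update_encoder, update_decoder):
    """Alternate one shared-encoder update with local updates of every follower's decoder."""
    for _epoch in range(epochs):
        for start in range(0, len(train_idx), batch_size):
            batch = train_idx[start:start + batch_size]
            update_encoder(batch)                  # leader's shared encoder (e.g., one PPO step)
            for k in range(num_decoders):          # each follower refines its own decoder
                for _step in range(decoder_local_steps):
                    update_decoder(k, batch)


# Usage with counting stubs in place of real network updates.
calls = {"encoder": 0, "decoder": 0}


def count_encoder(batch):
    calls["encoder"] += 1


def count_decoder(k, batch):
    calls["decoder"] += 1


train_idx, test_idx = split_dataset(100, train_ratio=0.8, rng=np.random.default_rng(0))
train_semantic_bc(train_idx, batch_size=16, epochs=2, num_decoders=3, decoder_local_steps=4,
                  update_encoder=count_encoder, update_decoder=count_decoder)
print(calls)  # {'encoder': 10, 'decoder': 120}
```

Keeping the decoder updates local means each follower only needs its own reconstruction loss, while the leader's encoder is trained against feedback from all receivers.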

Table 2.

The default parameters of the semantic broadcast communication module.

In our experiments, the signal-to-noise ratio is defined as , and the noise power is assumed to be fixed. As presented in Table 3, the semantic encoder and decoders are built mainly from convolutional neural networks (CNNs). The semantic encoder maps each input image, at the resolution used for the dataset, into a fixed-length semantic vector whose default bit length is set to . Accordingly, the channel bandwidth ratio (CBR) can be computed as:
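To make the fixed-noise SNR convention and the CBR concrete, the sketch below assumes the conventional definition SNR = 10 log10(P/σ²), with σ² held constant so that raising the SNR means raising the transmit power P. It also assumes the CBR counts complex channel uses per source dimension, (bits / bits per symbol) / (H·W·C). Both definitions are assumptions, since the paper's exact formulas are not reproduced here, and the example numbers are hypothetical.

```python
import numpy as np


def awgn_fixed_noise(x, snr_db, noise_power=1.0, rng=None):
    """Transmit complex symbols x over AWGN with fixed noise power sigma^2.

    SNR = 10*log10(P / sigma^2) is assumed, so the transmit power P grows with the SNR.
    Returns the received symbols and P.
    """
    rng = np.random.default_rng() if rng is None else rng
    x = x / np.sqrt(np.mean(np.abs(x) ** 2))             # normalize to unit average power
    tx_power = noise_power * 10.0 ** (snr_db / 10.0)     # P = sigma^2 * 10^(SNR/10)
    noise = np.sqrt(noise_power / 2.0) * (rng.normal(size=x.shape) + 1j * rng.normal(size=x.shape))
    return np.sqrt(tx_power) * x + noise, tx_power


def channel_bandwidth_ratio(num_bits, bits_per_symbol, height, width, channels=3):
    """Assumed CBR: complex channel uses per source dimension, (bits / bits_per_symbol) / (H*W*C)."""
    return (num_bits / bits_per_symbol) / (height * width * channels)


# Hypothetical numbers: a 3072-bit semantic vector sent with 2 bits per symbol for a 32x32 RGB image.
print(channel_bandwidth_ratio(3072, bits_per_symbol=2, height=32, width=32))  # 0.5
```

Under this convention, the transmit power needed for a target SNR follows directly from the fixed noise floor, which is why a scheme that reaches the same image quality at lower SNR also needs less transmit energy.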

Table 3.

The network architecture of the semantic broadcast communication module.

To evaluate the formation strategy, this paper measures the average time the UAV swarm requires to accomplish formation. Specifically, the Hausdorff distance between the current swarm topology and the desired formation topology is computed following [21], and the formation is considered successfully achieved once this formation error satisfies .
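A sketch of the completion check follows. It computes the symmetric Hausdorff distance with NumPy and, as an assumption about how topologies are compared, removes each point set's centroid first so that only the shape matters; the threshold `eps` is a placeholder for the paper's value.

```python
import numpy as np


def hausdorff(a, b):
    """Symmetric Hausdorff distance between point sets a (n, 2) and b (m, 2)."""
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)   # pairwise distances, shape (n, m)
    return max(d.min(axis=1).max(), d.min(axis=0).max())


def formation_error(positions, template):
    """Hausdorff distance after centering both sets (translation invariance is an assumption)."""
    return hausdorff(positions - positions.mean(axis=0), template - template.mean(axis=0))


def formation_done(positions, template, eps):
    """Formation counts as achieved once the error is within the (placeholder) threshold eps."""
    return formation_error(positions, template) <= eps


triangle = np.array([[0.0, 0.0], [1.0, 0.0], [0.5, 0.8]])
print(formation_done(triangle + np.array([3.0, -2.0]), triangle, eps=0.05))  # True
```

Because the Hausdorff distance compares sets rather than ordered lists, it does not require assigning each UAV to a specific slot in the target formation.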

Comparison schemes: We compare SemanticBC-DecAF with the following schemes:

- SemanticBC-DecAF w/t MSE: This method adopts the same network architecture as SemanticBC-DecAF; however, reinforcement learning is not incorporated during training. Instead, the optimization is carried out using the conventional MSE loss function.

- BPG+LDPC [22]: The BPG encoder (https://github.com/def-/libbpg (accessed on 24 September 2025)) provides efficient image compression, while the 5G LDPC encoder (https://github.com/NVlabs/sionna (accessed on 24 September 2025)) achieves high-performance channel error correction through sparse parity-check matrix design. To compare the proposed semantic communication mechanism with conventional communication systems, the BPG and LDPC coding schemes are extended to the broadcast scenario, using standard 5G LDPC code rates and different quadrature amplitude modulation (QAM) orders. Specifically, LDPC codes with rates of 2/3 (information and code lengths (k, n) = (3072, 4608)) and 1/3 ((k, n) = (1536, 4608)) are combined with 4-QAM and 16-QAM modulation in the communication simulations.

- DecAF: DecAF is the underlying formation strategy proposed in [8], whose policy relies on local observations and environmental perception to accomplish obstacle avoidance and navigation. In this paper, it serves as a decentralized formation control baseline without any semantic communication mechanism, in order to assess the gains in task coordination obtained by leveraging globally perceived obstacle positions delivered via semantic broadcast communication.
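For a comparison at equal transmission overhead, it helps to count how many channel uses the BPG+LDPC pipeline needs. The sketch assumes the BPG bitstream is split into LDPC information blocks of k bits (the last block padded), each encoded into n coded bits, and that 2^m-QAM carries m bits per complex symbol; rate matching and other 5G NR details are ignored, and the 6000-bit image size is hypothetical.

```python
def channel_uses(source_bits, k, n, bits_per_symbol):
    """Complex channel uses for source_bits with an (n, k) LDPC code and 2**bits_per_symbol-QAM."""
    num_codewords = -(-source_bits // k)          # ceiling division; the last block is padded
    coded_bits = num_codewords * n
    return -(-coded_bits // bits_per_symbol)      # ceiling division over QAM symbols


LDPC_CODES = {"2/3": (3072, 4608), "1/3": (1536, 4608)}   # (k, n) as listed above
QAM_BITS = {"4-QAM": 2, "16-QAM": 4}

bpg_bits = 6000  # hypothetical size of one BPG-compressed image, in bits
for rate, (k, n) in LDPC_CODES.items():
    for qam, m in QAM_BITS.items():
        print(f"rate {rate}, {qam}: {channel_uses(bpg_bits, k, n, m)} channel uses")
```

Lower code rates and lower modulation orders are more robust but consume more channel uses, so the BPG bitrate has to shrink to keep the overhead fixed; this is the trade-off behind the cliff effect discussed below.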

4.2. Numerical Results and Analysis

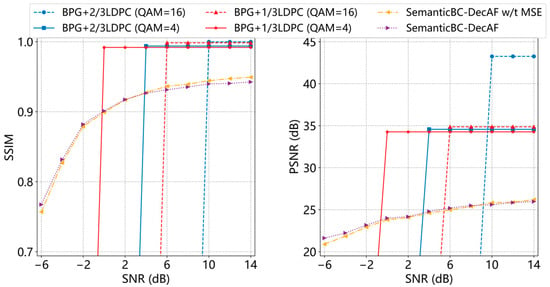

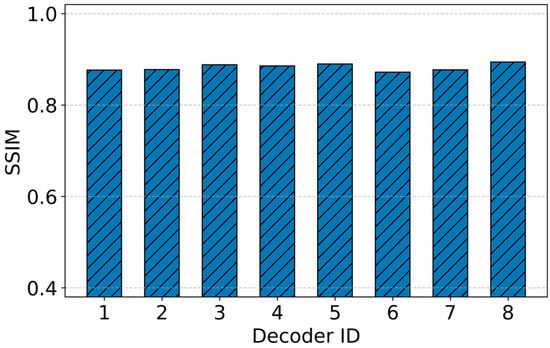

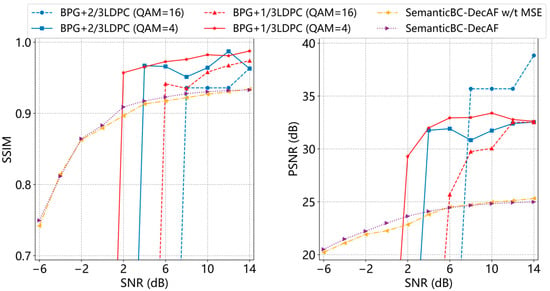

4.2.1. Semantic Transmission Performance

This study first compares the image transmission performance of different broadcast communication schemes for UAV swarms under different channel conditions, including the conventional BPG+LDPC approach and the widely used semantic communication method optimized via the MSE loss function. Specifically, Figure 6 illustrates the variation in image reconstruction quality over an AWGN channel, with the signal-to-noise ratio (SNR) ranging from dB to dB. Under the fixed-noise assumption, SemanticBC consistently achieves higher SSIM than the baselines across the tested SNR range, indicating that it can maintain comparable image quality with lower transmit power and thus reduce communication energy consumption. As observed, the conventional BPG+LDPC scheme (with coding rates of 2/3 and 1/3 and different QAM orders) exhibits a pronounced cliff effect: the reconstructed image quality is extremely poor and nearly unrecoverable at low SNRs, yet rises sharply once the SNR exceeds a certain threshold. By contrast, both SemanticBC-DecAF and SemanticBC-DecAF w/t MSE improve smoothly and continuously with SNR, with the proposed SemanticBC-DecAF consistently achieving higher SSIM and PSNR values. This indicates that the PPO-optimized semantic encoder effectively balances reconstruction quality across receivers and achieves clear advantages over conventional MSE-based semantic broadcast communication schemes. Figure 7 presents the SSIM of the multiple decoders in UAV swarm transmissions over the AWGN channel at SNR = −2 dB. The reconstructed image quality remains uniformly high, with only marginal discrepancies among receivers, which highlights the robustness and efficacy of the proposed approach.
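For reference, PSNR follows directly from the mean squared error; the small helper below assumes pixel values scaled to [0, max_value]. SSIM is more involved; one off-the-shelf option is `skimage.metrics.structural_similarity` from scikit-image, although the paper does not state which implementation it uses.

```python
import numpy as np


def psnr(reference, reconstruction, max_value=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_value^2 / MSE)."""
    ref = np.asarray(reference, dtype=np.float64)
    rec = np.asarray(reconstruction, dtype=np.float64)
    mse = np.mean((ref - rec) ** 2)
    if mse == 0:
        return float("inf")   # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)


# A reconstruction with a uniform error of 0.1 (on a [0, 1] scale) has MSE 0.01, i.e. 20 dB.
print(round(psnr(np.zeros((4, 4)), np.full((4, 4), 0.1)), 6))  # 20.0
```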

Figure 6.

SSIM and PSNR of images transmitted by UAV swarms using different semantic broadcast schemes over AWGN channel.

Figure 7.

SSIM performance of SemanticBC-DecAF across different decoders for UAV swarm transmissions under AWGN channel (SNR = −2 dB).

Figure 8 further presents the reconstruction performance of the same schemes over Rayleigh fading channels. Due to random fading, the SSIM and PSNR values of the conventional BPG+LDPC approach fluctuate significantly, particularly in mid-to-high SNR regions (e.g., –10 dB). In contrast, SemanticBC-DecAF continues to improve steadily. Notably, at SNR levels below dB, its SSIM is significantly higher than that of all conventional methods. This demonstrates that SemanticBC-DecAF can robustly extract semantic features even under fading impairments, indicating strong channel adaptability. Moreover, with a default channel bandwidth ratio of , SemanticBC-DecAF achieves higher semantic accuracy and communication efficiency than conventional schemes at identical transmission overhead, an advantage that is particularly pronounced in low-SNR regimes.
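A Rayleigh counterpart of the AWGN model can be sketched the same way, again assuming SNR = 10 log10(P/σ²) with fixed noise power. The fading coefficient h is drawn as circularly symmetric complex Gaussian with unit average power; whether fading stays constant over a transmitted image (`block=True`) or varies per symbol is an assumption exposed as a parameter.

```python
import numpy as np


def rayleigh_fixed_noise(x, snr_db, noise_power=1.0, rng=None, block=True):
    """Transmit x over a Rayleigh fading channel with fixed noise power.

    block=True draws one fading coefficient for the whole transmission (block fading);
    block=False draws an independent coefficient per symbol. Returns (y, h).
    """
    rng = np.random.default_rng() if rng is None else rng
    x = x / np.sqrt(np.mean(np.abs(x) ** 2))                  # unit average power
    tx_power = noise_power * 10.0 ** (snr_db / 10.0)          # SNR = P / sigma^2, sigma^2 fixed
    shape = (1,) if block else x.shape
    h = (rng.normal(size=shape) + 1j * rng.normal(size=shape)) / np.sqrt(2.0)   # h ~ CN(0, 1)
    noise = np.sqrt(noise_power / 2.0) * (rng.normal(size=x.shape) + 1j * rng.normal(size=x.shape))
    return h * np.sqrt(tx_power) * x + noise, h


# With perfect channel knowledge, a receiver could equalize as x_hat = y / (h * sqrt(P)).
```

Deep fades (small |h|) collapse the instantaneous SNR regardless of the nominal value, which is consistent with the large SSIM and PSNR fluctuations observed for the separation-based BPG+LDPC pipeline.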

Figure 8.

SSIM and PSNR of images transmitted by UAV swarms using different semantic broadcast schemes over Rayleigh fading channel.

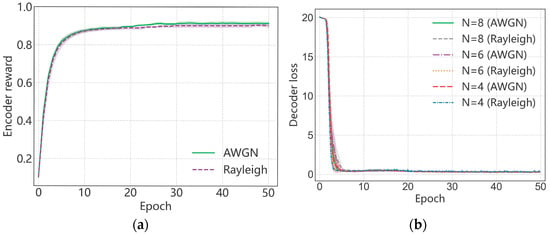

Figure 9 illustrates the convergence behavior of the proposed SemanticBC-DecAF framework, which uses an alternating training mechanism tailored for semantic broadcast communication. Figure 9a,b show the semantic encoder’s average reward and the semantic decoders’ reconstruction loss, respectively. As shown in Figure 9a, under both AWGN and Rayleigh fading channels, the encoder’s average reward rises rapidly within the first training epochs and then plateaus. This indicates that the PPO algorithm enables the encoder to efficiently learn a semantically meaningful, channel-adaptive representation. Moreover, the reward curves under different channel conditions diverge only minimally, demonstrating the encoder’s robustness to channel variability. Figure 9b further shows the average reconstruction loss of the semantic decoders under different channels and different numbers of receivers. In all configurations, the loss converges rapidly to a low value within approximately epochs with limited oscillation, indicating stable convergence. In addition, the number of receivers has a limited impact on the final loss, which supports the scalability of the proposed method in multi-agent broadcast communication scenarios.

Figure 9.

Convergence of the semantic broadcast communication model in SemanticBC-DecAF. (a) Average reward of the semantic encoder; (b) average reconstruction loss of the semantic decoders.

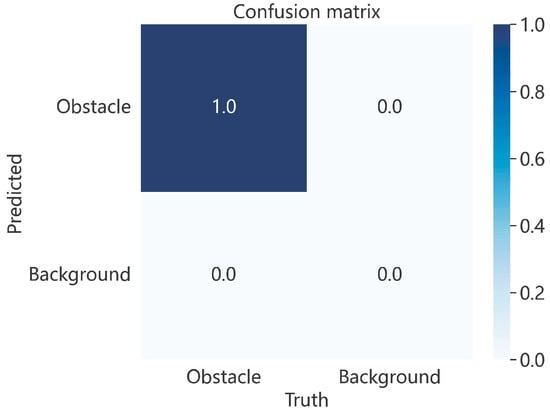

4.2.2. Position Estimation Performance

To further evaluate the effectiveness of the object detection and position estimation modules within the SemanticBC-DecAF framework under semantic broadcast communication scenarios, assessments are conducted from two perspectives: model convergence during the training phase and object recognition performance during the testing phase. Specifically, Figure 10 presents the confusion matrix generated during the testing phase. As observed, the model achieves a high classification accuracy for the “obstacle” category, reaching accuracy with no background misclassification. These results indicate that the proposed framework maintains a stable and reliable object detection capability, even when relying on images transmitted via semantic broadcast communication.

Figure 10.

Confusion matrix of the object detection and position estimation module during testing.

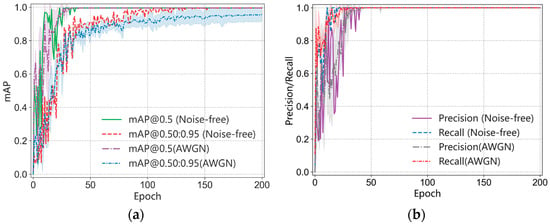

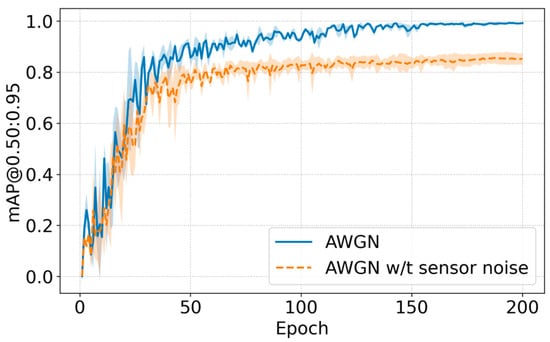

Figure 11 illustrates the performance evaluation curves of the object detection and position estimation module on the validation dataset under the AWGN channel, covering mean average precision (mAP), precision, and recall. Figure 11a depicts the evolution of mAP under two scenarios: (i) without channel noise, where images acquired by the leader UAV are used directly by the follower UAVs without transmission distortion, and (ii) over the AWGN channel, where the leader’s visual data are transmitted to the followers via semantic broadcast communication. Two intersection-over-union (IoU) threshold settings are considered, namely 0.5 and 0.50:0.95. In the noise-free case, the model converges rapidly and attains high detection accuracy, with mAP@0.5 approaching unity, indicating a strong capacity for precise obstacle identification. Under the AWGN channel, by contrast, convergence is slower and the final mAP is slightly lower, suggesting that semantic perturbations induced by channel noise can degrade detection performance. This degradation becomes more evident under stricter localization criteria (e.g., mAP@0.50:0.95), as reflected by larger performance gaps and greater variance. Figure 11b presents the evolution of precision and recall during training. Although both metrics start marginally lower under the AWGN channel than in the noise-free scenario, they improve progressively and ultimately converge to near-optimal levels (approaching 1) on the validation set. These results support the robustness of the proposed method and its capacity to gradually adapt to channel impairments in semantic broadcast communication.
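The two IoU settings can be made concrete with a small helper. mAP@0.5 counts a detection as correct when its IoU with the ground-truth box is at least 0.5, while mAP@0.50:0.95 averages the AP over ten thresholds from 0.50 to 0.95 in steps of 0.05 (the COCO convention, which YOLOv5 also reports). The sketch only shows the IoU and per-threshold matching, not a full AP computation; the box format is an assumption.

```python
import numpy as np


def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


IOU_THRESHOLDS = np.linspace(0.5, 0.95, 10)   # thresholds averaged by mAP@0.50:0.95


def matches_at_thresholds(pred_box, gt_box):
    """Boolean array: whether the prediction counts as a true positive at each threshold."""
    return box_iou(pred_box, gt_box) >= IOU_THRESHOLDS


# A prediction with IoU 0.72 is a hit at 0.50 to 0.70 but a miss at 0.75 and above.
print(matches_at_thresholds((0, 0, 10, 10), (0, 0, 10, 7.2)).sum())  # 5
```

This is why the stricter metric is more sensitive to channel noise: a slightly shifted box still passes at 0.5 but fails the upper thresholds, pulling the averaged score down.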

Figure 11.

Validation performance of the object detection and position estimation module: (a) mAP; (b) precision and recall.

To further evaluate robustness under realistic conditions, background noise induced by sensor estimation uncertainties was incorporated into the received images. As illustrated in Figure 12, within the AWGN channel, the presence of such noise leads to a discernible reduction in mAP@0.50:0.95 and a marked increase in variance across evaluation epochs. These findings indicate that sensor-related background noise not only undermines detection accuracy but also compromises the reliability and consistency of object detection and localization.

Figure 12.

Validation performance of mAP@0.50:0.95 for object detection and position estimation module.

4.2.3. Decentralized Adaptive Formation Performance

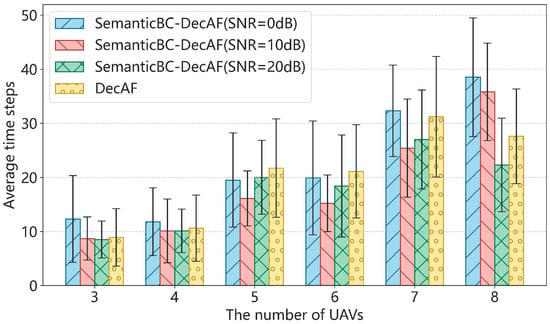

To demonstrate formation completion in UAV swarms, Figure 13 reports the average number of time steps required by follower UAVs to accomplish formation under varying swarm sizes . To analyze the influence of semantic broadcast communication on swarm formation efficiency, results for two schemes are presented: the conventional DecAF method, which relies on local observation for obstacle avoidance and navigation, and the proposed SemanticBC-DecAF method, which enables global obstacle perception through semantic broadcast communication. For the latter, the semantically encoded images transmitted from the leader to the followers are perturbed by noise under different channel conditions (SNR = dB). As illustrated in Figure 13, the improvement of SemanticBC-DecAF over the conventional DecAF baseline is modest for small swarm sizes (e.g., 3–5 UAVs), where the error bars overlap substantially. However, as the swarm size increases (e.g., 6–8 UAVs), the advantage becomes more pronounced, particularly under high-SNR conditions. This improvement is primarily attributed to access to global obstacle information via semantic broadcast communication, which facilitates more efficient navigation and obstacle avoidance. Although channel noise slightly increases formation time by reducing the accuracy of obstacle localization, the performance remains comparable or superior to that of DecAF in most scenarios. These results indicate that the proposed approach retains notable robustness and efficiency in global obstacle perception even under adverse communication conditions.

Figure 13.

Average time steps for formation completion across different swarm sizes.

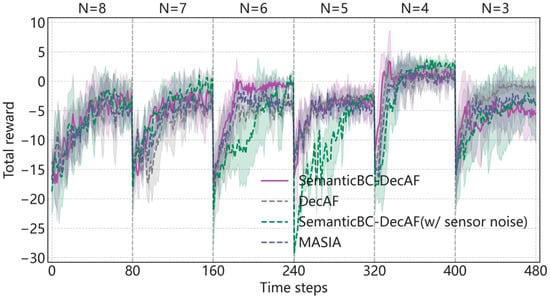

To evaluate the adaptive formation performance based on policy distillation discussed in Section 3.3, Figure 14 illustrates the temporal evolution of the total reward under both formation and obstacle avoidance tasks. As the UAV swarm progresses, every 80 time steps a randomly selected follower UAV is removed from the swarm and becomes unobservable. The experiments are conducted across formation sizes ranging from to , and reward curves are recorded accordingly. As shown in Figure 14, after each perturbation SemanticBC-DecAF rapidly restores the reward to a higher level, and its advantage becomes more pronounced for larger formation sizes (e.g., to ). This indicates that semantic broadcast communication enhances the agents’ perception of global obstacle and formation information, thereby improving the efficiency and stability of formation reconfiguration under dynamic structural changes. To account for sensing uncertainties, Gaussian noise was also injected into the position estimates of the object detection and position estimation module; as Figure 14 shows, SemanticBC-DecAF maintains rapid adaptability and stable formation performance across swarm sizes even in the presence of this sensor noise. Consequently, the proposed framework adapts well to variations in swarm size and promptly re-establishes the formation when internal changes occur within the UAV swarm. Additionally, compared with the MASIA [11] algorithm, which focuses on self-supervised information aggregation, SemanticBC-DecAF recovers formation more quickly and maintains stability better under dynamic changes. While MASIA excels in large-scale coordination, the semantic broadcast communication in SemanticBC-DecAF provides greater adaptability to perturbations and sensor noise, making it more effective for swarm reconfiguration.

Figure 14.

The total formation reward during swarm motion, where every 80 time steps a randomly selected follower UAV is removed from the formation and becomes unobservable.

5. Conclusions

In this paper, a decentralized adaptive formation framework for UAV swarms, termed SemanticBC-DecAF, is proposed under a leader–follower architecture. The proposed approach integrates a semantic broadcast communication mechanism to enhance autonomous obstacle avoidance and adaptive formation efficiency. The framework consists of three core modules: a semantic broadcast communication module, an object detection and position estimation module, and a formation module. In the PPO-optimized semantic broadcast communication module, the leader UAV acts as a shared transmitter that continuously broadcasts images containing global obstacle position information to the follower UAVs. In the YOLOv5-based object detection and position estimation module, each follower UAV uses the image reconstructed by its semantic decoder as input to estimate the positions of global obstacles. In the formation module, the predicted global obstacle positions are fused with each UAV’s local observations and jointly fed into an MAPPO-based adaptive formation strategy, enabling decentralized obstacle avoidance and adaptive formation control. The proposed framework is implemented and validated in the multi-agent simulation environment MPE. Experimental results demonstrate that, compared with the conventional DecAF approach that relies on local observation, the proposed SemanticBC-DecAF significantly improves both autonomous obstacle avoidance and formation performance. These findings verify the effectiveness of introducing semantic broadcast communication to enhance global environment perception and coordination efficiency within UAV swarms. As future work, we will further investigate the security and resilience of decentralized swarm control under increasing swarm sizes, including scenarios such as leader failure and message corruption, to enhance real-world applicability.
Moreover, we plan staged hardware-in-the-loop (HIL) and controlled flight tests to quantify SemanticBC’s robustness under varying communication and noise conditions and to integrate adaptive communication strategies.

Author Contributions

Conceptualization, X.X.; Methodology, X.X. and B.Z.; Validation, B.Z.; Formal analysis, R.L.; Investigation, B.Z.; Resources, B.Z.; Writing—original draft, X.X.; Writing—review and editing, X.X. and R.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Science and Technology Project of State Grid Hebei Information Telecommunication Branch (contract number kj2024-017).

Data Availability Statement

Data sets generated during the current study are available from the corresponding author on request.

Conflicts of Interest

Authors Xing Xu and Bo Zhang were employed by the company Information and Communication Branch of State Grid Hebei Electric Power Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Salimi, M.; Pasquier, P. Deep reinforcement learning for flocking control of UAVs in complex environments. In Proceedings of the 6th International Conference on Robotics and Automation Engineering (ICRAE), Guangzhou, China, 19–22 November 2021; pp. 344–352.

- Xing, X.; Zhou, Z.; Li, Y. Multi-UAV adaptive cooperative formation trajectory planning based on an improved MATD3 algorithm of deep reinforcement learning. IEEE Trans. Veh. Technol. 2024, 73, 12484–12499.

- Ye, T.; Qin, W.; Zhao, Z. Real-time object detection network in UAV-vision based on CNN and transformer. IEEE Trans. Instrum. Meas. 2023, 72, 1–13.

- Jiang, L.; Yuan, B.; Du, J. MFFSODNet: Multi-scale feature fusion small object detection network for UAV aerial images. IEEE Trans. Instrum. Meas. 2024, 73, 1–14.

- Xu, Z.; Zhang, B.; Li, D. Consensus learning for cooperative multi-agent reinforcement learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7 February 2023; pp. 11726–11734.

- Jiang, W.; Bao, C.; Xu, G. Research on autonomous obstacle avoidance and target tracking of UAV based on improved dueling DQN algorithm. In Proceedings of the 2021 China Automation Congress (CAC), Beijing, China, 22–24 October 2021; pp. 5110–5115.

- Monahan, G.E. State of the art—A survey of partially observable Markov decision processes: Theory, models, and algorithms. Manag. Sci. 1982, 28, 1–16.

- Xiang, Y.; Li, S.; Li, R.; Zhao, Z.; Zhang, H. Decentralized Consensus Inference-based Hierarchical Reinforcement Learning for Multi-Constrained UAV Pursuit-Evasion Game. arXiv 2025, arXiv:2506.18126.

- Foerster, J.; Assael, I.A.; De Freitas, N. Learning to communicate with deep multi-agent reinforcement learning. In Proceedings of the 30th Conference on Neural Information Processing Systems (NeurIPS), Barcelona, Spain, 5–10 December 2016; pp. 2145–2153.

- Sukhbaatar, S.; Fergus, R. Learning multiagent communication with backpropagation. In Proceedings of the 30th Conference on Neural Information Processing Systems (NeurIPS), Barcelona, Spain, 5–10 December 2016; pp. 2252–2260.

- Guan, C.; Chen, F.; Yuan, L. Efficient multi-agent communication via self-supervised information aggregation. In Proceedings of the 36th Conference on Neural Information Processing Systems (NeurIPS), New Orleans, LA, USA, 28 November–9 December 2022; pp. 1020–1033.

- Shahar, F.S.; Sultan, M.T.H.; Nowakowski, M.; Łukaszewicz, A. UGV-UAV Integration Advancements for Coordinated Missions: A Review. J. Intell. Robot. Syst. 2025, 111, 69.

- Chang, X.; Yang, Y.; Zhang, Z.; Jiao, J.; Cheng, H.; Fu, W. Consensus-Based Formation Control for Heterogeneous Multi-Agent Systems in Complex Environments. Drones 2025, 9, 175.

- Schulman, J.; Wolski, F.; Dhariwal, P. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

- Lowe, R.; Wu, Y.I.; Tamar, A. Multi-agent actor-critic for mixed cooperative-competitive environments. In Proceedings of the 31st Conference on Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 6380–6391.

- Tong, S.; Yu, X.; Li, R. Alternate Learning-Based SNR-Adaptive Sparse Semantic Visual Transmission. IEEE Trans. Wirel. Commun. 2024, 24, 1737–1752.

- De Boer, P.T.; Kroese, D.P.; Mannor, S. A tutorial on the cross-entropy method. Ann. Oper. Res. 2005, 134, 19–67.

- Jani, M.; Fayyad, J.; Al-Younes, Y. Model compression methods for YOLOv5: A review. arXiv 2023, arXiv:2307.11904.

- Khanam, R.; Hussain, M. What is YOLOv5: A deep look into the internal features of the popular object detector. arXiv 2024, arXiv:2407.20892.

- Yu, C.; Velu, A.; Vinitsky, E. The surprising effectiveness of PPO in cooperative multi-agent games. In Proceedings of the 36th Conference on Neural Information Processing Systems (NeurIPS), New Orleans, LA, USA, 28 November–9 December 2022; pp. 24611–24624.

- Pan, C.; Yan, Y.; Zhang, Z. Flexible formation control using Hausdorff distance: A multi-agent reinforcement learning approach. In Proceedings of the 30th European Signal Processing Conference (EUSIPCO), Belgrade, Serbia, 29 August–2 September 2022; pp. 972–976.

- Richardson, T.; Kudekar, S. Design of low-density parity check codes for 5G new radio. IEEE Commun. Mag. 2018, 56, 28–34.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).