Abstract

This paper explores the uniform stability and equilibrium characteristics of a class of fractional-order neural networks with time delay. The two-point self-mapped contraction theorem (Kannan's fixed-point theorem) is used to establish sufficient conditions for the uniform stability of the system. Further, the existence, uniqueness, and uniform stability of the equilibrium point are established. Numerical examples are presented to illustrate the validity and usefulness of the proposed results.

1. Introduction

Fractional calculus, an extension of classical integer-order calculus, has become a significant mathematical tool for modeling complex systems with memory and hereditary behaviour [1,2,3]. Although its development was long hampered by theoretical issues and a dearth of applications, recent decades have seen a revival of interest in fractional-order systems across a variety of scientific and engineering fields, such as viscoelastic materials, biological processes, electromagnetic theory, and financial modeling [4,5] (for more applications, we refer the reader to [6,7,8]). Fractional derivatives are especially suited to describing systems in which past states affect the current dynamics, and in such systems they provide greater modeling accuracy than integer-order methods.

Incorporating fractional operators into neural networks offers significant benefits. Lundstrom et al. [9] showed that fractional differentiation provides neurons with enhanced computational capabilities, such as efficient information processing and stimulus anticipation. Similarly, Anastasio [10] proposed that the oculomotor integrator is a fractional-order system, and Anastassiou [11] showed that fractional neural networks achieve high rates of approximation. Overall, these results suggest that fractional-order neural networks are significant not only for theoretical neuroscience but also for practical applications such as parameter estimation and pattern recognition.

In [12], Kaslik and Sivasundaram studied different classes of fractional-order neural networks and explored their stability, multistability, chaos, and synchronization properties. However, incorporating time delays, a crucial feature of biological and artificial neural systems, introduces additional complexity into the analysis of fractional-order networks. Time delays can induce oscillations, instability, or rich dynamical behaviors, which makes their rigorous treatment essential for both theoretical understanding and practical implementation (see, for example, [13,14]).

Previous stability analyses of delayed neural networks have predominantly relied on integer-order models [15,16], with results often obtained through Lyapunov functional methods. These approaches do not readily extend to fractional-order systems because of the non-local nature of fractional derivatives. Consequently, establishing stability criteria for fractional-order delayed neural networks remains an open challenge. Some researchers have applied contraction mapping principles to prove the existence and uniqueness of equilibria in fractional-order neural networks [17,18,19,20]. An analysis and review of fractional-order neural networks can be found in [21]. An extension of the admissible class of fractional-order neural models, together with an analysis of the periodicity and asymptotic behaviour of Cohen–Grossberg-type networks using Riemann–Liouville fractional operators, is presented in [22]. Various stability results under appropriate sufficient conditions, based on systematic fixed-point structures in complex-valued settings, can be found in [23,24,25]. In [26,27], the authors investigated the finite-time stability of Caputo fractional-order neural networks.

On the other hand, contractive mappings are a fundamental notion in nonlinear analysis and have become an important tool for existence and uniqueness problems in scientific and engineering models. One such class is the Kannan contraction, introduced by Kannan [28] in 1968: a self-mapping T of a metric space (X, d) is a Kannan contraction if there exists a constant κ ∈ [0, 1/2) such that

$$ d(Tx, Ty) \le \kappa\,[\,d(x, Tx) + d(y, Ty)\,] \quad \text{for all } x, y \in X. $$

This condition has become a strong alternative to the classical Banach contraction principle. Unlike the Lipschitz-type dependence on d(x, y) used in Banach's framework, the Kannan condition is based on the self-distance terms d(x, Tx) and d(y, Ty), which yields fixed-point results even when T is not continuous or not contractive with respect to pairwise distances. Owing to this structural property, generalizations of Kannan-type operators are used in many contemporary mathematical settings, including partial metric spaces [29], b-metric spaces [30], fuzzy and probabilistic metric spaces [31,32], and other metric-type structures, as well as in settings involving fractional and integral operators. These developments, together with many recent studies, show that Kannan-type contractions are useful for solving nonlinear functional, integral, and integro-differential equations and for studying the stability of complex dynamical systems in science and engineering, including fractional-order models [33,34,35], fractals, and iterative numerical schemes [36]. Kannan contractions therefore remain a strong analytical tool, especially where classical methods do not guarantee convergence.
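
To see the difference from the Banach principle concretely, the sketch below uses an illustrative map on X = [0, 1] with the usual distance (our choice of example, not one taken from the paper): T(x) = x/4 for x < 1/2 and T(x) = x/5 for x ≥ 1/2. The map jumps at x = 1/2, so it cannot be a Banach contraction (Banach contractions are continuous), yet it satisfies the Kannan inequality |Tx − Ty| ≤ κ(|x − Tx| + |y − Ty|) with κ = 1/3, which the grid check confirms numerically. Picard iteration still reaches the unique fixed point 0.

```python
# Illustrative Kannan map on X = [0, 1] with distance |x - y|.
# A textbook-style example chosen for illustration; it is not taken from the paper.


def T(x: float) -> float:
    """Discontinuous at x = 1/2, hence not a Banach contraction."""
    return x / 4 if x < 0.5 else x / 5


def kannan_ratio(x: float, y: float) -> float:
    """|Tx - Ty| / (|x - Tx| + |y - Ty|); the Kannan condition needs this <= kappa < 1/2."""
    denom = abs(x - T(x)) + abs(y - T(y))
    return abs(T(x) - T(y)) / denom if denom > 0 else 0.0


# Largest Kannan ratio over a grid of point pairs (analytically equal to 1/3).
grid = [i / 400 for i in range(401)]
worst_kannan = max(kannan_ratio(x, y) for x in grid for y in grid)

# Difference quotient across the jump at 1/2: no Lipschitz constant < 1 can exist.
eps = 1e-6
lipschitz_across_jump = abs(T(0.5 - eps) - T(0.5)) / eps

# Picard iteration x_{m+1} = T(x_m) still converges to the fixed point 0.
x = 1.0
for _ in range(30):
    x = T(x)
```

The maximal ratio 1/3 is attained for x = 0 and any y in (0, 1/2), so κ = 1/3 is the best Kannan constant for this map.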

In contrast to Lyapunov methods, the Kannan theorem does not require the differentiability assumptions typical of Lyapunov-based analysis; only a Lipschitz condition is required. This is better suited to the fractional-order context. Whereas Banach contractions require the mapping to shrink the distance between any two points, Kannan mappings are constrained through the distances between points and their images. This minor yet important difference opens new possibilities for the stability analysis of fractional-order delayed neural networks. Although the resulting condition is numerically more restrictive, it has different analytical properties, which can be useful in particular situations, especially when combined with other methods or applied to systems with a given structural property.

Motivated by the above, we present an alternative analytical framework for studying fractional-order delayed neural networks by employing the two-point self-mapped contraction theorem. Unlike the Banach contraction principle used in previous works, the two-point self-mapped contraction theorem provides distinct sufficient conditions for the existence of unique fixed points. We derive a novel stability criterion based on this approach and prove the existence of unique and uniformly stable equilibrium points for a class of fractional-order neural networks with constant delays. Our results complement existing literature by offering an alternative mathematical pathway with different parametric constraints.

This paper is organized as follows: Section 2 describes the fractional-order delayed neural network model and the necessary mathematical preliminaries, including the two-point self-mapped contraction theorem. Section 3 presents our main results: sufficient conditions for uniform stability and an analysis of equilibrium points using the two-point self-mapped contraction theorem. Section 4 provides numerical examples validating the theoretical findings, and Section 5 concludes the paper.

2. Basic Definitions and the Fractional-Order Neural Network Model

Definition 1

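([1,2]). The fractional integral of order ϖ > 0 of a function h is defined as follows:

$$ I^{\varpi} h(t) = \frac{1}{\Gamma(\varpi)} \int_{0}^{t} (t-s)^{\varpi-1}\, h(s)\,ds, \qquad t > 0, $$

where Γ(·) is the Gamma function.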
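
Definition 1 can be evaluated on a uniform grid with a product-rectangle rule: the kernel (t − s)^{ϖ−1} is integrated exactly on each subinterval, and h is frozen at the left endpoint. The sketch below is our illustration (the helper name `rl_integral` is hypothetical); it checks the rule against the closed forms I^ϖ 1 = t^ϖ/Γ(ϖ + 1), which the rule reproduces exactly, and I^ϖ t = t^{ϖ+1}/Γ(ϖ + 2).

```python
import math

import numpy as np


def rl_integral(h_vals: np.ndarray, t: np.ndarray, alpha: float) -> float:
    """Product-rectangle approximation of I^alpha h at t_N = t[-1] on a uniform grid t.

    The kernel (t_N - s)^(alpha - 1) is integrated exactly on [t_k, t_{k+1}],
    while h is frozen at the left endpoint t_k.
    """
    t_end = t[-1]
    left, right = t[:-1], t[1:]
    weights = ((t_end - left) ** alpha - (t_end - right) ** alpha) / math.gamma(alpha + 1)
    return float(np.dot(weights, h_vals[:-1]))


alpha = 0.6
t = np.linspace(0.0, 2.0, 2001)
approx_const = rl_integral(np.ones_like(t), t, alpha)   # exact for constants
exact_const = 2.0 ** alpha / math.gamma(alpha + 1)
approx_lin = rl_integral(t, t, alpha)                   # first-order accurate for h(t) = t
exact_lin = 2.0 ** (alpha + 1) / math.gamma(alpha + 2)
```

For constants the weights telescope to t^ϖ/Γ(ϖ + 1), so the rule is exact; for smooth non-constant h the error is of first order in the step size.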

Definition 2

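([1,2]). The Riemann–Liouville derivative of fractional order ϖ of a function h is defined as follows:

$$ {}^{RL}D^{\varpi} h(t) = \frac{1}{\Gamma(n-\varpi)} \frac{d^{n}}{dt^{n}} \int_{0}^{t} (t-s)^{n-\varpi-1}\, h(s)\,ds, $$

where n − 1 < ϖ < n and n ∈ ℕ.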

Definition 3

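([1,2]). The Caputo derivative of fractional order ϖ of a function h is defined as follows:

$$ {}^{C}D^{\varpi} h(t) = \frac{1}{\Gamma(n-\varpi)} \int_{0}^{t} (t-s)^{n-\varpi-1}\, h^{(n)}(s)\,ds, $$

where n − 1 < ϖ < n and n ∈ ℕ.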
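
For 0 < ϖ < 1 (so n = 1), a common way to evaluate the Caputo derivative numerically is the L1 scheme, which replaces h′ by difference quotients on a uniform grid. The sketch below is our illustration (the helper name `caputo_l1` is hypothetical); it checks the scheme against the exact value ^C D^ϖ t² = 2t^{2−ϖ}/Γ(3 − ϖ) and confirms that a constant function has zero Caputo derivative.

```python
import math

import numpy as np


def caputo_l1(u_vals: np.ndarray, h: float, alpha: float) -> float:
    """L1 approximation of the Caputo derivative (0 < alpha < 1) at the last grid point."""
    n = len(u_vals) - 1
    k = np.arange(n)
    b = (k + 1.0) ** (1.0 - alpha) - k ** (1.0 - alpha)   # L1 weights b_k
    increments = u_vals[n - k] - u_vals[n - k - 1]        # u_{n-k} - u_{n-k-1}
    return float(np.dot(b, increments)) / (h ** alpha * math.gamma(2.0 - alpha))


alpha = 0.5
t = np.linspace(0.0, 1.0, 1001)
h = t[1] - t[0]
approx_sq = caputo_l1(t ** 2, h, alpha)
exact_sq = 2.0 * t[-1] ** (2.0 - alpha) / math.gamma(3.0 - alpha)
approx_const = caputo_l1(np.full_like(t, 3.7), h, alpha)  # Caputo derivative of a constant
```

The scheme is accurate to order h^{2−ϖ} for smooth functions, and because it only sees increments of the sampled function, it returns exactly zero for constants.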

Lemma 1

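([3]). If h ∈ C^n[0, ∞) and n − 1 < ϖ < n, then

$$ I^{\varpi}\,{}^{C}D^{\varpi} h(t) = h(t) - \sum_{k=0}^{n-1} \frac{h^{(k)}(0)}{k!}\, t^{k}. $$

In particular, for 0 < ϖ < 1, I^ϖ ^C D^ϖ h(t) = h(t) − h(0).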
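
As a quick consistency check of Lemma 1 (a worked example added for illustration, not taken from [3]), take 0 < ϖ < 1 and h(t) = 1 + t². Using the standard power rules I^ϖ t^β = Γ(β + 1) t^{β+ϖ}/Γ(β + ϖ + 1) and ^C D^ϖ t² = 2t^{2−ϖ}/Γ(3 − ϖ), together with ^C D^ϖ 1 = 0:

$$
\begin{aligned}
{}^{C}D^{\varpi} h(t) &= 0 + \frac{2}{\Gamma(3-\varpi)}\, t^{2-\varpi},\\
I^{\varpi}\,{}^{C}D^{\varpi} h(t) &= \frac{2}{\Gamma(3-\varpi)} \cdot \frac{\Gamma(3-\varpi)}{\Gamma(3)}\, t^{2} = t^{2} = h(t) - h(0).
\end{aligned}
$$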

Remark 1.

The Caputo derivative has the advantage that initial conditions take the same form as for integer-order differential equations. Moreover, the Caputo derivative of a constant is zero. Therefore, throughout this paper, we use the Caputo fractional derivative operator to model our neural network system.
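
The second point of Remark 1 can be made concrete with a standard computation (included for illustration). Let 0 < ϖ < 1, so n = 1, and take a constant function h(t) ≡ K. The Riemann–Liouville and Caputo definitions give

$$
\begin{aligned}
{}^{RL}D^{\varpi} K &= \frac{K}{\Gamma(1-\varpi)} \frac{d}{dt} \int_{0}^{t} (t-s)^{-\varpi}\,ds = \frac{K}{\Gamma(1-\varpi)} \frac{d}{dt} \frac{t^{1-\varpi}}{1-\varpi} = \frac{K\, t^{-\varpi}}{\Gamma(1-\varpi)},\\
{}^{C}D^{\varpi} K &= \frac{1}{\Gamma(1-\varpi)} \int_{0}^{t} (t-s)^{-\varpi} \cdot 0\,ds = 0.
\end{aligned}
$$

Thus the Riemann–Liouville derivative of a nonzero constant is nonzero (and singular at t = 0), whereas the Caputo derivative vanishes, which is what allows a constant equilibrium to be a solution of a Caputo-type system.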

The dynamic behavior of a continuous fractional-order delayed neural network is described by the following:

for , and with . Equivalently, in vector form:

where

- is the state vector;

- with ;

- and are connection weight matrices;

- and are activation functions;

- is the transmission delay;

- is the external bias vector.

The initial conditions associated with system (6) are as follows:

where . The norm on is defined as follows:

Definition 4

([28]). Let (X, d) be a metric space. A self-mapping T: X → X is said to be a two-point self-mapped contraction if there exists a constant κ ∈ [0, 1/2) such that

$$ d(Tx, Ty) \le \kappa\,[\,d(x, Tx) + d(y, Ty)\,] $$

for all x, y ∈ X.

Theorem 1

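(Kannan Fixed Point Theorem [28]). Let (X, d) be a complete metric space and let T: X → X be a mapping satisfying

$$ d(Tx, Ty) \le \kappa\,[\,d(x, Tx) + d(y, Ty)\,], \qquad 0 \le \kappa < \tfrac{1}{2}, $$

for all x, y ∈ X. Then T has a unique fixed point x* ∈ X, and for any x_0 ∈ X, the sequence

$$ x_{m+1} = T x_{m}, \qquad m = 0, 1, 2, \dots, $$

converges to x*.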
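
The convergence of the iterates can be quantified by a standard argument, sketched here for illustration (it is not part of the original statement). Let T satisfy the Kannan inequality with constant κ ∈ [0, 1/2) and let x_{m+1} = T x_m. Setting x = x_m and y = x_{m−1} gives

$$
\begin{aligned}
d(x_{m+1}, x_{m}) = d(Tx_{m}, Tx_{m-1}) &\le \kappa\,[\,d(x_{m}, x_{m+1}) + d(x_{m-1}, x_{m})\,]\\
\Longrightarrow\quad d(x_{m+1}, x_{m}) &\le q\, d(x_{m}, x_{m-1}), \qquad q = \frac{\kappa}{1-\kappa} < 1,
\end{aligned}
$$

so d(x_{m+1}, x_m) ≤ q^m d(x_1, x_0), and summing the geometric tail yields the a priori bound

$$ d(x_{m}, x^{*}) \le \frac{q^{m}}{1-q}\, d(x_{1}, x_{0}). $$

The requirement κ < 1/2 is exactly what makes q < 1.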

Remark 2.

The Kannan contraction is also referred to as a two-point self-mapped contraction because the contractive condition

$$ d(Tx, Ty) \le \kappa\,[\,d(x, Tx) + d(y, Ty)\,] $$

does not depend on the direct distance d(x, y) between two distinct points x and y, unlike the classical Banach contraction. Instead, it depends solely on the self-distances of each point from its own image under T, namely d(x, Tx) and d(y, Ty). Thus, the contraction property is determined by two points and their self-mapped distances, making it an intrinsic contraction based on how far each point is from its image.

Assumption A1.

There exist positive constants such that for all :

Assumption A2.

The system parameters satisfy the following:

where

For , let with the norm:

For initial functions , define:

Remark 3.

Assumption 2 above is an algebraic, easy-to-check sufficient condition, distinct from the usual (Banach) contraction condition. It is stricter, but it is simple to verify when designing network parameters.
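
Because Assumption 2 is algebraic, it can be checked mechanically. Its exact expression is not reproduced in this text, so the sketch below assumes one natural form (hypothetical here): the standard model in which neuron i has self-inhibition rate c_i > 0, connection weights a_ij and b_ij, and activation Lipschitz constants μ_j and ν_j, with the equilibrium map x ↦ (A f(x) + B g(x) + I)/c measured in the 1-norm. The helper `kannan_check` (a hypothetical name) computes L = max_j Σ_i (|a_ij| μ_j + |b_ij| ν_j)/c_i, tests the one-third threshold described in Remark 8, and reports the resulting Kannan constant L/(1 − L).

```python
import numpy as np


def kannan_check(c, A, B, mu, nu):
    """Hypothetical form of Assumption 2 (an assumption, not the paper's exact formula).

    Returns the 1-norm Lipschitz constant L of the assumed equilibrium map
    x -> (A f(x) + B g(x) + I) / c, the Kannan constant kappa = L / (1 - L),
    and whether L < 1/3 (equivalently kappa < 1/2).
    """
    c, A, B = np.asarray(c, float), np.asarray(A, float), np.asarray(B, float)
    mu, nu = np.asarray(mu, float), np.asarray(nu, float)
    # Entry (i, j): influence of x_j on neuron i, scaled by 1 / c_i.
    col = (np.abs(A) * mu + np.abs(B) * nu) / c[:, None]
    L = col.sum(axis=0).max()             # maximum column sum = 1-norm bound
    kappa = L / (1.0 - L) if L < 1.0 else float("inf")
    return L, kappa, L < 1.0 / 3.0


# Hypothetical parameters (not those of Example 1 or Example 2).
L, kappa, ok = kannan_check(
    c=[3.0, 3.0],
    A=[[0.2, -0.1], [0.1, 0.2]],
    B=[[0.1, 0.05], [-0.05, 0.1]],
    mu=[1.0, 1.0],
    nu=[1.0, 1.0],
)
```

With these made-up values both column sums equal 0.15, so L = 0.15 < 1/3 and κ ≈ 0.176 < 1/2.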

Definition 5.

The solution of (4) is uniformly stable if for any , there exists such that for any two solutions and with initial conditions φ and ϱ, respectively, implies for all .

3. Existence, Uniqueness, and Uniform Stability Results

Theorem 2.

Under Assumptions 1 and 2, and if , then system (4) is uniformly stable.

Proof.

Let and be any two solutions of (4) with different initial conditions and for , where and . Let . Then from (4) we have the following:

Applying the fractional integral operator to both sides of Equation (10) and using the property of the Caputo derivative given in Lemma 1, we obtain the following integral form:

Now multiply both sides by and take absolute values:

Using , we obtain the following:

We now estimate the right-hand side, bounding each term of the integrand separately:

Let us denote , , and .

For the first integral, change the variable :

For the second integral, using :

For the third integral, we split the integration domain into two parts: and (if ).

Case 1: (or ). In this case, , so . Also, . Thus,

Case 2: (if ). Let . Then and . Also, when , ; when , . Thus,

Combining the estimates for the third integral, we obtain the following:

Now, putting all three estimates together into (14), we have the following:

Taking the supremum over on the left-hand side and summing over , we obtain the following:

where , and also note that . Since for all i, we have the following:

Thus,

Rearranging terms gives the following:

Under Assumption 2, we have . Since , it follows that . Therefore,

If , then , and hence . Consequently,

Now for any , we can choose .

Then, if , we have the following:

This implies the following:

which means that for all . Therefore, system (4) is uniformly stable. □

Theorem 3.

Under Assumptions 1 and 2, there exists a unique equilibrium point of the system (4).

Proof.

The equilibrium point satisfies the following:

Let . Then,

Define the mapping by the following:

We show that satisfies the two-point self-mapped contraction condition on the complete metric space , where . For any , using Assumption 1, we have the following:

Summing over i:

Thus, is Lipschitz with constant . By Assumption 2, . Now, for any ,

Using the triangle inequality:

Substituting the Lipschitz bound:

Rearranging yields the following:

Since , we have and . Thus,

with . Hence, satisfies the two-point self-mapped contraction condition. By the two-point self-mapped contraction theorem (Theorem 1), has a unique fixed point . Then is the unique equilibrium of system (4). □
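
The rearrangement in the proof above is a general mechanism, spelled out here with generic symbols for illustration: suppose a self-map T of a metric space satisfies d(Tx, Ty) ≤ L d(x, y) with 0 ≤ L < 1/3 (L is a generic Lipschitz constant standing in for the constant controlled by Assumption 2). Then, by the triangle inequality,

$$
\begin{aligned}
d(Tx, Ty) &\le L\, d(x, y) \le L\,[\,d(x, Tx) + d(Tx, Ty) + d(Ty, y)\,],\\
(1-L)\, d(Tx, Ty) &\le L\,[\,d(x, Tx) + d(y, Ty)\,],\\
d(Tx, Ty) &\le \kappa\,[\,d(x, Tx) + d(y, Ty)\,], \qquad \kappa = \frac{L}{1-L}.
\end{aligned}
$$

Since κ < 1/2 if and only if L < 1/3, the one-third threshold mentioned in Remark 8 is exactly what turns the Lipschitz bound into a Kannan condition.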

Theorem 4.

Under Assumptions 1 and 2, and if , then the unique equilibrium point of system (4) is uniformly stable.

Proof.

The existence and uniqueness of follow from Theorem 3. For any solution of (4) with initial condition , let be the constant equilibrium solution. By the estimate established in the proof of Theorem 2, we have

where is the constant function equal to on . For any , choose . Then if , we have for all . Hence, is uniformly stable. □

Remark 4.

The condition ensures positivity of . This can be relaxed by using a weighted norm with rate , leading to .

Remark 5.

The condition used here to obtain a two-point self-mapped contraction is stronger than the Banach contraction condition, but it provides an alternative fixed-point approach.

Remark 6.

The uniform stability result is global due to Lipschitz conditions and holds independently of τ for finite time intervals.

4. Numerical Examples

Example 1.

Consider a two-state fractional-order delayed neural network:

with , , and activation functions:

For the sigmoid function, we have the Lipschitz constant (since the maximum derivative is ).
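
Assuming the sigmoid here is the logistic function σ(u) = 1/(1 + e^{−u}), its derivative σ′(u) = σ(u)(1 − σ(u)) peaks at u = 0 with value 1/4, which is its Lipschitz constant; for the hyperbolic tangent the corresponding constant is 1. The sketch below (our illustration; `lipschitz_estimate` is a hypothetical helper) confirms both values from difference quotients on a fine grid.

```python
import numpy as np


def logistic(u):
    """Logistic sigmoid 1 / (1 + e^{-u}); assumed here to be the 'sigmoid' of Example 1."""
    return 1.0 / (1.0 + np.exp(-u))


def lipschitz_estimate(fn, lo=-10.0, hi=10.0, num=200001):
    """Largest difference quotient of fn on a fine uniform grid (a numerical lower bound on L)."""
    u = np.linspace(lo, hi, num)
    return float(np.max(np.abs(np.diff(fn(u)) / np.diff(u))))


L_logistic = lipschitz_estimate(logistic)   # analytic value: sigma'(0) = 1/4
L_tanh = lipschitz_estimate(np.tanh)        # analytic value: tanh'(0) = 1
```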

The system parameters are as follows:

We now compute and as follows:

Next, we compute the associated norms:

We then verify the two-point self-mapped contraction condition:

Thus, the condition is satisfied.

Finally, we verify the additional condition required in Theorems 2 and 4:

This condition is also satisfied.

The equilibrium point satisfies:

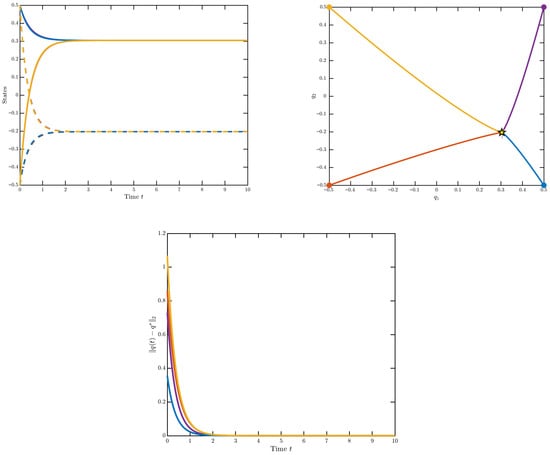

From the plots in Figure 1, we obtain the unique equilibrium:

Figure 1 shows the convergence of the system trajectories to the equilibrium point from different initial conditions, demonstrating the uniform stability.
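
Trajectories of the kind shown in Figure 1 can be reproduced, at least qualitatively, with an explicit Grünwald–Letnikov scheme. Because the parameter values of Example 1 are not reproduced in this text, the sketch below is a hypothetical setup: it assumes the standard componentwise model ^C D^ϖ x_i(t) = −c_i x_i(t) + Σ_j a_ij f_j(x_j(t)) + Σ_j b_ij g_j(x_j(t − τ)) + I_i with f = g = tanh, a constant initial history, and made-up weights for which the 1-norm Lipschitz constant of the equilibrium map is 0.15 < 1/3. The scheme applies the Grünwald–Letnikov sum to z = x − x(0), which turns the Caputo derivative into a Riemann–Liouville derivative with zero initial value.

```python
import numpy as np


def gl_weights(alpha: float, n: int) -> np.ndarray:
    """Grunwald-Letnikov weights w_j = (-1)^j * binom(alpha, j), j = 0..n."""
    w = np.empty(n + 1)
    w[0] = 1.0
    for j in range(1, n + 1):
        w[j] = w[j - 1] * (1.0 - (alpha + 1.0) / j)
    return w


def simulate(x0, alpha, tau, c, A, B, I, h=0.01, t_end=20.0):
    """Explicit GL scheme for the ASSUMED delayed model with f = g = tanh and constant history x0."""
    x0 = np.asarray(x0, dtype=float)
    n_steps = int(round(t_end / h))
    d = int(round(tau / h))                      # delay measured in grid steps
    w = gl_weights(alpha, n_steps)
    x = np.empty((n_steps + 1, x0.size))
    z = np.zeros_like(x)                         # z_n = x_n - x0, so z_0 = 0
    x[0] = x0
    ha = h ** alpha
    for n in range(1, n_steps + 1):
        x_now = x[n - 1]
        x_delay = x[n - 1 - d] if n - 1 - d >= 0 else x0   # history on [-tau, 0]
        rhs = -c * x_now + A @ np.tanh(x_now) + B @ np.tanh(x_delay) + I
        memory = w[1:n + 1] @ z[n - 1::-1]       # sum_{j=1}^{n} w_j z_{n-j}
        z[n] = ha * rhs - memory
        x[n] = x0 + z[n]
    return x


# Hypothetical parameters (not those of Example 1).
alpha, tau = 0.9, 0.5
c = np.array([3.0, 3.0])
A = np.array([[0.2, -0.1], [0.1, 0.2]])
B = np.array([[0.1, 0.05], [-0.05, 0.1]])
I = np.array([0.5, -0.3])

starts = ([2.0, -2.0], [-2.0, 2.0], [0.0, 0.0])
finals = np.array([simulate(x0, alpha, tau, c, A, B, I)[-1] for x0 in starts])
spread = np.max(np.abs(finals - finals.mean(axis=0)))   # distance between end states
```

Plotting the rows of each returned array against time gives curves like those in Figure 1. The explicit scheme is first-order accurate and costs O(N²) operations because of the memory sum, which is acceptable for a short illustration.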

Figure 1.

Time evolution, trajectories of the system, and the convergence of the system for Example 1.

Therefore, by Theorems 2–4, the system has a unique equilibrium point which is uniformly stable.

Numerical Example 1 checks the validity of the obtained stability conditions, demonstrates the existence and uniqueness of the equilibrium point, and visually confirms the uniform stability of the fractional-order delayed neural network.

Remark 7.

The convergence observed in Example 1 illustrates important characteristics of fractional-order delayed networks: trajectories starting from widely separated initial conditions all converge to the same equilibrium, which reflects the uniform stability established by the theory. The smooth, history-dependent settling (rather than exponential decay) reflects the memory effect inherent in the fractional order ϖ, through which the network retains the influence of its previous states over time. No specific metrics, such as Lyapunov exponents or convergence times, were measured, but the observed behavior is consistent with the theoretical prediction that such systems remain stable under moderate delays.
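
The contrast between history-dependent settling and exponential decay can be seen on the scalar linear test equation ^C D^ϖ x = −x with x(0) = 1 (our illustration, not a model from the paper), whose solution is the Mittag-Leffler function x(t) = E_ϖ(−t^ϖ), where E_ϖ(z) = Σ_k z^k/Γ(ϖk + 1). For ϖ = 1 this reduces to e^{−t}; for ϖ < 1 the tail decays only algebraically, roughly like t^{−ϖ}/Γ(1 − ϖ). The truncated power series below is a sketch that is adequate only for moderate |z|; it loses accuracy through cancellation for large arguments.

```python
import math


def mittag_leffler(alpha: float, z: float, terms: int = 150) -> float:
    """Truncated series E_alpha(z) = sum_k z^k / Gamma(alpha*k + 1); for moderate |z| only."""
    return sum(z ** k / math.gamma(alpha * k + 1.0) for k in range(terms))


t = 5.0
fractional = mittag_leffler(0.9, -t ** 0.9)   # solution of D^0.9 x = -x, x(0) = 1, at t = 5
classical = mittag_leffler(1.0, -t)           # alpha = 1 recovers exp(-t)
```

At t = 5 the fractional solution is several times larger than e^{−5}, which is the slower, memory-driven settling described above.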

Example 2.

Consider the following two-state fractional-order delayed Hopfield neural network model:

Here, we take the order , , and

which satisfies Lipschitz conditions with constants .

For this system, we have the following:

Now compute the following:

Hence,

and

Thus, Assumptions 1 and 2, together with the condition , are satisfied. By Theorems 3 and 4, system (17) possesses a unique equilibrium point, which is uniformly stable.

The equilibrium equations are as follows:

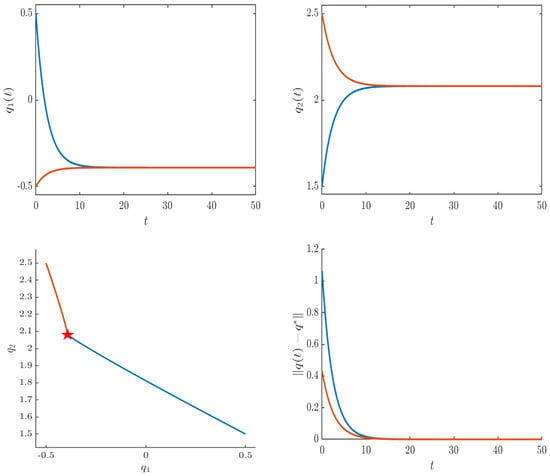

Considering the saturation regions of the piecewise-linear activation yields the following:

A numerical simulation of (17) confirms that trajectories starting from various initial conditions converge to ; a typical phase portrait is shown in Figure 2. Therefore, by Theorems 2–4, the system has a unique equilibrium point which is uniformly stable.
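
The equilibrium can also be approximated by the Picard iteration of Theorem 1 applied to the equilibrium map. Since the parameters of Example 2 are not reproduced in this text, the sketch below uses hypothetical values and assumes the common piecewise-linear activation f(u) = (|u + 1| − |u − 1|)/2 (Lipschitz constant 1) for both activations, with the equilibrium map x ↦ (A f(x) + B f(x) + I)/c. For these values the 1-norm Lipschitz constant of the map is 0.24 < 1/3, and the first component settles in the saturation region u ≥ 1, so the fixed point can be cross-checked by hand.

```python
import numpy as np


def pwl(u):
    """Piecewise-linear activation (|u + 1| - |u - 1|) / 2: linear on [-1, 1], saturated outside."""
    return (np.abs(u + 1.0) - np.abs(u - 1.0)) / 2.0


def equilibrium(c, A, B, I, tol=1e-13, max_iter=200):
    """Picard iteration x_{m+1} = T(x_m) for the ASSUMED map T(x) = (A f(x) + B f(x) + I) / c."""
    x = np.zeros_like(I, dtype=float)
    for m in range(1, max_iter + 1):
        x_next = (A @ pwl(x) + B @ pwl(x) + I) / c
        if np.abs(x_next - x).sum() < tol:      # 1-norm step size
            return x_next, m
        x = x_next
    raise RuntimeError("Picard iteration did not converge")


# Hypothetical parameters (not the values of Example 2).
c = np.array([5.0, 5.0])
A = np.array([[0.6, 0.2], [0.1, 0.6]])
B = np.array([[0.3, -0.1], [0.1, 0.3]])
I = np.array([6.0, -1.0])
x_star, iterations = equilibrium(c, A, B, I)
```

By hand: with f(x_1) = 1 in the saturation region and f(x_2) = x_2 in the linear region, the second equation gives 4.1 x_2 = −0.8, so x_2 = −8/41, and the first gives x_1 = 2821/2050 ≈ 1.376, which the iteration reproduces.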

Figure 2.

The convergence of the system and the trajectories of the system for Example 2.

Numerical Example 2 checks the validity of the obtained stability conditions, demonstrates the existence and uniqueness of the equilibrium point, and visually confirms the uniform stability of the two-state fractional-order delayed Hopfield neural network model.

Remark 8.

The chosen parameters in this example are carefully designed to satisfy both the Kannan condition and the additional condition . Several important observations can be made, namely:

- The values and are relatively large compared to the connection weights. This ensures that the self-stabilizing effect dominates the potentially destabilizing effects of the interconnections, which is crucial for stability. The condition provides a safety margin that guarantees the positivity of the stability coefficient.

- The elements of matrices and are kept small relative to . This is essential for satisfying the Kannan condition, which requires the combined effect of all connections to be less than one-third of the smallest self-inhibition rate.

- The matrices and are designed to be diagonally dominant with positive diagonal elements, representing self-excitation that is counterbalanced by the negative self-inhibition terms . This balance prevents runaway excitation while allowing meaningful information processing.

- The fractional order introduces memory effects that slightly slow the convergence compared to integer-order systems, but do not compromise stability.

Remark 9.

The proposed stability criteria are sufficient and therefore conservative. If the Lipschitz constants of the activation functions or the coupling strengths exceed the bounds given in Assumption 2, the two-point self-mapped contraction property may be violated, and the stability conclusions no longer apply. In such cases, instability or more complex dynamical behaviors (for example, oscillations or chaos) may occur, which are beyond the scope of the present analysis.

5. Conclusions

This paper has investigated the stability of a class of fractional-order neural networks with time delays using an alternative fixed-point approach based on the two-point self-mapped contraction theorem. Unlike traditional methods that rely on the Banach contraction mapping principle, we have derived a sufficient condition for uniform stability that depends on the network parameters and the Lipschitz constants of the activation functions. Specifically, we established that under the condition , the system possesses a unique equilibrium point that is uniformly stable.

The main contributions of this work include the following:

- Introducing a new analytical tool for the stability analysis of fractional-order delayed neural networks, based on the Kannan fixed-point theorem.

- Proving the existence and uniqueness of equilibrium points through the two-point self-mapped fixed-point theorem, which offers an alternative mathematical framework to traditional contraction mapping approaches.

- Establishing uniform stability criteria for fractional-order delayed neural networks, extending previous results that primarily focused on integer-order or delay-free fractional-order systems.

- Demonstrating the theoretical results through numerical examples, which confirm the validity and practical applicability of the derived conditions.

Although the derived condition is more restrictive than the conditions based on the Banach contraction mapping principle, it offers a new perspective on the stability analysis of fractional-order neural networks and may motivate other analytical methods in future studies. Possible directions for future research include extending the results to networks with time-varying delays, distributed delays, or stochastic perturbations, and to other classes of fractional-order dynamical systems via two-point self-mapped fixed-point theorems.

Author Contributions

Conceptualization, S.K.P. and A.H.A.; methodology, N.V.; validation, J.A. and N.V.; formal analysis, N.V.; investigation, S.K.P.; writing—original draft preparation, S.K.P., N.V. and A.H.A.; writing—review and editing, S.K.P., N.V. and A.H.A.; supervision, A.H.A. and J.A.; project administration, S.K.P.; funding acquisition, J.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data used and/or analyzed during the current study is contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Podlubny, I. Fractional Differential Equations; Academic Press: San Diego, CA, USA, 1999.

- Kilbas, A.A.; Srivastava, H.M.; Trujillo, J.J. Theory and Applications of Fractional Differential Equations; Elsevier: New York, NY, USA, 2006.

- Li, C.P.; Deng, W.H. Remarks on fractional derivatives. Appl. Math. Comput. 2007, 187, 777–784.

- Cartea, I.; del-Castillo-Negrete, D. Fractional diffusion models of option prices in markets with jumps. Physica A 2007, 374, 749–763.

- Cont, R.; Tankov, P. Financial Modelling with Jump Processes; CRC Press: Boca Raton, FL, USA, 2004.

- Kiryakova, V. Generalized Fractional Calculus and Applications; Pitman Research Notes in Mathematics Series; Longman: Harlow, UK, 1994; Volume 301.

- Agarwal, R.P. Difference Equations and Inequalities: Theory, Methods, and Applications; CRC Press: Boca Raton, FL, USA, 2000.

- Miller, K.S.; Ross, B. An Introduction to the Fractional Calculus and Fractional Differential Equations; John Wiley & Sons: New York, NY, USA, 1993.

- Lundstrom, B.; Higgs, M.; Spain, W.; Fairhall, A. Fractional differentiation by neocortical pyramidal neurons. Nat. Neurosci. 2008, 11, 1335–1342.

- Anastasio, T. The fractional-order dynamics of brainstem vestibulo-oculomotor neurons. Biol. Cybern. 1994, 72, 69–79.

- Anastassiou, G. Fractional neural network approximation. Comput. Math. Appl. 2012, 64, 1655–1676.

- Kaslik, E.; Sivasundaram, S. Nonlinear dynamics and chaos in fractional-order neural networks. Neural Netw. 2012, 32, 245–256.

- Arena, P.; Fortuna, L.; Porto, D. Chaotic behavior in noninteger-order cellular neural networks. Phys. Rev. E 2000, 61, 776–781.

- Boroomand, A.; Menhaj, M. Fractional-order Hopfield neural networks. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5506, pp. 883–890.

- Zheng, C.; Zhang, H.; Wang, Z. Novel delay-dependent criteria for global robust exponential stability of delayed cellular neural networks with norm-bounded uncertainties. Neurocomputing 2009, 72, 1744–1754.

- Zhang, H.; Dong, M.; Wang, Y.; Sun, N. Stochastic stability analysis of neutral-type impulsive neural networks with mixed time-varying delays and Markovian jumping. Neurocomputing 2010, 73, 2689–2695.

- Chen, L.; Chai, Y.; Wu, R.; Ma, T.; Zhai, H. Dynamic analysis of a class of fractional-order neural networks with delay. Neurocomputing 2013, 111, 190–194.

- Panda, S.K.; Vijayakumar, V.; Agarwal, R.P. Equilibrium points, dynamics and synchronization of neural networks. In Fixed Point Theory from Early Foundations to Contemporary Challenges; Synthesis Lectures on Mathematics & Statistics; Springer: Cham, Switzerland, 2025.

- Panda, S.K.; Vijayakumar, V.; Agarwal, R.P. On the uniform stability of fractional-order neural networks and solutions to nonlinear mixed integral equations via nonlinear ϝ-contractions. Complex Anal. Oper. Theory 2025, 19, 191.

- Panda, S.K.; Vijayakumar, V.; Agarwal, R.P.; Rasham, T. Fractional-order complex-valued neural networks: Stability. Discret. Contin. Dyn. Syst. Ser. S 2025, 18, 2622–2643.

- Li, Z.; Jiang, W.; Zhang, Y. Dynamic analysis of fractional-order neural networks with inertia. AIMS Math. 2022, 7, 16889–16906.

- Panda, S.K.; Vijayakumar, V. Results on finite-time stability of various fractional-order systems. Chaos Solitons Fractals 2023, 174, 113906.

- Panda, S.K.; Kalla, K.S.; Nagy, A.M.; Priyanka, L. Numerical simulations and complex-valued fractional-order neural networks via (ε − μ)-uniformly contractive mappings. Chaos Solitons Fractals 2023, 173, 113738.

- Panda, S.K.; Abdeljawad, T.; Nagy, A.M. On uniform stability and numerical simulations of complex-valued neural networks involving generalized Caputo fractional order. Sci. Rep. 2024, 14, 4073.

- Song, C.; Cao, J. Dynamics in fractional-order neural networks. Neurocomputing 2014, 142, 494–498.

- Chang, X.; Xiao, Q.; Zhu, Y.; Xiao, J. Stability analysis of two kinds of fractional-order neural networks based on Lyapunov method. IEEE Access 2021, 9, 124132–124141.

- You, X.; Song, Q.; Zhao, Z. Existence and finite-time stability of discrete fractional-order complex-valued neural networks with time delays. Neural Netw. 2020, 123, 248–260.

- Kannan, R. Some results on fixed points. Bull. Calcutta Math. Soc. 1968, 60, 71–76.

- Santina, D.; Othman, W.A.M.; Wong, K.B.; Mlaiki, N. Exploring strong controlled partial metric type spaces: Analysis of fixed points and theoretical contributions. Heliyon 2024, 10, 20.

- Lu, N.; He, F.; Du, W.S. On the best areas for Kannan system and Chatterjea system in b-metric spaces. Optimization 2020, 69, 973–986.

- Younis, M.; Abdou, A.A.N. Novel fuzzy contractions and applications to engineering science. Fractal Fract. 2024, 8, 28.

- Choudhury, B.S.; Bhandari, S.K. Kannan-type cyclic contraction results in 2-Menger space. Math. Bohem. 2016, 141, 37–58.

- Hussain, A. Fractional convex type contraction with solution of fractional differential equation. AIMS Math. 2020, 5, 5364–5380.

- Din, M.; Ishtiaq, U.; Alnowibet, K.A.; Lazăr, T.A.; Lazăr, V.L.; Guran, L. Certain Novel Fixed-Point Theorems Applied to Fractional Differential Equations. Fractal Fract. 2024, 8, 701.

- Wangwe, L.; Kumar, S. A common fixed point theorem for generalized F-Kannan mapping in metric space with applications. In Abstract and Applied Analysis; Hindawi: Cairo, Egypt, 2021; p. 6619877.

- Thangaraj, C.; Easwaramoorthy, D.; Selmi, B.; Chamola, B.P. Generation of fractals via iterated function system of Kannan contractions in controlled metric space. Math. Comput. Simul. 2024, 222, 188–198.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.