Multi-Scale Attention-Augmented YOLOv8 for Real-Time Surface Defect Detection in Fresh Soybeans

Abstract

1. Introduction

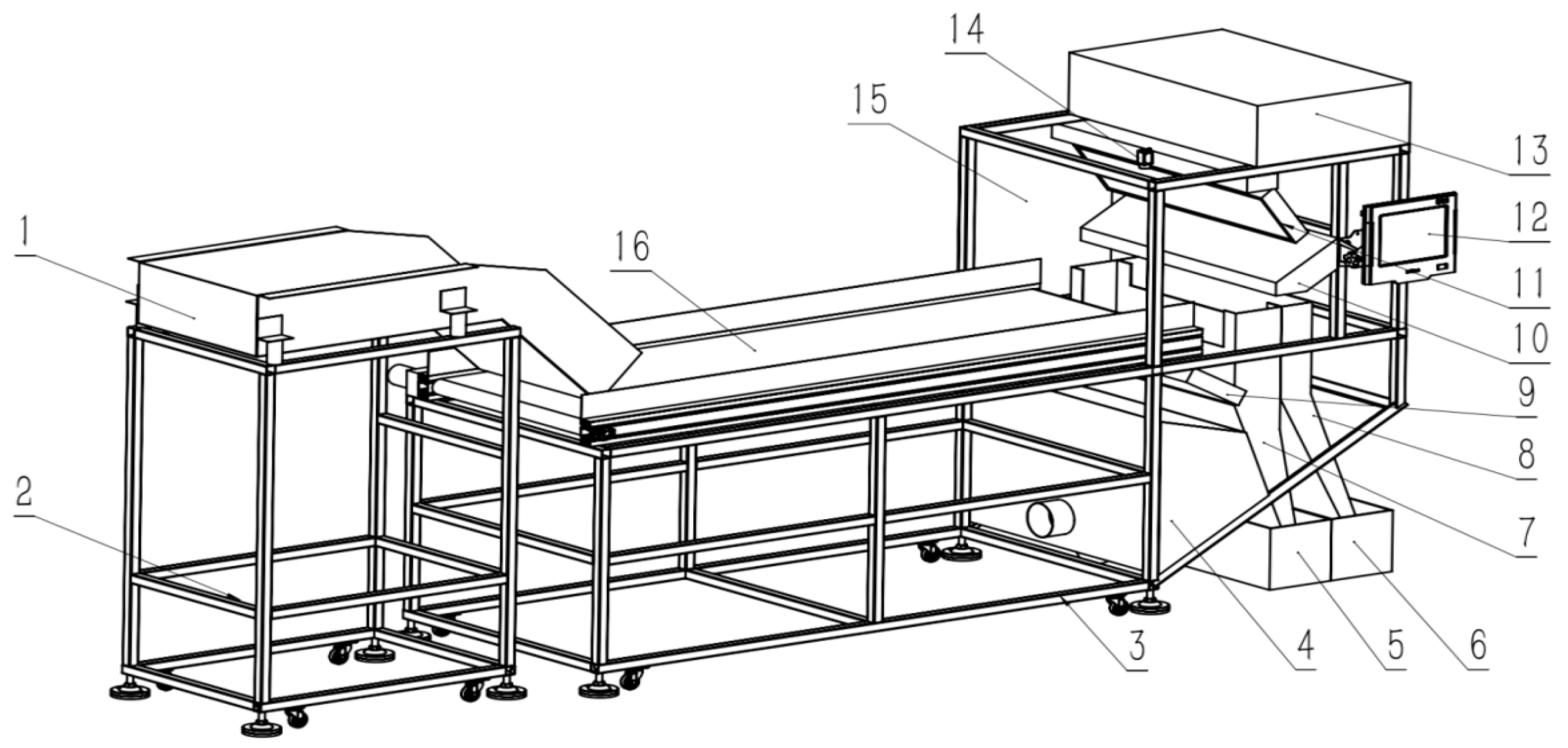

2. Materials and Methods

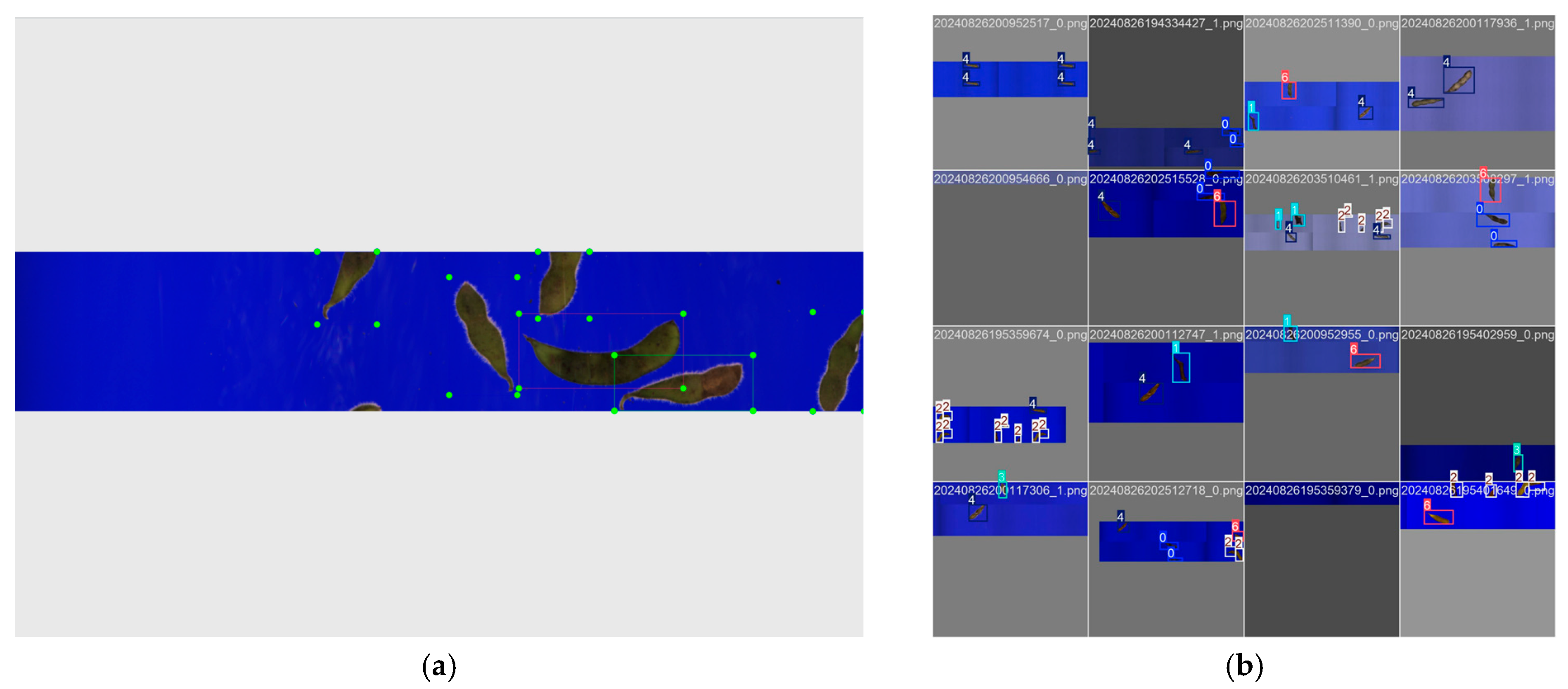

2.1. Image Acquisition and Dataset

2.2. Data Preprocessing

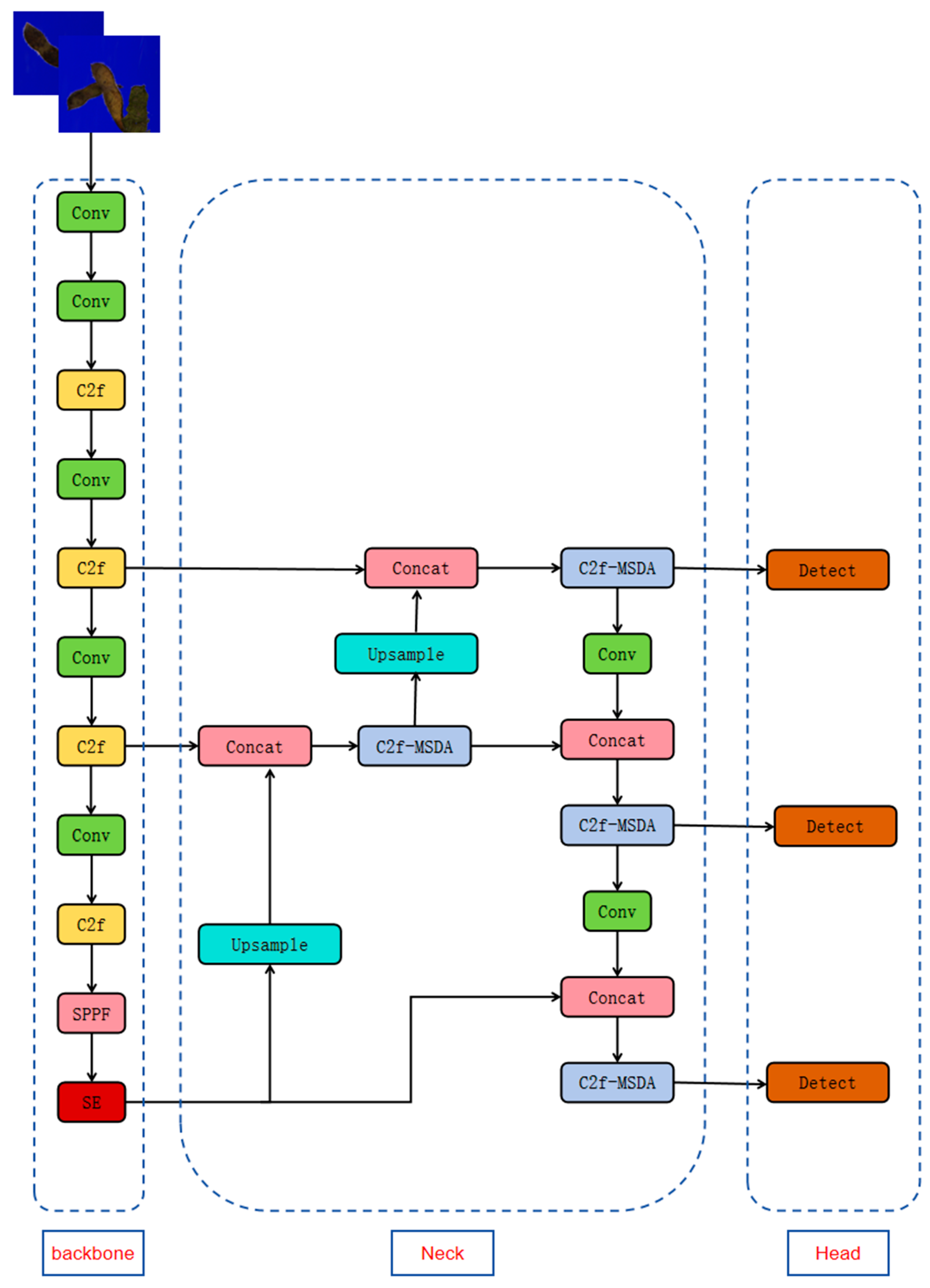

2.3. YOLOv8 Architecture and Improvements

2.3.1. YOLOv8n Baseline

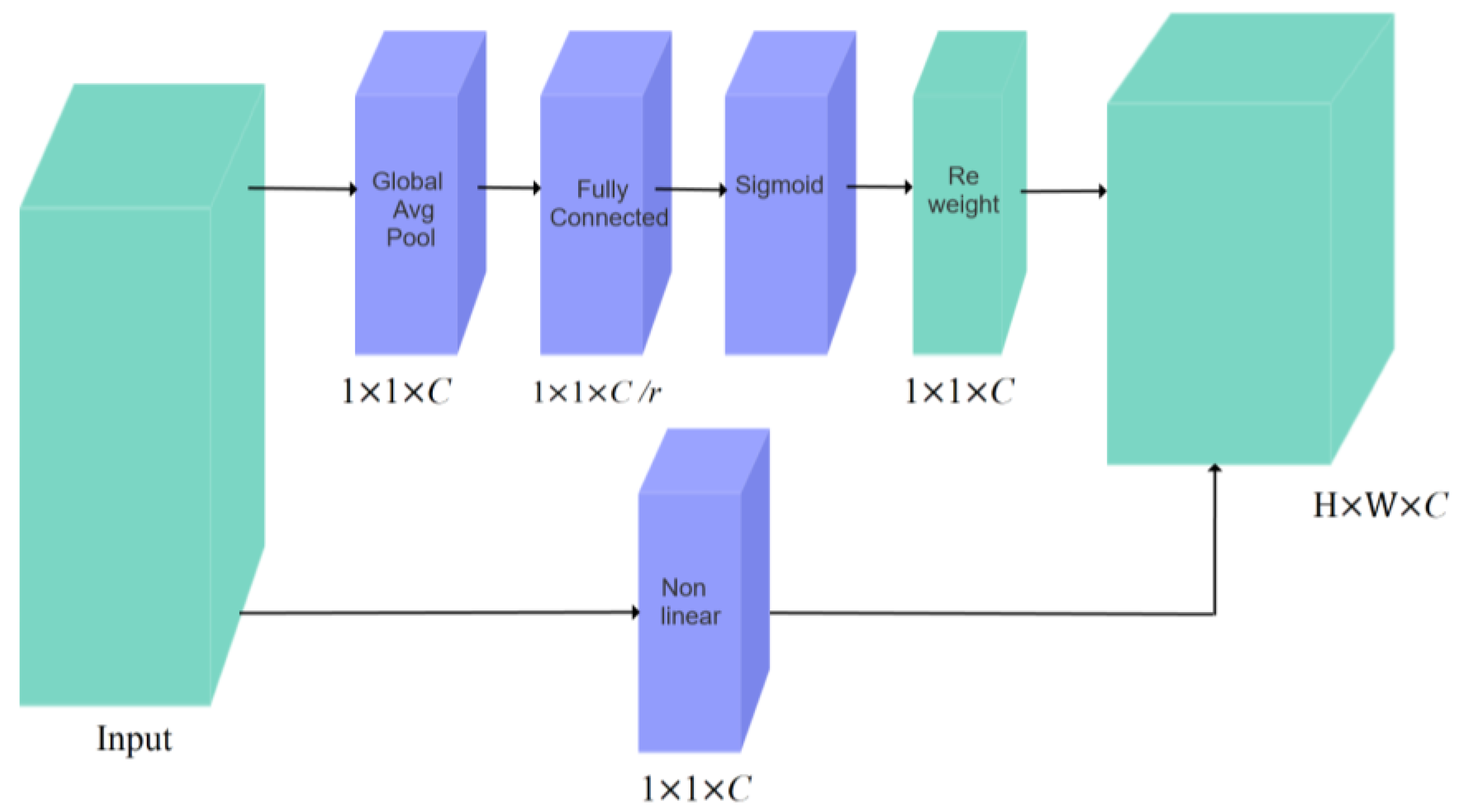

2.3.2. Squeeze-and-Excitation (SE) Attention Module

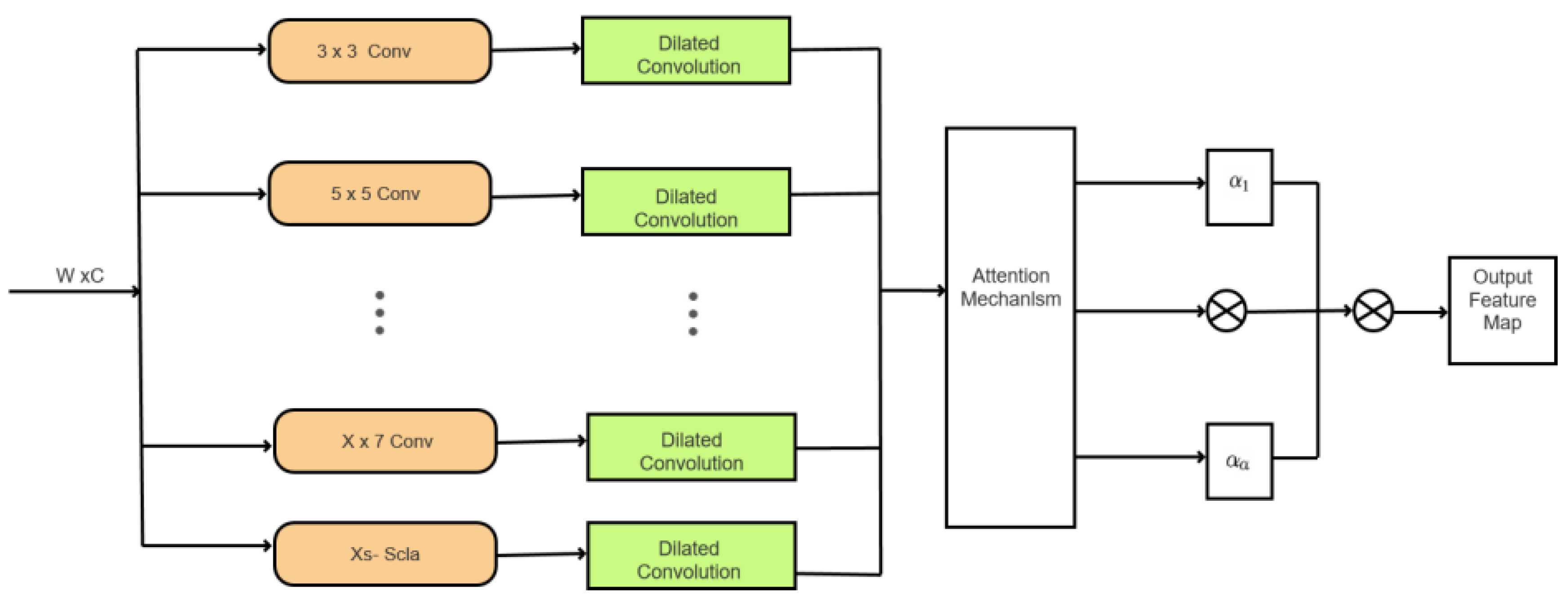
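The standard squeeze-and-excitation operation of Hu et al. (2018) has three steps: a squeeze by global average pooling, an excitation through a two-layer bottleneck with a sigmoid gate, and a channel-wise rescale. The plain-Python sketch below walks through those steps. It is illustrative only. The function name `se_block`, the toy weights and the reduction ratio r = 2 are hypothetical and are not taken from the trained model, and the sketch does not show where the block sits inside YOLOv8n.

```python
import math


def se_block(feature_map, w1, b1, w2, b2):
    """Squeeze-and-excitation over a C x H x W feature map stored as nested lists.

    w1: (C/r) x C weights of the reduction layer, b1: its C/r biases.
    w2: C x (C/r) weights of the expansion layer, b2: its C biases.
    Returns the rescaled feature map and the per-channel gates.
    """
    # Squeeze: global average pooling collapses each H x W channel to one descriptor z_c.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]
    # Excitation, step 1: fully connected reduction to C/r units, followed by ReLU.
    hidden = [max(0.0, sum(w * zc for w, zc in zip(row, z)) + b) for row, b in zip(w1, b1)]
    # Excitation, step 2: fully connected expansion back to C units, then a sigmoid gate in (0, 1).
    gates = [1.0 / (1.0 + math.exp(-(sum(w * h for w, h in zip(row, hidden)) + b)))
             for row, b in zip(w2, b2)]
    # Scale: multiply every spatial position of channel c by its gate s_c.
    scaled = [[[gates[c] * value for value in row] for row in ch]
              for c, ch in enumerate(feature_map)]
    return scaled, gates


# Hypothetical toy input: C = 4 channels of size 2 x 2, reduction ratio r = 2.
fmap = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[0.0, 0.0], [0.0, 0.0]],
    [[2.0, 2.0], [2.0, 2.0]],
    [[-1.0, -1.0], [-1.0, -1.0]],
]
w1 = [[0.5, 0.0, 0.5, 0.0], [0.0, 0.5, 0.0, 0.5]]      # (C/r) x C
b1 = [0.0, 0.0]
w2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]  # C x (C/r)
b2 = [0.0, 0.0, 0.0, 0.0]
out, gates = se_block(fmap, w1, b1, w2, b2)
print([round(g, 3) for g in gates])  # [0.818, 0.5, 0.818, 0.5]
```

Each gate lies in (0, 1), so the block reweights channels relative to one another. With C channels and reduction ratio r, the two fully connected layers add only 2C²/r weights plus C/r + C biases. This is consistent with the near-unchanged parameter count reported for the SE variant in the ablation table (3.02 versus 3.01).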

2.3.3. MSDA: Multi-Scale Dilated Attention Module
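The sketch below assumes the module follows the DilateFormer-style formulation of multi-scale dilated attention. In that formulation the channels are split into groups, and each group runs sliding-window attention over a sparse 3 × 3 neighborhood with its own dilation rate. The group outputs are then concatenated. This is a simplified, hypothetical illustration. The learned Q/K/V and output projections are replaced by identities, and out-of-bounds neighbors are skipped rather than zero-padded. The dilation rates (1, 2, 3) and the function names are assumptions rather than values from the paper.

```python
import math


def dilated_window_attention(q, k, v, dilation, kernel=3):
    """Sliding-window dilated attention for one head.

    q, k, v: lists of d channels, each an H x W grid of floats.
    With half = kernel // 2, the query at (i, j) attends only to keys at
    (i + dilation*m, j + dilation*n) for m, n in -half..half; positions
    outside the map are skipped.
    """
    d, height, width = len(q), len(q[0]), len(q[0][0])
    half = kernel // 2
    out = [[[0.0] * width for _ in range(height)] for _ in range(d)]
    for i in range(height):
        for j in range(width):
            neighbors = [(i + dilation * m, j + dilation * n)
                         for m in range(-half, half + 1) for n in range(-half, half + 1)
                         if 0 <= i + dilation * m < height and 0 <= j + dilation * n < width]
            # Scaled dot-product scores between the query and each sampled key.
            scores = [sum(q[c][i][j] * k[c][y][x] for c in range(d)) / math.sqrt(d)
                      for y, x in neighbors]
            # Numerically stable softmax over the (at most kernel * kernel) sampled keys.
            peak = max(scores)
            weights = [math.exp(s - peak) for s in scores]
            total = sum(weights)
            for c in range(d):
                out[c][i][j] = sum(w * v[c][y][x] for w, (y, x) in zip(weights, neighbors)) / total
    return out


def multi_scale_dilated_attention(x, dilations=(1, 2, 3)):
    """Split the channels of x into one group per dilation rate, attend, then concatenate.

    Hypothetical simplification: identity Q/K/V projections and no output projection.
    """
    heads = len(dilations)
    if len(x) % heads:
        raise ValueError("channel count must be divisible by the number of dilation rates")
    step = len(x) // heads
    out = []
    for h, rate in enumerate(dilations):
        group = x[h * step:(h + 1) * step]
        out.extend(dilated_window_attention(group, group, group, rate))
    return out


# Toy usage: 3 channels on a 5 x 5 map, one channel per dilation rate.
feature = [[[float((c + 1) * (i * 5 + j) % 7) for j in range(5)] for i in range(5)]
           for c in range(3)]
attended = multi_scale_dilated_attention(feature)
print(len(attended), len(attended[0]), len(attended[0][0]))  # 3 5 5
```

With a 3 × 3 kernel, dilation r spans a (2r + 1) × (2r + 1) area. Rates 1, 2 and 3 therefore cover 3 × 3, 5 × 5 and 7 × 7 regions, while every query still attends to at most nine keys. This is the usual argument for mixing dilation rates when defects vary in size.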

2.4. Experimental Configuration

3. Experiments and Results

3.1. Evaluation Metrics

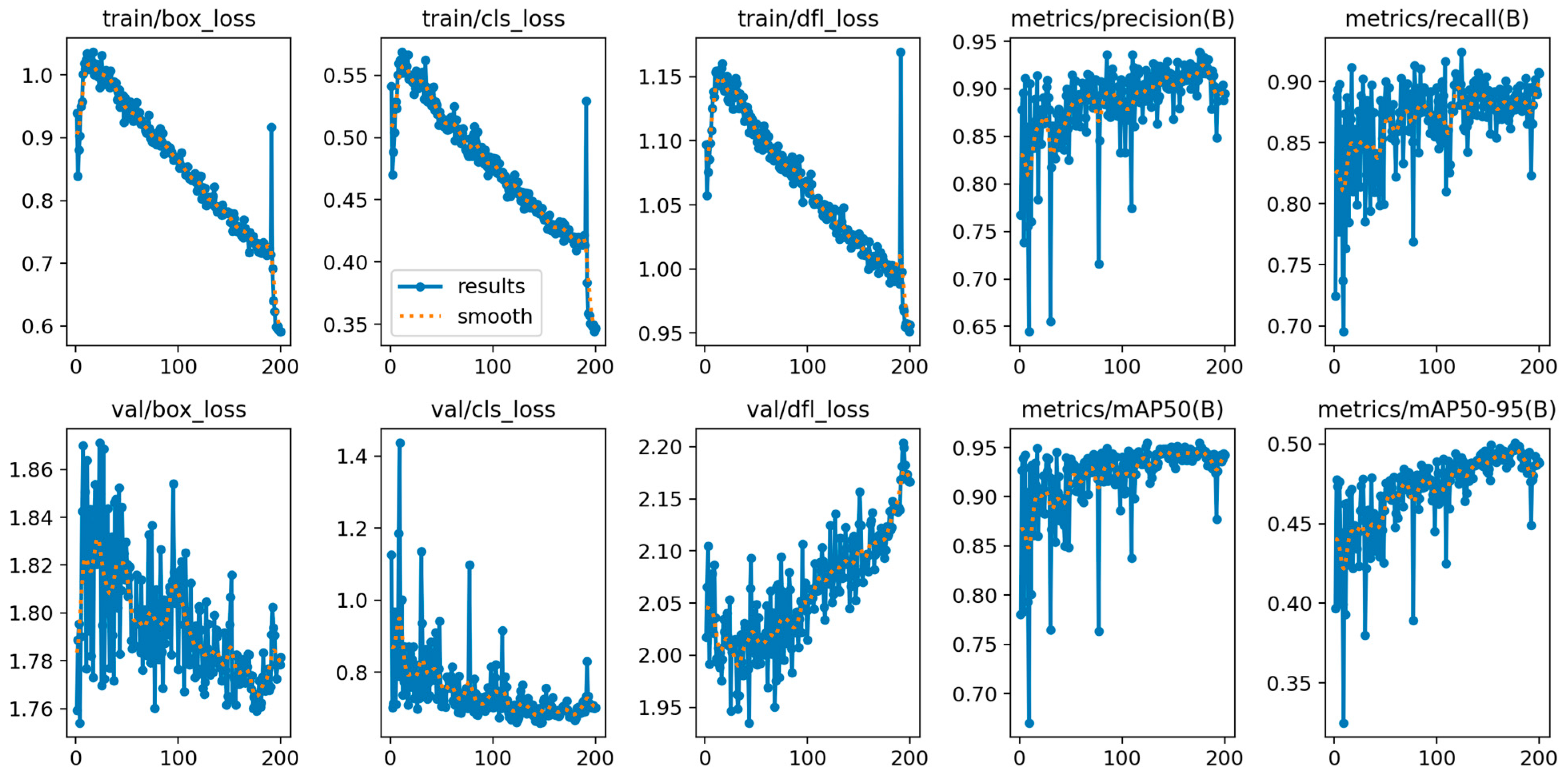

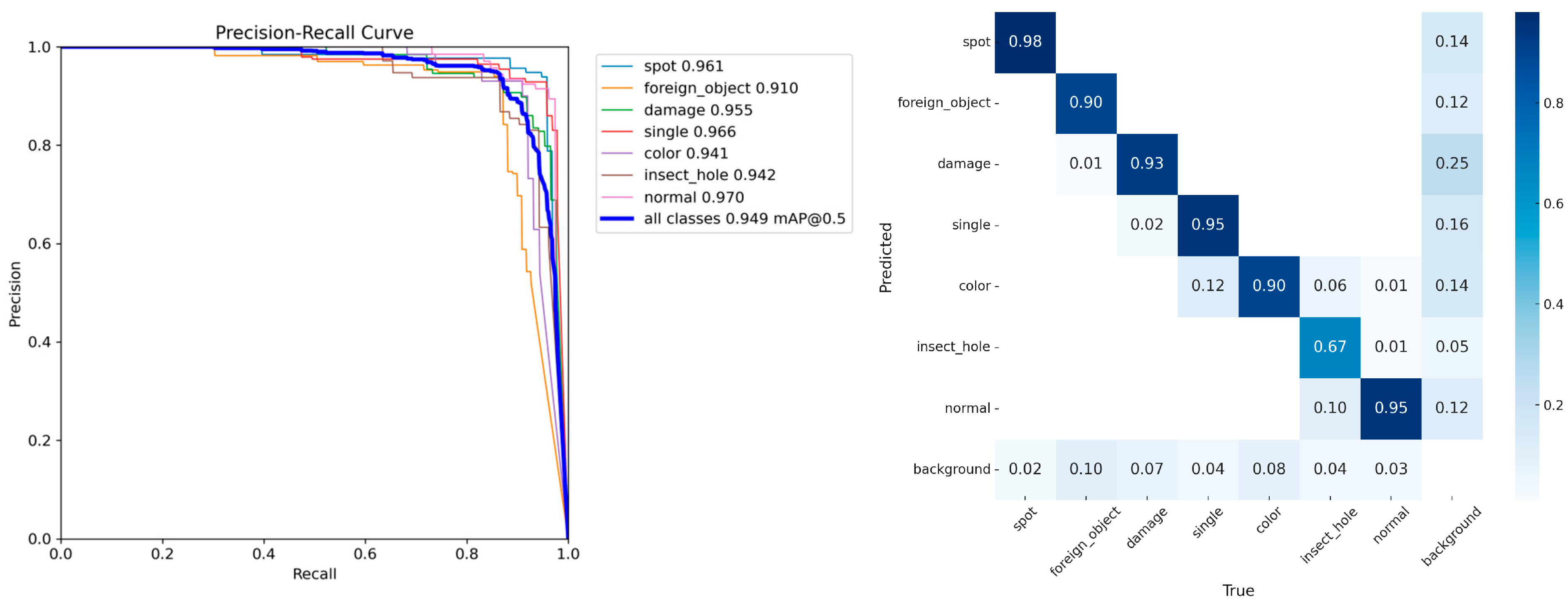
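The result tables rely on IoU-based matching, precision, recall, AP, mAP@50 and mAP@50:95. A small reference sketch makes these concrete. It assumes boxes in (x1, y1, x2, y2) format, greedy matching of detections to ground truth in descending confidence order, and all-point interpolated AP. The helper names are hypothetical. Evaluation toolkits differ in details (for example, COCO-style evaluators use 101-point interpolation), so this sketch may give slightly different numbers from the training framework. For brevity it handles one image and one class, whereas a real evaluator pools detections over the whole test set before ranking them.

```python
def box_iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(detections, ground_truths, iou_threshold):
    """Greedy matching for one class; detections are (confidence, box) pairs.

    Returns one flag per detection in descending confidence order; True means the
    detection claimed a previously unmatched ground-truth box (a true positive).
    """
    matched, flags = set(), []
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_iou, best_j = 0.0, None
        for j, gt in enumerate(ground_truths):
            iou = box_iou(box, gt)
            if j not in matched and iou > best_iou:
                best_iou, best_j = iou, j
        if best_j is not None and best_iou >= iou_threshold:
            matched.add(best_j)
            flags.append(True)
        else:
            flags.append(False)
    return flags


def average_precision(flags, num_gt):
    """All-point interpolated AP from ranked TP/FP flags (num_gt must be positive)."""
    tp = fp = 0
    recalls, precisions = [], []
    for is_tp in flags:
        tp += int(is_tp)
        fp += int(not is_tp)
        recalls.append(tp / num_gt)        # recall = TP / (TP + FN)
        precisions.append(tp / (tp + fp))  # precision = TP / (TP + FP)
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Area under the envelope: precision summed over each recall increment.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]  # 0.50, 0.55, ..., 0.95


def ap_50_95(detections, ground_truths):
    """AP for one class averaged over ten IoU thresholds; mAP@50:95 averages this over classes."""
    aps = [average_precision(match_detections(detections, ground_truths, t), len(ground_truths))
           for t in IOU_THRESHOLDS]
    return sum(aps) / len(aps)


# Hypothetical example: one ground-truth box and one detection covering 72% of it.
gts = [(0, 0, 10, 10)]
dets = [(0.9, (0, 0, 10, 7.2))]
print(box_iou(dets[0][1], gts[0]))                                     # 0.72
print(average_precision(match_detections(dets, gts, 0.50), len(gts)))  # 1.0 (AP@50)
print(ap_50_95(dets, gts))                                             # 0.5
```

The detection counts as a true positive at the five thresholds from 0.50 to 0.70 and as a false positive at the other five. Its AP@50 is therefore 1.0, while its AP@50:95 is only 0.5. This is why mAP@50:95 penalizes loosely localized boxes much more than mAP@50 does.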

3.2. Performance of the Enhanced YOLOv8n Model

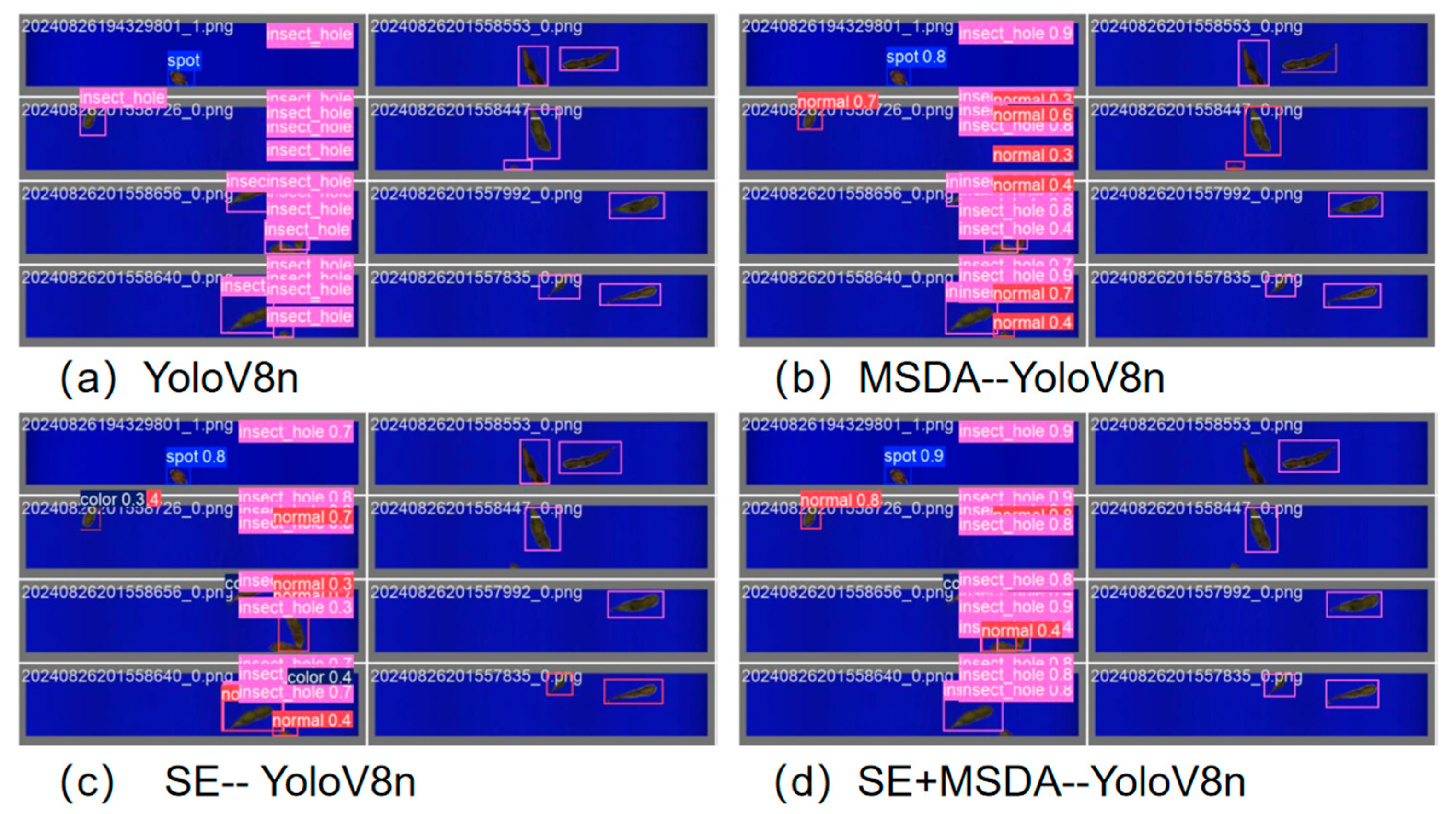

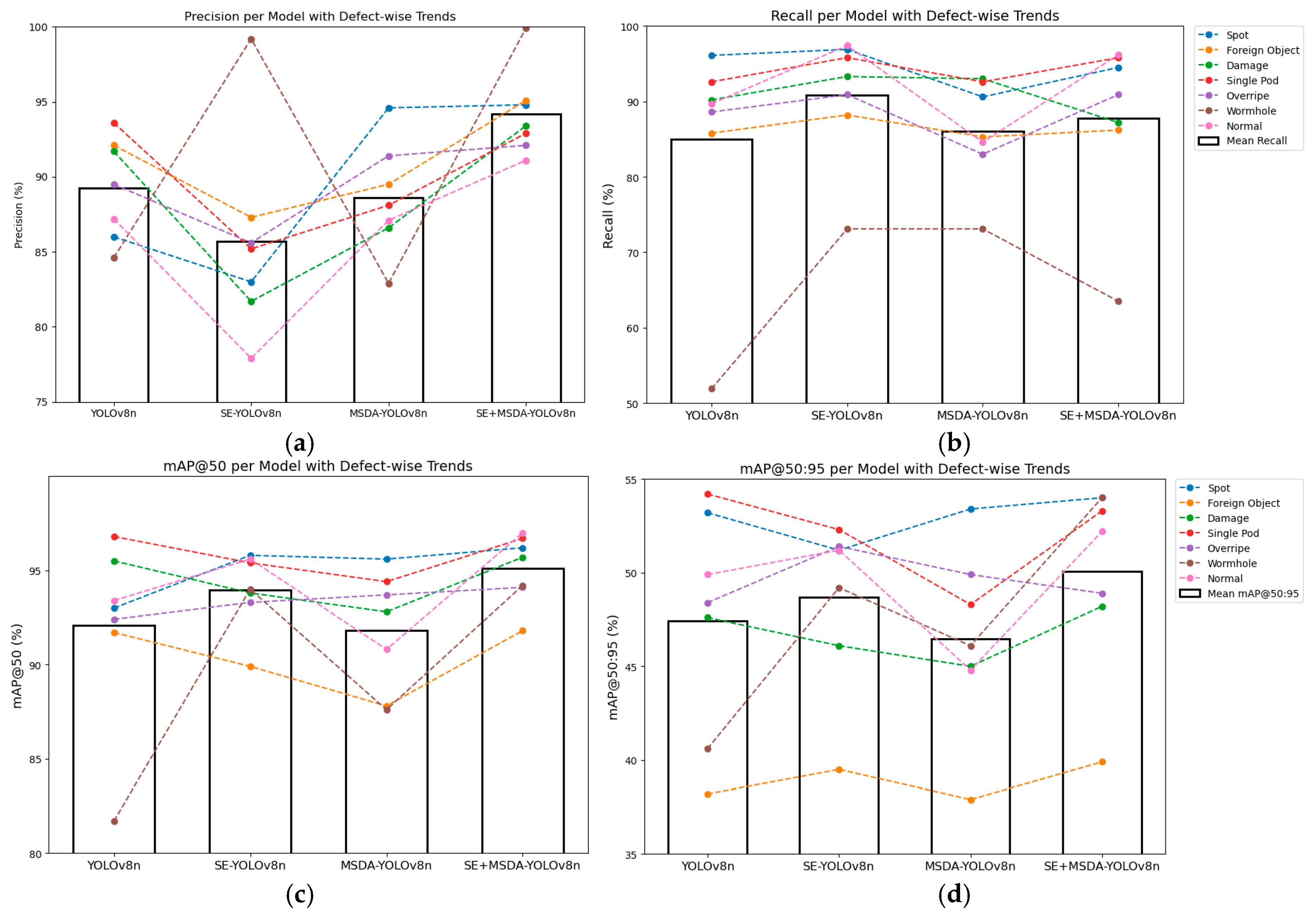

3.3. Ablation Study on Attention Modules for Unified Edamame Defect Detection

3.4. Ablation Study on Defect-Specific Detection Performance of Attention Mechanisms

4. Discussion

4.1. Main Contributions

4.2. System-Level and Practical Implications

4.3. Limitations and Future Directions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| FPS | Frames Per Second |
| FLOPs | Floating-Point Operations |
| IoU | Intersection over Union |
| mAP | Mean Average Precision |
| mAP@50 | Mean Average Precision at an IoU threshold of 50% |
| mAP@50:95 | Mean Average Precision averaged over IoU thresholds from 50% to 95% in steps of 5% |
| MSDA | Multi-Scale Dilated Attention |
| SE | Squeeze-and-Excitation |
| YOLO | You Only Look Once |
| YOLOv8n | YOLO version 8 nano |


| Model | Precision (%) | Recall (%) | mAP@50 (%) | mAP@50:95 (%) | Parameters (×10⁶) | FLOPs (×10⁹) |
|---|---|---|---|---|---|---|
| YOLOv5n | 90.2 | 89.5 | 94.7 | 60.7 | 2.5 | 7.20 |
| YOLOv8n | 89.2 | 85.0 | 92.1 | 47.4 | 3.01 | 8.01 |
| YOLOv10n | 88.7 | 89.6 | 93.8 | 63.7 | 2.7 | 8.5 |
| Proposed | 94.2 | 87.8 | 95.1 | 50.1 | 2.66 | 7.4 |
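In the model-comparison table above, the proposed model has higher precision but lower recall than YOLOv5n and YOLOv10n. The F1 score, the harmonic mean of precision and recall, is one common way to summarize that trade-off in a single number. The table does not report F1. The values below are derived only from its precision and recall columns, as a hypothetical illustration.

```python
# Precision and recall (%) copied from the model-comparison table above.
results = {
    "YOLOv5n": (90.2, 89.5),
    "YOLOv8n": (89.2, 85.0),
    "YOLOv10n": (88.7, 89.6),
    "Proposed": (94.2, 87.8),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall, in the same units as the inputs."""
    return 2 * precision * recall / (precision + recall)


for model, (p, r) in results.items():
    print(f"{model:9s} F1 = {f1_score(p, r):.1f}%")
```

This gives F1 values of 89.8% (YOLOv5n), 87.0% (YOLOv8n), 89.1% (YOLOv10n) and 90.9% (proposed). F1 describes a single operating point and does not replace mAP. On mAP@50:95, YOLOv5n (60.7) and YOLOv10n (63.7) remain ahead of the proposed model (50.1).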

| Model | Precision (%) | Recall (%) | mAP@50 (%) | mAP@50:95 (%) | Parameters (×10⁶) | FLOPs (×10⁹) | Inference Time (ms) |
|---|---|---|---|---|---|---|---|
| YOLOv8n | 89.2 | 85.0 | 92.1 | 47.4 | 3.01 | 8.1 | 1.6 |
| SE | 85.7 | 90.8 | 94.0 | 48.7 | 3.02 | 8.0 | 1.8 |
| MSDA | 88.6 | 86.0 | 91.8 | 46.5 | 2.65 | 7.3 | 1.9 |
| SE+MSDA (Proposed) | 94.2 | 87.8 | 95.1 | 50.1 | 2.66 | 7.4 | 1.8 |
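Simple arithmetic converts the inference times and model sizes in the ablation table above into throughput and relative cost. The sketch assumes the reported times are per-image forward-pass latencies, so 1000 / t gives an upper bound on FPS that ignores pre- and post-processing. It also assumes parameters are in millions and FLOPs in billions. The baseline FLOPs value is taken from this table (8.1); the model-comparison table lists 8.01 for the same model. The helper names are hypothetical.

```python
# Inference time (ms), parameters (assumed x10^6) and FLOPs (assumed x10^9)
# copied from the ablation table above.
ablation = {
    "YOLOv8n": (1.6, 3.01, 8.1),
    "SE": (1.8, 3.02, 8.0),
    "MSDA": (1.9, 2.65, 7.3),
    "SE+MSDA (Proposed)": (1.8, 2.66, 7.4),
}


def fps_from_latency(ms):
    """Frames per second implied by a per-image latency (pre/post-processing ignored)."""
    return 1000.0 / ms


def percent_change(new, old):
    """Signed change relative to the baseline, in percent."""
    return 100.0 * (new - old) / old


_, base_params, base_flops = ablation["YOLOv8n"]
for name, (ms, params, flops) in ablation.items():
    print(f"{name:18s} {fps_from_latency(ms):6.1f} FPS  "
          f"params {percent_change(params, base_params):+5.1f}%  "
          f"FLOPs {percent_change(flops, base_flops):+5.1f}%")
```

By this measure, the baseline's 1.6 ms corresponds to 625 FPS and the proposed model's 1.8 ms to about 556 FPS. The proposed model uses about 11.6% fewer parameters and 8.6% fewer FLOPs than YOLOv8n. Because the latencies are reported to only one decimal place, the FPS figures are approximate.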

| Defect Category | Metric | YOLOv8n | SE-YOLOv8n | MSDA-YOLOv8n | SE+MSDA-YOLOv8n |
|---|---|---|---|---|---|
| Spot | Precision/Recall (%) | 86.0/96.1 | 83.0/96.9 | 94.6/90.6 | 94.8/94.5 |
| | mAP@50/mAP@50:95 (%) | 93.0/53.2 | 95.8/51.2 | 95.6/53.4 | 96.2/54.0 |
| Foreign Object | Precision/Recall (%) | 92.1/85.8 | 87.3/88.2 | 89.5/85.3 | 95.1/86.2 |
| | mAP@50/mAP@50:95 (%) | 91.7/38.2 | 89.9/39.5 | 87.8/37.9 | 91.8/39.9 |
| Damage | Precision/Recall (%) | 91.7/90.2 | 81.7/93.3 | 86.6/93.0 | 93.4/87.2 |
| | mAP@50/mAP@50:95 (%) | 95.5/47.6 | 93.8/46.1 | 92.8/45.0 | 95.7/48.2 |
| Single Pod | Precision/Recall (%) | 93.6/92.6 | 85.2/95.8 | 88.1/92.6 | 92.9/95.8 |
| | mAP@50/mAP@50:95 (%) | 96.8/54.2 | 95.4/52.3 | 94.4/48.3 | 96.7/53.3 |
| Overripe | Precision/Recall (%) | 89.5/88.6 | 85.6/90.9 | 91.4/83.0 | 92.1/90.9 |
| | mAP@50/mAP@50:95 (%) | 92.4/48.4 | 93.3/51.4 | 93.7/49.9 | 94.1/48.9 |
| Wormhole | Precision/Recall (%) | 84.6/51.9 | 99.2/73.1 | 82.9/73.1 | 99.9/63.5 |
| | mAP@50/mAP@50:95 (%) | 81.7/40.6 | 94.0/49.2 | 87.6/46.1 | 94.2/54.0 |
| Normal | Precision/Recall (%) | 87.2/89.7 | 77.9/97.4 | 87.1/84.6 | 91.1/96.2 |
| | mAP@50/mAP@50:95 (%) | 93.4/49.9 | 95.6/51.2 | 90.8/44.8 | 97.0/52.2 |
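Averaging the seven per-class values in each column is a quick consistency check on the per-defect table above. mAP is the unweighted mean of per-class AP, so the column means should reproduce the overall scores in the ablation table. The sketch re-enters the table values; the variable and function names are illustrative.

```python
# Per-class mAP@50 and mAP@50:95 (%) copied from the per-defect table, in the order
# Spot, Foreign Object, Damage, Single Pod, Overripe, Wormhole, Normal.
per_class = {
    "YOLOv8n": ([93.0, 91.7, 95.5, 96.8, 92.4, 81.7, 93.4],
                [53.2, 38.2, 47.6, 54.2, 48.4, 40.6, 49.9]),
    "SE-YOLOv8n": ([95.8, 89.9, 93.8, 95.4, 93.3, 94.0, 95.6],
                   [51.2, 39.5, 46.1, 52.3, 51.4, 49.2, 51.2]),
    "MSDA-YOLOv8n": ([95.6, 87.8, 92.8, 94.4, 93.7, 87.6, 90.8],
                     [53.4, 37.9, 45.0, 48.3, 49.9, 46.1, 44.8]),
    "SE+MSDA-YOLOv8n": ([96.2, 91.8, 95.7, 96.7, 94.1, 94.2, 97.0],
                        [54.0, 39.9, 48.2, 53.3, 48.9, 54.0, 52.2]),
}


def macro_average(values):
    """Unweighted mean over classes, which is how mAP aggregates per-class AP."""
    return sum(values) / len(values)


for model, (ap50, ap50_95) in per_class.items():
    print(f"{model:16s} mAP@50 = {macro_average(ap50):.1f}  "
          f"mAP@50:95 = {macro_average(ap50_95):.1f}")
```

The means are as follows, each matching the overall mAP@50/mAP@50:95 values in the ablation table:

- YOLOv8n: 92.1/47.4
- SE: 94.0/48.7
- MSDA: 91.8/46.5
- SE+MSDA: 95.1/50.1

The check also shows where the gain comes from. More than half of the SE+MSDA improvement over the baseline at mAP@50 comes from the Wormhole class, which rises from 81.7 to 94.2.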

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, Z.; He, Y.; Huo, D.; Zhu, Z.; Yang, Y.; Du, Z. Multi-Scale Attention-Augmented YOLOv8 for Real-Time Surface Defect Detection in Fresh Soybeans. Processes 2025, 13, 3040. https://doi.org/10.3390/pr13103040
