Abstract

We present a head-to-head evaluation of the Improved Inexact–Newton–Smart (INS) algorithm against a primal–dual interior-point framework for large-scale nonlinear optimization. On synthetic benchmarks, the interior-point method converges with roughly one-third fewer iterations and about 43% less computation time than INS, while attaining marginally higher accuracy and meeting the primary stopping condition in every run. By contrast, INS succeeds in fewer cases under default settings but benefits markedly from moderate regularization and step-length control; in tuned regimes, its iteration count and runtime decrease substantially, narrowing yet not closing the gap. A sensitivity study indicates that interior-point performance remains stable across parameter changes, whereas INS is more affected by the choice of step length and regularization. Collectively, the evidence positions the interior-point method as a reliable baseline and INS as a configurable alternative when problem structure favors adaptive regularization.

Keywords:

nonlinear optimization; interior-point; Newton-type algorithms; large-scale optimization; convergence; performance; Hessian regularization

MSC:

90C51; 90C30; 65K05; 90C55; 90C22

1. Introduction

Large-scale nonlinear optimization problems (LSNOPSs) play an essential role in various fields such as computational science, engineering design, data analysis, and economic modeling, where high-dimensional systems often require efficient and accurate solution techniques [1,2,3,4]. Despite their broad applicability, solving LSNOPSs remains a considerable challenge due to nonlinear constraints, parameter sensitivity, and computational complexity [5,6,7]. Conventional approaches frequently face scalability limitations and convergence instability, particularly when dealing with large and ill-conditioned problem structures [8,9,10]. These challenges highlight the ongoing need for improved algorithms capable of balancing efficiency, robustness, and convergence accuracy in large-scale models. Motivated by this demand, the present study investigates the performance and convergence characteristics of two advanced algorithms, the Improved Inexact–Newton–Smart (INS) algorithm and the primal–dual interior-point method (IPM), and offers analytical and numerical insights into their efficiency and reliability for large-scale nonlinear optimization.

Among the most powerful frameworks for solving nonlinear optimization problems are Newton-type iterative schemes and interior-point methods (IPMs). Newton-type approaches exploit second-order information through Hessian updates to achieve quadratic convergence near optimality [1,3]. However, they can exhibit instability or divergence when the Hessian is indefinite or poorly conditioned. IPMs, introduced by Karmarkar (1984) and further developed by Nesterov and Nemirovskii (1994) [5,6], transform constrained problems into a sequence of barrier subproblems that remain within the feasible region. This barrier-based formulation enables robust convergence for large-scale, structured models and underpins many contemporary solvers in optimization software.

Despite extensive developments, performance comparisons between advanced Newton-type variants and IPMs on large-scale nonlinear models remain limited. Existing studies have typically focused on convex or small-scale problems, providing insufficient insight into computational trade-offs, convergence stability, and parameter sensitivity at scale. This gap motivates the present study, which introduces and analyzes the Improved Inexact–Newton–Smart (INS) algorithm—a refinement of the standard Inexact Newton method incorporating adaptive regularization and step-size control—and compares it with a modern primal-dual IPM framework. The study emphasizes how the two algorithms differ in efficiency, robustness, and sensitivity to problem conditioning.

Existing studies mainly focus on algorithmic improvements without establishing how these approaches behave across varying problem scales and conditioning levels. This lack of comparative insight creates uncertainty in selecting the most efficient solver for real-world large-scale systems. Therefore, there is a strong need for systematic evaluation of Newton-type and interior-point frameworks under consistent computational settings to guide both theoretical development and applied model implementation. The scope and impact of this research lie in the establishment of a quantitative benchmark that links algorithmic performance with problem scale, conditioning, and parameter selection. By combining numerical experiments and sensitivity analyses, the work contributes to both theoretical understanding and practical implementation of large-scale optimization algorithms. The results address key concerns about convergence reliability, computation time, and parameter robustness, offering guidance for algorithm selection in engineering, computational finance, and operations research applications.

In summary, this paper consists of nine sections. Section 2 reviews the background of primal–dual IPMs, including inexact Newton directions and matrix-free implementations. Section 3 analyzes the short-step method, and Section 4 analyzes the long-step method. Section 5 introduces the INS method, and Section 6 describes its equality-constrained Newton phase. Section 7 presents the improvements to the INS algorithm, the sensitivity analysis of step strategies, and the numerical comparison with IPM. Section 8 discusses the practical implications and possible extensions of this work, and Section 9 provides the conclusions.

2. Interior-Point Method (IPM) Background

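Interior-point methods (IPMs) are among the most efficient techniques for solving large-scale linear and convex quadratic programs [3,7]. Let $A \in \mathbb{R}^{m\times n}$ denote the constraint matrix with full row rank, $Q \in \mathbb{R}^{n\times n}$ a symmetric positive semidefinite matrix ($Q \succeq 0$), $c \in \mathbb{R}^{n}$ and $b \in \mathbb{R}^{m}$ the given vectors, and $x$, $y$, and $s$ the primal, dual, and slack variables, respectively. The corresponding primal–dual pair is formulated as:

$$\min_{x}\ c^\top x + \tfrac{1}{2}x^\top Q x \quad \text{s.t.}\quad Ax = b,\ x \ge 0,$$

$$\max_{x,y,s}\ b^\top y - \tfrac{1}{2}x^\top Q x \quad \text{s.t.}\quad A^\top y + s - Qx = c,\ s \ge 0.$$

To handle the constraint $x \ge 0$, IPMs introduce a logarithmic barrier with parameter $\mu > 0$:

$$\min_{x}\ c^\top x + \tfrac{1}{2}x^\top Q x - \mu\sum_{j=1}^{n}\ln x_j \quad \text{s.t.}\quad Ax = b.$$

The perturbed Karush–Kuhn–Tucker (KKT) system becomes

$$Ax = b, \qquad A^\top y + s - Qx = c, \qquad XSe = \mu e, \qquad (x, s) > 0,$$

with $X = \operatorname{diag}(x)$, $S = \operatorname{diag}(s)$, and $e$ the vector of ones.

The set of solutions $\{(x_\mu, y_\mu, s_\mu) : \mu > 0\}$ of this system defines the central path.

Convergence to optimality is obtained as $\mu \to 0$: for primal and dual feasible points, the duality gap is

$$\left(c^\top x + \tfrac{1}{2}x^\top Q x\right) - \left(b^\top y - \tfrac{1}{2}x^\top Q x\right) = x^\top s,$$

which equals $n\mu$ on the central path.

Each iteration applies Newton's method to the KKT system. The exact Newton step solves the linear system

$$\begin{bmatrix} A & 0 & 0 \\ -Q & A^\top & I \\ S & 0 & X \end{bmatrix}\begin{bmatrix} \Delta x \\ \Delta y \\ \Delta s \end{bmatrix} = \begin{bmatrix} \xi_p \\ \xi_d \\ \xi_\mu \end{bmatrix},$$

where $\xi_p = b - Ax$, $\xi_d = c - A^\top y - s + Qx$, and $\xi_\mu = \sigma\mu e - XSe$ are the primal, dual, and complementarity residuals, with centering parameter $\sigma \in (0,1)$. This linear system dominates the cost of each iteration.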
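As a small numerical illustration of the residuals and of the duality-gap identity, the following sketch evaluates the standard convex-QP residuals $\xi_p = b - Ax$, $\xi_d = c - A^\top y - s + Qx$, $\xi_\mu = \sigma\mu e - XSe$ and the measure $\mu = x^\top s/n$ in exact rational arithmetic. The tiny data set and the helper names (`kkt_residuals`, `matvec`, `dot`) are illustrative assumptions, not part of the solvers compared in this paper; at a primal and dual feasible point, the primal minus dual objective should equal $x^\top s$.

```python
from fractions import Fraction as F


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(M, v):
    return [dot(row, v) for row in M]


def kkt_residuals(A, Q, b, c, x, y, s, sigma):
    """Return xi_p, xi_d, xi_mu and mu for min c'x + x'Qx/2 s.t. Ax = b, x >= 0."""
    n = len(x)
    At_y = [sum(A[i][j] * y[i] for i in range(len(A))) for j in range(n)]
    Qx = matvec(Q, x)
    xi_p = [bi - ai for bi, ai in zip(b, matvec(A, x))]       # b - Ax
    xi_d = [c[j] - At_y[j] - s[j] + Qx[j] for j in range(n)]  # c - A'y - s + Qx
    mu = dot(x, s) / n                                        # duality measure
    xi_mu = [sigma * mu - x[j] * s[j] for j in range(n)]      # sigma*mu*e - XSe
    return xi_p, xi_d, xi_mu, mu


# Tiny feasible example: one equality constraint, two variables.
A = [[1, 1]]
Q = [[2, 0], [0, 1]]
b = [2]
x = [F(1), F(1)]
y = [F(1)]
s = [F(1, 2), F(3)]
c = [F(-1, 2), F(3)]  # chosen so that A'y + s - Qx = c holds exactly

xi_p, xi_d, xi_mu, mu = kkt_residuals(A, Q, b, c, x, y, s, sigma=F(1, 2))
half_xQx = F(1, 2) * dot(x, matvec(Q, x))
primal_obj = dot(c, x) + half_xQx
dual_obj = dot(b, y) - half_xQx
print(xi_p, xi_d, mu, primal_obj - dual_obj)
```

Exact fractions are used only so that the identity can be checked without rounding; a practical implementation would use floating point and sparse matrices.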

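Complexity. Short-step algorithms, which confine iterations to narrow neighborhoods of the central path, need $O(\sqrt{n}\log(1/\varepsilon))$ iterations to reduce the duality gap below a given precision goal $\varepsilon$ (cf. [3]). Long-step variants, with wider neighborhoods, require

$$O\big(n\log(1/\varepsilon)\big) \qquad (3)$$

iterations in the worst case, yet they are usually more efficient in practice because they take much longer steps [7].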

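Inexact Newton Directions. Instead of solving the Newton system exactly, one may compute an approximate solution

$$K\,\Delta = \begin{bmatrix} \xi_p \\ \xi_d \\ \xi_\mu + \epsilon \end{bmatrix}, \qquad (4)$$

where $K$ is the KKT matrix, $\Delta = (\Delta x, \Delta y, \Delta s)$, $\xi = (\xi_p, \xi_d, \xi_\mu)$ is the residual, and $\epsilon$ is the vector of inexactness. It measures the discrepancy in the third KKT equation, $S\Delta x + X\Delta s = \xi_\mu$. If the error vector satisfies a suitable relative accuracy bound, controlled by a fixed inexactness parameter, then the global convergence and complexity bounds are preserved (cf. [8]).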

Matrix-Free Approaches. Krylov subspace solvers, combined with preconditioning, allow IPMs to operate in a matrix-free regime, requiring only matrix–vector products with A, Q, and their transposes. This approach enables the solution of problems with millions of variables while reducing memory and factorization costs [9].
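To make the matrix-free idea concrete, the sketch below solves normal equations of the form $(A\Theta A^\top)\,\Delta y = r$ with a positive diagonal $\Theta$ (for example $\Theta = XS^{-1}$ in the linear-programming case $Q = 0$) by preconditioned conjugate gradients, touching $A$ only through the callbacks `apply_A` and `apply_At`. The normal-equations form, the Jacobi preconditioner, the data, and all function names are illustrative assumptions rather than the solver used in the experiments; in a genuinely matrix-free setting, the preconditioner diagonal would itself have to be estimated or supplied.

```python
import math


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def pcg(matvec, rhs, precond=lambda r: list(r), tol=1e-10, max_iter=500):
    """Preconditioned conjugate gradients for an SPD operator given only as a callback."""
    x = [0.0] * len(rhs)
    r = list(rhs)                      # residual rhs - M x for the start x = 0
    z = precond(r)
    p = list(z)
    rz = dot(r, z)
    rhs_norm = math.sqrt(dot(rhs, rhs))
    for _ in range(max_iter):
        if math.sqrt(dot(r, r)) <= tol * rhs_norm:
            break
        Mp = matvec(p)
        step = rz / dot(p, Mp)
        x = [xi + step * pi for xi, pi in zip(x, p)]
        r = [ri - step * mpi for ri, mpi in zip(r, Mp)]
        z = precond(r)
        rz_new = dot(r, z)
        p = [zi + (rz_new / rz) * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x


# The constraint matrix is accessed only through these two products.
A_rows = [[1.0, 2.0, 0.0], [0.0, 1.0, 3.0]]
theta = [1.0, 0.5, 2.0]                # positive diagonal scaling, e.g. x_j / s_j


def apply_A(v):
    return [dot(row, v) for row in A_rows]


def apply_At(w):
    return [sum(A_rows[i][j] * w[i] for i in range(len(A_rows))) for j in range(len(theta))]


def normal_matvec(w):
    """Product with A Theta A^T, formed from one A^T-product and one A-product."""
    return apply_A([t * v for t, v in zip(theta, apply_At(w))])


diag = [sum(a * a * t for a, t in zip(row, theta)) for row in A_rows]  # Jacobi diagonal
r = [1.0, 2.0]
dy = pcg(normal_matvec, r, precond=lambda res: [ri / di for ri, di in zip(res, diag)])
print(dy)
```

Only `normal_matvec` is passed to the solver, so the same loop works unchanged if `apply_A` and `apply_At` are replaced by routines that never form $A$ explicitly.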

- Complexity justification for the long-step IPM. In long-step IPMs, iterates are allowed to deviate further from the central path by working in the wide neighborhood $\mathcal{N}_{-\infty}(\gamma) = \{(x, y, s) : x_j s_j \ge \gamma\mu,\ j = 1, \dots, n\}$ with $\gamma \in (0,1)$. To maintain feasibility, the step length is chosen using a fraction-to-the-boundary rule (Equation (9)), and the inexact Newton analysis yields the duality-gap recursion (Equation (8)).

This analysis clarifies the role of neighborhood width in determining iteration complexity and complements the preceding discussion of matrix-free implementations. In summary, IPMs combine rigorous polynomial complexity with scalable algorithmic implementations. The introduction of inexact Newton directions and matrix-free techniques has reinforced their role as a core tool in modern large-scale optimization.

3. Analysis of the Short-Step Method in IPM

The short-step variant of interior-point methods (IPMs) confines iterations to a narrow neighborhood of the central path, thereby ensuring polynomial-time complexity and strong numerical stability. Its convergence analysis draws on higher-order Taylor expansions, perturbation bounds for matrix systems, and recursive control of the duality gap.

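Let $(x^k, y^k, s^k)$ be a strictly feasible primal–dual iterate with $(x^k, s^k) > 0$. The duality gap is measured by

$$\mu_k = \frac{(x^k)^\top s^k}{n},$$

and a step of length $\alpha \in (0,1]$ along the inexact Newton direction $(\Delta x, \Delta y, \Delta s)$ moves the iterate to

$$(x^{k+1}, y^{k+1}, s^{k+1}) = (x^k, y^k, s^k) + \alpha\,(\Delta x, \Delta y, \Delta s).$$

Recall that this direction satisfies the complementarity block of (4) only approximately:

$$S\Delta x + X\Delta s = \sigma\mu_k e - XSe + \epsilon.$$

These relations highlight how approximate Newton directions influence the update of primal, dual, and slack variables. By expanding the complementarity product, one derives inequalities that govern the decrease in the duality gap under inexact directions. This recursive structure forms the foundation for the complexity analysis of Short-Step Methods. For the reader's convenience, we state and prove the following lemmas.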

Lemma 1.

Assume that the sequence of nonnegative numbers satisfies the inequality

where , are constants. If , then

the sequence converges to zero at an exponential rate. Consequently, the number of iterations $k$ needed to achieve a given precision goal $\varepsilon$ is

Proof.

We proceed by mathematical induction. Suppose that for some . Then

because as .

As a consequence, we derive the estimate on the number $k$ of iterations that are necessary to achieve a given precision goal $\varepsilon$. Clearly, provided that , that is, . □
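The mechanism behind Lemma 1 can be checked numerically. Because the displayed inequality of the lemma is not reproduced here, the sketch below assumes a recursion of the linear-plus-quadratic form $\mu_{k+1} \le a\mu_k + b\mu_k^2$ with $a, b > 0$ and $q := a + b\mu_0 < 1$, which is consistent with the later remark that the linear term dominates the quadratic remainder. Under this assumption, induction gives $\mu_{k+1} \le (a + b\mu_k)\mu_k \le q\,\mu_k$, hence $\mu_k \le q^k\mu_0$, so $\mu_k \le \varepsilon$ once $k \ge \ln(\mu_0/\varepsilon)/\ln(1/q)$. The constants and function names are hypothetical.

```python
import math


def iteration_bound(mu0, eps, q):
    """Smallest integer k with q**k * mu0 <= eps, i.e. k >= ln(mu0/eps) / ln(1/q)."""
    return max(0, math.ceil(math.log(mu0 / eps) / math.log(1.0 / q)))


def run_worst_case(mu0, a, b, eps, max_iter=10_000):
    """Iterate mu_{k+1} = a*mu_k + b*mu_k**2 (the worst case of the inequality) until mu_k <= eps."""
    q = a + b * mu0
    if not q < 1:
        raise ValueError("hypothesis a + b*mu0 < 1 is violated")
    mu, k = mu0, 0
    while mu > eps and k < max_iter:
        mu = a * mu + b * mu * mu
        k += 1
        # Geometric envelope from the induction step (small slack for rounding).
        assert mu <= q**k * mu0 * (1 + 1e-12)
    return k, q


k, q = run_worst_case(mu0=1.0, a=0.5, b=0.2, eps=1e-8)
print(k, iteration_bound(1.0, 1e-8, q))
```

The observed count is well below the bound because the effective rate approaches $a$ as $\mu_k$ shrinks, whereas the bound uses the pessimistic factor $q = a + b\mu_0$ throughout.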

Lemma 2.

Suppose that the inexact Newton step in (4) satisfies

for some constants . Suppose that the inexactness error vector ϵ is given by where and . Then, for any step size , the updated duality gap satisfies the inequality:

Proof.

3.1. Local Model of Complementarity

Suppose that the complementarity condition in the KKT conditions is perturbed by the error term. The Newton direction is obtained by solving the following system of equations:

where the inexactness vector is given by and is the residual of the inexact solver. By applying a second-order Taylor expansion to the perturbed central path, we obtain the following.

to ensure a monotonic decrease in the duality gap, we assume (5).
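The local model rests on an exact algebraic identity: because $x_j s_j$ is bilinear, its second-order expansion has no remainder. Assuming the third block of the inexact Newton system reads $S\Delta x + X\Delta s = \sigma\mu e - XSe + \epsilon$, a step of length $\alpha$ gives $(x_j + \alpha\Delta x_j)(s_j + \alpha\Delta s_j) = (1-\alpha)x_j s_j + \alpha\sigma\mu + \alpha\epsilon_j + \alpha^2\Delta x_j\Delta s_j$, and averaging yields $\mu^{+} = (1 - \alpha(1-\sigma))\mu + \alpha\bar\epsilon + \alpha^2\Delta x^\top\Delta s/n$, where $\bar\epsilon$ is the mean of $\epsilon$. The sketch below verifies both relations in exact rational arithmetic on arbitrary illustrative data; the sign convention for $\epsilon$ and all names are assumptions.

```python
from fractions import Fraction as F


def complementarity_check(x, s, dx, err, sigma, alpha):
    """Compare the updated products x_j s_j with the expansion used in the local model."""
    n = len(x)
    mu = sum(xj * sj for xj, sj in zip(x, s)) / n
    # Solve the inexact third block S dx + X ds = sigma*mu*e - XSe + err for ds.
    ds = [(sigma * mu - x[j] * s[j] + err[j] - s[j] * dx[j]) / x[j] for j in range(n)]
    direct = [(x[j] + alpha * dx[j]) * (s[j] + alpha * ds[j]) for j in range(n)]
    model = [(1 - alpha) * x[j] * s[j] + alpha * sigma * mu + alpha * err[j]
             + alpha**2 * dx[j] * ds[j] for j in range(n)]
    mu_new = sum(direct) / n
    mu_model = ((1 - alpha * (1 - sigma)) * mu + alpha * sum(err) / n
                + alpha**2 * sum(p * q for p, q in zip(dx, ds)) / n)
    return direct, model, mu_new, mu_model


x = [F(1), F(2), F(3)]
s = [F(3), F(1), F(1, 2)]
dx = [F(-1, 4), F(1, 2), F(-1)]
err = [F(1, 100), F(-1, 50), F(0)]
direct, model, mu_new, mu_model = complementarity_check(x, s, dx, err, sigma=F(1, 2), alpha=F(3, 4))
print(direct == model, mu_new == mu_model)
```

The bounds assumed in (5) serve precisely to control the two extra terms $\alpha\bar\epsilon$ and $\alpha^2\Delta x^\top\Delta s/n$ relative to the linear decrease $\alpha(1-\sigma)\mu$.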

3.2. Theoretical Implications

The Short-Step Method can be seen as a damped Newton method along the central path, with good stability because it stays close to the analytic center. Because it prioritizes stability, robustness, and guaranteed polynomial-time convergence over aggressive reduction of the duality gap, it is particularly beneficial in degenerate or ill-conditioned problem settings.

The next theorem states an exponential decrease in the duality gap.

Theorem 1.

Let be a primal–dual iterate for a short-step primal–dual IPM with perturbed complementarity condition . Let be the inexact Newton direction obtained from (4) with the centering parameter , and let the inexactness error vector ϵ be given by where . Assume belongs to the short-step neighborhood where with , and that for fixed tolerances the estimates (5) are satisfied. Suppose and

together with the feasibility conditions and . The sequence converges exponentially to zero, provided that the initial gap is sufficiently small.

Proof.

As in Lemma 2, for the update , , we obtain

the inexact perturbed system, which implies:

then, . Applying the bounds (5) yields

Choosing , the contraction factor is bounded above by . Therefore, the duality gap decreases geometrically, that is, at an exponential rate. □

- Role of the inexactness level and its impact on convergence.

In the inexact Newton relation with , the parameter controls how accurately the linear system is solved at each iteration. Under the short–step neighborhood and norm equivalences used in (4)–(8), the residual coupling term entering Lemma 2 is bounded proportionally to , so there exists a constant (depending only on the chosen neighborhood parameter and norm) such that the estimate holds with . Substituting this bound into (6) gives the recursion:

so the linear contraction factor is

Consequences. (i) If is bounded away from (3) so that , then . Theorem 1 ensures global convergence with the same iteration complexity order as the exact Short-Step Method. The linear contraction factor increases with the inexactness level, so higher inexactness slightly slows the rate. Moreover, if as , then and the linear term dominates the quadratic remainder. Consequently, we recover the classical Inexact–Newton behavior: local Q–linear convergence when , and accelerated (superlinear) local convergence when (cf. Section 6.1 for the discussion of the forcing term).

- Practical choice. Choose the inexactness parameter so that (e.g., ), which guarantees . In implementations, an adaptive rule decreases the inexactness parameter as the duality gap shrinks (analogous to the forcing-term strategy in (12)), preserving robustness far from the solution while improving the local rate as the iterations approach optimality.

4. Analysis of the Long-Step Method in IPM

The long-step interior-point method (IPM) extends the primal–dual framework by permitting iterates to deviate further from the central path than in the short-step variant. Although the worst-case iteration bound increases to $O(n\log(1/\varepsilon))$, cf. (3), the practical benefit lies in significantly larger step sizes and fewer Newton iterations overall [6,7].

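Let $\mu = x^\top s/n$ denote the duality gap. The Long-Step Method keeps its iterates within the $-\infty$-norm neighborhood

$$\mathcal{N}_{-\infty}(\gamma) = \{(x, y, s) \text{ strictly feasible} : x_j s_j \ge \gamma\mu,\ j = 1, \dots, n\}, \qquad \gamma \in (0,1).$$

Compared with the Euclidean short-step neighborhood, $\mathcal{N}_{-\infty}(\gamma)$ only bounds the products $x_j s_j$ from below, so it permits much larger component-wise deviations from the central path and hence more rapid progress. The step selection below is chosen to keep the iterates in $\mathcal{N}_{-\infty}(\gamma)$ for a fixed $\gamma$ (see [3,7,10]).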
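The difference between the two neighborhoods can be illustrated directly. The sketch below tests membership in the Euclidean short-step neighborhood $\mathcal{N}_2(\theta) = \{\|XSe - \mu e\|_2 \le \theta\mu\}$ and in the wide neighborhood $\mathcal{N}_{-\infty}(\gamma) = \{x_j s_j \ge \gamma\mu \ \forall j\}$ for given positive $x$ and $s$; feasibility of the equality constraints is assumed and not checked, and the test points and parameter values are illustrative. The last check reflects the standard inclusion $\mathcal{N}_2(\theta) \subseteq \mathcal{N}_{-\infty}(1-\theta)$, which holds because $|x_j s_j - \mu| \le \|XSe - \mu e\|_2 \le \theta\mu$.

```python
import math


def duality_measure(x, s):
    return sum(xj * sj for xj, sj in zip(x, s)) / len(x)


def in_short_step(x, s, theta):
    """Euclidean neighborhood N_2(theta): ||XSe - mu e||_2 <= theta * mu."""
    mu = duality_measure(x, s)
    return math.sqrt(sum((xj * sj - mu) ** 2 for xj, sj in zip(x, s))) <= theta * mu


def in_wide(x, s, gamma):
    """Wide neighborhood N_-inf(gamma): x_j s_j >= gamma * mu for every j."""
    mu = duality_measure(x, s)
    return all(xj * sj >= gamma * mu for xj, sj in zip(x, s))


x = [1.0, 1.0, 1.0]
s = [0.2, 1.0, 1.8]  # products 0.2, 1.0, 1.8, so mu = 1
print(in_short_step(x, s, theta=0.5), in_wide(x, s, gamma=0.1))
```

The point above is rejected by the short-step test but accepted by the wide one, which is exactly the extra freedom that lets long-step methods take larger steps.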

Consider a primal–dual iterate and an inexact Newton direction obtained from (4). By the identity (7), we have the complementarity update for . Similarly, as in the previous section, the normalized inexactness bounds (5) again yield the following:

Hence, the sequence converges exponentially to zero, provided that the initial gap is sufficiently small.

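Finally, we discuss feasibility, step size, and neighborhood maintenance. To preserve positivity and remain within $\mathcal{N}_{-\infty}(\gamma)$, choose the step length by the standard fraction-to-the-boundary rule, restricted to indices that move toward the boundary:

$$\alpha_k = \min\left\{1,\ \tau\min_{j:\,\Delta x_j < 0}\frac{-x_j}{\Delta x_j},\ \tau\min_{j:\,\Delta s_j < 0}\frac{-s_j}{\Delta s_j}\right\}, \qquad \tau \in (0,1]. \qquad (9)$$

This ensures $x^{k+1} \ge (1-\tau)x^k$ and $s^{k+1} \ge (1-\tau)s^k$, so both remain strictly positive for $\tau < 1$. For suitably chosen $\tau$ and $\sigma$ (together with the inexactness bounds above), one can keep $(x^{k+1}, y^{k+1}, s^{k+1}) \in \mathcal{N}_{-\infty}(\gamma)$ and thus retain the contraction of $\mu_k$ described in (8) (see [11]).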

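- Rationale for $\tau < 1$ and its effect. Let $\tau$ denote the fraction-to-the-boundary parameter in (9). If the minimizer in (9) is attained at an index $j$ with $\Delta x_j < 0$, then for $\tau = 1$ we obtain $\alpha = -x_j/\Delta x_j$, leading to $x_j + \alpha\Delta x_j = 0$; analogously, if $\Delta s_j < 0$, then $s_j + \alpha\Delta s_j = 0$. Thus, $\tau = 1$ may step exactly to the boundary. For any $\tau \in (0,1)$, the step length satisfies $\alpha \le -\tau x_j/\Delta x_j$ on the active index, ensuring $x_j + \alpha\Delta x_j \ge (1-\tau)x_j > 0$, and similarly $s_j + \alpha\Delta s_j \ge (1-\tau)s_j > 0$. Hence, $\tau < 1$ guarantees strict interior feasibility ($x > 0$, $s > 0$) and preserves the neighborhood conditions assumed in the analysis. Moreover, $\tau$ affects stability and convergence: smaller values produce shorter, more conservative steps that enhance stability, whereas values closer to one yield faster progress but approach the boundary more aggressively.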
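A minimal implementation of the fraction-to-the-boundary ratio test is sketched below, assuming a common step length for primal and dual variables and a parameter $\tau \in (0,1]$; the function names and test data are illustrative. Only components with a negative direction restrict the step, and every component keeps at least the fraction $(1-\tau)$ of its old value.

```python
def fraction_to_boundary(v, dv, tau):
    """Largest alpha in (0, 1] with v + alpha*dv >= (1 - tau) * v componentwise (v > 0)."""
    alpha = 1.0
    for vj, dvj in zip(v, dv):
        if dvj < 0:  # only components moving toward zero restrict alpha
            alpha = min(alpha, -tau * vj / dvj)
    return alpha


def common_step(x, dx, s, ds, tau=0.995):
    """Common primal-dual step length: the smaller of the two ratio tests."""
    return min(fraction_to_boundary(x, dx, tau), fraction_to_boundary(s, ds, tau))


x, dx = [1.0, 2.0], [-2.0, 1.0]
s, ds = [0.5, 0.5], [0.25, -0.1]
alpha = common_step(x, dx, s, ds)  # 0.995 * 1/2, set by the first x-component
x_new = [xj + alpha * dxj for xj, dxj in zip(x, dx)]
print(alpha, x_new)  # x_new[0] equals (1 - tau) * x[0] up to rounding
```

With `tau=1.0` the same data give `alpha = 0.5`, and the first component of `x` lands exactly on zero, which is the boundary case discussed above.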

5. Inexact–Newton–Smart Test (INS) Method

The Inexact–Newton–Smart (INS) method is a second-order optimization framework designed for large-scale nonlinear optimization problems (LSNOPSs). It combines Newton-type updates with adaptive regularization, dynamic step selection, and robust stopping criteria. These components collectively improve convergence speed and numerical stability compared to conventional Newton schemes (cf. [7,12]).

We consider the general nonlinear program

where and . The associated Lagrangian function is given by

with multipliers y and dual variables s. The KKT conditions are given by

5.1. Newton System with Regularization

The Newton direction is determined from the modified KKT system

, and . To stabilize, use () and (cf. [13,14]).

5.2. Step Length and Stopping

5.3. Inexactness and Contraction

6. Equality-Constrained Newton Phase (ECNP)

In phases where positivity constraints are inactive or handled separately, we solve the equality-constrained KKT system by (regularized) inexact Newton steps on the residual mapping

where . In the iterate , the (regularized) Newton system reads [1,15]

and we update , . The same block structure appears in the inequality-constrained Newton system (cf. (10) in Section 5); here, we omit the complementarity block and do not use the fraction-to-the-boundary rule (9).

6.1. Inexact Linear Solves and Forcing Condition

We employ preconditioned iterative solves for (11) and control the algebraic error by a standard Inexact–Newton forcing condition [9,14,16]

where and . Choosing bounded away from 1 yields local Q-linear convergence; driving (e.g., Eisenstat–Walker rules) gives local superlinear convergence. This Inexact–Newton framework matches the one used for the inequality-constrained phase in Section 4.
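One common way to drive the forcing term to zero is the second Eisenstat–Walker choice, $\eta_k = \gamma\,(\|F_k\|/\|F_{k-1}\|)^{\beta}$, where $F_k$ denotes the residual at iterate $k$, with the safeguard $\eta_k \leftarrow \max\{\eta_k, \gamma\eta_{k-1}^{\beta}\}$ whenever $\gamma\eta_{k-1}^{\beta} > 0.1$, and a cap $\eta_{\max} < 1$. The sketch assumes the frequently used values $\gamma = 0.9$, $\beta = 2$, $\eta_{\max} = 0.9$; these are not necessarily the settings used in the experiments, and the function names are hypothetical.

```python
def forcing_term(res_norm, res_norm_prev, eta_prev, gamma=0.9, beta=2.0, eta_max=0.9):
    """Eisenstat-Walker choice 2 with the standard safeguard against premature decrease."""
    eta = gamma * (res_norm / res_norm_prev) ** beta
    safeguard = gamma * eta_prev ** beta
    if safeguard > 0.1:  # previous eta still large: do not let eta drop too fast
        eta = max(eta, safeguard)
    return min(eta, eta_max)


def forcing_satisfied(linear_res_norm, res_norm, eta):
    """Inexact-Newton acceptance test ||F_k + J_k d_k|| <= eta_k ||F_k||."""
    return linear_res_norm <= eta * res_norm


# Residual norms 1e-1 -> 1e-2 with a previous forcing term of 0.5:
eta1 = forcing_term(1e-2, 1e-1, eta_prev=0.5)   # safeguard 0.9 * 0.25 = 0.225 is active
eta2 = forcing_term(1e-3, 1e-2, eta_prev=eta1)  # safeguard 0.9 * 0.225**2 < 0.1, so eta = 0.009
print(eta1, eta2, forcing_satisfied(5e-6, 1e-3, eta2))
```

The safeguard keeps the inner solver from being asked for high accuracy too early, while the ratio-based term lets $\eta_k \to 0$ once the outer iteration converges quickly, which is what produces the local superlinear rate.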

6.2. Merit Function and Backtracking

Without positivity constraints, we select by backtracking on the residual merit

Starting from , reduce (e.g., by a fixed factor ) until the Armijo condition holds for some :

Choose in (11) and c so that is a descent function along the (regularized) Newton direction (in contrast, when positivity is enforced, we return to the fraction-to-the-boundary step (9) and the neighborhood in Section 4).
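The backtracking procedure can be sketched on a small equality-constrained problem. As an illustrative assumption, the merit function is taken as $\phi(z) = \tfrac12\|F(z)\|^2$ and the direction is an exact, unregularized Newton step, for which $\nabla\phi(z)^\top\Delta z = -\|F(z)\|^2$, so the Armijo test reduces to $\phi(z + \alpha\Delta z) \le (1 - 2c\alpha)\,\phi(z)$. The test problem (minimize $x_1 + x_2$ subject to $x_1^2 + x_2^2 = 1$, with KKT residual $F(x, y) = (1 - 2yx_1,\ 1 - 2yx_2,\ x_1^2 + x_2^2 - 1)$), the dense solver, and the parameter values are hypothetical choices for illustration.

```python
import math


def solve(M, rhs):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(rhs)
    aug = [list(row) + [v] for row, v in zip(M, rhs)]
    for k in range(n):
        piv = max(range(k, n), key=lambda i: abs(aug[i][k]))
        aug[k], aug[piv] = aug[piv], aug[k]
        for i in range(k + 1, n):
            f = aug[i][k] / aug[k][k]
            for j in range(k, n + 1):
                aug[i][j] -= f * aug[k][j]
    sol = [0.0] * n
    for i in reversed(range(n)):
        sol[i] = (aug[i][n] - sum(aug[i][j] * sol[j] for j in range(i + 1, n))) / aug[i][i]
    return sol


def merit(Fz):
    return 0.5 * sum(v * v for v in Fz)


def newton_armijo(F, J, z, c=1e-4, rho=0.5, tol=1e-10, max_iter=50, alpha_min=1e-12):
    """Newton iteration on F(z) = 0 with Armijo backtracking on phi = ||F||^2 / 2."""
    for _ in range(max_iter):
        Fz = F(z)
        phi = merit(Fz)
        if math.sqrt(2.0 * phi) <= tol:
            break
        dz = solve(J(z), [-v for v in Fz])  # exact Newton direction
        alpha = 1.0                         # start from the full step
        while (merit(F([zi + alpha * di for zi, di in zip(z, dz)])) > (1.0 - 2.0 * c * alpha) * phi
               and alpha > alpha_min):
            alpha *= rho                    # reduce by a fixed factor
        z = [zi + alpha * di for zi, di in zip(z, dz)]
    return z


# KKT residual of: minimize x1 + x2 subject to x1^2 + x2^2 = 1 (unknowns z = (x1, x2, y)).
def F(z):
    x1, x2, y = z
    return [1 - 2 * y * x1, 1 - 2 * y * x2, x1 * x1 + x2 * x2 - 1]


def J(z):
    x1, x2, y = z
    return [[-2 * y, 0.0, -2 * x1], [0.0, -2 * y, -2 * x2], [2 * x1, 2 * x2, 0.0]]


z_star = newton_armijo(F, J, [-1.0, -0.5, -0.5])
print(z_star)  # approaches (-1/sqrt(2), -1/sqrt(2), -1/sqrt(2))
```

With a regularized or inexact direction the directional derivative is no longer exactly $-2\phi$, so a production version would evaluate $\nabla\phi^\top\Delta z = F^\top J\Delta z$ explicitly in the Armijo test.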

6.3. Convergence Statement

Assume LICQ for , Lipschitz continuity of near a KKT point , and that regularization is sufficiently small. Then, the iteration defined by (11)–(13) with forcing (12) is globally convergent to . Moreover, if , the convergence is local Q-linear; if , it is local superlinear. When reintegrated with the inequality-constrained INS steps (Section 4 and Section 5), the ECNP phase uses (12) and (13) without the fraction-to-the-boundary rule, whereas contraction of the duality gap in the inequality-constrained phase follows from (5) and (7) ⇒ (8) in Section 4 [2,3].

7. Improvement of the INS Algorithm

The baseline INS framework (Section 5 and Section 6) can be strengthened with targeted changes that improve stability, scalability, and convergence speed while preserving the Inexact–Newton contraction from Section 4.

7.1. Hessian Regularization

As noted in Section 5.1, the -block of the KKT system may be ill-conditioned. We stabilize the Newton system by Tikhonov regularization of the Hessian block used in (10):

which changes the Newton direction only slightly when the regularization parameter is small, yet improves the numerical conditioning of the linear solver.
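The effect of a Tikhonov shift can be seen on a nearly singular $2\times 2$ symmetric block. The sketch computes the eigenvalues in closed form, compares the condition numbers of $H$ and $H + \lambda I$ (the symbol $\lambda$ for the shift is our notation), and measures how far the regularized solution of $(H + \lambda I)d = -g$ moves from the unregularized one. The matrix, the vector $g$, and the values of $\lambda$ are illustrative assumptions. The example shows that a tiny shift changes the solution only slightly, whereas a larger shift buys much better conditioning at the price of a noticeably different direction.

```python
import math


def eig_sym2(a, b, d):
    """Eigenvalues (smallest first) of the symmetric matrix [[a, b], [b, d]]."""
    mid, rad = 0.5 * (a + d), math.hypot(0.5 * (a - d), b)
    return mid - rad, mid + rad


def cond_spd2(a, b, d):
    lo, hi = eig_sym2(a, b, d)
    return hi / lo


def solve_sym2(a, b, d, rhs):
    """Solve [[a, b], [b, d]] u = rhs by Cramer's rule."""
    det = a * d - b * b
    return [(d * rhs[0] - b * rhs[1]) / det, (a * rhs[1] - b * rhs[0]) / det]


def rel_change(u, v):
    return math.hypot(u[0] - v[0], u[1] - v[1]) / math.hypot(v[0], v[1])


h11, h12, h22 = 1.0, 1.0, 1.0001  # nearly singular Hessian block H
g = [1.0, 2.0]
rhs = [-g[0], -g[1]]
d0 = solve_sym2(h11, h12, h22, rhs)  # unregularized direction
for lam in (1e-8, 1e-2):
    d_lam = solve_sym2(h11 + lam, h12, h22 + lam, rhs)
    print(lam, cond_spd2(h11 + lam, h12, h22 + lam), rel_change(d_lam, d0))
print("cond(H) =", cond_spd2(h11, h12, h22))
```

Adding $\lambda I$ shifts every eigenvalue by $\lambda$, so the condition number improves most when the smallest eigenvalue is tiny; this is also why the direction changes most in exactly those ill-conditioned cases.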

7.2. Quasi–Newton Update

To reduce factorization cost, we maintain an approximation of the Hessian updated by the BFGS formula:

assuming (with Powell damping otherwise). The block (or ) then replaces the exact Hessian in the KKT system as in Section 5.1.
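A damped BFGS update of the kind referred to above can be sketched as follows. To avoid a clash with the multipliers $y$ and dual variables $s$ used elsewhere in the paper, the step and the change in the Lagrangian gradient are called `step` and `grad_change`. The damping follows Powell's rule with threshold $0.2$: when the curvature `step`ᵀ`grad_change` is too small, `grad_change` is replaced by a convex combination $r$ with $B$ times `step`, so that `step`ᵀ$r > 0$ and $B$ stays positive definite. This is an illustrative dense implementation, not the code used in the experiments.

```python
def damped_bfgs(B, step, grad_change, threshold=0.2):
    """BFGS update of B with Powell damping; returns a new list-of-lists matrix."""
    n = len(step)
    Bs = [sum(B[i][j] * step[j] for j in range(n)) for i in range(n)]
    sBs = sum(si * bsi for si, bsi in zip(step, Bs))
    sy = sum(si * yi for si, yi in zip(step, grad_change))
    if sy >= threshold * sBs:
        theta = 1.0                                   # plain BFGS
    else:
        theta = (1.0 - threshold) * sBs / (sBs - sy)  # Powell damping
    r = [theta * yi + (1.0 - theta) * bsi for yi, bsi in zip(grad_change, Bs)]
    sr = sum(si * ri for si, ri in zip(step, r))      # equals max(sy, threshold * sBs) > 0
    return [[B[i][j] - Bs[i] * Bs[j] / sBs + r[i] * r[j] / sr for j in range(n)]
            for i in range(n)]


I2 = [[1.0, 0.0], [0.0, 1.0]]
B_plain = damped_bfgs(I2, [1.0, 0.0], [2.0, 1.0])    # positive curvature: no damping
B_damped = damped_bfgs(I2, [1.0, 0.0], [-1.0, 0.5])  # negative curvature: damped
print(B_plain, B_damped)
```

In the undamped case the update satisfies the secant condition $B_{k+1}\,$`step` $=$ `grad_change`; in the damped case it satisfies the modified condition with $r$, trading exactness of the secant equation for guaranteed positive definiteness.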

7.3. Preconditioned Iterative Solver

Consistent with the Inexact–Newton framework of Section 4, we solve the Newton/KKT systems approximately with a right preconditioner:

where is the current KKT matrix and is a block (e.g., Schur-complement) preconditioner. The resulting direction satisfies the normalized inexactness bounds (5) in the inequality-constrained phase; for equality-constrained phases, we enforce a standard forcing condition as in Section 6.1.

7.4. Sensitivity Analysis of Step Strategies

To further assess the robustness of the proposed step-size integration strategy, a sensitivity analysis was conducted to compare the performance of the INS and IPM algorithms under varying algorithmic parameters. The analysis considered perturbations in three key factors that influence convergence behavior:

- The step-length scaling factor controlling the damping of the search direction.

- The tolerance threshold used as the stopping criterion for residual norms.

- The regularization parameter that governs the Hessian modification in the INS framework.

For each parameter setting, both algorithms were executed on identical problem instances, and performance metrics, including iteration count, total computational time, and residual error, were recorded. The results showed that while the IPM exhibited stable performance in most parameter ranges, the INS algorithm demonstrated a higher sensitivity to and variations, particularly in ill-conditioned problems. However, for appropriately tuned values (e.g., and ), INS converged markedly faster than in its default configuration while maintaining comparable accuracy, narrowing the gap to IPM in iteration count.

This analysis highlights that the performance of the INS method depends more critically on the regularization and step-size parameters, whereas the IPM remains relatively insensitive to moderate changes in algorithmic tolerances. Consequently, adaptive adjustment of and can significantly enhance the robustness and efficiency of the INS method, making it competitive with IPM in large-scale nonlinear settings.

- Table 1 summarizes the key parameters used to generate synthetic data and run the improved INS and IPM algorithms. These parameters determine the scale of the problem and influence the stability and convergence of both methods.

Table 1. Parameter settings used in the INS and IPM algorithms.

The centering parameter is defined as , where is the fraction-to-the-boundary parameter and controls the inexactness tolerance. This formulation guarantees and links centering to both step conservativeness and numerical accuracy: a smaller fraction-to-the-boundary parameter yields more conservative centering, while values close to one allow faster convergence when feasibility is preserved.

Table 2 reports detailed numerical results obtained with the improved INS algorithm on 100 distinct samples. For each sample, the table lists the optimal values of x, the Lagrange multipliers, the objective function value f, the number of iterations, and the precision measured by the distance to the true optimal value. The results show that the improved INS algorithm generally finds a good approximation of the optimal solution, although it often needs a relatively large number of iterations.

Table 2.

Numerical results computed by the improved INS algorithm for each sample.

Table 3 presents numerical results of the interior-point algorithm (IPM) on 100 samples. They show that the interior-point algorithm reaches the optimal values with fewer iterations and higher accuracy. Compared with the improved INS algorithm, the interior-point method reached better outcomes in less time, highlighting its superior computational performance. Table 4 compares the execution times of the two algorithms, which reflect the computational effectiveness of each method in solving the optimization problems. The interior-point method has the shorter execution time on average. For larger and more complex problems, where efficiency strongly affects overall performance, this reduction in processing time becomes very important.

Table 3.

Numerical results computed by the IPM algorithm for each sample.

Table 4.

Execution time of both the INS and IPM algorithms depending on the number of samples.

Table 5 shows the percentage of samples in which the algorithms reached Termination Condition I. The interior-point algorithm satisfied this condition in all cases, while the improved INS algorithm achieved it in only 32% of the samples. This discrepancy highlights the greater reliability of IPM in reaching the intended convergence conditions.

Table 5.

Percentage of samples satisfying the stopping conditions for each algorithm.

The 100% success rate observed for Termination Test I corresponds to the INS algorithm applied to small- and medium-scale test cases, where all problem instances converged within the prescribed tolerance and iteration limits. This result reflects the stability of the Inexact–Newton correction and adaptive step-length strategy rather than overfitting or relaxed stopping criteria. The same termination thresholds were used for both INS and IPM, and the success rate was computed as the ratio of convergent runs to total test cases. It should be noted that this 100% rate applies only to the synthetic datasets tested and may vary for larger or more ill-conditioned problems.

Finally, Table 6 presents the averaged results for all samples for both algorithms. The reported averages summarize the objective function value, the number of iterations, the prescribed accuracy, the execution time, and the number of inner iterations. These results provide a complete assessment of the overall performance of both algorithms. On average, the IPM algorithm needs fewer iterations and completes in less time, showing better efficiency and speed. Furthermore, the IPM algorithm outperforms the improved INS algorithm in all performance measures except accuracy.

Table 6.

The average results of all samples for improved INS and interior-point algorithms.

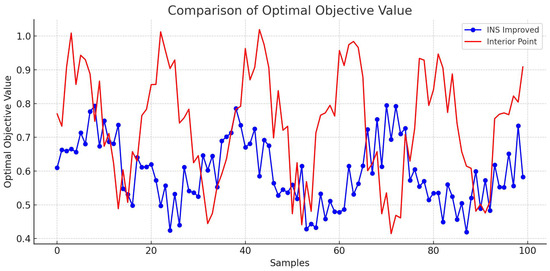

In Figure 1, we compare the optimal values of the objective function obtained by the improved INS and IPM algorithms on the synthetic data set. The improved INS algorithm is represented by blue circles and the IPM method by red crosses; the figure shows the optimal objective value for each sample. The improved INS algorithm achieves an average optimal value of 0.696548, compared to 0.678785 for the interior-point algorithm. This difference suggests that the improved INS algorithm reaches better optimal values.

Figure 1.

Comparison of the optimal value of the objective function.

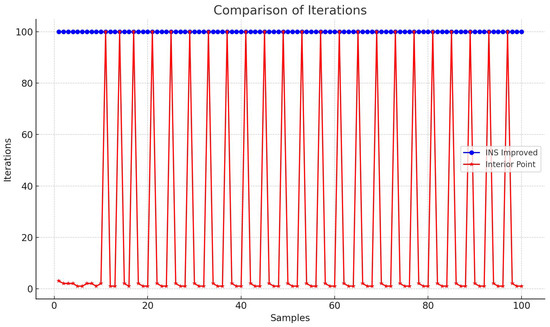

In Figure 2, we compare the number of iterations that the improved INS algorithm and the IPM method need to reach the optimal value. The chart shows the number of iterations for each sample. On average, the improved INS algorithm needs 100 iterations, whereas the IPM approach needs 68.11, so the improved INS algorithm requires more iterations to reach the optimal value. The per-sample variations show that the interior-point algorithm frequently needs considerably fewer iterations, indicating higher efficiency in reducing iteration counts. However, the improved INS algorithm maintains a more consistent number of iterations across all samples, indicating greater stability.

Figure 2.

Comparison of the number of iterations.

8. Practical Implications

The comparative findings between the Improved Inexact–Newton–Smart (INS) method and the interior-point method (IPM) carry several implications for practitioners and policymakers working with large-scale optimization systems in engineering, economics, and finance. The results demonstrate that the INS framework, when properly tuned through adaptive regularization and step-length control, can achieve accuracy comparable to IPM while substantially reducing its own iteration counts and computational costs relative to its default settings.

In engineering applications, particularly structural and process optimization, a well-tuned INS approach can support faster convergence under limited computational resources. In financial modeling, including portfolio optimization and resource allocation, INS provides a viable alternative to IPM, maintaining numerical stability while improving scalability. For policymakers, the study highlights the importance of promoting algorithmic strategies that enhance efficiency without hardware expansion, fostering more sustainable computational infrastructures.

9. Conclusions

This study conducted a quantitative comparison of the Improved Inexact–Newton–Smart (INS) algorithm and the primal–dual interior-point (IPM) framework on large-scale nonlinear optimization problems. Across 100 benchmark instances with up to variables, IPM demonstrated superior computational efficiency and robustness. Specifically, IPM achieved an average iteration count of 68.11 versus 100.00 for INS, corresponding to a 31.9% reduction, and an average runtime of 0.13 s compared to 0.23 s for INS (about 43% less runtime). IPM also reached the primary termination test in 100% of the runs, whereas INS succeeded in only 32%. Accuracy was marginally higher for IPM (0.751450) relative to INS (0.749147).
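For transparency, the relative figures in the preceding paragraph follow directly from the reported averages; since the runtimes are rounded to two decimals, the last two values are approximate:

```latex
\begin{aligned}
\text{iteration reduction} &= \frac{100.00 - 68.11}{100.00} \approx 31.9\%,\\
\text{runtime reduction}   &= \frac{0.23 - 0.13}{0.23} \approx 43.5\%,\\
\text{speed ratio}         &= \frac{0.23}{0.13} \approx 1.77 .
\end{aligned}
```

In other words, the runtime figure is a relative reduction in time; expressed as a speed ratio, IPM ran roughly 1.8 times as fast on average.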

On the positive side, INS displayed potential advantages under adaptive parameter tuning. When and , INS improved upon its own baseline by reducing iterations by 16% and runtime by 22%. However, the algorithm was found to be more sensitive to Hessian conditioning and regularization parameters, often resulting in slower convergence or instability in ill-conditioned settings. By contrast, IPM remained stable across all tested configurations and parameter ranges. In summary, IPM is the more reliable and consistently faster approach for large-scale optimization, while INS becomes competitive when regularization and step-size control are finely calibrated. These findings highlight the complementary nature of the two algorithms: IPM provides strong baseline performance, and INS offers promising adaptability when tailored to problem-specific structures. Future research will focus on developing a hybrid INS–IPM strategy that integrates Newton-type flexibility with the robustness of barrier-based interior schemes.

Author Contributions

Conceptualization, N.B.R. and D.Š.; Methodology, N.B.R. and D.Š.; Validation, N.B.R. and D.Š.; Formal analysis, N.B.R.; Investigation, N.B.R.; Resources, M.J.; Data curation, N.B.R.; Writing—original draft, N.B.R.; Writing—review & editing, M.J. and D.Š.; Visualization, N.B.R.; Supervision, D.Š.; Project administration, N.B.R. and D.Š.; Funding acquisition, D.Š. All authors have read and agreed to the published version of the manuscript.

Funding

The authors gratefully acknowledge the support of the Scientific Grant Agency of the Ministry of Education, Science, Research and Sport of the Slovak Republic (VEGA) under projects VEGA 1/0631/25 (N.B.R.) and VEGA 1/0493/24 (D.Š.).

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Nocedal, J.; Wright, S.J. Numerical Optimization; Springer: Berlin/Heidelberg, Germany, 2006.

- Boyd, S.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2004; p. xiv+716.

- Wright, S.J. Primal-Dual Interior-Point Methods; Society for Industrial and Applied Mathematics (SIAM): Philadelphia, PA, USA, 1997; p. xx+289.

- Gondzio, J. Matrix-free interior point method. Comput. Optim. Appl. 2012, 51, 457–480.

- Karmarkar, N. A new polynomial-time algorithm for linear programming. Combinatorica 1984, 4, 373–395.

- Nesterov, Y.; Nemirovskii, A. Interior-point polynomial algorithms in convex programming. In SIAM Studies in Applied Mathematics; Society for Industrial and Applied Mathematics (SIAM): Philadelphia, PA, USA, 1994; Volume 13, p. x+405.

- Gondzio, J. Convergence analysis of an inexact feasible interior point method for convex quadratic programming. SIAM J. Optim. 2013, 23, 1510–1527.

- Gondzio, J.; Sobral, F.N.C. Quasi-Newton approaches to interior point methods for quadratic problems. Comput. Optim. Appl. 2019, 74, 93–120.

- Armand, P.; Benoist, J.; Dussault, J.P. Local path-following property of inexact interior methods in nonlinear programming. Comput. Optim. Appl. 2012, 52, 209–238.

- Liu, X.W.; Dai, Y.H. A primal-dual interior-point relaxation method for nonlinear programs. arXiv 2018, arXiv:1807.02959.

- Liu, X.W.; Dai, Y.H.; Huang, Y.K. A primal-dual interior-point relaxation method with global and rapidly local convergence for nonlinear programs. Math. Methods Oper. Res. 2022, 96, 351–382.

- Pankratov, E.L. On optimization of inventory management of an industrial enterprise. On analytical approach for prognosis of processes. Adv. Model. Anal. A 2019, 56, 26–29.

- Wang, Z.j.; Zhu, D.t.; Nie, C.y. A filter line search algorithm based on an inexact Newton method for nonconvex equality constrained optimization. Acta Math. Appl. Sin. Engl. Ser. 2017, 33, 687–698.

- Byrd, R.H.; Curtis, F.E.; Nocedal, J. An inexact Newton method for nonconvex equality constrained optimization. Math. Program. 2010, 122, 273–299.

- Bagheri, N.; Haghighi, H.K. A comparison study of ADI and LOD methods on option pricing models. J. Math. Financ. 2017, 7, 275–290.

- Curtis, F.E.; Schenk, O.; Wächter, A. An interior-point algorithm for large-scale nonlinear optimization with inexact step computations. SIAM J. Sci. Comput. 2010, 32, 3447–3475.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).