Abstract

As large language models (LLMs) are increasingly used to support learning, there is a growing need for a principled framework to guide the design of LLM-based tools and resources that are pedagogically effective and contextually responsive. This study proposes such a framework by examining how prompt engineering can enhance the quality of chatbot responses to support middle school students’ scientific reasoning and argumentation. Drawing on learning theories and established frameworks for scientific argumentation, we employed a design-based research approach to iteratively refine system prompts and evaluate LLM-generated responses across diverse student input scenarios. We report findings from the iterative refinement process, along with an analysis of the quality of responses generated by each version of the chatbot. The outcomes indicate how different prompt configurations influence the coherence, relevance, and explanatory depth of LLM responses. The study contributes a set of critical design principles for developing theory-aligned prompts that enable LLM-based chatbots to meaningfully support students in constructing and revising scientific arguments. These principles offer broader implications for designing LLM applications across varied educational domains.

1. Introduction

Recent advances in large language models (LLMs), such as OpenAI’s GPT, offer promising opportunities to address the challenge of providing students with feedback as they engage in educational activities. Within the context of developing conversational agents, LLMs offer the potential to engage students in structured dialog, provide real-time feedback, and model evidence-based reasoning in response to student inputs. When paired with carefully designed system prompts, LLMs can simulate responsive and epistemically grounded conversations that guide students through complex learning processes. This opens new opportunities for addressing long-standing challenges in education, particularly around providing timely and individualized feedback at scale in formative assessment contexts.

Despite the promise, recent research has cautioned that many current LLM applications in education rely on ad hoc design approaches and lack grounding in established learning science frameworks (). Without careful integration of design principles, LLM-generated feedback risks being generic or misaligned with instructional goals. Research has emphasized the need for more principled design processes that draw on decades of research in intelligent tutoring systems, feedback frameworks, and collaborative learning environments (; ). These studies highlight the importance of aligning LLM system behavior with the cognitive and social dimensions of learning through explicit design strategies and evaluation methods.

In this study, we respond to this call by investigating how prompt engineering can be used to design an LLM-based chatbot that supports middle school students in constructing scientific arguments during a formative assessment task focused on ecosystem interactions. The task engages students in interacting with a simulation to collect data, formulating a claim about ecosystem dynamics, and providing evidence and reasoning to support their claim. The LLM-based chatbot is embedded within this task to provide feedback to scaffold students’ engagement with the practice of scientific argumentation. The chatbot can also help students clarify the task, understand disciplinary content, and improve their reasoning process as they progress through the task.

To ensure that the LLM-based chatbot responses are relevant and epistemically aligned, we employed an iterative, human-in-the-loop design approach informed by learning theories and frameworks for scientific argumentation. This approach involved cycles of prompt drafting, revision, and evaluation, in which we refined the system prompts based on how well the chatbot responded to a set of representative student inputs simulating common task interactions. In this article, we describe the iterative design process and report on the evaluation of the three versions of the system prompt. Based on the design and evaluation findings, we propose a set of design principles for prompt engineering that can inform the development of future LLM-based educational tools, particularly those to support students’ engagement in scientific practices such as constructing and refining scientific arguments.

2. Literature Review

2.1. Scientific Argumentation in the Context of Middle School Science

Scientific argumentation, the process of constructing, critiquing, and refining claims based on evidence and reasoning, is a core practice in the Next Generation Science Standards and is considered essential for helping students engage in authentic scientific inquiry (). Argumentation mirrors the way scientific knowledge is dynamically developed and validated through evidence in the scientific community (; ), and engaging in argumentation allows students to deepen their conceptual understanding and participate in authentic scientific discourse ().

Over the past two decades, numerous frameworks and pedagogical strategies have emerged to promote student engagement in argumentation, including Argument-Driven Inquiry () and explicit modeling approaches (). These approaches emphasize the importance of providing structured opportunities for students to engage in reasoning, evidence evaluation, and reflective dialog. Despite these research efforts, teaching and learning scientific argumentation remains challenging. Students frequently misunderstand the epistemic goals of argumentation, framing it as opinion-based rather than evidence-based knowledge construction (). They also struggle to coordinate claims, evidence, and reasoning in a coherent manner (; ). Recent work has called attention to the importance of managing uncertainty in scientific argumentation, especially as students grapple with incomplete data or conflicting evidence (). As () demonstrated, carefully designed feedback and feedforward mechanisms can scaffold students’ argumentative reasoning and improve both individual writing and group discussions.

Teachers also face considerable instructional barriers for effective implementation of argumentation in science classrooms. These include limited time, insufficient training, and lack of curricular resources to support dialogic, student-centered practices (; ). Even when teachers value argumentation as a goal, their classroom practices often fall short of supporting authentic student discourse (). Additionally, developing the pedagogical content knowledge necessary to effectively support scientific argumentation remains a significant challenge ().

In light of these persistent challenges for both students and teachers, the integration of AI-supported tools has gained increasing attention as a means of scaling high-quality feedback and modeling in argumentation tasks (; ). These tools offer potential solutions to address these challenges by facilitating automated analysis of student arguments to help guide instruction. When grounded in established pedagogical frameworks, these technologies can be leveraged to extend teachers’ capacity and foster deeper engagement with the practice of scientific argumentation.

2.2. Artificial Intelligence to Support Scientific Practices

To address persistent challenges in fostering scientific argumentation, a growing body of research has explored the use of artificial intelligence (AI) within educational settings to evaluate and support students’ engagement in scientific practices. Early work (e.g., ; ) demonstrated that natural language processing and automated scoring systems could reliably assess students’ written scientific explanations. More recent studies have expanded on this by developing AI tools that not only score student responses but also provide formative feedback to guide learning through scientific argumentation (; ; ; ; ; ; ; ).

Some prior research has focused specifically on the use of AI to support students’ scientific argumentation, typically by analyzing open-ended student responses and leveraging natural language processing and machine learning techniques to automatically score student responses and deliver targeted feedback (; ; ; ). While much of the early literature emphasized the accuracy and reliability of AI systems for scoring student responses (), an important shift in focus is evident, and researchers are increasingly investigating the instructional utility of these systems for providing feedback, supporting student growth, and informing teacher decision-making (; ; ; ). These research studies underscore the importance of exploring automated methodologies for effectively responding to students and fostering their development of scientific practices. AI-driven feedback systems, when designed carefully, can scaffold students’ practice of constructing and refining evidence-based arguments. This aligns with the evolving goal of creating learning ecologies that blend human instruction with AI support to further expand the reach and responsiveness of classroom learning ().

2.3. Generative AI to Support Classroom Learning

The recent emergence of generative AI (GenAI) and LLMs has created new possibilities for supporting classroom learning and instruction. Unlike earlier AI tools, LLMs such as GPT-4 can generate human-like text and engage in extended dialog to enable dynamic forms of instructional support. Researchers have explored how LLMs can act as conversational partners, tutors, or assistants that respond to student input during learning activities (). Ethically, it remains important that GenAI tools support rather than replace teachers, thereby enabling educators to dedicate their limited time to advanced instructional tasks. Hence, classroom implementation of GenAI must be carefully designed to provide responses to students consistent with relevant conceptual frameworks and learning theories. This approach aims to ensure that students continue to engage in critical and independent thinking while using GenAI tools.

Several studies have explored the role of LLMs in delivering contextualized feedback and support during classroom activities. For example, LLM-powered tools have been deployed to offer task-specific guidance () and to simulate interactive tutoring systems that scaffold student reasoning and metacognition (; ; ; ; ). These studies investigate students’ perceptions and interactions with chatbots that have been developed for specific learning activities. Findings from these works suggest that students often respond positively to LLM-driven support, especially when the AI agent is clearly framed as a facilitator or coach within the learning task (; ; ; ; ). However, a significant gap in this emerging body of research concerns the design process behind these LLM-based systems, particularly the construction and refinement of the system prompts that govern the AI’s behavior. Few studies explicitly discuss how prompt engineering can be aligned with learning goals or learning theories to ensure that the chatbot’s responses are appropriately designed (; ; ). Without an alignment with learning theories and pedagogical strategies, LLM outputs risk becoming superficially relevant but epistemically misleading.

Recent research on prompt engineering for developing generative AI agents in educational contexts highlights a few design considerations for improving the quality and utility of GenAI interactions in education. First, LLM agents should demonstrate situational awareness by understanding both the task context and the content knowledge involved in the learning activity. Second, system prompts should incorporate clear instructions and follow dialogic principles grounded in learning theories to model reasoning, encourage elaboration, or prompt self-explanation (; ). The broader literature across domains also supports these strategies, highlighting how specifying a point of view (e.g., “respond as a teacher or tutor”) and structuring conversation logic (e.g., asking clarifying questions rather than providing answers) can improve the quality of LLM-generated outputs ().

Collectively, these studies emphasize the importance of purposeful, theory-informed prompt design to create GenAI tools that can deliver meaningful instructional support. The goal of the present study is to contribute to this evolving space by developing and validating a set of education-specific system prompt design principles. We developed these principles through an iterative, human-in-the-loop approach for constructing a system prompt to improve the quality of chatbot outputs (e.g., relevance, alignment with learning and pedagogical theories) for a formative assessment task focused on scientific argumentation.

2.4. Conceptual Frameworks and Learning Theories

The development and evaluation of the assessment task and chatbot central to this study were guided by conceptual frameworks for scientific argumentation and learning theories for providing feedback to support argumentation. These frameworks and theories informed our approach for supporting students’ argumentation through chatbot-delivered feedback during the formative assessment task. The argumentation framework used for this study identifies three components of scientific argumentation: stating a claim, providing supporting evidence, and connecting the evidence to the claim with reasoning (). In the context of an assessment activity, students make a claim by addressing the question posed about a particular phenomenon. Students provide evidence using scientific data, which can include collected or provided data and/or observations; the evidence should convincingly support the stated claim. Reasoning explains why the data serves as evidence to support the claim, typically by drawing on relevant and appropriate scientific principles.

The development of the assessment task with the chatbot was conceptualized through frameworks for supporting and facilitating scientific argumentation. To facilitate argumentation in the science classroom, () outlines approaches which include presenting students with a phenomenon and associated data, from which students construct an argument to explain the phenomenon and justify their reasoning. Other strategies include scaffolding the process of constructing a complete argument and presenting students with differing or competing ideas about a phenomenon, for which they can develop an argument in support of their idea (). Each of these strategies informed the design of the assessment task, for which we developed the chatbot to provide support as students engaged in the practice of scientific argumentation.

In terms of providing feedback to support argumentation, () emphasizes the importance of feedback that underscores the cognitive and metacognitive practices associated with argumentation as a scientific practice. In the context of the feedback provided by the chatbot, this includes ensuring that its responses are relevant to the components of argumentation (i.e., stating a claim, evidence, and reasoning) while prompting students to reflect on their understanding of how to use these components to construct a complete argument. As such, we sought to develop a chatbot to achieve these pedagogical goals when responding to student questions. Furthermore, in alignment with general theories for providing quality formative feedback (; ), we sought to refine the chatbot so that its responses would provide relevant, clear, and concise feedback that would likely keep students motivated, engaged, and focused on the task. Altogether, four dimensions of feedback quality (relevance, clarity, engagement and motivation, and prompts reflective thinking) shaped our approach and goals for developing the chatbot and served as a basis for evaluating the chatbot’s responses to iteratively refine the chatbot system prompt.

2.5. Design Framework

The iterative process for developing and refining the LLM chatbot system prompt was guided by a human-in-the-loop design framework that emphasizes the active role of human expertise in guiding AI behavior. This draws on growing research on leveraging human-in-the-loop prompt engineering for varied applications, from technical communication () to scoring student responses in assessment contexts (). The central goal of this approach is to prioritize expert review, iterative refinement, and evaluative feedback to align AI-generated feedback with domain-specific learning goals.

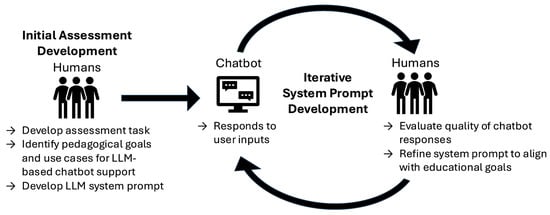

In our study, the human-in-the-loop process entailed two core functions: (1) evaluating the quality of chatbot outputs to a range of representative student inputs, and (2) informing prompt revisions through cycles of analysis, refinement, and re-evaluation. This process is illustrated in Figure 1. By integrating human evaluation and iterative refinement, we aim to better guide the chatbot’s responses to various student inputs, ensuring its alignment with the educational objectives across different student use cases. By understanding and evaluating the chatbot responses, researchers and assessment developers can improve the chatbot’s system prompt and ensure greater alignment with the standards for the assessment, the pedagogical role of the chatbot, and the underlying conceptual frameworks and learning theories. Through the human-in-the-loop approach employed in this article, we additionally sought to identify a framework of prompt engineering design principles for developing AI chatbots for a range of educational contexts.

Figure 1.

Human-in-the-loop process for iteratively developing the chatbot system prompt.

3. Research Questions

The goal of this study is to understand the prompt engineering principles that are likely to lead to better-quality feedback for an LLM-based chatbot designed to support middle school students’ scientific argumentation during an ecosystem assessment task. To achieve this goal, we ask the following research question:

Across four dimensions of feedback quality (relevance, clarity, engagement and motivation, and prompts reflective thinking), how does the quality of chatbot responses differ across the following variables?

- Versions of the system prompt;

- The quality of user inputs;

- The chatbot use cases.

4. Methods

4.1. Target Student Population and Domain

This study focuses on the iterative development and evaluation of an LLM-based chatbot designed to support middle school students’ scientific reasoning and argumentation in the context of ecosystems. The chatbot was integrated within a formative assessment task. The sections that follow describe the assessment task, the chatbot implementation and prompt engineering process, and the methods for evaluating chatbot responses to a range of synthetic student inputs.

4.2. Assessment Task Description

The assessment task begins with an introductory video that situates students within the scenario of their school’s environmental club investigating an infestation of corn rootworms damaging their corn crop. Students are asked to explore the potential effects of introducing harvest spiders (natural predators of rootworms) on the corn harvest yield and ecosystem dynamics.

Following the video, students interact with a NetLogo simulation () that allows them to manipulate variables (e.g., initial populations of rootworms and harvest spiders) and observe ecosystem interactions between rootworms, harvest spiders, and corn plants. Using data from the simulation, students then engage with scaffolded questions to construct scientific arguments consisting of (1) a claim about how adding harvest spiders affects corn plants and rootworms, (2) evidence gathered from the simulation, and (3) reasoning to explain how the evidence supports their claim. After the scaffolded activity to practice scientific argumentation, students analyze real-world data presented in a table and graph (see Figure 2) and compose a complete scientific argument to respond to a final question:

“One of your classmates found actual data collected from a corn farm that was facing a rootworm infestation, just like the garden in your school. Based on this data, some of your classmates predict that if they continue adding 10 harvest spiders, it will help improve the corn harvest in Year 6. Do you agree or disagree with this prediction? Analyze the data trend and make your own prediction about the Year 6 corn harvest. Your response should include a claim, supporting data, and valid reasoning.”

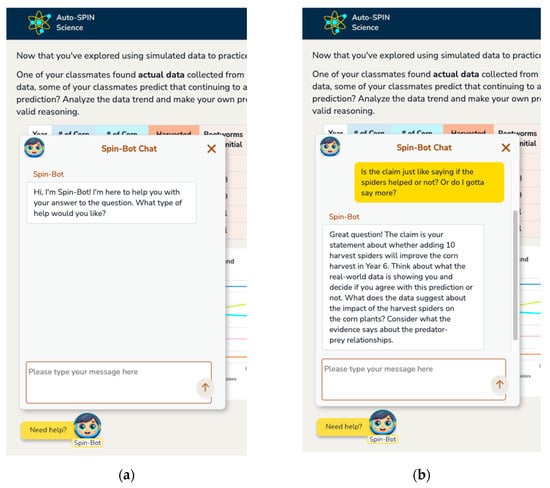

Figure 2.

The final question in the assessment task. The chatbot is in the lower left corner.

4.3. Chatbot Implementation

The LLM-based chatbot (Spin-Bot) embedded within the assessment interface is designed to offer real-time support as students progress through the task. The chatbot is intended for various needs students may have as they complete the task, particularly to clarify task activities (i.e., the simulation activity), questions (i.e., the scaffolded argumentation questions or the final question), content (i.e., the ecosystem context), and expectations (i.e., for the different components of scientific argumentation). The chatbot is implemented with OpenAI’s () GPT-4o without fine-tuning and with default parameters (i.e., temperature = 1.0 and top_p = 1.0). The chatbot has conversational memory and awareness of the current part of the task that the student is viewing. The task is programmed as a standalone application, and the chatbot is embedded within the task and available to students by clicking a “Need help?” button in the lower-left corner of the task screen (Figure 3). When students submit an input to the chatbot, the student prompt is passed to the LLM through a call to OpenAI’s API with the system prompt developed and evaluated in this study. Responses are returned to students within 1–2 s.

Figure 3.

(a) The chatbot pop-up when students click the “Need help?” button; (b) The chatbot after submitting a question and receiving a response.
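The request flow described in this section can be sketched as follows. This is a minimal illustration, not the study's implementation: the function name `build_chat_request`, the placeholder system prompt text, and the way the current task step is appended to the system message are all assumptions. Only the model name and the sampling parameters (temperature = 1.0, top_p = 1.0) come from the description above. The sketch assembles a payload in the shape accepted by OpenAI's Chat Completions API but does not send it.

```python
from typing import Dict, List

# Placeholder only; the actual system prompts are in Supplementary Table S1.
SYSTEM_PROMPT = (
    "You are a tutor for middle school science students "
    "working on an argumentation task."
)


def build_chat_request(
    system_prompt: str,
    history: List[Dict[str, str]],
    student_input: str,
    task_step: str,
) -> dict:
    """Assemble a chat request: system prompt, prior turns, then the new student message."""
    # Assumption: task awareness is conveyed by appending the current step
    # to the system message. The source does not say how this is done.
    system_message = {
        "role": "system",
        "content": f"{system_prompt}\n\nCurrent part of the task: {task_step}",
    }
    # Prior turns (conversational memory) sit between the system and new user message.
    messages = [system_message, *history, {"role": "user", "content": student_input}]
    return {
        "model": "gpt-4o",
        "messages": messages,
        "temperature": 1.0,  # default value reported in the text
        "top_p": 1.0,        # default value reported in the text
    }


request = build_chat_request(
    SYSTEM_PROMPT,
    history=[],
    student_input="What counts as evidence?",
    task_step="final question",
)
```

Keeping the payload construction separate from the network call makes it possible to swap system prompt versions and replay the same student inputs against each one.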

4.4. Iterative Chatbot Development Process

The system prompt was refined through an iterative human-in-the-loop design process, beginning with an initial version (Version 0) with minimal prompt engineering that simply instructed the chatbot to act as a tutor for middle school science students engaging in an argumentation task. Guided by the previously described learning theories and frameworks and the evaluation described in the following sections, we iteratively developed a more detailed system prompt. Subsequent versions (Versions 1 and 2) of the system prompt still instructed the chatbot to assume the role of a middle school science tutor, but the prompts now included detailed instructions for guiding the chatbot to support students’ argumentation and reasoning. For example, instructions included guidelines to avoid providing direct answers to the task and to instead ask probing questions to help students elaborate their reasoning, in alignment with the goal for the chatbot to prompt reflective thinking. The prompts also included a detailed description of the task and instructed the chatbot to use this description to provide answers to questions from students seeking to clarify the task content. The task description outlined the scenario for the task, the simulation activity, the scaffolded argumentation questions, and the final question for which students are asked to respond with a complete argument. For this study, we present the evaluation results for the three versions of the system prompt (see Table 1): Version 0 (with minimal prompt engineering), Version 1 (interim version with prompt engineering), and Version 2 (final version with prompt engineering). The full text of each system prompt is provided in the Supplementary Materials—Table S1.

Table 1.

Overview of prompt information included in the versions of the Spin-Bot evaluated for this study.

4.5. Synthetic Student Inputs

To systematically evaluate the chatbot’s output across versions, we generated a dataset of chatbot responses to a set of 18 synthetic student inputs to capture a variety of use cases (Table 2). We chose synthetic inputs to allow for iterative refinement of the chatbot prototype without placing a time burden on teachers or students during prototype development. The set of use cases was articulated based on the goals of the chatbot and corresponded to commonly identified categories of K-12 student interactions with chatbots (). The use cases focused on questions students may have about the practice of scientific argumentation. To generate the synthetic inputs for each use case, we selected example student responses from a dataset collected using a previous version of the rootworm task, which was described in an earlier study (). Based on the prior analysis, we sorted responses into high-quality responses (which included more argumentation components) and low-quality responses (which were vague or included few argumentation components). We then developed a prompt in Microsoft Copilot 365 that provided context about the activity and the chatbot, listed the chatbot use cases, and included the example student responses. The Copilot prompt was used to generate three sets of synthetic chatbot inputs: Set 1 (high quality), generated by providing the high-quality example student responses; Set 2 (low quality), generated by providing the low-quality example student responses; and Set 3 (typographical errors), generated by providing both the high- and low-quality example student responses and including a request in the prompt to include typographical errors (such as misspellings and incomplete sentences).

Table 2.

Synthetic student inputs for testing chatbot output.

We tested each of the three versions of the chatbot system prompt with 18 synthetic student inputs. For each input, we generated three independent sets of chatbot responses to address the non-determinism of LLM outputs. Hence, the dataset for evaluating the chatbot responses comprised 54 chatbot responses for each version of the system prompt, for a total of 162 chatbot responses.
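The dataset size reported above can be checked with a short enumeration. This is an illustrative sketch only. It assumes one synthetic input per use case in each input set, which is consistent with the reported counts (six use cases, three sets, 18 inputs) but is not stated explicitly. The use case labels are placeholders; the actual use cases are listed in Table 2.

```python
from itertools import product

PROMPT_VERSIONS = ["Version 0", "Version 1", "Version 2"]
INPUT_SETS = [
    "Set 1: high quality",
    "Set 2: low quality",
    "Set 3: typographical errors",
]
# Placeholder labels (hypothetical); see Table 2 for the actual use cases.
USE_CASES = [f"use_case_{i}" for i in range(1, 7)]
REPLICATES = 3  # independent generations per input, to account for non-determinism

# Assumption: one synthetic input per (input set, use case) pair.
synthetic_inputs = list(product(INPUT_SETS, USE_CASES))

# Every prompt version is run on every input, three times.
runs = list(product(PROMPT_VERSIONS, synthetic_inputs, range(REPLICATES)))

responses_per_version = len(synthetic_inputs) * REPLICATES
```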

4.6. Evaluation of the Chatbot Responses

To evaluate the quality of chatbot outputs, we developed an evaluation rubric based on argumentation frameworks () and feedback literature (; ; ). The evaluators included researchers with experience in learning science, science education, and assessment development with training to evaluate scientific argumentation in students’ writing. The rubric includes four dimensions with definitions and indicators provided in Table 3. To establish the reliability of the coding, two researchers familiar with the evaluation rubric independently analyzed the full dataset of chatbot responses, achieving 65% exact agreement across all rubric categories. The researchers met to clarify the evaluation rubric and discuss the codes with disagreements to reach consensus until achieving 70% exact agreement across all rubric categories. Afterward, the two researchers reviewed the remaining disagreements to align the coding with the consensus discussion.

Table 3.

Evaluation rubric.
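Exact agreement, the reliability measure reported above, is the share of items that both coders scored identically. The sketch below shows one way to compute it. The function name `exact_agreement` and the scores are hypothetical and are not data from the study.

```python
from typing import Sequence


def exact_agreement(codes_a: Sequence[int], codes_b: Sequence[int]) -> float:
    """Return the percentage of items to which both coders assigned the same score."""
    if len(codes_a) != len(codes_b):
        raise ValueError("Both coders must score the same items.")
    if not codes_a:
        raise ValueError("At least one scored item is required.")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return 100.0 * matches / len(codes_a)


# Hypothetical rubric scores for ten responses (not data from the study).
coder_1 = [3, 2, 3, 1, 2, 3, 3, 2, 1, 3]
coder_2 = [3, 2, 2, 1, 2, 3, 1, 2, 2, 3]
agreement = exact_agreement(coder_1, coder_2)
```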

4.7. Statistical Analysis

To examine the trends emerging from the iterative development process, we used the Python package statsmodels 0.14.4 () to conduct statistical analyses on our evaluation of the three versions of the system prompt. First, we conducted one-way ANOVA tests to compare the means for each rubric category (relevance, clarity, engagement and motivation, and prompts reflective thinking) across the three prompt versions. This allowed us to evaluate whether chatbot performance improved with each iteration of prompt refinement. Next, we conducted one-way ANOVA tests within each prompt version to examine whether the chatbot performed better in specific feedback categories than others. To investigate how user input quality (high, low, or inputs with typographical errors) affected chatbot response quality, we conducted one-way ANOVA tests within each prompt version. We also analyzed performance across six use cases (e.g., clarifying claim, clarifying evidence) to assess whether the chatbot handled some types of student questions more effectively than others. In cases where ANOVA revealed significant differences, we conducted Tukey’s HSD post hoc pairwise tests, controlling for family-wise error rates, to determine which specific groups (prompt versions, input quality sets, or use cases) differed from one another.
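To make the first comparison concrete, the sketch below computes the F statistic of a one-way ANOVA by hand for one rubric category across the three prompt versions. It is not the study's analysis code: the study used statsmodels, whereas this sketch uses only the Python standard library so that the arithmetic is visible. It does not compute p-values or Tukey's HSD comparisons. The function name `one_way_anova_f` and the scores are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Tuple


def one_way_anova_f(groups: Dict[str, List[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA across the given groups."""
    all_scores = [score for scores in groups.values() for score in scores]
    grand_mean = mean(all_scores)
    # Between-group sum of squares: group size times the squared distance
    # of each group mean from the grand mean.
    ss_between = sum(len(s) * (mean(s) - grand_mean) ** 2 for s in groups.values())
    # Within-group sum of squares: squared distance of each score
    # from its own group mean.
    ss_within = sum((x - mean(s)) ** 2 for s in groups.values() for x in s)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical Relevance scores per prompt version (not data from the study).
relevance = {
    "Version 0": [1, 2, 1, 2],
    "Version 1": [2, 3, 2, 3],
    "Version 2": [3, 3, 2, 3],
}
f_stat, df_between, df_within = one_way_anova_f(relevance)
```

A large F indicates that the variation between version means is large relative to the variation within each version, which is the condition under which the post hoc pairwise tests are then applied.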

5. Results

In the following sections, we present the findings from our evaluation of the chatbot system prompt across four dimensions of feedback quality: relevance, clarity, engagement and motivation, and prompts reflective thinking. Specifically, we report the overall evaluation for the three versions of the system prompt, our analysis of the relationship between the quality of user input and chatbot responses, and our analysis of the relationship between different use cases and the quality of chatbot responses.

5.1. How Does the Quality of Chatbot Responses Differ Among the Versions of the System Prompt?

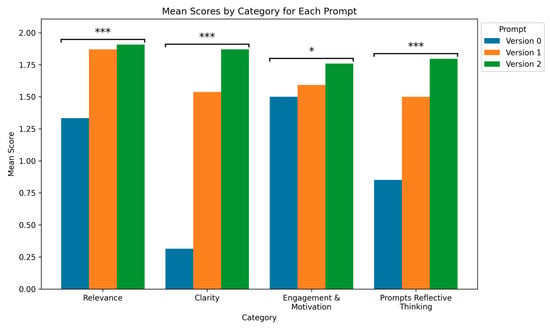

Mean scores for each rubric category increased with each new version of the system prompt, although not every pairwise difference was statistically significant, as shown in Figure 4 (with descriptive statistics provided in the Supplementary Materials, Table S2). Results from the ANOVA and Tukey post hoc tests (detailed in the Supplementary Materials, Table S3), which compared the means for each category across the three prompt versions, show that Relevance improved significantly from Version 0 to Version 1 and from Version 0 to Version 2; however, Relevance did not significantly improve from Version 1 to Version 2. Clarity showed significant improvement across all three version comparisons. For Engagement and Motivation, the only significant improvement was between Version 0 and Version 2. Similar to Clarity, Prompts Reflective Thinking showed significant improvement across all three pairwise comparisons. Overall, these findings indicate that all four feedback categories improved as the system prompt evolved, with the most dramatic gains occurring between Version 0 and Version 1. The improvements between Version 1 and Version 2, while still present, were slightly less pronounced.

Figure 4.

Mean scores by category for each version of the system prompt. Significant results are shown from the ANOVA to compare means for each category between system prompt versions. * p < 0.05, ** p < 0.01, *** p < 0.001.

The differences in chatbot responses across the versions of the system prompt are qualitatively illustrated in Table 4, which provides responses to the synthetic input “I don’t get how to do the whole argument thing. Like what goes first and what goes last?” Across versions of the system prompt, the responses became more relevant to the task. For example, the Version 0 response includes the ideas of counterargument and rebuttal, which were not argumentation components incorporated into the task. Later versions of the system prompt provided shorter, clearer, and more targeted responses. For example, between Versions 1 and 2, the length of responses shown in Table 4 decreased by approximately 100 words while still including the key information about argumentation. While all versions began with motivating language that affirmed the question in the student input, Versions 1 and 2 specifically encouraged engagement with the task, referencing details like the simulation and real-world data. The prompting to encourage reflective thinking also improved with each iteration of the system prompt. Version 2 promoted independent thinking by guiding students to review the simulation and real-world data and to connect their evidence to their knowledge of predator-prey relationships, instead of supplying direct answers. In contrast, Version 1 offered detailed examples that students could use verbatim in their own responses to the task. These trends illustrated by the responses in Table 4 were also evident across the various use cases and student inputs.

Table 4.

Chatbot responses to the same input for the three versions of the system prompt.

In addition to comparing different versions of the system prompt, we also examined differences among the four evaluation categories within each prompt version (see the Supplementary Materials—Table S4). ANOVA tests revealed that for both Version 0 and Version 1, there were significant differences among category scores. Specifically, for Version 0, Tukey post hoc tests indicated five out of six pairwise category comparisons were significant; for Version 1, two out of six pairwise comparisons showed significant differences. However, Version 2 did not display any significant differences among the categories. This finding suggests that as the system prompt improved, the evaluation of the chatbot responses became more consistent across all four feedback categories.
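The count of six pairwise category comparisons follows directly from having four rubric categories. A short sketch using the category names from this section shows the enumeration; the variable names are illustrative only.

```python
from itertools import combinations

categories = [
    "Relevance",
    "Clarity",
    "Engagement and Motivation",
    "Prompts Reflective Thinking",
]

# Every unordered pair of categories is one post hoc comparison.
category_pairs = list(combinations(categories, 2))

for first, second in category_pairs:
    print(f"{first} vs. {second}")

print(len(category_pairs), "pairwise comparisons per prompt version")
```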

5.2. How Does the Quality of Chatbot Responses Differ Based on the Quality of User Inputs?

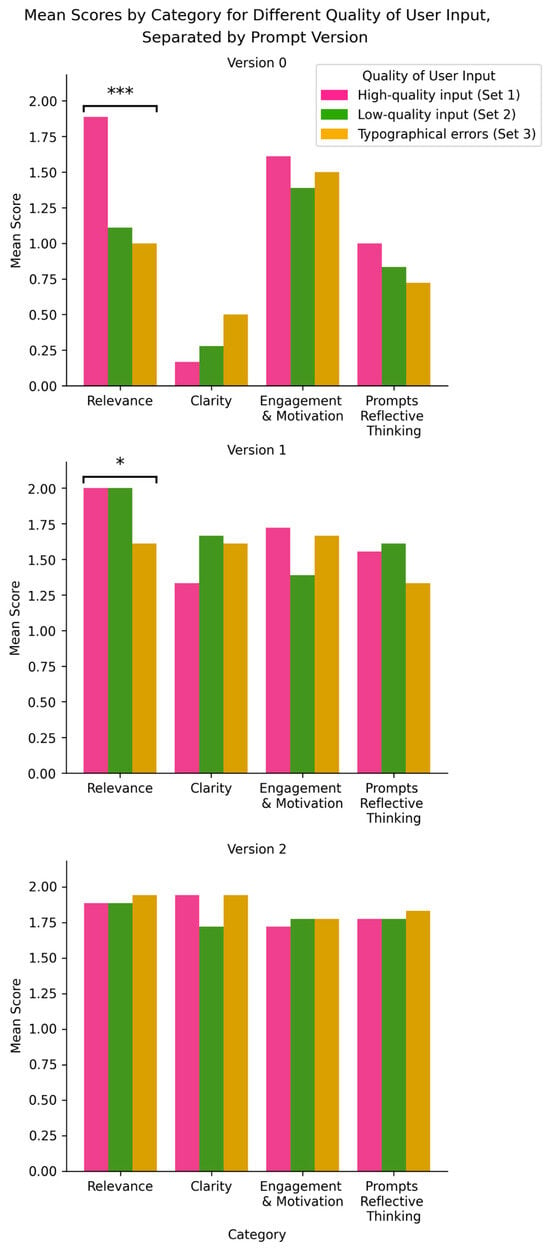

As shown in Figure 5, chatbot response quality varied noticeably with user input quality for the first version of the system prompt (descriptive statistics are provided in the Supplementary Materials, Table S5). This variation became progressively less pronounced in the later versions of the prompt, which produced more consistent responses regardless of user input quality. The ANOVA tests comparing input quality sets for each evaluation category and prompt version (see the Supplementary Materials, Table S6) showed significant differences only in the Relevance category for Version 0 and Version 1. Specifically, for Version 0, post hoc tests revealed that Relevance scores differed significantly between high-quality input (Set 1) and both low-quality input (Set 2) and inputs with typographical errors (Set 3), but not between the latter two. For Version 1, Relevance was significantly different between typographical error input (Set 3) and both high-quality (Set 1) and low-quality (Set 2) input, but not between high-quality (Set 1) and low-quality (Set 2) input. For all other evaluation categories and prompt versions, the ANOVA found no significant differences between input quality sets. This suggests that, with the improved prompts, the chatbot produced responses of similar quality regardless of the quality of the user’s input; however, this effect was observed mainly in the Relevance category.

Figure 5.

Mean scores by category for different quality of user input, grouped by versions of the system prompt. Significant results are shown from the ANOVA to compare means for each category for differing quality of user input and prompt version. * p < 0.05, ** p < 0.01, *** p < 0.001.

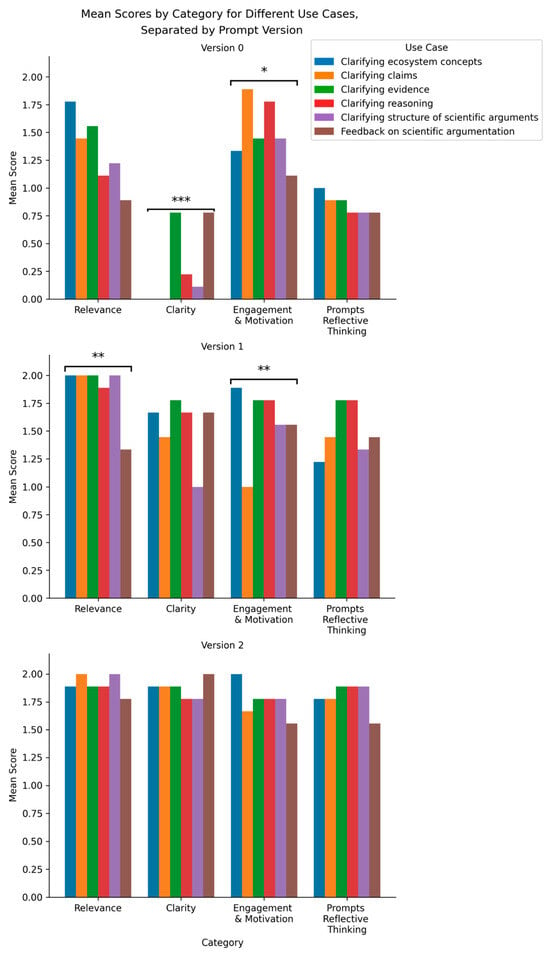

5.3. How Does the Quality of Chatbot Responses Differ for Different Chatbot Use Cases?

The finding that improvements in the system prompt led to more consistent chatbot responses was also observed across different chatbot use cases, as illustrated in Figure 6 (descriptive statistics are provided in the Supplementary Materials, Table S7). The variation in the evaluation of chatbot responses between use cases was most pronounced for Version 0 of the system prompt, and the variation became less notable in the later versions of the system prompt. The ANOVA tests comparing use cases for each evaluation category and prompt version (see the Supplementary Materials, Table S8) showed significant differences only in the Clarity and Engagement and Motivation categories for Version 0 and the Relevance and Engagement and Motivation categories for Version 1. For Clarity in Version 0, post hoc tests indicated that both the Clarifying ecosystem concepts and Clarifying claims use cases were significantly lower than the Clarifying evidence and Feedback on scientific argumentation use cases. This suggests that the chatbot with minimal system prompting tended to provide lengthy, unclear responses to the inputs focused on ecosystem concepts and the role of a claim in scientific argumentation. For Engagement and Motivation in Version 0, the post hoc tests found only a single difference between the Clarifying claims and Feedback on scientific argumentation use cases, indicating that the chatbot provided less-motivating responses for inputs seeking feedback on scientific argumentation. For Relevance in Version 1, post hoc tests revealed that the use case Feedback on scientific argumentation was significantly lower than the other use cases, except for the Clarifying reasoning use case, indicating that responses to inputs seeking feedback on argumentation were less relevant to the claim–evidence–reasoning framework. For Engagement and Motivation in Version 1, post hoc tests revealed that the Clarifying claims use case was significantly lower than three of the other use cases, suggesting that inputs seeking to clarify the role of the claim in a scientific argument received less-motivating responses. Similar to the trends for the differences based on quality of user input, these findings suggest that the iterative improvement of the system prompt reduced differences in chatbot response quality across use cases.

Figure 6.

Mean scores by category for different use cases, grouped by versions of the system prompt. Significant results are shown from the ANOVA to compare means for each category for differing use cases and prompt version. * p < 0.05, ** p < 0.01, *** p < 0.001.

6. Discussion

This study provides evidence that a human-in-the-loop iterative prompt engineering process can significantly enhance the quality of LLM-based chatbot responses. The most pronounced improvements were observed in relevance, clarity, and prompting reflective thinking, all of which are key to fostering effective instruction of scientific argumentation () and align with design principles for high-quality formative feedback (; ).

These findings contribute to the growing body of research exploring how LLMs can be strategically employed as chatbots to support learning and instructional practices such as scientific argumentation. While prior studies have highlighted that LLMs offer the potential for individualized feedback at scale (; ; ), their effectiveness relies on prompt design that balances guidance with opportunities for independent thought. Our findings demonstrate that for each version of the system prompt, responses became shorter, clearer, and more targeted, moving away from providing direct answers toward fostering independent student reasoning. The progression in the effectiveness of responses from Version 0 to Version 2 of the system prompt illustrates how carefully engineered prompts rooted in learning theory can shift LLMs from merely providing information to actively scaffolding students’ development of claims, evidence, and reasoning. This aligns with existing frameworks (; ; ) which state that effective feedback to support argumentation should encourage students to reflect on their understanding of the argumentation components as they construct and justify their own scientific ideas. Furthermore, the transition from providing direct answers toward prompting reflection aligns with evidence from the literature that metacognitive prompts and opportunities for student agency promote deeper engagement in science practices (; ).

Our findings additionally demonstrate that iterative prompt engineering can improve the consistency of chatbot responses, extending the prior research describing the importance of prompt engineering to structure student interactions with LLM-based tools and chatbots (; ). Initially, response quality varied according to input quality and use case, but the improved prompts produced high-quality, consistent responses regardless of these factors—especially with regard to the relevance of the chatbot responses to the argumentation framework. Additionally, the final version of the system prompt produced responses that were consistent across all evaluation categories, unlike earlier versions where significant differences existed between categories. The findings indicate that well-engineered system prompts not only improve the overall quality of chatbot feedback but also increase consistency and adaptability across different input types. These improvements in the quality of chatbot responses are vital for providing more equitable and robust support for all learners.

7. Implications

7.1. A Framework for Designing AI Chatbots

One of the most important outcomes of our work is a framework of design principles for prompt engineering that can help improve the development of chatbots for educational purposes (Table 5). These principles were developed through our iterative process of refining the system prompt. They are not limited to chatbots for scientific argumentation; instead, they can be adapted to guide the creation of educational chatbots across subjects and pedagogical goals. The main focus of these principles is to use learning theory and effective teaching strategies to structure the chatbot responses. In particular, the design principles highlight the importance of supporting student agency by encouraging students to think for themselves, rather than providing them with direct answers or examples. The principles also suggest that chatbots should help students develop their reasoning by prompting them to reflect and elaborate on their ideas, rather than simply telling them information. Other effective prompt engineering strategies include keeping system prompts concise (for example, using bullet points instead of long paragraphs) and using markers like “IMPORTANT” to draw attention to key instructions. Finally, we emphasize the value of using a human-in-the-loop approach to iteratively review and refine the system prompt and ensure continuous improvement. As demonstrated in our findings, using these design principles and the iterative, human-in-the-loop approach can improve the quality and consistency of chatbot responses.
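The formatting strategies described above (concise bullet points and “IMPORTANT” markers) can be sketched as a small helper that assembles a system prompt. This is an illustration only: the function name `build_system_prompt`, the example wording, and the role/content message layout are assumptions and do not reproduce the Version 2 system prompt used in this study. The student input is the synthetic example discussed in Section 5.1.

```python
def build_system_prompt(role, guidelines, important):
    """Assemble a concise system prompt: role line, bulleted guidelines, flagged rules."""
    lines = [role.strip(), "", "Guidelines:"]
    # Short bullet points instead of long paragraphs.
    lines += [f"- {g.strip()}" for g in guidelines]
    lines.append("")
    # An explicit marker draws attention to key instructions.
    lines += [f"IMPORTANT: {rule.strip()}" for rule in important]
    return "\n".join(lines)


# Hypothetical wording, written only to illustrate the design principles.
prompt = build_system_prompt(
    role="You are a tutor helping middle school students build scientific arguments.",
    guidelines=[
        "Focus on claim, evidence, and reasoning.",
        "Ask the student to reflect on and elaborate their own ideas.",
        "Keep responses short and clear.",
    ],
    important=["Do not give direct answers or examples the student could copy."],
)

# A common chat layout (assumed here): one system message, then the student input.
messages = [
    {"role": "system", "content": prompt},
    {
        "role": "user",
        "content": "I don't get how to do the whole argument thing. "
        "Like what goes first and what goes last?",
    },
]
print(prompt)
```

Keeping the prompt as structured data makes it easier to revise one guideline at a time during human-in-the-loop review and to compare versions.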

Table 5.

System prompt design principles, illustrated with example prompt elements drawn from Version 2 of the system prompt central to this study.

7.2. Implications for Future Research

The findings from this study suggest several directions for future research at the intersection of AI, prompt engineering, and science education. Future research for developing and investigating chatbots to support student argumentation specifically can explore further breaking down the claim–evidence–reasoning framework to provide feedback on other argumentation components which may be relevant for certain assessment tasks (e.g., prompting to strengthen arguments through alternative data, to develop counterarguments, or to promote cognitive conflict to drive argumentation practice).

In terms of future directions for evaluating and improving chatbots for educational purposes, our current work provides a rubric for evaluating AI-generated feedback to students; however, the reliability of this rubric remains a limitation. To address this, future research could focus on creating and validating more robust evaluation frameworks by refining and elaborating the rubric introduced here. Additional studies should further explore approaches to iterate and improve the system prompt; in particular, as the present analysis is based on synthetic inputs, further pilot testing should subsequently occur to gather actual teacher and student interactions and perceptions of the chatbot responses to inform further system prompt design improvements. Together, these research directions will inform ongoing efforts to improve chatbot evaluation and system prompt design. Finally, as AI models and approaches for designing system prompts to promote learning continue to evolve, there is an opportunity to study how the iterative co-design of prompts between educators, students, and AI experts shapes classroom practices and student outcomes.

8. Limitations

There are several limitations associated with this work. First, the study focuses on the context of developing a chatbot to support middle school students engaging in scientific argumentation, so the specific findings may not be generalizable to other contexts or age groups. Additionally, we used a design-based approach to evaluate and develop the system prompt. We tested use cases aligned with the intended pedagogical purposes of the chatbot, using a relatively small sample of hypothetical student responses of different qualities. These decisions were made to iteratively develop the chatbot in a timely manner before providing it to students, but there remains the need for further improvement and refinement based on authentic student use of the chatbot. Furthermore, the methods could be extended to test on datasets of authentic student responses to understand whether the iterative development process results in a system that provides responses appropriate for actual classroom use. There are additional limitations with our evaluation rubric, because our initial coding achieved only moderate percent agreement between two coders. Although the researchers met to discuss disagreements and adjust the coding based on the inconsistencies that surfaced, there remains the need to further improve upon our approach for evaluating the chatbot responses. In addition to the above methodological limitations, attention to ethical considerations could further improve our work. As () noted, ethical safeguards such as clear disclosure of chatbot roles, attention to equity, and protection of student data are essential for ensuring that chatbots serve as responsible tools for learning. These ethical dimensions were not explicitly examined in our design process. Addressing these ethical considerations in future work will be critical to ensure that AI-supported learning tools are socially responsible and aligned with broader educational values.
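For readers less familiar with the agreement measure mentioned above, the sketch below computes percent agreement between two coders on hypothetical rubric codes (not the study's data). It also computes Cohen's kappa, a chance-corrected alternative that is included here only as an illustration and was not reported in this study.

```python
from collections import Counter


def percent_agreement(codes_a, codes_b):
    """Fraction of items on which two coders assigned the same code."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("Both coders must rate the same non-empty set of items.")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return matches / len(codes_a)


def cohens_kappa(codes_a, codes_b):
    """Agreement corrected for chance (undefined if expected agreement is 1)."""
    n = len(codes_a)
    p_observed = percent_agreement(codes_a, codes_b)
    counts_a, counts_b = Counter(codes_a), Counter(codes_b)
    # Chance agreement: product of each coder's marginal proportions per code.
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical codes from two coders for six chatbot responses.
coder_a = [3, 2, 3, 1, 2, 3]
coder_b = [3, 2, 2, 1, 2, 1]

agreement = percent_agreement(coder_a, coder_b)
kappa = cohens_kappa(coder_a, coder_b)
print(f"Percent agreement: {agreement:.0%}, Cohen's kappa: {kappa:.2f}")
```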

9. Conclusions

This study demonstrates the importance of thoughtful prompt engineering and iterative, human-in-the-loop design for developing LLM-based chatbots to support student scientific argumentation in educational settings. By evaluating different versions of the system prompt, our findings indicate that clear, concise instructions aligned with established argumentation frameworks and learning theory can improve chatbot response quality and yield responses that better prompt student reasoning. The findings also suggest that iterative system prompt refinement can improve the consistency of chatbot responses across differing qualities of input and chatbot use cases. These insights culminated in a framework of prompt engineering principles that can inform the ongoing optimization of chatbot prompts and evaluation methods to further improve the application of LLMs across educational contexts.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/educsci15111507/s1, Table S1: The three versions of the system prompt evaluated for the study; Table S2: Descriptive statistics for the results presented in Section 5.1; Table S3: ANOVA and post-hoc tests for categories across prompt versions (corresponding to Section 5.1); Table S4: ANOVA and post-hoc tests among categories within each prompt version (corresponding to Section 5.1); Table S5: Descriptive statistics for the results presented in Section 5.2; Table S6: ANOVA and post-hoc tests for categories between different pairings of input quality and prompt version (corresponding to Section 5.2); Table S7: Descriptive statistics for the results presented in Section 5.3; Table S8: ANOVA and post-hoc tests for categories between different pairings of use case and prompt version (corresponding to Section 5.3).

Author Contributions

Conceptualization, F.M.W., L.L., T.M.O., Y.S. and X.Z.; methodology, F.M.W. and T.M.O.; formal analysis, F.M.W.; investigation, F.M.W., T.M.O. and E.J.-D.V.; writing—original draft preparation, F.M.W. and L.L.; writing—review and editing, F.M.W., L.L., T.M.O., Y.S., E.J.-D.V., X.Z., Y.W. and N.L.; visualization, F.M.W.; supervision, L.L. and X.Z.; project administration, F.M.W. and E.J.-D.V.; funding acquisition, L.L., Y.S. and X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

The research reported here was supported, in whole or in part, by the Institute of Education Sciences, U.S. Department of Education, through grant R305A240356 to ETS. The opinions expressed are those of the authors and do not represent the views of the Institute or the U.S. Department of Education.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| LLM | Large Language Model |
| AI | Artificial Intelligence |
| GenAI | Generative Artificial Intelligence |

References

- Alam, A. (2023, February 14–15). Intelligence unleashed: An argument for AI-enabled learning ecologies with real world examples of today and a peek into the future. International Conference on Innovations in Computer Science, Electronics & Electrical Engineering (p. 030001), Ashta, India.

- Ali, F., Choy, D., Divaharan, S., Tay, H. Y., & Chen, W. (2023). Supporting self-directed learning and self-assessment using TeacherGAIA, a generative AI chatbot application: Learning approaches and prompt engineering. Learning: Research and Practice, 9(2), 135–147.

- Berland, L. K., & Hammer, D. (2012). Students’ framings and their participation in scientific argumentation. In Perspectives on scientific argumentation: Theory, practice and research (pp. 73–93). Springer.

- Berland, L. K., & McNeill, K. L. (2010). A learning progression for scientific argumentation: Understanding student work and designing supportive instructional contexts. Science Education, 94(5), 765–793.

- Berland, L. K., & Reiser, B. J. (2011). Classroom communities’ adaptations of the practice of scientific argumentation. Science Education, 95(2), 191–216.

- Chen, E., Wang, D., Xu, L., Cao, C., Fang, X., & Lin, J. (2024). A systematic review on prompt engineering in large language models for K-12 STEM education. Available online: https://arxiv.org/pdf/2410.11123 (accessed on 25 July 2025).

- Chen, Y., Benus, M., & Hernandez, J. (2019). Managing uncertainty in scientific argumentation. Science Education, 103(5), 1235–1276.

- Cohn, C., Snyder, C., Montenegro, J., & Biswas, G. (2024). Towards a human-in-the-loop LLM approach to collaborative discourse analysis. In Communications in computer and information science (Vol. 2151, pp. 11–19). CCIS. Springer.

- Cooper, M. M., & Klymkowsky, M. W. (2024). Let us not squander the affordances of LLMs for the sake of expedience: Using retrieval augmented generative AI chatbots to support and evaluate student reasoning. Journal of Chemical Education, 101(11), 4847–4856.

- Dood, A. J., Watts, F. M., Connor, M. C., & Shultz, G. V. (2024). Automated text analysis of organic chemistry students’ written hypotheses. Journal of Chemical Education, 101(3), 807–818.

- Duschl, R. (2003). The assessment of argumentation and explanation. In The role of moral reasoning on socioscientific issues and discourse in science education (pp. 139–161). Springer.

- Faize, F., Husain, W., & Nisar, F. (2017). A critical review of scientific argumentation in science education. EURASIA Journal of Mathematics, Science and Technology Education, 14(1), 475–483.

- Farah, J. C., Ingram, S., Spaenlehauer, B., Lasne, F. K. L., & Gillet, D. (2023). Prompting large language models to power educational chatbots. In Lecture notes in computer science (Including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics) (Vol. 14409, pp. 169–188). LNCS. Springer.

- Grooms, J., Sampson, V., & Enderle, P. (2018). How concept familiarity and experience with scientific argumentation are related to the way groups participate in an episode of argumentation. Journal of Research in Science Teaching, 55(9), 1264–1286.

- Haudek, K. C., Prevost, L. B., Moscarella, R. A., Merrill, J., & Urban-Lurain, M. (2012). What are they thinking? Automated analysis of student writing about acid-base chemistry in introductory biology. CBE Life Sciences Education, 11(3), 283–293.

- Haudek, K. C., & Zhai, X. (2024). Examining the effect of assessment construct characteristics on machine learning scoring of scientific argumentation. International Journal of Artificial Intelligence in Education, 34(4), 1482–1509.

- Kaldaras, L., Haudek, K., & Krajcik, J. (2024). Employing automatic analysis tools aligned to learning progressions to assess knowledge application and support learning in STEM. International Journal of STEM Education, 11(1), 57.

- Klar, M. (2024). How should we teach chatbot interaction to students? A pilot study on perceived affordances and chatbot interaction patterns in an authentic K-12 setting. In Proceedings of DELFI workshops 2024. Gesellschaft für Informatik e.V.

- Kooli, C. (2023). Chatbots in education and research: A critical examination of ethical implications and solutions. Sustainability, 15(7), 5614.

- Kumar, H., Musabirov, I., Reza, M., Shi, J., Wang, X., Williams, J. J., Kuzminykh, A., & Liut, M. (2024). Guiding students in using LLMs in supported learning environments: Effects on interaction dynamics, learner performance, confidence, and trust. Proceedings of the ACM on Human-Computer Interaction, 8(CSCW2), 499.

- Latifi, S., Noroozi, O., & Talaee, E. (2021). Peer feedback or peer feedforward? Enhancing students’ argumentative peer learning processes and outcomes. British Journal of Educational Technology, 52(2), 768–784.

- Lee, A. V. Y., Tan, S. C., & Teo, C. L. (2023). Designs and practices using generative AI for sustainable student discourse and knowledge creation. Smart Learning Environments, 10(1), 59.

- Lee, H. S., Gweon, G. H., Lord, T., Paessel, N., Pallant, A., & Pryputniewicz, S. (2021). Machine learning-enabled automated feedback: Supporting students’ revision of scientific arguments based on data drawn from simulation. Journal of Science Education and Technology, 30(2), 168–192.

- Lee, J. H., Shin, D., & Hwang, Y. (2024). Investigating the capabilities of large language model-based task-oriented dialogue chatbots from a learner’s perspective. System, 127, 103538.

- Linn, M. C., Gerard, L., Ryoo, K., McElhaney, K., Liu, O. L., & Rafferty, A. N. (2014). Computer-guided inquiry to improve science learning. Science, 344(6180), 155–156.

- Liu, L., Cisterna, D., Kinsey, D., Qi, Y., & Steimel, K. (2024). AI-based diagnosis of student reasoning patterns in NGSS assessments. Uses of Artificial Intelligence in STEM Education, 162–176.

- Liu, O. L., Rios, J. A., Heilman, M., Gerard, L., & Linn, M. C. (2016). Validation of automated scoring of science assessments. Journal of Research in Science Teaching, 53(2), 215–233.

- Makransky, G., Shiwalia, B. M., Herlau, T., & Blurton, S. (2025). Beyond the “wow” factor: Using generative AI for increasing generative sense-making. Educational Psychology Review, 37(3), 60.

- McNeill, K. L., & Knight, A. M. (2013). Teachers’ pedagogical content knowledge of scientific argumentation: The impact of professional development on K–12 teachers. Science Education, 97(6), 936–972.

- McNeill, K. L., & Krajcik, J. (2008). Scientific explanations: Characterizing and evaluating the effects of teachers’ instructional practices on student learning. Journal of Research in Science Teaching, 45(1), 53–78.

- Meyer, J., Jansen, T., Schiller, R., Liebenow, L. W., Steinbach, M., Horbach, A., & Fleckenstein, J. (2024). Using LLMs to bring evidence-based feedback into the classroom: AI-generated feedback increases secondary students’ text revision, motivation, and positive emotions. Computers and Education: Artificial Intelligence, 6, 100199.

- Neumann, A. T., Yin, Y., Sowe, S., Decker, S., & Jarke, M. (2025). An LLM-driven chatbot in higher education for databases and information systems. IEEE Transactions on Education, 68(1), 103–116.

- Nicol, D., & MacFarlane-Dick, D. (2006). Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Studies in Higher Education, 31(2), 199–218.

- NRC. (2012). A framework for K-12 science education: Practices, crosscutting concepts, and core ideas (pp. 1–385). The National Academies Press.

- OpenAI. (2024). GPT-4o system card. Available online: https://arxiv.org/pdf/2410.21276 (accessed on 25 July 2025).

- Osborne, J., Erduran, S., & Simon, S. (2004). Enhancing the quality of argumentation in school science. Journal of Research in Science Teaching, 41(10), 994–1020.

- Panadero, E., & Lipnevich, A. A. (2022). A review of feedback models and typologies: Towards an integrative model of feedback elements. Educational Research Review, 35, 100416.

- Ping, I., Halim, L., & Osman, K. (2020). Explicit teaching of scientific argumentation as an approach in developing argumentation skills, science process skills and biology understanding. Journal of Baltic Science Education, 19(2), 276–288.

- Ranade, N., Saravia, M., & Johri, A. (2025). Using rhetorical strategies to design prompts: A human-in-the-loop approach to make AI useful. AI and Society, 40(2), 711–732.

- Rapanta, C. (2021). Can teachers implement a student-centered dialogical argumentation method across the curriculum? Teaching and Teacher Education, 105, 103404.

- Sampson, V., & Blanchard, M. R. (2012). Science teachers and scientific argumentation: Trends in views and practice. Journal of Research in Science Teaching, 49(9), 1122–1148.

- Sampson, V., Grooms, J., & Walker, J. P. (2011). Argument-driven inquiry as a way to help students learn how to participate in scientific argumentation and craft written arguments: An exploratory study. Science Education, 95(2), 217–257.

- Schmucker, R., Xia, M., Azaria, A., & Mitchell, T. (2024). Ruffle & Riley: Insights from designing and evaluating a large language model-based conversational tutoring system. Available online: https://arxiv.org/pdf/2404.17460 (accessed on 25 July 2025).

- Scholz, N., Nguyen, M. H., Singla, A., & Nagashima, T. (2025). Partnering with AI: A pedagogical feedback system for LLM integration into programming education. Available online: https://arxiv.org/pdf/2507.00406v1 (accessed on 25 July 2025).

- Seabold, S., & Perktold, J. (2010). Statsmodels: Econometric and statistical modeling with Python. Scipy, 7, 92–96.

- Stamper, J., Xiao, R., & Hou, X. (2024). Enhancing LLM-based feedback: Insights from intelligent tutoring systems and the learning sciences. In Communications in computer and information science (Vol. 2150, pp. 32–43). CCIS. Springer.

- Tian, L., Ding, Y., Tian, X., Chen, Y., & Wang, J. (2025). Design and implementation of an intelligent assessment technology for elementary school students’ scientific argumentation ability. Assessment in Education: Principles, Policy & Practice, 32(2), 231–251.

- Watts, F. M., Dood, A. J., & Shultz, G. V. (2022). Developing machine learning models for automated analysis of organic chemistry students’ written descriptions of organic reaction mechanisms. Student Reasoning in Organic Chemistry, 285–303.

- Watts, F. M., Dood, A. J., & Shultz, G. V. (2023). Automated, content-focused feedback for a writing-to-learn assignment in an undergraduate organic chemistry course. ACM International Conference Proceeding Series, 531–537.

- White, J., Fu, Q., Hays, S., Sandborn, M., Olea, C., Gilbert, H., Elnashar, A., Spencer-Smith, J., & Schmidt, D. C. (2023). A prompt pattern catalog to enhance prompt engineering with ChatGPT. Available online: https://arxiv.org/pdf/2302.11382 (accessed on 25 July 2025).

- Wilensky, U. (1999). NetLogo home page. Available online: https://ccl.northwestern.edu/netlogo/ (accessed on 25 July 2025).

- Wilson, C. D., Haudek, K. C., Osborne, J. F., Buck Bracey, Z. E., Cheuk, T., Donovan, B. M., Stuhlsatz, M. A. M., Santiago, M. M., & Zhai, X. (2024). Using automated analysis to assess middle school students’ competence with scientific argumentation. Journal of Research in Science Teaching, 61(1), 38–69.

- Zhai, X., Haudek, K. C., Shi, L., Nehm, R. H., & Urban-Lurain, M. (2020). From substitution to redefinition: A framework of machine learning-based science assessment. Journal of Research in Science Teaching, 57(9), 1430–1459.

- Zhai, X., & Nehm, R. H. (2023). AI and formative assessment: The train has left the station. Journal of Research in Science Teaching, 60(6), 1390–1398.

- Zhu, M., Lee, H. S., Wang, T., Liu, O. L., Belur, V., & Pallant, A. (2017). Investigating the impact of automated feedback on students’ scientific argumentation. International Journal of Science Education, 39(12), 1648–1668.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).