Teaching Field Data Crowdsourcing Using a GPS-Enabled Cellphone Application: Soil Erosion by Water as a Case Study

Abstract

1. Introduction

- Crowd characteristics:
  1. Member composition: Type and skill of participants.
  2. Member tasks: Complexity and difficulty.
  3. Member participation rewards: Types of compensation.
- Crowdsourcing initiator:
  1. Event initiator: Individual, public entity, company.
  2. Cost of crowdsourcing deployment: Personnel, computer resources, data validation. Existing online platforms (e.g., ArcGIS Survey123) allow crowdsourcing systems to be developed efficiently because the necessary computer resources already exist: the platform provides the cloud infrastructure, and the students’ own smartphones serve as the data collection devices.
  3. Benefits of crowdsourcing: Knowledge, ideas, value creation.
- Crowdsourcing process:
  1. Method used: Participatory, distributed online process.
  2. Identification of participants: Open, limited to a knowledge community, or a combination.
  3. Medium used: Internet.
- Ethical and regulatory considerations.

2. Materials and Methods

2.1. Design

2.2. Background of the “Test” Course

2.3. Field Data Collection Using ArcGIS Survey123

3. Results

3.1. Pre-Testing Responses to the Web-Based Survey

3.2. Laboratory Exercise and Quiz Results

3.3. Comparison of Pre- and Post-Testing Responses to the Web-Based Surveys

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| ENR | Environmental and Natural Resources |

| FOR | Forestry |

| RLO | Reusable learning object |

| STEM | Science, technology, engineering, and mathematics |

| USDA | United States Department of Agriculture |

| WFB | Wildlife and Fisheries Biology |

References


Crowdsourcing Involves Collaborative Work to Complete Tasks Defined by:

| Crowd Characteristics | Crowdsourcing Initiator | Crowdsourcing Process |

|---|---|---|

| 1. Member composition: Type and skill of participants | 1. Event initiator: Individual, public entity, company | 1. Method used: Participatory, distributed online process |

| 2. Member tasks: Complexity and difficulty | 2. Cost of crowdsourcing deployment: Personnel, computer resources, data validation | 2. Identification of participants: Open, limited to a knowledge community, or a combination |

| 3. Member participation rewards: Types of compensation | 3. Benefits of crowdsourcing: Knowledge, ideas, value creation | 3. Medium used: Internet (web 2.0 and 3.0 in the future) |

| Ethical and Regulatory Considerations | ||

| Steps | Description of Activities |

|---|---|

| 1. Pre-assessment | Students fill out a web-based survey (Google Forms) on their familiarity with soil erosion by water, GPS, crowdsourcing, and remote sensing (Table 4). |

| 2. Lecture | Students are presented with a lecture entitled “Soil Erosion and Control” in PowerPoint and video formats. |

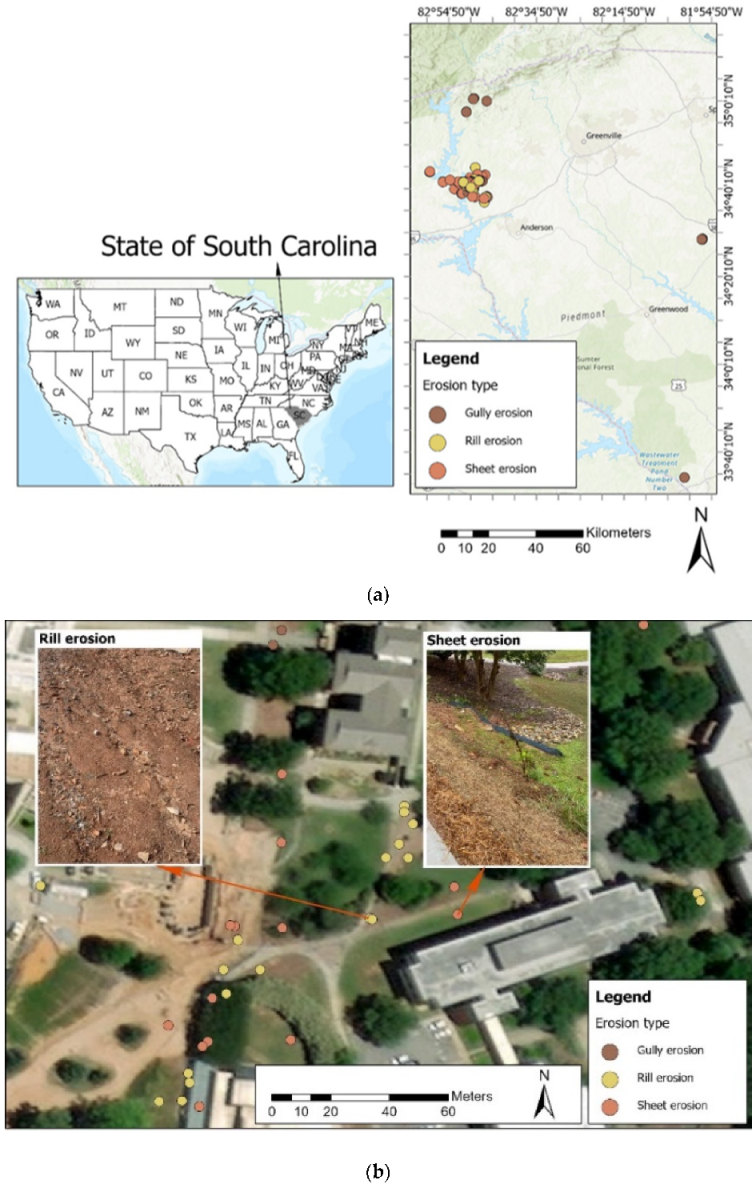

| 3. Laboratory exercise | Students are presented with a video laboratory explanation and accompanying PowerPoint slide deck that show how to collect field data using a custom ArcGIS Survey123 form created for this exercise, as well as examples of the types of erosion (with photos) that they could document in the field and an explanation of GPS-based cellphone data collection. Students were instructed to install the ArcGIS Survey123 application and to go to three separate locations in the area to find examples of water-derived soil erosion. At each location, students completed the custom form on the ArcGIS Survey123 application (Figure 1) and submitted the form along with photos from the site. The submitted data from all students were available in one arcgis.com account and included site locations, form data and submitted photos. |

| 4. Graded online quiz | Students take an online quiz (ten questions) using Canvas LMS (Table 5). |

| 5. Post-assessment | Students fill out a follow-up web-based survey (Google Forms) on their laboratory experience on the topics of soil erosion by water and crowdsourcing (Table 4). |
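Because step 3 asks each student to document three separate locations and all submissions are pooled in one account, a simple screening pass can catch records that do not meet that requirement, such as the case described in the T5 student criticism, where the app location was not refreshed between stops. The Python sketch below is a hypothetical illustration, not part of the authors’ workflow and not an ArcGIS API: it assumes the pooled records have been exported as plain records of student ID, latitude, and longitude, and the 10 m minimum separation is an arbitrary assumption. Distances use the standard haversine great-circle formula on a spherical Earth.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres (spherical approximation)


@dataclass
class Submission:
    """One pooled record; these fields are hypothetical, not the Survey123 schema."""
    student_id: str
    lat: float  # decimal degrees
    lon: float  # decimal degrees


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points (haversine formula)."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = phi2 - phi1
    dlmb = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * asin(sqrt(a))


def flag_students(subs: list[Submission], required_sites: int = 3,
                  min_separation_m: float = 10.0) -> set[str]:
    """Flag students with too few sites or with two sites closer than the threshold."""
    by_student: dict[str, list[Submission]] = {}
    for s in subs:
        by_student.setdefault(s.student_id, []).append(s)
    flagged = set()
    for sid, sites in by_student.items():
        if len(sites) < required_sites:
            flagged.add(sid)
            continue
        # Compare every pair of this student's sites.
        for i in range(len(sites)):
            for j in range(i + 1, len(sites)):
                a, b = sites[i], sites[j]
                if haversine_m(a.lat, a.lon, b.lat, b.lon) < min_separation_m:
                    flagged.add(sid)
    return flagged


# Made-up coordinates for illustration only.
example = [
    # Student A: location never refreshed, so all three records share one point.
    Submission("A", 34.6800, -82.8400),
    Submission("A", 34.6800, -82.8400),
    Submission("A", 34.6800, -82.8400),
    # Student B: three distinct sites several hundred metres apart.
    Submission("B", 34.6800, -82.8400),
    Submission("B", 34.6850, -82.8400),
    Submission("B", 34.6800, -82.8350),
    # Student C: only two sites submitted.
    Submission("C", 34.6900, -82.8300),
    Submission("C", 34.6950, -82.8300),
]
print(sorted(flag_students(example)))
```

In a classroom setting, a flag like this would only prompt a manual look at the submission; a reasonable threshold depends on the cellphone GPS accuracy actually achieved in the field.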

| Survey Questions | Responses | |||

|---|---|---|---|---|

| What is your major program? | FOR (9) | ENR (16) | WFB (21) | Other (12) |

| How would you best describe your academic classification (year)? | Sophomore (20) | Junior (23) | Senior (14) | Other (1) |

| How would you describe yourself? | Female (23) | Male (35) | ||

| Did you take online courses before? | Yes (56) | No (2) | ||

| Survey Questions and Answers | Pre-Assessment Responses (%) (n = 58) | Post-Assessment Responses (%) (n = 53) | Difference (percentage points) |

|---|---|---|---|

| Please, rate your familiarity with the types of soil erosion caused by water. | |||

| 1 = not at all familiar | 3.4 | 0 | −3.4 |

| 2 = slightly familiar | 34.5 | 1.9 | −32.6 |

| 3 = somewhat familiar | 46.6 | 1.9 | −44.7 |

| 4 = moderately familiar | 15.5 | 47.2 | +31.7 |

| 5 = extremely familiar | 0 | 49.1 | +49.1 |

| Please, rate your familiarity with the concept of Global Positioning System (GPS). | |||

| 1 = not at all familiar | 0 | 0 | 0 |

| 2 = slightly familiar | 8.6 | 3.8 | −4.8 |

| 3 = somewhat familiar | 44.8 | 7.5 | −37.3 |

| 4 = moderately familiar | 41.4 | 47.2 | +5.8 |

| 5 = extremely familiar | 5.2 | 41.5 | +36.3 |

| Does every cellphone have a GPS built in? | |||

| Yes | 75.9 | 96.2 | +20.3 |

| No | 24.1 | 3.8 | −20.3 |

| How accurate do you think a cellphone GPS is? | |||

| Within 5 feet | 0 | 45.3 | +45.3 |

| Within 10 feet | 100 | 30.2 | −69.8 |

| Within 100 feet | 0 | 18.9 | +18.9 |

| Within 1000 feet | 0 | 5.7 | +5.7 |

| Have you ever used a cellphone app for field data collection? | |||

| Yes | 58.6 | - | - |

| No | 41.4 | - | - |

| Please, rate your familiarity with the concept of crowdsourcing. | |||

| 1 = not at all familiar | 56.9 | 3.8 | −53.1 |

| 2 = slightly familiar | 22.4 | 7.5 | −14.9 |

| 3 = somewhat familiar | 10.3 | 18.9 | +8.6 |

| 4 = moderately familiar | 10.3 | 41.5 | +31.2 |

| 5 = extremely familiar | 0 | 28.3 | +28.3 |

| Please, rate your familiarity with the concept of remote sensing. | |||

| 1 = not at all familiar | 51.7 | 1.9 | −49.8 |

| 2 = slightly familiar | 31.0 | 7.5 | −23.5 |

| 3 = somewhat familiar | 10.3 | 17.0 | +6.7 |

| 4 = moderately familiar | 6.9 | 50.9 | +44.0 |

| 5 = extremely familiar | 0 | 22.6 | +22.6 |

| Can a cellphone be used for remote sensing? | |||

| Yes | 81 | 98.1 | +17.1 |

| No | 19 | 1.9 | −17.1 |

| The laboratory was an effective way to learn about soil erosion caused by water. | |||

| 1 = strongly disagree | - | 0 | - |

| 2 = disagree | - | 1.9 | - |

| 3 = neither agree nor disagree | - | 9.4 | - |

| 4 = agree | - | 52.8 | - |

| 5 = strongly agree | - | 35.8 | - |

| The laboratory was an effective way to learn about crowdsourcing. | |||

| 1 = strongly disagree | - | 0 | - |

| 2 = disagree | - | 3.8 | - |

| 3 = neither agree nor disagree | - | 11.3 | - |

| 4 = agree | - | 49.1 | - |

| 5 = strongly agree | - | 35.8 | - |

| A cellphone data gathering app (e.g., Survey123) is an accurate and efficient way to collect field data. | |||

| 1 = strongly disagree | - | 0 | - |

| 2 = disagree | - | 3.8 | - |

| 3 = neither agree nor disagree | - | 5.7 | - |

| 4 = agree | - | 49.1 | - |

| 5 = strongly agree | - | 41.5 | - |

| Quiz Questions and Answers | Respondents (count of n = 56) | Responses (%) |

|---|---|---|

| What is soil erosion by water? | ||

| Removal and deposition of soil | 56 | 100 |

| Action of wind that moves soil | 0 | 0 |

| The thickness of a soil layer | 0 | 0 |

| Degree of soil compaction | 0 | 0 |

| Soil erosion caused by water damages water resources by… | ||

| Clarifying water | 2 | 4 |

| Reducing flooding | 1 | 2 |

| Dredging lakes | 0 | 0 |

| Polluting lakes/streams with soil | 53 | 95 |

| What factors impact soil erosion? | ||

| All of the listed factors | 56 | 100 |

| Soil slope | 0 | 0 |

| Soil texture | 0 | 0 |

| Soil cover | 0 | 0 |

| Soil surface roughness | 0 | 0 |

| What is gully erosion? | ||

| Erosion forming deep channels | 55 | 98 |

| Erosion caused by wind | 0 | 0 |

| Erosion forming small channels | 1 | 2 |

| Removal of a thin layer of soil | 0 | 0 |

| What is rill erosion? | ||

| Erosion forming small channels | 56 | 100 |

| Erosion caused by wind | 0 | 0 |

| Erosion forming deep channels | 0 | 0 |

| Removal of a thin layer of soil | 0 | 0 |

| What is sheet erosion? | ||

| Removal of a thin layer of soil | 56 | 100 |

| Erosion caused by ice | 0 | 0 |

| Erosion forming deep channels | 0 | 0 |

| Erosion forming small channels | 0 | 0 |

| Slope is composed of grade and length. | ||

| True | 56 | 100 |

| False | 0 | 0 |

| A rough surface slows water runoff. | ||

| True | 55 | 98 |

| False | 1 | 2 |

| Increased plant residue reduces water erosion. | ||

| True | 53 | 95 |

| False | 3 | 5 |

| Tillage across the slope promotes water downhill flow. | ||

| True | 18 | 32 |

| False | 38 | 68 |
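The Responses (%) column is each answer’s count divided by the 56 respondents, expressed as a whole-number percentage. The Python sketch below reproduces that conversion for one quiz item using counts copied from the table; the half-up rounding rule is an assumption, since the table does not state one.

```python
N_RESPONDENTS = 56  # every quiz item in the table above totals 56 respondents


def to_percent(count: int, n: int = N_RESPONDENTS) -> int:
    """Whole-number percentage of n, rounding halves up (integer arithmetic)."""
    # floor(100 * count / n + 0.5), computed exactly as (200 * count + n) // (2 * n)
    return (200 * count + n) // (2 * n)


# Counts copied from the item "Soil erosion caused by water damages water resources by..."
answers = {
    "Clarifying water": 2,
    "Reducing flooding": 1,
    "Dredging lakes": 0,
    "Polluting lakes/streams with soil": 53,
}
assert sum(answers.values()) == N_RESPONDENTS  # each student chose exactly one answer

for answer, count in answers.items():
    print(f"{answer}: {count} ({to_percent(count)}%)")
```

Integer arithmetic avoids floating-point surprises at exact halves, which Python’s built-in `round` would resolve to the nearest even number instead.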

| Responses |

|---|

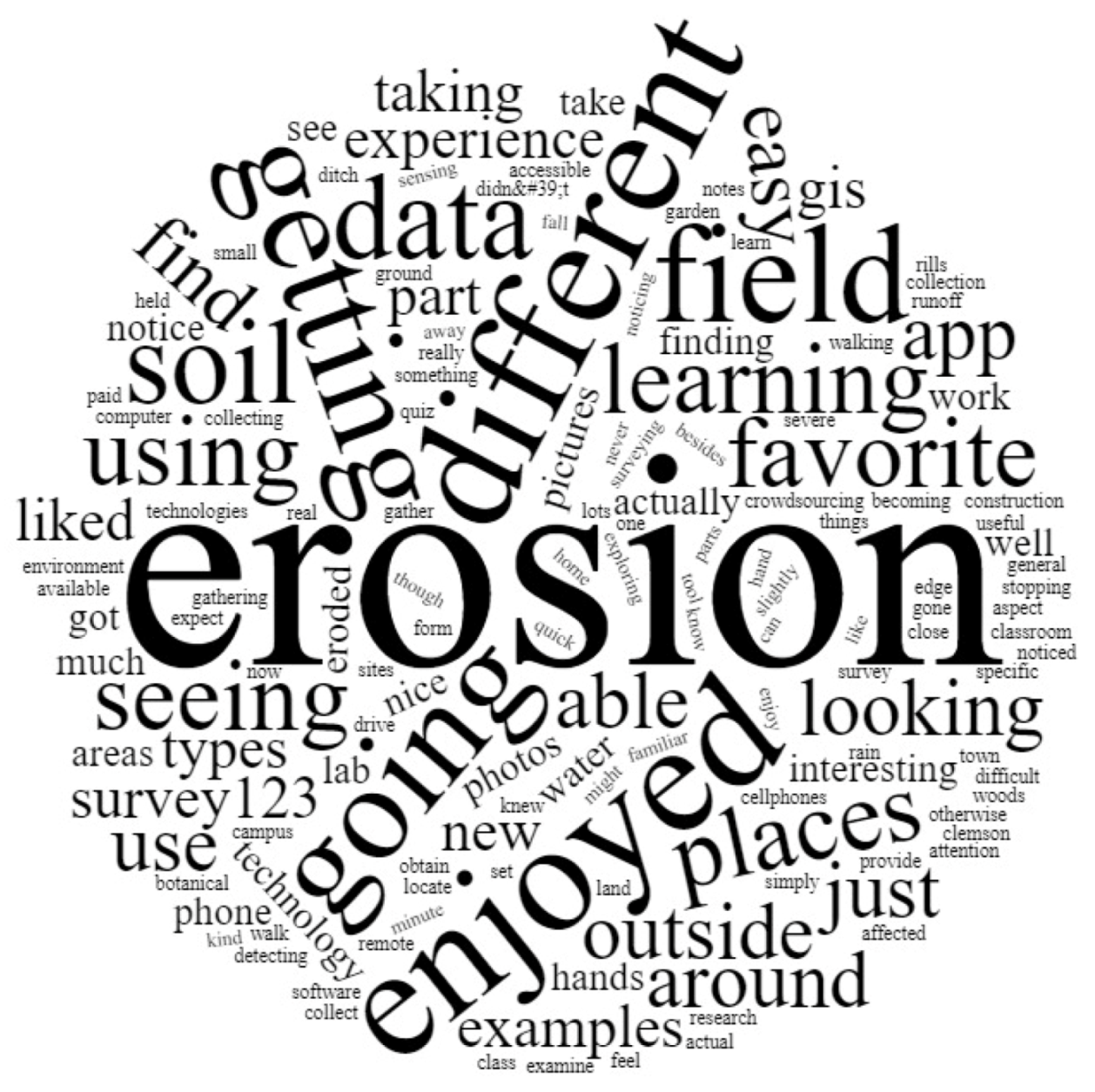

| T1. Enjoyment of learning |

| I liked going out into botanical garden to find soil erosion, as well as around Clemson in general. |

| My favorite experience was learning about the different technologies useful in detecting erosion. |

| I liked looking for erosion on our land. |

| I enjoyed being able to actually see some real examples in the field. |

| My favorite part was getting to go out and collect data all over town. |

| I enjoyed going out and finding different examples of erosion. |

| I enjoyed gathering photos in the field. |

| I liked going out to new places I might not have gone before and looking for things I would have otherwise not noticed. |

| T2. Value of multimedia |

| Using Survey123, it was really easy. |

| I enjoyed learning how cellphones are now an easy tool to use for surveying (Survey123) as well as remote sensing through photos. |

| Taking pictures of erosion. |

| I enjoyed the hands-on aspect of going out and collecting data using the Survey123 app. |

| Being able to use my phone to locate different areas and obtain data from my home. |

| My favorite experience was learning about a form of GIS and crowdsourcing software available and becoming familiar with this technology. |

| The app was very easy to use, and there were lots of examples of erosion. |

| T3. Flexibility of learning |

| Getting to go outside. |

| I like the breakdown steps of each part of the lab. |

| Getting outside of the classroom and exploring. |

| Getting away from my computer to walk in the woods. |

| Walking around looking at soil. |

| Seeing new places to gather data. |

| T4. Applicability of content |

| Seeing the different types of erosion that I knew were there but never paid attention to. |

| I didn’t expect to find as much erosion around campus as I did. It was interesting to examine the ground and notice the small rills. |

| My favorite experience was getting to go out and notice erosion in more places than one and what kind it was. |

| My favorite part was just simply stopping to take a minute to take pictures of the erosion, it was more so just taking in and noticing my environment slightly more than before. |

| Going out to find different types of soil erosion. |

| Finding places with water erosion. |

| T5. Criticism |

| Mention that you have to update your location every time you stop on the app. I did all three locations in the exact same place the first time around and had to redo it because I didn’t realize that it does not automatically update. |

| Did not like how survey123 would only let me submit 1 image per spot. |

| I wish I could have known I would need to go out and find places before the day of my lab so I could plan ahead. |

| I did not like the pre-lab quiz simply because I did not know much of it. |

| Give us more time to work on it so we have time to go to better sites to collect data. |

| Pre-Test Definition by Students | Post-Test Definition by Students |

|---|---|

| Soil erosion caused by water | |

| Impact that raindrops or running water have on soil surface. | Removal and deposition of soil by water. |

| Soil erosion caused by water means that some sort of runoff and/or stream is moving sediment from one area and depositing it in another. | The removal and deposition of soil by water. |

| Water washes away the soil from an area. | The removal/transportation of soil or soil particles by water. |

| Removal of top layer of soil by rainfall, snow, runoff, etc. | Removal and deposition of soil particles by water. |

| When water moves the soil around. | Removal, transportation, and deposition of soils caused by water. |

| Water takes away soil. | The detachment and removal of soil material by water. |

| Global Positioning System (GPS) and what it is used for | |

| To find places. | GPS uses multiple satellites in coalition with one another in order to give the lat., long., and elevation of a point on Earth’s surface. |

| GPS is a device that can tell you where you are. | GPS is basically navigation and surveys all the world. It’s used for mapping, directions, etc. |

| Use to mark a location on a map. | GPS uses satellite technology to determine latitude and longitude on a location. |

| It can tell where you are on the earth and can be used to locate and guide you. | GPS provides latitude and longitude for finding places where data was obtained and recorded, such as sites of erosion. |

| GPS is satellite mapping technology that maps out geographical areas around the globe. It is used for navigation and long-distance research. | GPS is a system of satellites and antennae that transmit and receive signals that are cross referenced with time to determine location on the Earth’s surface. |

| Crowdsourcing | |

| n/a | Using a large group of people to collect data for a research project. |

| No idea. | People obtain information or data via a large population participating in the study. |

| Not sure. | Getting data for a project from many people. |

| I am not familiar with this term. | Gathering information with the help of multiple people. |

| People get money from people who are interested in their projects | When a bunch of people collect data and upload to central network. |

| Getting information from a crowd of people. | Using a group of people to collect data. |

| Remote sensing | |

| n/a | Remote sensing is obtaining information without making any direct contact via things like satellites. |

| Sensing location from a remote area. | Using satellites or aerial vehicles to collect data about the earth’s surface, such as light reflection, elevation changes, etc. |

| I’m not familiar. | As simple as taking a photo of a subject. It helps capture visual information about the object. |

| I have no idea what remote sensing is. | Capturing information about something without contacting it. |

| Using technology to find something. | Remote sensing is using instruments (such as a camera) to gather information about something without touching it. |

| Gathering data remotely, without being present at the site of data collection. | Remote sensing uses instruments to collect data about a location without being physically present. |

Crowdsourcing subject matter selection: Soil erosion by water was selected because it is an important course topic with a geospatial nature, and examples of it could be identified by students at various locations.

Crowdsourcing Involves Collaborative Work to Complete Tasks Defined by:

| Crowd Characteristics | Crowdsourcing Initiator | Crowdsourcing Process |

|---|---|---|

| 1. Member composition: Type and skill of participants. This study: Students in FNR 2040: Soil Information Systems, who had basic computer and soil science skills. Prior knowledge and skills were assessed using a Google Form questionnaire. | 1. Event initiator: Individual, public entity, company. This study: The exercise was designed and managed by a team that included professors and a laboratory assistant. | 1. Method used: Participatory, distributed online process. This study: Students participated in a distributed online process of data collection using a cellphone application. |

| 2. Member tasks: Complexity and difficulty. This study: Students had to complete video lectures on soil erosion, on how to use ArcGIS Survey123, and on GPS technology. They were then given a laboratory assignment to find and describe types of soil erosion using the ArcGIS Survey123 mobile phone application. | 2. Cost of crowdsourcing deployment: Personnel, computer resources, data validation. This study: ArcGIS Survey123 was available to students at no cost through the university’s Esri site license. Students covered their own transportation costs. | 2. Identification of participants: Open, limited to a knowledge community, or a combination. This study: Participation was limited to students with similar backgrounds, ages, and levels of education. This background information was collected using a Google Form questionnaire. |

| 3. Member participation rewards: Types of compensation. This study: Students were graded on their successful completion of the laboratory exercise. | 3. Benefits of crowdsourcing: Knowledge, ideas, value creation. This study: Students acquired knowledge of soil erosion, GPS, remote sensing, and crowdsourcing. | 3. Medium used: Internet (web 2.0). This study: Students used a customized ArcGIS Survey123 form that was made available through the ArcGIS Survey123 cellphone application. |

| Ethical and Regulatory Considerations: This study: Students were instructed to avoid trespassing and any situation involving personal risk. The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Clemson University Institutional Review Board (protocol code IRB2020-257; date of approval: 25 September 2020). | ||


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mikhailova, E.A.; Post, C.J.; Zurqani, H.A.; Younts, G.L. Teaching Field Data Crowdsourcing Using a GPS-Enabled Cellphone Application: Soil Erosion by Water as a Case Study. Educ. Sci. 2022, 12, 151. https://doi.org/10.3390/educsci12030151